Study finds nonoperative management of blunt splenic injuries in elderly safe

WAIKOLOA, HAWAII – Nonoperative management of blunt splenic injuries in the geriatric population is safe, based on results from a study of national data.

Although the efficacy and safety of nonoperative management of blunt splenic injuries in adults is well established, “early recommendations stated that advanced age was a contraindication to nonoperative management of blunt splenic injuries due to high reported failure rates,” researchers led by Marc Trust, MD, wrote in an abstract presented at the annual meeting of the American Association for the Surgery of Trauma. “Although more recent literature has shown lower and acceptable failure rates, this population continues to fail more often compared to younger patients. Published data suffers from low patient numbers and is conflicting regarding failure rate and safety.”

In an effort to obtain well-powered, nationwide data to evaluate recent failure rates and the effect on mortality among geriatric patients, Dr. Trust of the University of Texas at Austin and his associates retrospectively reviewed the 2014 National Trauma Databank to identify patients with blunt splenic injury. Those who did not receive splenectomy within 6 hours of admission were considered to have undergone nonoperative management. Failure of nonoperative management was defined as requiring splenectomy during the same hospitalization. The primary endpoints were failure of nonoperative management and mortality.

Of the 18,917 total patients identified with a blunt splenic injury, 2,240 (12%) were aged 65 years and older. Geriatric patients failed nonoperative management more often than did younger patients (6% vs. 4%; P less than .0001). Having an Injury Severity Score of 16 or greater was the only independent risk factor associated with failure of nonoperative management in geriatric patients (odds ratio, 2.8; P less than .0001). No difference in mortality was observed in geriatric patients who had successful versus failed nonoperative management (11% vs. 15%; P = .22). Independent risk factors for mortality in geriatric patients who underwent nonoperative management included admission hypotension (OR, 1.5; P = .048), high ISS (OR, 3.8; P less than .0001), low Glasgow Coma Scale (OR, 5.0; P less than .0001), and preexisting cardiac disease (OR, 3.6; P less than .0001). However, failure of nonoperative management was not independently associated with mortality (OR, 1.4; P = .3).

In their abstract, the researchers characterized the increased failure rate of nonoperative management of blunt splenic injuries in geriatric patients, compared with their younger counterparts, as “acceptable” and noted that it was lower than previously reported in the published literature. They reported having no financial disclosures.

AT THE AAST ANNUAL MEETING

Therapy, methylphenidate combo proves best treatment of adult ADHD

VIENNA – One-on-one counseling and manualized cognitive-behavioral therapy are equally effective as adjuncts to methylphenidate in the treatment of adult ADHD, Jan K. Buitelaar, MD, PhD, said at the annual congress of the European College of Neuropsychopharmacology.

He highlighted this finding from what he considers a well-conducted German randomized, double-blind, multicenter clinical trial in his discussion of recent major developments in the field of adult attention-deficit/hyperactivity disorder. He singled out the study because it provides clinicians with an evidence-based approach to treatment of this common disorder: namely, psychotherapy plus medication is better than either alone, and it doesn’t matter whether the psychotherapy takes the form of individual clinical behavioral counseling or a structured group cognitive-behavioral therapy (CBT) program tailored specifically for adult ADHD.

Investigators in the German COMPAS (Comparison of Methylphenidate and Psychotherapy in Adult ADHD Study) Consortium randomized 419 outpatients with ADHD, aged 18-58 years, to one of four treatment arms: group CBT plus methylphenidate or placebo, or nonspecific individual counseling plus methylphenidate or placebo. Psychotherapy sessions were conducted once weekly for the first 3 months and monthly for the next 9 months. The group CBT emphasized building self-esteem, coping skills, and acceptance. Methylphenidate was started at 10 mg per day and titrated over 6 weeks to 60 mg per day or a maximum of 1.2 mg/kg. The medication portion of the trial was conducted double-blind.

The primary outcome was the change in the ADHD Index of the Conners’ Adult ADHD Rating Scale as assessed by blinded observers from baseline to the end of the initial 3-month intensive treatment. The ADHD Index improved significantly from a mean baseline score of 20.6 to 17.6 in the group therapy arms and 16.5 with individual counseling, with no significant difference between the groups. Methylphenidate proved superior to placebo: those in the groups that received the medication in addition to their psychological intervention had a mean 3-month ADHD Index score that was 1.7 points lower than those on placebo (JAMA Psychiatry. 2015 Dec;72[12]:1199-210).

Regarding secondary outcomes, the ADHD Index results remained stable at the study’s conclusion at 12 months. However, there were no significant changes in self-rated depression scores over time. Blinded observers rated the group CBT patients as showing significantly greater improvement on the Clinical Global Impression Scale of Effectiveness.

The COMPAS trial was funded by the German Federal Ministry of Research and Education.

“This study provides real guidance for those of us who treat adult ADHD. The critical question here, of course, is what is the longer-term effect,” Dr. Buitelaar said.

He reported having no financial conflicts of interest regarding his presentation.

AT THE ECNP

Key clinical point:

Major finding: ADHD Index scores in patients with adult ADHD improved to a similar extent in response to methylphenidate plus either nonspecific individual counseling or structured group cognitive-behavioral therapy.

Data source: A randomized, multicenter, 12-month, four-arm clinical trial of 419 patients with adult ADHD.

Disclosures: The presenter reported having no financial conflicts of interest.

Growth in hospital-employed physicians shows no signs of slowing

The parade of doctors leaving private practice for an employee position shows no sign of slowing.

In July 2012, an estimated 95,000 physicians (26%) were hospital employed. By July 2015, that number had grown to 141,000 (38%), according to a study conducted by Avalere for the Physicians Advocacy Institute. The biggest leap occurred between July 2014, when 114,000 physicians were listed as employees, and January 2015, when 133,000 were listed.

“They [most physicians] know a physician or two or a practice that has made that move to become employed, but I think many of them are quite surprised, if not shocked to see the tremendous transition over such a short period of time,” said Matthew Katz, PAI board member and CEO of the Connecticut State Medical Society.

Similarly, the number of hospital-owned physician practices grew from 36,000 (14%) in July 2012 to 67,000 (26%) in July 2015.

Regionally, by July 2015, almost half (49%) of Midwest physicians were hospital employed, compared with just over a quarter (27%) of physicians in Alaska and Hawaii.

Mr. Katz said that he is hearing that the shift is having an impact on the practice of medicine. While certain aspects, such as physician autonomy, are among common issues raised with employment, he noted that referrals can also be an issue as doctors move from private practice to an employed status.

Many of the physicians he spoke with “have said that it is in some respects limiting them in what they can do and who they can refer to because they are losing their referral base in the community. When physicians become employed, they may no longer be accessible to those community-practicing physicians.”

He continued: “I have not talked to those physicians who have recently become employed to see what they think of their transition, but in talking to the physicians who remain in the community, they are concerned about the loss of those community physicians, the loss of the referral base, the loss of who to send their patients to who are no longer in the community and are now employed and working for the hospital. They seem to, in some respects, have lost touch with some of them once they transitioned to an employment status.”

Kelly Kenney, executive VP at PAI, said that there are some benefits to moving toward hospital-based employment, particularly with the reporting requirements attached to the many quality programs and the IT infrastructure that is a necessary part of it.

“Some physicians who have moved to employment say it just got to be too much,” Ms. Kenney said.

Mr. Katz added that private practice remains an option for most.

“We are still seeing some physicians, at least here in Connecticut and in other places around the country going into private practice trying to make a go of it,” he said. “I don’t think this is the death knell of private practice. I think that the pendulum definitely has swung in one direction, and I believe it will swing back a bit. I think that this will highlight what private practice physicians are facing and the barriers that exist today that did not exist a few years ago.”

He said that he hopes regulators, medical societies, and others step up in education efforts and other assistance to help small and solo practitioners navigate the value-based payment waters and give them the tools to stay in private practice.

Ms. Kenney noted that the flexibility of when physicians can start to meet reporting requirements under MACRA will help.

Mr. Katz added that the Department of Justice can do its part to help small and solo practices by blocking any of the mega-insurance mergers that are being proposed. He expressed concern that as insurers get bigger, the ability of small and solo practices to negotiate with them goes away, which could be another driver sending physicians into employed situations.

A Quick Lesson on Bundled Payments

The Centers for Medicare & Medicaid Services (CMS) has too many new payment models for a practicing doctor to keep up with them all. But there are three that I think are most important for hospitalists to know something about: hospital value-based purchasing, MACRA-related models, and bundled payments. Here, I’ll focus on the last of these, which, unlike the first two, influences payment to both hospitals and physicians (as well as other providers).

Bundles for Different Diagnoses

Bundled payment programs are the most visible of CMS’s episode payment models (EPMs). There are currently voluntary bundle models (called Bundled Payments for Care Improvement, or BPCI) across many different diagnoses. And in some locales, there is a mandatory bundle program for hip and knee replacements that began in March 2016 (called Comprehensive Care for Joint Replacement, or CCJR or just CJR).

These programs are set to expand significantly in the next few years. The Surgical Hip and Femur Fracture Treatment (SHFFT) becomes active in 2017 in some locales. It will essentially add hip and femur fractures requiring surgery to the existing CJR program. New bundles for acute myocardial infarction, either managed medically or with percutaneous coronary intervention (PCI), and coronary bypass surgery will become mandatory in some parts of the country beginning July 2017.

How the Programs Work

CMS totals all Medicare dollars paid per patient historically for the relevant bundle. This includes payments to the hospital (e.g., the DRG payment) and all fees paid to physicians, therapists, visiting nurses, skilled nursing facilities, etc., from the time of hospital admission through 90 days after discharge. It then sets a target spend (or price) for that diagnosis that is about 3% below the historical average. Because it is based on the past track record of a hospital and its market (or region), the price will vary from place to place.

If, going forward, the Medicare spend for each patient is below the target, CMS pays the difference to the hospital. But if the spend is above the target, the hospital pays some or all of the excess to CMS. Presumably, hospitals will have negotiated with others, such as physicians, how such an “upside” or penalty payment will be divided between them.

It’s worth noting that all parties continue to bill, and are paid by Medicare, via the same fee-for-service arrangements currently in place. It is only at the time of a “true up” that an upside is paid or penalty assessed. And hospitals are eligible for upside payments only if they perform above a threshold on a few quality and patient satisfaction metrics.
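
The arithmetic described above can be sketched in a few lines of Python. This is a deliberately simplified illustration, not CMS's actual reconciliation method. The 3% discount comes from the description above; the function names, the dollar figures, and the rule that a hospital below the quality threshold simply forgoes any upside are assumptions made for the example.

```python
def target_price(historical_avg_spend, discount=0.03):
    """Target spend per episode: the historical average less a discount (about 3%)."""
    return historical_avg_spend * (1 - discount)


def true_up(episode_spends, target, meets_quality_threshold):
    """Net reconciliation amount across a set of episodes (hypothetical helper).

    Positive result: CMS pays the hospital (upside).
    Negative result: the hospital owes CMS (penalty).
    Assumption: any upside is forfeited if the quality threshold is not met.
    """
    difference = sum(target - spend for spend in episode_spends)
    if difference > 0 and not meets_quality_threshold:
        return 0.0
    return difference


# Hypothetical numbers, for illustration only.
target = target_price(25_000)       # about $24,250 per episode
spends = [22_000, 26_000, 23_000]   # fee-for-service totals for three episodes
net = true_up(spends, target, meets_quality_threshold=True)
print(round(target), round(net))
```

Note that nothing in the sketch changes how individual claims are billed; the comparison against the target happens only at the true-up.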

The details of these programs are incredibly complicated, and I’m intentionally providing a very simple description of them here. I think that nearly all practicing clinicians should not try to learn and keep up with all of the precise details. They change often! Instead, it’s best to focus on the big picture only and rely on others at the hospital to keep track of the details.

Ways to Lower the Spend

These programs are intended to provide a significant financial incentive to find lower-cost ways to care for patients while still ensuring good care. Any successful effort to lower the cost should start by analyzing just what Medicare spends on each element of care over the more than 90 days each patient is in the bundle. For example, for hip and knee replacement patients, nearly half of the spend goes toward post-hospital services such as a skilled nursing facility and home nursing visits. So the best opportunity to reduce the spend may be to reduce utilization of these services where appropriate.

For patients in the bundles for coronary artery bypass grafting and acute myocardial infarction treated with PCI, only about 10% of the total spend goes to post-hospital services. For these, it might be more effective to focus cost reductions on other things.

Each organization will need to make its own decisions regarding where to focus cost-reduction efforts across the bundle. For many of us, that will mean moving away from a focus on traditional hospitalist-related cost-containment efforts like length of stay or pharmacy costs and instead looking at the bigger picture, including use of post-hospital services.

Some Things to Watch

I expect there will be a number of side effects of these payment models that hospitalists will care about. Doctors in different specialties, for example, might change their minds about whether they want to serve as attending physicians for “bundle patients.” One scenario is that if orthopedists have an opportunity to realize a significant financial upside, they may prefer to serve as attendings for hip fracture patients rather than leaving to hospitalists financially important decisions such as whether patients are discharged to a skilled nursing facility or home. We’ll just have to see how that plays out and be prepared to advocate for our position if it differs from that of other specialties.

Successful performance in bundles requires effective coordination of care across settings, and I’m hopeful this will benefit patients. Hospitals and skilled nursing facilities, for example, will need to work together more effectively to curb unnecessary days in the facilities and to reduce readmissions. Many hospitals have already begun developing a preferred network of skilled nursing facilities for referrals that is based on demonstrating good care and low returns to the hospital. Your hospital has probably already started doing this work even if you haven’t heard about it yet.

For me, one of the most concerning outcomes of bundles is the negotiation among providers over how an upside or penalty is to be shared. I suspect this won’t be contentious initially, but as the dollars at stake grow, it could lead to increasingly stressful negotiations and relationships.

And, lastly, like any payment model, bundles are “gameable,” especially bundles for medical diagnoses such as congestive heart failure or pneumonia, which can be gamed by lowering the threshold for admitting less-sick patients to inpatient status. The spend for these patients, who are less likely to require expensive post-hospital services or be readmitted, will lower the average spend in the bundle, increasing the chance of an upside payment for the providers.
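A quick sketch shows why this kind of gaming works on averages. Every number here is hypothetical and the helper name is an assumption for illustration; the point is only that adding low-spend episodes pulls the bundle's average down without changing the care of the sicker patients.

```python
from statistics import mean


def average_spend(episode_spends):
    """Average Medicare spend per episode in the bundle."""
    return mean(episode_spends)


# All dollar figures below are hypothetical, chosen only for illustration.
target = 29_000                                   # target price for the bundle
sicker_patients = [30_000, 28_000, 35_000, 27_000]
less_sick_patients = [12_000, 11_000, 13_000]     # admitted under a lowered threshold

before = average_spend(sicker_patients)
after = average_spend(sicker_patients + less_sick_patients)

print(f"before: {before:,.0f} (above target: {before > target})")
print(f"after:  {after:,.0f} (above target: {after > target})")
```

With these made-up figures, the average moves from above the target to below it even though nothing about the cost of caring for the original four patients has changed.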

No longer a hand-me-down approach to WM

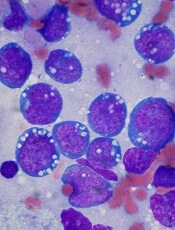

Photo courtesy of DFCI

NEW YORK—Whole-genome sequencing has changed the entire approach to drug development for Waldenström’s macroglobulinemia (WM), according to a speaker at Lymphoma & Myeloma 2016.

The strategy has changed from a hand-me-down one, relying on drugs developed first for other diseases, to a rational plan designed specifically to treat WM patients.

“We would wait for our colleagues in the myeloma world or lymphoma world, CLL world, anywhere we could find them, to help us with the development of drugs,” said Steven Treon, MD, PhD, of the Dana-Farber Cancer Institute in Boston, Massachusetts.

“And, sometimes, this resulted in delays of many, many years before those therapeutics could be vetted out and eventually be handed down to the Waldenström investigators.”

At Lymphoma & Myeloma 2016, Dr Treon described the current therapy of WM and the impact of the discovery of MYD88 and CXCR4 on drug development and treatment choices in WM.

Rituximab-based therapy

WM is a disease that strongly expresses CD20, even though it’s a lymphoplasmacytic disease. So rituximab, given alone and in combination, has been, to date, the most common approach to treating WM.

As a single agent, rituximab produces a response in 25% to 40% of WM patients, but these are very seldom deep responses, Dr Treon noted.

So investigators combined rituximab with cyclophosphamide, nucleoside analogues, proteasome inhibitors, and bendamustine, which increased the overall response rate and depth of response.

This translated into improvement in time to progression, which is now 4 to 5 years with these combination therapies.

“Now, the problem is, when you borrow things from other people, you sometimes end up with problems you didn’t anticipate,” Dr Treon said.

One of the unanticipated side effects with rituximab in WM patients is the IgM flare, which occurs in 40% to 60% of WM patients.

Rituximab can also cause hypogammaglobulinemia, leading to infections, and results in a high intolerance rate, which tends to occur a few cycles into induction or maintenance therapy.

“In some cases,” Dr Treon advised, “a switch to ofatumumab, a fully human CD20 molecule, overcomes this intolerance.”

Nucleoside analogues, which used to be the mainstay of WM, have a high rate of transformation into a more aggressive lymphoma or acute myeloid leukemia.

Immunomodulatory drugs, particularly thalidomide, cause peripheral neuropathy greater than grade 2 in 60% of patients.

And a greater incidence of peripheral neuropathy is also observed with proteasome inhibitors, particularly bortezomib, in WM patients than in myeloma or mantle cell lymphoma patients.

“So this was at least the impetus for why we needed to develop novel approaches to treating this disease,” Dr Treon said.

MYD88 mutations

With whole-genome sequencing, investigators discovered that mutations in the MYD88 gene were highly prevalent in WM patients.

About 93% to 95% of WM patients have the L265P MYD88 mutation, and about 2% have the non-L265P MYD88 mutation.

MYD88 has prognostic significance in WM. Mutated MYD88 confers a better prognosis than unmutated MYD88.

MYD88 may also be important in predicting who will respond to drugs like ibrutinib.

CXCR4 mutations

Mutations in CXCR4 are the second most common mutation in WM.

Between 30% and 40% of WM patients have the WHIM-like CXCR4 C-tail mutations, which traffic the Waldenström’s cells to the bone marrow.

This mutation promotes drug resistance, including resistance to ibrutinib because of the enhanced AKT/ERK signaling.

Ibrutinib therapy

With this in mind, investigators set out to evaluate the potential of using a BTK inhibitor in relapsed/refractory WM patients and at the same time observe the genomics that might predict for response and resistance.

They enrolled 63 patients in the multicenter study. Patients had a median of 2 prior therapies (range, 1–9), and 60% had bone marrow involvement.

Patients received single-agent ibrutinib at a dose of 420 mg orally each day until disease progression or the development of unacceptable toxic side effects.

“By the time they came back 4 weeks later to be evaluated, many of them were already in a response,” Dr Treon said. “And what we saw, in fact, was almost immediate improvement in hemoglobin, something that we didn’t see with many of our trials before that.”

The best IgM response reduced levels from 3520 to 880 mg/dL (P<0.001) for the entire cohort.

The best hemoglobin response increased hemoglobin levels by about 3 points, from 10.5 to 13.8 g/dL (P<0.001).

The progression-free survival at 2 years was 69% and overall survival was 95%.

At 37 months, most patients who achieve a response experience a durable, ongoing response, according to an update presented at the IX International Workshop on Waldenstrom’s Macroglobulinemia.

And toxicities were “very much in line with what our colleagues have seen in other disease groups,” Dr Treon stated.

Response to ibrutinib by mutation status

The overall response rate was 90% for all patients, but there were differences according to mutation status.

Patients with no MYD88 mutation and no CXCR4 mutation had absolutely no major response.

Patients with a MYD88 mutation responded at an overall rate of 100% and had a major response rate of 91.7% if they did not have a CXCR4 mutation as well.

If they also had a WHIM-like CXCR4 mutation, the overall response rate was lower, at 85.7%, and the major response rate was 61.9%.

“It’s still something to be incredibly excited about—61.9% single-agent activity,” Dr Treon said.

Patients with a CXCR4 mutation also respond more slowly than those without the mutation.

This investigator-sponsored study led to the approval of ibrutinib in WM in the US as well as in Europe and Canada.

“And the point to make about this is that investigators can bring their data to the FDA, the EMA, even Canada, and it can make a difference,” Dr Treon said.

“We don’t always have to have company-sponsored registration studies to be able to make these kinds of advances.”

Ibrutinib in rituximab-refractory patients

A multicenter, phase 3 study of ibrutinib in rituximab-refractory patients was “almost a photocopy of our study,” Dr Treon said.

The main difference was that the patients were even sicker, having failed a median of 4 prior therapies.

Patients experienced rapid improvements in hemoglobin and IgM levels and had an overall response rate of 90%.

Patients with a CXCR4 mutation also tended to lag in terms of pace of hemoglobin improvement and reduction in IgM.

The study was just accepted for publication in Lancet Oncology.

IRAK inhibition

Investigators were puzzled by the paucity of complete responses with ibrutinib, and they found the IRAK pathway remained turned on in patients treated with ibrutinib.

So they took cells of patients treated for 6 months with ibrutinib and co-cultured them with ibrutinib and an IRAK inhibitor. They observed the induction of apoptosis.

Based on this finding, the investigators are manufacturing a very potent IRAK1 inhibitor (JH-X-119), which, when combined with ibrutinib, synergistically increases tumor-cell killing.

“And so one of the strategies we have going forward,” Dr Treon said, “is the ability to advance ibrutinib therapy in MYD88-mutated tumors by the addition of the IRAK inhibitor.”

Resistance to ibrutinib

Investigators have found multiple mutations in the C481 site in individual WM patients, which is where ibrutinib binds.

These mutations represent a minority of the clone, but they exert almost a fully clonal signature. The few patients with these mutations also have a CXCR4 mutation.

C481 mutations shift the signaling of cells in the presence of ibrutinib toward ERK, which is a very powerful survival pathway.

So investigators are examining whether an ERK inhibitor combined with ibrutinib can elicit synergistic killing of BTK-resistant cells.

Investigators have also been synthesizing potent HCK inhibitors, which might overcome BTK mutations by shutting down the ability to activate Bruton’s tyrosine kinase.

Other drugs being developed for WM include:

- Combinations with a proteasome inhibitor, such as ixazomib, dexamethasone, and rituximab

- Agents that target MYD88 signaling, such as the BTK inhibitors acalabrutinib and BGB-3111

- Agents that block CXCR4 receptor signaling, such as ulocuplumab

- The BCL2 inhibitor venetoclax

- The CD38-targeted agent daratumumab.

Current treatment strategies

Knowing a patient’s MYD88 and CXCR4 mutation status provides an opportunity to take a rational approach to treating individuals with WM, Dr Treon said.

For patients with mutated MYD88 and no CXCR4 mutation:

- If patients do not have bulky disease or contraindications, ibrutinib may be used if available.

- If patients have bulky disease, a combination of bendamustine and rituximab may be used.

- If patients have amyloidosis, a combination of bortezomib, dexamethasone, and rituximab is possible.

- If patients have IgM peripheral neuropathy, then rituximab with or without an alkylator is recommended.

For patients with mutated MYD88 and mutated CXCR4, the same treatments can be used as for patients with mutated MYD88 and unmutated CXCR4 with the same restrictions.

To achieve an immediate response for patients with a CXCR4 mutation, an alternative therapy to ibrutinib—such as the bendamustine, dexamethasone, rituximab combination—may be the best choice, because CXCR4-mutated individuals have a slower response to ibrutinib.

The problem arises with the MYD88-wild-type patients, because their survival is poorer than the MYD88-mutated patients.

“We still don’t know what’s wrong with these individuals,” Dr Treon said. “We don’t have any idea about what their basic genomic problems are.”

Bendamustine- or bortezomib-based therapy is effective in this population and can be considered.

In terms of salvage therapy, “the only thing to keep in mind is that if something worked the first time and you got at least a 2-year response with it, you can go back and consider it,” Dr Treon said.

Photo courtesy of DFCI

NEW YORK—Whole-genome sequencing has changed the entire approach to drug development for Waldenström’s macroglobulinemia (WM), according to a speaker at Lymphoma & Myeloma 2016.

The strategy has changed from a hand-me-down one, relying on drugs developed first for other diseases, to a rational plan designed specifically to treat WM patients.

“We would wait for our colleagues in the myeloma world or lymphoma world, CLL world, anywhere we could find them, to help us with the development of drugs,” said Steven Treon, MD, PhD, of the Dana-Farber Cancer Institute in Boston, Massachusetts.

“And, sometimes, this resulted in delays of many, many years before those therapeutics could be vetted out and eventually be handed down to the Waldenström investigators.”

At Lymphoma & Myeloma 2016, Dr Treon described the current therapy of WM and the impact of the discovery of MYD88 and CXCR4 on drug development and treatment choices in WM.

Rituximab-based therapy

WM is a disease that strongly expresses CD20, even though it’s a lymphoplasmacytic disease. So rituximab, given alone and in combination, has been, to date, the most common approach to treating WM.

As a single agent, rituximab produces a response in 25% to 40% of WM patients, but these are very seldom deep responses, Dr Treon noted.

So investigators combined rituximab with cyclophosphamide, nucleoside analogues, proteasome inhibitors, and bendamustine, which increased the overall response rate and depth of response.

This translated into improvement in time to progression, which is now 4 to 5 years with these combination therapies.

“Now, the problem is, when you borrow things from other people, you sometimes end up with problems you didn’t anticipate,” Dr Treon said.

One of the unanticipated side effects with rituximab in WM patients is the IgM flare, which occurs in 40% to 60% of WM patients.

Rituximab can also cause hypogammaglobulinema, leading to infections, and results in a high intolerance rate, which tends to occur a few cycles into induction or maintenance therapy.

“In some cases,” Dr Treon advised, “a switch to ofatumumab, a fully human CD20 molecule, overcomes this intolerance.”

Nucleoside analogues, which used to be the mainstay of WM, have a high rate of transformation into a more aggressive lymphoma or acute myeloid leukemia.

Immunomodulatory drugs, particularly thalidomide, cause peripheral neuropathy greater than grade 2 in 60% of patients.

And a greater incidence of peripheral neuropathy is also observed with proteasome inhibitors, particularly bortezomib, in WM patients than in myeloma or mantle cell lymphoma patients.

“So this was at least the impetus for why we needed to develop novel approaches to treating this disease,” Dr Treon said.

MYD88 mutations

With whole-genome sequencing, investigators discovered that mutations in the MYD88 gene were highly prevalent in WM patients.

About 93% to 95% of WM patients have the L265P MYD88 mutation, and about 2% have the non-L265P MYD88 mutation.

MYD88 has prognostic significance in WM. Mutated MYD88 confers a better prognosis than unmutated MYD88.

MYD88 may also be important in predicting who will respond to drugs like ibrutinib.

CXCR4 mutations

Mutations in CXCR4 are the second most common mutation in WM.

Between 30% and 40% of WM patients have the WHIM-like CXCR4 C-tail mutations, which traffic the Waldenström’s cells to the bone marrow.

This mutation promotes drug resistance, including resistance to ibrutinib because of the enhanced AKT/ERK signaling.

Ibrutinib therapy

With this in mind, investigators set out to evaluate the potential of using a BTK inhibitor in relapsed/refractory WM patients and at the same time observe the genomics that might predict for response and resistance.

They enrolled 63 patients in the multicenter study. Patients had a median of 2 prior therapies (range, 1–9), and 60% had bone marrow involvement.

Patients received single-agent ibrutinib at a dose of 420 mg orally each day until disease progression or the development of unacceptable toxic side effects.

“By the time they came back 4 weeks later to be evaluated, many of them were already in a response,” Dr Treon said. “And what we saw, in fact, was almost immediate improvement in hemoglobin, something that we didn’t see with many of our trials before that.”

The best IgM response reduced levels from 3520 to 880 mg/dL (P<0.001) for the entire cohort.

The best hemoglobin response increased hemoglobin levels 3 points, from 10.5 to 13.8 g/dL (P<0.001).

The progression-free survival at 2 years was 69% and overall survival was 95%.

At 37 months, most patients who achieve a response experience a durable, ongoing response, according to an update presented at the IX International Workshop on Waldenstrom’s Macroglobulinemia.

And toxicities were “very much in line with what our colleagues have seen in other disease groups,” Dr Treon stated.

Response to ibrutinib by mutation status

The overall response rate was 90% for all patients, but there were differences according to mutation status.

Patients with no MYD88 mutation and no CXCR4 mutation had absolutely no major response.

Patients with a MYD88 mutation responded at an overall rate of 100% and had a major response rate of 91.7% if they did not have a CXCR4 mutation as well.

If they also had a CXCR4WHIM mutation, the overall response rate was lower, at 85.7%, and the major response rate was 61.9%.

“It’s still something to be incredibly excited about—61.9% single-agent activity,” Dr Treon said.

Patients with a CXCR4 mutation also respond more slowly than those without the mutation.

This investigator-sponsored study led to the approval of ibrutinib in WM in the US as well as in Europe and Canada.

“And the point to make about this is that investigators can bring their data to the FDA, the EMA, even Canada, and it can make a difference,” Dr Treon said.

“We don’t always have to have company-sponsored registration studies to be able to make these kinds of advances.”

Ibrutinib in rituximab-refractory patients

A multicenter, phase 3 study of ibrutinib in rituximab-refractory patients was “almost a photocopy of our study,” Dr Treon said.

The main difference was that the patients were even sicker, having failed a median of 4 prior therapies.

Patients experienced rapid improvements in hemoglobin and IgM levels and had an overall response rate of 90%.

As in the earlier study, patients with a CXCR4 mutation tended to show slower hemoglobin improvement and slower IgM reduction.

The study was just accepted for publication in Lancet Oncology.

IRAK inhibition

Investigators were puzzled by the paucity of complete responses with ibrutinib, and they found the IRAK pathway remained turned on in patients treated with ibrutinib.

So they took cells from patients who had been treated with ibrutinib for 6 months and co-cultured them with ibrutinib and an IRAK inhibitor. They observed the induction of apoptosis.

Based on this finding, the investigators are manufacturing a very potent IRAK1 inhibitor (JH-X-119), which, when combined with ibrutinib, synergistically increases tumor-cell killing.

“And so one of the strategies we have going forward,” Dr Treon said, “is the ability to advance ibrutinib therapy in MYD88-mutated tumors by the addition of the IRAK inhibitor.”

Resistance to ibrutinib

In individual WM patients, investigators have found multiple mutations at the BTK C481 site, which is where ibrutinib binds.

These mutations represent a minority of the clone, but they exert an almost fully clonal signature. The few patients with these mutations also have a CXCR4 mutation.

C481 mutations shift the signaling of cells in the presence of ibrutinib toward ERK, which is a very powerful survival pathway.

So investigators are examining whether an ERK inhibitor combined with ibrutinib can elicit synergistic killing of ibrutinib-resistant cells carrying BTK mutations.

Investigators have also been synthesizing potent HCK inhibitors, which might overcome BTK mutations by shutting down the ability to activate Bruton’s tyrosine kinase.

Other drugs being developed for WM include:

- Combinations with a proteasome inhibitor, such as ixazomib, dexamethasone, and rituximab

- Agents that target MYD88 signaling, such as the BTK inhibitors acalabrutinib and BGB-3111

- Agents that block CXCR4 receptor signaling, such as ulocuplumab

- The BCL2 inhibitor venetoclax

- The CD38-targeted agent daratumumab.

Current treatment strategies

Knowing a patient’s MYD88 and CXCR4 mutation status provides an opportunity to take a rational approach to treating individuals with WM, Dr Treon said.

For patients with mutated MYD88 and no CXCR4 mutation:

- If patients do not have bulky disease or contraindications, ibrutinib may be used if available.

- If patients have bulky disease, a combination of bendamustine and rituximab may be used.

- If patients have amyloidosis, a combination of bortezomib, dexamethasone, and rituximab is possible.

- If patients have IgM peripheral neuropathy, then rituximab with or without an alkylator is recommended.

For patients with mutated MYD88 and mutated CXCR4, the same treatments can be used as for patients with mutated MYD88 and unmutated CXCR4 with the same restrictions.

To achieve an immediate response for patients with a CXCR4 mutation, an alternative therapy to ibrutinib—such as the bendamustine, dexamethasone, rituximab combination—may be the best choice, because CXCR4-mutated individuals have a slower response to ibrutinib.

The problem arises with the MYD88-wild-type patients, because their survival is poorer than that of the MYD88-mutated patients.

“We still don’t know what’s wrong with these individuals,” Dr Treon said. “We don’t have any idea about what their basic genomic problems are.”

Bendamustine- or bortezomib-based therapy is effective in this population and can be considered.

In terms of salvage therapy, “the only thing to keep in mind is that if something worked the first time and you got at least a 2-year response with it, you can go back and consider it,” Dr Treon said.


NEW YORK—Whole-genome sequencing has changed the entire approach to drug development for Waldenström’s macroglobulinemia (WM), according to a speaker at Lymphoma & Myeloma 2016.

The strategy has changed from a hand-me-down one, relying on drugs developed first for other diseases, to a rational plan designed specifically to treat WM patients.

“We would wait for our colleagues in the myeloma world or lymphoma world, CLL world, anywhere we could find them, to help us with the development of drugs,” said Steven Treon, MD, PhD, of the Dana-Farber Cancer Institute in Boston, Massachusetts.

“And, sometimes, this resulted in delays of many, many years before those therapeutics could be vetted out and eventually be handed down to the Waldenström investigators.”

At Lymphoma & Myeloma 2016, Dr Treon described the current therapy of WM and the impact of the discovery of MYD88 and CXCR4 on drug development and treatment choices in WM.

Rituximab-based therapy

WM is a disease that strongly expresses CD20, even though it’s a lymphoplasmacytic disease. So rituximab, given alone and in combination, has been, to date, the most common approach to treating WM.

As a single agent, rituximab produces a response in 25% to 40% of WM patients, but these are very seldom deep responses, Dr Treon noted.

So investigators combined rituximab with cyclophosphamide, nucleoside analogues, proteasome inhibitors, and bendamustine, which increased the overall response rate and depth of response.

This translated into improvement in time to progression, which is now 4 to 5 years with these combination therapies.

“Now, the problem is, when you borrow things from other people, you sometimes end up with problems you didn’t anticipate,” Dr Treon said.

One of the unanticipated side effects with rituximab in WM patients is the IgM flare, which occurs in 40% to 60% of WM patients.

Rituximab can also cause hypogammaglobulinemia, leading to infections, and it has a high rate of intolerance, which tends to emerge a few cycles into induction or maintenance therapy.

“In some cases,” Dr Treon advised, “a switch to ofatumumab, a fully human CD20 molecule, overcomes this intolerance.”

Nucleoside analogues, which used to be the mainstay of WM treatment, are associated with a high rate of transformation into a more aggressive lymphoma or acute myeloid leukemia.

Immunomodulatory drugs, particularly thalidomide, cause peripheral neuropathy greater than grade 2 in 60% of patients.

And a greater incidence of peripheral neuropathy is also observed with proteasome inhibitors, particularly bortezomib, in WM patients than in myeloma or mantle cell lymphoma patients.

“So this was at least the impetus for why we needed to develop novel approaches to treating this disease,” Dr Treon said.

MYD88 mutations

With whole-genome sequencing, investigators discovered that mutations in the MYD88 gene were highly prevalent in WM patients.

About 93% to 95% of WM patients have the L265P MYD88 mutation, and about 2% have a non-L265P MYD88 mutation.

MYD88 mutation status has prognostic significance in WM. Mutated MYD88 confers a better prognosis than unmutated MYD88.

MYD88 may also be important in predicting who will respond to drugs like ibrutinib.

CXCR4 mutations

CXCR4 mutations are the second most common mutations in WM.

Between 30% and 40% of WM patients have the WHIM-like CXCR4 C-tail mutations, which traffic the Waldenström’s cells to the bone marrow.

These mutations promote drug resistance, including resistance to ibrutinib, because of enhanced AKT/ERK signaling.
