Study links low diastolic blood pressure to myocardial damage, coronary heart disease

Low diastolic blood pressure (DBP) was significantly associated with myocardial injury and incident coronary heart disease, especially when the systolic blood pressure was 120 mm Hg or higher, investigators reported.

In a large observational study, DBP below 60 mm Hg more than doubled the odds of high-sensitivity cardiac troponin-T levels equaling or exceeding 14 ng per L and increased the risk of incident coronary heart disease (CHD) by about 50%, compared with a DBP of 80 to 89 mm Hg. Associations were strongest when baseline systolic blood pressure was at least 120 mm Hg, signifying elevated pulse pressure, reported Dr. John McEvoy of the Ciccarone Center for the Prevention of Heart Disease, Johns Hopkins University, Baltimore, and associates (J Am Coll Cardiol 2016;68[16]:1713–22).

Their study included 11,565 individuals tracked for 21 years through the Atherosclerosis Risk in Communities Cohort, an observational population-based study of adults from North Carolina, Mississippi, Minnesota, and Maryland. The researchers excluded participants with known baseline cardiovascular disease or heart failure. High-sensitivity cardiac troponin-T levels were measured at three time points: between 1990 and 1992, between 1996 and 1998, and between 2011 and 2013. Participants averaged 57 years old at enrollment, 57% were female, and 25% were black (J Am Coll Cardiol. 2016 Oct 18. doi: 10.1016/j.jacc.2016.07.754).

Compared with baseline DBP of 80 to 89 mm Hg, DBP under 60 mm Hg was associated with 2.2-fold greater odds (P = .01) of high-sensitivity cardiac troponin-T levels equal to or exceeding 14 ng per L during the same visit – indicating prevalent myocardial damage – even after controlling for race, sex, body mass index, smoking and alcohol use, triglyceride and cholesterol levels, diabetes, glomerular filtration rate, and use of antihypertensives and lipid-lowering drugs, said the researchers. The odds of myocardial damage remained increased even when DBP was 60 to 69 mm Hg (odds ratio, 1.5; P = .05). Low DBP also was associated with myocardial damage at any given systolic blood pressure.

Furthermore, low DBP significantly increased the risk of progressively worsening myocardial damage, as indicated by a rising annual change in high-sensitivity cardiac troponin-T levels over 6 years. The association was significant as long as DBP was under 80 mm Hg, but was strongest when DBP was less than 60 mm Hg. Diastolic blood pressure under 60 mm Hg also significantly increased the chances of incident CHD and death, but not stroke.

Low DBP was most strongly linked to subclinical myocardial damage and incident CHD when systolic blood pressure was at least 120 mm Hg, indicating elevated pulse pressure, the researchers reported. Systolic pressure is “the main determinant of cardiac afterload and, thus, a primary driver of myocardial energy requirements,” while low DBP reduces myocardial energy supply, they noted. Therefore, high pulse pressure would lead to the greatest mismatch between myocardial energy demand and supply.
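The pulse pressure arithmetic behind this finding can be illustrated with a minimal sketch. Pulse pressure is the systolic reading minus the diastolic reading; the 120 mm Hg and 60 mm Hg cutoffs are the ones quoted in this article, while the function names and the sample readings are hypothetical and are not taken from the study.

```python
def pulse_pressure(sbp_mm_hg: float, dbp_mm_hg: float) -> float:
    """Pulse pressure in mm Hg: systolic minus diastolic pressure."""
    return sbp_mm_hg - dbp_mm_hg


def in_highest_risk_pattern(sbp_mm_hg: float, dbp_mm_hg: float) -> bool:
    """True for the pattern the article describes as most strongly linked
    to myocardial damage: DBP under 60 mm Hg with SBP of at least 120 mm Hg.
    (Illustrative helper, not the investigators' statistical model.)"""
    return dbp_mm_hg < 60 and sbp_mm_hg >= 120


# Hypothetical readings, chosen only to show the arithmetic.
for sbp, dbp in [(120, 59), (150, 55), (110, 55), (130, 85)]:
    print(sbp, dbp, pulse_pressure(sbp, dbp), in_highest_risk_pattern(sbp, dbp))
```

Any reading that meets both cutoffs necessarily has a pulse pressure above 60 mm Hg, which is why the article treats the combination as a marker of elevated pulse pressure.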

“Among patients being treated to SBP goals of 140 mm Hg or lower, attention may need to be paid not only to SBP, but also, importantly, to achieved DBP. Diastolic and systolic BP are inextricably linked, and our results highlighted the importance of not ignoring the former and focusing only on the latter, instead emphasizing the need to consider both in the optimal treatment of adults with hypertension,” the researchers wrote.

The study was supported by the National Institutes of Health/National Institute of Diabetes and Digestive and Kidney Diseases and by the National Heart, Lung, and Blood Institute. Roche Diagnostics provided reagents for the cardiac troponin assays. Dr. McEvoy had no disclosures. One author disclosed ties to Roche; one author disclosed ties to Roche, Abbott Diagnostics, and several other relevant companies; and two authors are coinvestigators on a provisional patent filed by Roche for use of biomarkers in predicting heart failure. The other four authors had no disclosures.

The average age in the study by McEvoy et al. was 57 years. One might anticipate that in an older population, the side effects from lower BPs [blood pressures] due to drug therapy such as hypotension or syncope would be greater, and the potential for adverse cardiovascular events due to a J-curve would be substantially increased compared with what was seen in the present study. Similarly, an exacerbated potential for lower DBP to be harmful might be expected in patients with established coronary artery disease.

The well done study ... shows that lower may not always be better with respect to blood pressure control and, along with other accumulating evidence, strongly suggests careful thought before pushing blood pressure control below current guideline targets, especially if the diastolic blood pressure falls below 60 mm Hg while the pulse pressure is [greater than] 60 mm Hg.

Deepak L. Bhatt, MD, MPH, is at Brigham and Women’s Hospital Heart & Vascular Center, Boston. He disclosed ties to Amarin, Amgen, AstraZeneca, Bristol-Myers Squibb, Eisai, and a number of other pharmaceutical and medical education companies. His comments are from an accompanying editorial (J Am Coll Cardiol. 2016 Oct 18;68[16]:1723-1726).

From the Journal of the American College of Cardiology

Key clinical point: Low diastolic blood pressure is associated with myocardial injury and incident coronary heart disease.

Major finding: Diastolic blood pressure below 60 mm Hg more than doubled the odds of high-sensitivity cardiac troponin-T levels equaling or exceeding 14 ng per L and increased the risk of incident coronary heart disease by about 50%, compared with diastolic blood pressure of 80 to 89 mm Hg. Associations were strongest when pulse pressure was elevated (above 60 mm Hg).

Data source: A prospective observational study of 11,565 adults followed for 21 years as part of the Atherosclerosis Risk in Communities cohort.

Disclosures: The study was supported by the National Institutes of Health/National Institute of Diabetes and Digestive and Kidney Diseases and by the National Heart, Lung, and Blood Institute. Roche Diagnostics provided reagents for the cardiac troponin assays. Dr. McEvoy had no disclosures. One author disclosed ties to Roche; one author disclosed ties to Roche, Abbott Diagnostics, and several other relevant companies; and two authors are coinvestigators on a provisional patent filed by Roche for use of biomarkers in predicting heart failure. The other four authors had no disclosures.

CDC study finds worrisome trends in hospital antibiotic use

U.S. hospitals have not cut overall antibiotic use and have significantly increased the use of several broad-spectrum agents, according to a first-in-kind analysis of national hospital administrative data.

“We identified significant changes in specific antibiotic classes and regional variation that may have important implications for reducing antibiotic-resistant infections,” James Baggs, PhD, and colleagues from the Centers for Disease Control and Prevention, Atlanta, reported in the study, published online on September 19 in JAMA Internal Medicine.

The retrospective study included approximately 300 acute care hospitals in the Truven Health MarketScan Hospital Drug Database, which covered 34 million pediatric and adult patient discharges equating to 166 million patient-days. In all, 55% of patients received at least one antibiotic dose while in the hospital, and for every 1,000 patient-days, 755 days included antibiotic therapy, the investigators said. Overall antibiotic use rose during the study period by an average of only 5.6 days of therapy per 1,000 patient-days, which was not statistically significant.

However, the use of third- and fourth-generation cephalosporins rose by a mean of 10.3 days of therapy per 1,000 patient-days (95% confidence interval, 3.1 to 17.5), and hospitals also used significantly more macrolides (mean rise, 4.8 days of therapy per 1,000 patient-days; 95% confidence interval, 2.0 to 7.6), glycopeptides (22.4; 17.5 to 27.3), β-lactam/β-lactamase inhibitor combinations (18.0; 13.3 to 22.6), carbapenems (7.4; 4.6 to 10.2), and tetracyclines (3.3; 2.0 to 4.7).

Inpatient antibiotic use also varied significantly by region, the investigators said. Hospitals in rural areas used about 16 more days of antibiotic therapy per 1,000 patient-days compared with those in urban areas. Hospitals in Mid-Atlantic states (New Jersey, New York, Pennsylvania) and Pacific Coast states (Alaska, California, Hawaii, Oregon, and Washington) used the least antibiotics (649 and 665 days per 1,000 patient-days, respectively), while Southwest Central states (Arkansas, Louisiana, Oklahoma, and Texas) used the most (823 days).
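To put the regional figures on a common footing, the relative gaps can be worked out directly from the three rates quoted above. The following is only an illustrative sketch: the rates are those reported in this article, while the helper name `percent_lower` and the dictionary layout are assumptions made for the example, not part of the CDC analysis.

```python
def percent_lower(low_rate: float, high_rate: float) -> float:
    """How much lower low_rate is than high_rate, as a percentage of high_rate."""
    return (high_rate - low_rate) / high_rate * 100


# Days of antibiotic therapy per 1,000 patient-days, as quoted in the article.
rates = {
    "Mid-Atlantic": 649,
    "Pacific Coast": 665,
    "Southwest Central": 823,
}

highest = max(rates.values())
for region, rate in rates.items():
    gap = percent_lower(rate, highest)
    print(f"{region}: {rate} days per 1,000 patient-days, "
          f"{gap:.1f}% below the highest-use region")
```

By this arithmetic, the two lowest-use regions sit roughly one-fifth below the highest-use region.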

The CDC provided funding for the study. The researchers had no disclosures.

The dramatic variation in antibiotic prescribing across individual clinicians, regions in the United States, and internationally indicates great potential for improvement. ... In the article by Baggs et al, inpatient antibiotic prescribing in some regions of the United States is roughly 20% lower than other regions. On a per capita basis, Swedes consume less than half the antibiotics per capita than Americans.

Growing patterns of antibiotic resistance have driven calls for more physician education and new diagnostics. While these efforts may help, it is important to recognize that many emotionally salient factors are driving physicians to inappropriately prescribe antibiotics. Future interventions need to counterbalance these factors using tools from behavioral science to reduce the use of inappropriate antibiotics.

Ateev Mehrotra, MD, MPH, and Jeffrey A. Linder, MD, MPH, are at Harvard University, Boston. They had no disclosures. These comments are from an editorial that accompanied the study (JAMA Intern Med. 2016 Sept 19. doi: 10.1001/jamainternmed.2016.6254).

FROM JAMA INTERNAL MEDICINE

Key clinical point: Inpatient antibiotic use did not decrease between 2006 and 2012, and the use of several broad-spectrum agents rose significantly.

Major finding: Hospitals significantly decreased their use of fluoroquinolones and first- and second-generation cephalosporins, but these trends were offset by significant rises in the use of vancomycin, carbapenems, third- and fourth-generation cephalosporins, and β-lactam/β-lactamase inhibitor combinations.

Data source: A retrospective study of administrative hospital discharge data for about 300 US hospitals from 2006 through 2012.

Disclosures: The Centers for Disease Control and Prevention provided funding. The researchers had no disclosures.

A dermatologic little list

The following was presented to the Pennsylvania Academy of Dermatology at its annual meeting in Bedford Springs, Pa. The verses were sung to the tune of “I’ve Got a Little List” from Gilbert and Sullivan’s “Mikado.”

For those unsure of how the words fit, the editors of this periodical are considering a lottery. Winners will get an MP3 of the author singing the lyrics. Losers will get two copies.

I’ve Got a Little (Dermatologic) List

One day your staff informs you that a patient who’s called up

Has asked that you call back –

At once! Call him right back! –

But to your consternation you discover that you lack

The telephonic knack

You lack the call-back knack

For the man who wants to be assured he knows that he’s been called

And so he’s ordered voice mail – but it hasn’t been installed

Or else you hear a message that suffuses you with gloom –

Her voice mail works just dandy, but it’s full and got no room

Or else he’s a millennial who doesn’t use the phone

What right has he to moan?

We’ll just leave him alone!

Among the many irritants providing me with grist

The naive integumentalist

Must be there on my list

The one who’s sure that any scaly rash that comes among us

Is certainly a fungus

What else? A yeast or fungus!

Yet doles out betamethasone for every scaly sole

And smears all roundish eczema with ketoconazole

And knows they can’t be bedbugs if the bites don’t come in three

And rules out pityriasis because there is no tree!

And calls each itch that patients have inscribed into a furrow

A scabietic burrow –

An idiocy thorough!

Returning now to patients, I really must insist

To put some on my list

(In fact, they top the list!)

They’re the people who have generated their own laundry list

Or else at least the gist –

(Their list contains the gist) –

The redness of my pimples now takes much too long to fade

I have a strange sensation just below my shoulder blade

I get these funny white bumps when my family travels south

And intermittent cracking at the corners of my mouth

I have a newish brown mark on the right side of my nose

And frequent scaling in between my first and second toes

Now let me double check my list, because you see I fear

That I’ll leave something crucial out – now that I’ve got you here!

This armpit mark’s irregular – you see, there is a stipple

And new light yellow bumps have just appeared around my nipple

The red splotch underneath my breast – my doctor says it’s yeast

I have this dark spot. See my navel? Go one inch northeast

Oh, wait, there is a skin tag on the right side of my neck

And now, as long as I am here, let’s do a body check ...

And yes, there is just one more thing I must ask you about

I am concerned – in fact I’m sure – my hair is falling out!

Now that we are concluding, we should surely not forget

The ones not on the list

Forget about the list!

Those patients every one of us is very glad we’ve met

And happy to assist

The ones who would be missed

Those lovely people each of us is gratified to serve

Who often praise our efforts rather more than we deserve

And anyhow the tables turn, and so sooner or later

We docs will take our turn as patients, crunched to bits of data ...

I hope my cranky litany has served to entertain ya

So thank you for inviting me –

Good morning, Pennsylvania!

Dr. Rockoff practices dermatology in Brookline, Mass., and is a longtime contributor to Dermatology News. He serves on the clinical faculty at Tufts University, Boston, and has taught senior medical students and other trainees for 30 years. His second book, “Act Like a Doctor, Think Like a Patient,” is now available at amazon.com and barnesandnoble.com. Write to him at [email protected].

David Henry's JCSO podcast, October 2016

In the October podcast for The Journal of Community and Supportive Oncology, the Editor-in-Chief, Dr David Henry, discusses two Original Reports: one on toxicity analysis of docetaxel, cisplatin, and 5-fluorouracil neoadjuvant chemotherapy in Indian patients with head and neck cancers, and one on the impact of a literacy-sensitive intervention on CRC screening knowledge, attitudes, and intention to screen. He also discusses an editorial by JCSO Editor Jame Abraham on lessons learned from using CDK 4/6 inhibitors to treat metastatic breast cancer. Also up for discussion are the approval of cabozantinib for renal cell carcinoma and two Case Reports, one on central nervous system manifestations of multiple myeloma and one on primary chest-wall leiomyosarcoma. Rounding out the discussion are two featured articles, one on new therapies for urologic cancers and another on a step-by-step guide for doctors who want to take to the Twittersphere.

Listen to the podcast below.

Plecanatide safe, effective for chronic constipation

LAS VEGAS – Two new studies suggest that the peptide plecanatide is safe and effective in the treatment of chronic idiopathic constipation. The drug had a low rate of diarrhea, about 7.1%.

The research, presented in two posters at the annual meeting of the American College of Gastroenterology, includes a pooled efficacy and safety analysis from two previous phase III clinical trials, as well as an open-label extension study.

“Many patients are still very dissatisfied” with existing drugs, said Satish Rao, MD, PhD, director of the Digestive Health Center at the Medical College of Georgia, Augusta, who presented one of the posters.

The drug is a derivative of uroguanylin, a peptide found in the gastrointestinal tract. Like the native peptide, plecanatide stimulates digestive fluid movement in the proximal small intestine, which in turn encourages regular bowel function. “I think because it is so much closer to the innate uroguanylin molecule, it will have better tolerability,” said Dr. Rao in an interview.

The open-label study followed 2,370 patients who received 3-mg or 6-mg doses of plecanatide once daily for up to 72 weeks. The most common adverse events were diarrhea (7.1%) and urinary tract infection (2.2%), and these were the only adverse events that occurred at a frequency above 2%.

About 5% of patients discontinued because of adverse events, including 3.1% because of diarrhea. Patients were also asked to score their satisfaction with the drug and their willingness to continue on it; the median responses corresponded to “quite satisfied” and “quite likely to continue.”

The other study was a pooled analysis of two previously presented double-blind, placebo-controlled phase III trials of plecanatide. These studies included 2,791 patients with chronic idiopathic constipation who were treated over the course of 12 weeks, with 3- and 6-mg doses. Both groups showed significant improvements in the rate of durable overall complete spontaneous bowel movements: 20.5% in the 3-mg group and 19.8% in the 6-mg group, compared with 11.5% in the placebo group (P less than .001 for both comparisons).

Patients experienced improvements as early as the first week of treatment (31.6% in the 3-mg group versus 16.1% in placebo, P less than .001), and improvements were maintained through the end of the treatment period. There were also significant improvements in secondary endpoints, including stool consistency, straining, and bloating.

Adverse events occurred in 30.6% of subjects taking the 3-mg dose and 31.1% of those taking 6 mg, compared with 28.7% in the placebo group. As with the long-term study, the most common adverse event was diarrhea (4.6% in 3 mg and 5.1% in 6 mg, compared with 1.3% in placebo). Of those in the 3-mg group, 4.1% discontinued, as did 4.5% in the 6-mg group, and 2.2% in the placebo group.

“I think these are very exciting results. They clearly show a benefit of plecanatide in patients with chronic constipation. These are really methodologically rigorous, large clinical trials that should provide doctors and patients with confidence that the drug will provide benefits,” commented William D. Chey, MD, a professor of medicine at the University of Michigan, Ann Arbor.

Plecanatide is also being developed for irritable bowel syndrome with constipation. Recruitment has finished for two phase III clinical trials in that indication, which Synergy expects to report on later this year.

Dr. Rao is a member of an advisory committee for Forest Laboratories, Hollister, In Control Medical, Ironwood, Sucampo, and Vibrant. Dr. Chey is a consultant for Ironwood and Synergy.

AT ACG 2016

Key clinical point: Plecanatide is safe and effective in the treatment of idiopathic chronic constipation.

Major finding: A long-term study and an analysis of two phase III clinical trials show the drug is effective at reducing constipation and has low rates of adverse events and discontinuation.

Data source: Open-label extension trial and randomized, placebo-controlled, clinical trials.

Disclosures: Dr. Rao is a member of an advisory committee for Forest Laboratories, Hollister, In Control Medical, Ironwood, Sucampo, and Vibrant. Dr. Chey is a consultant for Ironwood and Synergy.

Lyme disease spirochete helps babesiosis gain a foothold

BOSTON – The spirochete that causes Lyme disease in humans may be lending a helping hand to the weaker protozoan that causes babesiosis, escalating the rate of human babesiosis cases in regions where both are endemic.

Peter Krause, MD, a research scientist in epidemiology, medicine, and pediatrics at Yale University School of Public Health, New Haven, Conn., reviewed what’s known about babesiosis–Lyme disease coinfections at the annual meeting of the American Society for Microbiology.

Understanding the entire cycle is necessary, he said, because the effects of coinfection differ depending on the stage of the cycle and on the coinfecting pathogens.

Over the course of many years, Dr. Krause and his collaborators have used an interdisciplinary, multi-modal approach to try to understand the interplay between these pathogens, their hosts, and environmental, demographic, and ecologic factors.

One arm of their research has taken them to the lab, where they have modeled coinfection and transmission of Borrelia burgdorferi and Babesia microti from their reservoir host, the white-footed mouse (Peromyscus leucopus), to the vector, the deer tick (Ixodes scapularis), which can transmit both diseases to humans.

In an experimental design that mimicked the natural reservoir-vector ecology, Dr. Krause and his collaborators first infected mice using 5 to 10 nymphal ticks, approximating the average number of ticks that feed on an individual mouse in the wild. The researchers then tracked the effect of coinfection on transmission of each pathogen to ticks during the larval feeds, finding that B. burgdorferi increased B. microti parasitemia in coinfected mice. Coinfection also increased B. microti transmission from mice to ticks, an effect that occurs at least partly because of the increased parasitemia, Dr. Krause said.

The downstream effect on humans is to increase the risk of babesiosis for those who live in regions where both B. microti and B. burgdorferi are endemic, Dr. Krause said.

B. microti is less “ecologically fit” than B. burgdorferi, Dr. Krause said, noting that there are more ticks and humans infected with the latter, as well as more reservoir mice carrying B. burgdorferi. Also, the rate of geographic expansion is more rapid for B. burgdorferi. “B. microti is only endemic in areas where B. burgdorferi is already endemic; it may not be ‘fit’ enough to establish endemic sites on its own,” Dr. Krause said.

The increased rate of B. microti transmission via ticks from mice, if the mice are coinfected with B. burgdorferi, may help explain the greater-than-expected rate of babesiosis in humans in areas of New England where coinfection is common. “This paradox might be explained by the enhancement of B. microti survival and spread by the coinfecting presence of Borrelia burgdorferi,” Dr. Krause said.

This naturalistic experiment has ecological implications in terms of the human impact as well: “Coinfection may help enhance geographic spread of B. microti to new areas,” Dr. Krause said.

Clinicians in geographic areas where both pathogens are endemic should maintain a high level of suspicion for coinfection, especially for the most ill patients. “Anaplasmosis and/or babesiosis coinfection increases the severity of Lyme disease,” Dr. Krause said. “Health care workers should consider anaplasmosis and/or babesiosis coinfection in Lyme disease patients who have more severe illness or who do not respond to antibiotic therapy.”

Understanding the complex interspecies interplay will be increasingly important as more cases of tick-borne illness are seen, Dr. Krause concluded. “Research on coinfections acquired from Ixodes scapularis has just begun.”

Dr. Krause reported no relevant conflicts of interest.

On Twitter @karioakes

BOSTON – The spirochete that causes Lyme disease in humans may be lending a helping hand to the weaker protozoan that causes babesiosis, escalating the rate of human babesiosis cases in regions where both are endemic.

Peter Krause, MD, a research scientist in epidemiology, medicine, and pediatrics at Yale University School of Public Health, New Haven, Conn., reviewed what’s known about babesiosis–Lyme disease coinfections at the annual meeting of the American Society for Microbiology.

Understanding the entire cycle is necessary, he said, because the effects of coinfection will be different depending on the stage of the cycle, and upon the coinfection pathogens.

Over the course of many years, Dr. Krause and his collaborators have used an interdisciplinary, multi-modal approach to try to understand the interplay between these pathogens, their hosts, and environmental, demographic, and ecologic factors.

One arm of their research has taken them to the lab, where they have modeled coinfection and transmission of Borrelia burgdorferi and Babesia microti from their reservoir host, the white-footed mouse (Peromyscus leucopus), to the vector, the deer tick (Ixodes scapularis), which can transmit both diseases to humans.

In an experimental design that mimicked the natural reservoir-vector ecology, Dr. Krause and his collaborators first infected mice with 5 to 10 nymphal ticks, to approximate the average number of ticks that feed on an individual mouse in the wild. The researchers then tracked the effect of coinfection on transmission of each pathogen to ticks during the larval feeds, finding that B. burgdorferi increased B. microti parasitemia in mice who were coinfected. Coinfection also increases B. microti transmission from mice to ticks. This effect happens at least partly because of the increased parasitemia, Dr. Krause said.

The downstream effect on humans is to increase the risk of babesiosis for those who live in regions where both B. microti and B. burgdorferi are endemic, Dr. Krause said.

B. microti is less “ecologically fit” than B. burgdorferi, Dr. Krause said, noting that there are more ticks and humans infected with the latter, as well as more reservoir mice carrying B. burgdorferi. Also, the rate of geographic expansion is more rapid for B. burgdorferi. “B. microti is only endemic in areas where B. burgdorferi is already endemic; it may not be ‘fit’ enough to establish endemic sites on its own,” Dr. Krause said.

The increased rate of B. microti transmission via ticks from mice, if the mice are coinfected with B. burgdorferi, may help explain the greater-than-expected rate of babesiosis in humans in areas of New England where coinfection is common. “This paradox might be explained by the enhancement of B. microti survival and spread by the coinfecting presence of Borrelia burgdorferi,” Dr. Krause said.

This naturalistic experiment has ecological implications in terms of the human impact as well: “Coinfection may help enhance geographic spread of B. microti to new areas,” Dr. Krause said.

Clinicians in geographic areas where both pathogens are endemic should maintain a high level of suspicion for coinfection, especially for the most ill patients. “Anaplasmosis and/or babesiosis coinfection increases the severity of Lyme disease,” Dr. Krause said. “Health care workers should consider anaplasmosis and/or babesiosis coinfection in Lyme disease patients who have more severe illness or who do not respond to antibiotic therapy.”

Understanding the complex interspecies interplay will be increasingly important as more cases of tick-borne illness are seen, Dr. Krause concluded. “Research on coinfections acquired from Ixodes scapularis has just begun.”

Dr. Krause reported no relevant conflicts of interest.

[email protected]

On Twitter @karioakes

BOSTON – The spirochete that causes Lyme disease in humans may be lending a helping hand to the weaker protozoan that causes babesiosis, escalating the rate of human babesiosis cases in regions where both are endemic.

Peter Krause, MD, a research scientist in epidemiology, medicine, and pediatrics at Yale University School of Public Health, New Haven, Conn., reviewed what’s known about babesiosis–Lyme disease coinfections at the annual meeting of the American Society for Microbiology.

Understanding the entire cycle is necessary, he said, because the effects of coinfection will be different depending on the stage of the cycle, and upon the coinfection pathogens.

Over the course of many years, Dr. Krause and his collaborators have used an interdisciplinary, multi-modal approach to try to understand the interplay between these pathogens, their hosts, and environmental, demographic, and ecologic factors.

One arm of their research has taken them to the lab, where they have modeled coinfection and transmission of Borrelia burgdorferi and Babesia microti from their reservoir host, the white-footed mouse (Peromyscus leucopus), to the vector, the deer tick (Ixodes scapularis), which can transmit both diseases to humans.

In an experimental design that mimicked the natural reservoir-vector ecology, Dr. Krause and his collaborators first infected mice with 5 to 10 nymphal ticks, to approximate the average number of ticks that feed on an individual mouse in the wild. The researchers then tracked the effect of coinfection on transmission of each pathogen to ticks during the larval feeds, finding that B. burgdorferi increased B. microti parasitemia in mice who were coinfected. Coinfection also increases B. microti transmission from mice to ticks. This effect happens at least partly because of the increased parasitemia, Dr. Krause said.

The downstream effect on humans is to increase the risk of babesiosis for those who live in regions where both B. microti and B. burgdorferi are endemic, Dr. Krause said.

B. microti is less “ecologically fit” than B. burgdorferi, Dr. Krause said, noting that there are more ticks and humans infected with the latter, as well as more reservoir mice carrying B. burgdorferi. Also, the rate of geographic expansion is more rapid for B. burgdorferi. “B. microti is only endemic in areas where B. burgdorferi is already endemic; it may not be ‘fit’ enough to establish endemic sites on its own,” Dr. Krause said.

The increased rate of B. microti transmission via ticks from mice, if the mice are coinfected with B. burgdorferi, may help explain the greater-than-expected rate of babesiosis in humans in areas of New England where coinfection is common. “This paradox might be explained by the enhancement of B. microti survival and spread by the coinfecting presence of Borrelia burgdorferi,” Dr. Krause said.

The findings of this naturalistic experiment also have ecological implications for human health: “Coinfection may help enhance geographic spread of B. microti to new areas,” Dr. Krause said.

Clinicians in geographic areas where both pathogens are endemic should maintain a high level of suspicion for coinfection, especially for the most ill patients. “Anaplasmosis and/or babesiosis coinfection increases the severity of Lyme disease,” Dr. Krause said. “Health care workers should consider anaplasmosis and/or babesiosis coinfection in Lyme disease patients who have more severe illness or who do not respond to antibiotic therapy.”

Understanding the complex interspecies interplay will be increasingly important as more cases of tick-borne illness are seen, Dr. Krause concluded. “Research on coinfections acquired from Ixodes scapularis has just begun.”

Dr. Krause reported no relevant conflicts of interest.

On Twitter @karioakes

EXPERT ANALYSIS FROM ASM 2016

HIV hospitalizations continue to decline

The total number of HIV hospitalizations fell by a third during 2000-2013, even though the number of people living with HIV increased by more than 50%, according to an investigation by the Agency for Healthcare Research and Quality.

“To some extent, the considerable reduction in hospital utilization by persons with HIV disease may be attributed to the diffusion of new antiretroviral medications and the enhanced ability of clinicians to control viral replication,” wrote investigator Fred Hellinger, PhD, of AHRQ’s Center for Delivery, Organization, and Markets (Med Care. 2016 Jun;54[6]:639-44).

Dr. Hellinger used his agency’s State Inpatient Database to collect data on all HIV-related hospital admissions from California, Florida, New Jersey, New York, and South Carolina during 2000-2013. Overall, people with HIV were 64% less likely to be hospitalized in 2013 than they were in 2000; there was also a slight drop in length of stay.

Meanwhile, the average age of hospitalized HIV patients has risen from 41 to 49 years, and the average number of diagnoses from 6 to more than 12. That’s in part because HIV patients are living longer, and “older patients are generally sicker and have more chronic illnesses ... As HIV patients age, they are being hospitalized for conditions that are not closely related to HIV infection,” Dr. Hellinger said.

“Indeed, the principal diagnosis for almost two-thirds of the HIV patients hospitalized in 2013 in our sample was not HIV infection, and as time passes, the mix of diagnoses recorded for hospitalized patients with HIV is likely to resemble the mix of diagnoses found in the general population of hospitalized patients,” he wrote.

U.S. HIV spending continues to rise. Although the number of patients covered by Medicaid has fallen, the number covered by Medicare has risen 50%, reflecting the increase in average life span.

There has not been much demographic change among HIV inpatients. About half are black, a quarter white, and slightly less than one-fifth Hispanic. One-third are women. More than 1.1 million Americans are living with HIV, and 50,000 are newly infected each year.

Dr. Hellinger had no conflicts of interest.

FROM MEDICAL CARE

Key clinical point:

Major finding: People with HIV were 64% less likely to be hospitalized in 2013 than they were in 2000.

Data source: Review of HIV-related hospitalizations in five U.S. states.

Disclosures: The Agency for Healthcare Research and Quality funded the work. The investigator had no disclosures.

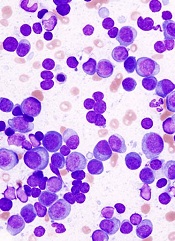

Speaker says use the best regimen ASAP in MM

NEW YORK—It’s important to use the best possible regimen as soon as possible when treating patients with multiple myeloma (MM), according to a speaker at Lymphoma & Myeloma 2016.

Antonio Palumbo, MD, of the University of Torino in Italy, explained that, from a biological point of view, the urgency arises because myeloma accumulates genetic mutations that change the disease in a “dramatic way.”

“At diagnosis, you might have 5000 mutations, and, at first relapse, there are 12,000 mutations,” he said. “They are completely different tumors. So the first thing that we have to recognize is that, with time, myeloma is not the same. It’s becoming different tumors.”

Dr Palumbo noted that early intervention is important because genetic and epigenetic abnormalities increase as the disease progresses and becomes more resistant.

For example, if 100 patients receive first-line treatment, 40 of them will not reach the second line. Only 60 patients will receive second-line therapy, and only 35 will receive third-line treatment. Just 10 patients, or 10%, will receive fifth-line therapy.

“If you have very good genomic stability, you can become sensitive to different treatments,” Dr Palumbo said. “But if you become a different disease, you will not be able to receive the next therapy. That’s why it’s so important . . . to use the best possible regimen as soon as possible.”

Best regimens

The triplet bortezomib-lenalidomide-dexamethasone is the “best possible regimen today,” Dr Palumbo said, “followed by continuous therapy.”

The 2-drug combination of lenalidomide and dexamethasone is probably more suitable for the elderly and the frail.

Alternative regimens include carfilzomib-cyclophosphamide-dexamethasone and ixazomib-lenalidomide-dexamethasone.

Continuous therapy

Continuous therapy has been “one of the major achievements” in the treatment of MM, Dr Palumbo said. Without continuous therapy, patients in complete response remain so for about 1 year when treated with conventional chemotherapy.

Investigators found that maintenance therapy significantly prolongs progression-free survival and overall survival (P=0.02). They recommend intensification with maintenance to optimize treatment efficacy and prolong survival.

Immunomodulatory drugs such as lenalidomide are the backbone of continuous therapy today.

However, ixazomib represents another option. Ixazomib maintenance has been shown to produce durable responses for a median of more than 2 years in previously untreated patients not undergoing autologous stem cell transplant.

Salvage therapy

Seven combinations are now available for salvage therapy, including those with proteasome inhibitors, monoclonal antibodies, pomalidomide, and histone deacetylase inhibitors.

Included in some of these combinations are the newer agents ixazomib, elotuzumab, and daratumumab, “which many might consider the new rituximab,” Dr Palumbo said.

Risk stratification

Dr Palumbo stressed the importance of risk stratification—both in clinical trials and in practice.

“[T]he moment you under-treat a patient, you transform a good-risk patient into a high-risk patient . . . ,” he said.

And if trials comparing treatments are not conducted within risk categories, researchers are comparing apples to oranges: good risk with high risk.

The Revised International Staging System (R-ISS) for MM effectively stratifies newly diagnosed patients by combining the original ISS, chromosomal abnormalities, and serum lactate dehydrogenase levels to evaluate prognosis.

The R-ISS defines 3 new, distinct categories that researchers believe effectively define patients’ relative risk with respect to their survival.

Age and frailty

MM patients generally fall into 3 age categories: 25-64, 65-74, and 75-101.

The youngest group can receive autologous transplant, the fit patients ages 65 to 74 can receive full-dose chemotherapy, and the oldest, frailer patients should receive reduced-dose chemotherapy.

“It’s important to differentiate fit from frail,” Dr Palumbo said, “because we cannot give the same treatment schema to a 55-year-old versus 65 or 85. At 85, that schema would create a lot of toxicities.”

In frail patients, it’s always important to check which comorbidities are present using the Charlson comorbidity scale, Dr Palumbo said.

“Remember, when we put together age, chromosome abnormalities, and frailty, frailty is the most relevant prognostic factor when you add everything together,” he said.

“Two drugs versus 3 drugs doesn’t make much difference when you are starting to introduce treatment to these very frail patients with comorbidities.”

Minimal residual disease

Dr Palumbo indicated that a combination of MRI and PET-CT should be used for a more accurate indication of minimal residual disease.

“[W]e hope to have cure, [but] I don’t think, today, myeloma is a curable disease,” he said.

He clarified this by saying that patients who are in complete response and minimal residual disease-negative at 3 years do well, but, by 7 years, “something is happening.”

And at 10 years, progression-free survival has dropped off significantly, “telling us that cure is probably not yet there.”
