Risk Factors for Pseudomonas, MRSA in Healthcare-Associated Pneumonia

Clinical question: What risk factors could predict the likelihood of Pseudomonas and methicillin-resistant Staphylococcus aureus (MRSA) in patients hospitalized with healthcare-associated pneumonia (HCAP)?

Background: Patients identified with HCAP have an increased risk for multi-drug-resistant pathogens, such as gram-negative (GNR) organisms and MRSA. Meeting criteria for HCAP does not discriminate between the different infections, which require different antibiotic classes for treatment. Risk factors need to be identified to determine the most likely infectious organism to help guide initial empiric antibiotic therapy.

Study design: Retrospective cohort study.

Setting: Veterans Affairs hospitals.

Synopsis: Of 61,651 veterans with an HCAP diagnosis, 1,156 (1.9%) had a discharge diagnosis of Pseudomonas pneumonia. These patients were younger and more likely to be immunocompromised; to have hemiplegia; to have a history of chronic obstructive pulmonary disease; to have had corticosteroid exposure; and to have been exposed to a fluoroquinolone, β-lactam, cephalosporin, or carbapenem antibiotic within 90 days prior to admission. Pseudomonas pneumonia was negatively associated with age >84, drug abuse, diabetes, and higher socioeconomic status. A discharge diagnosis of MRSA pneumonia was found in 641 patients (1.0%) and was positively associated with male gender, age >74, recent nursing home stay, and exposure to fluoroquinolone antibiotics within 90 days prior to admission.

MRSA pneumonia was negatively associated with complicated diabetes. Neither diagnosis was present in 59,854 patients (97.1%).

This study was limited by its predominantly male veteran population, the low incidence of Pseudomonas and MRSA pneumonia, and the analysis of Pseudomonas as the only GNR organism.

Bottom line: Risk factors identified for Pseudomonas and MRSA pneumonia can help guide targeted antibiotics for HCAP patients.

Citation: Metersky ML, Frei CR, Mortenson EM. Predictors of Pseudomonas and methicillin-resistant Staphylococcus aureus in hospitalized patients with healthcare-associated pneumonia. Respirology. 2016;21(1):157-163.

Short Take

Hematuria as Marker of Urologic Cancer

Narrative literature review did not demonstrate beneficial role of screening urinalysis for cancer detection in asymptomatic patients, but it did suggest including gross hematuria as part of routine review of systems.

Citation: Nielsen M, Qaseem A, High Value Care Task Force of the American College of Physicians. Hematuria as a marker of occult urinary tract cancer: advice for high-value care from the American College of Physicians. Ann Intern Med. 2016;164(7):488-497. doi:10.7326/M15-1496.

Dose of transplanted HSCs affects their behavior

The dose of hematopoietic stem cells (HSCs) given in a transplant affects how those cells behave in the body, according to research published in Cell Reports.

To track the behavior of transplanted HSCs, researchers “barcoded” individual mouse HSCs with a genetic marker and observed their contributions to hematopoiesis.

The team found that only 20% to 30% of HSCs differentiated into all types of blood cells.

This relatively small group of HSCs produced a disproportionately large amount of blood.

The remaining 70% to 80% of HSCs were more strategic, and their behavior was dependent on the dose of HSCs transplanted.

At higher HSC doses, the “strategic majority” of HSCs opted to differentiate early, producing a balanced array of T cells and B cells. But at low HSC doses, the strategic HSCs prioritized T-cell production.

“The dose of transplanted bone marrow has strong and lasting effects on how HSCs specialize and coordinate their behavior,” said study author Rong Lu, PhD, of the University of Southern California in Los Angeles.

“This suggests that altering transplantation dose could be a tool for improving outcomes for patients: promoting bone marrow engraftment, reducing the risk of infection, and, ultimately, saving lives.”

FDA approves FVIII single-chain therapy for hemophilia A

The US Food and Drug Administration (FDA) has approved lonoctocog alfa (Afstyla), a recombinant factor VIII (FVIII) single-chain therapy, for use in adults and children with hemophilia A.

The product is approved for routine prophylaxis to reduce the frequency of bleeding episodes, for on-demand treatment and control of bleeding episodes, and for perioperative management of bleeding.

Lonoctocog alfa is the first and only single-chain product for hemophilia A that is specifically designed to provide long-lasting protection from bleeds with 2- to 3-times weekly dosing.

The product uses a covalent bond that forms one structural entity, a single polypeptide chain, to improve the stability of FVIII and provide longer-lasting FVIII activity.

Lonoctocog alfa should be available in the US early this summer, according to CSL Behring, the company developing the drug.

Clinical trials

The approval of lonoctocog alfa is based on results from the AFFINITY clinical development program, which included a trial of children (n=84) and a trial of adolescents and adults (n=175).

Among patients who received lonoctocog alfa prophylactically, the median annualized bleeding rate (ABR) was 1.14 in the adults and adolescents and 3.69 in children younger than 12.

In all, there were 1195 bleeding events—848 in the adults/adolescents and 347 in the children.

Ninety-four percent of bleeds in adults/adolescents and 96% of bleeds in pediatric patients were effectively controlled with no more than 2 infusions of lonoctocog alfa weekly. Eighty-one percent of bleeds in adults/adolescents and 86% of bleeds in pediatric patients were controlled by a single infusion.

Researchers assessed safety in 258 patients from both studies. Adverse reactions occurred in 14 patients and included hypersensitivity (n=4), dizziness (n=2), paresthesia (n=1), rash (n=1), erythema (n=1), pruritus (n=1), pyrexia (n=1), injection-site pain (n=1), chills (n=1), and feeling hot (n=1).

One patient withdrew from treatment due to hypersensitivity.

None of the patients developed neutralizing antibodies to FVIII or antibodies to host cell proteins. There were no reports of anaphylaxis or thrombosis.

Team describes mechanism of aggressive lymphomas

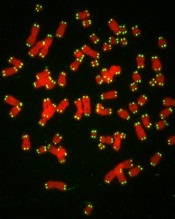

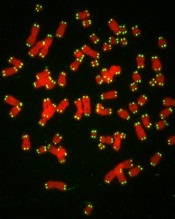

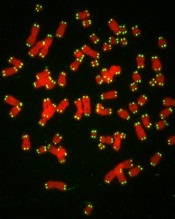

telomeres in green

Image by Claus Azzalin

Researchers say they have identified a mechanism by which defective telomere replication exacerbates tumor growth.

The team found that simultaneous inactivation of the telomere-binding factor POT1 and the tumor suppressor p53 accelerates the onset and increases the severity of T-cell lymphomas.

The research, published in Cell Reports, also suggests a possible way to fight these lymphomas—by targeting the ATR pathway.

The researchers knew that POT1 normally forms a protective cap around telomeres, stopping cell machinery from mistakenly damaging the DNA there and causing harmful mutations.

In fact, POT1 is so critical that cells without functional POT1 would rather die than pass on POT1 mutations. Stress in these cells leads to the activation of ATR, which triggers apoptosis.

However, recent research revealed recurrent mutations affecting POT1 in several cancers, including leukemia and melanoma.

“Somehow, those cells found a way to survive—and thrive,” said Eros Lazzerini Denchi, PhD, of The Scripps Research Institute in La Jolla, California.

“We thought that if we could understand how that happens, maybe we could find a way to kill those cells.”

Using a mouse model, the researchers found that mutations in POT1 lead to cancer when combined with a mutation in p53.

“The cells no longer have the mechanism for dying, and mice develop really aggressive thymic lymphomas,” Dr Lazzerini Denchi said.

When mutated, p53 overrides the protective cell death response initiated by ATR. Then, without POT1 creating a protective cap, the chromosomes are fused together and the DNA is rearranged, driving the accumulation of even more mutations. The mutant cells go on to proliferate and become aggressive tumors.

These findings led the researchers to consider a new strategy for killing these tumors.

They noted that all cells will die if they have no ATR. Since tumors with mutant POT1 already have low ATR levels, the researchers think a drug that knocks out the remaining ATR could kill tumors without affecting healthy cells.

The team plans to investigate this approach in future studies.

“This study shows that by looking at basic biological questions, we can potentially find new ways to treat cancer,” Dr Lazzerini Denchi concluded.

mAb could treat hemophilia A regardless of inhibitors

Results of a phase 1 study suggest the bispecific monoclonal antibody (mAb) emicizumab (ACE910) may be safe and effective for patients with severe hemophilia A, whether or not they have factor VIII (FVIII) inhibitors.

A weekly injection of emicizumab decreased annualized bleeding rates (ABRs), and 13 of the 18 patients studied did not experience any bleeding while on treatment.

In addition, researchers said the mAb had an acceptable safety profile.

These results were published in NEJM. The study was funded by Chugai Pharmaceutical Co., Ltd., which is co-developing emicizumab with Genentech.

Emicizumab is engineered to simultaneously bind factors IXa and X. The mAb mimics the cofactor function of FVIII and is designed to promote blood coagulation in hemophilia A patients regardless of whether they have developed inhibitors to FVIII. As it is distinct in structure from FVIII, emicizumab is not expected to lead to the formation of FVIII inhibitors.

This phase 1 study of emicizumab enrolled both healthy subjects and hemophilia A patients. Results in the healthy subjects were previously published in Blood.

All 18 patients had severe hemophilia A, and 11 had FVIII inhibitors. They were 12 to 58 years of age, and all were Japanese.

The patients received once-weekly subcutaneous injections of emicizumab for 12 successive weeks at one of the following dose levels: 0.3 mg/kg (cohort 1), 1 mg/kg (cohort 2), or 3 mg/kg (cohort 3).

There were 6 patients in each cohort. Cohorts 1 and 2 each had 4 patients with inhibitors, and there were 3 patients with inhibitors in cohort 3.

Efficacy

Whether or not they had inhibitors, patients experienced a decrease in ABR with emicizumab. Median ABRs decreased from 32.5 to 4.4 in cohort 1, 18.3 to 0.0 in cohort 2, and 15.2 to 0.0 in cohort 3.

The annualized bleeding rate decreased from baseline in 17 of the patients. The remaining patient, who was in cohort 3 and did not have FVIII inhibitors, had an annualized bleeding rate of 0.0 both at baseline and while receiving emicizumab.

Thirteen patients did not have any bleeding episodes during treatment—8 of 11 patients with inhibitors and 5 of 7 patients without them.

There were 21 bleeding episodes, all of which were successfully treated with FVIII or a bypassing agent. Eighteen of these episodes resolved with 1 or 2 doses.

Fourteen of the bleeding episodes occurred in 1 patient. They were attributed to very high levels of physical activity and hemophilic arthropathy.

Safety

There were 43 adverse events in 15 patients. The researchers said all events were of mild or moderate intensity.

Adverse events that were considered related to emicizumab included injection-site erythema (n=1), increase in blood creatine kinase (n=1), diarrhea (n=1), injection-site pruritus (n=1), injection-site rash (n=1), and malaise (n=1).

One patient discontinued emicizumab due to injection-site erythema, as the patient experienced this event twice.

There was no evidence of clinically relevant coagulation abnormalities. And there were no thromboembolic events, even when emicizumab was given concomitantly with FVIII products or bypassing agents as episodic treatment for breakthrough bleeds.

None of the patients developed anti-emicizumab antibodies during the 12 weeks of dosing.

One patient had a positive test for anti-emicizumab antibodies at baseline and had a transient increase in the C-reactive protein level on day 3, but this did not affect the pharmacokinetics or pharmacodynamics of emicizumab.

Results of a phase 1 study suggest the bispecific monoclonal antibody (mAb) emicizumab (ACE910) may be safe and effective for patients with severe hemophilia A, whether or not they have factor VIII (FVIII) inhibitors.

A weekly injection of emicizumab decreased annualized bleeding rates (ABRs), and 13 of the 18 patients studied did not experience any bleeding while on treatment.

In addition, researchers said the mAb had an acceptable safety profile.

These results were published in NEJM. The study was funded by Chugai Pharmaceutical Co., Ltd., which is co-developing emicizumab with Genentech.

Emicizumab is engineered to simultaneously bind factors IXa and X. The mAb mimics the cofactor function of FVIII and is designed to promote blood coagulation in hemophilia A patients regardless of whether they have developed inhibitors to FVIII. As it is distinct in structure from FVIII, emicizumab is not expected to lead to the formation of FVIII inhibitors.

This phase 1 study of emicizumab enrolled both healthy subjects and hemophilia A patients. Results in the healthy subjects were previously published in Blood.

All 18 patients had severe hemophilia A, and 11 had FVIII inhibitors. They were 12 to 58 years of age, and all were Japanese.

The patients received once-weekly subcutaneous injections of emicizumab for 12 successive weeks at one of the following dose levels: 0.3 mg/kg (cohort 1), 1 mg/kg (cohort 2), or 3 mg/kg (cohort 3).

There were 6 patients in each cohort. Cohorts 1 and 2 each had 4 patients with inhibitors, and there were 3 patients with inhibitors in cohort 3.

Efficacy

Whether or not they had inhibitors, patients experienced a decrease in ABR with emicizumab. Median ABRs decreased from 32.5 to 4.4 in cohort 1, 18.3 to 0.0 in cohort 2, and 15.2 to 0.0 in cohort 3.

The annualized bleeding rate decreased from baseline in 17 of the patients. The remaining patient, who was in cohort 3 and did not have FVIII inhibitors, had an annualized bleeding rate of 0.0 both at baseline and while receiving emicizumab.

Thirteen patients did not have any bleeding episodes during treatment—8 of 11 patients with inhibitors and 5 of 7 patients without them.

There were 21 bleeding episodes, all of which were successfully treated with FVIII or a bypassing agent. Eighteen these episodes resolved with 1 or 2 doses.

Fourteen of the bleeding episodes occurred in 1 patient. They were attributed to very high levels of physical activity and hemophilic arthropathy.

Safety

There were 43 adverse events in 15 patients. The researchers said all events were of mild or moderate intensity.

Adverse events that were considered related to emicizumab included injection-site erythema (n=1), increase in blood creatine kinase (n=1), diarrhea (n=1), injection-site pruritus (n=1), injection-site rash (n=1), and malaise (n=1).

One patient discontinued emicizumab due to injection-site erythema, as the patient experienced this event twice.

There was no evidence of clinically relevant coagulation abnormalities. And there were no thromboembolic events, even when emicizumab was given concomitantly with FVIII products or bypassing agents as episodic treatment for breakthrough bleeds.

None of the patients developed anti-emicizumab antibodies during the 12 weeks of dosing.

One patient had a positive test for anti-emicizumab antibodies at baseline and had a transient increase in the C-reactive protein level on day 3, but this did not affect the pharmacokinetics or pharmacodynamics of emicizumab.

Results of a phase 1 study suggest the bispecific monoclonal antibody (mAb) emicizumab (ACE910) may be safe and effective for patients with severe hemophilia A, whether or not they have factor VIII (FVIII) inhibitors.

A weekly injection of emicizumab decreased annualized bleeding rates (ABRs), and 13 of the 18 patients studied did not experience any bleeding while on treatment.

In addition, researchers said the mAb had an acceptable safety profile.

These results were published in NEJM. The study was funded by Chugai Pharmaceutical Co., Ltd., which is co-developing emicizumab with Genentech.

Woman, 35, With Jaundice and Altered Mental Status

IN THIS ARTICLE

- Results of case patient's initial laboratory work-up

- Top 10 prescription medications associated with idiosyncratic disease

- Outcome for the case patient

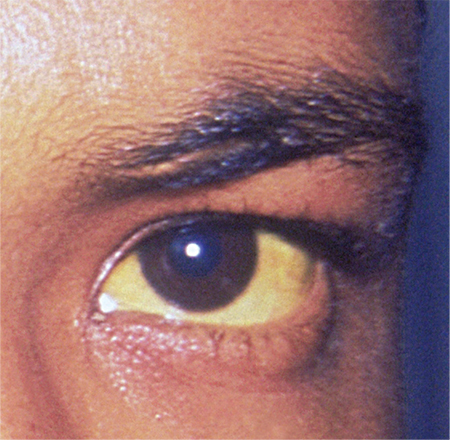

A 35-year-old African-American woman presented to the emergency department (ED) after being found disoriented and lethargic in her apartment by her friends. Given her altered mental status, the history of present illness was limited and informed mainly by her mother and friends. She had been unreachable by telephone for three days, and friends grew concerned when she was absent from work on two consecutive days. After obtaining access to her apartment, they found her in the bathroom jaundiced, incoherent, and surrounded by nonbloody, nonbilious vomit. She had no prior significant medical history, no documented daily medication, and no recent travel. Of note, previous medical contact was limited, and she did not have an established primary care provider. Additionally, there was no contributory family history, including autoimmune illness or liver disease.

ED presentation was marked by indications of grade 4 encephalopathy, including unresponsiveness to noxious stimuli. Initial laboratory work-up was notable for significantly elevated liver function test results (see Table 1). Based on her international normalized ratio (INR), total bilirubin, and creatinine, her initial Model for End-Stage Liver Disease score was 39, correlating to an 83% three-month mortality rate.1 Autoimmune marker testing revealed a positive antinuclear antibody (ANA), elevated immunoglobulin G (IgG), elevated smooth muscle antibody (IgG), normal antimitochondrial antibody, and normal anti-liver/kidney microsome antibody (IgG). Viral hepatitis serologies, including A, B, C, and E, were unremarkable. Ceruloplasmin and iron saturation were within normal limits. Acetaminophen, salicylate, and ethanol levels were negligible. Pregnancy testing and urine toxin testing were negative. Thyroid function tests were normal. Infectious work-up, including pan-culture, remained negative. Syphilis, herpes simplex virus (HSV), HIV, and varicella zoster testing were unremarkable.

CT of the head was not consistent with cerebral edema. CT of the abdomen and pelvis showed evidence of chronic pancreatitis and trace perihepatic ascites. She was intubated for airway protection and transferred to the medical ICU.

On liver biopsy, the patient was found to have acute hepatitis with centrilobular necrosis, approximately 30% to 40%, and prominent cholestasis. Histologically, these findings were reported as most consistent with drug-induced liver injury. Given her comatose state, coagulopathy, and extremely limited life expectancy without liver transplantation, the patient was listed for transplant as a status 1A candidate with fulminant hepatic failure.

She was placed on propofol and N-acetylcysteine infusions in addition to supportive IV resuscitation. The patient’s synthetic and neurocognitive function improved gradually over several weeks, and she was able to provide collateral history. She denied taking any prescription medications or having any ongoing medical issues. She did report that for two months prior to admission she had been taking an oral beauty supplement designed to enhance hair, skin, and nails. She obtained the supplement online. She could not recall the week leading up to admission, but she did note increasing malaise and fatigue beginning two weeks prior to admission. She denied any recreational drug or alcohol use.

DISCUSSION

Drug-induced liver injury (DILI) is a relatively uncommon occurrence in the United States.2 It is estimated to occur in approximately 20 individuals per 100,000 persons per year.2 However, DILI incidence secondary to herbal and dietary supplement use appears to be on the rise in the US. In a prospective study conducted by the Drug-Induced Liver Injury Network (DILIN) that included patients with liver injury referred to eight DILIN centers between 2004 and 2013, the proportion of DILI cases caused by herbal and dietary supplements increased from 7% to 20% over the study period.3

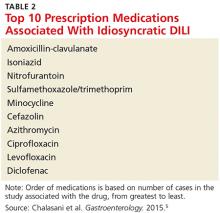

DILI can be subclassified into intrinsic and idiosyncratic. Intrinsic DILI results from substances causing a predictable time course and natural history. Substances causing a varied, unpredictable occurrence of DILI in susceptible individuals are idiosyncratic.4 Overall, acetaminophen overdose is the most common cause of DILI.2 However, the most common idiosyncratic offending agents, taken at FDA-approved dosages, are antimicrobials (see Table 2).5 The second most common offending agents are herbal and dietary supplements.5

In a retrospective cohort study evaluating all cases of acute liver failure (ALF) over a six-year period in an integrated health care system, the leading cause of ALF was DILI.6 Of the 32 patients with confirmed drug-induced ALF in this study, the majority of cases (18) were associated with acetaminophen. Herbal and dietary supplements were implicated in six cases, with miscellaneous medications accounting for the remaining eight cases.6 In terms of outcomes, 18.8% of patients with ALF due to DILI underwent liver transplantation, 68.8% were discharged, and 12.5% died during hospitalization.6

DILI disproportionately affects women and minorities7; although the etiology is unclear, it is hypothesized that increased use of antibiotics may play a role among women.2 Providers should be aware of the increased risk for DILI in these populations and consider this diagnosis in the appropriate setting.

Teasing out the diagnosis

DILI is a diagnosis of exclusion, aided in large part by the history and physical exam.4 An extensive history may alert the health care provider to a potential offending substance as well as provide information on timing of exposure.4 DILI should be suspected in patients with persistently elevated liver enzymes, unremarkable work-up for all other underlying liver disease (including autoimmune and viral serologies), and negative abdominal imaging.4 In particular, acute hepatitis C virus (HCV) and hepatitis E virus (HEV) infection mimic the clinical presentation of DILI and should be excluded with HCV RNA and IgM anti-HEV testing, with reflex HEV RNA testing to confirm positive IgM anti-HEV results.8,9 Liver biopsy is rarely indicated for the diagnosis of DILI.2

The presentation of DILI ranges from asymptomatic, with mildly abnormal results on liver function testing, to fulminant hepatic failure. Acetaminophen is the most frequently reported cause of intrinsic DILI in the US, playing a role in approximately half of all ALF cases.10 DILI can be further subdivided according to the pattern of liver test abnormalities as hepatocellular, mixed, or cholestatic based on the ratio of ALT to alkaline phosphatase (R value).2 Utilizing the formula serum ALT/upper limit of normal (ULN) divided by the serum alkaline phosphatase/ULN to determine R value, liver test abnormalities are defined as hepatocellular (R > 5), mixed (R = 2-5), and cholestatic (R < 2).4 These liver test patterns can be used to predict prognosis (see “Prognosis: Hy’s law”). In a prospective, longitudinal study, DILIN found that chronic DILI was present in 18% of the study population at 6 months following onset.5 Patients with the cholestatic presentation were more likely to develop chronic DILI than were those with the hepatocellular or mixed pattern. Furthermore, the hepatocellular pattern on presentation was associated with greater mortality.5 Patients with the mixed pattern had the most favorable outcomes. Another prospective cohort study found that persistently elevated liver enzymes in DILI patients at 12 months is associated with older age and the cholestatic pattern of liver test abnormalities at presentation, in particular, alkaline phosphatase elevation.11 However, neither length of therapy nor type of offending medication was associated with long-term liver test abnormalities.11
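The R-value arithmetic described above can be illustrated with a short Python sketch. The function names are hypothetical, the lab values and upper limits of normal in the usage lines are made up for illustration, and the handling of results that fall exactly on 2 or 5 (treated here as mixed) is an assumption. This is not a clinical tool.

```python
def r_value(alt: float, alt_uln: float, alp: float, alp_uln: float) -> float:
    """R = (serum ALT / ALT ULN) / (serum alkaline phosphatase / ALP ULN)."""
    if alt_uln <= 0 or alp <= 0 or alp_uln <= 0:
        raise ValueError("ULN values and alkaline phosphatase must be positive")
    return (alt / alt_uln) / (alp / alp_uln)


def classify_pattern(r: float) -> str:
    """Map an R value to the liver test pattern given in the text."""
    if r > 5:
        return "hepatocellular"
    if r < 2:
        return "cholestatic"
    # Assumption: values from 2 through 5 inclusive are reported as mixed.
    return "mixed"


# Illustrative values only (not taken from the case patient).
r = r_value(alt=800, alt_uln=40, alp=120, alp_uln=120)
print(round(r, 1), classify_pattern(r))
```

Because each enzyme is first divided by its own upper limit of normal, the ratio is comparable across laboratories that use different reference ranges.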

Managing DILI and ALF

In all DILI cases, immediate discontinuation of the offending agent is the initial treatment recommendation.2 Patients presenting with DILI who have an accompanying bilirubin level > 2 mg/dL should be referred to a hepatology specialist due to an increased risk for ALF.2 ALF is defined as coagulopathy to INR ≥ 1.5 and hepatic encephalopathy within 26 weeks of initial symptom onset in individuals without known underlying liver disease, with the exception of autoimmune hepatitis, Wilson disease, and reactivation of hepatitis B.12-15 Fulminant hepatic failure is further specified as encephalopathy occurring within 8 weeks of jaundice onset.12
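The thresholds in this paragraph can be restated as a small Python sketch. The class, field, and function names are hypothetical, and reading "within 26 weeks" and "within 8 weeks" as inclusive limits is an assumption. It only encodes the definitions as worded here and is not a substitute for clinical judgment.

```python
from dataclasses import dataclass


@dataclass
class Presentation:
    inr: float
    total_bilirubin_mg_dl: float
    has_encephalopathy: bool
    weeks_since_symptom_onset: float
    # Set to False for autoimmune hepatitis, Wilson disease, or hepatitis B
    # reactivation, which the definition in the text treats as exceptions.
    known_underlying_liver_disease: bool


def needs_hepatology_referral(p: Presentation) -> bool:
    """Bilirubin > 2 mg/dL in a patient presenting with DILI."""
    return p.total_bilirubin_mg_dl > 2


def meets_alf_definition(p: Presentation) -> bool:
    """INR >= 1.5 plus encephalopathy within 26 weeks, no known liver disease."""
    return (
        p.inr >= 1.5
        and p.has_encephalopathy
        and p.weeks_since_symptom_onset <= 26
        and not p.known_underlying_liver_disease
    )


def is_fulminant(weeks_from_jaundice_to_encephalopathy: float) -> bool:
    """Encephalopathy occurring within 8 weeks of jaundice onset."""
    return weeks_from_jaundice_to_encephalopathy <= 8


# Illustrative values only.
example = Presentation(2.4, 12.0, True, 2, False)
print(needs_hepatology_referral(example), meets_alf_definition(example))
```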

Patients presenting with ALF should be transferred to an intensive care setting, preferably within a liver transplant center, for supportive care and potential liver transplant evaluation.12 CT of the head should be used to rule out other etiologies for altered mental status.16 N-Acetylcysteine is the treatment of choice for acetaminophen-induced ALF, and it has also been shown to improve transplant-free survival outcomes in patients with non-acetaminophen–related early ALF.17 Infectious work-up and continuous monitoring are essential in ALF care, since up to 80% of patients with ALF will develop a bacterial infection.18 A comprehensive infectious work-up should include pan-culture of blood, urine, and sputum in addition to assessment for Epstein-Barr virus, cytomegalovirus, and HSV.4,18 For irreversible ALF, liver transplantation remains the only validated treatment option.12,19

Prognosis: Hy’s law

Hy’s law refers to a method used in clinical trials to assess a drug’s likelihood of causing severe hepatotoxicity; it is also used to predict which patients with DILI will develop ALF.12,20 According to Hy’s law, patients with AST or ALT elevations three times ULN and total bilirubin elevations two times ULN are at increased risk for ALF. In a retrospective cohort study of more than 15,000 patients with DILI, the Hy’s law criteria were found to have high specificity but low sensitivity for detecting individuals at risk for ALF.15 An alternative model, the Drug-Induced Liver Toxicity ALF Score, uses platelet count and bilirubin level to identify patients at risk for ALF with high sensitivity.15
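The Hy's law thresholds as stated in this section can be written out as a brief Python sketch. The function name and the example values are hypothetical, and treating a result exactly at the threshold as meeting the criterion is an assumption, since the text does not specify the boundary. The Drug-Induced Liver Toxicity ALF Score is not sketched because its formula is not given here.

```python
def meets_hys_law(
    alt: float, alt_uln: float,
    ast: float, ast_uln: float,
    total_bilirubin: float, bilirubin_uln: float,
) -> bool:
    """AST or ALT at 3x ULN or more, together with total bilirubin at 2x ULN or more."""
    aminotransferase_elevated = alt >= 3 * alt_uln or ast >= 3 * ast_uln
    bilirubin_elevated = total_bilirubin >= 2 * bilirubin_uln
    return aminotransferase_elevated and bilirubin_elevated


# Illustrative values only: ALT 200 U/L (ULN 40), bilirubin 3.0 mg/dL (ULN 1.2).
print(meets_hys_law(200, 40, 50, 40, 3.0, 1.2))
```

Both conditions must hold together; an isolated aminotransferase or bilirubin elevation does not satisfy the rule as described.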

Patient education

Effective patient education is essential to decreasing DILI incidence at a time when herbal and dietary supplement consumption is increasing. Patients will often bring herbal and dietary supplements to their providers to obtain a safety profile prior to initiation. In these cases, it is essential to reinforce with patients the absence of federal regulation of these products. It should be stressed to patients that, due to the lack of government oversight, it is impossible to confidently identify the entirety and quantity of ingredients in these supplements. Furthermore, there is no existing protocol for surveillance or adverse event reporting for these products.21 Because these products are not routinely or systematically studied, even health care providers have no evidence on which to base monitoring or usage recommendations. Providers may direct patients to the National Institutes of Health’s LiverTox website (livertox.NIH.gov) to review prior case reports of hepatotoxicity for specific dietary and herbal supplements.

Level of education is associated with knowledge of the potential for overdose when taking OTC medications that contain acetaminophen.22 As a result, health care providers should strongly reinforce with patients the importance of reading all medication labels and abiding by the listed administration directions. In particular, providers should emphasize that the maximum daily dosage of acetaminophen is 4 g.23 For patients with chronic liver disease, a more conservative recommendation is warranted. Generally, patients with cirrhosis may be advised to consume up to 2 g/d of acetaminophen as a first-line treatment for pain. However, providers should ensure acetaminophen ingestion is limited to a brief period.24

Additionally, it is important to educate patients that many combination OTC medications contain acetaminophen. Of note, chronic opioid users are more likely to accurately identify OTC medications containing acetaminophen, compared with acute opioid users.22 These findings should compel health care providers to deliver in-depth education for all patients, particularly those with less education or experience with medications. Education on avoidance of offending medications, including medications within the same class, when appropriate, is essential for quality patient care.2

OUTCOME FOR THE CASE PATIENT

Following discharge, the patient was monitored closely with regular clinic visits and blood work. Her liver test results improved gradually, and a repeat biopsy was considered to evaluate for overlap or missed autoimmune disease. Her repeat ANA was negative and IgG was within normal limits. Within three months of admission, her liver tests normalized and repeat biopsy was deferred.

Upon review of the herbal beauty supplement the patient reported taking, shark cartilage was noted as a primary ingredient. In a case report, shark cartilage was identified as a hepatotoxin.25 The patient was advised never to ingest the offending supplement, or any other substances not regulated by the FDA, again. Furthermore, the offending medication was listed as a medication allergy in her electronic health record.

CONCLUSION

It is crucial to emphasize to patients the potential hepatotoxicity of medications and herbal and dietary supplements, especially OTC medications that pose an overdose risk. Patients should review all new supplements with their providers prior to therapy initiation. With known hepatotoxins, providers should closely monitor patients for liver injury while treatment is ongoing. In suspected cases of DILI, a thorough history and physical exam will greatly inform the diagnosis. In the majority of cases, the suspect medication should be discontinued immediately, with subsequent assessment of liver response. Identification of DILI early in the course increases the likelihood of full hepatic recovery and improves patient outcomes.

References

1. Kamath PS, Wiesner RH, Malinchoc M, et al. A model to predict survival in patients with end-stage liver disease. Hepatology. 2001;33(2):464-470.

2. Leise MD, Poterucha JJ, Talwalkar JA. Drug-induced liver injury. Mayo Clin Proc. 2014;89(1):95-106.

3. Navarro VJ, Barnhart H, Bonkovsky HL, et al. Liver injury from herbals and dietary supplements in the US Drug-Induced Liver Injury Network. Hepatology. 2014;60(4):1399-1408.

4. Chalasani NP, Hayashi PH, Bonkovsky HL, et al. ACG Clinical Guideline: the diagnosis and management of idiosyncratic drug-induced liver injury. Am J Gastroenterol. 2014;109(7):950-966.

5. Chalasani N, Bonkovsky HL, Fontana R, et al; United States Drug Induced Liver Injury Network. Features and outcomes of 899 patients with drug-induced liver injury: the DILIN prospective study. Gastroenterology. 2015;148(7):1340-1352.

6. Goldberg DS, Forde KA, Carbonari DM, et al. Population-representative incidence of drug-induced acute liver failure based on an analysis of an integrated health care system. Gastroenterology. 2015;148(7):1353-1361.

7. Reuben A, Koch DG, Lee WM. Drug-induced acute liver failure: results of a US multicenter, prospective study. Hepatology. 2010;52(6):2065-2076.

8. Davern TJ, Chalasani N, Fontana RJ, et al; Drug-Induced Liver Injury Network (DILIN). Acute hepatitis E infection accounts for some cases of suspected drug-induced liver injury. Gastroenterology. 2011;141(5):1665-1672.e1-9.

9. Chalasani N, Fontana RJ, Bonkovsky HL, et al. Causes, clinical features, and outcomes from a prospective study of drug-induced liver injury in the United States. Gastroenterology. 2008;135(6):1924-1934.

10. Fisher K, Vuppalanchi R, Saxena R. Drug-induced liver injury. Arch Pathol Lab Med. 2015;139(7):876-887.

11. Fontana RJ, Hayashi PH, Barnhart H, et al. Persistent liver biochemistry abnormalities are more common in older patients and those with cholestatic drug induced liver injury. Am J Gastroenterol. 2015;110(10):1450-1459.

12. Punzalan CS, Barry CT. Acute liver failure: diagnosis and management. J Intensive Care Med. 2015 Oct 6. [Epub ahead of print]

13. Bower WA, Johns M, Margolis HS, et al. Population-based surveillance for acute liver failure. Am J Gastroenterol. 2007;102(11):2459-2463.

14. O’Grady JG, Schalm SW, Williams R. Acute liver failure: redefining the syndromes. Lancet. 1993;342(8866):273-275.

15. Lo Re V III, Haynes K, Forde KA, et al. Risk of acute liver failure in patients with drug-induced liver injury: evaluation of Hy’s law and a new prognostic model. Clin Gastroenterol Hepatol. 2015;13(13):2360-2368.

16. Polson J, Lee WM; American Association for the Study of Liver Diseases. AASLD position paper: the management of acute liver failure. Hepatology. 2005;41:1179-1197.

17. Lee WM, Hynan LS, Rossaro L, et al. Intravenous N-acetylcysteine improves transplant-free survival in early stage non-acetaminophen acute liver failure. Gastroenterology. 2009;137(3):856-864.

18. Rolando N, Harvey F, Brahm J. Prospective study of bacterial infection in acute liver failure: an analysis of fifty patients. Hepatology. 1990;11(1):49-53.

19. Panackel C, Thomas R, Sebastian B, Mathai SK. Recent advances in management of acute liver failure. Indian J Crit Care Med. 2015;19(1):27-33.

20. Temple R. Hy’s law: predicting serious hepatotoxicity. Pharmacoepidemiol Drug Saf. 2006;15(4):241-243.

21. Bunchorntavakul C, Reddy K. Review article: herbal and dietary supplement hepatotoxicity. Aliment Pharmacol Ther. 2012;37(1):3-17.

22. Boudreau DM, Wirtz H, Von Korff M, et al. A survey of adult awareness and use of medicine containing acetaminophen. Pharmacoepidemiol Drug Saf. 2013;22(3):229-240.

23. Burns MJ, Friedman SL, Larson AM. Acetaminophen (paracetamol) poisoning in adults: pathophysiology, presentation, and diagnosis. UpToDate. www.uptodate.com/contents/acetaminophen-paracetamol-poisoning-in-adults-pathophysiology-presentation-and-diagnosis. Accessed May 20, 2016.

24. Lewis JH, Stine JG. Review article: prescribing medications in patients with cirrhosis—a practical guide. Aliment Pharmacol Ther. 2013;37(12):1132-1156.

25. Ashar B, Vargo E. Shark cartilage-induced hepatitis. Ann Intern Med. 1996;125(9):780-781.

IN THIS ARTICLE

- Results of case patient's initial laboratory work-up

- Top 10 prescription medications associated with idiosyncratic disease

- Outcome for the case patient

A 35-year-old African-American woman presented to the emergency department (ED) after being found disoriented and lethargic in her apartment by her friends. Given her altered mental status, the history of present illness was limited and informed mainly by her mother and friends. She had been unreachable by telephone for three days, and friends grew concerned when she was absent from work on two consecutive days. After obtaining access to her apartment, they found her in the bathroom jaundiced, incoherent, and surrounded by nonbloody, nonbilious vomit. She had no prior significant medical history, no documented daily medication, and no recent travel. Of note, previous medical contact was limited, and she did not have an established primary care provider. Additionally, there was no contributory family history, including autoimmune illness or liver disease.

ED presentation was marked by indications of grade 4 encephalopathy, including unresponsiveness to noxious stimuli. Initial laboratory work-up was notable for significantly elevated liver function test results (see Table 1). Based on her international normalized ratio (INR), total bilirubin, and creatinine, her initial Model for End-Stage Liver Disease score was 39, correlating to an 83% three-month mortality rate.1 Autoimmune marker testing revealed a positive antinuclear antibody (ANA), elevated immunoglobulin G (IgG), elevated smooth muscle antibody (IgG), normal antimitochondrial antibody, and normal anti-liver/kidney microsome antibody (IgG). Viral hepatitis serologies, including A, B, C, and E, were unremarkable. Ceruloplasmin and iron saturation were within normal limits. Acetaminophen, salicylate, and ethanol levels were negligible. Pregnancy testing and urine toxin testing were negative. Thyroid function tests were normal. Infectious work-up, including pan-culture, remained negative. Syphilis, herpes simplex virus (HSV), HIV, and varicella zoster testing were unremarkable.

CT of the head was not consistent with cerebral edema. CT of the abdomen and pelvis showed evidence of chronic pancreatitis and trace perihepatic ascites. She was intubated for airway protection and transferred to the medical ICU.

On liver biopsy, the patient was found to have acute hepatitis with centrilobular necrosis, approximately 30% to 40%, and prominent cholestasis. Histologically, these findings were reported as most consistent with drug-induced liver injury. Given her comatose state, coagulopathy, and extremely limited life expectancy without liver transplantation, the patient was listed for transplant as a status 1A candidate with fulminant hepatic failure.

She was placed on propofol and N-acetylcysteine infusions in addition to supportive IV resuscitation. The patient’s synthetic and neurocognitive function improved gradually over several weeks, and she was able to provide collateral history. She denied taking any prescription medications or having any ongoing medical issues. She did report that for two months prior to admission she had been taking an oral beauty supplement designed to enhance hair, skin, and nails. She obtained the supplement online. She could not recall the week leading up to admission, but she did note increasing malaise and fatigue beginning two weeks prior to admission. She denied any recreational drug or alcohol use.

Continue for discussion >>

DISCUSSION

Drug-induced liver injury (DILI) is a relatively uncommon occurrence in the United States.2 It is estimated to occur in approximately 20 individuals per 100,000 persons per year.2 However, DILI incidence secondary to herbal and dietary supplement use appears to be on the rise in the US. In a prospective study conducted by the Drug-Induced Liver Injury Network (DILIN) that included patients with liver injury referred to eight DILIN centers between 2004 and 2013, the proportion of DILI cases caused by herbal and dietary supplements increased from 7% to 20% over the study period.3

DILI can be subclassified into intrinsic and idiosyncratic. Intrinsic DILI results from substances causing a predictable time course and natural history. Substances causing a varied, unpredictable occurrence of DILI in susceptible individuals are idiosyncratic.4 Overall, acetaminophen overdose is the most common cause of DILI.2 However, the most common idiosyncratic offending agents, taken at FDA-approved dosages, are antimicrobials (see Table 2).5 The second most common offending agents are herbal and dietary supplements.5

In a retrospective cohort study evaluating all cases of acute liver failure (ALF) over a six-year period in an integrated health care system, the leading cause of ALF was DILI.6 Of the 32 patients with confirmed drug-induced ALF in this study, the majority of cases (18) were associated with acetaminophen. Herbal and dietary supplements were implicated in six cases, with miscellaneous medications accounting for the remaining eight cases.6 In terms of outcomes, 18.8% of patients with ALF due to DILI underwent liver transplantation, 68.8% were discharged, and 12.5% died during hospitalization.6

DILI disproportionately affects women and minorities7;although the etiology is unclear, it is hypothesized that increased use of antibiotics may play a role among women.2 Providers should be aware of the increased risk for DILI in these populations and consider this diagnosis in the appropriate setting.

Teasing out the diagnosis

DILI is a diagnosis of exclusion, aided in large part by the history and physical exam.4 An extensive history may alert the health care provider to a potential offending substance as well as provide information on timing of exposure.4 DILI should be suspected in patients with persistently elevated liver enzymes, unremarkable work-up for all other underlying liver disease (including autoimmune and viral serologies), and negative abdominal imaging.4 In particular, acute hepatitis C virus (HCV) and hepatitis E virus (HEV) infection mimic the clinical presentation of DILI and should be excluded with HCV RNA and IgM anti-HEV testing, with reflex HEV RNA testing to confirm positive IgM anti-HEV results.8,9 Liver biopsy is rarely indicated for the diagnosis of DILI.2

The presentation of DILI ranges from asymptomatic, with mildly abnormal results on liver function testing, to fulminant hepatic failure. Acetaminophen is the most frequently reported cause of intrinsic DILI in the US, playing a role in approximately half of all ALF cases.10 DILI can be further subdivided according to the pattern of liver test abnormalities as hepatocellular, mixed, or cholestatic based on the ratio of ALT to alkaline phosphatase (R value).2 Utilizing the formula serum ALT/upper limit of normal (ULN) divided by the serum alkaline phosphatase/ULN to determine R value, liver test abnormalities are defined as hepatocellular (R > 5), mixed (R = 2-5), and cholestatic (R < 2).4 These liver test patterns can be used to predict prognosis (see “Prognosis: Hy’s law”). In a prospective, longitudinal study, DILIN found that chronic DILI was present in 18% of the study population at 6 months following onset.5 Patients with the cholestatic presentation were more likely to develop chronic DILI than were those with the hepatocellular or mixed pattern. Furthermore, the hepatocellular pattern on presentation was associated with greater mortality.5 Patients with the mixed pattern had the most favorable outcomes. Another prospective cohort study found that persistently elevated liver enzymes in DILI patients at 12 months is associated with older age and the cholestatic pattern of liver test abnormalities at presentation, in particular, alkaline phosphatase elevation.11 However, neither length of therapy nor type of offending medication was associated with long-term liver test abnormalities.11

Managing DILI and ALF

In all DILI cases, immediate discontinuation of the offending agent is the initial treatment recommendation.2 Patients presenting with DILI who have an accompanying bilirubin level > 2 mg/dL should be referred to a hepatology specialist due to an increased risk for ALF.2 ALF is defined as coagulopathy to INR ≥ 1.5 and hepatic encephalopathy within 26 weeks of initial symptom onset in individuals without known underlying liver disease, with the exception of autoimmune hepatitis, Wilson disease, and reactivation of hepatitis B.12-15 Fulminant hepatic failure is further specified as encephalopathy occurring within 8 weeks of jaundice onset.12

Patients presenting with ALF should be transferred to an intensive care setting, preferably within a liver transplant center, for supportive care and potential liver transplant evaluation.12 CT of the head should be used to rule out other etiologies for altered mental status.16N-Acetylcysteine is the treatment of choice for acetaminophen-induced ALF, and it has also been shown to improve transplant-free survival outcomes in patients with non-acetaminophen–related early ALF.17 Infectious work-up and continuous monitoring are essential in ALF care, since up to 80% of patients with ALF will develop a bacterial infection.18 A comprehensive infectious work-up should include pan-culture of blood, urine, and sputum in addition to assessment for Epstein-Barr virus, cytomegalovirus, and HSV.4,18 For irreversible ALF, liver transplantation remains the only validated treatment option.12,19

Prognosis: Hy’s law

Hy’s law refers to a method used in clinical trials to assess a drug’s likelihood of causing severe hepatotoxicity; it is also used to predict which patients with DILI will develop ALF.12,20 According to Hy’s law, patients with AST or ALT elevations of three times ULN and total bilirubin elevations of two times ULN are at increased risk for ALF. In a retrospective cohort study of more than 15,000 patients with DILI, the Hy’s law criteria were found to have high specificity but low sensitivity for detecting individuals at risk for ALF.15 An alternative model, the Drug-Induced Liver Toxicity ALF Score, uses platelet count and bilirubin level to identify patients at risk for ALF with high sensitivity.15
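The Hy’s law criteria described above reduce to a simple two-part check, sketched here in Python. The function name and laboratory values are hypothetical, and the use of "at least" (>=) for each multiple of ULN is an assumption, since the text does not state whether the thresholds are inclusive.

```python
def meets_hys_law(alt, alt_uln, ast, ast_uln, total_bili, total_bili_uln):
    """Return True when AST or ALT is at least 3x ULN AND total bilirubin
    is at least 2x ULN (inclusive comparison is an assumption)."""
    aminotransferase_elevated = alt >= 3 * alt_uln or ast >= 3 * ast_uln
    bilirubin_elevated = total_bili >= 2 * total_bili_uln
    return aminotransferase_elevated and bilirubin_elevated


# Hypothetical values: ALT 200 U/L (ULN 40), AST 90 U/L (ULN 40),
# total bilirubin 3.0 mg/dL (ULN 1.2)
print(meets_hys_law(200, 40, 90, 40, 3.0, 1.2))
```

Both conditions must hold; an isolated aminotransferase elevation or an isolated bilirubin elevation does not satisfy the criteria.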

Patient education

Effective patient education is essential to decreasing DILI incidence at a time when herbal and dietary supplement consumption is increasing. Patients will often bring herbal and dietary supplements to their providers to obtain a safety profile prior to initiation. In these cases, it is essential to reinforce with patients the absence of federal regulation of these products. It should be stressed to patients that, due to the lack of government oversight, it is impossible to confidently identify the entirety and quantity of ingredients in these supplements. Furthermore, there is no existing protocol for surveillance or adverse event reporting for these products.21 Because these products are not routinely or systematically studied, even health care providers have no evidence on which to base monitoring or usage recommendations. Providers may direct patients to the National Institutes of Health’s LiverTox website (livertox.NIH.gov) to review prior case reports of hepatotoxicity for specific dietary and herbal supplements.

Level of education is associated with knowledge of the potential for overdose when taking OTC medications that contain acetaminophen.22 As a result, health care providers should strongly reinforce with patients the importance of reading all medication labels and abiding by the listed administration directions. In particular, providers should emphasize that the maximum daily dosage of acetaminophen is 4 g.23 For patients with chronic liver disease, a more conservative recommendation is warranted. Generally, patients with cirrhosis may be advised to consume up to 2 g/d of acetaminophen as a first-line treatment for pain. However, providers should ensure acetaminophen ingestion is limited to a brief period.24

Additionally, it is important to educate patients that many combination OTC medications contain acetaminophen. Of note, chronic opioid users are more likely to accurately identify OTC medications containing acetaminophen, compared with acute opioid users.22 These findings should compel health care providers to deliver in-depth education for all patients, particularly those with less education or experience with medications. Education on avoidance of offending medications, including medications within the same class, when appropriate, is essential for quality patient care.2
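Because acetaminophen is present in many combination products, the daily total can exceed the limit even when each product is taken as directed. The arithmetic can be sketched as follows; the product strengths and dosing frequencies are hypothetical, and the limits (4 g/d, or 2 g/d with cirrhosis) are the figures cited above. This is an illustration of the tally, not a dosing tool.

```python
MAX_DAILY_MG = 4000            # 4 g/d, as cited in the text
CIRRHOSIS_DAILY_MG = 2000      # 2 g/d for patients with cirrhosis, as cited


def total_acetaminophen_mg(products):
    """Sum acetaminophen across products.

    Each product is a (mg_per_dose, doses_per_day) pair.
    """
    return sum(mg_per_dose * doses_per_day for mg_per_dose, doses_per_day in products)


def exceeds_daily_limit(products, has_cirrhosis=False):
    """Compare the daily total against the applicable limit."""
    limit = CIRRHOSIS_DAILY_MG if has_cirrhosis else MAX_DAILY_MG
    return total_acetaminophen_mg(products) > limit


# Hypothetical day: a 500-mg analgesic taken 6 times plus a
# combination cold product containing 325 mg taken 4 times
day = [(500, 6), (325, 4)]
print(total_acetaminophen_mg(day), exceeds_daily_limit(day))
```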

OUTCOME FOR THE CASE PATIENT

Following discharge, the patient was monitored closely with regular clinic visits and blood work. Her liver test results improved gradually. A repeat biopsy was considered to evaluate for overlap or missed autoimmune disease; however, her repeat ANA was negative and her IgG was within normal limits. Within three months of admission, her liver tests normalized and repeat biopsy was deferred.

Upon review of the herbal beauty supplement the patient reported taking, shark cartilage was noted as a primary ingredient. In a case report, shark cartilage was identified as a hepatotoxin.25 The patient was advised never again to ingest the offending supplement or any other substance not regulated by the FDA. Furthermore, the offending supplement was listed as a medication allergy in her electronic health record.

CONCLUSION

It is crucial to emphasize to patients the potential hepatotoxicity of medications and herbal and dietary supplements, especially OTC medications that pose an overdose risk. Patients should review all new supplements with their providers prior to therapy initiation. With known hepatotoxins, providers should closely monitor patients for liver injury while treatment is ongoing. In suspected cases of DILI, a thorough history and physical exam will greatly inform the diagnosis. In the majority of cases, the suspect medication should be discontinued immediately, with subsequent assessment of liver response. Identification of DILI early in the course increases the likelihood of full hepatic recovery and improves patient outcomes.

References

1. Kamath PS, Wiesner RH, Malinchoc M, et al. A model to predict survival in patients with end-stage liver disease. Hepatology. 2001;33(2):464-470.

2. Leise MD, Poterucha JJ, Talwalkar JA. Drug-induced liver injury. Mayo Clin Proc. 2014;89(1):95-106.

3. Navarro VJ, Barnhart H, Bonkovsky HL, et al. Liver injury from herbals and dietary supplements in the US Drug-Induced Liver Injury Network. Hepatology. 2014;60(4):1399-1408.

4. Chalasani NP, Hayashi PH, Bonkovsky HL, et al. ACG Clinical Guideline: the diagnosis and management of idiosyncratic drug-induced liver injury. Am J Gastroenterol. 2014;109(7):950-966.

5. Chalasani N, Bonkovsky HL, Fontana R, et al; United States Drug Induced Liver Injury Network. Features and outcomes of 899 patients with drug-induced liver injury: the DILIN prospective study. Gastroenterology. 2015;148(7):1340-1352.

6. Goldberg DS, Forde KA, Carbonari DM, et al. Population-representative incidence of drug-induced acute liver failure based on an analysis of an integrated health care system. Gastroenterology. 2015;148(7):1353-1361.

7. Reuben A, Koch DG, Lee WM. Drug-induced acute liver failure: results of a US multicenter, prospective study. Hepatology. 2010;52(6):2065-2076.

8. Davern TJ, Chalasani N, Fontana RJ, et al; Drug-Induced Liver Injury Network (DILIN). Acute hepatitis E infection accounts for some cases of suspected drug-induced liver injury. Gastroenterology. 2011;141(5):1665-1672.e1-9.

9. Chalasani N, Fontana RJ, Bonkovsky HL, et al. Causes, clinical features, and outcomes from a prospective study of drug-induced liver injury in the United States. Gastroenterology. 2008;135(6):1924-1934.

10. Fisher K, Vuppalanchi R, Saxena R. Drug-induced liver injury. Arch Pathol Lab Med. 2015;139(7):876-887.

11. Fontana RJ, Hayashi PH, Barnhart H, et al. Persistent liver biochemistry abnormalities are more common in older patients and those with cholestatic drug induced liver injury. Am J Gastroenterol. 2015;110(10):1450-1459.

12. Punzalan CS, Barry CT. Acute liver failure: diagnosis and management. J Intensive Care Med. 2015 Oct 6. [Epub ahead of print]

13. Bower WA, Johns M, Margolis HS, et al. Population-based surveillance for acute liver failure. Am J Gastroenterol. 2007;102(11):2459-2463.

14. O’Grady JG, Schalm SW, Williams R. Acute liver failure: redefining the syndromes. Lancet. 1993;342(8866):273-275.

15. Lo Re V III, Haynes K, Forde KA, et al. Risk of acute liver failure in patients with drug-induced liver injury: evaluation of Hy’s law and a new prognostic model. Clin Gastroenterol Hepatol. 2015;13(13):2360-2368.

16. Polson J, Lee WM; American Association for the Study of Liver Diseases. AASLD position paper: the management of acute liver failure. Hepatology. 2005;41:1179-1197.

17. Lee WM, Hynan LS, Rossaro L, et al. Intravenous N-acetylcysteine improves transplant-free survival in early stage non-acetaminophen acute liver failure. Gastroenterology. 2009;137(3):856-864.

18. Rolando N, Harvey F, Brahm J. Prospective study of bacterial infection in acute liver failure: an analysis of fifty patients. Hepatology. 1990;11(1):49-53.

19. Panackel C, Thomas R, Sebastian B, Mathai SK. Recent advances in management of acute liver failure. Indian J Crit Care Med. 2015;19(1):27-33.

20. Temple R. Hy’s law: predicting serious hepatotoxicity. Pharmacoepidemiol Drug Saf. 2006;15(4):241-243.

21. Bunchorntavakul C, Reddy K. Review article: herbal and dietary supplement hepatotoxicity. Aliment Pharmacol Ther. 2012;37(1):3-17.

22. Boudreau DM, Wirtz H, Von Korff M, et al. A survey of adult awareness and use of medicine containing acetaminophen. Pharmacoepidemiol Drug Saf. 2013;22(3):229-240.

23. Burns MJ, Friedman SL, Larson AM. Acetaminophen (paracetamol) poisoning in adults: pathophysiology, presentation, and diagnosis. UpToDate. www.uptodate.com/contents/acetaminophen-paracetamol-poisoning-in-adults-pathophysiology-presentation-and-diagnosis. Accessed May 20, 2016.

24. Lewis JH, Stine JG. Review article: prescribing medications in patients with cirrhosis—a practical guide. Aliment Pharmacol Ther. 2013;37(12):1132-1156.

25. Ashar B, Vargo E. Shark cartilage-induced hepatitis. Ann Intern Med. 1996;125(9):780-781.

A 35-year-old African-American woman presented to the emergency department (ED) after being found disoriented and lethargic in her apartment by her friends. Given her altered mental status, the history of present illness was limited and informed mainly by her mother and friends. She had been unreachable by telephone for three days, and friends grew concerned when she was absent from work on two consecutive days. After obtaining access to her apartment, they found her in the bathroom jaundiced, incoherent, and surrounded by nonbloody, nonbilious vomit. She had no prior significant medical history, no documented daily medication, and no recent travel. Of note, previous medical contact was limited, and she did not have an established primary care provider. Additionally, there was no contributory family history, including autoimmune illness or liver disease.

ED presentation was marked by indications of grade 4 encephalopathy, including unresponsiveness to noxious stimuli. Initial laboratory work-up was notable for significantly elevated liver function test results (see Table 1). Based on her international normalized ratio (INR), total bilirubin, and creatinine, her initial Model for End-Stage Liver Disease score was 39, correlating to an 83% three-month mortality rate.1 Autoimmune marker testing revealed a positive antinuclear antibody (ANA), elevated immunoglobulin G (IgG), elevated smooth muscle antibody (IgG), normal antimitochondrial antibody, and normal anti-liver/kidney microsome antibody (IgG). Viral hepatitis serologies, including A, B, C, and E, were unremarkable. Ceruloplasmin and iron saturation were within normal limits. Acetaminophen, salicylate, and ethanol levels were negligible. Pregnancy testing and urine toxin testing were negative. Thyroid function tests were normal. Infectious work-up, including pan-culture, remained negative. Syphilis, herpes simplex virus (HSV), HIV, and varicella zoster testing were unremarkable.
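The Model for End-Stage Liver Disease score mentioned above combines INR, total bilirubin, and creatinine. A minimal sketch of the commonly published formula follows. The coefficients are not given in this article, and the bounding rules used here (laboratory values floored at 1.0, creatinine capped at 4.0 mg/dL, score capped at 40) are assumptions based on widely used allocation conventions. The laboratory values shown are hypothetical and are not the case patient's results.

```python
import math


def meld_score(bilirubin_mg_dl, inr, creatinine_mg_dl):
    """Sketch of the MELD calculation from total bilirubin, INR, and creatinine.

    Bounding rules are assumptions: values below 1.0 are set to 1.0,
    creatinine is capped at 4.0 mg/dL, and the score is capped at 40.
    """
    bili = max(bilirubin_mg_dl, 1.0)
    bounded_inr = max(inr, 1.0)
    creat = min(max(creatinine_mg_dl, 1.0), 4.0)
    raw = (
        3.78 * math.log(bili)
        + 11.2 * math.log(bounded_inr)
        + 9.57 * math.log(creat)
        + 6.43
    )
    return min(round(raw), 40)


# Hypothetical values: bilirubin 12.0 mg/dL, INR 3.0, creatinine 2.5 mg/dL
print(meld_score(12.0, 3.0, 2.5))
```

The natural logarithms mean that each doubling of a laboratory value adds a fixed increment to the score, with INR weighted most heavily.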

CT of the head was not consistent with cerebral edema. CT of the abdomen and pelvis showed evidence of chronic pancreatitis and trace perihepatic ascites. She was intubated for airway protection and transferred to the medical ICU.

On liver biopsy, the patient was found to have acute hepatitis with centrilobular necrosis, approximately 30% to 40%, and prominent cholestasis. Histologically, these findings were reported as most consistent with drug-induced liver injury. Given her comatose state, coagulopathy, and extremely limited life expectancy without liver transplantation, the patient was listed for transplant as a status 1A candidate with fulminant hepatic failure.

She was placed on propofol and N-acetylcysteine infusions in addition to supportive IV resuscitation. The patient’s synthetic and neurocognitive function improved gradually over several weeks, and she was able to provide collateral history. She denied taking any prescription medications or having any ongoing medical issues. She did report that for two months prior to admission she had been taking an oral beauty supplement designed to enhance hair, skin, and nails. She obtained the supplement online. She could not recall the week leading up to admission, but she did note increasing malaise and fatigue beginning two weeks prior to admission. She denied any recreational drug or alcohol use.

DISCUSSION

Drug-induced liver injury (DILI) is a relatively uncommon occurrence in the United States.2 It is estimated to occur in approximately 20 individuals per 100,000 persons per year.2 However, DILI incidence secondary to herbal and dietary supplement use appears to be on the rise in the US. In a prospective study conducted by the Drug-Induced Liver Injury Network (DILIN) that included patients with liver injury referred to eight DILIN centers between 2004 and 2013, the proportion of DILI cases caused by herbal and dietary supplements increased from 7% to 20% over the study period.3

DILI can be subclassified as intrinsic or idiosyncratic. Intrinsic DILI results from substances that cause liver injury with a predictable time course and natural history. Idiosyncratic DILI results from substances that cause a varied, unpredictable occurrence of liver injury in susceptible individuals.4 Overall, acetaminophen overdose is the most common cause of DILI.2 However, the most common idiosyncratic offending agents, taken at FDA-approved dosages, are antimicrobials (see Table 2).5 The second most common offending agents are herbal and dietary supplements.5