Computerized checklist can reduce CLABSI rate

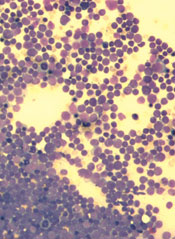

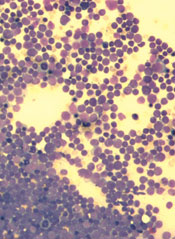

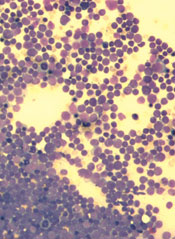

Staphylococcus infection

Credit: Bill Branson

A computerized safety checklist that pulls information from patients’ electronic medical records can reduce the incidence of central line-associated bloodstream infections (CLABSIs), according to a study published in Pediatrics.

The study was conducted among children admitted to the pediatric intensive care unit at Lucile Packard Children’s Hospital Stanford in California.

Researchers found the safety checklist increased overall staff compliance with best practices for CLABSI prevention and resulted in a 3-fold reduction in CLABSI incidence.

The automated checklist and the dashboard-style interface used to interact with it were designed to help caregivers follow national guidelines for CLABSI prevention. The system combed through data in each patient’s electronic medical record and pushed alerts to physicians and nurses when a patient’s central line was due for care.

The dashboard interface displayed real-time alerts on a large LCD screen at the nurses’ station. Alerts, shown as red, yellow, or green dots beside patients’ names, were generated when, for example, the dressing on a patient’s central line was due to be changed or when it was time for caregivers to re-evaluate whether medications given through the central line could be switched to oral formulations.
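As an illustration only, the traffic-light logic described above might look like the Python sketch below. The article does not say how the colors were assigned, so the 7-day dressing interval, the 1-day warning window, and the function name `dressing_alert` are hypothetical choices for this sketch, not details of the Stanford system.

```python
from datetime import date, timedelta

# Hypothetical parameters: the source does not specify the real intervals or thresholds.
DRESSING_INTERVAL = timedelta(days=7)  # assumed time between dressing changes
WARNING_WINDOW = timedelta(days=1)     # assumed "due soon" window before the deadline


def dressing_alert(last_change: date, today: date) -> str:
    """Return a traffic-light status for a central-line dressing (hypothetical rule)."""
    due = last_change + DRESSING_INTERVAL
    if today >= due:
        return "red"     # change is due or overdue
    if today >= due - WARNING_WINDOW:
        return "yellow"  # change falls due within the warning window
    return "green"       # no action needed yet


# Example: a dressing last changed on June 1, checked on three later days
for day in (date(2011, 6, 3), date(2011, 6, 7), date(2011, 6, 9)):
    print(day, dressing_alert(date(2011, 6, 1), day))
```

A real implementation would derive `last_change` from documentation in the electronic medical record; here it is simply passed in.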

“The information was visible and easy to digest,” said study author Deborah Franzon, MD. “We improved compliance with best-care practices and pulled information that otherwise would have been difficult to look for. It reduced busy work and made it possible for the healthcare team to perform their jobs more efficiently and effectively.”

The system was implemented on May 1, 2011, but the researchers considered the rollout period to extend through August 31, 2011, so this period was excluded from the analysis.

The team compared data on CLABSI rates, compliance with bundle elements, and staff perceptions/knowledge before the intervention began—from June 1, 2009, to April 30, 2011—and after the system was fully implemented—September 1, 2011, to December 31, 2012.

CLABSI rates decreased from 2.6 per 1000 line-days before the intervention to 0.7 per 1000 line-days afterward (P=0.02). There were 19 CLABSIs over 7322 line-days before the intervention and 7 CLABSIs over 6155 line-days after it.
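CLABSI rates are conventionally expressed per 1000 central line-days. The minimal Python sketch below applies that calculation to the raw counts quoted above. Note that the crude post-intervention figure it yields (about 1.1 per 1000 line-days) is higher than the reported 0.7; the study's own rate calculation may differ from this simple ratio, so the sketch should not be read as reproducing the published result. The ratio of the two reported rates (about 3.7) is consistent with the "3-fold" reduction described earlier.

```python
def rate_per_1000_line_days(infections: int, line_days: int) -> float:
    """Crude infection rate: events divided by central line-days, scaled to 1000 line-days."""
    return infections / line_days * 1000


pre = rate_per_1000_line_days(19, 7322)   # counts quoted for the pre-intervention period
post = rate_per_1000_line_days(7, 6155)   # counts quoted for the post-intervention period
print(f"pre: {pre:.1f}, post: {post:.1f} per 1000 line-days")

# Fold reduction implied by the two *reported* rates (2.6 and 0.7)
print(f"ratio of reported rates: {2.6 / 0.7:.1f}")
```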

The researchers estimated that the intervention saved approximately $260,000 per year in healthcare costs. Treating a single CLABSI costs approximately $39,000.
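The two dollar figures above can be related by simple division. If the savings estimate rests on the per-infection treatment cost, it corresponds to roughly 7 infections avoided per year. The article does not describe the researchers' cost model, so this back-of-the-envelope check is an assumption, not their method.

```python
ANNUAL_SAVINGS = 260_000   # reported estimated savings, USD per year
COST_PER_CLABSI = 39_000   # reported approximate cost of treating one CLABSI, USD

# Assumption: savings = infections avoided x cost per infection
implied_infections_avoided = ANNUAL_SAVINGS / COST_PER_CLABSI
print(f"about {implied_infections_avoided:.1f} CLABSIs avoided per year")
```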

The team also found that daily documentation of line necessity increased from 30% before the intervention to 73% after (P<0.001). Compliance with dressing changes increased from 87% to 90% (P=0.003).

Compliance with cap changes increased from 87% to 93% (P<0.001). And compliance with port needle changes increased from 69% to 95% (P<0.001). However, compliance with insertion bundle documentation decreased from 67% to 62% (P=0.001).
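Because the compliance results mix large and small shifts, it can help to express each one as an absolute change in percentage points. The Python sketch below does this for the reported figures; it is descriptive arithmetic only and says nothing about statistical significance, which the P values above address.

```python
# Reported compliance before and after the intervention (%), taken from the text above
compliance = {
    "daily documentation of line necessity": (30, 73),
    "dressing changes": (87, 90),
    "cap changes": (87, 93),
    "port needle changes": (69, 95),
    "insertion bundle documentation": (67, 62),
}


def point_changes(data: dict[str, tuple[int, int]]) -> dict[str, int]:
    """Absolute change (after minus before) in percentage points for each measure."""
    return {measure: after - before for measure, (before, after) in data.items()}


for measure, change in point_changes(compliance).items():
    print(f"{measure}: {change:+d} percentage points")
```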

After the system was implemented, there was a significant increase in staff perception that the medical team addressed central line necessity during rounds (P=0.02). But there was no significant difference in communication among team members (P=0.73) or knowledge regarding the components of the maintenance bundle (P=0.39).

Nevertheless, the researchers concluded that their system promotes compliance with best practices for CLABSI prevention, thereby reducing the risk of harm to patients.

The team hopes to use the system in other ways, such as monitoring the recovery of children who have received organ transplants.

“[The system] lets physicians focus on taking care of the patient while automating some of the background safety checks,” said study author Natalie Pageler, MD. “The nice thing about this tool is that it’s integrated into the electronic medical record, which we use every single day.”

Transfusion increases risks in PCI patients, study shows

Credit: UAB Hospital

In a large study, patients who received red blood cell (RBC) transfusions after percutaneous coronary intervention (PCI) had a higher risk of in-hospital heart attack, stroke, and death than their non-transfused peers.

The retrospective study included data on nearly 2 million patients who underwent PCI at hospitals across the US.

The research revealed considerable variation in transfusion practices for this patient population, although the overall rate of transfusion was low.

According to the study authors, this variation is not surprising, as giving RBC transfusions to patients with coronary artery disease is controversial.

They said there is a growing body of evidence suggesting that transfusion in the setting of acute coronary syndromes (ACS) and in hospitalized patients with a history of coronary artery disease may be associated with an increased risk of heart attack and death.

Furthermore, current guideline statements are cautious about recommending transfusion in hospitalized patients with a history of coronary artery disease and make no recommendation on transfusion in the setting of ACS, citing an absence of definitive evidence.

With this in mind, Matthew W. Sherwood, MD, of Duke Clinical Research Institute in Durham, North Carolina, and his colleagues examined transfusion practice patterns and outcomes in 1,967,218 patients (2,258,711 visits) who underwent PCI from July 2009 to March 2013 at 1431 US hospitals.

The team reported their findings in JAMA.

Overall, 2.1% of patients received a transfusion, but practices varied among hospitals, with unadjusted transfusion rates ranging from 0% to 13%. Most hospitals (96.3%) transfused fewer than 5% of patients, while 3.7% transfused 5% of patients or more.

Risk-standardized rates of transfusion by hospital ranged from 0.3% to 9.3%. Rates were adjusted for factors including age, sex, body mass index, ACS presentation, PCI status, and history of congestive heart failure.

Compared with no transfusion, receiving an RBC transfusion was associated with a greater risk of heart attack (4.5% vs 1.8%), stroke (2.0% vs 0.2%), and in-hospital death (12.5% vs 1.2%), irrespective of bleeding complications.
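The percentages above can be turned into crude (unadjusted) risk ratios to show the relative size of each difference. The Python sketch below does only that. These ratios come from the summary figures quoted in this article, do not account for the differences in patient characteristics described below, and should not be confused with any adjusted estimates in the original study.

```python
# Reported in-hospital event rates (%): (transfused, not transfused)
outcomes = {
    "heart attack": (4.5, 1.8),
    "stroke": (2.0, 0.2),
    "in-hospital death": (12.5, 1.2),
}


def crude_risk_ratio(exposed_pct: float, unexposed_pct: float) -> float:
    """Unadjusted risk ratio: event rate in transfused / event rate in non-transfused patients."""
    return exposed_pct / unexposed_pct


for outcome, (transfused, not_transfused) in outcomes.items():
    print(f"{outcome}: {crude_risk_ratio(transfused, not_transfused):.1f}x")
```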

Patients were more likely to receive a transfusion if they were older or female or had hypertension, diabetes, advanced renal dysfunction, prior heart attack, or heart failure.

The researchers speculated that the variation in transfusion practice patterns observed in this study may be related to several factors, including previously held beliefs about the benefit of transfusion and recently published data indicating the lack of benefit and potential hazard associated with transfusion.

The team said these data highlight the need for randomized trials of transfusion strategies to guide practice in patients undergoing PCI. Until such trials provide more definitive answers, they said, clinicians should try to reduce the risk of bleeding and, therefore, the need for transfusion in patients undergoing PCI.

Peer‐Reviewed Journals and Social Media

Only 20 years ago, science from peer‐reviewed journals was still distributed and consumed in the same fashion that evolved from the earliest days of medical science: in print at monthly or weekly intervals. The Internet radically accelerated this paradigm but left the essential processes intact; journals could publish the information and readers could read it more easily, but the basic forums for interaction and discussion over the content remained the same. Enter Web 2.0 and the era of social media. Authors, editors, and readers can now interact easily with each other over the content in real time and across great distances.

Social media may not have changed the way science is produced and reviewed, but it is certainly changing how people consume and use the science. Some have suggested that social media activity around particular articles or journals may be a more important measure of impact than traditional measures of citation,[1] and others have suggested that Twitter activity in particular has changed both the speed and quality of discussion about new studies within the scientific community.[2] In the face of these trends, the Journal of Hospital Medicine (JHM) has decided to develop a bold strategy for leadership in this emerging area, with an initial focus on increasing JHM's activity and visibility on Twitter.

As part of this initial focus, JHM has successfully developed and implemented a protocol for authors to compose 2 tweets describing their publications: the first announces the article's publication (e.g., "New evidence on white coats and risk for hospital‐acquired infections"), and the second promotes a key point from the article (e.g., "Does the doctor's white coat spread hospital infection?"). These tweets are encouraged (but not required) from the corresponding author of every article in every issue, and JHM's editorial staff works with individual authors to refine their message and maximize its impact. To help authors, we have developed several tips for effective tweeting (Table 1).

Table 1. Tips for Effective Tweeting

| Tip | Guidance |
| --- | --- |
| 1. Make it short | The limit is 140 characters, but getting retweets requires additional room for others to add their 2 cents, so try to get it under 100 characters. |
| 2. Make it simple | If your tweet includes complex terminology or analytic methods, it is not likely to get picked up. Make it easy to read for the lay public. |
| 3. Make it clear | Your article may have several conclusions, but pick the most newsworthy for the general public. It is usually best to focus on the main finding. |
| 4. Pose a question | Raise interest by piquing the curiosity of potential readers. A good question can motivate readers to click on your article to find the answer. |
| 5. Add a hashtag | Hashtags index tweets on Twitter. It is best to pick 1 or 2 existing tags from the healthcare hashtag project that fit the focus of your article ( |
| 6. Build your following | Include your Twitter handle to alert current/prospective followers of your publication. |
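Several of the Table 1 tips are mechanical enough to check automatically before a tweet is submitted. The Python sketch below is a hypothetical helper, not a JHM tool: `tweet_warnings` and its messages are illustrative names, and Python's `len()` only approximates Twitter's own character counting, which differs in some cases (for example, for links). The hashtag in the usage example is added for illustration.

```python
import re

TWITTER_LIMIT = 140  # character limit cited in Table 1
TARGET_LENGTH = 100  # recommended length, leaving room for retweet comments


def tweet_warnings(text: str) -> list[str]:
    """Return advisory warnings for a draft tweet, based loosely on the Table 1 tips."""
    warnings = []
    if len(text) > TWITTER_LIMIT:
        warnings.append("over the 140-character limit")
    elif len(text) > TARGET_LENGTH:
        warnings.append("over the recommended 100 characters")
    if not re.search(r"#\w+", text):
        warnings.append("no hashtag")              # tip 5
    if not re.search(r"@\w+", text):
        warnings.append("no Twitter handle")       # tip 6
    if "?" not in text:
        warnings.append("no question to pique curiosity")  # tip 4
    return warnings


print(tweet_warnings("Does the doctor's white coat spread hospital infection? #ptsafety"))
```

The simplicity and clarity tips (2 and 3) still call for human judgment, which is where editorial review comes in.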

Even after just 1 year of this Twitter‐focused strategy, we are already seeing noteworthy impact and have learned several lessons.

AUTHORS CAN AND WILL GENERATE TWEETS FOR THEIR ARTICLES

When we started asking authors to generate tweets for their articles, Twitter was relatively new, and we were unsure if authors would be willing and able to participate. Since we started, we have noticed a steady increase in the number of author‐generated tweets. Today, more than three‐quarters of tweets per issue are author generated. Anecdotal feedback has been very positive, and authors have expressed interest both in the plan for tweeting and in feedback on how well their tweets were written. If authors or institutions are on Twitter, we also encourage including the Twitter name or handle in the tweet, which lets others on Twitter identify directly with the author or institution and often results in greater interest in a particular tweet. Of note, authors have no obligation to become regular users of Twitter or engage with followers of JHM's Twitter feed, but many find themselves following the journal's feed more closely (and responding to posts by other authors) once they have joined Twitter and tweeted about their own work via JHM.

#HASHTAGS MAKE IT HAPPEN

Because Twitter users are a very large crowd of people with diverse interests, it is important to target tweets to the groups that would be most interested in studies. The use of hashtags makes it easy to index tweets. One of the major edits of author‐generated tweets that we provide is to index the articles to the most popular hashtags. For example, medical education studies can be indexed under #meded, which is a popular hashtag for clinician educators. Other important hashtags for hospitalists include #ptsafety, #readmissions, #healthpolicy, #healthcosts, or #infectiousdisease. To select hashtags, we have found the healthcare hashtag directory maintained by Symplur (Upland, CA;
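A simple way to support this indexing step is to pull the hashtags out of a draft tweet and compare them with a list of tags known to reach hospitalists. In the Python sketch below, `HOSPITALIST_TAGS` contains only the hashtags named in this editorial (not an exhaustive or official list), `split_hashtags` is a hypothetical helper, and the sample tweet and its `#HospitalMedicine` tag are invented for illustration.

```python
import re

# Hashtags named in this editorial as relevant to hospitalists (illustrative, not exhaustive)
HOSPITALIST_TAGS = {"#meded", "#ptsafety", "#readmissions", "#healthpolicy",
                    "#healthcosts", "#infectiousdisease", "#hscm"}


def split_hashtags(tweet: str) -> tuple[set[str], set[str]]:
    """Return (known, other) hashtags found in a tweet, compared case-insensitively."""
    found = {tag.lower() for tag in re.findall(r"#\w+", tweet)}
    return found & HOSPITALIST_TAGS, found - HOSPITALIST_TAGS


known, other = split_hashtags("New #readmissions data from Project BOOST #HospitalMedicine")
print(sorted(known), sorted(other))
```

Tags in the "other" set are not necessarily wrong; they are simply candidates to check against a maintained directory before posting.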

HIGH IMPACT STUDIES MAKE A BIGGER IMPACT ON TWITTER

We observed a high number of retweets and comments about the most-viewed studies on JHM online, which concerned Project BOOST (Better Outcomes for Older Adults Through Safe Transitions) and the Society of Hospital Medicine's Choosing Wisely campaign. This is not surprising given the national focus on readmissions and cost‐conscious care. Moreover, our experience is in line with observations that Twitter provides an additional source of page views and article downloads for medical journals[3] and with research suggesting that studies that are tweeted are later cited more often.[4, 5]

TECHNOLOGY STUDIES ARE ADORED BY TWITTER

Studies examining the use of smartphones, apps, or social media in healthcare draw a lot of attention on Twitter, particularly from other technophiles in healthcare who often use the #hscm (healthcare social media) hashtag. Such studies often resonate with Twitter users, who tend to be highly engaged with technology and interested in advancing its use in the healthcare workplace.

JHM's social media strategy has already been very successful in its early implementation; the JHM Twitter feed has >600 followers. Although most authors submit their own tweets (71/117, or 61%, of articles over the last year), JHM has also created social media roles for editors to fill in missing tweets and ensure timely, consistent output from the JHM feed. We have also started a Facebook page, with a rapidly growing number of followers, and we continue to see our social media influence scores rise. In the next year we hope to develop a JHM blog, with invited commentary as well as a process for unsolicited submissions from our readership.

Increasingly, a journal's impact (with a lowercase i) is measured not only by the traditional metric of impact factor (the average number of citations received in a given year by articles the journal published in the preceding 2 years), but also by the journal's ability to disseminate knowledge and raise awareness of issues key to the field. Social media is a major element of the next phase of evidence dissemination, and JHM is pleased to be developing and growing its footprint in the digital world.
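For contrast with social media reach, the conventional 2-year impact factor is a single ratio: citations received in a given year by items the journal published in the previous 2 years, divided by the number of citable items it published in those 2 years. The Python sketch below uses hypothetical numbers, not JHM data.

```python
def impact_factor(citations_in_year: int, citable_items_prev_two_years: int) -> float:
    """Two-year impact factor: citations received in year Y to items published in
    years Y-1 and Y-2, divided by the citable items published in Y-1 and Y-2."""
    return citations_in_year / citable_items_prev_two_years


# Hypothetical journal: 500 citations in 2013 to its 2011-2012 papers, 250 citable papers
print(impact_factor(500, 250))
```

Nothing in this ratio reflects how widely an article is read or discussed outside the citing literature, which is the gap that social media metrics aim to fill.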

1. Exploring the use of social media to measure journal article impact. AMIA Annu Symp Proc. 2011;2011:374–381.

2. Peer review: trial by Twitter. Nature. 2011;469(7330):286–287.

3. Social media release increases dissemination of original articles in the clinical pain sciences. PLoS One. 2013;8(7):e68914.

4. Can tweets predict citations? Metrics of social impact based on Twitter and correlation with traditional metrics of scientific impact. J Med Internet Res. 2011;13(4):e123.

5. Do altmetrics work? Twitter and ten other social web services. PLoS One. 2013;8(5):e64841.

Only 20 years ago, science from peer‐reviewed journals was still distributed and consumed in the same fashion that evolved from the earliest days of medical science: in print at monthly or weekly intervals. The Internet radically accelerated this paradigm but left the essential processes intact; journals could publish the information and readers could read it more easily, but the basic forums for interaction and discussion over the content remained the same. Enter Web 2.0 and the era of social media. Authors, editors, and readers can now interact easily with each other over the content in real time and across great distances.

Social media may not have changed the way science is produced and reviewed, but it is certainly changing how people consume and use the science. Some have suggested that social media activity around particular articles or journals may be a more important measure of impact than traditional measures of citation,[1] and others have suggested that Twitter activity in particular has changed both the speed and quality of discussion about new studies within the scientific community.[2] In the face of these trends, the Journal of Hospital Medicine (JHM) has decided to develop a bold strategy for leadership in this emerging area, with an initial focus on increasing JHM's activity and visibility on Twitter.

As part of this initial focus, JHM has successfully developed and implemented a protocol for use by authors to compose 2 Tweets describing their publications: the first announces the article's publication (e.g., New evidence on white coats and risk for hospital‐acquired infections), and the second promotes a key point from the article (e.g., Does the doctor's white coat spread hospital infection?). These Tweets are encouraged (but not required) from the corresponding author for every article in every edition, and JHM's editorial staff works with individual authors to refine their message and maximize their impact. To help authors, we have developed several tips for effective tweeting (Table 1).

| 1. Make it short:The limit is 140 characters, but getting retweets requires additional room for others to add their 2 cents, so try to get it under 100 characters. |

| 2. Make it simple: If your tweet includes complex terminology or analytic methods, it is not likely to get picked up. Make it easy to read for the lay public. |

| 3. Make it clear: Your article may have several conclusions, but pick the most newsworthy for the general public. It is usually best to focus on the main finding. |

| 4. Pose a question: Raise interest by piquing the curiosity of potential readers. A good question can motivate readers to click on your article to find the answer. |

| 5. Add a hashtag: Hashtags index tweets on Twitter. It is best to pick 1 or 2 existing tags from the healthcare hashtag project that fit the focus of your article ( |

| 6. Build your following: Include your Twitter handle to alert current/prospective followers of your publication. |

Even after just 1 year of this Twitter‐focused strategy, we are already seeing noteworthy impact and have learned several lessons.

AUTHORS CAN AND WILL GENERATE TWEETS FOR THEIR ARTICLES

When we started asking authors to generate tweets for their articles, Twitter was relatively new, and we were unsure if authors would be willing and able to participate. Since we started, we have noticed a steady increase in the number of author‐generated tweets. Today, more than three‐quarters of tweets per issue are author generated. Anecdotal feedback has been very positive, and authors have expressed interest in the plan for tweeting as well as feedback on how well their tweets were written. If authors or institutions are on Twitter, we also encourage using the Twitter name or handle in the tweet, which serves as a way for others on Twitter to identify directly with the author or institution and often results in greater interest in a particular tweet. Of note, authors have no obligation to become regular users of Twitter or engage with followers of JHM's Twitter feed, but many find themselves following the journal's feed more closely (and responding to posts by other authors) once they have joined Twitter and tweeted about their own work via JHM.

#HASHTAGS MAKE IT HAPPEN

Because Twitter users are a very large crowd of people with diverse interests, it is important to target tweets to the groups that would be most interested in particular studies. Hashtags make it easy to index tweets, and one of the major edits we make to author‐generated tweets is indexing articles to the most popular relevant hashtags. For example, medical education studies can be indexed under #meded, which is a popular hashtag for clinician educators. Other important hashtags for hospitalists include #ptsafety, #readmissions, #healthpolicy, #healthcosts, and #infectiousdisease. To select hashtags, we have found the healthcare hashtag directory maintained by Symplur (Upland, CA) to be a useful resource.

HIGH IMPACT STUDIES MAKE A BIGGER IMPACT ON TWITTER

We observed a high number of retweets and comments about the articles that were the most viewed studies on JHM online, which concerned Project BOOST (Better Outcomes for Older Adults Through Safe Transitions) and the Society of Hospital Medicine's Choosing Wisely campaign. This is not surprising given the national focus on readmissions and cost‐conscious care. Moreover, our experience is in line with observations that Twitter provides an additional source of page views and article downloads for medical journals[3] and with research suggesting that studies that are tweeted are subsequently cited more often.[4, 5]

TECHNOLOGY STUDIES ARE ADORED BY TWITTER

Studies examining the use of smartphones, apps, or social media in healthcare draw a lot of attention on Twitter, particularly from other technophiles in healthcare who often use the #hcsm (healthcare social media) hashtag. Such studies often resonate with Twitter users, who tend to be highly engaged with technology and interested in how to advance its use in the healthcare workplace.

JHM's social media strategy has already been very successful in its early implementation; the JHM Twitter feed has >600 followers. Although most authors submit their own tweets (71/117, or 61%, of articles over the last year), JHM has also created social media roles for editors to fill in tweets when they are missing and to ensure timely and consistent output from the JHM feed. We have also started a Facebook page, with a rapidly growing number of followers, and we continue to see our social media influence scores rise. In the next year we hope to develop a JHM blog, with invited commentary as well as a process for unsolicited submissions from our readership.

Increasingly, a journal's impact (small i) is measured not only in the traditional metric of impact factor (a representation of the number of papers cited in a given journal publication year), but also by the journal's ability to disseminate knowledge and awareness of issues key to the field. Social media is a major element of the next phase of evidence dissemination, and JHM is pleased to be developing and growing its footprint in the digital world.

- Exploring the use of social media to measure journal article impact. AMIA Annu Symp Proc. 2011;2011:374–381.

- Peer review: trial by Twitter. Nature. 2011;469(7330):286–287.

- Social media release increases dissemination of original articles in the clinical pain sciences. PLoS One. 2013;8(7):e68914.

- Can tweets predict citations? Metrics of social impact based on Twitter and correlation with traditional metrics of scientific impact. J Med Internet Res. 2011;13(4):e123.

- Do altmetrics work? Twitter and ten other social web services. PLoS One. 2013;8(5):e64841.

FIM at Discharge and Rehospitalization

Federally mandated pay‐for‐performance initiatives promote minimizing 30‐day hospital readmissions to improve healthcare quality and reduce costs. Although the reasons for readmissions are multifactorial, many patients are readmitted for a condition other than their initial hospital admitting diagnosis.[1] Impairments in functional status experienced during acute care hospitalization contribute to patients being discharged in a debilitated state and being vulnerable to postdischarge complications and potentially hospital readmission.[2] As such, decreased functional status may be an important and potentially modifiable risk factor for acute care hospital readmission.[3]

Previous studies have suggested that impaired functional status may be an important predictor of rehospitalization.[4, 5, 6, 7] However, inferences from existing studies are limited because they did not consider functional status as their primary focus, considered only specific patient populations (eg, stroke), or examined readmissions occurring well beyond the 30‐day period defined by federal pay‐for‐performance standards.[4, 5, 6, 8, 9, 10] Our objective was to evaluate the association between functional status near the time of discharge from the acute care hospital and 30‐day readmission for patients admitted to an acute inpatient rehabilitation facility. As a secondary objective, we sought to investigate the relationship between functional status and readmission by diagnostic category (medical, neurologic, or orthopedic).

METHODS

Study Population and Setting

We conducted a single‐center, retrospective study of patients admitted to an inpatient rehabilitation facility at a community hospital between July 1, 2006 and December 31, 2012. This facility provides intensive rehabilitation consisting of 3 hours of therapy per day, skilled nursing care on a 24‐hour basis, and medical care by a physiatrist. We excluded patients who died during inpatient rehabilitation (n=15, 0.2%) and patients not admitted directly from an acute care setting (n=178, 2.0%).

Data Source and Covariates

Data were derived from the Uniform Data System for Medical Rehabilitation (UDSMR), which is an administrative database providing the following data upon admission to an inpatient rehabilitation facility[11, 12, 13]: age, gender, race/ethnicity, marital status, the discharge setting, the admission Functional Independence Measure (FIM) score (details further below), and admission diagnostic category as defined by the primary discharge diagnosis from the acute care hospital and grouped by function related groups (a case‐mix system for medical rehabilitation).[12, 14] The 3M ClinTrac management software (3M, St. Paul, MN), used for mandatory reporting to the State of Maryland, provided all‐payer refined diagnosis related group (APRDRG) and severity of illness (SOI) combinations (a tool to group patients into clinically comparable disease and severity‐of‐illness categories expected to use similar resources and experience similar outcomes). The University HealthSystem Consortium (UHC) database provided national readmission rates for all APRDRG‐SOI combinations using a methodology that has been previously described.[15, 16] Expected readmission rates for APRDRG‐SOI combinations served as a patient risk stratification tool based on clinical logic that evaluates age, comorbidities, principal diagnosis during hospitalization, and procedures conducted during hospitalization.[17]

Primary Outcome: Acute Care Readmission

The primary outcome was all‐cause acute care readmission, defined as patient transfer to an acute care hospital during inpatient rehabilitation within 30 days from admission to inpatient rehabilitation. The care model for our inpatient rehabilitation unit is such that when patients become sick or develop a complication, they are admitted directly to a clinical unit (eg, intensive care unit) at the community hospital through a rapid‐response intervention, or the physiatrist arranges with an admitting inpatient attending to accept the patient directly to his or her service.
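The outcome definition above amounts to a date-window check on each rehabilitation stay. The sketch below is a hypothetical helper, not the study's code; in particular, counting a transfer on day 30 as inside the window is an assumption, since the text says only "within 30 days from admission to inpatient rehabilitation."

```python
from datetime import date
from typing import Optional


def readmitted_within_window(rehab_admission: date,
                             acute_transfer: Optional[date],
                             window_days: int = 30) -> bool:
    """Flag an all-cause acute care readmission: a transfer from inpatient
    rehabilitation back to an acute care hospital within `window_days` of
    rehabilitation admission. Day 30 is treated as inside the window
    (an assumption, not stated in the source)."""
    if acute_transfer is None:  # no transfer back to acute care during the stay
        return False
    elapsed = (acute_transfer - rehab_admission).days
    return 0 <= elapsed <= window_days


print(readmitted_within_window(date(2010, 3, 1), date(2010, 3, 7)))  # True (6 days)
print(readmitted_within_window(date(2010, 3, 1), None))              # False
```

Because transfers are only observed during the rehabilitation stay, a patient discharged home before day 30 is simply counted as not readmitted under this definition.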

Primary Exposure: Functional Independence Measure

Functional status was measured using the FIM score.[18] The FIM score is an 18‐item measure of functional status, with each item scored on a scale from 1 to 7 (dependent to independent). Various aspects of motor function and cognitive function are assessed. The FIM has been validated and shown to be reliable and reproducible.[13, 19, 20] By definition for the FIM instrument, admission FIM scores are assessed by trained multidisciplinary personnel over the first 72 hours of the rehabilitation stay; in this study, they served as a proxy for patient functional status upon discharge from the acute care setting. This 72‐hour time window allows for full assessment by therapists and nurses; however, in clinical practice at the inpatient rehabilitation unit involved in this study, much of the FIM assessment occurs within the first 24 hours of the rehabilitation stay. For our analysis, we divided FIM scores into low, middle, and high functional groups. The thresholds for these groups were based on total FIM score tertiles from a prior study: <60, 60 to 76, and >76.[16] As a secondary analysis, we created 6 subscales of the overall FIM score based on previous research. These subscales included transfers (transfer to chair/wheelchair, toilet, and tub/shower), locomotion (walking and stairs), self‐care (eating, grooming, bathing, dressing, and toileting), sphincter control (bladder and bowel management), communication (comprehension and expression), and social cognition (social interaction, problem solving, and memory).[21]
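The grouping and subscale scheme described above can be sketched as follows. The item keys are hypothetical labels; splitting dressing into upper‐ and lower‐body items (which is how the instrument reaches 18 items) goes beyond the wording in the text. The cutoffs are the tertile thresholds stated here (<60, 60 to 76, >76).

```python
# Hypothetical item keys, grouped into the 6 subscales listed in the text.
FIM_SUBSCALES = {
    "transfers": ["transfer_chair_wheelchair", "transfer_toilet", "transfer_tub_shower"],
    "locomotion": ["walk", "stairs"],
    "self_care": ["eating", "grooming", "bathing", "dressing_upper",
                  "dressing_lower", "toileting"],
    "sphincter_control": ["bladder", "bowel"],
    "communication": ["comprehension", "expression"],
    "social_cognition": ["social_interaction", "problem_solving", "memory"],
}


def subscale_scores(items: dict[str, int]) -> dict[str, int]:
    """Sum item scores (each 1-7) into the 6 subscales."""
    expected = {k for keys in FIM_SUBSCALES.values() for k in keys}
    if set(items) != expected:
        raise ValueError("expected exactly the 18 FIM items")
    if any(not 1 <= v <= 7 for v in items.values()):
        raise ValueError("each FIM item is scored 1 (dependent) to 7 (independent)")
    return {name: sum(items[k] for k in keys) for name, keys in FIM_SUBSCALES.items()}


def fim_group(total: int) -> str:
    """Map a total FIM score (18-126) to the low/middle/high groups."""
    if not 18 <= total <= 126:
        raise ValueError("total FIM score must be between 18 and 126")
    if total < 60:
        return "low"
    if total <= 76:
        return "middle"
    return "high"


# Example: a patient scoring 4 (minimal assistance) on every item.
items = {k: 4 for keys in FIM_SUBSCALES.values() for k in keys}
scores = subscale_scores(items)
total = sum(scores.values())
print(scores["self_care"], total, fim_group(total))  # 24 72 middle
```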

Statistical Analysis

To evaluate differences in patient characteristics by diagnostic category, analysis of variance and χ2 tests were used for continuous and dichotomous variables, respectively. Logistic regression was used to evaluate the association between FIM score category and readmission status, adjusting for potentially confounding variables available from the UDSMR and UHC databases. We used interaction terms to test whether the association between the FIM score and readmissions varied significantly across diagnostic categories and by age. As a secondary analysis, we modeled FIM score as a continuous variable. We expressed the odds ratio in this analysis per 10‐point change in FIM, because this represents a clinically relevant change in function.[22] Logistic regression was also used to evaluate the association between FIM subscale scores (transfers, locomotion, self‐care, sphincter control, communication, and social cognition) and readmission status. Statistical significance was defined as a 2‐sided P<0.05. Data were analyzed with R (version 2.15.0).
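Expressing an odds ratio per 10‐point change is a rescaling of the logistic regression coefficient. A minimal sketch of that arithmetic: if β is the change in log‐odds per FIM point, the OR for a change of Δ points is exp(Δβ), and the Wald interval is scaled the same way. The coefficient and standard error below are hypothetical values chosen for illustration, not estimates from this study.

```python
import math


def odds_ratio_for_change(beta: float, se: float, delta: float, z: float = 1.96):
    """Rescale a per-point logistic coefficient `beta` (standard error `se`)
    to an odds ratio and Wald 95% CI for a change of `delta` points."""
    log_or = beta * delta
    half_width = z * se * abs(delta)
    return math.exp(log_or), math.exp(log_or - half_width), math.exp(log_or + half_width)


# Hypothetical coefficient chosen so that a 10-point DECREASE gives an OR of 1.4;
# the negative sign means higher FIM scores lower the odds of readmission.
beta = -math.log(1.4) / 10  # about -0.034 log-odds per FIM point
or_, lower, upper = odds_ratio_for_change(beta, se=0.002, delta=-10)
print(f"OR per 10-point decrease: {or_:.2f} (95% CI {lower:.2f}-{upper:.2f})")
```

Reporting per 10 points rather than per point keeps the estimate on a clinically meaningful scale; the per‐point OR here would be about 0.97 and hard to interpret.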

RESULTS

Readmitted Patients and Diagnostic Categories

A total of 9405 consecutive eligible patients were admitted to the acute inpatient rehabilitation facility between July 1, 2006 and December 31, 2012. A total of 1182 (13%) patients were readmitted to an acute care hospital from inpatient rehabilitation. Median (interquartile range) time to readmission from acute care hospital discharge was 6 days (3‐10 days), and median length of stay for patients who were discharged to the community from inpatient rehabilitation was 8 days (6‐12 days).

Table 1 shows characteristics of all inpatient rehabilitation patients by diagnostic category. For the neurologic category, the most common primary diagnoses were stroke and spinal cord injury; for the medical category, infection, renal failure, congestive heart failure, and chronic obstructive pulmonary disease; and for the orthopedic category, spinal arthrodesis and knee and hip replacements. Mean FIM scores were lowest for patients with a primary neurologic diagnosis and highest for those with a primary orthopedic diagnosis.

| Characteristic | All Patients, N=9405 | Neurologic, n=3706 | Medical, n=2135 | Orthopedic, n=3564 | P Value |
|---|---|---|---|---|---|
| Age, y | 67.8 (14.2) | 66.7 (15.3) | 67.0 (14.9) | 69.3 (12.4) | <0.001 |
| Male | 4,068 (43%) | 1,816 (49%) | 1,119 (52%) | 1,133 (32%) | <0.001 |
| Race | | | | | <0.001 |
| Caucasian | 6,106 (65%) | 2,344 (63%) | 1,320 (62%) | 2,442 (69%) | |
| African American | 2,501 (27%) | 984 (27%) | 658 (31%) | 859 (24%) | |
| Other | 798 (8%) | 378 (10%) | 157 (7%) | 263 (7%) | |
| Married | 4,330 (46%) | 1,683 (45%) | 931 (44%) | 1,716 (48%) | 0.002 |
| APRDRG‐SOI expected readmission rate | 18.0 (7.4) | 20.5 (6.8) | 21.3 (7.5) | 13.5 (5.6) | <0.001 |
| Total admission FIM score | 68.7 (17.2) | 60.4 (18.6) | 69.1 (15.5) | 77.2 (11.7) | <0.001 |
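As a rough check on the categorical comparisons in Table 1, the sketch below runs a Pearson chi‐squared test of independence on the sex‐by‐diagnostic‐category counts (females are derived as category size minus males). It is an illustration using the published counts, not the authors' analysis code. With 2 degrees of freedom the upper‐tail probability has the closed form exp(−χ²/2), so no statistics library is needed; that shortcut is valid only for df = 2.

```python
import math


def chi2_independence(table: list[list[int]]) -> tuple[float, int]:
    """Pearson chi-squared statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(col_totals) - 1)
    return stat, df


# Male counts and category sizes from Table 1 (neurologic, medical, orthopedic).
category_n = [3706, 2135, 3564]
male = [1816, 1119, 1133]
female = [size - m for size, m in zip(category_n, male)]

stat, df = chi2_independence([male, female])
# For df = 2 only, the chi-squared upper-tail probability is exactly exp(-stat / 2).
p_value = math.exp(-stat / 2)
print(f"chi2 = {stat:.1f}, df = {df}, P = {p_value:.1e}")
```

The statistic comes out above 300, consistent with the P<0.001 reported for sex in Table 1.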

FIM Score Category and Risk of Readmission

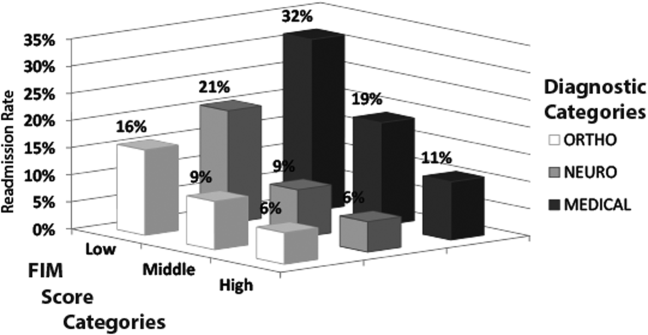

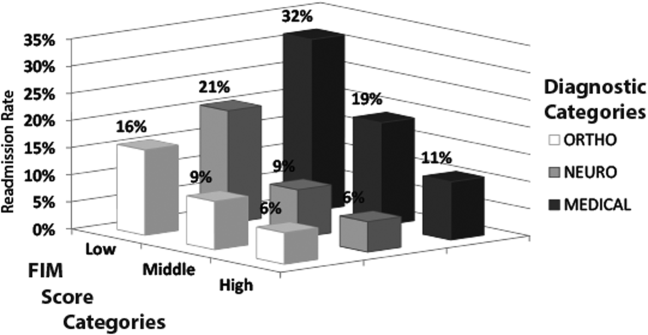

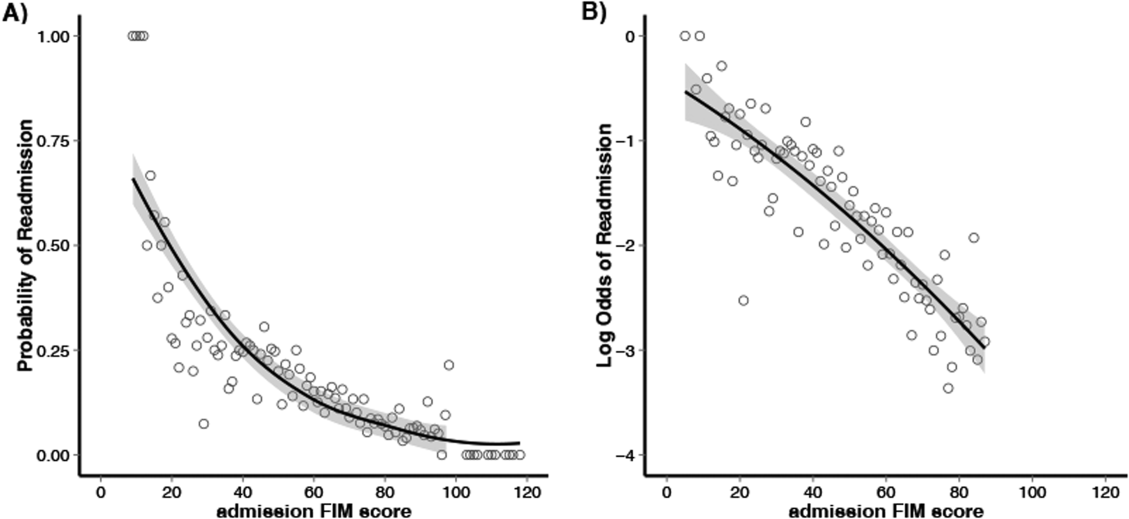

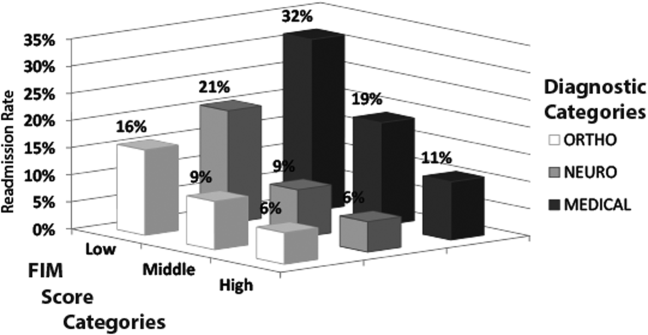

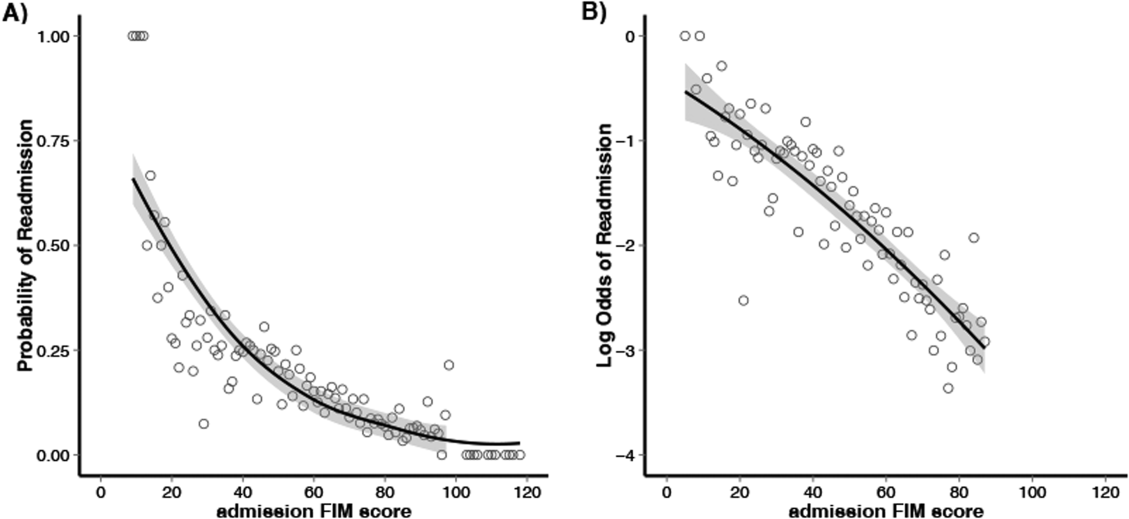

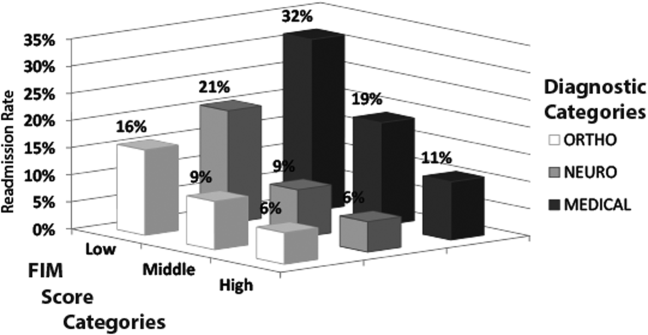

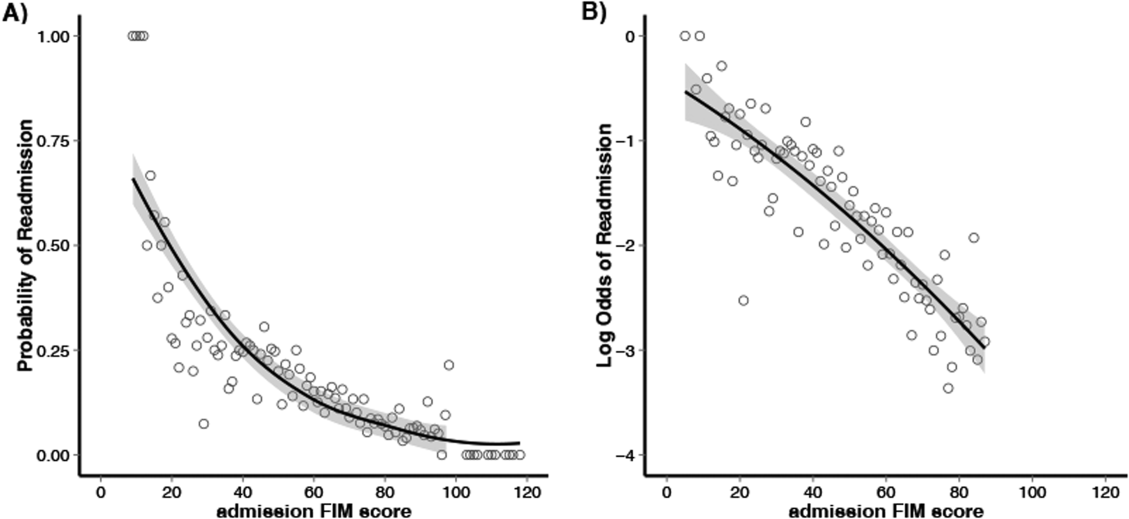

Figure 1 shows that patients in the low admission FIM score category had the highest unadjusted rate of readmission for each diagnostic category. In unadjusted analysis, Table 2 shows that younger age, male sex, APRDRG‐SOI expected readmission rate, and orthopedic and medical diagnostic categories were associated with readmission. As a continuous variable, FIM scores were linearly associated with readmission (Figure 2), with an unadjusted odds ratio (OR) and 95% confidence interval (CI) of 1.4 (1.4‐1.4, P<0.001) for a 10‐point decrease in FIM. Compared with patients with high admission FIM scores, patients with low and middle FIM scores had higher unadjusted odds of readmission (OR: 4.0; 95% CI: 3.4‐4.7; P<0.001 and OR: 1.8; 95% CI: 1.5‐2.1; P<0.001, respectively). Mean FIM subscale scores for patients readmitted versus not readmitted were transfers (5.3 vs 7.0, P<0.001), locomotion (1.6 vs 2.3, P<0.001), self‐care (17.0 vs 20.8, P<0.001), communication (10.6 vs 11.5, P<0.001), and social cognition (15.1 vs 16.6, P<0.001).

| Characteristic | Not Readmitted, n=8,223 | Readmitted, n=1,182 | Bivariable OR (95% CI) | Bivariable P Value | Multivariable OR (95% CI) | Multivariable P Value |
|---|---|---|---|---|---|---|
| Age, y | 68.0 (14.2) | 66.4 (14.5) | 0.9 (0.9‐1.0) | <0.001 | 0.9 (0.9‐1.0) | <0.001 |
| Male | 3,431 (42%) | 637 (54%) | 1.6 (1.4‐1.8) | <0.001 | 1.3 (1.1‐1.5) | <0.001 |
| Race | | | | | | |
| Caucasian | 5,340 (65%) | 766 (65%) | 1.0 | | 1.0 | |
| African American | 2,177 (26%) | 324 (27%) | 1.0 (0.9‐1.2) | 0.60 | 1.0 (0.8‐1.1) | 0.75 |
| Other | 706 (9%) | 92 (8%) | 0.9 (0.7‐1.1) | 0.41 | 0.8 (0.6‐1.0) | 0.12 |
| Married | 3,775 (46%) | 555 (47%) | 1.0 (0.9‐1.2) | 0.50 | 1.0 (0.9‐1.2) | 0.67 |
| Admission diagnosis category | | | | | | |
| Neurologic | 3,205 (39%) | 501 (42%) | 1.0 | | 1.0 | |
| Medical | 1,726 (21%) | 409 (35%) | 1.5 (1.3‐1.7) | <0.001 | 1.8 (1.6‐2.1) | <0.001 |
| Orthopedic | 3,292 (40%) | 272 (23%) | 0.5 (0.5‐0.6) | <0.001 | 1.3 (1.1‐1.6) | 0.005 |
| APRDRG‐SOI expected readmission rate | 17.4 (7.1%) | 22.2 (8.0%) | 1.1 (1.1‐1.1) | <0.001 | 1.1 (1.0‐1.1) | <0.001 |
| Total FIM score category | | | | | | |
| High FIM, >76 points | 3,517 (43%) | 257 (22%) | 1.0 | | 1.0 | |
| Middle FIM, 60‐76 points | 2,742 (33%) | 353 (30%) | 1.8 (1.5‐2.1) | <0.001 | 1.5 (1.3‐1.8) | <0.001 |
| Low FIM, <60 points | 1,964 (24%) | 572 (48%) | 4.0 (3.4‐4.7) | <0.001 | 3.0 (2.5‐3.6) | <0.001 |
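The unadjusted ORs for FIM category can be checked directly from the counts in Table 2. This sketch assumes that the second column holds patients who were not readmitted (its counts sum to 8,223, which is 9,405 minus the 1,182 readmitted patients) and uses the Woolf log‐scale interval. For a single categorical predictor, that interval coincides with the Wald interval from an unadjusted logistic regression; it does not reproduce the adjusted (multivariable) ORs.

```python
import math


def odds_ratio_woolf(a: int, b: int, c: int, d: int,
                     z: float = 1.96) -> tuple[float, float, float]:
    """Unadjusted odds ratio with a Woolf (log-scale) 95% CI.

    a, b: readmitted / not readmitted in the comparison group
    c, d: readmitted / not readmitted in the reference group
    """
    odds_ratio = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    log_or = math.log(odds_ratio)
    return odds_ratio, math.exp(log_or - z * se_log), math.exp(log_or + z * se_log)


# Counts from Table 2; the reference group is high FIM (>76 points).
high = (257, 3517)
low = odds_ratio_woolf(572, 1964, *high)
middle = odds_ratio_woolf(353, 2742, *high)
for label, (or_, lo, hi) in [("low vs high", low), ("middle vs high", middle)]:
    print(f"{label}: OR {or_:.1f} (95% CI {lo:.1f}-{hi:.1f})")
```

Rounded to one decimal, this reproduces the bivariable estimates in the table: 4.0 (3.4‐4.7) for low versus high FIM and 1.8 (1.5‐2.1) for middle versus high FIM.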

Multivariable and Subset Analyses

Patients with a primary medical diagnosis had higher odds of readmission to the hospital (OR: 1.8; 95% CI: 1.6‐2.1; P<0.001) relative to patients with a neurologic or orthopedic diagnosis (Table 2). Across all diagnoses, the adjusted odds ratios (95% CIs) for the low and middle versus high FIM score categories were 3.0 (2.5‐3.6; P<0.001) and 1.5 (1.3‐1.8; P<0.001), respectively (Table 2). When modeled as a continuous variable, a 10‐point decrease in FIM score was associated with significantly higher adjusted odds of readmission (OR: 1.4; 95% CI: 1.3‐1.4; P<0.001). In adjusted analysis including all subscales of the FIM, only the physical subscales, transfers (P<0.001), locomotion (P=0.002), and self‐care (P<0.001), were significantly associated with readmission. For each diagnostic category, there were similar significant associations between admission FIM score group and readmission status (Table 3). The odds of readmission by FIM score did not differ significantly across the 3 major diagnostic categories (P=0.20 for interaction term), suggesting that the effect of functional status was similar across various types of patients. We also did not observe a statistical interaction between age and FIM score group in predicting readmission (P=0.58). Patients in the lowest FIM group with a medical diagnosis had the highest adjusted readmission rate, 28.7% (Table 3).

| Diagnostic Category and FIM Group | No. | Multivariable OR (95% CI) | P Value | Adjusted Readmission Rate, % (95% CI) |
|---|---|---|---|---|
| Neurologic | | | | |
| High FIM (>76 points) | 755 | 1.0 | | 7.3 (4.7‐10.0) |
| Middle FIM (60‐76 points) | 1,283 | 1.4 (1.0‐2.1) | 0.06 | 9.1 (7.0‐11.1) |
| Low FIM (<60 points) | 1,668 | 3.3 (2.3‐4.7) | <0.001 | 18.7 (16.8‐20.6) |
| Medical | | | | |
| High FIM (>76 points) | 807 | 1.0 | | 11.2 (8.1‐14.3) |
| Middle FIM (60‐76 points) | 766 | 1.8 (1.3‐2.4) | <0.001 | 17.7 (14.5‐20.9) |
| Low FIM (<60 points) | 562 | 3.2 (2.4‐4.3) | <0.001 | 28.7 (25.1‐32.4) |
| Orthopedic | | | | |
| High FIM (>76 points) | 2,212 | 1.0 | | 6.1 (4.7‐7.6) |
| Middle FIM (60‐76 points) | 1,046 | 1.4 (1.1‐1.9) | 0.02 | 8.3 (6.4‐10.1) |
| Low FIM (<60 points) | 306 | 2.2 (1.5‐3.3) | <0.001 | 13.5 (10.4‐16.7) |

DISCUSSION

In this study of 9405 consecutive patients admitted from acute care hospitals to a single inpatient rehabilitation facility, we investigated the association between functional status and readmission to an acute care hospital. We found that low functional status near the time of acute care hospital discharge was strongly associated with higher readmission rates. This relationship was consistently observed across major patient diagnostic categories, with low‐functioning medical patients having the highest rate of readmission (28.7%). Functional status may therefore be an important modifiable risk factor for acute care hospital readmission, and efforts to maintain or improve it during acute care hospitalization merit evaluation.

Previous studies have suggested that functional status may serve as an indicator of physiological reserve, and therefore vulnerability to medical complications and readmission.[6, 16, 23, 24, 25] Physiologic reserve refers to a person's ability to endure acute illness and is influenced by a number of factors, such as the adequacy of oxygen delivery to tissues, cardiovascular health, immune state, and nutritional status.[26] We found that motor subscales of the FIM score (transfers, locomotion, and self‐care), but not the other subscales, were independently associated with readmissions, which may suggest that lower motor scores are a stronger marker of physiologic reserve.[10, 16, 27] Although not our primary focus, we did note in our multivariable models that after adjusting for functional status, patients in a medical diagnostic category had higher readmission rates compared to patients with a primary neurologic or orthopedic diagnosis, but the impact of FIM score was consistent across all these diagnostic categories. We speculate that medical conditions that result in hospitalization, such as sepsis or acute kidney failure, may be more likely to result in multiorgan dysfunction that may impair physiological reserve and increase susceptibility to medical complications.[28, 29, 30, 31] In comparison, acute neurologic and orthopedic diagnoses, such as stroke or hip arthroplasty, directly impair gross motor function,[32, 33, 34, 35] with relative sparing of overall physiologic reserve.

The association between low functional status and readmissions is supported by previous studies across multiple hospital settings.[4, 5, 7, 8, 9, 27, 36] Despite this finding, routine inpatient medical practice may not fully address functional impairments. For instance, systematic measurement and documentation of functional status on admission and during hospitalization are not routine and may be a barrier to identifying medical patients at high risk for readmission.[37, 38, 39] Moreover, without recognition of functional impairment and its implications, current clinical practice may suboptimally prevent and treat physical impairments during inpatient care. However, such barriers can be surmounted. For example, in the medical intensive care unit setting, there is growing recognition that proactive and aggressive management of hospital‐acquired functional impairments through early rehabilitation is safe and feasible, improving patient outcomes while reducing hospital costs and readmissions.[3, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51] In addition, 2 recent meta‐analyses have shown that hospital‐based physical therapy exercise programs can shorten length of stay, reduce overall hospital costs, and increase rates of discharge to home.[52, 53] Finally, a randomized trial has demonstrated that an individualized exercise regimen started in the acute hospital setting with long‐term telephone follow‐up can significantly reduce emergency hospital readmissions and improve quality of life in older adults.[54] Therefore, decreased functional status likely represents a modifiable risk factor for hospital readmission, and further research is necessary to more systematically identify low‐functioning patients and implement early mobility and activity programs to reduce hospital‐acquired functional impairment.[2, 49, 55]

Our analysis has potential limitations. First, this was an observational study and we are unable to demonstrate a direct cause‐and‐effect relationship between functional status and readmission. However, our results are consistent with prior literature in this field. Second, our cohort only included patients who were discharged from an acute hospital to a rehabilitation facility, which may limit its generalizability. However, we included a large patient sample size with a broad range of admission FIM scores, and our findings are consistent with other studies conducted in different clinical settings. Third, although 1 of our goals was to evaluate how readmission rates differed by diagnostic category, it is possible that individual diagnoses within each category may have different risks for readmission, and future larger studies could evaluate more detailed diagnostic grouping approaches. Fourth, we also recognize that although FIM score assessment has been validated, admission assessment occurs over a 72‐hour time period, during which patients' function could change by a clinically meaningful degree. Fifth, there may be residual confounding because of limitations in available data within our administrative dataset; however, we did account for severity of illness using a standardized measure, and prior research has demonstrated that the relationship between functional status and readmissions may be minimally confounded by demographic and clinical variables.[8, 16, 27, 56] Finally, we lacked readmission data following discharge from rehabilitation; it is possible that the FIM score at the time of rehabilitation initiation has limited predictive value among patients who successfully completed rehabilitation and were sent home.

CONCLUSION

In conclusion, in this study of patients admitted from acute care hospitals to a single inpatient rehabilitation facility, we observed a strong association between decreased functional status and increased hospital readmission. In particular, medical patients with lower physical functioning exhibited an especially high rate of readmission. Incorporating functional status assessment into routine medical care may help identify patients at higher risk of readmission. Moreover, preventing and treating impaired functional status during inpatient admission, through early activity and mobility, should be evaluated as a way of improving patient outcomes and reducing hospital readmissions.

Disclosures: Erik Hoyer, MD, is supported by the Rehabilitation Medicine Scientist Training Program (RMSTP; 5K12HD001097). The authors report no conflicts of interest.

- Rehospitalizations among patients in the Medicare fee‐for‐service program. N Engl J Med. 2009;360(14):1418–1428.

- Post‐hospital syndrome—an acquired, transient condition of generalized risk. N Engl J Med. 2013;368(2):100–102.

- Receiving early mobility during an intensive care unit admission is a predictor of improved outcomes in acute respiratory failure. Am J Med Sci. 2011;341(5):373–377.

- Association of physical functioning with same‐hospital readmission after stroke. Am J Phys Med Rehabil. 2004;83(6):434–438.

- Posthospital care transitions: patterns, complications, and risk identification. Health Serv Res. 2004;39(5):1449–1465.

- Risk factors for nonelective hospital readmissions. J Gen Intern Med. 1996;11(12):762–764.

- Risk prediction models for hospital readmission: a systematic review. JAMA. 2011;306(15):1688–1698.

- Hospital readmission in persons with stroke following postacute inpatient rehabilitation. J Gerontol A Biol Sci Med Sci. 2012;67(8):875–881.

- Hospital readmission of persons with hip fracture following medical rehabilitation. Arch Gerontol Geriatr. 2003;36(1):15–22.

- Characteristics of persons rehospitalized after stroke rehabilitation. Arch Phys Med Rehabil. 2001;82(10):1367–1374.

- A classification system for inpatient rehabilitation patients: a review and proposed revisions to the functional independence measure‐function related groups. PB98–105992, September. Washington, DC: US Department of Commerce, National Technical Information Services; 1997.

- A case‐mix classification system for medical rehabilitation. Med Care. 1994;32(4):366–379.

- The reliability of the functional independence measure: a quantitative review. Arch Phys Med Rehabil. 1996;77(12):1226–1232.

- Four methods for characterizing disability in the formation of function related groups. Arch Phys Med Rehabil. 1994;75(12):1277–1283.

- Association of self‐reported hospital discharge handoffs with 30‐day readmissions. JAMA Intern Med. 2013;173(8):624–629.

- Functional status impairment is associated with unplanned readmissions. Arch Phys Med Rehabil. 2013;94(10):1951–1958.

- All patient refined diagnosis related groups (APR‐DRGs). Version 15.0. Report No.: 98‐054 Rev. 00. Wallingford, CT: 3M Health Information Systems; 1998.

- The inpatient rehabilitation facility–patient assessment instrument (IRF‐PAI) training manual. 2012. http://www.cms.gov/.

- Relationships between disability measures and nursing effort during medical rehabilitation for patients with traumatic brain and spinal cord injury. Arch Phys Med Rehabil. 1997;78(2):143–149.

- Interrater reliability of the 7‐level functional independence measure (FIM). Scand J Rehabil Med. 1994;26(3):115–119.

- Comparison of logistic regression and neural networks to predict rehospitalization in patients with stroke. J Clin Epidemiol. 2001;54(11):1159–1165.

- Comparison of the responsiveness of the Barthel Index and the motor component of the Functional Independence Measure in stroke: the impact of using different methods for measuring responsiveness. J Clin Epidemiol. 2002;55(9):922–928.

- Prediction of hospital readmission for heart failure: development of a simple risk score based on administrative data. J Am Coll Cardiol. 1999;33(6):1560–1566.

- Are all readmissions bad readmissions? N Engl J Med. 2010;363(3):297–298.

- Hospital readmission as an accountability measure. JAMA. 2011;305(5):504–505.

- Susceptibility to critical illness: reserve, response and therapy. Intensive Care Med. 2000;26(suppl 1):S57–S63.

- Predictors of discharge to acute care after inpatient rehabilitation in severely affected stroke patients. Am J Phys Med Rehabil. 2012;91(5):387–392.

- Clinical characteristics and outcomes of sepsis‐related vs non‐sepsis‐related ARDS. Chest. 2010;138(3):559–567.

- Long‐term outcomes from sepsis. Curr Infect Dis Rep. 2007;9(5):382–386.

- Heart failure performance measures and outcomes: real or illusory gains. JAMA. 2009;302(7):792–794.

- Patients' self‐assessed functional status in heart failure by New York Heart Association class: a prognostic predictor of hospitalizations, quality of life and death. J Card Fail. 2010;16(2):150–156.

- Knee arthroplasty: disabilities in comparison to the general population and to hip arthroplasty using a French national longitudinal survey. PLoS One. 2008;3(7):e2561.

- Gait asymmetry in community‐ambulating stroke survivors. Arch Phys Med Rehabil. 2008;89(2):304–310.

- Recovery of upper extremity function in stroke patients: The Copenhagen Stroke Study. Arch Phys Med Rehabil. 1994;75(4):394–398.

- The effect of admission physiological variables on 30 day outcome after stroke. J Clin Neurosci. 2005;12(8):905–910.

- Unplanned readmissions after hospital discharge among patients identified as being at high risk for readmission using a validated predictive algorithm. Open Med. 2011;5(2):e104–e111.

- Can hospitalization‐associated disability be prevented? JAMA. 2011;306(16):1800–1801.

- Hospitalization‐associated disability: “she was probably able to ambulate, but I'm not sure.” JAMA. 2011;306(16):1782–1793.

- Importance of functional measures in predicting mortality among older hospitalized patients. JAMA. 1998;279(15):1187–1193.

- Mobilizing patients in the intensive care unit: improving neuromuscular weakness and physical function. JAMA. 2008;300(14):1685–1690.

- Technology to enhance physical rehabilitation of critically ill patients. Crit Care Med. 2009;37(10 suppl):S436–S441.

- Early physical medicine and rehabilitation for patients with acute respiratory failure: a quality improvement project. Arch Phys Med Rehabil. 2010;91(4):536–542.

- ICU early physical rehabilitation programs: financial modeling of cost savings. Crit Care Med. 2013;41(3):717–724.

- Early physical and occupational therapy in mechanically ventilated, critically ill patients: a randomised controlled trial. Lancet. 2009;373(9678):1874–1882.

- Early intensive care unit mobility therapy in the treatment of acute respiratory failure. Crit Care Med. 2008;36(8):2238–2243.

- Early activity is feasible and safe in respiratory failure patients. Crit Care Med. 2007;35(1):139–145.

- Rehabilitation quality improvement in an intensive care unit setting: implementation of a quality improvement model. Top Stroke Rehabil. 2010;17(4):271–281.

- Sustainability and scalability of the hospital elder life program at a community hospital. J Am Geriatr Soc. 2011;59(2):359–365.

- A multicomponent intervention to prevent delirium in hospitalized older patients. N Engl J Med. 1999;340(9):669–676.

- Functional disability 5 years after acute respiratory distress syndrome. N Engl J Med. 2011;364(14):1293–1304.

- Rehabilitation therapy and outcomes in acute respiratory failure: an observational pilot project. J Crit Care. 2010;25(2):254–262.

- Exercise for acutely hospitalised older medical patients. Cochrane Database Syst Rev. 2007;(1):CD005955.

- Extra physical therapy reduces patient length of stay and improves functional outcomes and quality of life in people with acute or subacute conditions: a systematic review. Arch Phys Med Rehabil. 2011;92(9):1490–1500.

- Fewer emergency readmissions and better quality of life for older adults at risk of hospital readmission: a randomized controlled trial to determine the effectiveness of a 24‐week exercise and telephone follow‐up program. J Am Geriatr Soc. 2009;57(3):395–402.

- Effects of an acute care for elders unit on costs and 30‐day readmissions. JAMA Intern Med. 2013:1–7.

- Risks of acute hospital transfer and mortality during stroke rehabilitation. Arch Phys Med Rehabil. 2003;84(5):712–718.

Federally mandated pay‐for‐performance initiatives promote minimizing 30‐day hospital readmissions to improve healthcare quality and reduce costs. Although the reasons for readmission are multifactorial, many patients are readmitted for a condition other than their initial admitting diagnosis.[1] Impairments in functional status experienced during acute care hospitalization contribute to patients being discharged in a debilitated state, leaving them vulnerable to postdischarge complications and, potentially, hospital readmission.[2] As such, decreased functional status may be an important and potentially modifiable risk factor for acute care hospital readmission.[3]

Previous studies have suggested that impaired functional status may be an important predictor of rehospitalization.[4, 5, 6, 7] However, inferences from existing studies are limited because they did not treat functional status as their primary focus, considered only specific patient populations (eg, stroke), or examined readmissions occurring well beyond the 30‐day period defined by federal pay‐for‐performance standards.[4, 5, 6, 8, 9, 10] Our objective was to evaluate the association between functional status near the time of discharge from the acute care hospital and 30‐day readmission for patients admitted to an acute inpatient rehabilitation facility. As a secondary objective, we sought to investigate the relationship between functional status and readmission by diagnostic category (medical, neurologic, or orthopedic).

METHODS

Study Population and Setting

We conducted a single‐center, retrospective study of patients admitted to an inpatient rehabilitation facility at a community hospital between July 1, 2006, and December 31, 2012. This facility provides intensive rehabilitation consisting of 3 hours of therapy per day, skilled nursing care on a 24‐hour basis, and medical care by a physiatrist. We excluded patients who died during inpatient rehabilitation (n=15, 0.2%) and patients not admitted directly from an acute care setting (n=178, 2.0%).
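The two exclusion criteria amount to a simple filter over rehabilitation admissions. The sketch below is a minimal Python illustration, assuming a hypothetical `RehabAdmission` record with boolean fields for death during rehabilitation and direct admission from acute care; the study does not describe its data handling. It also assumes that exclusions are applied in the order listed, so a patient meeting both criteria is counted once, under death, which the text does not specify.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class RehabAdmission:
    # Hypothetical record layout; field names are illustrative only.
    patient_id: str
    admitted_from_acute_care: bool
    died_during_rehab: bool


def apply_exclusions(
    admissions: List[RehabAdmission],
) -> Tuple[List[RehabAdmission], Dict[str, int]]:
    """Return the analytic cohort and a count of exclusions per criterion.

    Criteria are checked in the order listed in the text (assumption), so each
    excluded admission is counted under exactly one reason.
    """
    excluded = {"died_during_rehab": 0, "not_from_acute_care": 0}
    included: List[RehabAdmission] = []
    for adm in admissions:
        if adm.died_during_rehab:
            excluded["died_during_rehab"] += 1
        elif not adm.admitted_from_acute_care:
            excluded["not_from_acute_care"] += 1
        else:
            included.append(adm)
    return included, excluded
```

Dividing each count by the number of screened admissions gives exclusion percentages of the kind reported above.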

Data Source and Covariates

Data were derived from the Uniform Data System for Medical Rehabilitation (UDSMR), an administrative database that provides the following data upon admission to an inpatient rehabilitation facility[11, 12, 13]: age, gender, race/ethnicity, marital status, discharge setting, admission Functional Independence Measure (FIM) score (described below), and admission diagnostic category, defined by the primary discharge diagnosis from the acute care hospital and grouped by function related groups (a case‐mix system for medical rehabilitation).[12, 14] The 3M ClinTrac management software (3M, St. Paul, MN), used for mandatory reporting to the State of Maryland, provided all‐payer refined diagnosis related group (APR‐DRG) and severity of illness (SOI) combinations (a tool that groups patients into clinically comparable disease and severity‐of‐illness categories expected to use similar resources and experience similar outcomes). The University HealthSystem Consortium (UHC) database provided national readmission rates for all APR‐DRG‐SOI combinations, using a previously described methodology.[15, 16] Expected readmission rates for APR‐DRG‐SOI combinations served as a patient risk stratification tool, based on clinical logic that evaluates age, comorbidities, principal diagnosis during hospitalization, and procedures conducted during hospitalization.[17]
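Using expected readmission rates as a risk covariate is essentially a lookup keyed by the APR‐DRG and its SOI level. The following Python sketch assumes a caller‐supplied table (the study's national rates came from the UHC database, which is not reproduced here), and the function names and DRG codes in the test are hypothetical. SOI is validated against the 4 APR‐DRG levels (1 minor, 2 moderate, 3 major, 4 extreme).

```python
from typing import Dict, Iterable, List, Optional, Tuple

# Maps (APR-DRG code, SOI level) -> expected 30-day readmission rate.
# The table contents are supplied by the caller; none are included here.
RateTable = Dict[Tuple[str, int], float]

VALID_SOI_LEVELS = (1, 2, 3, 4)  # minor, moderate, major, extreme


def expected_readmission_rate(
    table: RateTable, apr_drg: str, soi: int
) -> Optional[float]:
    """Return the expected rate for one APR-DRG/SOI combination, or None if absent."""
    if soi not in VALID_SOI_LEVELS:
        raise ValueError(f"SOI must be one of {VALID_SOI_LEVELS}, got {soi}")
    return table.get((apr_drg, soi))


def annotate_expected_rates(
    table: RateTable, patients: Iterable[Tuple[str, str, int]]
) -> List[Tuple[str, Optional[float]]]:
    """Attach an expected rate to each (patient_id, apr_drg, soi) tuple."""
    return [
        (pid, expected_readmission_rate(table, drg, soi))
        for pid, drg, soi in patients
    ]
```

Returning `None` for combinations missing from the table keeps gaps visible rather than silently imputing a rate.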

Primary Outcome: Acute Care Readmission

The primary outcome was all‐cause acute care readmission, defined as patient transfer from inpatient rehabilitation to an acute care hospital within 30 days of admission to inpatient rehabilitation. Under the care model of our inpatient rehabilitation unit, patients who become ill or develop a complication are either admitted directly to a clinical unit (eg, intensive care unit) at the community hospital through a rapid‐response intervention, or the physiatrist arranges for an admitting inpatient attending to accept the patient directly onto his or her service.
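The outcome definition can be expressed as a date comparison. This Python sketch assumes the window is inclusive of day 30 and that only transfers occurring during the rehabilitation stay are passed in; readmissions after discharge from rehabilitation are not captured, consistent with the study's data. The function name is hypothetical.

```python
from datetime import date
from typing import Optional

READMISSION_WINDOW_DAYS = 30


def is_30_day_readmission(
    rehab_admission: date,
    acute_transfer: Optional[date],
    window_days: int = READMISSION_WINDOW_DAYS,
) -> bool:
    """True if a transfer to acute care occurred within `window_days` of rehab admission.

    `acute_transfer` is None when the patient was never transferred during the
    rehabilitation stay. The window is treated as inclusive (assumption).
    """
    if acute_transfer is None:
        return False
    if acute_transfer < rehab_admission:
        raise ValueError("transfer date precedes rehabilitation admission")
    return (acute_transfer - rehab_admission).days <= window_days
```

For example, admission on January 1 with transfer on January 31 is day 30 and counts; a transfer on February 1 (day 31) does not.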

Primary Exposure: Functional Independence Measure

Functional status was measured using the FIM score.[18] The FIM is an 18‐item measure of functional status, with each item scored on a scale from 1 to 7 (dependent to independent), so total scores range from 18 to 126. Items assess various aspects of motor and cognitive function. The FIM has been validated and shown to be reliable and reproducible.[13, 19, 20] By definition for the FIM instrument, admission FIM scores are assessed by trained multidisciplinary personnel over the first 72 hours of the rehabilitation stay; in this study, they served as a proxy for patient functional status upon discharge from the acute care setting. This 72‐hour window allows for full assessment by therapists and nurses; however, in clinical practice at the inpatient rehabilitation unit involved in this study, much of the FIM assessment occurs within the first 24 hours of the rehabilitation stay. For our analysis, we divided FIM scores into low, medium, and high functional groups, with thresholds based on total FIM score tertiles from a prior study: <60, 60 to 76, and >76.[16] As a secondary analysis, we created 6 subscales of the overall FIM score based on previous research. These subscales included transfers (transfer to chair/wheelchair, toilet, and tub/shower), locomotion (walking and stairs), self‐care (eating, grooming, bathing, dressing, and toileting), sphincter control (bladder and bowel management), communication (comprehension and expression), and social cognition (social interaction, problem solving, and memory).[21]
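The scoring steps above (summing 18 items, assigning a functional group from fixed cutoffs, and summing subscales) can be sketched in Python as follows. The item identifiers are hypothetical; "dressing" is split into upper‐ and lower‐body items, as in the standard 18‐item FIM, so the 6 subscales together cover all 18 items. This is an illustration of the grouping rules stated in the text, not the study's analysis code.

```python
from typing import Dict, Mapping, Tuple

# FIM items grouped into the 6 subscales described in the text.
# Identifiers are hypothetical labels, not official FIM field names.
SUBSCALES: Dict[str, Tuple[str, ...]] = {
    "transfers": ("transfer_bed_chair_wheelchair", "transfer_toilet", "transfer_tub_shower"),
    "locomotion": ("walk_wheelchair", "stairs"),
    "self_care": ("eating", "grooming", "bathing", "dressing_upper", "dressing_lower", "toileting"),
    "sphincter_control": ("bladder", "bowel"),
    "communication": ("comprehension", "expression"),
    "social_cognition": ("social_interaction", "problem_solving", "memory"),
}
FIM_ITEMS: Tuple[str, ...] = tuple(i for items in SUBSCALES.values() for i in items)
assert len(FIM_ITEMS) == 18


def _validate(scores: Mapping[str, int]) -> None:
    """Require every item to be present and scored 1 (dependent) to 7 (independent)."""
    missing = [item for item in FIM_ITEMS if item not in scores]
    if missing:
        raise ValueError(f"missing FIM items: {missing}")
    for item in FIM_ITEMS:
        if not 1 <= scores[item] <= 7:
            raise ValueError(f"{item} score {scores[item]} outside 1-7")


def total_fim(scores: Mapping[str, int]) -> int:
    """Total FIM score, ranging from 18 to 126."""
    _validate(scores)
    return sum(scores[item] for item in FIM_ITEMS)


def functional_group(total: int) -> str:
    """Apply the fixed cutoffs: <60 low, 60 to 76 medium, >76 high."""
    if total < 60:
        return "low"
    if total <= 76:
        return "medium"
    return "high"


def subscale_scores(scores: Mapping[str, int]) -> Dict[str, int]:
    """Sum the items within each of the 6 subscales."""
    _validate(scores)
    return {name: sum(scores[i] for i in items) for name, items in SUBSCALES.items()}
```

Because the cutoffs come from a prior study, they are hard‐coded rather than recomputed as tertiles of this cohort; a patient scoring 4 on every item has a total of 72 and falls in the medium group.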

Statistical Analysis