Why Do Lateral Unicompartmental Knee Arthroplasties Fail Today?

In 1975, Skolnick and colleagues1 introduced unicompartmental knee arthroplasty (UKA) for patients with isolated unicompartmental osteoarthritis (OA). They reported a study of 14 UKA procedures, of which 12 were at the medial and 2 at the lateral side. Forty years since this procedure was introduced, UKA is used in 8% to 12% of all knee arthroplasties.2-6 A minority of these procedures are performed at the lateral side (5%-10%).6-8

The considerable anatomical and kinematical differences between compartments9-14 make it impossible to directly compare outcomes of medial and lateral UKA. For example, a greater degree of femoral roll and more posterior translation at the lateral side in flexion9,10,13 can contribute to different pattern and volume differences of cartilage wear.15 Because of these differences, and because of implant design factors and lower surgical volume, lateral UKA is considered a technically more challenging surgery compared to medial UKA.12,16,17

Since isolated lateral compartment OA is relatively scarce, current literature on lateral UKA is limited, and most studies combine medial and lateral outcomes to report UKA outcomes and failure modes.3,4,18-20 However, as the UKA has grown in popularity over the last decade,2,21-25 the number of reports about the lateral UKA also has increased. Recent studies reported excellent short-term survivorship results of the lateral UKA (96%-99%)26,27 and smaller lateral UKA studies reported the 10-year survivorship with varying outcomes from good (84%)14,28-30 to excellent (94%-100%).8,31,32 Indeed, a recent systematic review showed survivorship of lateral UKA at 5, 10, and 15 years of 93%, 91%, and 89%, respectively.33

Because of the differences between the medial and lateral compartment, it is important to know the failure modes of lateral UKA in order to improve clinical outcomes and revision rates. We performed a systematic review of cohort studies and registry-based studies that reported lateral UKA failure to assess the causes of lateral UKA failure. In addition, we compared the failure modes in cohort studies with those found in registry-based studies.

Patients and Methods

Search Strategy and Criteria

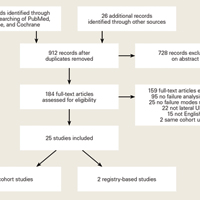

Databases of PubMed, Embase, and Cochrane (Cochrane Central Register of Clinical Trials) were searched with the terms “knee, arthroplasty, replacement,” “unicompartmental,” “unicondylar,” “partial,” “UKA,” “UKR,” “UCA,” “UCR,” “PKA,” “PKR,” “PCA,” “prosthesis failure,” “reoperation,” “survivorship,” and “treatment failure.” After removal of duplicates, 2 authors (JPvdL and HAZ) scanned the articles for their title and abstract to assess eligibility for the study.

Inclusion criteria were: (I) English language articles describing studies in humans published in the last 25 years, (II) retrospective and prospective studies, (III) featured lateral UKA, (IV) OA was indication for surgery, and (V) included failure modes data. The exclusion criteria were studies that featured: (I) only a specific group of failure (eg, bearing dislocations only), (II) previous surgery in ipsilateral knee (high tibial osteotomy, medial UKA), (III) acute concurrent knee diagnoses (acute anterior cruciate ligament rupture, acute meniscal tear), (IV) combined reporting of medial and lateral UKA, or (V) multiple studies with the same patient database.

Data Collection

All studies that reported modes of failure were used in this study and these failure modes were noted in a datasheet in Microsoft Excel 2011 (Microsoft).

Statistical Analysis

For this systematic review, statistical analysis was performed with IBM SPSS Statistics 22 (SPSS Inc.). We performed chi-square tests and Fisher’s exact tests to assess differences between cohort studies and registry-based studies, with the null hypothesis of no difference between the 2 groups. A difference was considered significant when P < .05.
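As an illustration of the second test named above, the following is a minimal Python sketch of a two-sided Fisher’s exact test on a 2 × 2 table. It is not the SPSS procedure used in this study; the function name `fisher_exact_two_sided` and the toy counts are hypothetical and chosen only to show the mechanics.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total_tables = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / total_tables

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )


# Hypothetical toy table (not data from this review):
# group 1: 8 events, 2 non-events; group 2: 1 event, 5 non-events.
p_value = fisher_exact_two_sided(8, 2, 1, 5)
print(f"P = {p_value:.3f}")  # about 0.035, below the .05 threshold
```

The exact test is usually preferred over the chi-square test when expected cell counts are small, which can occur for rare failure modes.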

Results

Through the search of the databases, 1294 studies were identified and 26 handpicked studies were added. Initially, based on the title and abstract, 184 of these studies were found eligible.

A total of 366 lateral UKA failures were included. The most common failure modes were progression of OA (29%), aseptic loosening (23%), and bearing dislocation (10%). Infection (6%), instability (6%), unexplained pain (6%), and fractures (4%) were less common causes of failure of lateral UKA (Table 2).

One hundred fifty-five of these failures were reported in the cohort studies. The most common modes of failure were OA progression (36%), bearing dislocation (17%) and aseptic loosening (16%). Less common were infection (10%), fractures (5%), pain (5%), and other causes (6%). In registry-based studies, with 211 lateral UKA failures, the most common modes of failure were aseptic loosening (28%), OA progression (24%), other causes (12%), instability (10%), pain (7%), bearing dislocation (5%), and polyethylene wear (4%) (Table 2).
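The pooled percentages can be cross-checked against the cohort and registry percentages, because each pooled value should be the average of the two group percentages weighted by group size (155 and 211 failures). The short Python sketch below performs that arithmetic for three failure modes; the function name `pooled_percent` is hypothetical, and because the inputs are the rounded percentages reported in the text, agreement is expected only to the nearest percentage point.

```python
COHORT_FAILURES = 155
REGISTRY_FAILURES = 211

# failure mode: (cohort %, registry %, pooled % as reported in the text)
reported = {
    "OA progression": (36, 24, 29),
    "aseptic loosening": (16, 28, 23),
    "bearing dislocation": (17, 5, 10),
}


def pooled_percent(cohort_pct, registry_pct,
                   n_cohort=COHORT_FAILURES, n_registry=REGISTRY_FAILURES):
    """Size-weighted average of two group percentages."""
    failures = cohort_pct / 100 * n_cohort + registry_pct / 100 * n_registry
    return 100 * failures / (n_cohort + n_registry)


for mode, (cohort_pct, registry_pct, pooled_pct) in reported.items():
    computed = pooled_percent(cohort_pct, registry_pct)
    print(f"{mode}: computed {computed:.1f}% vs reported {pooled_pct}%")
```

For example, OA progression works out to (0.36 × 155 + 0.24 × 211) / 366, or roughly 29%, which matches the pooled figure.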

When pooling cohort and registry-based studies, progression of OA was significantly more common than aseptic loosening (29% vs 23%, respectively; P < .01). It was also significantly more common in the cohort studies (36% vs 16%, respectively; P < .01) but no significant difference was found between progression of OA and aseptic loosening in registry-based studies (24% and 28%, respectively; P = .16) (Table 2).

When comparing cohort with registry-based studies, progression of OA was more common in cohort studies (36% vs 24%, respectively; P < .01). Other failure modes that were more common in cohort studies than in registry-based studies were bearing dislocation (17% vs 5%, respectively; P < .01) and infection (10% vs 3%, respectively; P < .01). Failure modes that were more common in registry-based studies than in cohort studies were aseptic loosening (28% vs 16%, respectively; P < .01), other causes (12% vs 6%, respectively; P = .02), and instability (10% vs 1%, respectively; P < .01) (Table 2).
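To make the cohort versus registry comparison concrete, the Python sketch below runs a Pearson chi-square test (1 degree of freedom, no continuity correction) on the bearing dislocation comparison. The counts are an assumption: they are reconstructed by rounding the reported percentages times the group sizes (17% of 155 and 5% of 211) and are not taken from Table 2, so the result only approximates the published analysis. The function name `chi_square_2x2` is hypothetical.

```python
from math import erfc, sqrt


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic and P value for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    chi2 = 0.0
    for observed, row_total, col_total in (
        (a, row1, col1), (b, row1, col2), (c, row2, col1), (d, row2, col2)
    ):
        expected = row_total * col_total / n
        chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom the upper-tail probability is erfc(sqrt(chi2 / 2)).
    return chi2, erfc(sqrt(chi2 / 2))


# Assumed counts reconstructed from rounded percentages (not from Table 2).
cohort_total, registry_total = 155, 211
cohort_dislocations = round(0.17 * cohort_total)      # 26
registry_dislocations = round(0.05 * registry_total)  # 11

chi2, p_value = chi_square_2x2(
    cohort_dislocations, cohort_total - cohort_dislocations,
    registry_dislocations, registry_total - registry_dislocations,
)
print(f"chi-square = {chi2:.2f}, P = {p_value:.4f}")
```

Under these assumed counts, the statistic is about 13.1 and the P value falls well below .01, in line with the reported result for bearing dislocation.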

Discussion

In this systematic review, the most common failure modes in lateral UKA were OA progression (29%), aseptic loosening (23%), and bearing dislocation (10%). Progression of OA and bearing dislocation were the most common modes of failure in cohort studies (36% and 17%, respectively), while aseptic loosening and OA progression were the most common failure modes in registry-based studies (28% and 24%, respectively).

As mentioned above, there are differences in anatomy and kinematics between the medial and lateral compartment. When the lateral UKA failure modes are compared with studies reporting medial UKA failure modes, differences in failure modes are seen.34 Siddiqui and Ahmad35 performed a systematic review of outcomes after UKA revision and presented a table with the failure modes of included studies. Unfortunately, they did not report the ratio of medial to lateral UKA. However, when assuming an average percentage of 90% to 95% of medial UKA,6,7,36 the main failure mode in their review in 17 out of 21 studies was aseptic loosening. Indeed, a recent systematic review on medial UKA failure modes showed that aseptic loosening is the most common cause of failure following this procedure.34 Similarly, a search through registry-based studies6,7 and large cohort studies37-40 that only reported medial UKA failures showed that the majority of these studies7,37-39 also reported aseptic loosening as the main cause of failure in medial UKA. When comparing the results of our systematic review of lateral UKA failures with the results of these studies of medial UKA failures, OA progression seems to play a more dominant role in failures of lateral UKA, while aseptic loosening seems to be more common in medial UKA.

Differences in anatomy and kinematics of the medial and lateral compartment can explain this. Malalignment of the joint is an important factor in the etiology of OA41,42 and biomechanical studies showed that this malalignment can cause decreased viability and further degenerative changes of cartilage of the knee.43 Hernigou and Deschamps44 showed that the alignment of the knee after medial UKA is an important factor in postoperative joint changes. They found that overcorrection of varus deformity during medial UKA surgery, measured by the hip-knee-ankle (HKA) angle, was associated with increased OA at the lateral condyle and less tibial wear of the medial UKA. Undercorrection of the varus caused an increase in tibial wear of polyethylene. Chatellard and colleagues45 found the same results in the correction of varus, measured by HKA. In addition, they found that when the prosthetic (medial) joint space was smaller than healthy (lateral) joint space, this was correlated with lower prosthesis survival. A smaller joint space at the healthy side was correlated with OA progression at the lateral compartment and tibial component wear.

These studies explain the mechanism of progression of OA and aseptic loosening. Harrington46 assessed the load in patients with valgus and varus deformity. Patients with a valgus deformity have high mechanical load on the lateral condyle during the static phase, but during the dynamic phase, a major part of this load shifts to the medial condyle. In the patients with varus deformity, the mechanical load was noted on the medial condyle during both the static and dynamic phase. Ohdera and colleagues47 advised, based on this biomechanical study and their own experiences, to correct the knee during lateral UKA to a slight valgus angle (5°-7°) to prevent OA progression at the medial side. van der List and colleagues48 similarly showed that undercorrection of 3° to 7° was correlated with better functional outcomes when compared to more neutral alignment. Moreover, Khamaisy and colleagues49 recently showed that overcorrection during UKA surgery is more common in lateral than medial UKA.

These studies are important to understanding why OA progression is more common as a failure mode in lateral UKA. The shift of mechanical load from the lateral to the medial condyle during the dynamic phase also could explain why aseptic loosening is less common in lateral UKA. As Hernigou and Deschamps44 and Chatellard and colleagues45 stated, undercorrection of varus deformity in medial UKA is associated with higher mechanical load on the medial prosthesis side and smaller joint space width. These factors are correlated with mechanical failure of medial UKA. We think this process can be applied to lateral UKA, with the addition that the mechanical load is higher on the healthy medial compartment during the dynamic phase. This places more force on the healthy (medial) side in lateral UKA and more force on the prosthesis (medial) side in medial UKA, which results in more OA progression in lateral UKA and more aseptic loosening in medial UKA. This explanation is consistent with the results of our review, which showed more OA progression and less aseptic loosening in lateral UKA. This study also suggests that medial and lateral UKA should not be reported together in studies that present survivorship, failure modes, or clinical outcomes.

A large discrepancy was seen in bearing dislocation between cohort studies (17%) and registry-based studies (5%). When we take a closer look at the bearing dislocation failures in the cohort studies, most of the failures were reported in only 2 cohort studies.50,51 In a study by Pandit and colleagues,50 3 different prosthesis designs were used in 3 different time periods. In the first series of lateral UKA (1983-1991), 6 out of 51 (12%) bearings dislocated. In the second series (1998-2004), a modified technique was used and 3 out of 65 (5%) bearings dislocated. In the third series (2004-2008), a modified technique and a domed tibial component were used and only 1 out of 68 bearings dislocated (1%). In a study published in 1996, Gunther and colleagues51 also used surgical techniques and implants that were modified over the course of the study period. Because of these modified techniques, different implant designs, and year of publication, bearing dislocation most likely plays a smaller role than the 17% reported in the cohort studies. This discrepancy is a good example of the important role for the registries and registry-based studies in reporting failure modes and survivorship, especially in lateral UKA due to the low surgical frequency. Pabinger and colleagues52 recently performed a systematic review of cohort studies and registry-based studies in which they stated that the reliability of non-registry-based studies should be questioned and they considered registry-based studies superior in reporting UKA outcomes and revision rates. Furthermore, given the anatomic and kinematic differences between the medial and lateral compartment and the different failure modes of medial and lateral UKA, it would be better if future studies presented the medial and lateral failures separately. As stated above, most large cohort studies and especially annual registries currently do not report modes of failure of medial and lateral UKA separately.3,4,18-20

There are limitations in this study. First, this systematic review is not a full meta-analysis but a pooled analysis of collected study series and retrospective studies. Therefore, we cannot exclude sampling bias, confounders, and selection bias from the literature. We included all studies reporting failure modes of lateral UKA and excluded all case reports. We made a conscious choice about including all lateral UKA failures because this is the first systematic review of lateral UKA failure modes. Another limitation is that the follow-up period of the studies differed (Table 1) and we did not correct for the follow-up period. As stated in the example of bearing dislocations, some of these studies reported old or different techniques, while other, more recently published studies used more modified techniques.11,29,53-56 Unfortunately, most studies did not report the time of arthroplasty survival and therefore we could not correct for the follow-up period.

In conclusion, progression of OA is the most common failure mode in lateral UKA, followed by aseptic loosening. Anatomic and kinematic factors such as alignment, mechanical forces during dynamic phase, and correction of valgus seem to play important roles in failure modes of lateral UKA. In the future, failure modes of medial and lateral UKA should be reported separately.

Am J Orthop. 2016;45(7):432-438, 462. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.

1. Skolnick MD, Bryan RS, Peterson LFA. Unicompartmental polycentric knee arthroplasty. Description and preliminary results. Clin Orthop Relat Res. 1975;(112):208-214.

2. Riddle DL, Jiranek WA, McGlynn FJ. Yearly Incidence of Unicompartmental Knee Arthroplasty in the United States. J Arthroplasty. 2008;23(3):408-412.

3. Australian Orthopaedic Association. Hip and Knee Arthroplasty 2014 Annual Report. https://aoanjrr.sahmri.com/documents/10180/172286/Annual%20Report%202014. Accessed June 3, 2015.

4. Swedish Knee Arthroplasty Register. 2013 Annual Report. http://myknee.se/pdf/SKAR2013_Eng.pdf. Accessed June 3, 2015.

5. The New Zealand Joint Registry. Fourteen Year Report. January 1999 to December 2012. 2013. http://nzoa.org.nz/system/files/NJR 14 Year Report.pdf. Accessed June 3, 2015.

6. Baker PN, Jameson SS, Deehan DJ, Gregg PJ, Porter M, Tucker K. Mid-term equivalent survival of medial and lateral unicondylar knee replacement: an analysis of data from a National Joint Registry. J Bone Joint Surg Br. 2012;94(12):1641-1648.

7. Lewold S, Robertsson O, Knutson K, Lidgren L. Revision of unicompartmental knee arthroplasty: outcome in 1,135 cases from the Swedish Knee Arthroplasty study. Acta Orthop Scand. 1998;69(5):469-474.

8. Pennington DW, Swienckowski JJ, Lutes WB, Drake GN. Lateral unicompartmental knee arthroplasty: survivorship and technical considerations at an average follow-up of 12.4 years. J Arthroplasty. 2006;21(1):13-17.

9. Hill PF, Vedi V, Williams A, Iwaki H, Pinskerova V, Freeman MA. Tibiofemoral movement 2: the loaded and unloaded living knee studied by MRI. J Bone Joint Surg Br. 2000;82(8):1196-1198.

10. Nakagawa S, Kadoya Y, Todo S, et al. Tibiofemoral movement 3: full flexion in the living knee studied by MRI. J Bone Joint Surg Br. 2000;82(8):1199-1200.

11. Ashraf T, Newman JH, Evans RL, Ackroyd CE. Lateral unicompartmental knee replacement survivorship and clinical experience over 21 years. J Bone Joint Surg Br. 2002;84(8):1126-1130.

12. Scott RD. Lateral unicompartmental replacement: a road less traveled. Orthopedics. 2005;28(9):983-984.

13. Sah AP, Scott RD. Lateral unicompartmental knee arthroplasty through a medial approach. Study with an average five-year follow-up. J Bone Joint Surg Am. 2007;89(9):1948-1954.

14. Argenson JN, Parratte S, Bertani A, Flecher X, Aubaniac JM. Long-term results with a lateral unicondylar replacement. Clin Orthop Relat Res. 2008;466(11):2686-2693.

15. Weidow J, Pak J, Karrholm J. Different patterns of cartilage wear in medial and lateral gonarthrosis. Acta Orthop Scand. 2002;73(3):326-329.

16. Ollivier M, Abdel MP, Parratte S, Argenson JN. Lateral unicondylar knee arthroplasty (UKA): contemporary indications, surgical technique, and results. Int Orthop. 2014;38(2):449-455.

17. Demange MK, Von Keudell A, Probst C, Yoshioka H, Gomoll AH. Patient-specific implants for lateral unicompartmental knee arthroplasty. Int Orthop. 2015;39(8):1519-1526.

18. Khan Z, Nawaz SZ, Kahane S, Esler C, Chatterji U. Conversion of unicompartmental knee arthroplasty to total knee arthroplasty: the challenges and need for augments. Acta Orthop Belg. 2013;79(6):699-705.

19. Epinette JA, Brunschweiler B, Mertl P, et al. Unicompartmental knee arthroplasty modes of failure: wear is not the main reason for failure: a multicentre study of 418 failed knees. Orthop Traumatol Surg Res. 2012;98(6 Suppl):S124-S130.

20. Bordini B, Stea S, Falcioni S, Ancarani C, Toni A. Unicompartmental knee arthroplasty: 11-year experience from 3929 implants in RIPO register. Knee. 2014;21(6):1275-1279.

21. Bolognesi MP, Greiner MA, Attarian DE, et al. Unicompartmental knee arthroplasty and total knee arthroplasty among medicare beneficiaries, 2000 to 2009. J Bone Joint Surg Am. 2013;95(22):e174.

22. Nwachukwu BU, McCormick FM, Schairer WW, Frank RM, Provencher MT, Roche MW. Unicompartmental knee arthroplasty versus high tibial osteotomy: United States practice patterns for the surgical treatment of unicompartmental arthritis. J Arthroplasty. 2014;29(8):1586-1589.

23. van der List JP, Chawla H, Pearle AD. Robotic-assisted knee arthroplasty: an overview. Am J Orthop. 2016;45(4):202-211.

24. van der List JP, Chawla H, Joskowicz L, Pearle AD. Current state of computer navigation and robotics in unicompartmental and total knee arthroplasty: a systematic review with meta-analysis. Knee Surg Sports Traumatol Arthrosc. 2016 Sep 6. [Epub ahead of print]

25. Zuiderbaan HA, van der List JP, Kleeblad LJ, et al. Modern indications, results and global trends in the use of unicompartmental knee arthroplasty and high tibial osteotomy for the treatment of medial unicondylar knee osteoarthritis. Am J Orthop. 2016;45(6):E355-E361.

26. Smith JR, Robinson JR, Porteous AJ, et al. Fixed bearing lateral unicompartmental knee arthroplasty--short to midterm survivorship and knee scores for 101 prostheses. Knee. 2014;21(4):843-847.

27. Berend KR, Kolczun MC 2nd, George JW Jr, Lombardi AV Jr. Lateral unicompartmental knee arthroplasty through a lateral parapatellar approach has high early survivorship. Clin Orthop Relat Res. 2012;470(1):77-83.

28. Keblish PA, Briard JL. Mobile-bearing unicompartmental knee arthroplasty: a 2-center study with an 11-year (mean) follow-up. J Arthroplasty. 2004;19(7 Suppl 2):87-94.

29. Bertani A, Flecher X, Parratte S, Aubaniac JM, Argenson JN. Unicompartmental-knee arthroplasty for treatment of lateral gonarthrosis: about 30 cases. Midterm results. Rev Chir Orthop Reparatrice Appar Mot. 2008;94(8):763-770.

30. Sebilo A, Casin C, Lebel B, et al. Clinical and technical factors influencing outcomes of unicompartmental knee arthroplasty: Retrospective multicentre study of 944 knees. Orthop Traumatol Surg Res. 2013;99(4 Suppl):S227-S234.

31. Cartier P, Khefacha A, Sanouiller JL, Frederick K. Unicondylar knee arthroplasty in middle-aged patients: A minimum 5-year follow-up. Orthopedics. 2007;30(8 Suppl):62-65.

32. Lustig S, Paillot JL, Servien E, Henry J, Ait Si Selmi T, Neyret P. Cemented all polyethylene tibial insert unicompartimental knee arthroplasty: a long term follow-up study. Orthop Traumatol Surg Res. 2009;95(1):12-21.

33. van der List JP, McDonald LS, Pearle AD. Systematic review of medial versus lateral survivorship in unicompartmental knee arthroplasty. Knee. 2015;22(6):454-460.

34. van der List JP, Zuiderbaan HA, Pearle AD. Why do medial unicompartmental knee arthroplasties fail today? J Arthroplasty. 2016;31(5):1016-1021.

35. Siddiqui NA, Ahmad ZM. Revision of unicondylar to total knee arthroplasty: a systematic review. Open Orthop J. 2012;6:268-275.

36. Pennington DW, Swienckowski JJ, Lutes WB, Drake GN. Lateral unicompartmental knee arthroplasty: survivorship and technical considerations at an average follow-up of 12.4 years. J Arthroplasty. 2006;21(1):13-17.

37. Kalra S, Smith TO, Berko B, Walton NP. Assessment of radiolucent lines around the Oxford unicompartmental knee replacement: sensitivity and specificity for loosening. J Bone Joint Surg Br. 2011;93(6):777-781.

38. Wynn Jones H, Chan W, Harrison T, Smith TO, Masonda P, Walton NP. Revision of medial Oxford unicompartmental knee replacement to a total knee replacement: similar to a primary? Knee. 2012;19(4):339-343.

39. Sierra RJ, Kassel CA, Wetters NG, Berend KR, Della Valle CJ, Lombardi AV. Revision of unicompartmental arthroplasty to total knee arthroplasty: not always a slam dunk! J Arthroplasty. 2013;28(8 Suppl):128-132.

40. Citak M, Dersch K, Kamath AF, Haasper C, Gehrke T, Kendoff D. Common causes of failed unicompartmental knee arthroplasty: a single-centre analysis of four hundred and seventy one cases. Int Orthop. 2014;38(5):961-965.

41. Hunter DJ, Wilson DR. Role of alignment and biomechanics in osteoarthritis and implications for imaging. Radiol Clin North Am. 2009;47(4):553-566.

42. Hunter DJ, Sharma L, Skaife T. Alignment and osteoarthritis of the knee. J Bone Joint Surg Am. 2009;91 Suppl 1:85-89.

43. Roemhildt ML, Beynnon BD, Gauthier AE, Gardner-Morse M, Ertem F, Badger GJ. Chronic in vivo load alteration induces degenerative changes in the rat tibiofemoral joint. Osteoarthritis Cartilage. 2013;21(2):346-357.

44. Hernigou P, Deschamps G. Alignment influences wear in the knee after medial unicompartmental arthroplasty. Clin Orthop Relat Res. 2004;(423):161-165.

45. Chatellard R, Sauleau V, Colmar M, et al. Medial unicompartmental knee arthroplasty: does tibial component position influence clinical outcomes and arthroplasty survival? Orthop Traumatol Surg Res. 2013;99(4 Suppl):S219-S225.

46. Harrington IJ. Static and dynamic loading patterns in knee joints with deformities. J Bone Joint Surg Am. 1983;65(2):247-259.

47. Ohdera T, Tokunaga J, Kobayashi A. Unicompartmental knee arthroplasty for lateral gonarthrosis: midterm results. J Arthroplasty. 2001;16(2):196-200.

48. van der List JP, Chawla H, Villa JC, Zuiderbaan HA, Pearle AD. Early functional outcome after lateral UKA is sensitive to postoperative lower limb alignment. Knee Surg Sports Traumatol Arthrosc. 2015 Nov 26. [Epub ahead of print]

49. Khamaisy S, Gladnick BP, Nam D, Reinhardt KR, Heyse TJ, Pearle AD. Lower limb alignment control: Is it more challenging in lateral compared to medial unicondylar knee arthroplasty? Knee. 2015;22(4):347-350.

50. Pandit H, Jenkins C, Beard DJ, et al. Mobile bearing dislocation in lateral unicompartmental knee replacement. Knee. 2010;17(6):392-397.

51. Gunther TV, Murray DW, Miller R, et al. Lateral unicompartmental arthroplasty with the Oxford meniscal knee. Knee. 1996;3(1):33-39.

52. Pabinger C, Lumenta DB, Cupak D, Berghold A, Boehler N, Labek G. Quality of outcome data in knee arthroplasty: Comparison of registry data and worldwide non-registry studies from 4 decades. Acta Orthopaedica. 2015;86(1):58-62.

53. Lustig S, Elguindy A, Servien E, et al. 5- to 16-year follow-up of 54 consecutive lateral unicondylar knee arthroplasties with a fixed-all polyethylene bearing. J Arthroplasty. 2011;26(8):1318-1325.

54. Walton MJ, Weale AE, Newman JH. The progression of arthritis following lateral unicompartmental knee replacement. Knee. 2006;13(5):374-377.

55. Lustig S, Lording T, Frank F, Debette C, Servien E, Neyret P. Progression of medial osteoarthritis and long term results of lateral unicompartmental arthroplasty: 10 to 18 year follow-up of 54 consecutive implants. Knee. 2014;21(S1):S26-S32.

56. O’Rourke MR, Gardner JJ, Callaghan JJ, et al. Unicompartmental knee replacement: a minimum twenty-one-year followup, end-result study. Clin Orthop Relat Res. 2005;440:27-37.

57. Citak M, Cross MB, Gehrke T, Dersch K, Kendoff D. Modes of failure and revision of failed lateral unicompartmental knee arthroplasties. Knee. 2015;22(4):338-340.

58. Liebs TR, Herzberg W. Better quality of life after medial versus lateral unicondylar knee arthroplasty knee. Clin Orthop Relat Res. 2013;471(8):2629-2640.

59. Weston-Simons JS, Pandit H, Kendrick BJ, et al. The mid-term outcomes of the Oxford Domed Lateral unicompartmental knee replacement. Bone Joint J. 2014;96-B(1):59-64.

60. Thompson SA, Liabaud B, Nellans KW, Geller JA. Factors associated with poor outcomes following unicompartmental knee arthroplasty: redefining the “classic” indications for surgery. J Arthroplasty. 2013;28(9):1561-1564.

61. Saxler G, Temmen D, Bontemps G. Medium-term results of the AMC-unicompartmental knee arthroplasty. Knee. 2004;11(5):349-355.

62. Forster MC, Bauze AJ, Keene GCR. Lateral unicompartmental knee replacement: Fixed or mobile bearing? Knee Surg Sports Traumatol Arthrosc. 2007;15(9):1107-1111.

63. Streit MR, Walker T, Bruckner T, et al. Mobile-bearing lateral unicompartmental knee replacement with the Oxford domed tibial component: an independent series. J Bone Joint Surg Br. 2012;94(10):1356-1361.

64. Altuntas AO, Alsop H, Cobb JP. Early results of a domed tibia, mobile bearing lateral unicompartmental knee arthroplasty from an independent centre. Knee. 2013;20(6):466-470.

65. Ashraf T, Newman JH, Desai VV, Beard D, Nevelos JE. Polyethylene wear in a non-congruous unicompartmental knee replacement: a retrieval analysis. Knee. 2004;11(3):177-181.

66. Schelfaut S, Beckers L, Verdonk P, Bellemans J, Victor J. The risk of bearing dislocation in lateral unicompartmental knee arthroplasty using a mobile biconcave design. Knee Surg Sports Traumatol Arthrosc. 2013;21(11):2487-2494.

67. Marson B, Prasad N, Jenkins R, Lewis M. Lateral unicompartmental knee replacements: Early results from a District General Hospital. Eur J Orthop Surg Traumatol. 2014;24(6):987-991.

68. Walker T, Gotterbarm T, Bruckner T, Merle C, Streit MR. Total versus unicompartmental knee replacement for isolated lateral osteoarthritis: a matched-pairs study. Int Orthop. 2014;38(11):2259-2264.

In 1975, Skolnick and colleagues1 introduced unicompartmental knee arthroplasty (UKA) for patients with isolated unicompartmental osteoarthritis (OA). They reported a study of 14 UKA procedures, of which 12 were at the medial and 2 at the lateral side. Forty years since this procedure was introduced, UKA is used in 8% to 12% of all knee arthroplasties.2-6 A minority of these procedures are performed at the lateral side (5%-10%).6-8

The considerable anatomical and kinematical differences between compartments9-14 make it impossible to directly compare outcomes of medial and lateral UKA. For example, a greater degree of femoral roll and more posterior translation at the lateral side in flexion9,10,13 can contribute to different pattern and volume differences of cartilage wear.15 Because of these differences, and because of implant design factors and lower surgical volume, lateral UKA is considered a technically more challenging surgery compared to medial UKA.12,16,17

Since isolated lateral compartment OA is relatively scarce, current literature on lateral UKA is limited, and most studies combine medial and lateral outcomes to report UKA outcomes and failure modes.3,4,18-20 However, as the UKA has grown in popularity over the last decade,2,21-25 the number of reports about the lateral UKA also has increased. Recent studies reported excellent short-term survivorship results of the lateral UKA (96%-99%)26,27 and smaller lateral UKA studies reported the 10-year survivorship with varying outcomes from good (84%)14,28-30 to excellent (94%-100%).8,31,32 Indeed, a recent systematic review showed survivorship of lateral UKA at 5, 10, and 15 years of 93%, 91%, and 89%, respectively.33Because of the differences between the medial and lateral compartment, it is important to know the failure modes of lateral UKA in order to improve clinical outcomes and revision rates. We performed a systematic review of cohort studies and registry-based studies that reported lateral UKA failure to assess the causes of lateral UKA failure. In addition, we compared the failure modes in cohort studies with those found in registry-based studies.

Patients and Methods

Search Strategy and Criteria

Databases of PubMed, Embase, and Cochrane (Cochrane Central Register of Clinical Trials) were searched with the terms “knee, arthroplasty, replacement,” “unicompartmental,” “unicondylar,” “partial,” “UKA,” “UKR,” “UCA,” “UCR,” “PKA,” “PKR,” “PCA,” “prosthesis failure,” “reoperation,” “survivorship,” and “treatment failure.” After removal of duplicates, 2 authors (JPvdL and HAZ) scanned the articles for their title and abstract to assess eligibility for the study.

Inclusion criteria were: (I) English language articles describing studies in humans published in the last 25 years, (II) retrospective and prospective studies, (III) featured lateral UKA, (IV) OA was indication for surgery, and (V) included failure modes data. The exclusion criteria were studies that featured: (I) only a specific group of failure (eg, bearing dislocations only), (II) previous surgery in ipsilateral knee (high tibial osteotomy, medial UKA), (III) acute concurrent knee diagnoses (acute anterior cruciate ligament rupture, acute meniscal tear), (IV) combined reporting of medial and lateral UKA, or (V) multiple studies with the same patient database.

Data Collection

All studies that reported modes of failure were used in this study and these failure modes were noted in a datasheet in Microsoft Excel 2011 (Microsoft).

Statistical Analysis

For this systematic review, statistical analysis was performed with IBM SPSS Statistics 22 (SPSS Inc.). We performed chi square tests and Fisher’s exact tests to assess a difference between cohort studies and registry-based studies with the null hypothesis of no difference between both groups. A difference was considered significant when P < .05.

Results

Through the search of the databases, 1294 studies were identified and 26 handpicked studies were added. Initially, based on the title and abstract, 184 of these studies were found eligible.

A total of 366 lateral UKA failures were included. The most common failure modes were progression of OA (29%), aseptic loosening (23%), and bearing dislocation (10%). Infection (6%), instability (6%), unexplained pain (6%), and fractures (4%) were less common causes of failure of lateral UKA (Table 2).

One hundred fifty-five of these failures were reported in the cohort studies. The most common modes of failure were OA progression (36%), bearing dislocation (17%) and aseptic loosening (16%). Less common were infection (10%), fractures (5%), pain (5%), and other causes (6%). In registry-based studies, with 211 lateral UKA failures, the most common modes of failure were aseptic loosening (28%), OA progression (24%), other causes (12%), instability (10%), pain (7%), bearing dislocation (5%), and polyethylene wear (4%) (Table 2).

When pooling cohort and registry-based studies, progression of OA was significantly more common than aseptic loosening (29% vs 23%, respectively; P < .01). It was also significantly more common in the cohort studies (36% vs 16%, respectively; P < .01) but no significant difference was found between progression of OA and aseptic loosening in registry-based studies (24% and 28%, respectively; P = .16) (Table 2).

When comparing cohort with registry-based studies, progression of OA was more common in cohort studies (36% vs 24%, respectively; P < .01). Other failure modes that were more common in cohort studies than in registry-based studies were bearing dislocation (17% vs 5%, respectively; P < .01) and infection (10% vs 3%, respectively; P < .01). Failure modes that were more common in registry-based studies than in cohort studies were aseptic loosening (28% vs 16%, respectively; P < .01), other causes (12% vs 6%, respectively; P = .02), and instability (10% vs 1%, respectively; P < .01) (Table 2).

Discussion

In this systematic review, the most common failure modes in lateral UKA were OA progression (29%), aseptic loosening (23%), and bearing dislocation (10%). Progression of OA and bearing dislocation were the most common modes of failure in cohort studies (36% and 17%, respectively), while aseptic loosening and OA progression were the most common failure modes in registry-based studies (28% and 24%, respectively).

As mentioned above, there are differences in anatomy and kinematics between the medial and lateral compartment. When the lateral UKA failure modes are compared with studies reporting medial UKA failure modes, differences in failure modes are seen.34 Siddiqui and Ahmad35 performed a systematic review of outcomes after UKA revision and presented a table with the failure modes of included studies. Unfortunately, they did not report the ratio of medial and lateral UKA. However, when assuming an average percentage of 90% to 95% of medial UKA,6,7,36 the main failure mode in their review in 17 out of 21 studies was aseptic loosening. Indeed, a recent systematic review on medial UKA failure modes showed that aseptic loosening is the most common cause of failure following this procedure.34 Similarly, a search through registry-based studies6,7 and large cohort studies37-40 that only reported medial UKA failures showed that the majority of these studies7,37-39 also reported aseptic loosening as the main cause of failure in medial UKA. When comparing the results of our systematic review of lateral UKA failures with the results of these studies of medial UKA failures, OA progression seems to play a more dominant role in failures of lateral UKA, while aseptic loosening seems to be more common in medial UKA.

Differences in anatomy and kinematics of the medial and lateral compartment can explain this. Malalignment of the joint is an important factor in the etiology of OA41,42 and biomechanical studies showed that this malalignment can cause decreased viability and further degenerative changes of cartilage of the knee.43 Hernigou and Deschamps44 showed that the alignment of the knee after medial UKA is an important factor in postoperative joint changes. They found that overcorrection of varus deformity during medial UKA surgery, measured by the hip-knee-ankle (HKA) angle, was associated with increased OA at the lateral condyle and less tibial wear of the medial UKA. Undercorrection of the varus caused an increase in tibial wear of polyethylene. Chatellard and colleagues45 found the same results in the correction of varus, measured by HKA. In addition, they found that when the prosthetic (medial) joint space was smaller than healthy (lateral) joint space, this was correlated with lower prosthesis survival. A smaller joint space at the healthy side was correlated with OA progression at the lateral compartment and tibial component wear.

These studies explain the mechanism of progression of OA and aseptic loosening. Harrington46 assessed the load in patients with valgus and varus deformity. Patients with a valgus deformity have high mechanical load on the lateral condyle during the static phase, but during the dynamic phase, a major part of this load shifts to the medial condyle. In the patients with varus deformity, the mechanical load was noted on the medial condyle during both the static and dynamic phase. Ohdera and colleagues47 advised, based on this biomechanical study and their own experiences, to correct the knee during lateral UKA to a slight valgus angle (5°-7°) to prevent OA progression at the medial side. van der List and colleagues48 similarly showed that undercorrection of 3° to 7° was correlated with better functional outcomes when compared to more neutral alignment. Moreover, Khamaisy and colleagues49 recently showed that overcorrection during UKA surgery is more common in lateral than medial UKA.

These studies are important to understanding why OA progression is more common as a failure mode in lateral UKA. The shift of mechanical load from the lateral to the medial condyle during the dynamic phase also could explain why aseptic loosening is less common in lateral UKA. As Hernigou and Deschamps44 and Chatellard and colleagues45 stated, undercorrection of varus deformity in medial UKA is associated with higher mechanical load on the medial prosthesis side and smaller joint space width. These factors are correlated with mechanical failure of medial UKA. We think this process can be applied to lateral UKA, with the addition that the mechanical load is higher on the healthy medial compartment during the dynamic phase. This places more force on the healthy (medial) side in lateral UKA and more force on the prosthesis (medial) side in medial UKA, which results in more OA progression in lateral UKA and more aseptic loosening in medial UKA. This explanation is consistent with the results of our review of more OA progression and less aseptic loosening in lateral UKA. This study also suggests that medial and lateral UKA should not be reported together in studies that present survivorship, failure modes, or clinical outcomes.

A large discrepancy was seen in bearing dislocation between cohort studies (17%) and registry-based studies (5%). When we take a closer look at the bearing dislocation failures in the cohort studies, most of the failures were reported in only 2 cohort studies.50,51 In a study by Pandit and colleagues,50 3 different prosthesis designs were used in 3 different time periods. In the first series of lateral UKA (1983-1991), 6 out of 51 (12%) bearings dislocated. In the second series (1998-2004), a modified technique was used and 3 out of 65 (5%) bearings dislocated. In the third series (2004-2008), a modified technique and a domed tibial component were used and only 1 out of 68 bearings dislocated (1%). In a study published in 1996, Gunther and colleagues51 also used surgical techniques and implants that were modified over the course of the study period. Because of these modified techniques, different implant designs, and year of publication, bearing dislocation most likely plays a smaller role than the 17% reported in the cohort studies. This discrepancy is a good example of the important role for the registries and registry-based studies in reporting failure modes and survivorship, especially in lateral UKA due to the low surgical frequency. Pabinger and colleagues52 recently performed a systematic review of cohort studies and registry-based studies in which they stated that the reliability of non-registry-based studies should be questioned, and they considered registry-based studies superior in reporting UKA outcomes and revision rates. Furthermore, given the anatomic and kinematic differences between the medial and lateral compartment and the different failure modes between medial and lateral UKA, it would be better if future studies presented the medial and lateral failures separately. As stated above, most large cohort studies and especially annual registries currently do not report modes of failure of medial and lateral UKA separately.3,4,18-20

There are limitations in this study. First, this systematic review is not a full meta-analysis but a pooled analysis of collected study series and retrospective studies. Therefore, we cannot exclude sampling bias, confounders, and selection bias from the literature. We included all studies reporting failure modes of lateral UKA and excluded all case reports. We made a conscious choice about including all lateral UKA failures because this is the first systematic review of lateral UKA failure modes. Another limitation is that the follow-up period of the studies differed (Table 1) and we did not correct for the follow-up period. As stated in the example of bearing dislocations, some of these studies reported old or different techniques, while other, more recently published studies used modified techniques.11,29,53-56 Unfortunately, most studies did not report the time of arthroplasty survival and therefore we could not correct for the follow-up period.

In conclusion, progression of OA is the most common failure mode in lateral UKA, followed by aseptic loosening. Anatomic and kinematic factors such as alignment, mechanical forces during dynamic phase, and correction of valgus seem to play important roles in failure modes of lateral UKA. In the future, failure modes of medial and lateral UKA should be reported separately.

Am J Orthop. 2016;45(7):432-438, 462. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.

1. Skolnick MD, Bryan RS, Peterson LFA. Unicompartmental polycentric knee arthroplasty. Description and preliminary results. Clin Orthop Relat Res. 1975;(112):208-214.

2. Riddle DL, Jiranek WA, McGlynn FJ. Yearly Incidence of Unicompartmental Knee Arthroplasty in the United States. J Arthroplasty. 2008;23(3):408-412.

3. Australian Orthopaedic Association. Hip and Knee Arthroplasty 2014 Annual Report. https://aoanjrr.sahmri.com/documents/10180/172286/Annual%20Report%202014. Accessed June 3, 2015.

4. Swedish Knee Arthroplasty Register. 2013 Annual Report. http://myknee.se/pdf/SKAR2013_Eng.pdf. Accessed June 3, 2015.

5. The New Zealand Joint Registry. Fourteen Year Report. January 1999 to December 2012. 2013. http://nzoa.org.nz/system/files/NJR 14 Year Report.pdf. Accessed June 3, 2015.

6. Baker PN, Jameson SS, Deehan DJ, Gregg PJ, Porter M, Tucker K. Mid-term equivalent survival of medial and lateral unicondylar knee replacement: an analysis of data from a National Joint Registry. J Bone Joint Surg Br. 2012;94(12):1641-1648.

7. Lewold S, Robertsson O, Knutson K, Lidgren L. Revision of unicompartmental knee arthroplasty: outcome in 1,135 cases from the Swedish Knee Arthroplasty study. Acta Orthop Scand. 1998;69(5):469-474.

8. Pennington DW, Swienckowski JJ, Lutes WB, Drake GN. Lateral unicompartmental knee arthroplasty: survivorship and technical considerations at an average follow-up of 12.4 years. J Arthroplasty. 2006;21(1):13-17.

9. Hill PF, Vedi V, Williams A, Iwaki H, Pinskerova V, Freeman MA. Tibiofemoral movement 2: the loaded and unloaded living knee studied by MRI. J Bone Joint Surg Br. 2000;82(8):1196-1198.

10. Nakagawa S, Kadoya Y, Todo S, et al. Tibiofemoral movement 3: full flexion in the living knee studied by MRI. J Bone Joint Surg Br. 2000;82(8):1199-1200.

11. Ashraf T, Newman JH, Evans RL, Ackroyd CE. Lateral unicompartmental knee replacement survivorship and clinical experience over 21 years. J Bone Joint Surg Br. 2002;84(8):1126-1130.

12. Scott RD. Lateral unicompartmental replacement: a road less traveled. Orthopedics. 2005;28(9):983-984.

13. Sah AP, Scott RD. Lateral unicompartmental knee arthroplasty through a medial approach. Study with an average five-year follow-up. J Bone Joint Surg Am. 2007;89(9):1948-1954.

14. Argenson JN, Parratte S, Bertani A, Flecher X, Aubaniac JM. Long-term results with a lateral unicondylar replacement. Clin Orthop Relat Res. 2008;466(11):2686-2693.

15. Weidow J, Pak J, Karrholm J. Different patterns of cartilage wear in medial and lateral gonarthrosis. Acta Orthop Scand. 2002;73(3):326-329.

16. Ollivier M, Abdel MP, Parratte S, Argenson JN. Lateral unicondylar knee arthroplasty (UKA): contemporary indications, surgical technique, and results. Int Orthop. 2014;38(2):449-455.

17. Demange MK, Von Keudell A, Probst C, Yoshioka H, Gomoll AH. Patient-specific implants for lateral unicompartmental knee arthroplasty. Int Orthop. 2015;39(8):1519-1526.

18. Khan Z, Nawaz SZ, Kahane S, Esler C, Chatterji U. Conversion of unicompartmental knee arthroplasty to total knee arthroplasty: the challenges and need for augments. Acta Orthop Belg. 2013;79(6):699-705.

19. Epinette JA, Brunschweiler B, Mertl P, et al. Unicompartmental knee arthroplasty modes of failure: wear is not the main reason for failure: a multicentre study of 418 failed knees. Orthop Traumatol Surg Res. 2012;98(6 Suppl):S124-S130.

20. Bordini B, Stea S, Falcioni S, Ancarani C, Toni A. Unicompartmental knee arthroplasty: 11-year experience from 3929 implants in RIPO register. Knee. 2014;21(6):1275-1279.

21. Bolognesi MP, Greiner MA, Attarian DE, et al. Unicompartmental knee arthroplasty and total knee arthroplasty among medicare beneficiaries, 2000 to 2009. J Bone Joint Surg Am. 2013;95(22):e174.

22. Nwachukwu BU, McCormick FM, Schairer WW, Frank RM, Provencher MT, Roche MW. Unicompartmental knee arthroplasty versus high tibial osteotomy: United States practice patterns for the surgical treatment of unicompartmental arthritis. J Arthroplasty. 2014;29(8):1586-1589.

23. van der List JP, Chawla H, Pearle AD. Robotic-assisted knee arthroplasty: an overview. Am J Orthop. 2016;45(4):202-211.

24. van der List JP, Chawla H, Joskowicz L, Pearle AD. Current state of computer navigation and robotics in unicompartmental and total knee arthroplasty: a systematic review with meta-analysis. Knee Surg Sports Traumatol Arthrosc. 2016 Sep 6. [Epub ahead of print]

25. Zuiderbaan HA, van der List JP, Kleeblad LJ, et al. Modern indications, results and global trends in the use of unicompartmental knee arthroplasty and high tibial osteotomy for the treatment of medial unicondylar knee osteoarthritis. Am J Orthop. 2016;45(6):E355-E361.

26. Smith JR, Robinson JR, Porteous AJ, et al. Fixed bearing lateral unicompartmental knee arthroplasty--short to midterm survivorship and knee scores for 101 prostheses. Knee. 2014;21(4):843-847.

27. Berend KR, Kolczun MC 2nd, George JW Jr, Lombardi AV Jr. Lateral unicompartmental knee arthroplasty through a lateral parapatellar approach has high early survivorship. Clin Orthop Relat Res. 2012;470(1):77-83.

28. Keblish PA, Briard JL. Mobile-bearing unicompartmental knee arthroplasty: a 2-center study with an 11-year (mean) follow-up. J Arthroplasty. 2004;19(7 Suppl 2):87-94.

29. Bertani A, Flecher X, Parratte S, Aubaniac JM, Argenson JN. Unicompartmental-knee arthroplasty for treatment of lateral gonarthrosis: about 30 cases. Midterm results. Rev Chir Orthop Reparatrice Appar Mot. 2008;94(8):763-770.

30. Sebilo A, Casin C, Lebel B, et al. Clinical and technical factors influencing outcomes of unicompartmental knee arthroplasty: Retrospective multicentre study of 944 knees. Orthop Traumatol Surg Res. 2013;99(4 Suppl):S227-S234.

31. Cartier P, Khefacha A, Sanouiller JL, Frederick K. Unicondylar knee arthroplasty in middle-aged patients: A minimum 5-year follow-up. Orthopedics. 2007;30(8 Suppl):62-65.

32. Lustig S, Paillot JL, Servien E, Henry J, Ait Si Selmi T, Neyret P. Cemented all polyethylene tibial insert unicompartimental knee arthroplasty: a long term follow-up study. Orthop Traumatol Surg Res. 2009;95(1):12-21.

33. van der List JP, McDonald LS, Pearle AD. Systematic review of medial versus lateral survivorship in unicompartmental knee arthroplasty. Knee. 2015;22(6):454-460.

34. van der List JP, Zuiderbaan HA, Pearle AD. Why do medial unicompartmental knee arthroplasties fail today? J Arthroplasty. 2016;31(5):1016-1021.

35. Siddiqui NA, Ahmad ZM. Revision of unicondylar to total knee arthroplasty: a systematic review. Open Orthop J. 2012;6:268-275.

36. Pennington DW, Swienckowski JJ, Lutes WB, Drake GN. Lateral unicompartmental knee arthroplasty: survivorship and technical considerations at an average follow-up of 12.4 years. J Arthroplasty. 2006;21(1):13-17.

37. Kalra S, Smith TO, Berko B, Walton NP. Assessment of radiolucent lines around the Oxford unicompartmental knee replacement: sensitivity and specificity for loosening. J Bone Joint Surg Br. 2011;93(6):777-781.

38. Wynn Jones H, Chan W, Harrison T, Smith TO, Masonda P, Walton NP. Revision of medial Oxford unicompartmental knee replacement to a total knee replacement: similar to a primary? Knee. 2012;19(4):339-343.

39. Sierra RJ, Kassel CA, Wetters NG, Berend KR, Della Valle CJ, Lombardi AV. Revision of unicompartmental arthroplasty to total knee arthroplasty: not always a slam dunk! J Arthroplasty. 2013;28(8 Suppl):128-132.

40. Citak M, Dersch K, Kamath AF, Haasper C, Gehrke T, Kendoff D. Common causes of failed unicompartmental knee arthroplasty: a single-centre analysis of four hundred and seventy one cases. Int Orthop. 2014;38(5):961-965.

41. Hunter DJ, Wilson DR. Role of alignment and biomechanics in osteoarthritis and implications for imaging. Radiol Clin North Am. 2009;47(4):553-566.

42. Hunter DJ, Sharma L, Skaife T. Alignment and osteoarthritis of the knee. J Bone Joint Surg Am. 2009;91 Suppl 1:85-89.

43. Roemhildt ML, Beynnon BD, Gauthier AE, Gardner-Morse M, Ertem F, Badger GJ. Chronic in vivo load alteration induces degenerative changes in the rat tibiofemoral joint. Osteoarthritis Cartilage. 2013;21(2):346-357.

44. Hernigou P, Deschamps G. Alignment influences wear in the knee after medial unicompartmental arthroplasty. Clin Orthop Relat Res. 2004;(423):161-165.

45. Chatellard R, Sauleau V, Colmar M, et al. Medial unicompartmental knee arthroplasty: does tibial component position influence clinical outcomes and arthroplasty survival? Orthop Traumatol Surg Res. 2013;99(4 Suppl):S219-S225.

46. Harrington IJ. Static and dynamic loading patterns in knee joints with deformities. J Bone Joint Surg Am. 1983;65(2):247-259.

47. Ohdera T, Tokunaga J, Kobayashi A. Unicompartmental knee arthroplasty for lateral gonarthrosis: midterm results. J Arthroplasty. 2001;16(2):196-200.

48. van der List JP, Chawla H, Villa JC, Zuiderbaan HA, Pearle AD. Early functional outcome after lateral UKA is sensitive to postoperative lower limb alignment. Knee Surg Sports Traumatol Arthrosc. 2015 Nov 26. [Epub ahead of print]

49. Khamaisy S, Gladnick BP, Nam D, Reinhardt KR, Heyse TJ, Pearle AD. Lower limb alignment control: Is it more challenging in lateral compared to medial unicondylar knee arthroplasty? Knee. 2015;22(4):347-350.

50. Pandit H, Jenkins C, Beard DJ, et al. Mobile bearing dislocation in lateral unicompartmental knee replacement. Knee. 2010;17(6):392-397.

51. Gunther TV, Murray DW, Miller R, et al. Lateral unicompartmental arthroplasty with the Oxford meniscal knee. Knee. 1996;3(1):33-39.

52. Pabinger C, Lumenta DB, Cupak D, Berghold A, Boehler N, Labek G. Quality of outcome data in knee arthroplasty: Comparison of registry data and worldwide non-registry studies from 4 decades. Acta Orthop. 2015;86(1):58-62.

53. Lustig S, Elguindy A, Servien E, et al. 5- to 16-year follow-up of 54 consecutive lateral unicondylar knee arthroplasties with a fixed-all polyethylene bearing. J Arthroplasty. 2011;26(8):1318-1325.

54. Walton MJ, Weale AE, Newman JH. The progression of arthritis following lateral unicompartmental knee replacement. Knee. 2006;13(5):374-377.

55. Lustig S, Lording T, Frank F, Debette C, Servien E, Neyret P. Progression of medial osteoarthritis and long term results of lateral unicompartmental arthroplasty: 10 to 18 year follow-up of 54 consecutive implants. Knee. 2014;21(S1):S26-S32.

56. O’Rourke MR, Gardner JJ, Callaghan JJ, et al. Unicompartmental knee replacement: a minimum twenty-one-year followup, end-result study. Clin Orthop Relat Res. 2005;440:27-37.

57. Citak M, Cross MB, Gehrke T, Dersch K, Kendoff D. Modes of failure and revision of failed lateral unicompartmental knee arthroplasties. Knee. 2015;22(4):338-340.

58. Liebs TR, Herzberg W. Better quality of life after medial versus lateral unicondylar knee arthroplasty. Clin Orthop Relat Res. 2013;471(8):2629-2640.

59. Weston-Simons JS, Pandit H, Kendrick BJ, et al. The mid-term outcomes of the Oxford Domed Lateral unicompartmental knee replacement. Bone Joint J. 2014;96-B(1):59-64.

60. Thompson SA, Liabaud B, Nellans KW, Geller JA. Factors associated with poor outcomes following unicompartmental knee arthroplasty: redefining the “classic” indications for surgery. J Arthroplasty. 2013;28(9):1561-1564.

61. Saxler G, Temmen D, Bontemps G. Medium-term results of the AMC-unicompartmental knee arthroplasty. Knee. 2004;11(5):349-355.

62. Forster MC, Bauze AJ, Keene GCR. Lateral unicompartmental knee replacement: Fixed or mobile bearing? Knee Surg Sports Traumatol Arthrosc. 2007;15(9):1107-1111.

63. Streit MR, Walker T, Bruckner T, et al. Mobile-bearing lateral unicompartmental knee replacement with the Oxford domed tibial component: an independent series. J Bone Joint Surg Br. 2012;94(10):1356-1361.

64. Altuntas AO, Alsop H, Cobb JP. Early results of a domed tibia, mobile bearing lateral unicompartmental knee arthroplasty from an independent centre. Knee. 2013;20(6):466-470.

65. Ashraf T, Newman JH, Desai VV, Beard D, Nevelos JE. Polyethylene wear in a non-congruous unicompartmental knee replacement: a retrieval analysis. Knee. 2004;11(3):177-181.

66. Schelfaut S, Beckers L, Verdonk P, Bellemans J, Victor J. The risk of bearing dislocation in lateral unicompartmental knee arthroplasty using a mobile biconcave design. Knee Surg Sports Traumatol Arthrosc. 2013;21(11):2487-2494.

67. Marson B, Prasad N, Jenkins R, Lewis M. Lateral unicompartmental knee replacements: Early results from a District General Hospital. Eur J Orthop Surg Traumatol. 2014;24(6):987-991.

68. Walker T, Gotterbarm T, Bruckner T, Merle C, Streit MR. Total versus unicompartmental knee replacement for isolated lateral osteoarthritis: a matched-pairs study. Int Orthop. 2014;38(11):2259-2264.
