Robotic Technology Produces More Conservative Tibial Resection Than Conventional Techniques in UKA

Unicompartmental knee arthroplasty (UKA) is considered a less invasive approach for the treatment of unicompartmental knee arthritis when compared with total knee arthroplasty (TKA), with optimal preservation of kinematics.1 Despite excellent functional outcomes, conversion to TKA may be necessary if the UKA fails, or in patients with progressive knee arthritis. Some studies have found UKA conversion to TKA to be comparable with primary TKA,2,3 whereas others have found that conversion often requires bone graft, augments, and stemmed components and has increased complications and inferior results compared to primary TKA.4-7 While some studies report that <10% of UKA conversions to TKA require augments,2 others have found that as many as 76% require augments.4-8

Schwarzkopf and colleagues9 recently demonstrated that UKA conversion to TKA is comparable with primary TKA when a conservative tibial resection is performed during the index procedure. However, they reported increased complexity when greater tibial resection was performed and thicker polyethylene inserts were used at the time of the index UKA. The odds ratio of needing an augment or stem during the conversion to TKA was 26.8 (95% confidence interval, 3.71-194) when an aggressive tibial resection was performed during the UKA.9 Tibial resection thickness may thus be predictive of anticipated complexity of UKA revision to TKA and may aid in preoperative planning.

Robotic assistance has been shown to enhance the accuracy of bone preparation, implant component alignment, and soft tissue balance in UKA.10-15 It has yet to be determined whether this improved accuracy translates to improved clinical performance or longevity of the UKA implant. However, the enhanced accuracy of robotic technology may result in more conservative tibial resection when compared to conventional UKA and may be advantageous if conversion to TKA becomes necessary.

The purpose of this study was to compare the distribution of polyethylene insert sizes implanted during conventional and robotic-assisted UKA. We hypothesized that robotic assistance would demonstrate more conservative tibial resection compared to conventional methods of bone preparation.

Methods

We retrospectively compared the distribution of polyethylene insert sizes implanted during consecutive conventional and robotic-assisted UKA procedures. Several manufacturers were queried to provide a listing of the polyethylene insert sizes utilized, ranging from 8 mm to 14 mm. The analysis included 8421 robotic-assisted UKA cases and 27,989 conventional UKA cases. Data were provided by Zimmer Biomet and Smith & Nephew regarding conventional cases, as well as Blue Belt Technologies (now part of Smith & Nephew) and MAKO Surgical (now part of Stryker) regarding robotic-assisted cases. (Dr. Lonner has an ongoing relationship as a consultant with Blue Belt Technologies, whose data were utilized in this study.) Using tibial insert thickness as a surrogate measure of the extent of tibial resection, an insert size of ≥10 mm was defined as aggressive, while <10 mm was considered conservative. This cutoff was established based on its corresponding resection level with primary TKA and the anticipated need for augments. Statistical analysis was performed using a Mann-Whitney-Wilcoxon test. Significance was set at P < .05.
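The classification rule described above can be sketched in a few lines of Python. This is only an illustration of the stated cutoff (≥10 mm aggressive, <10 mm conservative). The function names and the short list of thicknesses are hypothetical and are not study data.

```python
from collections import Counter

# Cutoff stated in the Methods: inserts >= 10 mm are "aggressive".
AGGRESSIVE_CUTOFF_MM = 10


def classify_insert(thickness_mm):
    """Label one insert thickness (mm) using the study's cutoff."""
    if thickness_mm >= AGGRESSIVE_CUTOFF_MM:
        return "aggressive"
    return "conservative"


def summarize(thicknesses_mm):
    """Return the fraction of inserts in each category."""
    if not thicknesses_mm:
        raise ValueError("at least one insert thickness is required")
    counts = Counter(classify_insert(t) for t in thicknesses_mm)
    total = sum(counts.values())
    return {label: counts[label] / total
            for label in ("conservative", "aggressive")}


# Hypothetical toy input for illustration only; NOT data from the study.
example_thicknesses = [8, 8, 9, 9, 10, 12, 8, 9, 14, 8]
example_summary = summarize(example_thicknesses)
print(example_summary)
```

Applied to per-case insert sizes from each group, a tally of this kind would yield the conservative and aggressive proportions reported in the Results.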

Results

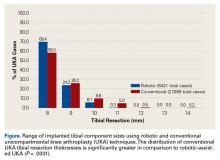

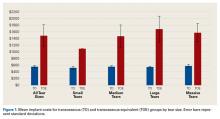

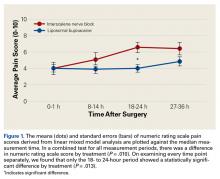

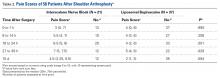

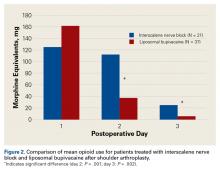

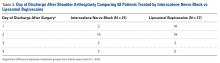

Tibial resection thickness was most commonly conservative, with 8-mm and 9-mm polyethylene inserts utilized in the majority of both robotic-assisted and conventional UKA cases. However, significantly more 8-mm and 9-mm polyethylene inserts were used in the robotic group (93.6%) than in the conventional group (84.5%) (P < .0001; Figure). Aggressive tibial resection, requiring tibial inserts ≥10 mm, was performed in 6.4% of robotic-assisted cases and 15.5% of conventional cases.
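As a rough check on the size of this difference, the reported percentages and group sizes can be turned into approximate counts. The sketch below is not the authors' analysis: the counts are reconstructed from rounded percentages (an assumption), and the two-proportion z-test is a substitute for the Mann-Whitney-Wilcoxon test named in the Methods. All variable names are illustrative.

```python
import math

# Figures reported in the text.
N_ROBOTIC, N_CONVENTIONAL = 8421, 27989
P_AGGR_ROBOTIC, P_AGGR_CONVENTIONAL = 0.064, 0.155

# ASSUMPTION: counts reconstructed from rounded percentages, so approximate.
aggr_robotic = round(N_ROBOTIC * P_AGGR_ROBOTIC)
aggr_conventional = round(N_CONVENTIONAL * P_AGGR_CONVENTIONAL)

rate_robotic = aggr_robotic / N_ROBOTIC
rate_conventional = aggr_conventional / N_CONVENTIONAL

# Effect sizes for aggressive resection, conventional relative to robotic.
risk_difference = rate_conventional - rate_robotic
risk_ratio = rate_conventional / rate_robotic

# Two-proportion z-test with a pooled standard error
# (NOT the Mann-Whitney-Wilcoxon test used in the study).
pooled = (aggr_robotic + aggr_conventional) / (N_ROBOTIC + N_CONVENTIONAL)
std_err = math.sqrt(pooled * (1 - pooled) * (1 / N_ROBOTIC + 1 / N_CONVENTIONAL))
z_score = risk_difference / std_err
p_value = math.erfc(abs(z_score) / math.sqrt(2))  # two-sided

print(f"approximate aggressive counts: {aggr_robotic} vs {aggr_conventional}")
print(f"risk difference: {risk_difference:.3f}, risk ratio: {risk_ratio:.2f}")
print(f"z = {z_score:.1f}, two-sided p = {p_value:.3g}")
```

Under these assumptions, aggressive resection is roughly 2.4 times as frequent in the conventional group, an absolute difference of about 9 percentage points, which is in line with the reported P < .0001.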

Discussion

Robotic assistance enhances the accuracy of bone preparation, implant component alignment, and soft tissue balance in UKA.10-15 It has yet to be determined whether this improved accuracy translates to improved clinical performance or longevity of the UKA implant. However, we demonstrate that the enhanced accuracy of robotic technology results in more conservative tibial resection when compared to conventional techniques with a potential benefit suggested in the literature upon conversion to TKA.

The findings of this study have important implications for patients undergoing conversion of UKA to TKA, potentially optimizing the ease of revision and clinical outcomes. The outcomes of UKA conversion to TKA are often considered inferior to those of primary TKA, compromised by bone loss, need for augmentation, and challenges of restoring the joint line and rotation.9,16-22 Barrett and Scott18 reported that only 66% of patients had good or excellent results at an average of 4.6 years of follow-up after UKA conversion to TKA. Over 50% required stemmed implants and bone graft or bone cement augmentation to address osseous insufficiency. The authors suggested that the primary determinant of the complexity of the conversion to TKA was the surgical technique used in the index procedure. They concluded that UKA conversion to TKA can be as successful as a primary TKA, and that primary TKA implants can be used without bone augmentation or stems during the revision procedure, if minimal tibial bone is resected at the time of the index UKA.18 Schwarzkopf and colleagues9 supported this conclusion when they found that aggressive tibial resection during UKA resulted in the need for bone graft, stem, wedge, or augment in 70% of cases when converted to TKA. Similarly, Khan and colleagues23 found that 26% of patients required bone grafting and 26% required some form of augmentation, and Springer and colleagues3 reported that 68% required a graft, augment, or stem.3,22 Using data from the New Zealand Joint Registry, Pearse and colleagues5 reported that revision TKA components were necessary in 28% of patients and concluded that converting a UKA to TKA gives a less reliable result than primary TKA, with functional results that are not significantly better than those of a revision from a TKA.

Conservative tibial resection during UKA minimizes the complexity and concerns of bone loss upon conversion to TKA. Schwarzkopf and colleagues9 found 96.6% of patients with conservative tibial resection received a primary TKA implant, without augments or stems. Furthermore, patients with a primary TKA implant showed improved tibial survivorship, with revision as an end point, compared with patients who received a TKA implant that required stems and augments or bone graft for support.9 Also emphasizing the importance of minimal tibial resection, O’Donnell and colleagues8 compared a cohort of patients undergoing conversion of a minimal resection resurfacing onlay-type UKA to TKA with a cohort of patients undergoing primary TKA. They found that 40% of patients required bone grafting for contained defects, 3.6% required metal augments, and 1.8% required stems.8 There was no significant difference between the groups in terms of range of motion, functional outcome, or radiologic outcomes. The authors concluded that revision of minimal resection resurfacing implants to TKA is associated with similar results to primary TKA and is superior to revision of UKA with greater bone loss.

Prior studies have shown that one of the advantages of robotic-assisted UKA is the accuracy and precision of bone resection. The present study supports this premise by showing that tibial resection is significantly more conservative using robotic-assisted techniques when using tibial component thickness as a surrogate for extent of bone resection. While our study did not address implant durability or the impact of conservative resection on conversion to TKA, studies referenced above suggest that the conservative nature of bone preparation would have a relevant impact on the revision of the implant to TKA.

Our study is a retrospective case series that reports tibial component thickness as a surrogate for volume of tibial resection during UKA. While the implication is that more conservative tibial resection may optimize durability and ease of conversion to TKA, future studies will be needed to compare robotic-assisted and conventional cases of UKA upon conversion to TKA in order to ascertain whether the more conservative resections of robotic-assisted UKA in fact lead to revision that is comparable with primary TKA in terms of bone loss at the time of revision, components utilized, the need for bone graft, augments, or stems, and clinical outcomes. Given the method of data collection in this study, we could not control for clinical deformity, selection bias, surgeon experience, or medial vs lateral knee compartments. These potential confounders represent weaknesses of this study.

In conclusion, conversion of UKA to TKA may be associated with significant osseous insufficiency, which may compromise patient outcomes in comparison to primary TKA. Studies have shown that UKA conversion to TKA is comparable to primary TKA when minimal tibial resection is performed during the UKA, and that the need for augmentation, grafting, or stems is increased with more aggressive tibial resection. This study has shown that when robotic assistance is utilized, tibial resection is more precise, less variable, and more conservative compared to conventional techniques.

Am J Orthop. 2016;45(7):E465-E468. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.

1. Patil S, Colwell CW Jr, Ezzet KA, D’Lima DD. Can normal knee kinematics be restored with unicompartmental knee replacement? J Bone Joint Surg Am. 2005;87(2):332-338.

2. Johnson S, Jones P, Newman JH. The survivorship and results of total knee replacements converted from unicompartmental knee replacements. Knee. 2007;14(2):154-157.

3. Springer BD, Scott RD, Thornhill TS. Conversion of failed unicompartmental knee arthroplasty to TKA. Clin Orthop Relat Res. 2006;446:214-220.

4. Järvenpää J, Kettunen J, Miettinen H, Kröger H. The clinical outcome of revision knee replacement after unicompartmental knee arthroplasty versus primary total knee arthroplasty: 8-17 years follow-up study of 49 patients. Int Orthop. 2010;34(5):649-653.

5. Pearse AJ, Hooper GJ, Rothwell AG, Frampton C. Osteotomy and unicompartmental knee arthroplasty converted to total knee arthroplasty: data from the New Zealand Joint Registry. J Arthroplasty. 2012;27(10):1827-1831.

6. Rancourt MF, Kemp KA, Plamondon SM, Kim PR, Dervin GF. Unicompartmental knee arthroplasties revised to total knee arthroplasties compared with primary total knee arthroplasties. J Arthroplasty. 2012;27(8 Suppl):106-110.

7. Sierra RJ, Kassel CA, Wetters NG, Berend KR, Della Valle CJ, Lombardi AV. Revision of unicompartmental arthroplasty to total knee arthroplasty: not always a slam dunk! J Arthroplasty. 2013;28(8 Suppl):128-132.

8. O’Donnell TM, Abouazza O, Neil MJ. Revision of minimal resection resurfacing unicondylar knee arthroplasty to total knee arthroplasty: results compared with primary total knee arthroplasty. J Arthroplasty. 2013;28(1):33-39.

9. Schwarzkopf R, Mikhael B, Li L, Josephs L, Scott RD. Effect of initial tibial resection thickness on outcomes of revision UKA. Orthopedics. 2013;36(4):e409-e414.

10. Conditt MA, Roche MW. Minimally invasive robotic-arm-guided unicompartmental knee arthroplasty. J Bone Joint Surg Am. 2009;91 Suppl 1:63-68.

11. Dunbar NJ, Roche MW, Park BH, Branch SH, Conditt MA, Banks SA. Accuracy of dynamic tactile-guided unicompartmental knee arthroplasty. J Arthroplasty. 2012;27(5):803-808.e1.

12. Karia M, Masjedi M, Andrews B, Jaffry Z, Cobb J. Robotic assistance enables inexperienced surgeons to perform unicompartmental knee arthroplasties on dry bone models with accuracy superior to conventional methods. Adv Orthop. 2013;2013:481039.

13. Lonner JH, John TK, Conditt MA. Robotic arm-assisted UKA improves tibial component alignment: a pilot study. Clin Orthop Relat Res. 2010;468(1):141-146.

14. Lonner JH, Smith JR, Picard F, Hamlin B, Rowe PJ, Riches PE. High degree of accuracy of a novel image-free handheld robot for unicondylar knee arthroplasty in a cadaveric study. Clin Orthop Relat Res. 2015;473(1):206-212.

15. Smith JR, Picard F, Rowe PJ, Deakin A, Riches PE. The accuracy of a robotically-controlled freehand sculpting tool for unicondylar knee arthroplasty. Bone Joint J. 2013;95-B(suppl 28):68.

16. Chakrabarty G, Newman JH, Ackroyd CE. Revision of unicompartmental arthroplasty of the knee. Clinical and technical considerations. J Arthroplasty. 1998;13(2):191-196.

17. Levine WN, Ozuna RM, Scott RD, Thornhill TS. Conversion of failed modern unicompartmental arthroplasty to total knee arthroplasty. J Arthroplasty. 1996;11(7):797-801.

18. Barrett WP, Scott RD. Revision of failed unicondylar unicompartmental knee arthroplasty. J Bone Joint Surg Am. 1987;69(9):1328-1335.

19. Padgett DE, Stern SH, Insall JN. Revision total knee arthroplasty for failed unicompartmental replacement. J Bone Joint Surg Am. 1991;73(2):186-190.

20. Aleto TJ, Berend ME, Ritter MA, Faris PM, Meneghini RM. Early failure of unicompartmental knee arthroplasty leading to revision. J Arthroplasty. 2008;23(2):159-163.

21. McAuley JP, Engh GA, Ammeen DJ. Revision of failed unicompartmental knee arthroplasty. Clin Orthop Relat Res. 2001;(392):279-282.

22. Böhm I, Landsiedl F. Revision surgery after failed unicompartmental knee arthroplasty: a study of 35 cases. J Arthroplasty. 2000;15(8):982-989.

23. Khan Z, Nawaz SZ, Kahane S, Ester C, Chatterji U. Conversion of unicompartmental knee arthroplasty to total knee arthroplasty: the challenges and need for augments. Acta Orthop Belg. 2013;79(6):699-705.


8. O’Donnell TM, Abouazza O, Neil MJ. Revision of minimal resection resurfacing unicondylar knee arthroplasty to total knee arthroplasty: results compared with primary total knee arthroplasty. J Arthroplasty. 2013;28(1):33-39.

9. Schwarzkopf R, Mikhael B, Li L, Josephs L, Scott RD. Effect of initial tibial resection thickness on outcomes of revision UKA. Orthopedics. 2013;36(4):e409-e414.

10. Conditt MA, Roche MW. Minimally invasive robotic-arm-guided unicompartmental knee arthroplasty. J Bone Joint Surg Am. 2009;91 Suppl 1:63-68.

11. Dunbar NJ, Roche MW, Park BH, Branch SH, Conditt MA, Banks SA. Accuracy of dynamic tactile-guided unicompartmental knee arthroplasty. J Arthroplasty. 2012;27(5):803-808.e1.

12. Karia M, Masjedi M, Andrews B, Jaffry Z, Cobb J. Robotic assistance enables inexperienced surgeons to perform unicompartmental knee arthroplasties on dry bone models with accuracy superior to conventional methods. Adv Orthop. 2013;2013:481039.

13. Lonner JH, John TK, Conditt MA. Robotic arm-assisted UKA improves tibial component alignment: a pilot study. Clin Orthop Relat Res. 2010;468(1):141-146.

14. Lonner JH, Smith JR, Picard F, Hamlin B, Rowe PJ, Riches PE. High degree of accuracy of a novel image-free handheld robot for unicondylar knee arthroplasty in a cadaveric study. Clin Orthop Relat Res. 2015;473(1):206-212.

15. Smith JR, Picard F, Rowe PJ, Deakin A, Riches PE. The accuracy of a robotically-controlled freehand sculpting tool for unicondylar knee arthroplasty. Bone Joint J. 2013;95-B(suppl 28):68.

16. Chakrabarty G, Newman JH, Ackroyd CE. Revision of unicompartmental arthroplasty of the knee. Clinical and technical considerations. J Arthroplasty. 1998;13(2):191-196.

17. Levine WN, Ozuna RM, Scott RD, Thornhill TS. Conversion of failed modern unicompartmental arthroplasty to total knee arthroplasty. J Arthroplasty. 1996;11(7):797-801.

18. Barrett WP, Scott RD. Revision of failed unicondylar unicompartmental knee arthroplasty. J Bone Joint Surg Am. 1987;69(9):1328-1335.

19. Padgett DE, Stern SH, Insall JN. Revision total knee arthroplasty for failed unicompartmental replacement. J Bone Joint Surg Am. 1991;73(2):186-190.

20. Aleto TJ, Berend ME, Ritter MA, Faris PM, Meneghini RM. Early failure of unicompartmental knee arthroplasty leading to revision. J Arthroplasty. 2008;23(2):159-163.

21. McAuley JP, Engh GA, Ammeen DJ. Revision of failed unicompartmental knee arthroplasty. Clin Orthop Relat Res. 2001;(392):279-282.

22. Böhm I, Landsiedl F. Revision surgery after failed unicompartmental knee arthroplasty: a study of 35 cases. J Arthroplasty. 2000;15(8):982-989.

23. Khan Z, Nawaz SZ, Kahane S, Ester C, Chatterji U. Conversion of unicompartmental knee arthroplasty to total knee arthroplasty: the challenges and need for augments. Acta Orthop Belg. 2013;79(6):699-705.

Perceived Leg-Length Discrepancy After Primary Total Knee Arthroplasty: Does Knee Alignment Play a Role?

Leg-length discrepancy (LLD) is common in the general population1 and particularly in patients with degenerative joint diseases of the hip and knee.2 Common complications of LLD include femoral, sciatic, and peroneal nerve palsy; lower back pain; gait abnormalities3; and general dissatisfaction. LLD is a concern for orthopedic surgeons who perform total knee arthroplasty (TKA) because limb lengthening is common after this procedure.4,5 Surgeons are aware of the limb lengthening that occurs during TKA,4,5 and studies have confirmed that LLD usually decreases after TKA.4,5

Despite surgeons’ best efforts, some patients still perceive LLD after surgery, though the incidence of perceived LLD in patients who have had TKA has not been well documented. Aside from actual, objectively measured LLD, there may be other factors that lead patients to perceive LLD. Study results have suggested that preoperative varus–valgus alignment of the knee joint may correlate with how much an operative leg is lengthened after TKA4,5; however, the outcome investigated was objective LLD measurements, not perceived LLD. Understanding the factors that may influence patients’ ability to perceive LLD would allow surgeons to preoperatively identify patients who are at higher risk for postoperative perceived LLD. This information, along with expected time to resolution of postoperative perceived LLD, would allow surgeons to educate their patients accordingly.

We conducted a study to determine the incidence of perceived LLD before and after primary TKA in patients with unilateral osteoarthritis and to determine the correlation between mechanical axis of the knee and perceived LLD before and after surgery. Given that surgery may correct mechanical axis misalignment, we investigated the correlation between this correction and its ability to change patients’ preoperative and postoperative perceived LLD. We hypothesized that a large correction of mechanical axis would lead patients to perceive LLD after surgery. The relationship of body mass index (BMI) and age to patients’ perceived LLD was also assessed. The incidence and time frame of resolution of postoperative perceived LLD were determined.

Methods

Approval for this study was received from the Institutional Review Board at our institution, Rush University Medical Center in Chicago, Illinois. Seventy-three patients undergoing primary TKA performed by 3 surgeons at 2 institutions between February 2010 and January 2013 were prospectively enrolled. Inclusion criteria were age 18 years to 90 years and primary TKA for unilateral osteoarthritis; exclusion criteria were allergy or intolerance to the study materials, operative treatment of affected joint or its underlying etiology within prior month, previous surgeries (other than arthroscopy) on affected joint, previous surgeries (on unaffected lower extremity) that may influence preoperative and postoperative leg lengths, and any substance abuse or dependence within the past 6 months. Patients provided written informed consent for total knee arthroplasty.

All surgeries were performed by Dr. Levine, Dr. Della Valle, and Dr. Sporer using the medial parapatellar or midvastus approach with tourniquet. Similar standard postoperative rehabilitation protocols with early mobilization were used in all cases.

During clinical evaluation, patient demographic data were collected and LLD surveys administered. Patients were asked, before surgery and 3 to 6 weeks, 3 months, 6 months, and 1 year after surgery, if they perceived LLD. A patient who no longer perceived LLD after surgery was no longer followed for this study.

At the preoperative clinic visit and at the 3-month or 6-week postoperative visit, standing mechanical axis radiographs were viewed by 2 of the authors (not the primary surgeons) using PACS (picture archiving and communication system software). The mechanical axis of the operative leg was measured with ImageJ software by taking the angle from the center of the femur to the middle of the ankle joint, with the vertex assigned to the middle of the knee joint.
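The three-landmark angle described above can be sketched in a few lines. This is only an illustration of the geometry, not the ImageJ procedure the authors used: the function name `mechanical_axis_angle` and the pixel coordinates are hypothetical, and the result is the unsigned angle at the knee, so it does not by itself distinguish varus from valgus.

```python
import math

def mechanical_axis_angle(femur, knee, ankle):
    """Unsigned angle (degrees) at the knee vertex between the
    knee-to-femur ray and the knee-to-ankle ray.

    Each argument is an (x, y) landmark in image coordinates.
    A perfectly straight limb gives 180 degrees.
    """
    v1 = (femur[0] - knee[0], femur[1] - knee[1])
    v2 = (ankle[0] - knee[0], ankle[1] - knee[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2 on |cross| and dot is numerically stable near 180 degrees
    return math.degrees(math.atan2(abs(cross), dot))

# Hypothetical landmarks (pixels): collinear points, so the limb is neutral
straight = mechanical_axis_angle((0, 0), (0, 100), (0, 200))
# Hypothetical landmarks forming a right angle, as a sanity check
right_angle = mechanical_axis_angle((0, 0), (0, 100), (100, 100))
```

Deciding whether a deviation from 180° is varus or valgus would additionally require the side of the limb and the image orientation, which this sketch leaves out.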

We used a 2-tailed unpaired t test to determine the relationship of preoperative mechanical axis to perceived LLD (or lack thereof) before surgery. The data were analyzed for separate varus and valgus deformities. Then we determined the relationship of postoperative mechanical axis to perceived LLD (or lack thereof) after surgery. The McNemar test was used to determine the effect of surgery on patients’ LLD perceptions.

To determine the relationship between preoperative-to-postoperative change in mechanical axis and change in LLD perceptions, we divided patients into 4 groups. Group 1 had both preoperative and postoperative perceived LLD, group 2 had no preoperative or postoperative perceived LLD, group 3 had preoperative perceived LLD but no postoperative perceived LLD, and group 4 had postoperative perceived LLD but no preoperative perceived LLD. The absolute value of the difference between preoperative and postoperative mechanical axis was then determined, relative to 180°, to account for changes in varus to valgus deformity before and after surgery and vice versa. Analysis of variance (ANOVA) was used to detect differences between groups. This analysis was then stratified based on BMI and age.
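The grouping and the correction measure can be written out as follows. The group numbering comes directly from the text. The correction function is one possible reading of "relative to 180°": each angle is first expressed as a signed deviation from 180° (the sign convention here, varus negative and valgus positive, is an assumption), so that a change from varus to valgus counts in full. The function names are hypothetical.

```python
def lld_group(pre_lld: bool, post_lld: bool) -> int:
    """Assign the study group (1-4) from perceived LLD before and after surgery."""
    if pre_lld and post_lld:
        return 1  # perceived LLD both before and after surgery
    if not pre_lld and not post_lld:
        return 2  # no perceived LLD at either time
    if pre_lld:
        return 3  # preoperative perceived LLD only
    return 4      # postoperative perceived LLD only

def axis_correction(pre_dev_deg: float, post_dev_deg: float) -> float:
    """Absolute change in mechanical axis, with each input given as a
    signed deviation from 180 degrees (assumed: varus < 0, valgus > 0)."""
    return abs(pre_dev_deg - post_dev_deg)

# Hypothetical patient: 8 degrees varus before surgery, 2 degrees valgus after
example_correction = axis_correction(-8.0, 2.0)   # 10.0 degrees
example_group = lld_group(pre_lld=True, post_lld=False)  # group 3
```

Under this reading, a limb that moves from 8° varus to 2° valgus is credited with a 10° correction rather than 6°, which is the behavior the sentence about varus-to-valgus changes appears to call for.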

Results

Of the 73 enrolled patients, 2 were excluded from results analysis because of inadequate data—one did not complete the postoperative LLD survey, and the other did not have postoperative standing mechanical axis radiographs—leaving 71 patients (27 men, 44 women) with adequate data. Mean (SD) age of all patients was 65 (8.4) years (range, 47-89 years). Mean (SD) BMI was 35.1 (9.9; range, 20.2-74.8).

Of the 71 patients with adequate data, 18 had preoperative perceived LLD and 53 did not; in addition, 7 had postoperative perceived LLD and 64 did not. All 7 patients with postoperative perceived LLD noted resolution of LLD, at a mean of 8.5 weeks (range, 3 weeks-3 months). There was a significant difference between the 18 patients with preoperative perceived LLD and the 7 with postoperative perceived LLD (P = .035, analyzed with the McNemar test).
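The McNemar comparison depends only on the patients whose perception changed. The Discussion states that 1 of the 18 patients with preoperative perceived LLD still perceived it after surgery and that surgery introduced perceived LLD in 6 patients, which gives 17 and 6 discordant pairs. The sketch below computes a two-sided exact (binomial) McNemar P value from those counts. The article does not say which variant of the test was used, so the exact form is an assumption; the function name is hypothetical.

```python
from math import comb

def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar P value from the two discordant-pair counts.

    Under the null hypothesis, each discordant pair is equally likely
    to fall in either direction, so min(b, c) ~ Binomial(b + c, 0.5).
    """
    n = b + c
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)

resolved = 18 - 1    # preoperative perceived LLD only (from the Discussion)
new_onset = 7 - 1    # postoperative perceived LLD only (from the Discussion)
p_value = mcnemar_exact(resolved, new_onset)
print(f"{p_value:.3f}")  # prints 0.035
```

With these counts the exact test agrees with the reported P = .035, whereas the continuity-corrected chi-square form would give a slightly different value.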

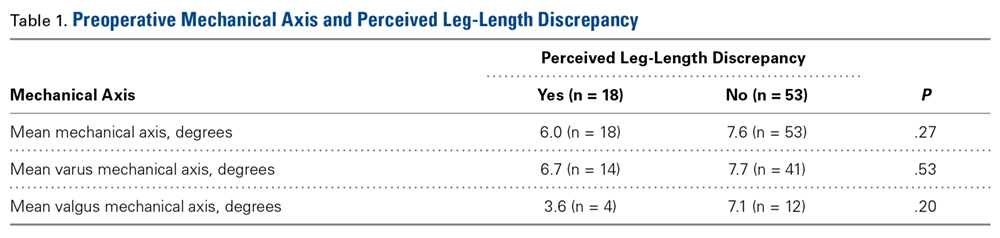

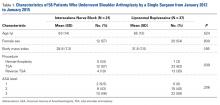

Table 1 lists the mean preoperative mechanical axis measurements for patients with and without preoperative perceived LLD.

Table 2 lists the mean postoperative mechanical axis measurements for patients with and without postoperative perceived LLD.

Table 3 lists the mean absolute values of mechanical axis correction (preoperative to postoperative) for the 4 patient groups described in the Methods section.

Discussion

In this study, 18 patients (25%) had preoperative perceived LLD, indicating that perceived LLD is common in patients who undergo TKA for unilateral osteoarthritis. Surgeons should give their patients a preoperative survey on perceived LLD, as survey responses may inform and influence surgical decisions and strategies.

Of the 18 patients with preoperative perceived LLD, only 1 had postoperative perceived LLD. That perceived LLD decreased after surgery makes sense given the widely accepted notion that actual LLD is common before primary TKA but in most cases is corrected during surgery.4,5 As LLD correction during surgery is so successful, surgeons should tell their patients with preoperative perceived LLD that in most cases it will be fixed after TKA.

Although the incidence of perceived LLD decreased after TKA (as mentioned earlier), the decrease seemed to be restricted mostly to patients with preoperative perceived LLD, and the underlying LLD was most probably corrected by the surgery. However, surgery introduced perceived LLD in 6 cases, supporting the notion that it is crucial to understand which patients are at higher risk for postoperative perceived LLD and what if any time frame can be expected for resolution in these cases. In our study, all cases of perceived LLD had resolved by a mean follow-up of 8.5 weeks (range, 3 weeks-3 months). This phenomenon of resolution may be attributed to some of the physical, objective LLD corrections that naturally occur throughout the postoperative course,4 though psychological factors may also be involved. Our study results suggest patients should be counseled that, though about 10% of patients perceive LLD after primary TKA, the vast majority of perceived LLD cases resolve within 3 months.

One study goal was to determine the relationship between the mechanical axis of the knee and perceived LLD both before and after surgery. There were no significant relationships. This was also true when cases of varus and valgus deformity were analyzed separately.

Another study goal was to determine if a surgical change in the mechanical alignment of the knee would influence preoperative-to-postoperative LLD perceptions. In our analysis, patients were divided into 4 groups based on their preoperative and postoperative LLD perceptions (see Methods section). ANOVA revealed no significant differences in absolute values of mechanical axis correction among the 4 groups. Likewise, there were no correlations between BMI and age and mechanical axis correction among the groups, suggesting LLD perception is unrelated to any of these variables. Ideally, if a relationship between a threshold knee alignment value and perceived LLD existed, surgeons would be able to counsel patients at higher risk for perceived LLD about how their knee alignment may contribute to their perception. Unfortunately, our study results did not show any significant statistical relationships in this regard.

The problem of LLD in patients undergoing TKA is not new, and much research is needed to determine the correlation between perceived versus actual discrepancies, and why they occur. Our study results confirmed that TKA corrects most cases of preoperative perceived LLD but introduces perceived LLD in other cases. Whether preoperative or postoperative LLD is merely perceived or is in fact an actual discrepancy remains to be seen.

One limitation of this study was its lack of leg-length measurements. Although we studied knee alignment specifically, it would have been useful to compare perceived LLD with measured leg lengths, either clinically or radiographically, especially since leg lengths obviously play a role in any perceived LLD. We used mechanical alignment as a surrogate for actual LLD because we hypothesized that alignment may contribute to patients’ perceived discrepancies.

Another limitation was the relatively small sample. Only 24 cases of perceived LLD were analyzed. Given our low rates of perceived LLD (25% before surgery, 10% after surgery), it is difficult to study a large enough TKA group to establish a statistically significant number of cases. Nevertheless, investigators may use larger groups to establish more meaningful relationships.

A third limitation was that alignment was measured on the operative side but not the contralateral side. As we were focusing on perceived discrepancy, contralateral knee alignment may play an important role. Our study involved patients with unilateral osteoarthritis, so it would be reasonable to assume the nonoperative knee was almost neutral in alignment in most cases. However, given that varus/valgus misalignment is a known risk factor for osteoarthritis,6 many of our patients with unilateral disease may very well have had preexisting misalignment of both knees. The undetermined alignment of the nonoperative side may be a confounding variable in the relationship between operative knee alignment and perceived LLD.

Fourth, not all patients were surveyed 3 weeks after surgery. Some were first surveyed at 6 weeks, and it is possible there were cases of transient postoperative LLD that resolved before that point. Therefore, our reported incidence of postoperative LLD could have missed some cases. In addition, our mean 8.5-week period for LLD resolution may not have accounted for these resolved cases of transient perceived LLD.

Am J Orthop. 2016;45(7):E429-E433. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.

1. O’Brien S, Kernohan G, Fitzpatrick C, Hill J, Beverland D. Perception of imposed leg length inequality in normal subjects. Hip Int. 2010;20(4):505-511.

2. Noll DR. Leg length discrepancy and osteoarthritic knee pain in the elderly: an observational study. J Am Osteopath Assoc. 2013;113(9):670-678.

3. Clark CR, Huddleston HD, Schoch EP 3rd, Thomas BJ. Leg-length discrepancy after total hip arthroplasty. J Am Acad Orthop Surg. 2006;14(1):38-45.

4. Chang MJ, Kang YG, Chang CB, Seong SC, Kim TK. The patterns of limb length, height, weight and body mass index changes after total knee arthroplasty. J Arthroplasty. 2013;28(10):1856-1861.

5. Lang JE, Scott RD, Lonner JH, Bono JV, Hunter DJ, Li L. Magnitude of limb lengthening after primary total knee arthroplasty. J Arthroplasty. 2012;27(3):341-346.

6. Sharma L, Song J, Dunlop D, et al. Varus and valgus alignment and incident and progressive knee osteoarthritis. Ann Rheum Dis. 2010;69(11):1940-1945.

Leg-length discrepancy (LLD) is common in the general population1 and particularly in patients with degenerative joint diseases of the hip and knee.2 Common complications of LLD include femoral, sciatic, and peroneal nerve palsy; lower back pain; gait abnormalities3; and general dissatisfaction. LLD is a concern for orthopedic surgeons who perform total knee arthroplasty (TKA) because limb lengthening is common after this procedure.4,5 Surgeons are aware of the limb lengthening that occurs during TKA,4,5 and studies have confirmed that LLD usually decreases after TKA.4,5

Despite surgeons’ best efforts, some patients still perceive LLD after surgery, though the incidence of perceived LLD in patients who have had TKA has not been well documented. Aside from actual, objectively measured LLD, there may be other factors that lead patients to perceive LLD. Study results have suggested that preoperative varus–valgus alignment of the knee joint may correlate with how much an operative leg is lengthened after TKA4,5; however, the outcome investigated was objective LLD measurements, not perceived LLD. Understanding the factors that may influence patients’ ability to perceive LLD would allow surgeons to preoperatively identify patients who are at higher risk for postoperative perceived LLD. This information, along with expected time to resolution of postoperative perceived LLD, would allow surgeons to educate their patients accordingly.

We conducted a study to determine the incidence of perceived LLD before and after primary TKA in patients with unilateral osteoarthritis and to determine the correlation between mechanical axis of the knee and perceived LLD before and after surgery. Given that surgery may correct mechanical axis misalignment, we investigated the correlation between this correction and its ability to change patients’ preoperative and postoperative perceived LLD. We hypothesized that a large correction of mechanical axis would lead patients to perceive LLD after surgery. The relationship of body mass index (BMI) and age to patients’ perceived LLD was also assessed. The incidence and time frame of resolution of postoperative perceived LLD were determined.

Methods

Approval for this study was received from the Institutional Review Board at our institution, Rush University Medical Center in Chicago, Illinois. Seventy-three patients undergoing primary TKA performed by 3 surgeons at 2 institutions between February 2010 and January 2013 were prospectively enrolled. Inclusion criteria were age 18 years to 90 years and primary TKA for unilateral osteoarthritis; exclusion criteria were allergy or intolerance to the study materials, operative treatment of affected joint or its underlying etiology within prior month, previous surgeries (other than arthroscopy) on affected joint, previous surgeries (on unaffected lower extremity) that may influence preoperative and postoperative leg lengths, and any substance abuse or dependence within the past 6 months. Patients provided written informed consent for total knee arthroplasty.

All surgeries were performed by Dr. Levine, Dr. Della Valle, and Dr. Sporer using the medial parapatellar or midvastus approach with tourniquet. Similar standard postoperative rehabilitation protocols with early mobilization were used in all cases.

During clinical evaluation, patient demographic data were collected and LLD surveys administered. Patients were asked, before surgery and 3 to 6 weeks, 3 months, 6 months, and 1 year after surgery, if they perceived LLD. A patient who no longer perceived LLD after surgery was no longer followed for this study.

At the preoperative clinic visit and at the 3-month or 6-week postoperative visit, standing mechanical axis radiographs were viewed by 2 of the authors (not the primary surgeons) using PACS (picture archiving and communication system software). The mechanical axis of the operative leg was measured with ImageJ software by taking the angle from the center of the femur to the middle of the ankle joint, with the vertex assigned to the middle of the knee joint.

We used a 2-tailed unpaired t test to determine the relationship of preoperative mechanical axis to perceived LLD (or lack thereof) before surgery. The data were analyzed for separate varus and valgus deformities. Then we determined the relationship of postoperative mechanical axis to perceived LLD (or lack thereof) after surgery. The McNemar test was used to determine the effect of surgery on patients’ LLD perceptions.

To determine the relationship between preoperative-to-postoperative change in mechanical axis and change in LLD perceptions, we divided patients into 4 groups. Group 1 had both preoperative and postoperative perceived LLD, group 2 had no preoperative or postoperative perceived LLD, group 3 had preoperative perceived LLD but no postoperative perceived LLD, and group 4 had postoperative perceived LLD but no preoperative perceived LLD. The absolute value of the difference between preoperative and postoperative mechanical axis was then determined, relative to 180°, to account for changes in varus to valgus deformity before and after surgery and vice versa. Analysis of variance (ANOVA) was used to detect differences between groups. This analysis was then stratified based on BMI and age.

Results

Of the 73 enrolled patients, 2 were excluded from results analysis because of inadequate data—one did not complete the postoperative LLD survey, and the other did not have postoperative standing mechanical axis radiographs—leaving 71 patients (27 men, 44 women) with adequate data. Mean (SD) age of all patients was 65 (8.4) years (range, 47-89 years). Mean (SD) BMI was 35.1 (9.9; range, 20.2-74.8).

Of the 71 patients with adequate data, 18 had preoperative perceived LLD and 53 did not; in addition, 7 had postoperative perceived LLD and 64 did not. All 7 patients with postoperative perceived LLD noted resolution of LLD, at a mean of 8.5 weeks (range, 3 weeks-3 months). There was a significant difference between the 18 patients with preoperative perceived LLD and the 7 with postoperative perceived LLD (P = .035, analyzed with the McNemar test).

Table 1 lists the mean preoperative mechanical axis measurements for patients with and without preoperative perceived LLD.

Table 2 lists the mean postoperative mechanical axis measurements for patients with and without postoperative perceived LLD.

Table 3 lists the mean absolute values of mechanical axis correction (preoperative to postoperative) for the 4 patient groups described in the Methods section.

Discussion

In this study, 18 patients (25%) had preoperative perceived LLD, proving that perceived LLD is common in patients who undergo TKA for unilateral osteoarthritis. Surgeons should give their patients a preoperative survey on perceived LLD, as survey responses may inform and influence surgical decisions and strategies.

Of the 18 patients with preoperative perceived LLD, only 1 had postoperative perceived LLD. That perceived LLD decreased after surgery makes sense given the widely accepted notion that actual LLD is common before primary TKA but in most cases is corrected during surgery.4,5 As LLD correction during surgery is so successful, surgeons should tell their patients with preoperative perceived LLD that in most cases it will be fixed after TKA.

Although the incidence of perceived LLD decreased after TKA (as mentioned earlier), the decrease seemed to be restricted mostly to patients with preoperative perceived LLD, and the underlying LLD was most probably corrected by the surgery. However, surgery introduced perceived LLD in 6 cases, supporting the notion that it is crucial to understand which patients are at higher risk for postoperative perceived LLD and what if any time frame can be expected for resolution in these cases. In our study, all cases of perceived LLD had resolved by a mean follow-up of 8.5 weeks (range, 3 weeks-3 months). This phenomenon of resolution may be attributed to some of the physical, objective LLD corrections that naturally occur throughout the postoperative course,4 though psychological factors may also be involved. Our study results suggest patients should be counseled that, though about 10% of patients perceive LLD after primary TKA, the vast majority of perceived LLD cases resolve within 3 months.

One study goal was to determine the relationship between the mechanical axis of the knee and perceived LLD both before and after surgery. There were no significant relationships. This was also true when cases of varus and valgus deformity were analyzed separately.

Another study goal was to determine if a surgical change in the mechanical alignment of the knee would influence preoperative-to-postoperative LLD perceptions. In our analysis, patients were divided into 4 groups based on their preoperative and postoperative LLD perceptions (see Methods section). ANOVA revealed no significant differences in absolute values of mechanical axis correction among the 4 groups. Likewise, there were no correlations between BMI and age and mechanical axis correction among the groups, suggesting LLD perception is unrelated to any of these variables. Ideally, if a relationship between a threshold knee alignment value and perceived LLD existed, surgeons would be able to counsel patients at higher risk for perceived LLD about how their knee alignment may contribute to their perception. Unfortunately, our study results did not show any significant statistical relationships in this regard.

The problem of LLD in patients undergoing TKA is not new, and much research is needed to determine the correlation between perceived versus actual discrepancies, and why they occur. Our study results confirmed that TKA corrects most cases of preoperative perceived LLD but introduces perceived LLD in other cases. Whether preoperative or postoperative LLD is merely perceived or is in fact an actual discrepancy remains to be seen.

One limitation of this study was its lack of leg-length measurements. Although we studied knee alignment specifically, it would have been useful to compare perceived LLD with measured leg lengths, either clinically or radiographically, especially since leg lengths obviously play a role in any perceived LLD. We used mechanical alignment as a surrogate for actual LLD because we hypothesized that alignment may contribute to patients’ perceived discrepancies.

Another limitation was the relatively small sample. Only 24 cases of perceived LLD were analyzed. Given our low rates of perceived LLD (25% before surgery, 10% after surgery), it is difficult to enroll a TKA group large enough to yield a sufficient number of cases for statistical analysis. Nevertheless, investigators may use larger groups to establish more meaningful relationships.

A third limitation was that alignment was measured on the operative side but not the contralateral side. As we were focusing on perceived discrepancy, contralateral knee alignment may play an important role. Our study involved patients with unilateral osteoarthritis, so it would be reasonable to assume the nonoperative knee was almost neutral in alignment in most cases. However, given that varus/valgus misalignment is a known risk factor for osteoarthritis,6 many of our patients with unilateral disease may very well have had preexisting misalignment of both knees. The undetermined alignment of the nonoperative side may be a confounding variable in the relationship between operative knee alignment and perceived LLD.

Fourth, not all patients were surveyed 3 weeks after surgery. Some were first surveyed at 6 weeks, and it is possible there were cases of transient postoperative perceived LLD that resolved before that point. Therefore, our reported incidence of postoperative perceived LLD may underestimate the true incidence. In addition, our mean 8.5-week period for LLD resolution may not have accounted for these resolved cases of transient perceived LLD.

Am J Orthop. 2016;45(7):E429-E433. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.

Leg-length discrepancy (LLD) is common in the general population1 and particularly in patients with degenerative joint diseases of the hip and knee.2 Common complications of LLD include femoral, sciatic, and peroneal nerve palsy; lower back pain; gait abnormalities3; and general dissatisfaction. LLD is a concern for orthopedic surgeons who perform total knee arthroplasty (TKA) because limb lengthening is common after this procedure.4,5 Surgeons are aware of the limb lengthening that occurs during TKA,4,5 and studies have confirmed that LLD usually decreases after TKA.4,5

Despite surgeons’ best efforts, some patients still perceive LLD after surgery, though the incidence of perceived LLD in patients who have had TKA has not been well documented. Aside from actual, objectively measured LLD, there may be other factors that lead patients to perceive LLD. Study results have suggested that preoperative varus–valgus alignment of the knee joint may correlate with how much an operative leg is lengthened after TKA4,5; however, the outcome investigated was objective LLD measurements, not perceived LLD. Understanding the factors that may influence patients’ ability to perceive LLD would allow surgeons to preoperatively identify patients who are at higher risk for postoperative perceived LLD. This information, along with expected time to resolution of postoperative perceived LLD, would allow surgeons to educate their patients accordingly.

We conducted a study to determine the incidence of perceived LLD before and after primary TKA in patients with unilateral osteoarthritis and to determine the correlation between mechanical axis of the knee and perceived LLD before and after surgery. Given that surgery may correct mechanical axis misalignment, we also investigated whether the magnitude of this correction was associated with a change in patients’ perceived LLD from before to after surgery. We hypothesized that a large correction of mechanical axis would lead patients to perceive LLD after surgery. The relationship of body mass index (BMI) and age to patients’ perceived LLD was also assessed. The incidence and time frame of resolution of postoperative perceived LLD were determined.

Methods

Approval for this study was received from the Institutional Review Board at our institution, Rush University Medical Center in Chicago, Illinois. Seventy-three patients undergoing primary TKA performed by 3 surgeons at 2 institutions between February 2010 and January 2013 were prospectively enrolled. Inclusion criteria were age 18 to 90 years and primary TKA for unilateral osteoarthritis; exclusion criteria were allergy or intolerance to the study materials, operative treatment of the affected joint or its underlying etiology within the prior month, previous surgeries (other than arthroscopy) on the affected joint, previous surgeries on the unaffected lower extremity that may influence preoperative and postoperative leg lengths, and any substance abuse or dependence within the past 6 months. Patients provided written informed consent for total knee arthroplasty.

All surgeries were performed by Dr. Levine, Dr. Della Valle, and Dr. Sporer using the medial parapatellar or midvastus approach with tourniquet. Similar standard postoperative rehabilitation protocols with early mobilization were used in all cases.

During clinical evaluation, patient demographic data were collected and LLD surveys administered. Patients were asked, before surgery and 3 to 6 weeks, 3 months, 6 months, and 1 year after surgery, if they perceived LLD. A patient who no longer perceived LLD after surgery was no longer followed for this study.

At the preoperative clinic visit and at the 6-week or 3-month postoperative visit, standing mechanical axis radiographs were viewed by 2 of the authors (not the primary surgeons) using picture archiving and communication system (PACS) software. The mechanical axis of the operative leg was measured with ImageJ software by taking the angle from the center of the femur to the middle of the ankle joint, with the vertex assigned to the middle of the knee joint.
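The geometry of this measurement can be sketched in a few lines of Python. This is only an illustration of a three-point angle with the vertex at the knee, not the authors' ImageJ workflow; the function name, the landmark tuples, and the pixel coordinates are hypothetical.

```python
import math

def mechanical_axis_angle(femur, knee, ankle):
    """Unsigned angle (degrees) at the knee vertex between the rays
    knee->femur and knee->ankle. Landmarks are (x, y) pixel coordinates
    picked on the radiograph (hypothetical inputs for illustration)."""
    v1 = (femur[0] - knee[0], femur[1] - knee[1])
    v2 = (ankle[0] - knee[0], ankle[1] - knee[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2 on |cross| and dot stays numerically stable near 180 degrees.
    return math.degrees(math.atan2(abs(cross), dot))

# Perfectly collinear landmarks give a neutral axis of 180 degrees.
neutral = mechanical_axis_angle((0, 0), (0, 100), (0, 200))

# Ankle offset by 10 px over a 100 px tibia: roughly 5.7 degrees off neutral.
deviated = mechanical_axis_angle((0, 0), (0, 100), (10, 200))
```

Because the angle is unsigned, this sketch alone cannot tell varus from valgus; the side of the deviation would have to be recorded separately (for example from the sign of the cross product and the laterality of the limb).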

We used a 2-tailed unpaired t test to determine the relationship of preoperative mechanical axis to perceived LLD (or lack thereof) before surgery. The data were also analyzed separately for varus and valgus deformities. Then we determined the relationship of postoperative mechanical axis to perceived LLD (or lack thereof) after surgery. The McNemar test was used to determine the effect of surgery on patients’ LLD perceptions.

To determine the relationship between preoperative-to-postoperative change in mechanical axis and change in LLD perceptions, we divided patients into 4 groups. Group 1 had both preoperative and postoperative perceived LLD, group 2 had no preoperative or postoperative perceived LLD, group 3 had preoperative perceived LLD but no postoperative perceived LLD, and group 4 had postoperative perceived LLD but no preoperative perceived LLD. The absolute value of the difference between preoperative and postoperative mechanical axis was then determined, relative to 180°, to account for changes in varus to valgus deformity before and after surgery and vice versa. Analysis of variance (ANOVA) was used to detect differences between groups. This analysis was then stratified based on BMI and age.
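The grouping and the correction measure described above could be expressed roughly as follows. This is a sketch under stated assumptions: the function names are hypothetical, and it assumes each mechanical axis angle is recorded on one continuous scale on which 180° is neutral and varus and valgus fall on opposite sides of 180°, so that a change from one deformity to the other is counted in full.

```python
def lld_group(pre_perceived, post_perceived):
    """Return the study group (1-4) from two booleans:
    perceived LLD before surgery and perceived LLD after surgery."""
    if pre_perceived and post_perceived:
        return 1  # perceived LLD both before and after surgery
    if not pre_perceived and not post_perceived:
        return 2  # no perceived LLD at either time
    if pre_perceived:
        return 3  # preoperative only
    return 4      # postoperative only

def axis_correction(pre_deg, post_deg, neutral=180.0):
    """Absolute change in mechanical axis, using signed deviations from
    neutral (assumed convention, see lead-in)."""
    pre_dev = pre_deg - neutral
    post_dev = post_deg - neutral
    return abs(pre_dev - post_dev)

# Hypothetical patient: 8 degrees on one side of neutral before surgery,
# 2 degrees on the other side after surgery, so the correction is 10 degrees.
example = axis_correction(172.0, 182.0)
```

Under this convention the result equals the plain absolute difference of the two angles; the reference to 180° matters only if angles are stored as unsigned deviations, in which case a sign would need to be attached before subtracting.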

Results

Of the 73 enrolled patients, 2 were excluded from results analysis because of inadequate data (one did not complete the postoperative LLD survey, and the other did not have postoperative standing mechanical axis radiographs), leaving 71 patients (27 men, 44 women) with adequate data. Mean (SD) age of all patients was 65 (8.4) years (range, 47-89 years). Mean (SD) BMI was 35.1 (9.9) (range, 20.2-74.8).

Of the 71 patients with adequate data, 18 had preoperative perceived LLD and 53 did not; in addition, 7 had postoperative perceived LLD and 64 did not. All 7 patients with postoperative perceived LLD noted resolution of LLD, at a mean of 8.5 weeks (range, 3 weeks-3 months). The proportion of patients with perceived LLD differed significantly between the preoperative (18/71) and postoperative (7/71) assessments (P = .035, analyzed with the McNemar test).
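As a worked check of this comparison, the paired counts can be reconstructed from the text: the Discussion states that only 1 of the 18 patients with preoperative perceived LLD still perceived it after surgery, so 17 patients changed from "yes" to "no" and 6 changed from "no" to "yes". The Python sketch below applies an exact (binomial) McNemar test to those discordant pairs. Which variant of the McNemar test the authors used is not stated, so the choice of the exact version and the function name are assumptions.

```python
from math import comb

def mcnemar_exact(b, c):
    """Two-sided exact McNemar P value from the discordant pair counts:
    b = changed yes -> no, c = changed no -> yes.
    Under the null hypothesis, min(b, c) follows Binomial(b + c, 0.5)."""
    n = b + c
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)

# Discordant pairs reconstructed from the reported counts (see lead-in).
p_value = mcnemar_exact(17, 6)
print(round(p_value, 3))  # prints 0.035
```

Only the discordant pairs enter the test; the 1 patient with perceived LLD at both times and the 47 with none at either time do not affect the result.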

Table 1 lists the mean preoperative mechanical axis measurements for patients with and without preoperative perceived LLD.

Table 2 lists the mean postoperative mechanical axis measurements for patients with and without postoperative perceived LLD.

Table 3 lists the mean absolute values of mechanical axis correction (preoperative to postoperative) for the 4 patient groups described in the Methods section.

Discussion

In this study, 18 patients (25%) had preoperative perceived LLD, indicating that perceived LLD is common in patients who undergo TKA for unilateral osteoarthritis. Surgeons should give their patients a preoperative survey on perceived LLD, as survey responses may inform and influence surgical decisions and strategies.

Of the 18 patients with preoperative perceived LLD, only 1 had postoperative perceived LLD. That perceived LLD decreased after surgery makes sense given the widely accepted notion that actual LLD is common before primary TKA but in most cases is corrected during surgery.4,5 Because LLD correction during surgery is so often successful, surgeons should tell their patients with preoperative perceived LLD that in most cases it resolves after TKA.

1. O’Brien S, Kernohan G, Fitzpatrick C, Hill J, Beverland D. Perception of imposed leg length inequality in normal subjects. Hip Int. 2010;20(4):505-511.