Diversity, equity, and inclusion in reproductive health care

These barriers represent inequality in access to reproductive medical services.

These challenges are also seen in other reproductive disorders such as polycystic ovary syndrome (PCOS), fibroids, and endometriosis. It is estimated that fewer than 25% of individuals with infertility in the United States access the resources required to meet their treatment needs (Fertil Steril. 2015 Nov;104(5):1104-10. doi: 10.1016/j.fertnstert.2015.07.113).

In 2020, the American Society for Reproductive Medicine (ASRM) created a task force on Diversity, Equity, and Inclusion (DEI) chaired by Board Member Michael A. Thomas, MD. Two years later, the ASRM elevated this task force to a committee that is now chaired by Gloria Richard-Davis, MD. As health care systems and societies increasingly recognize these obstacles to care, I invited Dr. Thomas, the current president of the ASRM, to address this vital concern. Dr. Thomas is professor and chair, department of obstetrics and gynecology, at the University of Cincinnati.

While not limited to reproductive health care, how prevalent is the lack of DEI and what factors contribute to this problem?

When we established the initial ASRM DEI task force, we wanted to look at DEI issues within our profession and as an access-to-care initiative. We found that ASRM and ABOG (American Board of Obstetrics and Gynecology) were not asking questions about the makeup of our REI (Reproductive Endocrinology & Infertility) providers, nursing staff, and lab personnel. We had some older data from 2018 about the REI fellowships. Since that time, there appears to be an upward trend of people of color in REI fellowships.

We still need more data on the makeup of all employees in academic, hybrid, and private REI practices. The goal would be to increase the number of people of color in all aspects of our field.

As far as access to care, we know that people of color do not undergo ART (assisted reproductive technology) procedures at the same rate. This could be due to affordability, slower and/or later referral patterns, and personal stigma. Even in mandated states, people of color are seen by IVF providers in lower numbers. We need a better understanding of access-to-care issues, especially when affordability is not a problem, and of the barriers facing our LGBTQ+ patients.

Can you provide information about actions by the ASRM DEI task force and any plans for the future?

The DEI task force is now an ASRM committee. This committee is chaired by Dr. Gloria Richard-Davis and continues to work on increasing the number of people of color in the REI workforce and on understanding and reducing the access-to-care issues faced by people of color and members of the LGBTQ+ community.

What can physicians do at the local, state, and national level to support DEI?

All REI and ob.gyn. physicians can work with insurance companies to address the current barriers that stand in the way of patients who want to have a family. For example, physicians can work with insurers to drop the requirement that infertility be defined as 1 year of exposure to sperm before fertility coverage can take effect. Also, mandated insurance coverage in all 50 states would require even smaller companies to offer this benefit to patients.

Many people of color work in smaller companies that, unfortunately, are not required to offer IVF coverage even in states where mandated insurance coverage is available. In encouraging news, ASRM, RESOLVE (The National Infertility Association), and other patient advocacy groups are working with each state to help enact fertility mandates.

Which group, if any, has been most negatively affected by a lack of DEI?

People of color, LGBTQ+ communities, people with disabilities, single individuals, and those with income challenges are the most likely to be affected by adverse DEI policies.

While it is long overdue, why do you believe DEI has become such a touchstone and pervasive movement at this time?

This is the million-dollar question. After the death of George Floyd, there was a global re-examination of how people of color were treated in every aspect of society. ASRM was the first to start this DEI initiative in women’s health.

ASRM and its patient advocacy partners are working with every nonmandated state toward the goal of passing infertility legislation to dramatically reduce the financial burden on all patients. We are starting to see more states either coming on board with mandates or at least discussing the possibilities. ASRM and RESOLVE have seen some recent positive outcomes with improved insurance for military families and government workers.

We can all agree that access to infertility treatment, particularly IVF, is not equivalent among different racial/ethnic populations. Part of the ASRM DEI task force’s mission is to evaluate research on IVF outcomes and race/ethnicity. Can you share why pregnancy outcomes would be included to potentially improve DEI?

More research needs to be done on pregnancy outcomes in women of color. We know that women of color have a decreased pregnancy rate in ART cycles even when controlling for age and other factors. We also know that birth outcomes are worse in these women. We need a better understanding of this problem in women of color, especially African American women.

Estimates are that more than one in eight LGBTQ+ patients live in states where physicians can refuse to treat them. Consequently, how can we improve DEI in these regions?

As someone with a number of family members in the LGBTQ+ community, this is a problem that is close to my heart. There appear to be many barriers being built to disenfranchise our LGBTQ+ community members. It is up to ASRM and patient advocacy groups to work with legislators to pass more inclusive laws, and it is up to insurance companies to update their definitions of infertility to be more inclusive for all.

Any final comments?

Everyone should have the right to become a parent whether they want to now or in the future!

Dr. Trolice is director of The IVF Center in Winter Park, Fla., and professor of obstetrics and gynecology at the University of Central Florida, Orlando.

Does an elevated TSH value always require therapy?

Thyroxine (L-thyroxine) is one of the 10 most frequently prescribed medications. “One large health insurance company ranks thyroid hormone fourth on its list of best-selling medications in the United States. It is possibly the second most commonly prescribed preparation,” said Joachim Feldkamp, MD, PhD, director of the University Clinic for General Internal Medicine, Endocrinology, Diabetology, and Infectious Diseases at Central Hospital, Bielefeld, Germany, at the online press conference for the German Society of Endocrinology’s hormone week.

Thyroid hormone is prescribed when the thyroid gland produces too little of it. The pituitary hormone thyroid-stimulating hormone (TSH) is used as a screening parameter to assess thyroid function. An elevated TSH level can indicate that too little thyroid hormone is being produced.

“But this does not mean that an underactive thyroid gland is hiding behind every elevated TSH value,” said Dr. Feldkamp. Normally, the TSH value lies between 0.3 and 4.2 mU/L. “Hypothyroidism, as it’s known, is formally present if the TSH value lies above the upper limit of 4.2 mU/L,” said Dr. Feldkamp.
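To make the quoted cutoffs concrete, here is a minimal Python sketch that places a single TSH reading relative to the 0.3-4.2 mU/L range Dr. Feldkamp cites. The constant and function names are illustrative assumptions. As the article goes on to explain, a reading above the range does not by itself establish hypothyroidism or justify treatment.

```python
# Reference range quoted in the article; laboratories may use different limits.
TSH_LOWER_MU_PER_L = 0.3
TSH_UPPER_MU_PER_L = 4.2


def classify_tsh(tsh_mu_per_l: float) -> str:
    """Return where a single TSH reading (mU/L) falls relative to the quoted range."""
    if tsh_mu_per_l < TSH_LOWER_MU_PER_L:
        return "below range"
    if tsh_mu_per_l > TSH_UPPER_MU_PER_L:
        # Formally above the upper limit; per the article, recheck before acting.
        return "above range"
    return "within range"


for value in (0.1, 2.5, 4.2, 5.8):
    print(value, classify_tsh(value))
```

The upper limit is treated as inclusive because the article defines the formal threshold as a value lying "above" 4.2 mU/L.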

Check again

However, not every elevated TSH value needs to be treated immediately. “From large-scale investigations, we know that TSH values are subject to fluctuations,” said Dr. Feldkamp. Individual measurements must therefore be taken with a grain of salt and almost never justify a therapeutic decision on their own. A slightly elevated TSH value should be checked again 2-6 months later, and the patient should be asked whether they are experiencing any symptoms. “In 50%-60% of cases, the TSH value normalized at the second checkup without requiring any treatment,” Dr. Feldkamp explained.

The TSH value can be elevated for several reasons:

- Fluctuations depending on the time of day. At night and early in the morning, the TSH value is much higher than in the afternoon. An acute lack of sleep can lead to higher TSH values in the morning.

- Fluctuations depending on the time of year. In winter, TSH values are slightly higher than in the summer owing to adaptation to cooler temperatures. Researchers in the Arctic, for example, have significantly higher TSH values than people who live in warmer regions.

- Age-dependent differences. Children and adolescents have higher TSH values than adults do. Adult reference values cannot be applied to adolescents, because this would lead to incorrect treatment. In addition, TSH values increase with age, and slightly elevated values in people aged 70-80 years are initially no cause for treatment. Caution is advised when treating older patients, because overtreatment can lead to cardiac arrhythmias and a decrease in bone density.

- Sex-specific differences. The TSH values of women are generally a little higher than those of men.

- Obesity. In obesity, TSH increases and often exceeds the normal values usually recorded in persons of normal weight. The elevated values do not reflect a state of hypofunction but rather the body’s adjustment mechanism. If these patients lose weight, the TSH values will drop spontaneously. Slightly elevated TSH values in obese people should not be treated with thyroid hormones.

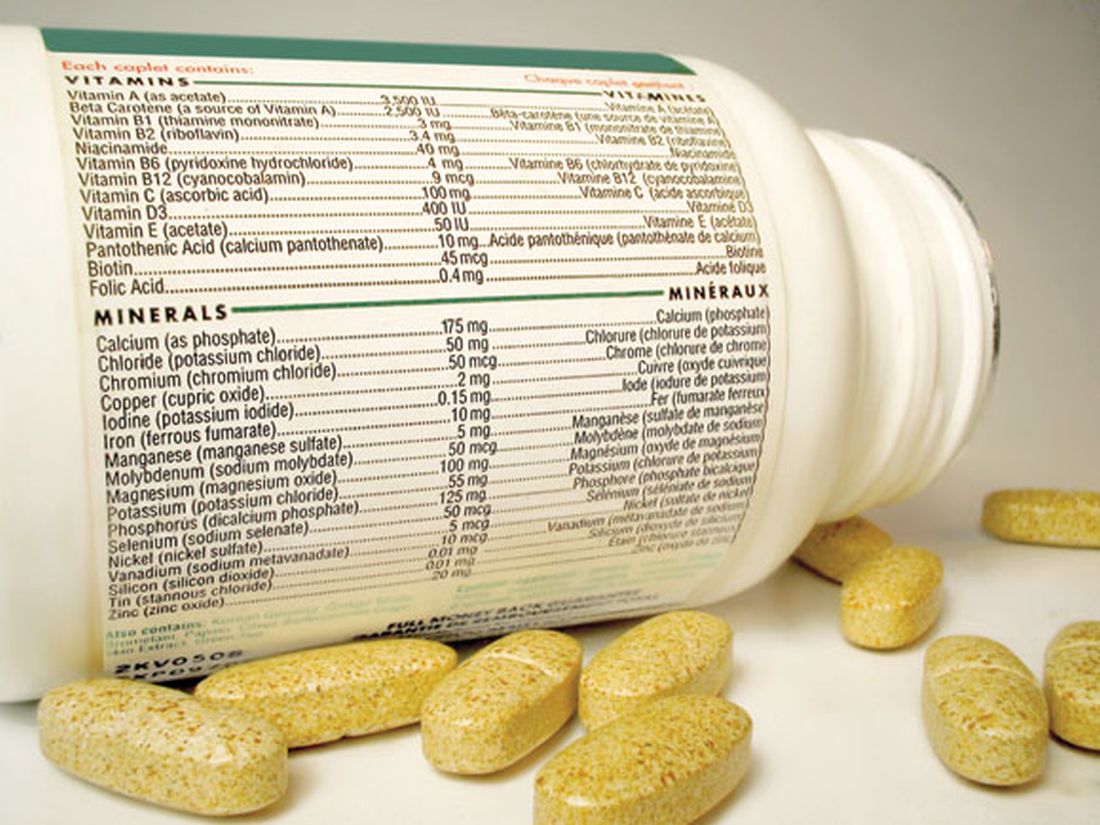

The nutritional supplement biotin (vitamin H or vitamin B7), which is often taken for skin, hair, and nail growth disorders, can distort measured values. In many laboratory methods, biotin interferes with the test reagents, which can produce falsely high or falsely low TSH values. After high doses of biotin (for example, 10 mg), there should be a pause of at least 3 days (ideally 1 week) before TSH is measured.
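The pause can be expressed as simple date arithmetic. The sketch below is a hypothetical helper, not any laboratory's protocol. It assumes the 3-day minimum and 1-week preferred pause are counted as whole calendar days after the last high-dose biotin intake. Whether a given dose counts as "high" is left to the caller, because the article gives 10 mg only as an example.

```python
from datetime import date, timedelta

# Pauses quoted in the text for high-dose biotin (e.g., 10 mg).
MIN_PAUSE_DAYS = 3        # "at least a 3-day pause"
PREFERRED_PAUSE_DAYS = 7  # "ideally a pause of 1 week"


def earliest_tsh_dates(last_biotin_dose: date) -> tuple[date, date]:
    """Return (earliest acceptable, preferred) TSH measurement dates.

    Hypothetical helper: counts the pause as whole calendar days after the
    last high-dose biotin intake.
    """
    return (
        last_biotin_dose + timedelta(days=MIN_PAUSE_DAYS),
        last_biotin_dose + timedelta(days=PREFERRED_PAUSE_DAYS),
    )


earliest, preferred = earliest_tsh_dates(date(2024, 3, 1))
print(earliest, preferred)
```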

Hasty prescriptions

“Sometimes, because of the assumption that every high TSH value is due to sickness-related hypothyroidism, thyroid hormones can be prescribed too quickly,” said Dr. Feldkamp. This is also true for patients with thyroid nodules due to iodine deficiency, who are often still treated with thyroid hormones.

“These days, because we [in Germany] are generally an iodine-deficient nation, iodine would potentially be given in combination with thyroid hormones, but thyroid hormones would not be given alone. There are lots of patients who have been taking thyroid hormones for 30 or 40 years because of thyroid nodules. That should definitely be reviewed,” said Dr. Feldkamp.

When to treat?

Dr. Feldkamp does not consider routine TSH testing sensible. Instead, he advises clinicians to test patients who have newly occurring symptoms, such as weight gain, impaired weight regulation despite reduced appetite, depression, or an increased need for sleep.

If symptoms are present, thyroid function should be investigated further. “This includes determination of the free thyroid hormones T3 and T4; detection of antibodies against the body’s own thyroid tissue, such as TPO-Ab [antibody against thyroid peroxidase], TG-Ab [antibody against thyroglobulin], and TRAb [antibody against the TSH receptor]; and ultrasound examination of the thyroid gland,” said Dr. Feldkamp. Autoimmune hypothyroidism (Hashimoto’s thyroiditis) is the most common cause of an elevated TSH level.

Treatment is indicated in the following situations:

- In young patients with TSH values greater than 10 mU/L;

- In young (< 65 years) symptomatic patients with TSH values of 4 to less than 10 mU/L;

- With elevated TSH values that result from thyroid surgery or radioactive iodine therapy;

- In patients with a diffusely enlarged or severely nodular thyroid gland;

- In pregnant women with elevated TSH values.
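The list can be read as a simple decision rule. The Python sketch below encodes it under several assumptions:

- The class and function names are hypothetical.
- "Young" means under 65 years for both TSH bands.
- "Elevated" means above the 4.2 mU/L upper limit given earlier.
- The enlarged or nodular gland criterion applies only when TSH is elevated.

The sketch leaves out the repeat measurement and the diagnostic work-up described earlier, so it only illustrates the list.

```python
from dataclasses import dataclass

UPPER_LIMIT_MU_PER_L = 4.2  # upper reference limit quoted earlier in the article
YOUNG_AGE_LIMIT = 65        # "young (< 65 years)", assumed to apply to both TSH bands


@dataclass
class ThyroidCase:
    age: int
    tsh_mu_per_l: float
    symptomatic: bool = False
    after_surgery_or_radioiodine: bool = False
    enlarged_or_nodular_gland: bool = False
    pregnant: bool = False


def treatment_indicated(case: ThyroidCase) -> bool:
    """Check a case against the five listed situations (simplified sketch)."""
    young = case.age < YOUNG_AGE_LIMIT
    elevated = case.tsh_mu_per_l > UPPER_LIMIT_MU_PER_L
    return any((
        young and case.tsh_mu_per_l > 10,
        young and case.symptomatic and 4 <= case.tsh_mu_per_l < 10,
        elevated and case.after_surgery_or_radioiodine,
        # Assumption: this criterion applies in the context of an elevated TSH.
        elevated and case.enlarged_or_nodular_gland,
        elevated and case.pregnant,
    ))


print(treatment_indicated(ThyroidCase(age=40, tsh_mu_per_l=12.0)))
print(treatment_indicated(ThyroidCase(age=75, tsh_mu_per_l=6.0)))
```

In the second call, a 75-year-old with a TSH of 6.0 mU/L and no other listed feature meets none of the criteria. This is consistent with the article's point that slightly elevated values in older people are initially no cause for treatment.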

This article was translated from Medscape’s German Edition and a version appeared on Medscape.com.

Thyroxine and L-thyroxine are two of the 10 most frequently prescribed medicinal products. “One large health insurance company ranks thyroid hormone at fourth place in the list of most-sold medications in the United States. It is possibly the second most commonly prescribed preparation,” said Joachim Feldkamp, MD, PhD, director of the University Clinic for General Internal Medicine, Endocrinology, Diabetology, and Infectious Diseases at Central Hospital, Bielefeld, Germany, at the online press conference for the German Society of Endocrinology’s hormone week.

The preparation is prescribed when the thyroid gland produces too little thyroid hormone. The messenger substance thyroid-stimulating hormone (TSH) is used as a screening value to assess thyroid function. An increase in TSH can indicate that too little thyroid hormone is being produced.

“But this does not mean that an underactive thyroid gland is hiding behind every elevated TSH value,” said Dr. Feldkamp. Normally, the TSH value lies between 0.3 and 4.2 mU/L. “Hypothyroidism, as it’s known, is formally present if the TSH value lies above the upper limit of 4.2 mU/L,” said Dr. Feldkamp.

Check again

However, not every elevated TSH value needs to be treated immediately. “From large-scale investigations, we know that TSH values are subject to fluctuations,” said Dr. Feldkamp. Individual measurements must therefore be taken with a grain of salt and almost never justify a therapeutic decision. Therefore, a slightly elevated TSH value should be checked again 2-6 months later, and the patient should be asked if they are experiencing any symptoms. “In 50%-60% of cases, the TSH value normalized at the second checkup without requiring any treatment,” Dr. Feldkamp explained.

The TSH value could be elevated for several reasons:

- Fluctuations depending on the time of day. At night and early in the morning, the TSH value is much higher than in the afternoon. An acute lack of sleep can lead to higher TSH values in the morning.

- Fluctuations depending on the time of year. In winter, TSH values are slightly higher than in the summer owing to adaptation to cooler temperatures. Researchers in the Arctic, for example, have significantly higher TSH values than people who live in warmer regions.

- Age-dependent differences. Children and adolescents have higher TSH values than adults do. The TSH values of adolescents cannot be based on those of adults because this would lead to incorrect treatment. In addition, TSH values increase with age, and slightly elevated values are initially no cause for treatment in people aged 70-80 years. Caution is advised during treatment, because overtreatment can lead to cardiac arrhythmias and a decrease in bone density.

- Sex-specific differences. The TSH values of women are generally a little higher than those in men.

- Obesity. In obesity, TSH increases and often exceeds the normal values usually recorded in persons of normal weight. The elevated values do not reflect a state of hypofunction but rather the body’s adjustment mechanism. If these patients lose weight, the TSH values will drop spontaneously. Slightly elevated TSH values in obese people should not be treated with thyroid hormones.

The nutritional supplement biotin (vitamin H or vitamin B7), which is often taken for skin, hair, and nail growth disorders, can distort measured values. In many of the laboratory methods used, the biotin competes with the test substances used. As a result, it can lead to falsely high and falsely low TSH values. At high doses of biotin (for example, 10 mg), there should be at least a 3-day pause (and ideally a pause of 1 week) before measuring TSH.

Hasty prescriptions

“Sometimes, because of the assumption that every high TSH value is due to sickness-related hypothyroidism, thyroid hormones can be prescribed too quickly,” said Dr. Feldkamp. This is also true for patients with thyroid nodules due to iodine deficiency, who are often still treated with thyroid hormones.

“These days, because we are generally an iodine-deficient nation, iodine would potentially be given in combination with thyroid hormones but not with thyroid hormones alone. There are lots of patients who have been taking thyroid hormones for 30 or 40 years due to thyroid nodules. That should definitely be reviewed,” said Dr. Feldkamp.

When to treat?

Dr. Feldkamp does not believe that standard determination of the TSH value is sensible and advises that clinicians examine patients with newly occurring symptoms, such as excess weight, impaired weight regulation despite reduced appetite, depression, or a high need for sleep.

If there are symptoms, the thyroid function must be clarified further. “This includes determination of free thyroid hormones T3 and T4; detection of antibodies against autologous thyroid tissue such as TPO-Ab [antibody against thyroid peroxidase], TG-Ab [antibody against thyroglobulin], and TRAb [antibody against TSH receptor]; and ultrasound examination of the metabolic organ,” said Dr. Feldkamp. Autoimmune-related hypothyroidism (Hashimoto’s thyroiditis) is the most common cause of an overly high TSH level.

Treatment should take place in the following situations:

- In young patients with TSH values greater than 10 mU/L;

- In young (< 65 years) symptomatic patients with TSH values of 4 to less than 10 mU/L;

- With elevated TSH values that result from thyroid surgery or radioactive iodine therapy;

- In patients with a diffuse enlarged or severely nodular thyroid gland

- In pregnant women with elevated TSH values.

This article was translated from Medscape’s German Edition and a version appeared on Medscape.com.

Thyroxine and L-thyroxine are two of the 10 most frequently prescribed medicinal products. “One large health insurance company ranks thyroid hormone at fourth place in the list of most-sold medications in the United States. It is possibly the second most commonly prescribed preparation,” said Joachim Feldkamp, MD, PhD, director of the University Clinic for General Internal Medicine, Endocrinology, Diabetology, and Infectious Diseases at Central Hospital, Bielefeld, Germany, at the online press conference for the German Society of Endocrinology’s hormone week.

The preparation is prescribed when the thyroid gland produces too little thyroid hormone. The messenger substance thyroid-stimulating hormone (TSH) is used as a screening value to assess thyroid function. An increase in TSH can indicate that too little thyroid hormone is being produced.

“But this does not mean that an underactive thyroid gland is hiding behind every elevated TSH value,” said Dr. Feldkamp. Normally, the TSH value lies between 0.3 and 4.2 mU/L. “Hypothyroidism, as it’s known, is formally present if the TSH value lies above the upper limit of 4.2 mU/L,” said Dr. Feldkamp.

Check again

However, not every elevated TSH value needs to be treated immediately. “From large-scale investigations, we know that TSH values are subject to fluctuations,” said Dr. Feldkamp. Individual measurements must therefore be taken with a grain of salt and almost never justify a therapeutic decision. Therefore, a slightly elevated TSH value should be checked again 2-6 months later, and the patient should be asked if they are experiencing any symptoms. “In 50%-60% of cases, the TSH value normalized at the second checkup without requiring any treatment,” Dr. Feldkamp explained.

The TSH value could be elevated for several reasons:

- Fluctuations depending on the time of day. At night and early in the morning, the TSH value is much higher than in the afternoon. An acute lack of sleep can lead to higher TSH values in the morning.

- Fluctuations depending on the time of year. In winter, TSH values are slightly higher than in the summer owing to adaptation to cooler temperatures. Researchers in the Arctic, for example, have significantly higher TSH values than people who live in warmer regions.

- Age-dependent differences. Children and adolescents have higher TSH values than adults do. Adult reference values cannot be applied to adolescents, because this would lead to incorrect treatment. In addition, TSH values increase with age, and slightly elevated values in people aged 70-80 years are initially no reason for treatment. Caution is advised during treatment, because overtreatment can lead to cardiac arrhythmias and a decrease in bone density.

- Sex-specific differences. Women generally have slightly higher TSH values than men.

- Obesity. In obesity, TSH increases and often exceeds the normal values usually recorded in persons of normal weight. The elevated values do not reflect a state of hypofunction but rather the body’s adjustment mechanism. If these patients lose weight, the TSH values will drop spontaneously. Slightly elevated TSH values in obese people should not be treated with thyroid hormones.

The nutritional supplement biotin (vitamin H or vitamin B7), which is often taken for skin, hair, and nail growth disorders, can distort measured values. In many laboratory assays, biotin interferes with the test reagents, which can produce falsely high or falsely low TSH values. After high doses of biotin (for example, 10 mg), there should be a pause of at least 3 days (ideally 1 week) before TSH is measured.

Hasty prescriptions

“Sometimes, because of the assumption that every high TSH value is due to disease-related hypothyroidism, thyroid hormones can be prescribed too quickly,” said Dr. Feldkamp. The same is true for patients with thyroid nodules caused by iodine deficiency, who are often still treated with thyroid hormones.

“These days, because we are generally an iodine-deficient nation, iodine would potentially be given in combination with thyroid hormones but not with thyroid hormones alone. There are lots of patients who have been taking thyroid hormones for 30 or 40 years due to thyroid nodules. That should definitely be reviewed,” said Dr. Feldkamp.

When to treat?

Dr. Feldkamp does not consider routine TSH testing sensible and advises that clinicians instead examine patients who present with new symptoms, such as excess weight, impaired weight regulation despite reduced appetite, depression, or a high need for sleep.

If there are symptoms, thyroid function must be investigated further. “This includes determination of free thyroid hormones T3 and T4; detection of antibodies against autologous thyroid tissue such as TPO-Ab [antibody against thyroid peroxidase], TG-Ab [antibody against thyroglobulin], and TRAb [antibody against TSH receptor]; and ultrasound examination of the thyroid gland,” said Dr. Feldkamp. Autoimmune hypothyroidism (Hashimoto’s thyroiditis) is the most common cause of an elevated TSH level.

Treatment should take place in the following situations:

- In young patients with TSH values greater than 10 mU/L;

- In young (< 65 years) symptomatic patients with TSH values of 4 to less than 10 mU/L;

- With elevated TSH values that result from thyroid surgery or radioactive iodine therapy;

- In patients with a diffusely enlarged or severely nodular thyroid gland;

- In pregnant women with elevated TSH values.

This article was translated from Medscape’s German Edition and a version appeared on Medscape.com.

Do new Alzheimer’s drugs get us closer to solving the Alzheimer’s disease riddle?

Two antiamyloid drugs were recently approved by the Food and Drug Administration for treating early-stage Alzheimer’s disease (AD). In trials of both lecanemab (Leqembi) and donanemab, a long-held neuropharmacologic dream was realized: Most amyloid plaques – the primary pathologic marker for AD – were eliminated from the brains of patients with late pre-AD or early AD.

Implications for the amyloid hypothesis

The reduction of amyloid plaques has been argued by many scientists and clinical authorities to be the likely pharmacologic solution for AD. These trials are appropriately viewed as a test of the hypothesis that amyloid bodies are a primary cause of the neurobehavioral symptoms we call AD.

In parallel with that striking reduction in amyloid bodies, drug-treated patients had an initially slower progression of neurobehavioral decline than did placebo-treated control patients. That slowing in symptom progression was accompanied by a modest but statistically significant difference in neurobehavioral ability. After several months of treatment, the rate of decline again paralleled that recorded in the control group. The sustained difference of about half a point on cognitive assessment scores separating treatment and control participants was well short of the 1.5-point difference typically considered clinically significant.

A small number of unexpected and unexplained deaths occurred in the treatment groups. Brain swelling and/or micro-hemorrhages were seen in 20%-30% of treated individuals. Significant brain shrinkage was recorded. These adverse findings are indicative of drug-induced trauma in the target organ for these drugs (i.e., the brain) and were the basis for a boxed warning label for drug usage. Antiamyloid drug treatment was not effective in patients who had higher initial numbers of amyloid plaques, indicating that these drugs would not measurably help the majority of AD patients, who are at more advanced disease stages.

These drugs do not appear to be an “answer” for AD. A modest delay in progression does not mean that we’re on a path to a “cure.” Treatment cost estimates are high – more than $80,000 per year. With requisite PET exams and high copays, patient accessibility issues will be daunting.

To the contrary, these results add strong support for the counterargument that the emergence of amyloid plaques is an effect, and not a fundamental cause, of the progressive loss of neurologic function that we ultimately define as “Alzheimer’s disease.”

Time to switch gears

The more obvious path to winning the battle against this human scourge is prevention. A recent analysis published in The Lancet argued that about 40% of AD and other dementias are potentially preventable. I disagree. I believe that 80%-90% of prospective cases can be substantially delayed or prevented. Studies have shown that progression to AD or other dementias is driven primarily by the progressive deterioration of organic brain health, expressed by the loss of what psychologists have termed “cognitive reserve.” Cognitive reserve is resilience arising from active brain usage, akin to physical resilience attributable to a physically active life. Scientific studies have shown us that an individual’s cognitive resilience (reserve) is a greater predictor of risk for dementia than are amyloid plaques – indeed, greater than any combination of pathologic markers in dementia patients.

Building up cognitive reserve

It’s increasingly clear to this observer that cognitive reserve is synonymous with organic brain health. The primary factors that underlie cognitive reserve are processing speed in the brain, executive control, response withholding, memory acquisition, reasoning, and attention abilities. Faster, more accurate brains are necessarily more physically optimized. They necessarily sustain brain system connectivity. They are necessarily healthier. Such brains bear a relatively low risk of developing AD or other dementias, just as physically healthier bodies bear a lower risk of being prematurely banished to semi-permanent residence in an easy chair or a bed.

Brain health can be sustained by deploying inexpensive, self-administered, app-based assessments of neurologic performance limits, which inform patients and their medical teams about general brain health status. These assessments can help doctors guide their patients to adopt more intelligent brain-healthy lifestyles, or direct them to the “brain gym” to progressively exercise their brains in ways that contribute to rapid, potentially large-scale, rejuvenating improvements in physical and functional brain health.

Randomized controlled trials incorporating different combinations of physical exercise, diet, and cognitive training have recorded significant improvements in physical and functional neurologic status, indicating substantially advanced brain health. Consistent moderate-to-intense physical exercise, brain- and heart-healthy eating habits, and, particularly, computerized brain training have repeatedly been shown to improve cognitive function and physically rejuvenate the brain. With the right forms of cognitive training, improvements in processing speed and other measures reflect improving brain health and greater safety.

In the National Institutes of Health–funded ACTIVE study with more than 2,800 older adults, just 10-18 hours of a specific speed-of-processing training (now part of BrainHQ, a program that I was involved in developing) reduced the probability of progression to dementia over the following 10 years by 29%, and by 48% in those who did the most training.

This approach is several orders of magnitude less expensive than the pricey new AD drugs. It raises far less serious accessibility issues and has no side effects. It delivers far more powerful therapeutic benefits in older normal and at-risk populations.

Sustained wellness supporting prevention is the far more sensible medical way forward to save people from AD and other dementias – at a far lower medical and societal cost.

Dr. Merzenich is professor emeritus, department of neuroscience, University of California, San Francisco. He reported conflicts of interest with Posit Science, Stronger Brains, and the National Institutes of Health.

A version of this article first appeared on Medscape.com.

Social media makes kids with type 1 diabetes feel less alone

After being diagnosed with type 1 diabetes in 2021, British teenager Johnny Bailey felt isolated. That’s when he turned to social media, where he found others living with type 1 diabetes. He began to share his experience and now has more than 329,000 followers on his TikTok account, where he regularly posts videos.

These include short clips of him demonstrating how he changes the FreeStyle Libre sensor for his flash glucose monitor. In the videos, which are set to background music, Johnny correctly places his sensor on the back of his arm, makes facial expressions, and transforms a dreaded diabetes-related task into an experience that appears fun and entertaining. In the limited number of videos I was able to review, he follows all the appropriate steps for sensor placement.

Many youths with type 1 diabetes struggle with living with a chronic medical condition. Because type 1 diabetes is relatively rare, affecting about 1 in 500 children in the United States, many youths may not meet anyone else their age with type 1 diabetes through school, social events, or extracurricular activities.

For adolescents with intensively managed conditions like type 1 diabetes, this can present numerous psychosocial challenges – specifically, many youth experience shame or stigma associated with managing type 1 diabetes.

Diabetes-specific tasks may include wearing an insulin pump, monitoring blood glucose with finger pricks or a continuous glucose monitor (CGM), giving injections of insulin before meals and snacks, adjusting times for meals and snacks based on metabolic needs, waking up in the middle of the night to treat high or low blood glucose – the list goes on and on.

One study estimated that the average time it takes a child with type 1 diabetes to perform diabetes-specific tasks is over 5 hours per day.

Although much of this diabetes management time is spent by parents, as children get older and become teenagers, they gradually take on more of this responsibility themselves. Wearing diabetes technology (insulin pumps and CGMs) can draw unwanted attention, leading to diabetes-specific body image concerns. Kids may also have to excuse themselves from an activity to treat a low or high blood glucose, creating uncomfortable situations when others ask why the activity was interrupted. As a result, many youths avoid managing their diabetes properly so as not to draw unwanted attention, consequently putting their health at risk.

Those who are afraid that placing a glucose sensor will be painful may be reassured by seeing Johnny place his sensor with a smile on his face. Some of his content also highlights other stigmatizing situations that teens may face, for example, someone with a judgmental look questioning why he needed to give an insulin injection in that setting.

This highlights an important concept – that people with type 1 diabetes may face criticism when dosing insulin in public, but it doesn’t mean they should feel forced to manage diabetes in private unless they choose to. Johnny is an inspirational individual who has bravely taken his type 1 diabetes experiences and used his creative skills to make these seemingly boring health-related tasks fun, interesting, and accessible.

Social media has become an outlet for people with type 1 diabetes to connect with others who can relate to their experiences.

However, there’s another side to consider. Although social media may provide a great source of support for youth, it may also adversely affect mental health. Just as quickly as social media outlets have grown, so has concern over excessive social media use and its impact on adolescents’ mental health. There’s a growing body of literature that describes the negative mental health aspects related to social media use.

Some adolescents struggling to manage type 1 diabetes may feel worse when seeing others thrive on social media, which has the potential to worsen stigma and shame. Youth may wonder how someone else is able to manage their type 1 diabetes so well when they are facing so many challenges.

Short videos on social media provide an incomplete picture of living with type 1 diabetes – just a glimpse into others’ lives, and only the parts that they want others to see. Managing a chronic condition can’t be fully represented in 10-second videos. And if youths choose to post their type 1 diabetes experiences on social media, they also risk receiving backlash or criticism, which can in turn negatively impact their mental health.

Furthermore, the content being posted may not always be accurate or educational, leading to the potential for some youth to misunderstand type 1 diabetes.

Although I wouldn’t discourage youth with type 1 diabetes from engaging on social media and viewing diabetes-related content, they need to know that social media is flooded with misinformation. Creating an open space for youth to ask their clinicians questions about type 1 diabetes–related topics they view on social media is vital to ensuring they are viewing accurate information, so they are able to continue to manage their diabetes safely.

As a pediatric endocrinologist, I sometimes share resources on social media with patients if I believe it will help them cope with their type 1 diabetes diagnosis and management. I have had numerous patients – many of whom have struggled to accept their diagnosis – mention with joy and excitement that they were following an organization addressing type 1 diabetes on social media.

When making suggestions, I may refer them to The Diabetes Link, an organization that offers resources for young adults with type 1 diabetes and a space to connect with peers who have the condition. diaTribe is another organization created and led by people with diabetes that has a plethora of resources and provides evidence-based education for patients. I have also shared Project 50-in-50, which highlights two individuals with type 1 diabetes hiking the highest peak in each state in fewer than 50 days. Being able to see type 1 diabetes in a positive light is a huge step toward a more positive outlook on diabetes management.

Dr. Nally is an assistant professor, department of pediatrics, and a pediatric endocrinologist, division of pediatric endocrinology, at Yale University, New Haven, Conn. She reported conflicts of interest with Medtronic and the National Institutes of Health.

A version of this article first appeared on Medscape.com.


As a pediatric endocrinologist, I sometimes share resources on social media with patients if I believe it will help them cope with their type 1 diabetes diagnosis and management. I have had numerous patients – many of whom have struggled to accept their diagnosis – mention with joy and excitement that they were following an organization addressing type 1 diabetes on social media.

When making suggestions, I may refer them to The Diabetes Link, an organization with resources for young adults with type 1 diabetes that creates a space to connect with other young adults with type 1 diabetes. diaTribe is another organization created and led by people with diabetes that has a plethora of resources and provides evidence-based education for patients. I have also shared Project 50-in-50, which highlights two individuals with type 1 diabetes hiking the highest peak in each state in less than 50 days. Being able to see type 1 diabetes in a positive light is a huge step toward a more positive outlook on diabetes management.

Dr. Nally is an assistant professor, department of pediatrics, and a pediatric endocrinologist, division of pediatric endocrinology, at Yale University, New Haven, Conn. She reported conflicts of interest with Medtronic and the National Institutes of Health.

A version of this article first appeared on Medscape.com.

After being diagnosed with type 1 diabetes in 2021, British teenager Johnny Bailey felt isolated. That’s when he turned to social media, where he found others living with type 1 diabetes. He began to share his experience and now has more than 329,000 followers on his TikTok account, where he regularly posts videos.

These include short clips of him demonstrating how he changes the FreeStyle Libre sensor for his flash glucose monitor. In the videos, which are set to background music, Johnny correctly places the sensor on the back of his arm, makes faces for the camera, and transforms a dreaded diabetes-related task into an experience that appears fun and entertaining. In the few videos I was able to review, he followed all the appropriate steps for sensor placement.

Many youths with type 1 diabetes struggle with the demands of living with a chronic medical condition. Because type 1 diabetes is rare, affecting about 1 in 500 children in the United States, many youth may never meet anyone else their age with the condition through school, social events, or extracurricular activities.

For adolescents with an intensively managed condition like type 1 diabetes, this isolation can present numerous psychosocial challenges. In particular, many youth experience shame or stigma associated with managing their diabetes.

Diabetes-specific tasks may include wearing an insulin pump, monitoring blood glucose with finger pricks or a continuous glucose monitor (CGM), giving injections of insulin before meals and snacks, adjusting times for meals and snacks based on metabolic needs, waking up in the middle of the night to treat high or low blood glucose – the list goes on and on.

One study estimated that diabetes-specific tasks for a child with type 1 diabetes take, on average, more than 5 hours per day.

Although parents spend much of this time on diabetes management, children gradually take on more of the responsibility themselves as they grow into teenagers. Wearing diabetes technology (insulin pumps and CGMs) can draw unwanted attention and lead to diabetes-specific body image concerns. Kids may also have to excuse themselves from an activity to treat low or high blood glucose, creating uncomfortable situations when others ask why the activity was interrupted. As a result, many youths neglect their diabetes management to avoid drawing unwanted attention, consequently putting their health at risk.

Teens who are afraid that placing a glucose sensor will hurt may be reassured by watching Johnny place his sensor with a smile on his face. Some of his content also highlights other stigmatizing situations that teens may face, for example, someone giving him a judgmental look and asking why he needed to give an insulin injection right there in public.

This highlights an important point: people with type 1 diabetes may face criticism when dosing insulin in public, but that doesn’t mean they should feel forced to manage their diabetes in private unless they choose to. Johnny is an inspirational individual who has bravely taken his type 1 diabetes experiences and used his creative skills to make these seemingly boring health-related tasks fun, interesting, and accessible.

Social media has become an outlet for people with type 1 diabetes to connect with others who can relate to their experiences.

However, there’s another side to consider. Although social media may provide a great source of support for youth, it may also adversely affect mental health. Social media outlets have grown quickly, and concern over excessive use and its impact on adolescents’ mental health has grown just as fast. A growing body of literature describes the negative mental health effects of social media use.

Some adolescents struggling to manage type 1 diabetes may feel worse when seeing others thrive on social media, which has the potential to worsen stigma and shame. Youth may wonder how someone else is able to manage their type 1 diabetes so well when they are facing so many challenges.

Short videos on social media provide an incomplete picture of living with type 1 diabetes: just a glimpse into others’ lives, and only the parts they want others to see. Managing a chronic condition can’t be fully represented in 10-second videos. And if youths choose to post their own type 1 diabetes experiences on social media, they also risk backlash or criticism, which can in turn harm their mental health.

Furthermore, the content being posted is not always accurate or educational, so some youth may come away with a misunderstanding of type 1 diabetes.

Although I wouldn’t discourage youth with type 1 diabetes from engaging on social media and viewing diabetes-related content, they need to know that social media is flooded with misinformation. Creating an open space for youth to ask their clinicians about type 1 diabetes–related topics they see on social media is vital to ensure they are getting accurate information and can continue to manage their diabetes safely.

As a pediatric endocrinologist, I sometimes share resources on social media with patients if I believe it will help them cope with their type 1 diabetes diagnosis and management. I have had numerous patients – many of whom have struggled to accept their diagnosis – mention with joy and excitement that they were following an organization addressing type 1 diabetes on social media.

When making suggestions, I may refer them to The Diabetes Link, an organization that offers resources for young adults with type 1 diabetes and a space to connect with peers who have the condition. diaTribe is another organization, created and led by people with diabetes, that offers a wealth of resources and evidence-based education for patients. I have also shared Project 50-in-50, which highlights two individuals with type 1 diabetes hiking the highest peak in each state in fewer than 50 days. Seeing type 1 diabetes in a positive light is a huge step toward a more positive outlook on diabetes management.

Dr. Nally is an assistant professor, department of pediatrics, and a pediatric endocrinologist, division of pediatric endocrinology, at Yale University, New Haven, Conn. She reported conflicts of interest with Medtronic and the National Institutes of Health.

A version of this article first appeared on Medscape.com.

Hyperbaric oxygen therapy for traumatic brain injury: Promising or wishful thinking?

A recent review by Hadanny and colleagues recommends hyperbaric oxygen therapy (HBOT) for acute moderate to severe traumatic brain injury (TBI) and selected patients with prolonged postconcussive syndrome.

This article piqued my curiosity because I trained in HBOT more than 20 years ago. As a passionate scuba diver, I was motivated to master treatment for air embolism and decompression illness. Thankfully, these diving accidents are rare. However, I did use HBOT for nonhealing wounds, and its efficacy was sometimes remarkable.

Paradoxical results with oxygen therapy

Although it may seem self-evident that “more oxygen is better” for medical illness, this is not necessarily true. I recently interviewed Ola Didrik Saugstad, MD, who demonstrated that the traditional practice of resuscitating newborns with 100% oxygen was more toxic than resuscitation with air (which contains 21% oxygen). His counterintuitive discovery led to a lifesaving change in the international newborn resuscitation guidelines.

The Food and Drug Administration has approved HBOT for a wide variety of conditions, but some practitioners enthusiastically promote it for off-label indications. These include antiaging, autism, multiple sclerosis, and the aforementioned TBI.

More than 50 years ago, HBOT was proposed for stroke, another disorder in which the brain is deprived of oxygen. Despite the apparent logic, clinical trials have been unconvincing, and the FDA has not approved HBOT for stroke.

HBOT in practice

During HBOT, the patient breathes 100% oxygen while the whole body is pressurized within a hyperbaric chamber. The chamber is built to reach pressures above the normal sea-level pressure of 1.0 atmosphere absolute (ATA). For example, the U.S. Navy Treatment Table for decompression sickness recommends 100% oxygen at 2.8 ATA. Chambers may hold one or more patients at a time.
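To make the pressure figures more concrete, here is a minimal Python sketch of two standard approximations that are not taken from the review: the inspired oxygen partial pressure is the oxygen fraction multiplied by the absolute pressure (Dalton's law, ignoring water vapor), and each atmosphere above sea level corresponds to roughly 33 feet of seawater. The function names and the 33-foot constant are illustrative assumptions.

```python
# Assumption: sea-level pressure is 1.0 ATA, and each additional atmosphere
# is approximated as 33 feet of seawater.
FEET_SEAWATER_PER_ATM = 33.0


def inspired_po2(fio2: float, pressure_ata: float) -> float:
    """Inspired oxygen partial pressure in atm (Dalton's law, water vapor ignored)."""
    if not 0.0 <= fio2 <= 1.0:
        raise ValueError("fio2 must be a fraction between 0 and 1")
    if pressure_ata < 0.0:
        raise ValueError("pressure must be non-negative")
    return fio2 * pressure_ata


def equivalent_seawater_depth_ft(pressure_ata: float) -> float:
    """Approximate seawater depth (feet) that produces the same absolute pressure."""
    return (pressure_ata - 1.0) * FEET_SEAWATER_PER_ATM


if __name__ == "__main__":
    # Compare room air, oxygen at sea level, and the 2.8 ATA treatment pressure.
    for label, fio2, ata in [
        ("room air at sea level", 0.21, 1.0),
        ("100% oxygen at sea level", 1.0, 1.0),
        ("100% oxygen at 2.8 ATA", 1.0, 2.8),
    ]:
        print(f"{label}: pO2 = {inspired_po2(fio2, ata):.2f} atm, "
              f"depth ~ {equivalent_seawater_depth_ft(ata):.0f} ft of seawater")
```

With these approximations, 100% oxygen at 2.8 ATA gives an inspired oxygen partial pressure of 2.8 atm, roughly 13 times that of room air at sea level (0.21 atm), at a pressure equivalent to about 60 feet of seawater.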

The frequency of therapy varies, but a course often consists of 20-60 sessions lasting 90-120 minutes each. For off-label uses such as TBI, patients usually pay out of pocket, and with so many treatments, costs can add up.
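A quick back-of-the-envelope calculation shows how the session counts accumulate. The Python sketch below, in which the helper name `course_hours` is hypothetical, multiplies the number of sessions by the session length at both ends of the 20-60 session, 90-120 minute range quoted above.

```python
def course_hours(sessions: int, minutes_per_session: int) -> float:
    """Total chamber time for a course of HBOT, in hours (hypothetical helper)."""
    if sessions < 0 or minutes_per_session < 0:
        raise ValueError("sessions and minutes must be non-negative")
    return sessions * minutes_per_session / 60


# Bounds of the typical regimen: 20-60 sessions of 90-120 minutes each.
shortest = course_hours(20, 90)   # 30.0 hours
longest = course_hours(60, 120)   # 120.0 hours
print(f"Total chamber time: {shortest:.0f} to {longest:.0f} hours")
```

At the extremes, a course means roughly 30 to 120 hours in the chamber; the total out-of-pocket cost scales the same way with whatever a facility charges per session.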

Inconsistent evidence and sham controls

The unwieldy 33-page evidence review by Hadanny and colleagues cites multiple studies supporting HBOT for TBI. However, many, if not all, of these studies have methodological flaws, including vague inclusion criteria, lack of a control group, small sample sizes, varying intervals between injury and treatment, poorly defined or inconsistent HBOT protocols, varying outcome measures, and superficial analysis of results.

A sham or control arm is essential for HBOT research trials, given the potential placebo effect of placing a human being inside a large, high-tech, sealed tube for an hour or more. In some sham-controlled studies, in which the sham arm received low-pressure oxygen (1.3 ATA as sham vs. 2.4 ATA as treatment), all groups experienced symptom improvement. The review authors argue that the low-dose HBOT sham arms were biologically active and that the improvements seen mean that both high- and low-dose HBOT are therapeutic. The alternative explanation is that the placebo effect accounted for improvement in both groups.

The late Michael Bennett, a world authority on hyperbaric and underwater medicine, doubted that conventional HBOT sham controls could genuinely have a therapeutic effect, and I agree. The upcoming HOT-POCS trial (discussed below) should answer the question more definitively.

Mechanisms of action and safety

Proposed mechanisms of benefit for HBOT include increased oxygen availability and angiogenesis. Animal research suggests that it may reduce secondary cell death from TBI by stabilizing the blood-brain barrier and reducing inflammation.

HBOT is generally safe and well tolerated. A retrospective analysis of 1.5 million outpatient hyperbaric treatments revealed that less than 1% were associated with adverse events. The most common were ear and sinus barotrauma. Because HBOT uses increased air pressure, patients must equalize their ears and sinuses. Those who cannot because of altered consciousness, anatomical defects, or congestion must undergo myringotomy or terminate therapy. Claustrophobia was the second most common adverse effect. Convulsions and tension pneumocephalus were rare.