Overburdened: Health care workers more likely to die by suicide

This transcript has been edited for clarity.

Welcome to Impact Factor, your weekly dose of commentary on a new medical study.

If you run into a health care provider these days and ask, “How are you doing?” you’re likely to get a response like this one: “You know, hanging in there.” You smile and move on. But it may be time to go a step further. If you ask that next question – “No, really, how are you doing?” – well, you might need to carve out some time.

It’s been a rough few years for those of us in the health care professions. Our lives, dominated by COVID-related concerns at home, were equally dominated by COVID concerns at work. On the job, there were fewer and fewer of us around as exploitation and COVID-related stressors led doctors, nurses, and others to leave the profession entirely or take early retirement. Even now, I’m not sure we’ve recovered. Staffing in the hospitals is still a huge problem, and the persistence of impersonal meetings via teleconference – which not only prevent any sort of human connection but, audaciously, run from one into another without a break – robs us of even the subtle joy of walking from one hallway to another for 5 minutes of reflection before sitting down to view the next hastily cobbled together PowerPoint.

I’m speaking in generalities, of course.

I’m talking about how bad things are now because, in truth, they’ve never been great. And that may be why health care workers – people with jobs focused on serving others – are nevertheless at substantially increased risk for suicide.

Analyses through the years have shown that physicians tend to have higher rates of death from suicide than the general population. There are reasons for this that may not entirely be because of work-related stress. Doctors’ suicide attempts are more often lethal – we know what is likely to work, after all.

But physicians are only part of the health care workforce. And, according to this paper in JAMA, it is the others – the nurses, the aides, the techs – who may be suffering most of all.

The study is a nationally representative sample based on the 2008 American Community Survey. Records were linked to the National Death Index through 2019.

Survey respondents were classified into five categories of health care worker, as you can see here. And 1,666,000 non–health care workers served as the control group.

Let’s take a look at the numbers.

I’m showing you age- and sex-standardized rates of death from suicide, starting with non–health care workers. In this study, physicians have rates of death from suicide similar to those of the general population. Nurses have higher rates, but health care support workers – nurses’ aides, home health aides – have rates nearly twice those of the general population.

Only social and behavioral health workers had rates lower than those in the general population, perhaps because they know how to access life-saving resources.

Of course, these groups differ in a lot of ways – education and income, for example. But even after adjustment for these factors as well as for sex, race, and marital status, the results persist. The only group with even a trend toward lower suicide rates is social and behavioral health workers.

There has been much hand-wringing about rates of physician suicide in the past. It is still a very real problem. But this paper finally highlights that there is a lot more to the health care profession than physicians. It’s time we acknowledge and support the people in our profession who seem to be suffering more than any of us: the aides, the techs, the support staff – the overworked and underpaid who have to deal with all the stresses that physicians like me face and then some.

There’s more to suicide risk than just your job; I know that. Family matters. Relationships matter. Medical and psychiatric illnesses matter. But to ignore this problem when it is right here, in our own house so to speak, can’t continue.

Might I suggest we start by asking someone in our profession – whether doctor, nurse, aide, or tech – how they are doing. How they are really doing. And when we are done listening, we use what we hear to advocate for real change.

Dr. Wilson is associate professor of medicine and public health and director of the Clinical and Translational Research Accelerator at Yale University, New Haven, Conn. He has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

Laboratory testing: No doctor required?

This transcript has been edited for clarity.

Let’s assume, for the sake of argument, that I am a healthy 43-year-old man. Nevertheless, I am interested in getting my vitamin D level checked. My primary care doc says it’s unnecessary, but that doesn’t matter because a variety of direct-to-consumer testing companies will do it without a doctor’s prescription – for a fee, of course.

Is that okay? Should I be able to get the test?

What if instead of my vitamin D level, I want to test my testosterone level, or my PSA, or my cadmium level, or my Lyme disease antibodies, or even have a full-body MRI scan?

These questions are becoming more and more common, because the direct-to-consumer testing market is exploding.

We’re talking about direct-to-consumer testing, thanks to this paper: Policies of US Companies Offering Direct-to-Consumer Laboratory Tests, appearing in JAMA Internal Medicine, which characterizes the testing practices of direct-to-consumer testing companies.

But before we get to the study, a word on this market. Direct-to-consumer lab testing is projected to be a $2 billion industry by 2025, and lab testing megacorporations Quest Diagnostics and Labcorp are both jumping headlong into this space.

Why is this happening? A couple of reasons, I think. First, the increasing cost of health care has led payers to place significant restrictions on what tests can be ordered and under what circumstances. Physicians are all too familiar with the “prior authorization” system that seeks to limit even the tests we think would benefit our patients.

It’s no wonder that patients, frustrated with such a system, are increasingly deciding to go it alone. Sure, insurance won’t cover these tests, but the prices are transparent and competition actually keeps them somewhat reasonable. So, is this a win-win? Shouldn’t we allow people to get the tests they want, at least if they are willing to pay for them?

Of course, it’s not quite that simple. If the tests are normal, or negative, then sure – no harm, no foul. But when they are positive, everything changes. What happens when the PSA test I got myself via a direct-to-consumer testing company comes back elevated? Well, at that point, I am right back in the traditional mode of medicine – seeing my doctor, probably getting repeat testing, biopsies, and so on – and some payer will be on the hook for that, which is to say that all of us will be on the hook for that.

One other reason direct-to-consumer testing is getting more popular is a more difficult-to-characterize phenomenon which I might call postpandemic individualism. I’ve seen this across several domains, but I think in some ways the pandemic led people to focus more attention on themselves, perhaps because we were so isolated from each other. Optimizing health through data – whether using a fitness tracking watch, meticulously counting macronutrient intake, or ordering your own lab tests – may be a form of exerting control over a universe that feels increasingly chaotic. But what do I know? I’m not a psychologist.

The study characterizes a total of 21 direct-to-consumer testing companies. They offer a variety of services, as you can see here, with the majority in the endocrine space: thyroid, diabetes, men’s and women’s health. A smattering of companies offer more esoteric testing, such as heavy metals and Lyme disease.

Who’s in charge of all this? It’s fairly regulated, actually, but perhaps not in the way you think. The FDA uses its CLIA authority to ensure that these tests are accurate. The FTC ensures that the companies do not engage in false advertising. But no one is minding the store as to whether the tests are actually beneficial either to an individual or to society.

The 21 companies varied dramatically in how they handle communicating the risks and results of these tests. All of them had a disclaimer that the information does not represent comprehensive medical advice. Fine. But only a minority acknowledged any risks or limitations of the tests. Fewer than half had a statement of HIPAA compliance. And 17 out of 21 provided no information as to whether customers could request that their data be deleted, while 18 out of 21 stated that there could be follow-up for abnormal results, but often it was unclear exactly how that would work.

So, let’s circle back to the first question: Should a healthy person be able to get a laboratory test simply because they want to? The libertarians among us would argue certainly yes, though perhaps without thinking through the societal implications of abnormal results. The evidence-based medicine folks will, accurately, state that there are no clinical trials to suggest that screening healthy people with tests like these has any benefit.

But we should be cautious here. This question is scienceable; you could design a trial to test whether screening healthy 43-year-olds for testosterone level led to significant improvements in overall mortality. It would just take a few million people and about 40 years of follow-up.

And even if it didn’t help, we let people throw their money away on useless things all the time. The only difference between someone spending money on a useless test and on a useless dietary supplement is that someone has to deal with the result.

So, can you do this right? Can you make a direct-to-consumer testing company that is not essentially a free-rider on the rest of the health care ecosystem?

I think there are ways. You’d need physicians involved at all stages to help interpret the testing and guide next steps. You’d need some transparent guidelines, written in language that patients can understand, for what will happen given any conceivable result – and what costs those results might lead to for them and their insurance company. Most important, you’d need longitudinal follow-up and the ability to recommend changes, retest in the future, and potentially address the cost implications of the downstream findings. In the end, it starts to sound very much like a doctor’s office.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator in New Haven, Conn. He reported no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

Bad blood: Could brain bleeds be contagious?

This transcript has been edited for clarity.

How do you tell if a condition is caused by an infection?

It seems like an obvious question, right? In the post–van Leeuwenhoek era we can look at whatever part of the body is diseased under a microscope and see microbes – you know, the usual suspects.

Except when we can’t. And there are plenty of cases where we can’t: where the microbe is too small to be seen without more advanced imaging techniques, like with viruses; or when the pathogen is sparsely populated or hard to culture, like Mycobacterium.

Finding out that a condition is the result of an infection is not only an exercise for 19th century physicians. After all, it was 2005 when Barry Marshall and Robin Warren won their Nobel Prize for proving that stomach ulcers, long thought to be due to “stress,” were actually caused by a tiny microbe called Helicobacter pylori.

And this week, we are looking at a study which, once again, begins to suggest that a condition thought to be more or less random – cerebral amyloid angiopathy – may actually be the result of an infectious disease.

We’re talking about this paper, appearing in JAMA, which is just a great example of old-fashioned shoe-leather epidemiology. But let’s get up to speed on cerebral amyloid angiopathy (CAA) first.

CAA is characterized by the deposition of amyloid protein in the brain. While there are some genetic causes, they are quite rare, and most cases are thought to be idiopathic. Recent analyses suggest that somewhere between 5% and 7% of cognitively normal older adults have CAA, but the rate is much higher among those with intracerebral hemorrhage – brain bleeds. In fact, CAA is the second-most common cause of bleeding in the brain, second only to severe hypertension.

An article in Nature highlights cases that seemed to develop after the administration of cadaveric pituitary hormone.

Other studies have shown potential transmission via dura mater grafts and neurosurgical instruments. But despite those clues, no infectious organism has been identified. Some have suggested that the long latent period and the difficulty of finding a responsible microbe point to a prion-like disease not yet known. But these studies are more or less case series. The new JAMA paper gives us, if not a smoking gun, a pretty decent set of fingerprints.

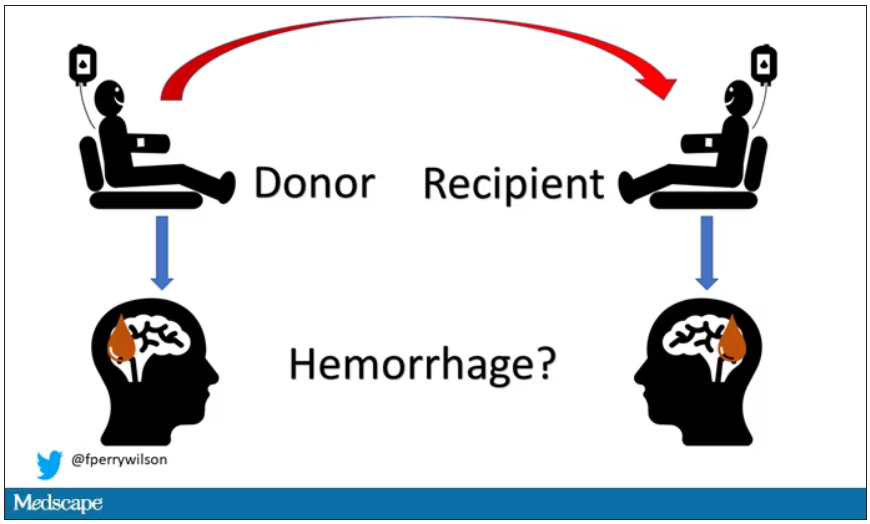

Here’s the idea: If CAA is caused by some infectious agent, it may be transmitted in the blood. We know that a decent percentage of people who have spontaneous brain bleeds have CAA. If those people donated blood in the past, maybe the people who received that blood would be at risk for brain bleeds too.

Of course, to really test that hypothesis, you’d need to know who every blood donor in a country was and every person who received that blood and all their subsequent diagnoses for basically their entire lives. No one has that kind of data, right?

Well, if you’ve been watching this space, you’ll know that a few countries do. Enter Sweden and Denmark, with their national electronic health records that capture all of this information, and much more, on every single person who lives or has lived in those countries since before 1970. Unbelievable.

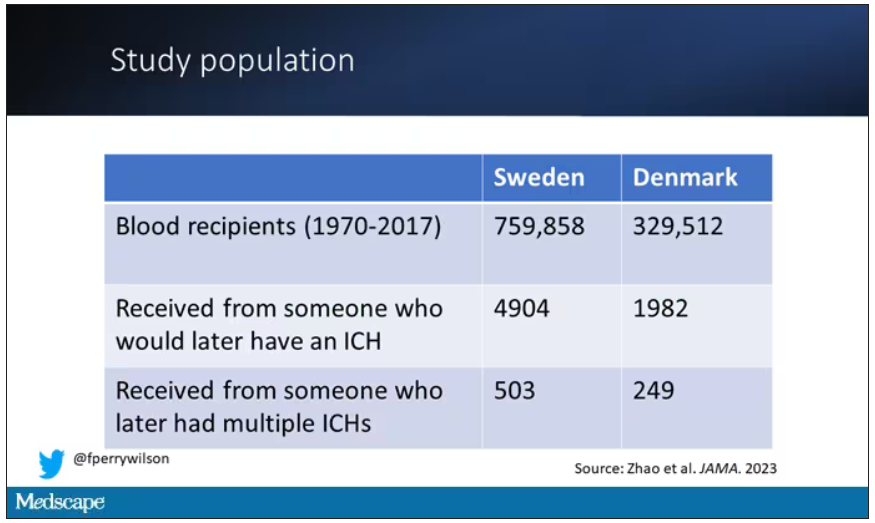

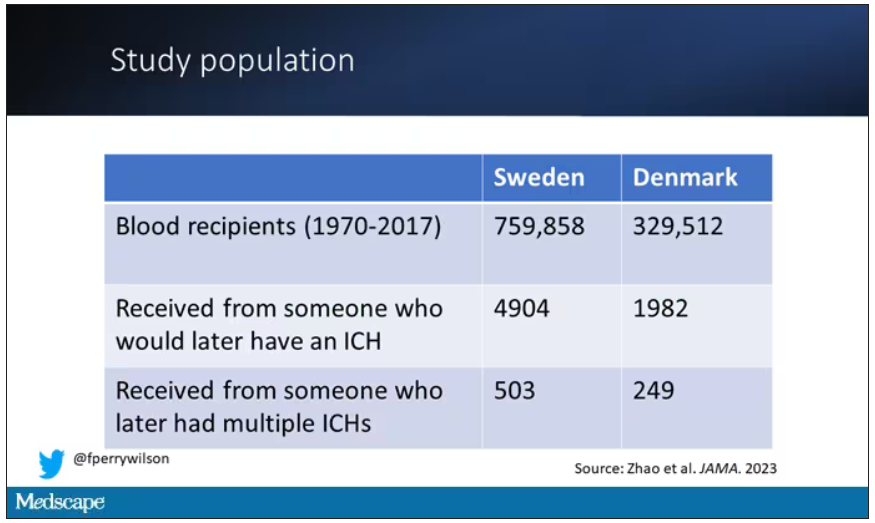

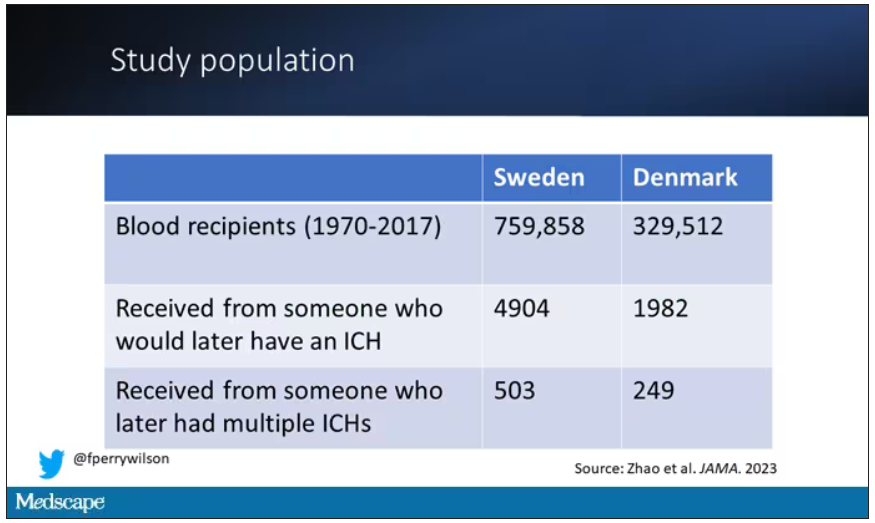

So that’s exactly what the researchers, led by Jingchen Zhao at the Karolinska Institute in Sweden, did. They identified roughly 760,000 individuals in Sweden and 330,000 people in Denmark who had received a blood transfusion between 1970 and 2017.

Of course, most of those blood donors – 99% of them, actually – never went on to have any bleeding in the brain. It is a rare thing, fortunately.

But some of the donors did, on average within about 5 years of the time they donated blood. The researchers characterized each donor as either never having a brain bleed, having a single bleed, or having multiple bleeds. The last of these is most strongly associated with CAA.

The big question: Would recipients who got blood from individuals who later on had brain bleeds have brain bleeds themselves?

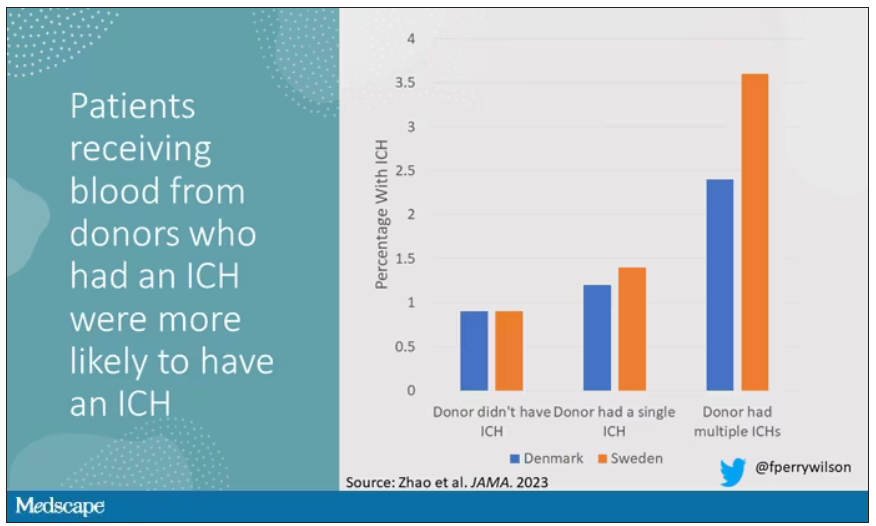

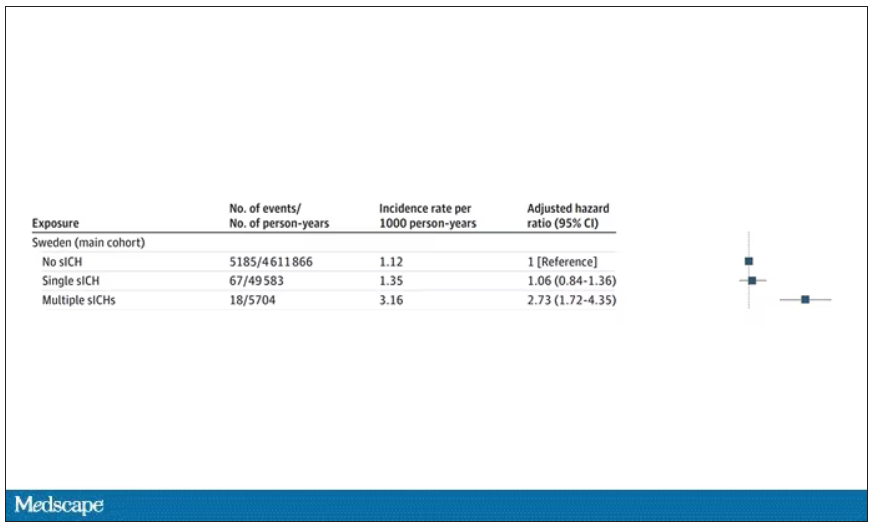

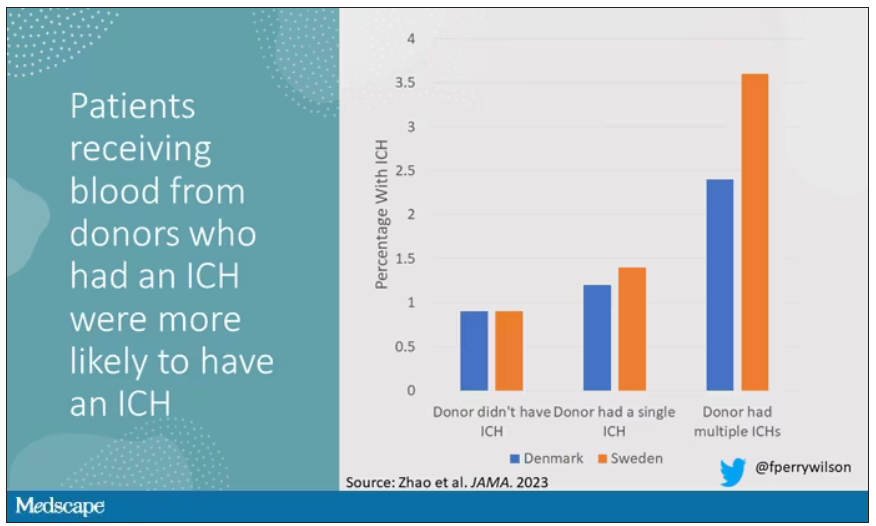

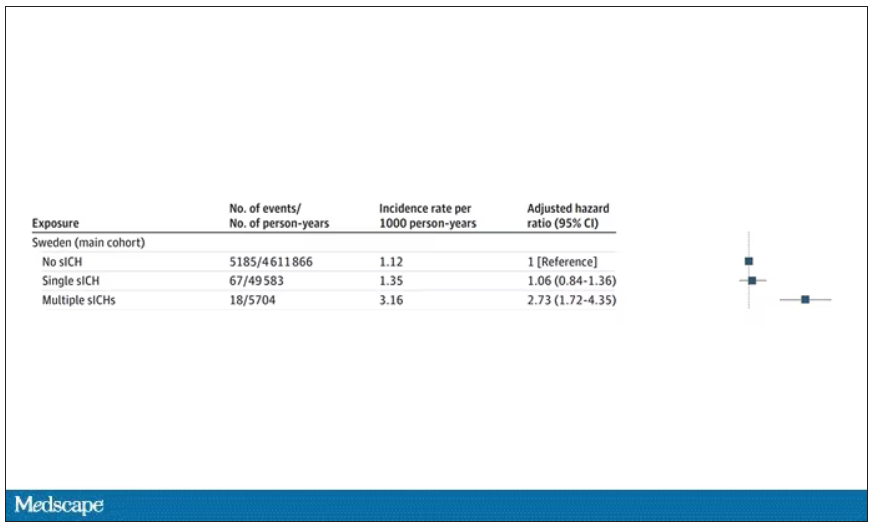

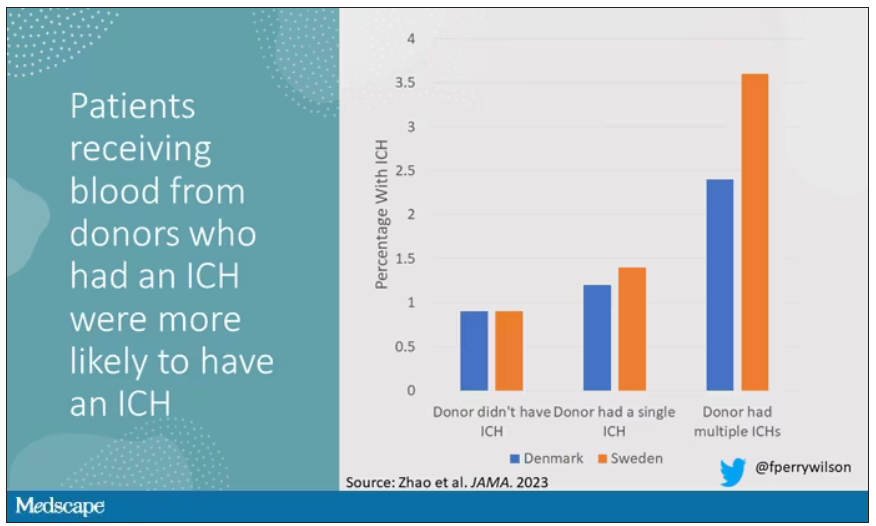

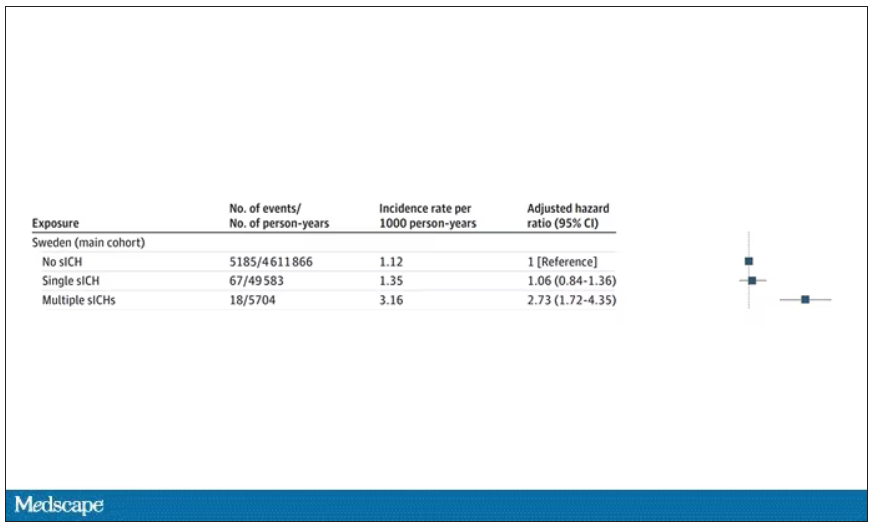

The answer is yes, though with an asterisk. You can see the results here. The risk of recipients having a brain bleed was lowest if the blood they received was from people who never had a brain bleed, higher if the individual had a single brain bleed, and highest if they got blood from a donor who would go on to have multiple brain bleeds.

All in all, individuals who received blood from someone who would later have multiple hemorrhages were three times more likely to develop bleeds themselves. It’s fairly compelling evidence of a transmissible agent.
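To make the "three times more likely" arithmetic concrete, here is a minimal sketch of a crude risk ratio. The function name `risk_ratio` is my own, and any counts you feed it are up to you; nothing here comes from the paper. The study's estimates were adjusted for other factors, which this unadjusted calculation does not attempt.

```python
def risk_ratio(exposed_events, exposed_total, unexposed_events, unexposed_total):
    """Crude (unadjusted) risk ratio between two groups of transfusion recipients.

    "Exposed" here means recipients of blood from donors who later had
    brain bleeds; "unexposed" means recipients of blood from donors who
    never did. These labels are illustrative assumptions.
    """
    if exposed_total <= 0 or unexposed_total <= 0:
        raise ValueError("group sizes must be positive")
    if unexposed_events == 0:
        raise ValueError("risk ratio is undefined when the comparison group has no events")
    exposed_risk = exposed_events / exposed_total        # share of exposed recipients with a bleed
    unexposed_risk = unexposed_events / unexposed_total  # share of unexposed recipients with a bleed
    return exposed_risk / unexposed_risk
```

With invented counts of 30 bleeds per 1,000 recipients in one group and 10 per 1,000 in the other, `risk_ratio(30, 1000, 10, 1000)` comes out to about 3.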

Of course, there are some potential confounders to consider here. Whose blood you get is not totally random. If, for example, people with type O blood are just more likely to have brain bleeds, then you could get results like this, as type O tends to donate to type O and both groups would have higher risk after donation. But the authors adjusted for blood type. They also adjusted for number of transfusions, calendar year, age, sex, and indication for transfusion.

Perhaps most compelling, and most clever, is that they used ischemic stroke as a negative control. Would people who received blood from someone who later had an ischemic stroke themselves be more likely to go on to have an ischemic stroke? No signal at all. It does not appear that there is a transmissible agent associated with ischemic stroke – only the brain bleeds.

I know what you’re thinking. What’s the agent? What’s the microbe, or virus, or prion, or toxin? The study gives us no insight there. These nationwide databases are awesome but they can only do so much. Because of the vagaries of medical coding and the difficulty of making the CAA diagnosis, the authors are using brain bleeds as a proxy here; we don’t even know for sure whether these were CAA-associated brain bleeds.

It’s also worth noting that there’s little we can do about this. None of the blood donors in this study had a brain bleed prior to donation; it’s not like we could screen people out of donating in the future. We have no test for whatever this agent is, if it even exists, nor do we have a potential treatment. Fortunately, whatever it is, it is extremely rare.

Still, this paper feels like a shot across the bow. At this point, the probability has shifted strongly away from CAA being a purely random disease and toward it being an infectious one. It may be time to round up some of the unusual suspects.

Dr. F. Perry Wilson is an associate professor of medicine and public health and director of Yale University’s Clinical and Translational Research Accelerator in New Haven, Conn. He reported no conflicts of interest.

A version of this article first appeared on Medscape.com.

The new normal in body temperature

This transcript has been edited for clarity.

Every branch of science has its constants. Physics has the speed of light, the gravitational constant, the Planck constant. Chemistry gives us Avogadro’s number, Faraday’s constant, the charge of an electron. Medicine isn’t quite as reliable as physics when it comes to these things, but insofar as there are any constants in medicine, might I suggest normal body temperature: 37° Celsius, 98.6° Fahrenheit.

Sure, serum sodium may be less variable and lactate concentration more clinically relevant, but even my 7-year-old knows that normal body temperature is 98.6°.

Except, as it turns out, 98.6° isn’t normal at all.

How did we arrive at 37.0° C for normal body temperature? We got it from this guy – German physician Carl Reinhold August Wunderlich, who, in addition to looking eerily like Luciano Pavarotti, was the first to realize that fever was not itself a disease but a symptom of one.

In 1851, Dr. Wunderlich released his measurements of more than 1 million body temperatures taken from 25,000 Germans – a painstaking process at the time, which employed a foot-long thermometer and took 20 minutes to obtain a measurement.

The average temperature measured, of course, was 37° C.

We’re more than 150 years post-Wunderlich right now, and the average person in the United States might be quite a bit different from the average German in 1850. Moreover, we can do a lot better than just measuring a ton of people and taking the average, because we have statistics. The problem with measuring a bunch of people and taking the average temperature as normal is that you can’t be sure that the people you are measuring are normal. There are obvious causes of elevated temperature that you could exclude. Let’s not take people with a respiratory infection or who are taking Tylenol, for example. But as highlighted in this paper in JAMA Internal Medicine, we can do a lot better than that.

The study leverages the fact that body temperature is typically measured during all medical office visits and recorded in the ever-present electronic medical record.

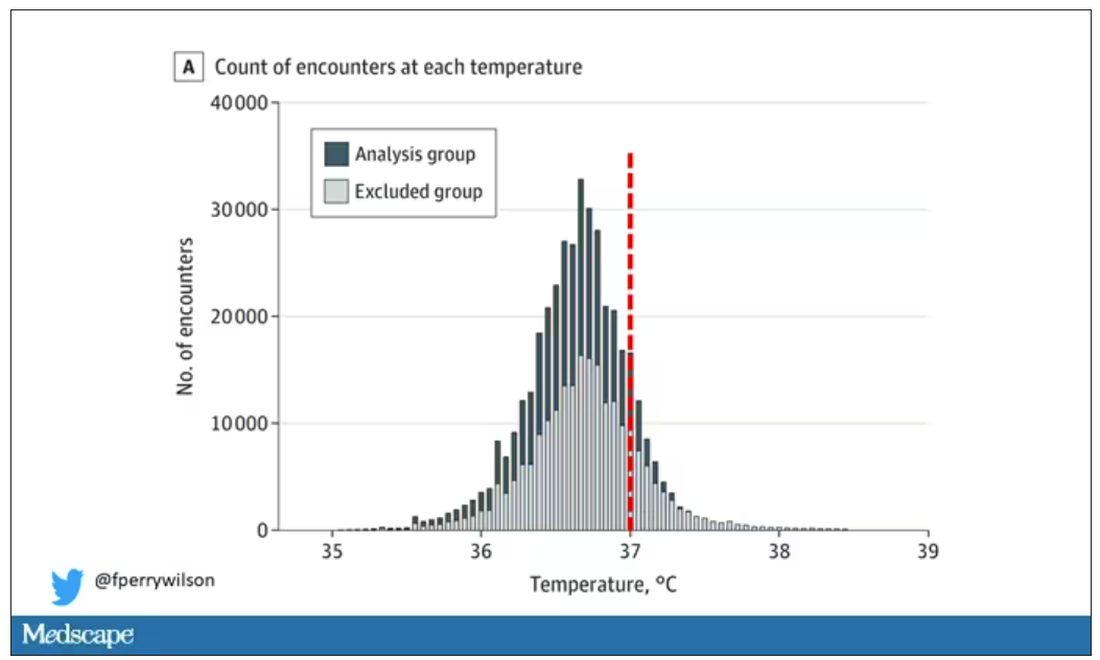

Researchers from Stanford identified 724,199 patient encounters with outpatient temperature data. They excluded extreme temperatures – less than 34° C or greater than 40° C – excluded patients under 20 or above 80 years, and excluded those with extremes of height, weight, or body mass index.

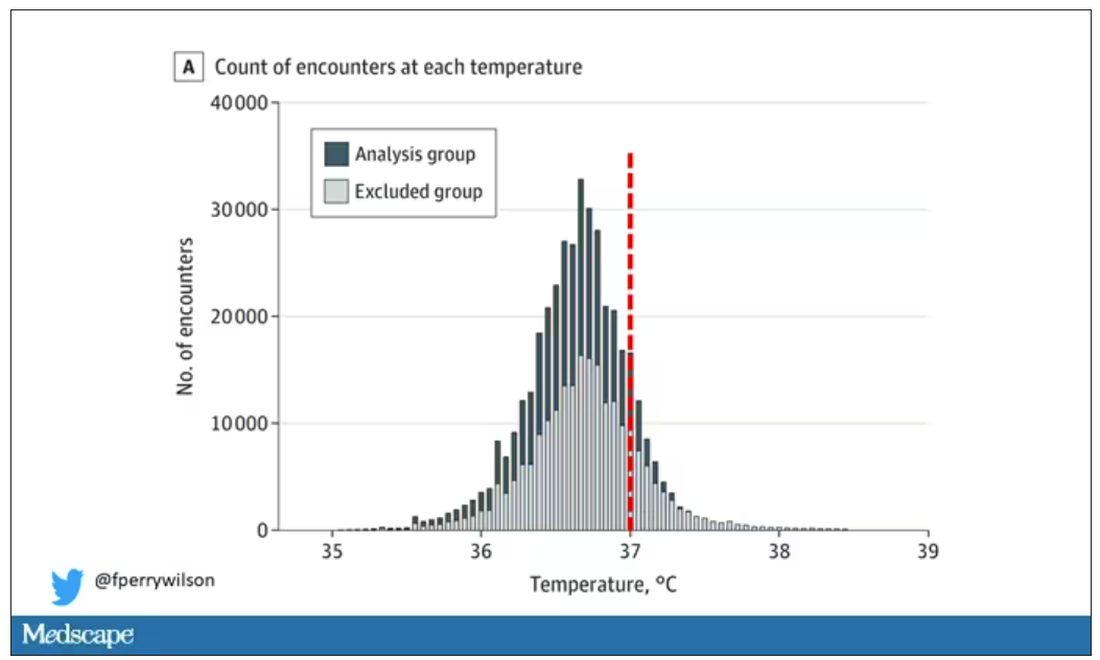

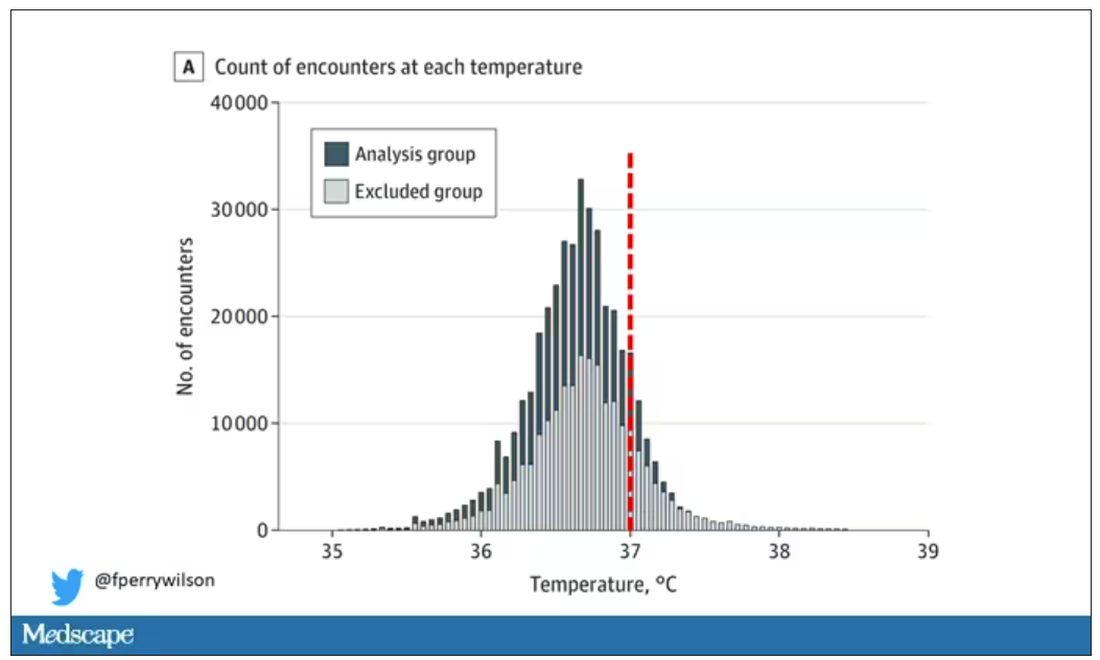

You end up with a distribution like this. Note that the peak is clearly lower than 37° C.

But we’re still not at “normal.” Some people would be seeing their doctor for conditions that affect body temperature, such as infection. You could use diagnosis codes to flag these individuals and drop them, but that feels a bit arbitrary.

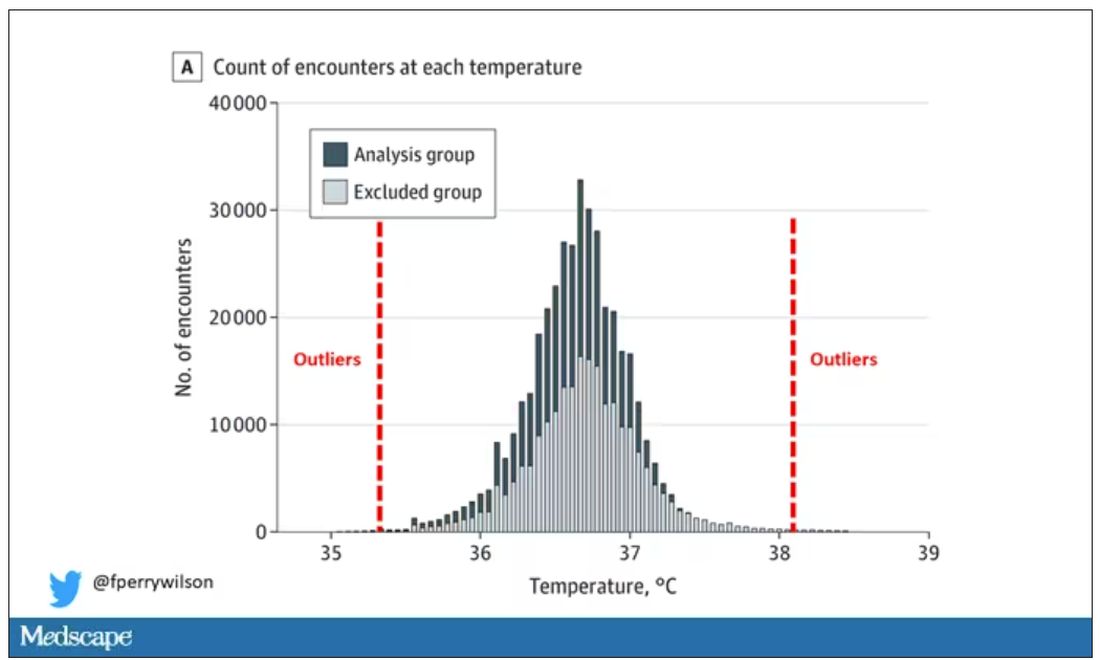

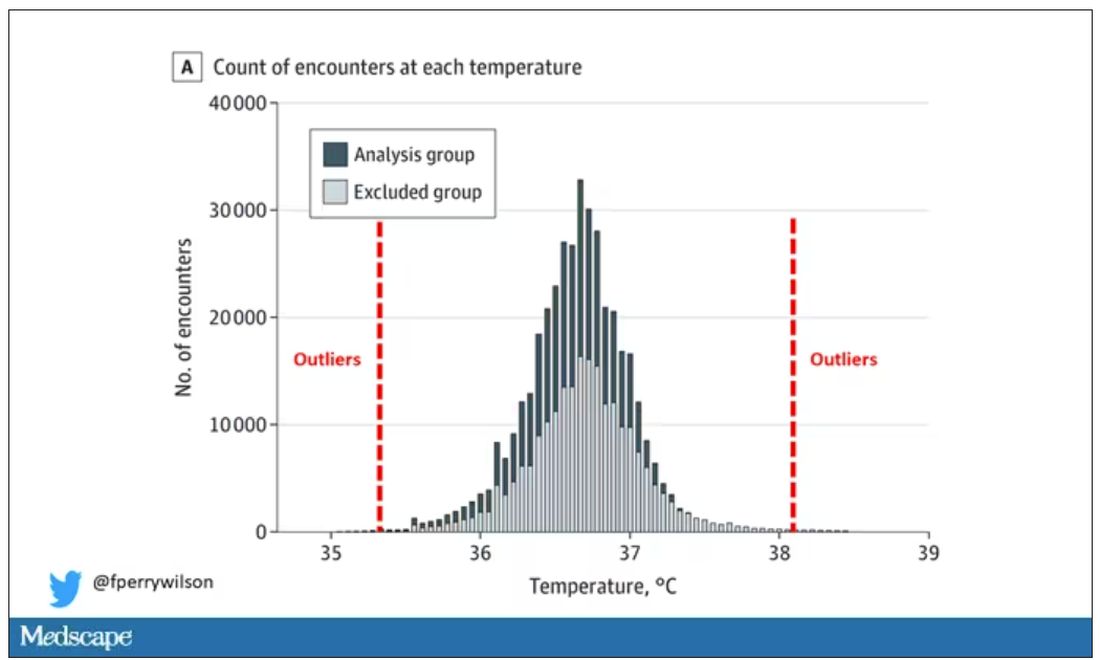

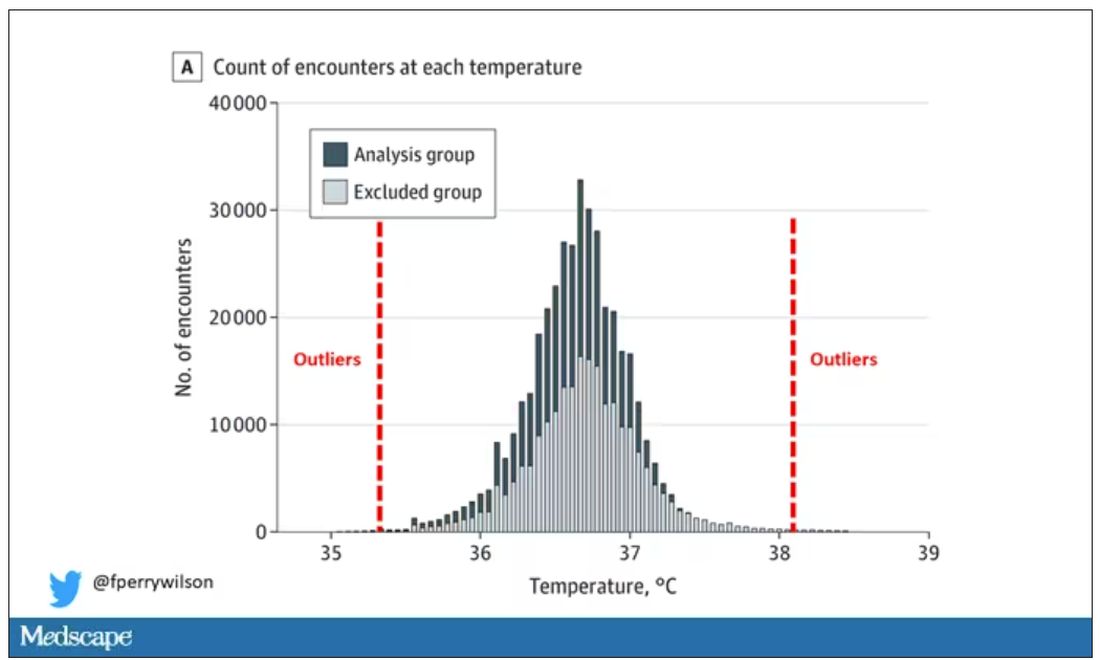

I really love how the researchers used data to fix this problem. They used a technique called LIMIT (Laboratory Information Mining for Individualized Thresholds). It works like this:

Take all the temperature measurements and then identify the outliers – the very tails of the distribution.

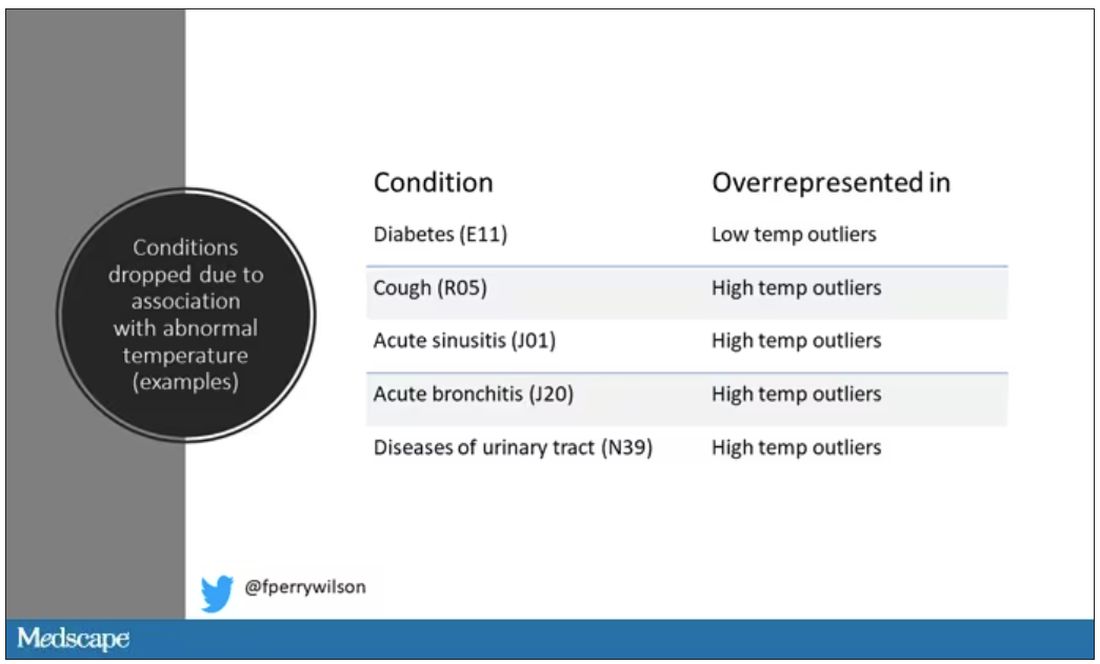

Look at all the diagnosis codes in those tails. Determine which diagnosis codes are overrepresented there compared with the population as a whole. Now you have a data-driven way to say that yes, these diagnoses are associated with weird temperatures. Next, eliminate everyone with those diagnoses from the dataset. What you are left with is a normal population, or at least a population that doesn’t have a condition that seems to meaningfully affect temperature.
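For the programmers in the audience, the steps just described can be sketched in a few lines. This is a simplified, single-pass illustration of the idea and not the researchers' actual implementation: the function name `flag_and_filter`, the tail fraction, and the overrepresentation threshold are all my own assumptions.

```python
from collections import Counter


def flag_and_filter(encounters, tail_fraction=0.05, ratio_threshold=1.3):
    """Drop encounters whose diagnosis codes are overrepresented in the temperature tails.

    encounters: list of (temperature_c, set_of_diagnosis_codes) pairs.
    tail_fraction and ratio_threshold are illustrative assumptions,
    not values taken from the study.
    Returns (flagged_codes, kept_encounters).
    """
    if not encounters:
        raise ValueError("need at least one encounter")

    # Step 1: find the cutoffs that define the low and high tails.
    temps = sorted(t for t, _ in encounters)
    n = len(temps)
    k = max(1, int(n * tail_fraction))
    low_cut, high_cut = temps[k - 1], temps[n - k]
    tails = [codes for t, codes in encounters if t <= low_cut or t >= high_cut]

    # Step 2: compare how common each code is in the tails vs. overall.
    overall = Counter(code for _, codes in encounters for code in codes)
    in_tails = Counter(code for codes in tails for code in codes)
    flagged = set()
    for code, count in in_tails.items():
        tail_rate = count / len(tails)
        base_rate = overall[code] / n
        if tail_rate / base_rate >= ratio_threshold:
            flagged.add(code)

    # Step 3: remove every encounter carrying a flagged code.
    kept = [(t, codes) for t, codes in encounters if not (codes & flagged)]
    return flagged, kept
```

Note that the last step removes everyone with a flagged diagnosis, including people whose temperature was perfectly ordinary, which matches the "everyone with diabetes is removed" behavior described in the text.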

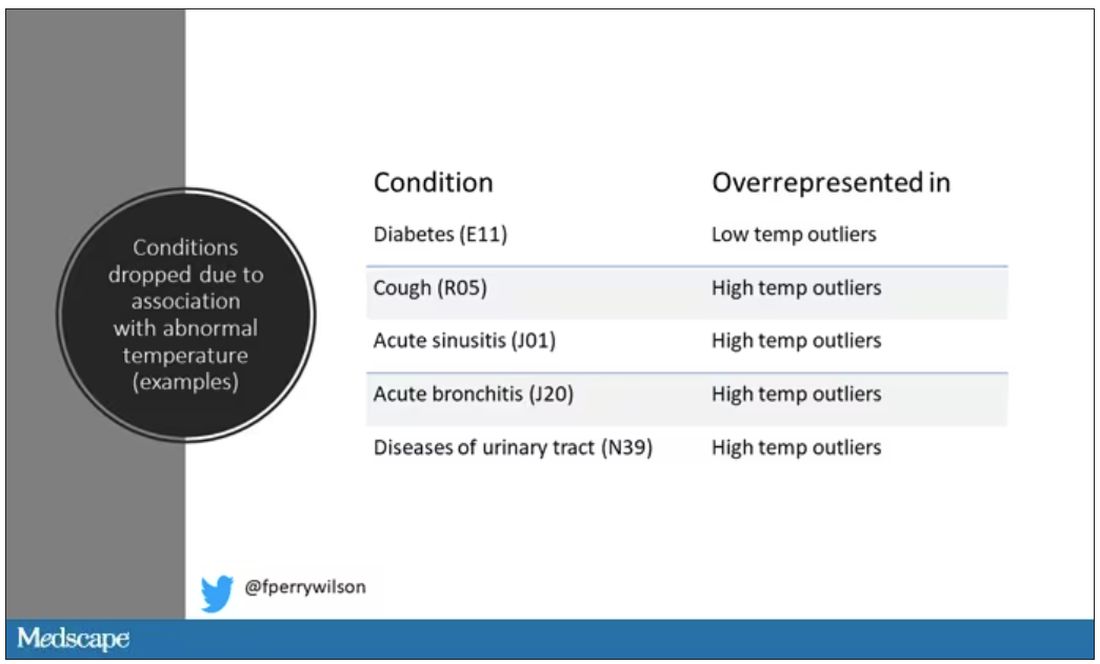

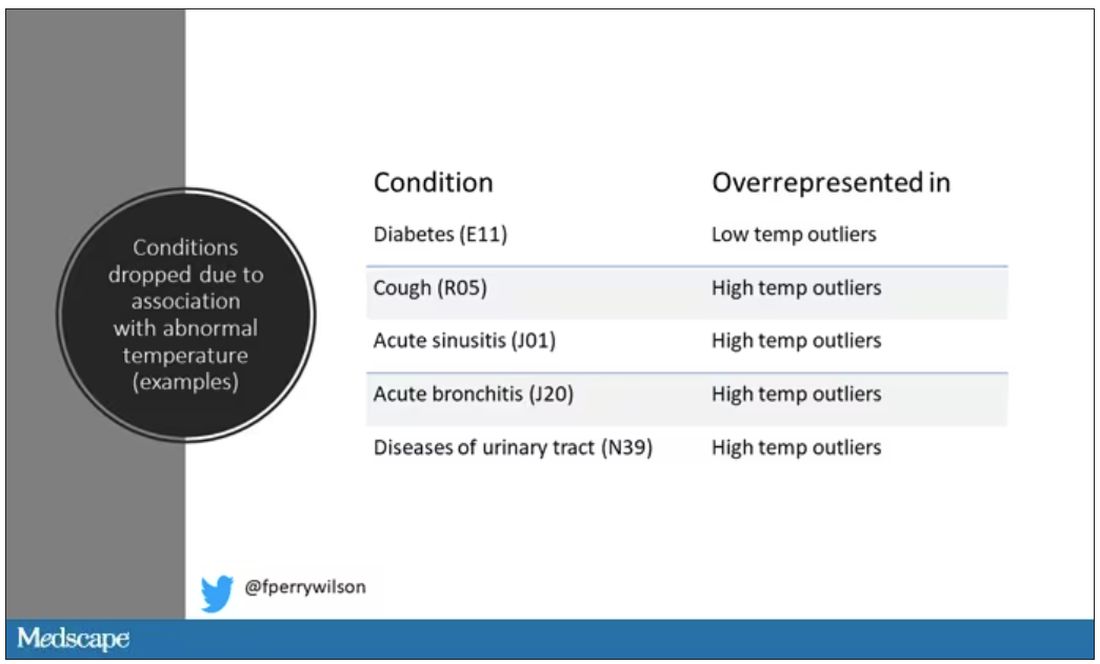

So, who was dropped? Well, a lot of people, actually. It turned out that diabetes was way overrepresented in the outlier group. Although 9.2% of the population had diabetes, 26% of people with very low temperatures did, so everyone with diabetes is removed from the dataset. While 5% of the population had a cough at their encounter, 7% of the people with very high temperature and 7% of the people with very low temperature had a cough, so everyone with cough gets thrown out.

The algorithm excluded people on antibiotics or who had sinusitis, urinary tract infections, pneumonia, and, yes, a diagnosis of “fever.” The list makes sense, which is always nice when you have a purely algorithmic classification system.

What do we have left? What is the real normal temperature? Ready?

It’s 36.64° C, or about 98.0° F.
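If you want to check the unit conversion yourself, the standard formula is Fahrenheit = Celsius × 9/5 + 32. A minimal sketch, with a helper name (`celsius_to_fahrenheit`) that is simply my own choice:

```python
def celsius_to_fahrenheit(celsius):
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32
```

Running 36.64 through it gives roughly 97.95, which rounds to the 98.0° F quoted above, while the textbook 37 gives 98.6.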

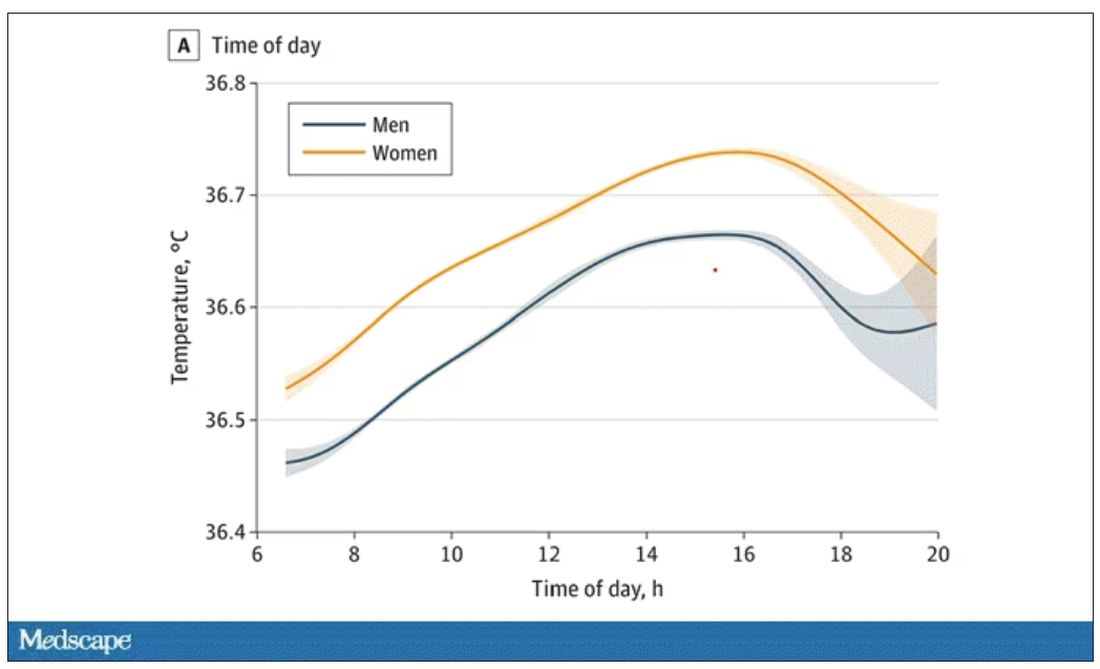

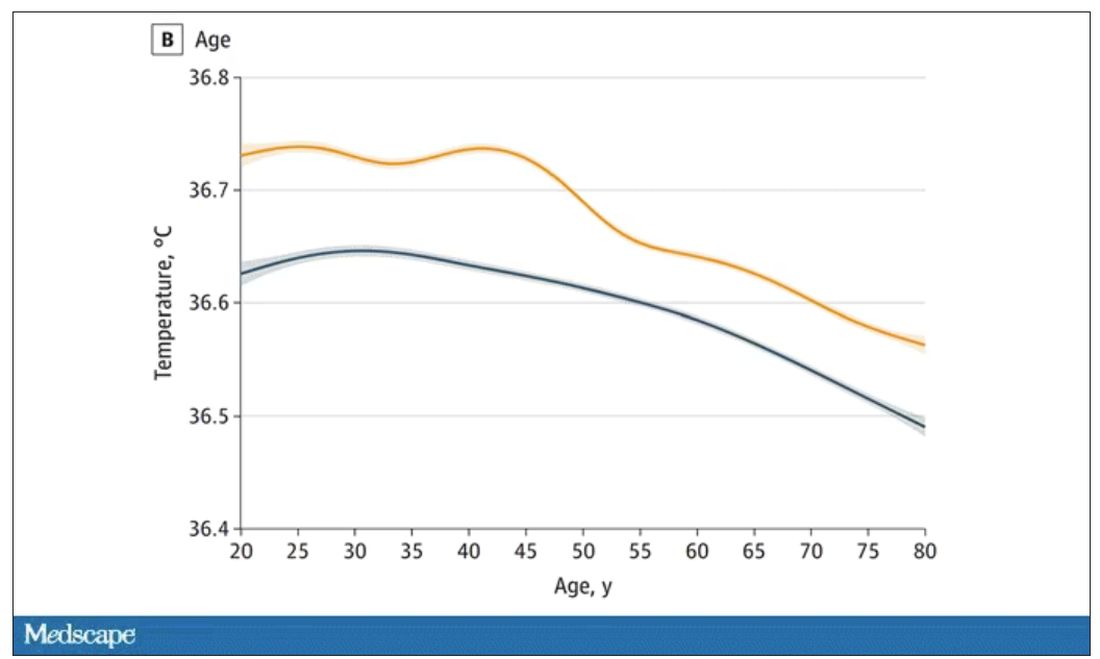

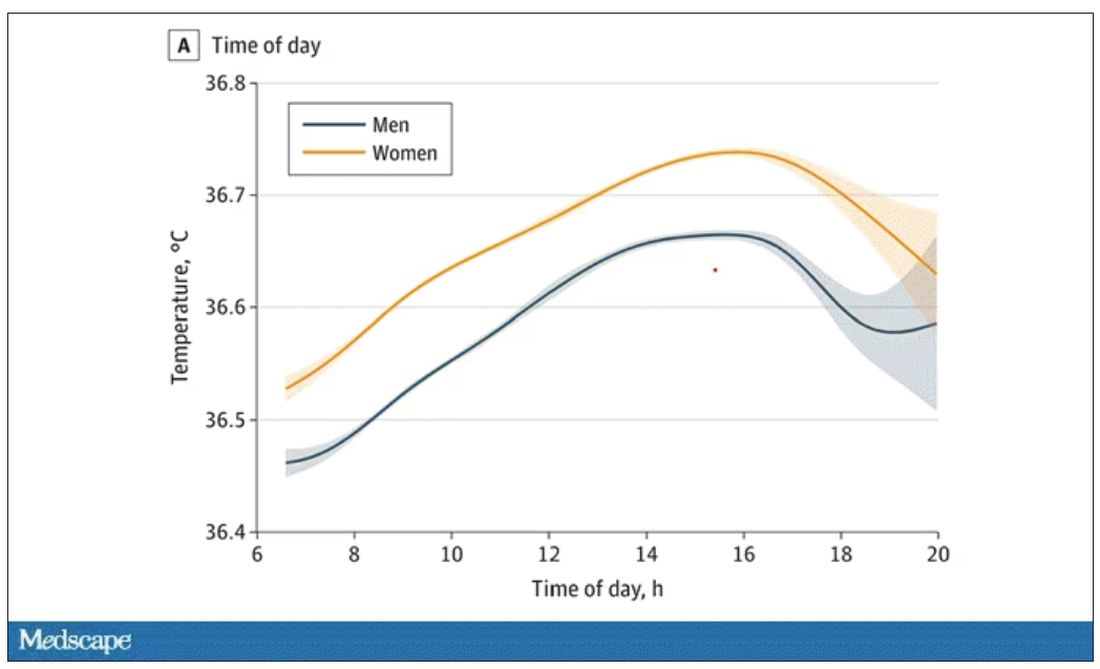

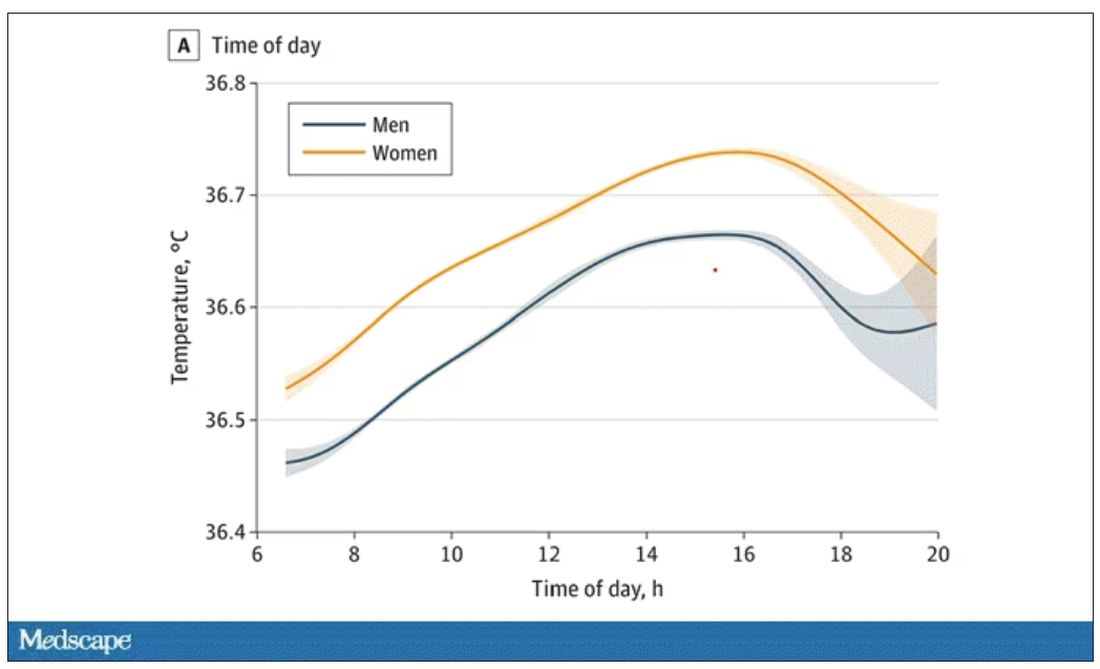

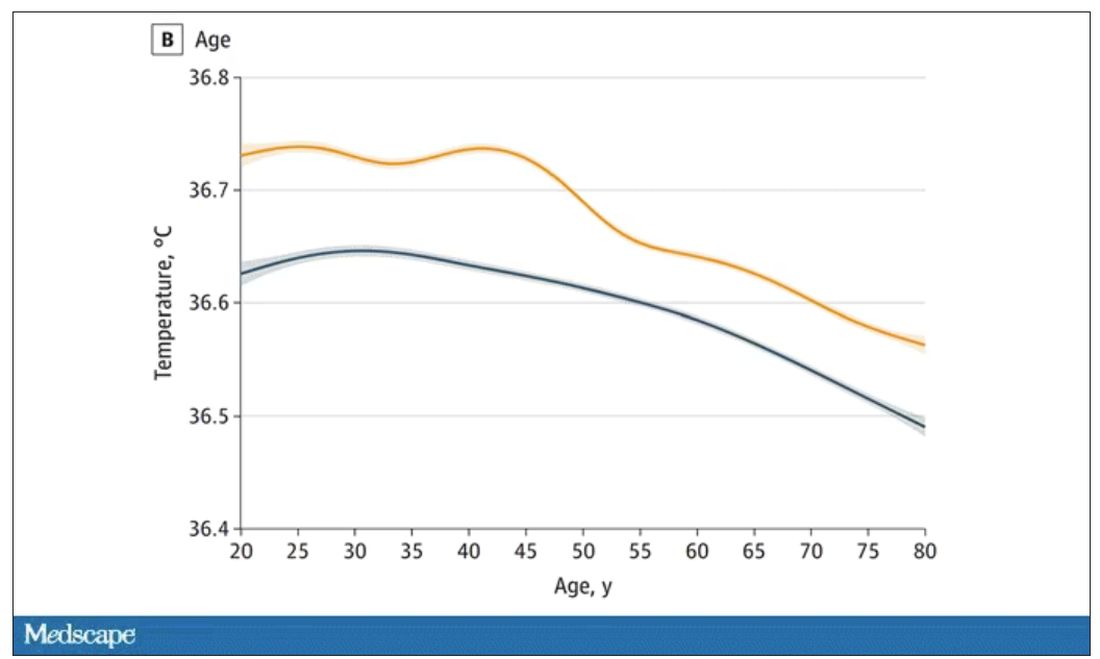

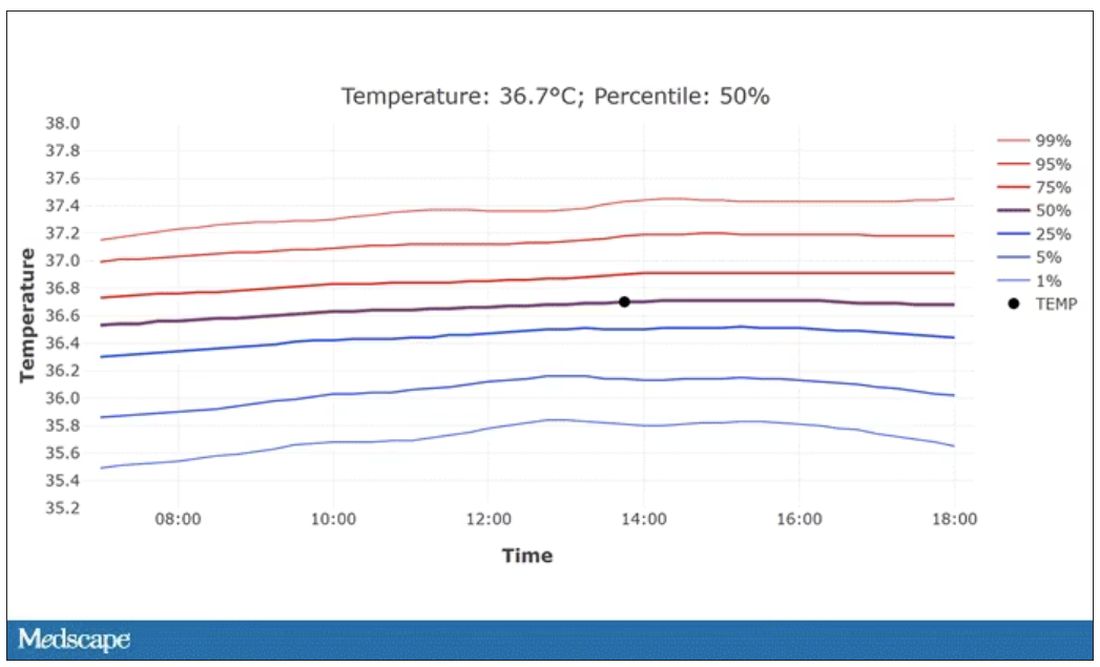

Of course, normal temperature varied depending on the time of day it was measured – higher in the afternoon.

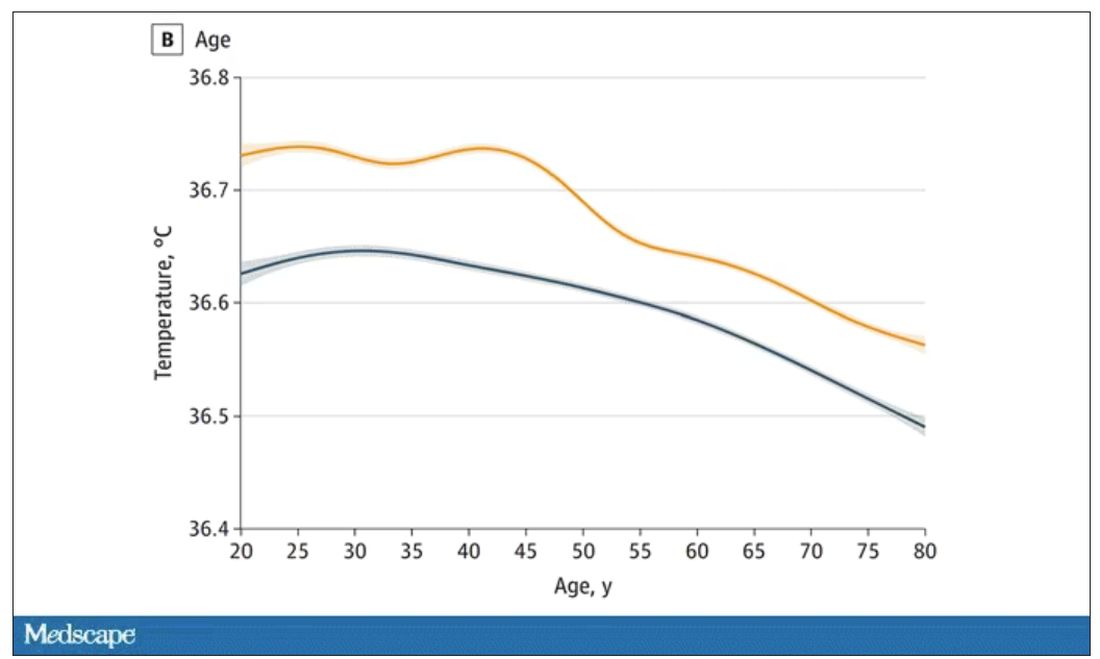

The normal temperature in women tended to be higher than in men. The normal temperature declined with age as well.

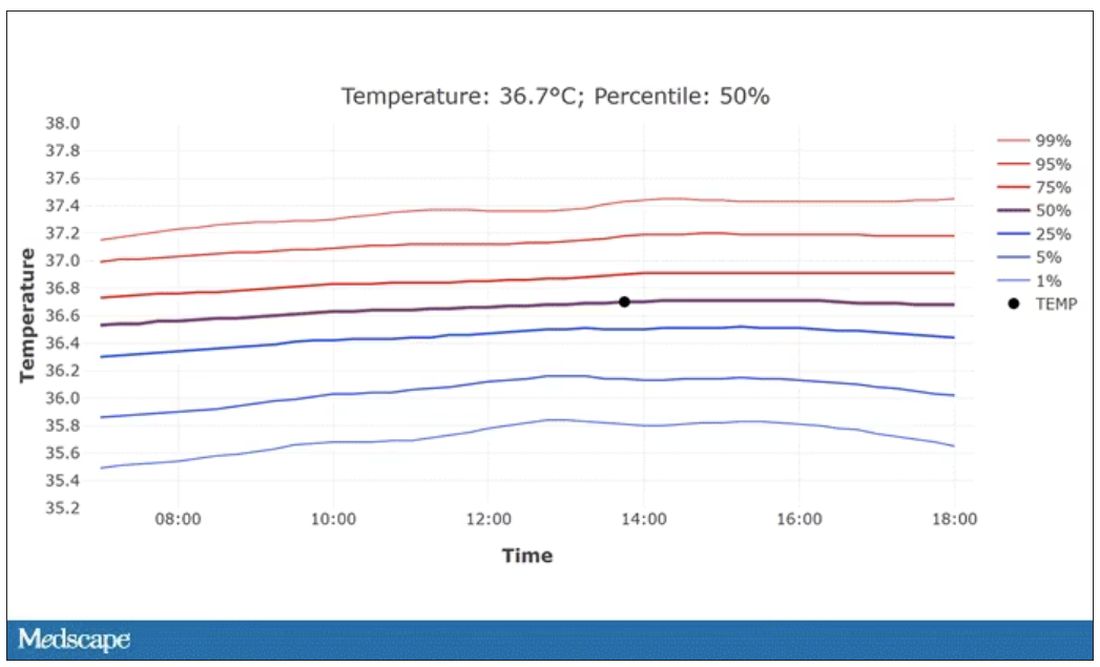

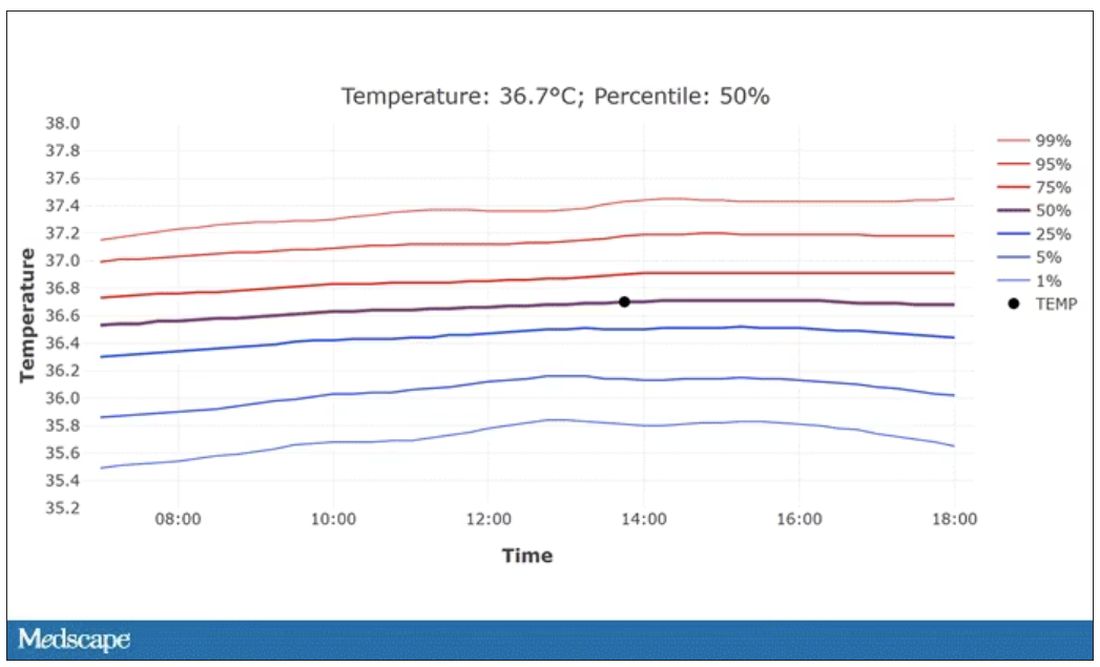

In fact, the researchers built a nice online calculator where you can enter your own, or your patient’s, parameters and calculate a normal body temperature for them. Here’s mine. My normal temperature at around 2 p.m. should be 36.7° C.

So, we’re all more cold-blooded than we thought. Is this just because of better methods? Maybe. But studies have actually shown that body temperature may be decreasing over time in humans, possibly because of the lower levels of inflammation we face in modern life (thanks to improvements in hygiene and antibiotics).

Of course, I’m sure some of you are asking yourselves whether any of this really matters. Is 37° C close enough?

Sure, this may be sort of puttering around the edges of physical diagnosis, but I think the methodology is really interesting and can obviously be applied to other broadly collected data points. These data also show us that thin, older individuals really do run cooler, and that we may need to pay more attention to a low-grade fever in that population than we otherwise would.

In any case, it’s time for a little re-education. If someone asks you what normal body temperature is, just say 36.6° C, 98.0° F. For his work in this area, I suggest we call it Wunderlich’s constant.

Dr. Wilson is associate professor of medicine and public health at Yale University, New Haven, Conn., and director of Yale’s Clinical and Translational Research Accelerator. He has no disclosures.

A version of this article appeared on Medscape.com.


The average temperature measured, of course, was 37° C.

We’re more than 150 years post-Wunderlich right now, and the average person in the United States might be quite a bit different from the average German in 1850. Moreover, we can do a lot better than just measuring a ton of people and taking the average, because we have statistics. The problem with measuring a bunch of people and taking the average temperature as normal is that you can’t be sure that the people you are measuring are normal. There are obvious causes of elevated temperature that you could exclude. Let’s not take people with a respiratory infection or who are taking Tylenol, for example. But as highlighted in this paper in JAMA Internal Medicine, we can do a lot better than that.

The study leverages the fact that body temperature is typically measured during all medical office visits and recorded in the ever-present electronic medical record.

Researchers from Stanford identified 724,199 patient encounters with outpatient temperature data. They excluded extreme temperatures – less than 34° C or greater than 40° C – excluded patients under 20 or above 80 years, and excluded those with extremes of height, weight, or body mass index.

You end up with a distribution like this. Note that the peak is clearly lower than 37° C.

But we’re still not at “normal.” Some people would be seeing their doctor for conditions that affect body temperature, such as infection. You could use diagnosis codes to flag these individuals and drop them, but that feels a bit arbitrary.

I really love how the researchers used data to fix this problem. They used a technique called LIMIT (Laboratory Information Mining for Individualized Thresholds). It works like this:

Take all the temperature measurements and then identify the outliers – the very tails of the distribution.

Look at all the diagnosis codes in those tails. Determine which diagnosis codes are overrepresented there compared with the population as a whole. Now you have a data-driven way to say that yes, these diagnoses are associated with weird temperatures. Next, eliminate everyone with those diagnoses from the dataset. What you are left with is a normal population, or at least a population that doesn’t have a condition that seems to meaningfully affect temperature.

So, who was dropped? Well, a lot of people, actually. It turned out that diabetes was way overrepresented in the outlier group. Although 9.2% of the population had diabetes, 26% of people with very low temperatures did, so everyone with diabetes is removed from the dataset. While 5% of the population had a cough at their encounter, 7% of the people with very high temperature and 7% of the people with very low temperature had a cough, so everyone with cough gets thrown out.
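To make the overrepresentation idea concrete, here is a minimal Python sketch using only the prevalence figures quoted above. The function names and the 1.2 cutoff are assumptions chosen for illustration; the study's actual threshold and statistical test are not described here, so treat this as a rough picture of the logic rather than the researchers' implementation.

```python
# Illustrative sketch only. Prevalence figures come from the text above;
# ENRICHMENT_CUTOFF is an assumed value, not the threshold used in the study.
ENRICHMENT_CUTOFF = 1.2


def enrichment(tail_prevalence: float, overall_prevalence: float) -> float:
    """Ratio of a diagnosis's prevalence in a tail to its overall prevalence."""
    return tail_prevalence / overall_prevalence


def is_overrepresented(tails: dict, overall: float,
                       cutoff: float = ENRICHMENT_CUTOFF) -> bool:
    """Flag a diagnosis if it is enriched in at least one temperature tail."""
    return any(enrichment(p, overall) >= cutoff for p in tails.values())


# Only the tails mentioned in the text are listed for each diagnosis.
diagnoses = {
    "diabetes": {"overall": 0.092, "tails": {"low": 0.26}},
    "cough": {"overall": 0.05, "tails": {"low": 0.07, "high": 0.07}},
}

# Diagnoses that would be dropped from the "normal" population.
excluded = [
    name for name, d in diagnoses.items()
    if is_overrepresented(d["tails"], d["overall"])
]

for name in excluded:
    d = diagnoses[name]
    ratios = {tail: round(enrichment(p, d["overall"]), 2)
              for tail, p in d["tails"].items()}
    print(name, ratios)
```

With these numbers, diabetes is roughly 2.8 times as common in the low-temperature tail as in the population overall, and cough is about 1.4 times as common in either tail, so both would be flagged under the assumed cutoff.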

The algorithm excluded people on antibiotics or who had sinusitis, urinary tract infections, pneumonia, and, yes, a diagnosis of “fever.” The list makes sense, which is always nice when you have a purely algorithmic classification system.

What do we have left? What is the real normal temperature? Ready?

It’s 36.64° C, or about 98.0° F.
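For anyone who wants to check the conversion, here is a small Python sketch of the standard Celsius-to-Fahrenheit formula applied to the two values in play. The function name is just a label chosen for this example.

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Standard conversion: multiply by 9/5, then add 32."""
    return celsius * 9 / 5 + 32


new_normal_f = celsius_to_fahrenheit(36.64)   # the study's estimate
old_normal_f = celsius_to_fahrenheit(37.0)    # Wunderlich's figure

print(round(new_normal_f, 1))
print(round(old_normal_f, 1))
print(round(old_normal_f - new_normal_f, 2))  # gap between the two, in °F
```

The gap between the old and new figures works out to a bit more than half a degree Fahrenheit.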

Of course, normal temperature varied depending on the time of day it was measured – higher in the afternoon.

The normal temperature in women tended to be higher than in men. The normal temperature declined with age as well.

In fact, the researchers built a nice online calculator where you can enter your own, or your patient’s, parameters and calculate a normal body temperature for them. Here’s mine. My normal temperature at around 2 p.m. should be 36.7° C.

So, we’re all more cold-blooded than we thought. Is this just because of better methods? Maybe. But studies have actually shown that body temperature may be decreasing over time in humans, possibly because of the lower levels of inflammation we face in modern life (thanks to improvements in hygiene and antibiotics).

Of course, I’m sure some of you are asking yourselves whether any of this really matters. Is 37° C close enough?

Sure, this may be sort of puttering around the edges of physical diagnosis, but I think the methodology is really interesting and can obviously be applied to other broadly collected data points. And these data show us that thin, older individuals really do run cooler, and that we may need to pay more attention to a low-grade fever in that population than we otherwise would.

In any case, it’s time for a little re-education. If someone asks you what normal body temperature is, just say 36.6° C, 98.0° F. For his work in this area, I suggest we call it Wunderlich’s constant.

Dr. Wilson is associate professor of medicine and public health at Yale University, New Haven, Conn., and director of Yale’s Clinical and Translational Research Accelerator. He has no disclosures.

A version of this article appeared on Medscape.com.

‘Decapitated’ boy saved by surgery team

This transcript has been edited for clarity.

F. Perry Wilson, MD, MSCE: I am joined today by Dr. Ohad Einav. He’s a staff surgeon in orthopedics at Hadassah Medical Center in Jerusalem. He’s with me to talk about an absolutely incredible surgical case, something that is terrifying to most non–orthopedic surgeons and I imagine is fairly scary for spine surgeons like him as well. But what we don’t have is information about how this works from a medical perspective. So, first of all, Dr. Einav, thank you for taking time to speak with me today.

Ohad Einav, MD: Thank you for having me.

Dr. Wilson: Can you tell us about Suleiman Hassan and what happened to him before he came into your care?

Dr. Einav: Hassan is a 12-year-old child who was riding his bicycle on the West Bank, about 40 minutes from here. Unfortunately, he was involved in a motor vehicle accident and he suffered injuries to his abdomen and cervical spine. He was transported to our service by helicopter from the scene of the accident.

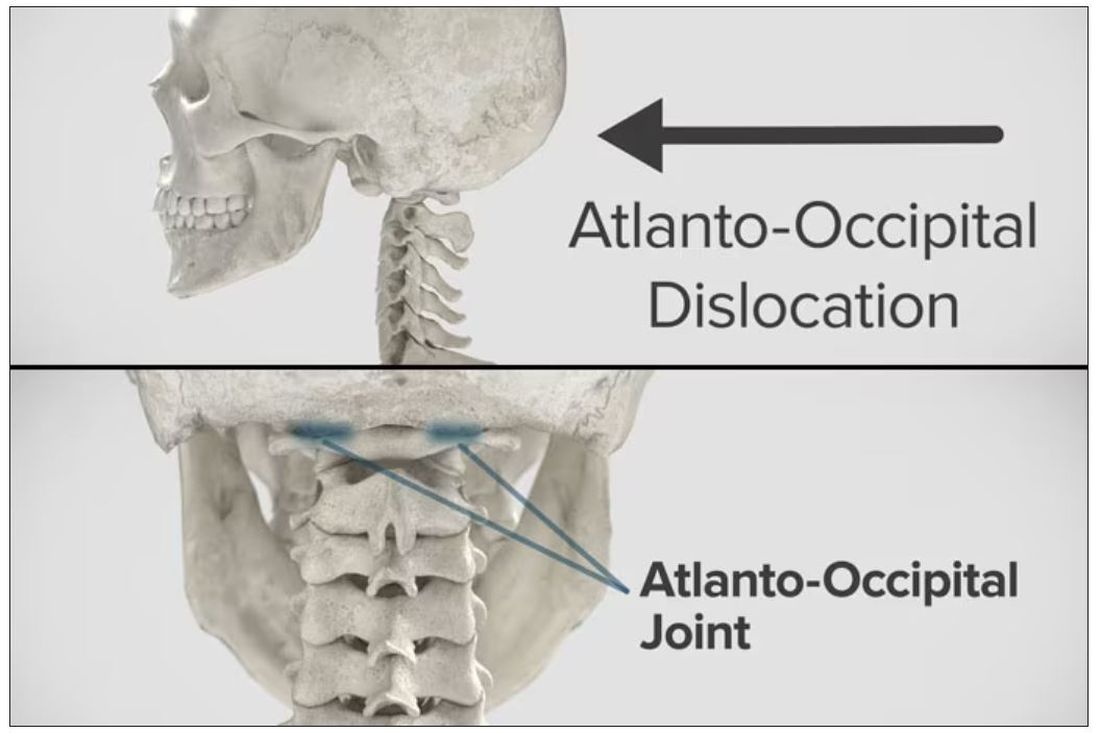

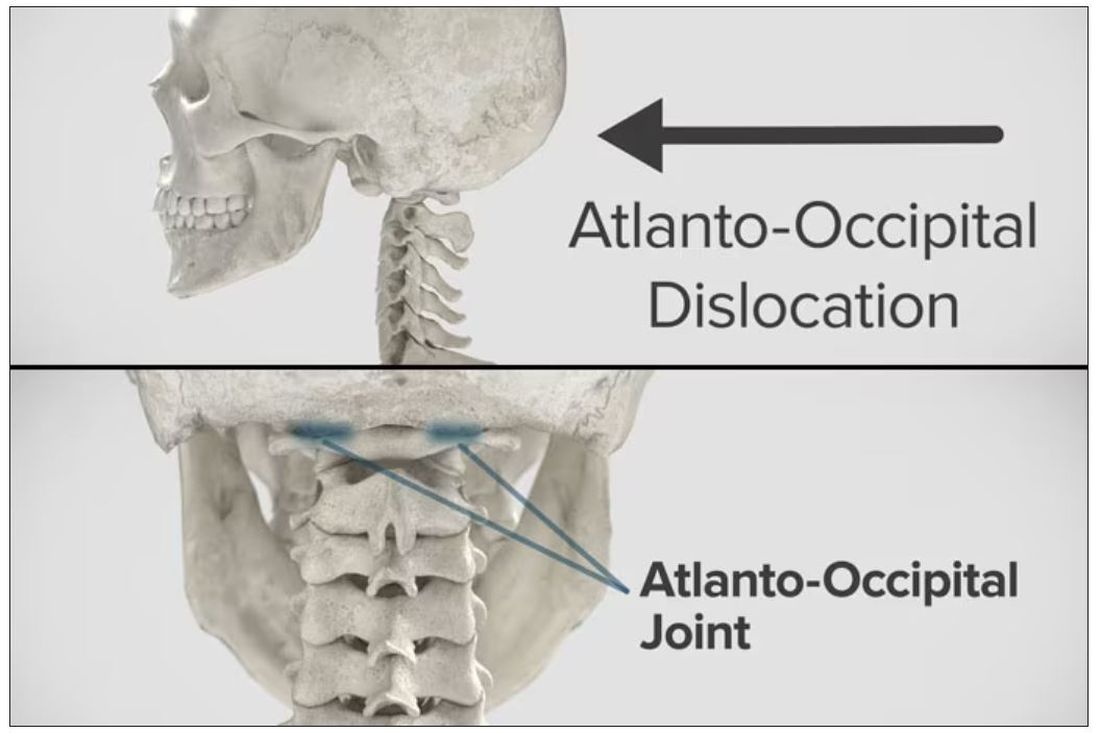

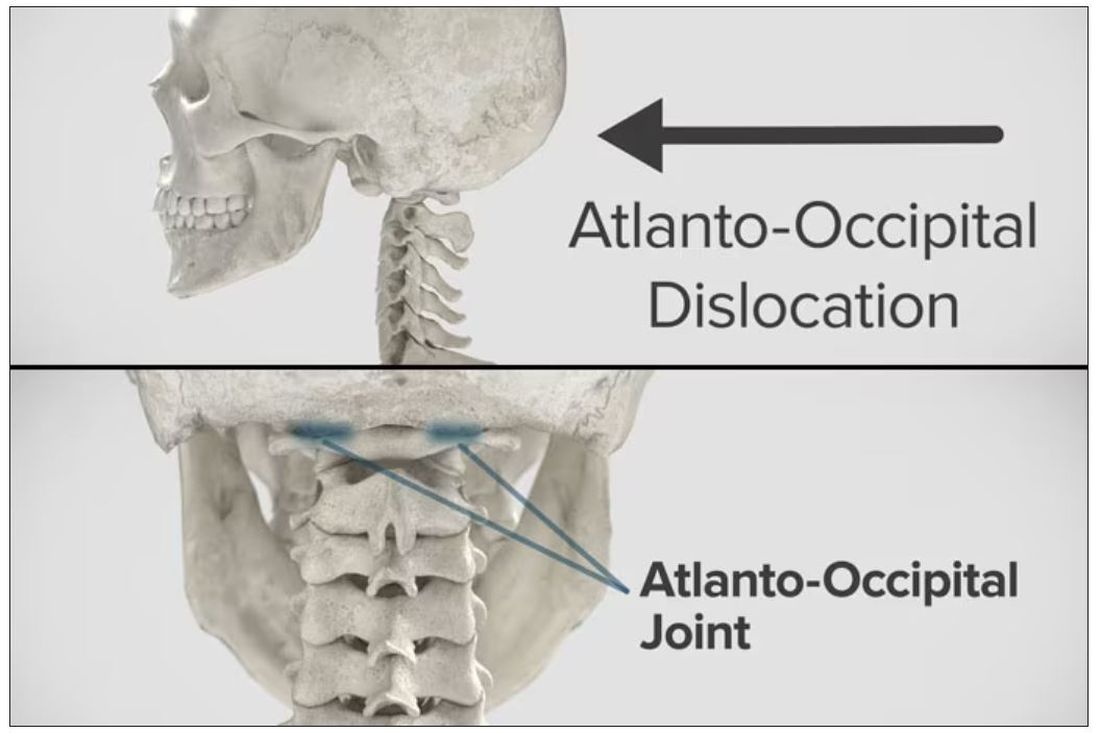

Dr. Wilson: “Injury to the cervical spine” might be something of an understatement. He had what’s called atlanto-occipital dislocation, colloquially often referred to as internal decapitation. Can you tell us what that means? It sounds terrifying.

Dr. Einav: It’s an injury to the ligaments between the occiput and the upper cervical spine, with or without bony fracture. The atlanto-occipital joint is formed by the superior articular facet of the atlas and the occipital condyle, stabilized by an articular capsule between the head and neck, and is supported by various ligaments around it that stabilize the joint and allow joint movements, including flexion, extension, and some rotation in the lower levels.

Dr. Wilson: This joint has several degrees of freedom, which means it needs a lot of support. With this type of injury, where essentially you have severing of the ligaments, is it usually survivable? How dangerous is this?

Dr. Einav: The mortality rate is 50%-60%, depending on the primary impact, the injury, transportation later on, and then the surgery and surgical management.

Dr. Wilson: Tell us a bit about this patient’s status when he came to your medical center. I assume he was in bad shape.

Dr. Einav: Hassan arrived at our medical center with a Glasgow Coma Scale score of 15. He was fully conscious. He was hemodynamically stable except for a bad laceration on his abdomen. He had a Philadelphia collar around his neck. He was transported by chopper because the paramedics suspected that he had a cervical spine injury and decided to bring him to a Level 1 trauma center.

He was monitored and we treated him according to the ATLS [advanced trauma life support] protocol. He didn’t have any gross sensory deficits, but he was a little confused about the whole situation and the accident. Therefore, we could do a general examination but we couldn’t rely on that regarding any sensory deficit that he may or may not have. We decided as a team that it would be better to slow down and control the situation. We decided not to operate on him immediately. We basically stabilized him and made sure that he didn’t have any traumatic internal organ damage. Later on we took him to the OR and performed surgery.

Dr. Wilson: It’s amazing that he had intact motor function, considering the extent of his injury. The spinal cord was spared somewhat during the injury. There must have been a moment when you realized that this kid, who was conscious and could move all four extremities, had a very severe neck injury. Was that due to a CT scan or physical exam? And what was your feeling when you saw that he had atlanto-occipital dislocation?

Dr. Einav: As a surgeon, you have a gut feeling in regard to the general examination of the patient. But I never rely on gut feelings. On the CT, I understood exactly what he had, what we needed to do, and the time frame.

Dr. Wilson: You’ve done these types of surgeries before, right? Obviously, no one has done a lot of them because this isn’t very common. But you knew what to do. Did you have a plan? Where does your experience come into play in a situation like this?

Dr. Einav: I graduated from the spine program of the University of Toronto, where I did a fellowship in trauma of the spine and complex spine surgery. I had very good teachers, and during my fellowship I treated a few cases in older patients that were similar but not the same. Therefore, I knew exactly what needed to be done.

Dr. Wilson: For those of us who aren’t surgeons, take us into the OR with you. This is obviously an incredibly delicate procedure. You are high up in the spinal cord at the base of the brain. The slightest mistake could have devastating consequences. What are the key elements of this procedure? What can go wrong here? What is the number-one thing you have to look out for when you’re trying to fix an internal decapitation?

Dr. Einav: The key element in surgeries of the cervical spine – trauma and complex spine surgery – is planning. I never go to the OR without knowing what I’m going to do. I have a few plans – plan A, plan B, plan C – in case something fails. So, I definitely know what the next step will be. I always think about the surgery a few hours before, if I have time to prepare.

The second thing that is very important is teamwork. The team needs to be coordinated. Everybody needs to know what their job is. With these types of injuries, it’s not the time for rookies. If you are new, please stand back and let the more experienced people do that job. I’m talking about surgeons, nurses, anesthesiologists – everyone.

Another important thing in planning is choosing the right hardware. For example, in this case we had a problem because most of the hardware is designed for adults, and we had to improvise because there isn’t a lot of hardware on the market for the pediatric population. The adult plates and screws are too big, so we had to improvise.

Dr. Wilson: Tell us more about that. How do you improvise spinal hardware for a 12-year-old?

Dr. Einav: In this case, I chose to use hardware from one of the companies that works with us.

You can see in this model the area of the injury, and the area that we worked on. To perform the surgery, I had to use some plates and rods from a different company. This company’s (NuVasive) hardware has a small attachment to the skull, which was helpful for affixing the skull to the cervical spine, instead of using a big plate that would sit at the base of the skull and would not be very good for him. Most of the hardware is made for adults and not for kids.

Dr. Wilson: Will that hardware preserve the motor function of his neck? Will he be able to turn his head and extend and flex it?

Dr. Einav: The injury leads to instability and destruction of both articulations between the head and neck. Therefore, those articulations won’t be able to function the same way in the future. There is a decrease of something like 50% of the flexion and extension of Hassan’s cervical spine. I decided that in this case there would be no chance of saving Hassan’s motor function unless we performed a fusion between the head and the neck, and that this would be the best procedure with the best survival rate. So, in the future, he will have some diminished flexion, extension, and rotation of his head.

Dr. Wilson: How long did his surgery take?

Dr. Einav: To be honest, I don’t remember. But I can tell you that it took us time. It was very challenging to coordinate with everyone. The most problematic part of the surgery to perform is what we call “flip-over.”