
Minimum 5-Year Follow-up of Articular Surface Replacement Acetabular Components Used in Total Hip Arthroplasty

ABSTRACT

The articular surface replacement (ASR) monoblock metal-on-metal acetabular component was recalled due to a higher-than-expected early failure rate. We evaluated the survivorship of the device and variables that may be predictive of failure at a minimum of 5-year follow-up. A single-center, single-surgeon retrospective review was conducted in patients who received the DePuy Synthes ASR™ XL Acetabular hip system from December 2005 to November 2009. Mean values and percentages were calculated and compared using the Fisher’s exact test, simple logistic regression, and Student’s t-test. The significance level was P ≤ .05. This study included 29 patients (24 males, 5 females) with 32 ASR™ XL acetabular hip systems. Mean age and body mass index (BMI) were 55.2 years and 28.9 kg/m², respectively. Mean postoperative follow-up was 6.2 years. A total of 2 patients (6.9%) died of an unrelated cause and 1 patient was lost to follow-up (3.4%), leaving 26 patients with 28 hip replacements, all of whom were available for follow-up. The 5-year revision rate was 34.4% (10 patients with 11 hip replacements). Mean time to revision was 3.1 years. Age (P = .76), gender (P = .49), BMI (P = .29), acetabular component abduction angle (P = .12), and acetabulum size (P = .59) were not associated with an increased rate of hip failure. Blood cobalt (7.6 vs 6.8 µg/L, P = .58) and chromium (5.0 vs 2.2 µg/L, P = .31) levels were not significantly higher in the revised group when compared with those of the unrevised group. In the revised group, a 91% decrease in cobalt and 78% decrease in chromium levels were observed at a mean of 6 months following the revision. This study demonstrates a high rate of failure of ASR acetabular components used in total hip arthroplasty at a minimum of 5 years of follow-up. No variable that was predictive of failure could be identified in this series. Close clinical surveillance of these patients is required.


Metal-on-metal (MoM) articulations have been widely explored as an alternative to polyethylene bearings in total hip arthroplasty (THA), with proposed benefits including improved range of motion, lower dislocation rates, and enhanced durability.1 Comprising cobalt and chromium, these MoM bearings gained widespread popularity in the United States, particularly in younger and more active patients looking for longer lasting devices.

The articular surface replacement (ASR) acetabular system (DePuy Synthes) was approved for sale by the US Food and Drug Administration in 2003 and implanted in an estimated 93,000 cases.2 Since then, however, the early failure rate of the prosthesis has been well documented,3-5 leading to a formal global product recall in August 2010. The Australian Orthopaedic Association National Joint Replacement Registry (AOANJRR) was among the first to report a 6.4% rate of failure of the device at 3 years when inserted with a Corail stem.6 An acceptable upper rate of hip prosthesis failure is considered to be 1% per year, with the majority of implants reporting well below this value. A 10.9% failure rate at 5 years was documented when the prosthesis was inserted for resurfacing. The National Joint Registry of England and Wales confirmed these findings and observed a 13% and 12% rate of failure at 5 years for the acetabular and resurfacing systems, respectively.2 With the notable failure of the ASR system, this study reports our single-center 5-year survivorship experience and evaluates any variable that might be predictive of an early failure to aid in patient counseling.

METHODS

A single-center, single-surgeon, retrospective review of a consecutive series of patients was performed from December 2005 to November 2009. This study included all patients who underwent a primary THA with a DePuy Synthes ASR™ XL Acetabular hip system. No patients were excluded. Institutional Review Board approval was obtained. Patient demographics comprising age, gender, and body mass index (BMI) were recorded. The primary endpoint of this study was the 5-year survivorship rate. Secondary endpoints included duration to revision surgery, blood cobalt and chromium levels, time interval of blood ion tests, acetabulum size, acetabular component abduction angle, and duration of follow-up.

Candidates for the ASR™ XL Acetabular hip system included young patients and/or those considered to be physically active. In a select few, ASR devices were implanted upon patient request.

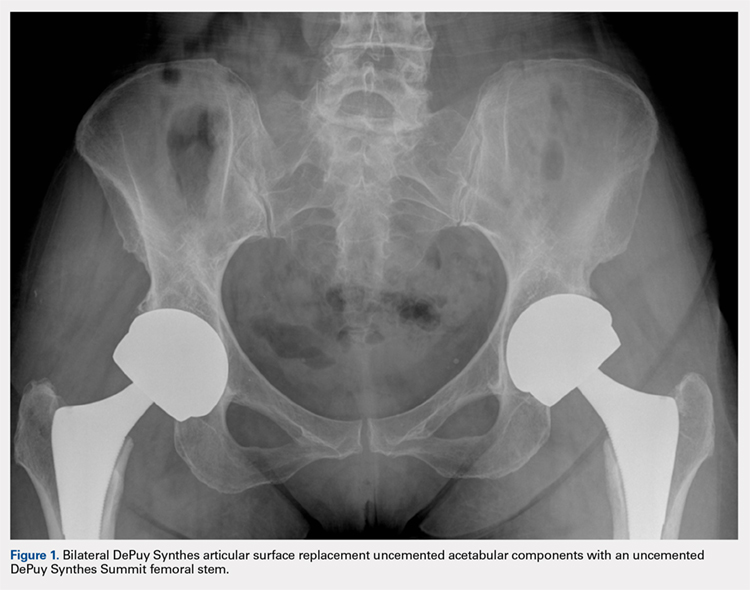

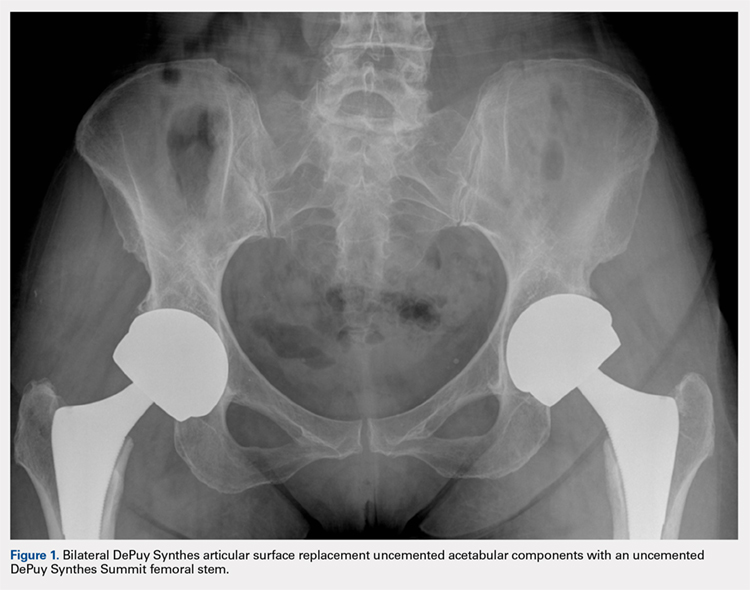

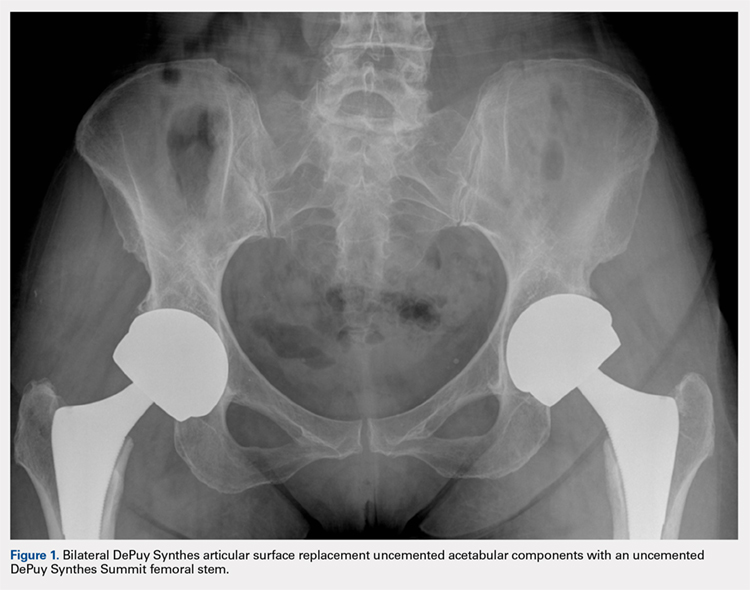

All patients underwent primary total hip replacement with a DePuy Synthes ASR™ XL uncemented acetabular component and an uncemented femoral stem (DePuy Synthes, Summit, or Tri-Lock) inserted via a standard posterior approach (Figure 1). Acetabulum sizes ranged from 52 mm to 68 mm in diameter.

All patients were followed up yearly in the outpatient setting. Routine (yearly) metal-ion level sampling (whole blood) was started in 2010 for all patients. Laboratory tests were conducted at a single laboratory (Lab Corp.). Abduction cup inclination angles were measured by the providing surgeon using digital radiology software (GE Centricity systems).

The Student’s t-test was used to compare mean values (such as age, BMI, and metal ion levels) between the failure and no-failure groups. The 2-sided Fisher’s exact test analyzed differences in gender. Simple logistic regression analyzed variables associated with the failure group. Significance was P ≤ .05.
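As an illustration of the group comparison described above, the following minimal sketch computes a Student's t statistic from summary statistics alone. It assumes that the ± values in the Table are standard deviations, that the group sizes in the Table header (n = 20 and n = 12) apply to the variable, and that equal variances are assumed; the function name `pooled_t` is hypothetical and is not taken from the study. Converting the statistic to a P value requires the t distribution and is omitted here.

```python
import math


def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Student's t statistic (equal variances assumed) from summary statistics.

    Returns the t statistic and the degrees of freedom.
    """
    df = n_a + n_b - 2
    # Pooled variance: each group's variance weighted by its degrees of freedom.
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    # Standard error of the difference between the two group means.
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / se, df


# Age (years) as reported in the Table:
# no failure 55.4 +/- 6.4 (n = 20), failure 54.7 +/- 6.3 (n = 12).
t_age, df_age = pooled_t(55.4, 6.4, 20, 54.7, 6.3, 12)
print(f"age: t = {t_age:.2f}, df = {df_age}")
```

A t statistic this close to zero on 30 degrees of freedom corresponds to a large P value, in line with the non-significant result reported for age.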


RESULTS

A total of 29 patients (24 males, 5 females) with 32 ASR hip replacements were included in this study. Indications for surgery comprised osteoarthritis (28 hips, 87.5%) and avascular necrosis of the hip (4 hips, 12.5%). Mean age and BMI were 55.2 years and 28.9 kg/m², respectively. A total of 2 patients (6.9%) died of an unrelated cause (1 myocardial infarct, 1 suicide), and 1 patient was lost to follow-up (3.4%), leaving 26 patients with 28 hip replacements, all of whom finished a 5-year minimum follow-up.

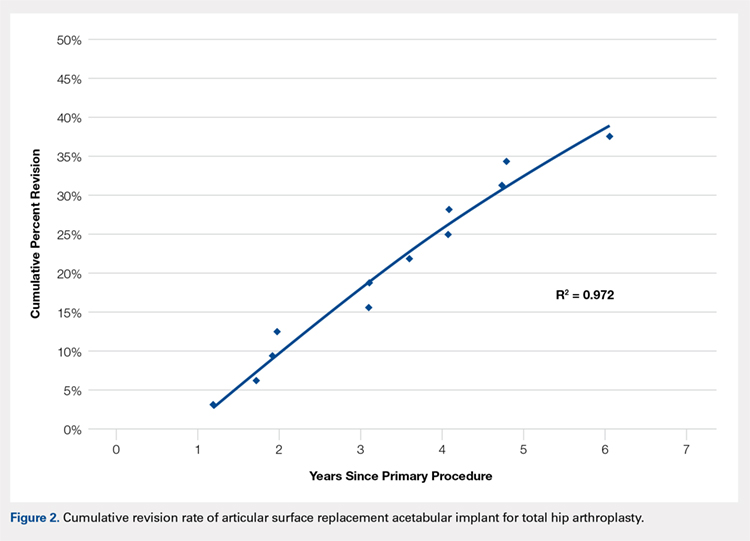

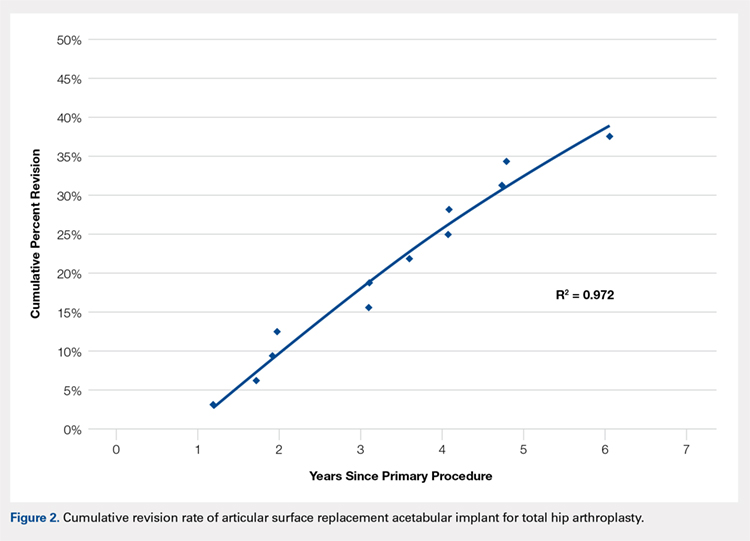

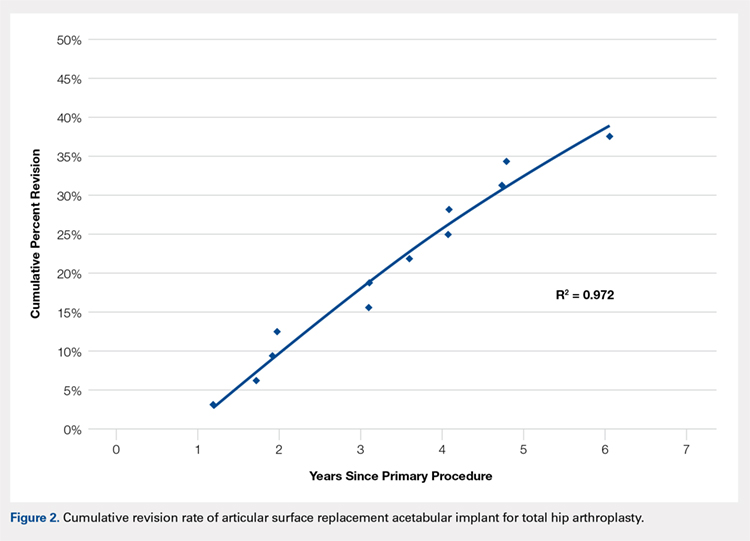

No implant failures were noted in the first year. The 5-year revision rate was 34.4% (10 patients with 11 hip replacements). Mean time to revision for this subgroup was 3.1 years. Overall, an implant failure was observed in 37.5% of hips (11 patients with 12 hip replacements) at a mean postoperative follow-up of 6.2 years (Figure 2). Indications for implant revision were pain in 11 (91.7%) cases and infection in 1 (8.3%).
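The rates in this paragraph can be recomputed from the reported counts. The short sketch below assumes that all 32 implanted hips form the denominator for the revision rates, which is what the reported 34.4% and 37.5% imply, and that the 12 revised hips form the denominator for the revision indications; the helper `pct` and the constant names are illustrative only.

```python
def pct(count, total):
    """Percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


TOTAL_HIPS = 32            # hips implanted in the series
REVISED_BY_5_YEARS = 11    # hips revised within 5 years
REVISED_OVERALL = 12       # hips revised by final follow-up
REVISED_FOR_PAIN = 11      # revisions indicated by pain
REVISED_FOR_INFECTION = 1  # revisions indicated by infection

print("5-year revision rate (%):", pct(REVISED_BY_5_YEARS, TOTAL_HIPS))
print("overall failure rate (%):", pct(REVISED_OVERALL, TOTAL_HIPS))
print("revised for pain (%):", pct(REVISED_FOR_PAIN, REVISED_OVERALL))
print("revised for infection (%):", pct(REVISED_FOR_INFECTION, REVISED_OVERALL))
```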

Of the 11 hips revised due to pain, 9 were performed by the original surgeon (8 were completed with primary acetabular components, 1 with a revision shell). Figure 3 shows a bilateral revision performed with primary acetabular components and retained DePuy Synthes Pinnacle femoral stems. In all these cases except 1, the ASR component was grossly loose. One case presented with pseudotumor and impingement between the femoral prosthetic neck and acetabular component after migration of a loose component. After revision, the patient returned with substantial anterior hip pain and heterotopic ossification, and failed conservative treatment, requiring another surgery with prosthesis retention, removal of heterotopic ossification, and iliopsoas lengthening. The surgery successfully relieved the symptoms. No other patients required additional surgery after their revision. In comparison to the original ASR component, the revision shell was 2 to 4 mm larger in diameter. No patient required component revision at a mean of 2.9 years after the revision surgery.

The patient with secondary revision developed a hematogenous streptococcal infection after a dental procedure performed without prophylactic antibiotics. The patient was initially lost to follow-up after the primary surgery and reported no antecedent pain prior to the revision. A substantial metal fluid collection was identified in the hip at the time of débridement, without component loosening. After débridement, the patient developed persistent metal-stained wound drainage, necessitating ultimate successful treatment with a 2-stage exchange procedure.

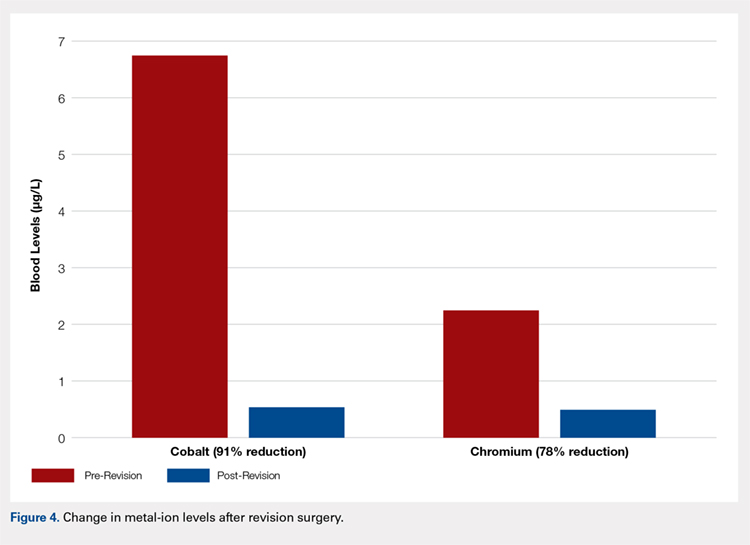

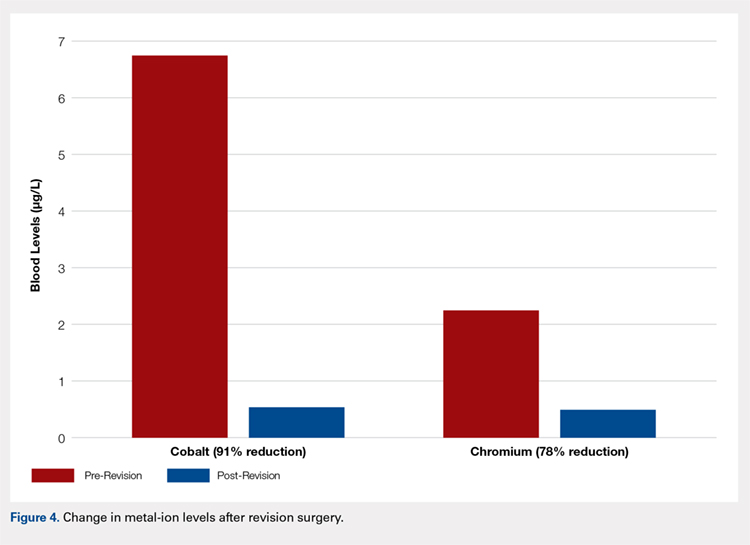

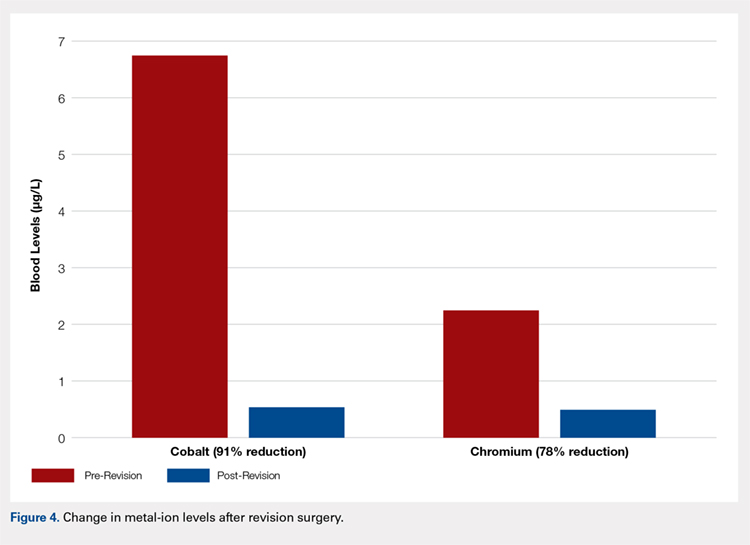

Age (P = .76), gender (P = .49), BMI (P = .29), acetabular component abduction angle (P = .12), and acetabulum size (P = .59) were not associated with an increased rate of hip failure (Table). Blood cobalt (7.6 vs 6.8 µg/L, P = .58) and chromium (5.0 vs 2.2 µg/L, P = .31) levels were not significantly higher in the revised group when compared with those of the unrevised group. The upper limits of blood cobalt and chromium levels were 18.9 and 15.9 µg/L for the revised group and 16.8 and 5.4 µg/L for the non-revised group, respectively. In the revised group, a 91% decrease in cobalt and 78% decrease in chromium levels were observed at a mean of 6 months after the revision (Figure 4).

Table. Variables Not Associated with Early ASR Failure

| Variable | No Failure (n = 20) | Failure (n = 12) | P value |
| --- | --- | --- | --- |
| Age (years) | 55.4 ± 6.4 | 54.7 ± 6.3 | .76 |
| BMI (kg/m²) | 29.7 ± 6.7 | 27.4 ± 4.0 | .29 |
| Gender | | | .49 |
| Female | 3 (15%) | 3 (25%) | |
| Male | 17 (85%) | 9 (75%) | |
| Acetabulum size (mm) | 59.1 ± 3.9 | 58.3 ± 3.8 | .59 |
| Abduction angle (degrees) | 44.9 ± 4.5 | 42.3 ± 3.8 | .12 |
| Serum levels (µg/L) | | | |
| Cobalt | 6.8 ± 6.0 | 7.6 ± 4.7 | .58 |
| Chromium | 2.2 ± 1.7 | 5.0 ± 5.0 | .31 |


DISCUSSION

According to the Centers for Disease Control and Prevention, 310,800 total hip replacements were performed among inpatients aged 45 years and older in the US in 2010.7 Specifically, in the 55- to 64-year-old age group, the number of procedures performed tripled from 2000 through 2010. As younger and more active patients opt for hip replacements, the need for prostheses with enhanced durability is growing.

Despite the early proposed advantages of large-head MoM bearings, our retrospective study of the DePuy Synthes ASR™ XL Acetabular hip system yielded 15.6% and 34.4% failure rates at 3 and 5 years, respectively. These higher-than-expected rates of failure are consistent with published data. The British Hip Society reported a 21% to 35% revision rate at 4 years and 49% at 6 years for the ASR XL prosthesis.8 In comparison, other MoM prostheses, on average, report a 12% to 15% rate of failure at 5 years.

Considerable controversy surrounds the causes of adverse wear failure in MoM bearings.9,10 The non-modular design of the ASR prostheses is frequently implicated as a cause of early failure. The lack of a central hole in the 1-piece component compromises the tactile feel of insertion, thereby reducing the surgeon’s ability to assess complete seating.11 This condition may potentially increase the abduction angle at the time of insertion. Screw fixation of the non-modular device is not possible. The ASR XL device (148° to 160°) is less than a hemisphere (180°) in size and hence features a diminished functional articular surface, further compromising implant fixation.11 The functional articular surface is defined as the optimal surface area (10 mm) needed for a MoM implant.12 Griffin and colleagues13 reported that a 48 mm ASR XL component, when implanted at 45° of abduction, functions similarly to an implant at 59° of abduction, leading to diminished lubrication, metallosis, and edge loading. The version of the acetabular component may similarly and adversely affect implant wear characteristics. Furthermore, the variable thickness of the implant, which is thicker at the dome and thinner at the rim, may further promote edge loading by shifting the center of rotation of the femoral head out from the center of the acetabular prosthesis.11 Studies have also shown that increased wear of the MoM articulation is associated with an acetabular component inclination angle in excess of 55°10,14 and a failure of fixation at time of implantation.15 This study, however, found no correlation between the abduction angle and risk of early implant failure for the ASR acetabular component. Likewise, no correlation was detected between the acetabulum size and revision surgery.

The AOANJRR reported loosening (44%), infection (20%), metal sensitivity (12%), fracture (9%), and dislocation of prosthesis (7%) as the indications for revision surgery for the ASR prosthesis.6 Furthermore, a single-center retrospective review of 70 consecutive MoM THAs with ultra-large diameter femoral head and monoblock acetabular components showed that 17.1% required revision within 3 years for loosening, pain, and squeaking.1 Overall, 28.6% of patients reported implant dysfunction. In this study, we observed a similar rate of failure at 3 years (15.6%) for pain (11) and infection (1). The revision surgery successfully relieved all of these symptoms. One patient presented with heterotopic ossification and anterior hip pain after the original revision and required additional surgery with prosthesis retention. No patient in this series required repeat component revisions at a mean of 2.9 years after surgery. In all but 1 case, primary acetabular components were used in the revision, and in all cases except that with infection, the femoral component was retained. Replacement shells were 2 to 4 mm larger in diameter than the original ASR component.

Recently, concerns have arisen regarding the long-term effects of serum cobalt and chromium metal ion levels. Studies have shown increased serum metal ion levels,15 groin pain,16 pseudotumor formation,17 and metallosis18 after the implantation of MoM bearings. In a case study by Mao and colleagues,19 1 patient reported headaches, anorexia, continuous metallic taste in her mouth, and weight loss. A cerebrospinal fluid analysis revealed cobalt and chromium levels at 9 and 13 nmol/L, respectively, indicating that these metal ions can cross the blood-brain barrier. Another patient reported painful muscle fatigue, night cramps, fainting spells, cognitive decline, and an inability to climb stairs. His serum cobalt level reached 258 nmol/L (reference range, 0-20 nmol/L), and his chromium level totaled 88 nmol/L (reference range, 0-100 nmol/L). At 8-week follow-up after revision surgery, the symptoms of the patient had resolved, with serum cobalt levels dropping to 42 nmol/L.19 None of the patients in this study presented with any signs or symptoms of metal toxicity. The upper limits of blood cobalt and chromium levels in our study population reached 18.9 and 15.9 µg/L for the revised group and 16.8 and 5.4 µg/L for the non-revised group, respectively. However, we noted a similar drop in post-revision blood cobalt (91% decrease) and chromium (78% decrease) levels.
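The case-report values above are given in nmol/L, whereas the levels in this series are given in µg/L. The sketch below shows one way to put them on a common scale, using the standard atomic masses of cobalt and chromium; the function name is hypothetical. The case-report values are serum measurements and the values in this series are whole-blood measurements, so any comparison after conversion is approximate at best.

```python
# Standard atomic masses in g/mol.
ATOMIC_MASS = {"cobalt": 58.933, "chromium": 51.996}


def nmol_per_l_to_ug_per_l(value_nmol_l, metal):
    """Convert a molar concentration (nmol/L) to a mass concentration (ug/L)."""
    # nmol/L multiplied by g/mol gives ng/L; dividing by 1000 gives ug/L.
    return value_nmol_l * ATOMIC_MASS[metal] / 1000


# Values quoted from the case report discussed above.
for label, value, metal in [
    ("serum cobalt before revision", 258, "cobalt"),
    ("serum cobalt 8 weeks after revision", 42, "cobalt"),
    ("serum chromium before revision", 88, "chromium"),
]:
    print(f"{label}: {nmol_per_l_to_ug_per_l(value, metal):.1f} ug/L")
```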

In summary, our data showed a high revision rate of the DePuy Synthes ASR™ XL Acetabular hip system. Our findings are consistent with internationally published data. In the absence of reliable predictors of early failure, continued close clinical surveillance and laboratory monitoring of these patients are warranted.

CONCLUSION

This study demonstrates the high failure rate of the DePuy Synthes ASR™ XL Acetabular hip system used in THA at a minimum of 5 years of follow-up. No variable that was predictive of failure could be identified in this series. Close clinical surveillance of these patients is therefore required. Metal levels dropped quickly after revision, and the revision surgery can generally be performed with slightly larger primary components. Symptomatic patients with ASR hip replacements, regardless of blood metal-ion levels, were candidates for the revision surgery. Not all failed hips exhibited substantially elevated metal levels. Asymptomatic patients with high blood metal-ion levels should be closely followed up, and revision surgery should be strongly considered, consistent with recently published guidelines.20

- Bernthal NM, Celestre PC, Stavrakis AI, Ludington JC, Oakes DA. Disappointing short-term results with the DePuy ASR XL metal-on-metal total hip arthroplasty. J Arthroplasty. 2012;27(4):539. doi:10.1016/j.arth.2011.08.022.

- de Steiger RN, Hang JR, Miller LN, Graves SE, Davidson DC. Five-year results of the ASR XL acetabular system and the ASR hip resurfacing system: An analysis from the Australian Orthopaedic Association National Joint Replacement Registry. J Bone Joint Surg Am. 2011;93(24):2287. doi:10.2106/JBJS.J.01727.

- Langton DJ, Jameson SS, Joyce TJ, Hallab NJ, Natu S, Nargol AV. Early failure of metal-on-metal bearings in hip resurfacing and large-diameter total hip replacement: a consequence of excess wear. J Bone Joint Surg Br. 2010;92(1):38-46. doi:10.1302/0301-620X.92B1.22770.

- Siebel T, Maubach S, Morlock MM. Lessons learned from early clinical experience and results of 300 ASR hip resurfacing implantations. Proc Inst Mech Eng H. 2006;220(2):345-353. doi:10.1243/095441105X69079.

- Jameson SS, Langton DJ, Nargol AV. Articular surface replacement of the hip: a prospective single-surgeon series. J Bone Joint Surg Br. 2010;92(1):28-37. doi:10.1302/0301-620X.92B1.22769.

- Australian Orthopaedic Association National Joint Replacement Registry annual report 2010. Australian Orthopaedic Association Web site. https://aoanjrr.sahmri.com/annual-reports-2010. Accessed June 19, 2018.

- Wolford ML, Palso K, Bercovitz A. Hospitalization for total hip replacement among inpatients aged 45 and over: United States, 2000-2010. Centers for Disease Control and Prevention Web site. http://www.cdc.gov/nchs/data/databriefs/db186.pdf. Accessed July 13, 2015.

- Hodgkinson J, Skinner J, Kay P. Large diameter metal on metal bearing total hip replacements. British Hip Society Web site. https://www.britishhipsociety.com/uploaded/BHS_MOM_THR.pdf. Accessed August 6, 2015.

- Hart AJ, Ilo K, Underwood R, et al. The relationship between the angle of version and rate of wear of retrieved metal-on-metal resurfacings: a prospective, CT-based study. J Bone Joint Surg Br. 2011;93(3):315-320. doi:10.1302/0301-620X.93B3.25545.

- Langton DJ, Joyce TJ, Jameson SS, et al. Adverse reaction to metal debris following hip resurfacing: the influence of component type, orientation and volumetric wear. J Bone Joint Surg Br. 2011;93(2):164-171. doi:10.1302/0301-620X.93B2.25099.

- Steele GD, Fehring TK, Odum SM, Dennos AC, Nadaud MC. Early failure of articular surface replacement XL total hip arthroplasty. J Arthroplasty. 2011;26(6):14-18. doi:10.1016/j.arth.2011.03.027.

- De Haan R, Campbell PA, Su EP, De Smet KA. Revision of metal-on-metal resurfacing arthroplasty of the hip: the influence of malpositioning of the components. J Bone Joint Surg Br. 2008;90(9):1158-1163. doi:10.1302/0301-620X.90B9.19891.

- Griffin WL, Nanson CJ, Springer BD, Davies MA, Fehring TK. Reduced articular surface of one-piece cups: a cause of runaway wear and early failure. Clin Orthop Relat Res. 2010;468(9):2328-2332. doi:10.1007/s11999-010-1383-8.

- Grammatopoulos G, Pandit H, Glyn-Jones S, et al. Optimal acetabular orientation for hip resurfacing. J Bone Joint Surg Br. 2010;92(8):1072-1078. doi:10.1302/0301-620X.92B8.24194.

- MacDonald SJ, McCalden RW, Chess DG, et al. Metal-on-metal versus polyethylene in hip arthroplasty: a randomized clinical trial. Clin Orthop Relat Res. 2003;(406):282-296.

- Bin Nasser A, Beaule PE, O'Neill M, Kim PR, Fazekas A. Incidence of groin pain after metal-on-metal hip resurfacing. Clin Orthop Relat Res. 2010;468(2):392-399. doi:10.1007/s11999-009-1133-y.

- Mahendra G, Pandit H, Kliskey K, Murray D, Gill HS, Athanasou N. Necrotic and inflammatory changes in metal-on-metal resurfacing hip arthroplasties. Acta Orthop. 2009;80(6):653-659. doi:10.3109/17453670903473016.

- Neumann DRP, Thaler C, Hitzl W, Huber M, Hofstädter T, Dorn U. Long term results of a contemporary metal-on-metal total hip arthroplasty. J Arthroplasty. 2010;25(5):700-708. doi:10.1016/j.arth.2009.05.018.

- Mao X, Wong AA, Crawford RW. Cobalt toxicity--an emerging clinical problem in patients with metal-on-metal hip prostheses? Med J Aust. 2011;194(12):649-651.

- Information statement: current concerns with metal-on-metal hip arthroplasty. American Academy of Orthopaedic Surgeons Web site. https://aaos.org/uploadedFiles/PreProduction/About/Opinion_Statements/advistmt/1035%20Current%20Concerns%20with%20Metal-on-Metal%20Hip%20Arthroplasty.pdf. Accessed June 19, 2018.


In summary, our data showed a high revision rate of the DePuy Synthes ASR™ XL Acetabular hip system. Our findings are consistent with internationally published data. In the absence of reliable predictors of early failure, continued close clinical surveillance and laboratory monitoring of these patients are warranted.

CONCLUSION

This study demonstrates the high failure rate of the DePuy Synthes ASR™ XL Acetabular hip system used in THA at a minimum of 5 years of follow-up. No variable that was predictive of failure could be identified in this series. Close clinical surveillance of these patients is therefore required. Metal levels dropped quickly after revision, and the revision surgery can generally be performed with slightly larger primary components. Symptomatic patients with ASR hip replacements, regardless of blood metal-ion levels, were candidates for the revision surgery. Not all failed hips exhibited substantially elevated metal levels. Asymptomatic patients with high blood metal-ion levels should be closely followed-up and revision surgery should be strongly considered, consistent with recently published guidelines.20

ABSTRACT

The articular surface replacement (ASR) monoblock metal-on-metal acetabular component was recalled because of a higher-than-expected early failure rate. We evaluated the survivorship of the device and variables that may be predictive of failure at a minimum of 5-year follow-up. A single-center, single-surgeon retrospective review was conducted in patients who received the DePuy Synthes ASR™ XL Acetabular hip system from December 2005 to November 2009. Mean values and percentages were calculated and compared using the Fisher’s exact test, simple logistic regression, and Student’s t-test. The significance level was P ≤ .05. This study included 29 patients (24 males, 5 females) with 32 ASR™ XL acetabular hip systems. Mean age and body mass index (BMI) were 55.2 years and 28.9 kg/m², respectively. Mean postoperative follow-up was 6.2 years. A total of 2 patients (6.9%) died of unrelated causes and 1 patient (3.4%) was lost to follow-up, leaving 26 patients with 28 hip replacements, all of whom were available for follow-up. The 5-year revision rate was 34.4% (10 patients with 11 hip replacements). Mean time to revision was 3.1 years. Age (P = .76), gender (P = .49), BMI (P = .29), acetabular component abduction angle (P = .12), and acetabulum size (P = .59) were not associated with an increased rate of hip failure. Blood cobalt (7.6 vs 6.8 µg/L, P = .58) and chromium (5.0 vs 2.2 µg/L, P = .31) levels were not significantly higher in the revised group than in the unrevised group. In the revised group, a 91% decrease in cobalt and a 78% decrease in chromium levels were observed at a mean of 6 months following the revision. This study demonstrates a high rate of failure of ASR acetabular components used in total hip arthroplasty at a minimum of 5 years of follow-up. No variable that was predictive of failure could be identified in this series. Close clinical surveillance of these patients is required.

Metal-on-metal (MoM) articulations have been widely explored as an alternative to polyethylene bearings in total hip arthroplasty (THA), with proposed benefits including improved range of motion, lower dislocation rates, and enhanced durability.1 Comprising cobalt and chromium, these MoM bearings gained widespread popularity in the United States, particularly in younger and more active patients looking for longer lasting devices.

The articular surface replacement (ASR) acetabular system (DePuy Synthes) was approved for sale by the US Food and Drug Administration in 2003 and implanted in an estimated 93,000 cases.2 Since then, however, the early failure rate of the prosthesis has been well documented,3-5 leading to a formal global product recall in August 2010. The Australian Orthopaedic Association National Joint Replacement Registry (AOANJRR) was among the first to report a 6.4% rate of failure of the device at 3 years when inserted with a Corail stem.6 The acceptable upper limit for hip prosthesis failure is considered to be 1% per year, and the majority of implants perform well below this value. A 10.9% failure rate at 5 years was documented when the prosthesis was inserted for resurfacing. The National Joint Registry of England and Wales confirmed these findings and observed a 13% and 12% rate of failure at 5 years for the acetabular and resurfacing systems, respectively.2 Given the notable failure of the ASR system, this study reports our single-center 5-year survivorship experience and evaluates any variable that might be predictive of early failure to aid in patient counseling.

METHODS

A single-center, single-surgeon, retrospective review of a consecutive series of patients was performed from December 2005 to November 2009. This study included all patients who underwent a primary THA with a DePuy Synthes ASR™ XL Acetabular hip system. No patients were excluded. Institutional Review Board approval was obtained. Patient demographics, comprising age, gender, and body mass index (BMI), were recorded. The primary endpoint of this study was the 5-year survivorship rate. Secondary endpoints included duration to revision surgery, blood cobalt and chromium levels, time interval of blood ion tests, acetabulum size, acetabular component abduction angle, and duration to follow-up.

Candidates for the ASR™ XL Acetabular hip system included young patients and/or those considered to be physically active. In a select few, ASR devices were implanted upon patient request.

All patients underwent primary total hip replacement with a DePuy Synthes ASR™ XL uncemented acetabular component and an uncemented femoral stem (DePuy Synthes, Summit, or Tri-Lock) inserted via a standard posterior approach (Figure 1). Acetabulum sizes ranged from 52 mm to 68 mm in diameter.

All patients were followed up yearly in the outpatient setting. Routine (yearly) metal-ion level sampling (whole blood) was started in 2010 for all patients. Laboratory tests were conducted at a single laboratory (Lab Corp.). Cup abduction (inclination) angles were measured by the operating surgeon using digital radiology software (GE Centricity systems).

The Student’s t-test was used to compare mean values (such as age, BMI, and metal ion levels) between the failure and no-failure groups. The 2-sided Fisher’s exact test analyzed differences in gender. Simple logistic regression analyzed variables associated with the failure group. Significance was P ≤ .05.

RESULTS

A total of 29 patients (24 males, 5 females) with 32 ASR hip replacements were included in this study. Indications for surgery comprised osteoarthritis (28 hips, 87.5%) and avascular necrosis of the hip (4 hips, 12.5%). Mean age and BMI were 55.2 years and 28.9 kg/m2, respectively. A total of 2 patients (6.9%) died of an unrelated cause (1 myocardial infarct, 1 suicide), and 1 patient was lost to follow-up (3.4%), leaving 26 patients with 28 hip replacements, all of whom finished a 5-year minimum follow-up.

No implant failures were noted in the first year. The 5-year revision rate reached 34.4% (11 hip replacements in 10 patients). Mean time to revision for this subgroup was 3.1 years. Overall, implant failure was observed in 37.5% of hips (12 hip replacements in 11 patients) at a mean postoperative follow-up of 6.2 years (Figure 2). Indications for implant revision were pain in 11 (91.7%) cases and infection in 1 (8.3%).
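The reported percentages follow from per-hip arithmetic over the 32 implanted hips. The short Python sketch below reproduces them; the helper `rate` and the constant names are introduced here for illustration only and are not part of the study's analysis.

```python
def rate(events: int, total: int) -> float:
    """Return events/total as a percentage rounded to one decimal place."""
    return round(100 * events / total, 1)

TOTAL_HIPS = 32           # hips implanted in the series
REVISED_BY_5_YEARS = 11   # hips revised within 5 years
REVISED_OVERALL = 12      # hips revised by final follow-up

five_year_rate = rate(REVISED_BY_5_YEARS, TOTAL_HIPS)
overall_rate = rate(REVISED_OVERALL, TOTAL_HIPS)

print(f"5-year revision rate: {five_year_rate}%")
print(f"Overall failure rate: {overall_rate}%")
```

The denominator that reproduces the reported figures is the full set of 32 hips, not the 28 hips with complete follow-up.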

Of the 11 revisions performed for pain, 9 were carried out by the original surgeon (8 with primary acetabular components and 1 with a revision shell). Figure 3 shows a bilateral revision performed with primary acetabular components and retained DePuy Synthes Pinnacle femoral stems. In all these cases except 1, the ASR component was grossly loose. One case presented with pseudotumor and impingement between the femoral prosthetic neck and acetabular component after migration of a loose component. After revision, this patient returned with substantial anterior hip pain and heterotopic ossification; conservative treatment failed, and another surgery was required, with prosthesis retention, removal of heterotopic ossification, and iliopsoas lengthening. The surgery successfully relieved the symptoms. No other patients required additional surgery after their revision. The revision shells were 2 to 4 mm larger in diameter than the original ASR components. No patient required repeat component revision at a mean of 2.9 years after the revision surgery.

The patient revised for infection developed a hematogenous streptococcal infection after a dental procedure performed without prophylactic antibiotics. The patient was initially lost to follow-up after the primary surgery and reported no antecedent pain prior to the revision. At the time of débridement, a substantial metal-stained fluid collection was identified in the hip, with no component loosening. After débridement, the patient developed persistent metal-stained wound drainage, which was ultimately treated successfully with a 2-stage exchange procedure.

Age (P = .76), gender (P = .49), BMI (P = .29), acetabular component abduction angle (P = .12), and acetabulum size (P = .59) were not associated with an increased rate of hip failure (Table). Blood cobalt (7.6 vs 6.8 µg/L, P = .58) and chromium (5.0 vs 2.2 µg/L, P = .31) levels were not significantly higher in the revised group than in the unrevised group. The highest blood cobalt and chromium levels were 18.9 and 15.9 µg/L in the revised group and 16.8 and 5.4 µg/L in the non-revised group, respectively. In the revised group, a 91% decrease in cobalt and a 78% decrease in chromium levels were observed at a mean of 6 months after the revision (Figure 4).

Table. Variables Not Associated with Early ASR Failure

| Variable | No Failure (n = 20) | Failure (n = 12) | P value |
| --- | --- | --- | --- |
| Age (years) | 55.4 ± 6.4 | 54.7 ± 6.3 | .76 |
| BMI (kg/m²) | 29.7 ± 6.7 | 27.4 ± 4.0 | .29 |
| Gender | | | .49 |
| Female | 3 (15%) | 3 (25%) | |
| Male | 17 (85%) | 9 (75%) | |
| Acetabulum size (mm) | 59.1 ± 3.9 | 58.3 ± 3.8 | .59 |
| Abduction angle (degrees) | 44.9 ± 4.5 | 42.3 ± 3.8 | .12 |
| Serum levels (µg/L) | | | |
| Cobalt | 6.8 ± 6.0 | 7.6 ± 4.7 | .58 |
| Chromium | 2.2 ± 1.7 | 5.0 ± 5.0 | .31 |
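As a rough plausibility check on the continuous variables in the Table, the pooled-variance (Student's) t statistic can be recomputed from the summary values alone. The sketch below assumes that the ± values are standard deviations and that each comparison used all 20 and 12 hips; neither assumption is stated in the source, and the function name `pooled_t` is hypothetical. The metal-ion rows are omitted because the number of hips with blood tests in each group is not reported.

```python
import math

def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Equal-variance t statistic and degrees of freedom from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df

# Two-sided 5% critical value of the t distribution with 30 degrees of freedom.
T_CRIT_DF30 = 2.042

# (mean, SD) for the no-failure group, then (mean, SD) for the failure group.
rows = {
    "Age (years)": (55.4, 6.4, 54.7, 6.3),
    "BMI (kg/m2)": (29.7, 6.7, 27.4, 4.0),
    "Acetabulum size (mm)": (59.1, 3.9, 58.3, 3.8),
    "Abduction angle (degrees)": (44.9, 4.5, 42.3, 3.8),
}

results = {}
for name, (m_nf, sd_nf, m_f, sd_f) in rows.items():
    t, df = pooled_t(m_nf, sd_nf, 20, m_f, sd_f, 12)
    results[name] = (t, df)
    print(f"{name}: t = {t:.2f}, df = {df}, exceeds critical value: {abs(t) > T_CRIT_DF30}")
```

Under these assumptions none of the four t statistics exceeds the critical value, which agrees with the non-significant P values in the Table.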

DISCUSSION

According to the Centers for Disease Control and Prevention, 310,800 total hip replacements were performed among inpatients aged 45 years and older in the US in 2010.7 Specifically, in the 55- to 64-year-old age group, the number of procedures performed tripled from 2000 through 2010. As younger and more active patients opt for hip replacements, the need for prostheses with enhanced durability is growing.

Despite the early proposed advantages of large head MoM bearings, our retrospective study of the DePuy Synthes ASR™ XL Acetabular hip system yielded 15.6% and 34.4% failure rates at 3 and 5 years, respectively. These higher-than-expected rates of failure are consistent with published data. The British Hip Society reported a 21% to 35% revision rate at 4 years and 49% at 6 years for the ASR XL prosthesis.8 In comparison, other MoM prostheses report, on average, a 12% to 15% rate of failure at 5 years.

Considerable controversy surrounds the causes of adverse wear failure in MoM bearings.9,10 The non-modular design of the ASR prostheses is frequently implicated as a cause of early failure. The lack of a central hole in the 1-piece component compromises the tactile feel of insertion, thereby reducing the surgeon’s ability to assess complete seating.11 Incomplete seating may increase the abduction angle at the time of insertion. Screw fixation of the non-modular device is not possible. The ASR XL device (148° to 160°) is less than a hemisphere (180°) in size and hence features a diminished functional articular surface, further compromising implant fixation.11 The functional articular surface is defined as the optimal surface area (10 mm) needed for a MoM implant.12 Griffin and colleagues13 reported that a 48-mm ASR XL component implanted at 45° of abduction functions similarly to an implant at 59° of abduction, leading to diminished lubrication, metallosis, and edge loading. The version of the acetabular component may similarly and adversely affect implant wear characteristics. Furthermore, the variable thickness of the implant, which is thicker at the dome and thinner at the rim, may further promote edge loading by shifting the center of rotation of the femoral head out from the center of the acetabular prosthesis.11 Studies have also shown that increased wear of the MoM articulation is associated with an acetabular component inclination angle in excess of 55°,10,14 and with a failure of fixation at the time of implantation.15 This study, however, found no correlation between the abduction angle and the risk of early implant failure for the ASR acetabular component. Nor was any correlation detected between acetabulum size and revision surgery.

The AOANJRR reported loosening (44%), infection (20%), metal sensitivity (12%), fracture (9%), and dislocation of prosthesis (7%) as the indications for revision surgery for the ASR prosthesis.6 Furthermore, a single-center retrospective review of 70 consecutive MoM THAs with ultra-large diameter femoral head and monoblock acetabular components showed that 17.1% required revision within 3 years for loosening, pain, and squeaking.1 Overall, 28.6% of patients reported implant dysfunction. In this study, we observed a similar rate of failure at 3 years (15.6%); across the whole series, the indications for revision were pain (11 hips) and infection (1 hip). The revision surgery successfully relieved all of these symptoms. One patient presented with heterotopic ossification and anterior hip pain after the original revision and required additional surgery with prosthesis retention. No patient in this series required repeat component revision at a mean of 2.9 years after the revision surgery. In all but 1 case, primary acetabular components were used in the revision, and in all cases except the one with infection, the femoral component was retained. Replacement shells were 2 to 4 mm larger in diameter than the original ASR component.

Recently, concerns have arisen regarding the long-term effects of elevated serum cobalt and chromium ion levels. Studies have shown increased serum metal ion levels,15 groin pain,16 pseudotumor formation,17 and metallosis18 after the implantation of MoM bearings. In a case study by Mao and colleagues,19 1 patient reported headaches, anorexia, continuous metallic taste in her mouth, and weight loss. A cerebrospinal fluid analysis revealed cobalt and chromium levels of 9 and 13 nmol/L, respectively, indicating that these metal ions can cross the blood-brain barrier. Another patient reported painful muscle fatigue, night cramps, fainting spells, cognitive decline, and an inability to climb stairs. His serum cobalt level was 258 nmol/L (reference range, 0-20 nmol/L), and his chromium level was 88 nmol/L (reference range, 0-100 nmol/L). At 8-week follow-up after revision surgery, the patient's symptoms had resolved, and his serum cobalt level had dropped to 42 nmol/L.19 None of the patients in this study presented with any signs or symptoms of metal toxicity. The highest blood cobalt and chromium levels in our study population were 18.9 and 15.9 µg/L in the revised group and 16.8 and 5.4 µg/L in the non-revised group, respectively. As in that case report, we noted a marked drop in post-revision blood cobalt (91% decrease) and chromium (78% decrease) levels.
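The case report cited above gives metal ion levels in nmol/L, whereas this study reports µg/L. A minimal Python sketch of the conversion follows, using standard molar masses for cobalt (58.933 g/mol) and chromium (51.996 g/mol). The function and constant names are illustrative. The case-report values were measured in serum and the present study used whole blood, so the converted figures give only an order-of-magnitude comparison.

```python
# Standard molar masses in g/mol.
MOLAR_MASS_G_PER_MOL = {"cobalt": 58.933, "chromium": 51.996}

def nmol_per_l_to_ug_per_l(value_nmol_per_l: float, metal: str) -> float:
    """Convert a molar concentration (nmol/L) to a mass concentration (µg/L)."""
    # 1 nmol of a substance weighs (molar mass) ng, and 1000 ng = 1 µg.
    return value_nmol_per_l * MOLAR_MASS_G_PER_MOL[metal] / 1000

cobalt_before = nmol_per_l_to_ug_per_l(258, "cobalt")    # pre-revision serum cobalt
cobalt_after = nmol_per_l_to_ug_per_l(42, "cobalt")      # 8 weeks after revision
chromium_before = nmol_per_l_to_ug_per_l(88, "chromium")

print(f"Cobalt: {cobalt_before:.1f} -> {cobalt_after:.1f} µg/L")
print(f"Chromium: {chromium_before:.1f} µg/L")
```

Converted this way, the pre-revision cobalt level in the case report is roughly 15 µg/L.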

In summary, our data showed a high revision rate of the DePuy Synthes ASR™ XL Acetabular hip system. Our findings are consistent with internationally published data. In the absence of reliable predictors of early failure, continued close clinical surveillance and laboratory monitoring of these patients are warranted.

CONCLUSION

This study demonstrates the high failure rate of the DePuy Synthes ASR™ XL Acetabular hip system used in THA at a minimum of 5 years of follow-up. No variable that was predictive of failure could be identified in this series. Close clinical surveillance of these patients is therefore required. Metal levels dropped quickly after revision, and the revision surgery can generally be performed with slightly larger primary components. Symptomatic patients with ASR hip replacements, regardless of blood metal-ion levels, were candidates for revision surgery. Not all failed hips exhibited substantially elevated metal levels. Asymptomatic patients with high blood metal-ion levels should be closely followed up, and revision surgery should be strongly considered, consistent with recently published guidelines.20

REFERENCES

1. Bernthal NM, Celestre PC, Stavrakis AI, Ludington JC, Oakes DA. Disappointing short-term results with the DePuy ASR XL metal-on-metal total hip arthroplasty. J Arthroplasty. 2012;27(4):539. doi:10.1016/j.arth.2011.08.022.

2. de Steiger RN, Hang JR, Miller LN, Graves SE, Davidson DC. Five-year results of the ASR XL acetabular system and the ASR hip resurfacing system: An analysis from the Australian Orthopaedic Association National Joint Replacement Registry. J Bone Joint Surg Am. 2011;93(24):2287. doi:10.2106/JBJS.J.01727.

3. Langton DJ, Jameson SS, Joyce TJ, Hallab NJ, Natu S, Nargol AV. Early failure of metal-on-metal bearings in hip resurfacing and large-diameter total hip replacement: a consequence of excess wear. J Bone Joint Surg Br. 2010;92(1):38-46. doi:10.1302/0301-620X.92B1.22770.

4. Siebel T, Maubach S, Morlock MM. Lessons learned from early clinical experience and results of 300 ASR hip resurfacing implantations. Proc Inst Mech Eng H. 2006;220(2):345-353. doi:10.1243/095441105X69079.

5. Jameson SS, Langton DJ, Nargol AV. Articular surface replacement of the hip: a prospective single-surgeon series. J Bone Joint Surg Br. 2010;92(1):28-37. doi:10.1302/0301-620X.92B1.22769.

6. Australian Orthopaedic Association National Joint Replacement Registry annual report 2010. Australian Orthopaedic Association Web site. https://aoanjrr.sahmri.com/annual-reports-2010. Accessed June 19, 2018.

7. Wolford ML, Palso K, Bercovitz A. Hospitalization for total hip replacement among inpatients aged 45 and over: United States, 2000-2010. Centers for Disease Control and Prevention Web site. http://www.cdc.gov/nchs/data/databriefs/db186.pdf. Accessed July 13, 2015.

8. Hodgkinson J, Skinner J, Kay P. Large diameter metal on metal bearing total hip replacements. British Hip Society Web site. https://www.britishhipsociety.com/uploaded/BHS_MOM_THR.pdf. Accessed August 6, 2015.

9. Hart AJ, Ilo K, Underwood R, et al. The relationship between the angle of version and rate of wear of retrieved metal-on-metal resurfacings: a prospective, CT-based study. J Bone Joint Surg Br. 2011;93(3):315-320. doi:10.1302/0301-620X.93B3.25545.

10. Langton DJ, Joyce TJ, Jameson SS, et al. Adverse reaction to metal debris following hip resurfacing: the influence of component type, orientation and volumetric wear. J Bone Joint Surg Br. 2011;93(2):164-171. doi:10.1302/0301-620X.93B2.25099.

11. Steele GD, Fehring TK, Odum SM, Dennos AC, Nadaud MC. Early failure of articular surface replacement XL total hip arthroplasty. J Arthroplasty. 2011;26(6):14-18. doi:10.1016/j.arth.2011.03.027.

12. De Haan R, Campbell PA, Su EP, De Smet KA. Revision of metal-on-metal resurfacing arthroplasty of the hip: the influence of malpositioning of the components. J Bone Joint Surg Br. 2008;90(9):1158-1163. doi:10.1302/0301-620X.90B9.19891.

13. Griffin WL, Nanson CJ, Springer BD, Davies MA, Fehring TK. Reduced articular surface of one-piece cups: a cause of runaway wear and early failure. Clin Orthop Relat Res. 2010;468(9):2328-2332. doi:10.1007/s11999-010-1383-8.

14. Grammatopoulos G, Pandit H, Glyn-Jones S, et al. Optimal acetabular orientation for hip resurfacing. J Bone Joint Surg Br. 2010;92(8):1072-1078. doi:10.1302/0301-620X.92B8.24194.

15. MacDonald SJ, McCalden RW, Chess DG, et al. Metal-on-metal versus polyethylene in hip arthroplasty: a randomized clinical trial. Clin Orthop Relat Res. 2003;(406):282-296.

16. Bin Nasser A, Beaule PE, O'Neill M, Kim PR, Fazekas A. Incidence of groin pain after metal-on-metal hip resurfacing. Clin Orthop Relat Res. 2010;468(2):392-399. doi:10.1007/s11999-009-1133-y.

17. Mahendra G, Pandit H, Kliskey K, Murray D, Gill HS, Athanasou N. Necrotic and inflammatory changes in metal-on-metal resurfacing hip arthroplasties. Acta Orthop. 2009;80(6):653-659. doi:10.3109/17453670903473016.

18. Neumann DRP, Thaler C, Hitzl W, Huber M, Hofstädter T, Dorn U. Long term results of a contemporary metal-on-metal total hip arthroplasty. J Arthroplasty. 2010;25(5):700-708. doi:10.1016/j.arth.2009.05.018.

19. Mao X, Wong AA, Crawford RW. Cobalt toxicity--an emerging clinical problem in patients with metal-on-metal hip prostheses? Med J Aust. 2011;194(12):649-651.

20. Information statement: current concerns with metal-on-metal hip arthroplasty. American Academy of Orthopaedic Surgeons Web site. https://aaos.org/uploadedFiles/PreProduction/About/Opinion_Statements/advistmt/1035%20Current%20Concerns%20with%20Metal-on-Metal%20Hip%20Arthroplasty.pdf. Accessed June 19, 2018.

TAKE-HOME POINTS

- The DePuy Synthes ASR™ XL Acetabular hip system used in THA showed a high failure rate, reaching 34.4% at 5 years.

- Mean time to revision was 3.1 years with pain being the most common indication for revision surgery.

- Age, gender, acetabular component abduction angle, acetabular size, and serum cobalt or chromium levels were not associated with increased rate of failure.

- Serum cobalt and chromium levels decreased significantly within 6 months of revision surgery.

- Close clinical surveillance and laboratory monitoring of these patients are required.

Pembrolizumab does not surpass paclitaxel for gastric cancer

BARCELONA – The immune checkpoint inhibitor pembrolizumab (Keytruda) did not significantly improve overall survival of advanced/metastatic gastric or gastroesophageal junction cancer compared with paclitaxel, the results of the KEYNOTE-061 study show.

Among 395 patients with gastric or gastroesophageal junction (GEJ) cancer who had expression of the programmed death ligand 1 (PD-L1) on 1% or more of their tumor cells, lymphocytes, and macrophages, median overall survival (OS) for patients treated with pembrolizumab was 9.1 months, compared with 8.3 months. This translated into a hazard ratio (HR) for death in the pembrolizumab arm of 0.82, but with the 95% confidence interval crossing 1.00, and a P value (.04205) that did not meet the prespecified threshold for significance (P equal to or less than .0135).

Despite the failure of the trial to reach its primary endpoint, “these results may support further research to identify patients likely to benefit from pembrolizumab monotherapy and ongoing development of pembrolizumab-based combination therapy for gastric cancer,” he said at the European Society of Medical Oncology World Congress on Gastrointestinal Cancer.

Pembrolizumab is approved by the Food and Drug Administration for the treatment of patients with recurrent locally advanced or metastatic gastric or GEJ adenocarcinoma with tumors confirmed to carry PD-L1 for whom two or more prior lines of therapy had failed.

The approval was based on results of the nonrandomized, open label KEYNOTE-059 trial, which enrolled 259 patients with gastric or GEJ adenocarcinoma that progressed on at least two prior systemic treatments for advanced disease. Of the enrollees, 143 patients had tumors with a PD-L1 Combined Positive Score (CPS) of 1 or greater. The primary trial outcome, the objective response rate for these 143 patients, was 13.3% (95% confidence interval; 8.2-20), with a complete response rate of 1.4% and a partial response rate of 11.9%. The duration of response ranged from at least 2.8 months to at least 19.4 months.

The drug is also approved for unresectable of metastatic gastric tumors with high levels of microsatellite instability that progressed on prior therapy.

The KEYNOTE-061 study was designed to test the proposition that pembrolizumab could improve on paclitaxel for treatment of patients with adenocarcinoma of the stomach or GEJ that was metastatic or locally advanced and unresectable, and for which first-line therapy with a platinum agent and fluoropyrimidine had failed.

A total of 592 patients from Europe, Israel, North America, Asia, and Australia were enrolled and randomly assigned to receive either pembrolizumab 200 mg every 3 weeks for 35 cycles, or paclitaxel 80 mg/m2 on days 1, 8, and 15 of each 4-week cycle. Each treatment was continued until the maximum number of cycles (for pembrolizumab) or until confirmed disease progression, intolerable toxicity, patient withdrawal, or investigator’s decision.

As noted, OS for patients with a CPS of 1 or greater, the primary endpoint, did not differ significantly between treatment arms, but there was a numerical trend in favor of pembrolizumab.

For example, the 12-month OS rates were 39.8% in the pembrolizumab group vs. 27.1% in the paclitaxel group, and the respective 18-month OS rates were 25.7% and 14.8%.

In an analysis of protocol-specified subgroups, there were general trends slightly favoring the checkpoint inhibitor over the taxane, but the only significant difference was among patients with GEJ cancers as the primary tumor location (HR, 0.61, 95% confidence interval, 0.41-0.90).

Pembrolizumab offered a small but significant survival advantage among patients with Eastern Cooperative Oncology Group performance status of 0, with a median OS of 12.3 months vs. 9.3 months (HR, 0.69, 95% CI, 0.49-0.97).

Analyses of progression-free survival (PFS) and OS by CPS group (less than 1, 1-10, or 10 and higher) showed no significant differences, however.

Treatment-related adverse events were more frequent with paclitaxel (84.1% vs. 52.7% of patients on pembrolizumab), but pembrolizumab was associated with more treatment-related deaths (three vs. one). Two of the deaths in the pembrolizumab arm were immune mediated. Grade 3 or greater adverse events occurred in 14.3% of patients on pembrolizumab vs. 34.8% of those on paclitaxel.

In the question-and-answer session following Dr. Shitara’s presentation, session comoderator David Cunningham, MD, of Royal Marsden NHS Foundation Trust in Sutton, England, asked why the KEYNOTE-061 investigators did not pit pembrolizumab against the combination of paclitaxel and ramucirumab (Cyramza) “since that’s what many people would use in this situation.”

Dr. Shitara replied that ramucirumab was not available or accepted as an option in many countries when the trial was first planned in 2014. He added that paclitaxel and ramucirumab should be the control arm for clinical trials going forward.

SOURCE: Shitara K. et al. ESMO World Congress on Gastrointestinal Cancer 2018. Abstract LBA-005.

BARCELONA – The immune checkpoint inhibitor pembrolizumab (Keytruda) did not significantly improve overall survival in patients with advanced or metastatic gastric or gastroesophageal junction cancer, compared with paclitaxel, the results of the KEYNOTE-061 study show.

Among 395 patients with gastric or gastroesophageal junction (GEJ) cancer who had expression of the programmed death ligand 1 (PD-L1) on 1% or more of their tumor cells, lymphocytes, and macrophages, median overall survival (OS) was 9.1 months for patients treated with pembrolizumab, compared with 8.3 months for those treated with paclitaxel. This translated into a hazard ratio (HR) for death in the pembrolizumab arm of 0.82, but with the 95% confidence interval crossing 1.00, and a P value (.04205) that did not meet the prespecified threshold for significance (P equal to or less than .0135).

REPORTING FROM ESMO GI 2018

Key clinical point: KEYNOTE-061 did not meet its primary endpoint of a significant survival advantage with pembrolizumab over paclitaxel.

Major finding: There was no significant difference in overall survival between patients with gastric or gastroesophageal junction cancers treated with pembrolizumab or paclitaxel.

Study details: Phase 3 randomized open-label trial in 592 patients with advanced or metastatic and unresectable tumors of the stomach or GEJ.

Disclosures: The trial was supported by Merck. Dr. Shitara has disclosed honoraria from AbbVie, Novartis, and Yakult; consulting or advising with Astellas, BMS, Lilly, Ono Pharmaceutical, Pfizer, and Takeda; and institutional research funding from other companies. Dr. Cunningham has disclosed institutional research funding from Merck Serono and others.

Source: Shitara K et al. ESMO World Congress on Gastrointestinal Cancer 2018. Abstract LBA-005.

Ramucirumab improves HCC survival after sorafenib failure

BARCELONA – For patients with hepatocellular carcinoma (HCC) with elevated alpha-fetoprotein (AFP) levels who have disease progression following first-line sorafenib (Nexavar), the antiangiogenic agent ramucirumab was associated with a modest but significant improvement in overall survival, compared with placebo, a pooled analysis of clinical trial data showed.

Among 316 patients assigned to receive ramucirumab in the phase 3 REACH and REACH-2 trials, median overall survival was 8.1 months, compared with 5.0 months for placebo, an absolute difference of 3.1 months that translated into a hazard ratio favoring ramucirumab of 0.694 (P = .0002), reported Andrew X. Zhu, MD, PhD, of the Massachusetts General Hospital Cancer Center in Boston.

Ramucirumab (Cyramza) is a human IgG1 monoclonal antibody directed against ligand activation of the vascular endothelial growth factor receptor 2.