Addressing The Joint Commission's Concern About Opioid-Induced Respiratory Depression

The recent article by Susan Kreimer, “Serious Complications from Opioid Overuse in Hospitalized Patients Prompts Nationwide Alert” (February 2013, p. 34) highlights a very important patient safety issue—opioid-induced respiratory depression.

Post-operative patients often manage their pain with patient-controlled analgesia (PCA) pumps. An estimated 14 million patients use PCA annually.1 As the article points out, PCA “offers built-in safety features—if patients become too sedated, they can’t push a button for extra doses—but that isn’t always the case.”

As Dr. Jason McKeown says, “While PCA may be the safest mode of opioid delivery, it is true that regardless of the route of administration, respiratory depression may still occur. To help prevent such incidents from happening, it should be remembered that some of the most significant strides in medicine and surgery are directly attributable to anesthesiology’s advances in patient monitoring.”

To help reduce adverse events and deaths associated with PCA pumps, the Physician-Patient Alliance for Health & Safety (PPAHS) recently released a safety checklist that reminds caregivers of the essential steps for initiating PCA with a patient and for continuing to assess that patient’s use of PCA. The checklist was developed in consultation with a group of 19 renowned health experts and is available as a free download at www.ppahs.org.

The checklist recommends that five steps be completed when initiating PCA:

- Risk factors that increase risk of respiratory depression have been considered.

- Pre-procedural cognitive assessment has determined patient is capable of participating in pain management.

However, it should be noted that these first two steps are not an attempt at risk stratification. In reviewing current approaches to address failure-to-rescue, Dr. Andreas Taenzer and his colleagues showed that these current approaches are not able to predict which patients are at risk and at which point the crisis can be detected.

- Patient has been provided with information on proper patient use of PCA pump (other recipients of information—family/visitors) and purpose of monitoring.

The Institute for Safe Medication Practices (www.ismp.org) cautions against PCA by proxy and stresses the importance of patient education. The safe use of PCA includes making sure that the patient controlling the device actually knows how to use it and understands the importance of the monitoring used to continuously assess his or her status.

- Two health-care providers have independently double-checked: patient ID; allergies; drug selection and concentration; dosage adjustments; pump settings; and line attachment to patient and tubing insertion.

Error prevention is critical. The Pennsylvania Patient Safety Authority recently released its analysis of reported medication errors and adverse drug reactions involving intravenous fentanyl. Researchers found 2,319 events between June 2004 and March 2012; that’s almost 25 events per month. Although nearly one error a day may seem high, the analysis is confined to reports that were made to the authority and includes only fentanyl, a potent, synthetic narcotic analgesic with a rapid onset and short duration of action.

- Patient is electronically monitored with both pulse oximetry and capnography.

As Dr. Robert Stoelting, president of the Anesthesia Patient Safety Foundation, recently stated: “The conclusions and recommendations of APSF are that intermittent ‘spot checks’ of [pulse oximetry] and ventilation are not adequate for reliably recognizing clinically significant, evolving, drug-induced, respiratory depression in the postoperative period....APSF recommends that monitoring be continuous and not intermittent, and that continuous electronic monitoring with both pulse oximetry for oxygenation and capnography for the adequacy of ventilation be considered for all patients.”

Frank Federico, a member of the Patient Safety Advisory Group at The Joint Commission and executive director at the Institute for Healthcare Improvement, concurs: “Although nurse spot checks on patients are advisable, pulse oximetry and capnography are essential risk prevention tools in any pain management plan.”

PPAHS encourages all hospitals and health-care facilities to download and utilize the PCA Safety Checklist.
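As a rough check on the Pennsylvania fentanyl figures quoted above, the monthly and daily rates can be recomputed from the event count and the reporting window. This is only a sketch: it assumes the window runs from June 2004 through March 2012 inclusive and uses an average month length, neither of which is stated in the authority's analysis.

```python
from datetime import date

# Figures quoted in the letter. Counting both endpoint months is an
# assumption about how the reporting window was defined.
EVENTS = 2319
START = date(2004, 6, 1)
END = date(2012, 3, 1)


def months_inclusive(start: date, end: date) -> int:
    """Number of calendar months from start to end, counting both endpoints."""
    return (end.year - start.year) * 12 + (end.month - start.month) + 1


months = months_inclusive(START, END)
events_per_month = EVENTS / months
# Approximate daily rate, using an average month of 365.25 / 12 days.
events_per_day = events_per_month / (365.25 / 12)

print(f"{months} months, {events_per_month:.1f} events/month, "
      f"{events_per_day:.2f} events/day")
# prints: 94 months, 24.7 events/month, 0.81 events/day
```

Under these assumptions the reported events work out to a little under one per day, consistent with the "almost 25 events per month" figure.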

'Systems,' Not 'Points,' Is Correct Terminology for ROS Statements

The “Billing and Coding Bandwagon” article in the May 2011 issue of The Hospitalist (p. 26) was recently brought to my attention. I am concerned that the following statement gives a false impression of acceptable documentation: “When I first started working, I couldn’t believe that I could get audited and fined just because I didn’t add ‘10-point’ or ‘12-point’ to my note of ‘review of systems: negative,’” says hospitalist Amaka Nweke, MD, assistant director with Hospitalists Management Group (HMG) at Kenosha Medical Center in Kenosha, Wis. “I had a lot of frustration, because I had to repackage and re-present my notes in a manner that makes sense to Medicare but makes no sense to physicians.”

In fact, that would still not be considered acceptable documentation for services that require a complete review of systems (99222, 99223, 99219, 99220, 99235, and 99236). The documentation guidelines clearly state: “A complete ROS [review of systems] inquires about the system(s) directly related to the problem(s) identified in the HPI plus all additional body systems.” At least 10 organ systems must be reviewed. Those systems with positive or pertinent negative responses must be individually documented. For the remaining systems, a notation indicating all other systems are negative is permissible. In the absence of such a notation, at least 10 systems must be individually documented.

What Medicare is saying is the provider must have inquired about all 14 systems, not just 10 or 12. The term “point” means nothing in an ROS statement. “Systems” is the correct terminology, not “points.”
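The documentation rule quoted above can be read as two acceptable paths, sketched below purely for illustration. The names `RosNote` and `is_complete_ros` are hypothetical, and the requirement that at least one system be itemized alongside the "all other systems negative" notation is a simplifying assumption, not language from the guidelines. The sketch checks what is written in the note only; it cannot confirm that the provider actually inquired about every system.

```python
from dataclasses import dataclass, field

MIN_SYSTEMS = 10  # threshold quoted from the documentation guidelines


@dataclass
class RosNote:
    # Systems documented one by one (positives and pertinent negatives).
    individually_documented: set = field(default_factory=set)
    # True if the note states that all other systems are negative.
    all_others_negative: bool = False


def is_complete_ros(note: RosNote) -> bool:
    """Illustrative check of the two documentation paths in the quoted rule."""
    if note.all_others_negative:
        # Path 1: pertinent systems itemized, plus the notation.
        # Requiring at least one itemized system is an assumption.
        return len(note.individually_documented) >= 1
    # Path 2: no notation, so at least 10 systems must be itemized.
    return len(note.individually_documented) >= MIN_SYSTEMS


# A bare "review of systems: negative" with nothing itemized.
bare = RosNote(all_others_negative=True)
# Pertinent systems itemized, remainder covered by the notation.
with_notation = RosNote({"cardiovascular", "respiratory"}, all_others_negative=True)

print(is_complete_ros(bare), is_complete_ros(with_notation))
# prints: False True
```

Note that nothing in the check depends on the word "point"; only the itemized systems and the notation matter.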

I am afraid the article is misleading and could be providing inappropriate documentation advice to hospitalists dealing with CMS and AMA guidelines.

Some Thoughts on the Patient-Doctor Relationship

There is an inherent power differential in the patient-doctor relationship: The patient regards the doctor as an authority on the patient’s physical or emotional state and is thus either intellectually or emotionally dependent on the doctor’s treatment plan and advice. It is therefore absolutely essential that the doctor respect the patient as an equal participant in the treatment. Although the doctor certainly has knowledge about how similar conditions were successfully treated in the past, one hopes that a medical professional will display an attitude of respect and mutual collaboration with the patient to resolve his/her problem.

Listening is a key component of conveying an attitude of respect toward the patient. Nowadays practitioners most often take notes at their computers while speaking with the patient. This is certainly time-efficient and may in fact be necessary for a medical practice to remain solvent given the demands of Medicare and insurance companies. However, multitasking does not convey to patients that they are connecting with the doctor. Listening is a complex action that involves not only the ears but also the eyes, the kinesthetic responses of the whole body, and attention to the patient’s nonverbal communication.

Some of the key faux pas to avoid when listening to the patient include:

- Not centering oneself before engaging in a “crucial conversation”;

- Not listening because one is thinking ahead to his/her own response;

- Not maintaining eye contact;

- Not being aware of when one feels challenged and/or defensive;

- Discouraging the patient from contributing his/her own ideas;

- Not allowing the patient to give feedback on what s/he heard as instructions; and

- Taking phone calls or allowing interruptions during a consultation.

It is always helpful to give a patient clear, written instructions about medications, diet, exercise, etc., that result from the consultation. Some doctors send this report via secure email to the patient for review, which is an excellent technique.

The art of apology is another topic that greatly impacts the doctor-patient relationship, as well as the doctor’s relationship with the patient’s family members. This art is a process that has recently emerged in the medical and medical insurance industries. Kaiser Permanente’s director of medical-legal affairs has adopted the practice of asking permission to videotape the actual conversation in which a physician apologizes to a patient for a mistake in a procedure. These conversations are meant to help medical professionals learn how to admit mistakes and ask for forgiveness. Oftentimes patients are looking for just such a communication, which may allow them to put to rest feelings of resentment, bitterness, and regret.

Our patients’ well-being is our ideal goal. Knowing that they have been heard and their feelings understood may in the long run allow patients and their families to heal mind/body/soul more powerfully than we had ever thought. Of course, in our litigious society this may well be an art that remains to be developed over the long term.

Coordinated Approach May Help in Caring for Hospitals’ Neediest Patients

To my way of thinking, a person’s diagnosis or pathophysiology is not as strong a predictor of needing inpatient hospital care as it might have been 10 or 20 years ago. Rather than the clinical diagnosis (e.g., pneumonia), it seems to me that frailty or social complexity often is the principal determinant of which patients are admitted to a hospital for medical conditions.

Some of these patients are admitted frequently but appear to realize little or no benefit from hospitalization. These patients typically have little or no social support, and they often have either significant mental health disorders or substance abuse, or both. Much has been written about these patients, and I recommend an article by Dr. Atul Gawande in the Jan. 24, 2011, issue of The New Yorker titled “The Hot Spotters: Can We Lower Medical Costs by Giving the Neediest Patients Better Care?”

The Agency for Healthcare Research and Quality’s “Statistical Brief 354” on how health-care expenditures are allocated across the population reported that 1% of the population accounted for more than 22% of health-care spending in 2008. One in 5 of those were in that category again in 2009. Some of these patients would benefit from care plans.

The Role of Care Plans

It seems that there may be few effective inpatient interventions that will benefit these patients. After all, they have chronic issues that require ongoing relationships with outpatient providers, something that many of these patients lack. But for some (most?) of these patients, it seems clear that frequent hospitalizations don’t help and sometimes just perpetuate or worsen the patient’s dependence on the hospital at a high financial cost to society—and significant frustration and burnout on the part of hospital caregivers, including hospitalists.

For most hospitals, this problem is significant enough to require some sort of coordinated approach to the care of the dozens of types of patients in this category. Implementing whatever plan of care seems appropriate to the caregivers during each admission is frustrating, ensures lots of variation in care, and makes it easier for manipulative patients to abuse the hospital resources and personnel.

A better approach is to follow the same plan of care from one hospital visit to the next. You already knew that. But developing a care plan to follow during each ED visit and admission is time-consuming and often fraught with uncertainty about where boundaries should be set. So if you’re like me, you might just try to guide the patient to discharge this time and hope that whoever sees the patient on the next admission will take the initiative to develop the care plan. The result is that few such plans are developed.

Your Hospital Needs a Care Plan

Relying on individual doctors or nurses to take the initiative to develop care plans will almost always mean that few plans are developed, that they vary in effectiveness, and that other providers may not be aware a plan exists. This was the case at the hospital where I practice until I heard Rick Hilger, MD, SFHM, a hospitalist at Regions Hospital in St. Paul, Minn., present on this topic at HM12 in San Diego.

Dr. Hilger led a multidisciplinary team to develop care plans (they call them “restriction care plans”) and found that they dramatically reduced the rate of hospital admissions and ED visits for these patients. Hearing about this experience served as a kick in the pants for me, so I did much the same thing at “my” hospital. We have now developed plans for more than 20 patients and found that they visit our ED and are admitted less often. And, anecdotally at least, hospitalists and other hospital staff find that the care plans reduce, at least a little, the stress of caring for these patients.

Unanswered Questions

Although it seems clear that care plans reduce visits to the hospital that develops them, I suspect that some of these patients aren’t consuming any fewer health-care resources. They may just seek care from a different hospital.

My home state of Washington is working to develop individual patient care plans available to all hospitals in the state. A system called the Emergency Department Information Exchange (EDIE) has been adopted by nearly all the hospitals in the state. It allows them to share information on ED visits and such things as care plans with one another. For example, through EDIE, each hospital could see the opiate dosing schedule and admission criteria agreed to by patient and primary-care physician.

So it seems that care plans and the technology to share them can make it more difficult for patients to harm themselves by visiting many hospitals to get excessive opiate prescriptions, for example. This should benefit the patient and lower ED and hospital expenditures for these patients. But we don’t know what portion of costs simply is shifted to other settings, so there is no easy way to know the net effect on health-care costs.

An important unanswered question is whether these care plans improve patient well-being. It seems clear they do in some cases, but it is hard to know whether some patients may be worse off because of the plan.

Conclusion

I think nearly every hospital would benefit from a care plan committee composed of at least one hospitalist, one ED physician, one nursing representative, and potentially members of other disciplines (see “Care Plan Attributes,” above). Our committee includes our inpatient psychiatrist, a really valuable contributor.

Dr. Nelson has been a practicing hospitalist since 1988. He is co-founder and past president of SHM, and principal in Nelson Flores Hospital Medicine Consultants. He is co-director for SHM’s “Best Practices in Managing a Hospital Medicine Program” course. Write to him at [email protected].

Surviving Sepsis Campaign 2012 Guidelines: Updates For the Hospitalist

Background

Sepsis is a clinical syndrome with systemic effects that can progress to severe sepsis and/or septic shock. The incidence of severe sepsis and septic shock is rising in the United States, and these syndromes are associated with significant morbidity and a mortality rate as high as 25% to 35%.1 In fact, sepsis is one of the 10 leading causes of death in the U.S., accounting for 2% of hospital admissions but 17% of in-hospital deaths.1

The main principles of effective treatment for severe sepsis and septic shock are timely recognition and early aggressive therapy. Launched in 2002, the Surviving Sepsis Campaign (SSC) was the result of a collaboration of three professional societies. The goal of the SSC collaborative was to reduce mortality from severe sepsis and septic shock by 25%. To that end, the SSC convened representatives from several international societies to develop a set of evidence-based guidelines as a means of guiding clinicians in optimizing management of patients with severe sepsis and septic shock. Since the original publication of the SSC guidelines in 2004, there have been two updates—one in 2008 and one in February 2013.2

Guideline Updates

Quantitative, protocol-driven initial resuscitation in the first six hours for patients with severe sepsis and septic shock remains a high-level recommendation, but the SSC has added normalization of the lactate level as a resuscitation goal. This new suggestion is based on two studies published since the 2008 SSC guidelines that showed noninferiority to previously established goals and absolute mortality benefit.3,4

There is a new focus on screening for sepsis and the use of hospital-based performance-improvement programs, which were not addressed in the 2008 SSC guidelines. Seriously ill patients with suspected infection should be screened in order to identify sepsis early in the hospital course. Additionally, the guidelines recommend that hospitals implement performance-improvement measures through which multidisciplinary teams can improve compliance with the SSC bundles. The committee cites its own data as the model but ultimately leaves this recommendation ungraded with regard to the quality of available supporting evidence.5

Cultures drawn before antibiotics and early imaging to confirm potential sources are still recommended, but the committee has added the use of the 1,3-beta-D-glucan assay and the mannan antigen and anti-mannan antibody assays when invasive candidiasis is being considered as the infective agent. The committee notes the known risk of false-positive results with these assays and warns that they should be used with caution.

Early, broad-spectrum antibiotic administration within the first hour of presentation was upgraded for severe sepsis and downgraded for septic shock. Double coverage for suspected gram-negative infection is not specifically recommended but can be considered when highly antibiotic-resistant pathogens are potentially present. Daily assessment of the appropriate antibiotic regimen remains an important tenet, and the use of low procalcitonin levels as a tool to assist in the decision to discontinue antibiotics has been introduced. Source control is still strongly recommended in the first 12 hours of treatment.

The SSC 2012 guidelines specifically address the rate of fluid administered and the type of fluid that should be used. It is now recommended that a fluid challenge of 30 mL/kg be used for initial resuscitation, but the guidelines leave it up to the clinician to give more fluid if needed. There is a strong push for use of crystalloids rather than colloids during initial resuscitation and thereafter. Disfavor for colloids stemmed from trials showing increased mortality when comparing resuscitation with hydroxyethyl starch versus crystalloid for patients in septic shock.6,7 Albumin, on the other hand, is recommended to resuscitate patients with severe sepsis and septic shock in cases for which large amounts of crystalloid are required.
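The arithmetic behind a weight-based fluid challenge can be sketched in a few lines. The example below is illustrative only: the function name and the 70-kg patient weight are assumptions made for this sketch, and the 20 mL/kg comparison uses the earlier figure cited in the analysis later in this article. It is not a dosing tool.

```python
def initial_fluid_challenge_ml(weight_kg: float, ml_per_kg: float = 30.0) -> float:
    """Return the initial crystalloid volume in mL for a given body weight."""
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    return weight_kg * ml_per_kg


weight = 70.0  # hypothetical patient weight in kg (assumption for illustration)
new_volume = initial_fluid_challenge_ml(weight)        # 2012 figure: 30 mL/kg
old_volume = initial_fluid_challenge_ml(weight, 20.0)  # earlier figure: 20 mL/kg
print(f"30 mL/kg: {new_volume:.0f} mL; 20 mL/kg: {old_volume:.0f} mL")
# prints "30 mL/kg: 2100 mL; 20 mL/kg: 1400 mL"
```

For this hypothetical patient, the change amounts to an additional 700 mL in the initial challenge, before any further fluid the clinician chooses to give.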

The 2012 SSC guidelines recommend norepinephrine (NE) alone as the first-line vasopressor in sepsis and no longer include dopamine in this category. In fact, the use of dopamine in septic shock has been downgraded and should only be considered in patients at low risk of tachyarrhythmia and in bradycardia syndromes. Epinephrine is now favored as the second agent or as a substitute for NE. Phenylephrine is no longer recommended unless there is a contraindication to using NE, the patient has a high cardiac output, or it is used as a salvage therapy. Vasopressin is considered only an adjunctive agent to NE and should never be used alone.

Recommendations regarding corticosteroid therapy remain largely unchanged from the 2008 SSC guidelines, which only support their use when adequate volume resuscitation and vasopressor support have failed to achieve hemodynamic stability. Glucose control is recommended but at the new target of achieving a level of <180 mg/dL, up from a previous target of <150 mg/dL.

Notably, recombinant human activated protein C was completely omitted from the 2012 guidelines, prompted by the manufacturer's voluntary removal of the drug after it failed to show benefit. Use of selenium and intravenous immunoglobulin received comment, but there is insufficient evidence supporting their benefit at the current time. The guidelines also encourage clinicians to incorporate goals of care and end-of-life issues into the treatment plan and to discuss these with patients and/or surrogates early in treatment.

Guideline Analysis

Prior versions of the SSC guidelines have been met with a fair amount of skepticism.8 Much of the criticism is based on the industry sponsorship of the 2004 version, the lack of transparency regarding potential conflicts of interest of the committee members, and the fact that the bundle recommendations largely were based on only one trial and, therefore, were not evidence-based.9 The 2012 SSC committee seems to have addressed these issues, as the 2008 and current versions of the guidelines are free of commercial sponsorship. The committee also rigorously applied the GRADE system to methodically assess the strength and quality of supporting evidence. The result is a set of guidelines that are partially evidence-based and partially based on expert opinion, but this is clearly delineated in these newest guidelines. This provides clinicians with a clear and concise recommended approach to the patient with severe sepsis and septic shock.

The guidelines continue to place a heavy emphasis on three- and six-hour treatment bundles, and with the assistance of the Institute for Healthcare Improvement's efforts to improve implementation, the bundles are already widespread, with an eye toward expanding across the country. The components of the three-hour treatment bundle (lactate measurement, blood cultures prior to initiation of antibiotics, broad-spectrum antibiotics, and IV crystalloids for hypotension or for a lactate of >4 mmol/L) recommended by the SSC have not changed substantially since 2008. The one exception is the volume of IV crystalloid to be administered, 30 mL/kg, which is up from 20 mL/kg. Only time will tell how this change will affect bundle compliance or reduce mortality. But it does pose a significant challenge to quality and performance-improvement groups accustomed to tracking compliance with IV fluid administration under the old standard, and it will require the educational campaigns associated with any such change.
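To make the tracking challenge concrete, here is a minimal sketch of how a quality group might check the three-hour bundle elements listed above against a case record. The record fields, class name, and function name are hypothetical and invented for this illustration; the check ignores timing within the three-hour window and uses the >4 mmol/L lactate threshold as stated in this article.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SepsisCase:
    """Hypothetical abstraction of one chart; all field names are assumptions."""
    weight_kg: float
    lactate_mmol_l: Optional[float]  # None if lactate was never measured
    cultures_before_antibiotics: bool
    broad_spectrum_antibiotics_given: bool
    hypotensive: bool
    crystalloid_ml: float


def three_hour_bundle_met(case: SepsisCase, ml_per_kg: float = 30.0) -> bool:
    """Return True if every bundle element described in the text is satisfied."""
    lactate_measured = case.lactate_mmol_l is not None
    # Fluid is required for hypotension or for a lactate above 4 mmol/L.
    needs_fluid = case.hypotensive or (
        lactate_measured and case.lactate_mmol_l > 4.0
    )
    fluid_ok = (not needs_fluid) or (
        case.crystalloid_ml >= ml_per_kg * case.weight_kg
    )
    return (
        lactate_measured
        and case.cultures_before_antibiotics
        and case.broad_spectrum_antibiotics_given
        and fluid_ok
    )


# Hypothetical 80-kg patient with a lactate of 4.6 mmol/L who received 1,600 mL.
case = SepsisCase(80.0, 4.6, True, True, False, 1600.0)
print(three_hour_bundle_met(case, ml_per_kg=20.0))  # old standard: True
print(three_hour_bundle_met(case))                  # 30 mL/kg standard: False
```

The same hypothetical chart passes under the 20 mL/kg standard and fails under 30 mL/kg, which is the kind of shift in measured compliance that tracking groups would need to anticipate.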

It appears that the SSC is here to stay, now in its third iteration. The lasting legacy of the SSC guidelines might not rest with the content of the guidelines, per se, but in raising awareness of severe sepsis and septic shock in a way that had not previously been considered.

HM Takeaways

The revised 2012 SSC updates bring some new tools to the clinician for early recognition and effective management of patients with sepsis. The push for institutions to adopt screening and performance measures reflects a general trend in health care to create high-performance systems. As these new guidelines are put into practice, several changes might require adjustments to the quality metrics being tracked at institutions nationally and internationally.

Dr. Pendharker is assistant professor of medicine in the division of hospital medicine at the University of California San Francisco and San Francisco General Hospital. Dr. Gomez is assistant professor of medicine in the division of pulmonary and critical care medicine at UCSF and San Francisco General Hospital.

References

1. Hall MJ, Williams SN, DeFrances CJ, et al. Inpatient care for septicemia or sepsis: a challenge for patients and hospitals. NCHS Data Brief. 2011:1-8.

2. Dellinger RP, Levy MM, Rhodes A, et al. Surviving Sepsis Campaign: international guidelines for management of severe sepsis and septic shock: 2012. Crit Care Med. 2013;41:580-637.

3. Jansen TC, van Bommel J, Schoonderbeek FJ, et al. Early lactate-guided therapy in intensive care unit patients: a multicenter, open-label, randomized controlled trial. Am J Respir Crit Care Med. 2010;182:752-761.

4. Jones AE, Shapiro NI, Trzeciak S, et al. Lactate clearance vs central venous oxygen saturation as goals of early sepsis therapy: a randomized clinical trial. JAMA. 2010;303:739-746.

5. Levy MM, Dellinger RP, Townsend SR, et al. The Surviving Sepsis Campaign: results of an international guideline-based performance improvement program targeting severe sepsis. Crit Care Med. 2010;38:367-374.

6. Guidet B, Martinet O, Boulain T, et al. Assessment of hemodynamic efficacy and safety of 6% hydroxyethylstarch 130/0.4 vs. 0.9% NaCl fluid replacement in patients with severe sepsis: The CRYSTMAS study. Crit Care. 2012;16:R94.

7. Perner A, Haase N, Guttormsen AB, et al. Hydroxyethyl starch 130/0.42 versus Ringer’s acetate in severe sepsis. N Engl J Med. 2012;367:124-134.

8. Marik PE. Surviving sepsis: going beyond the guidelines. Ann Intensive Care. 2011;1:17.

9. Rivers E, Nguyen B, Havstad S, et al. Early goal-directed therapy in the treatment of severe sepsis and septic shock. N Engl J Med. 2001;345:1368-1377.

Background

Sepsis is a clinical syndrome with systemic effects that can progress to severe sepsis and/or septic shock. The incidence of severe sepsis and septic shock is rising in the United States, and these syndromes are associated with significant morbidity and a mortality rate as high as 25% to 35%.1 In fact, sepsis is one of the 10 leading causes of death in the U.S., accounting for 2% of hospital admissions but 17% of in-hospital deaths.1

The main principles of effective treatment for severe sepsis and septic shock are timely recognition and early aggressive therapy. Launched in 2002, the Surviving Sepsis Campaign (SSC) was the result of a collaboration of three professional societies. The goal of the SSC collaborative was to reduce mortality from severe sepsis and septic shock by 25%. To that end, the SSC convened representatives from several international societies to develop a set of evidence-based guidelines as a means of guiding clinicians in optimizing management of patients with severe sepsis and septic shock. Since the original publication of the SSC guidelines in 2004, there have been two updates—one in 2008 and one in February 2013.2

Guideline Updates

Quantitative, protocol-driven initial resuscitation in the first six hours for patients with severe sepsis and septic shock remains a high-level recommendation, but SSC has added normalization of the lactate level as a resuscitation goal. This new suggestion is based on two studies published since the 2008 SCC guidelines that showed noninferiority to previously established goals and absolute mortality benefit.3,4

There is a new focus on screening for sepsis and the use of hospital-based performance-improvement programs, which were not previously addressed in the 2008 SCC guidelines. Patients with suspected infections and who are seriously ill should be screened in order to identify sepsis early during the hospital course. Additionally, it is recommended that hospitals implement performance-improvement measures by which multidisciplinary teams can address treatment of sepsis by improving compliance with the SSC bundles, citing their own data as the model but ultimately leaving this recommendation as ungradable in regards to the quality of available supporting evidence.5

Cultures drawn before antibiotics and early imaging to confirm potential sources are still recommended, but the committee has added the use of one: 3 beta D-glucan and the mannan antigen and anti-mannan antibody assays when considering invasive candidiasis as your infective agent. They do note the known risk of false positive results with these assays and warn that they should be used with caution.

Early, broad-spectrum antibiotic administration within the first hour of presentation was upgraded for severe sepsis and downgraded for septic shock. The decision to initiate double coverage for suspected gram-negative infection is not recommended specifically but can be considered in situations when highly antibiotic resistant pathogens are potentially present. Daily assessment of the appropriate antibiotic regimen remains an important tenet, and the use of low procalcitonin levels as a tool to assist in the decision to discontinue antibiotics has been introduced. Source control is still strongly recommended in the first 12 hours of treatment.

The SSC 2012 guidelines specifically address the rate of fluid administered and the type of fluid that should be used. It is now recommended that a fluid challenge of 30 mL/kg be used for initial resuscitation, but the guidelines leave it up to the clinician to give more fluid if needed. There is a strong push for use of crystalloids rather than colloids during initial resuscitation and thereafter. Disfavor for colloids stemmed from trials showing increased mortality when comparing resuscitation with hydroxyethyl starch versus crystalloid for patients in septic shock.6,7 Albumin, on the other hand, is recommended to resuscitate patients with severe sepsis and septic shock in cases for which large amounts of crystalloid are required.

The 2012 SSC guidelines recommend norepinephrine (NE) alone as the first-line vasopressor in sepsis and no longer include dopamine in this category. In fact, the use of dopamine in septic shock has been downgraded and should only to be considered in patients at low risk of tachyarrhythmia and in bradycardia syndromes. Epinephrine is now favored as the second agent or as a substitute to NE. Phenylephrine is no longer recommended unless there is contraindication to using NE, the patient has a high cardiac output, or it is used as a salvage therapy. Vasopressin is considered only an adjunctive agent to NE and should never be used alone.

Recommendations regarding corticosteroid therapy remain largely unchanged from 2008 SCC guidelines, which only support their use when adequate volume resuscitation and vasopressor support has failed to achieve hemodynamic stability. Glucose control is recommended but at the new target of achieving a level of <180 mg/dL, up from a previous target of <150 mg/dL.

Notably, recombinant human activated protein C was completely omitted from the 2012 guidelines, prompted by the voluntary removal of the drug by the manufacturer after failing to show benefit. Use of selenium and intravenous immunoglobulin received comment, but there is insufficient evidence supporting their benefit at the current time. They also encourage clinicians to incorporate goals of care and end-of-life issues into the treatment plan and discuss this with patients and/or surrogates early in treatment.

Guideline Analysis

Prior versions of the SSC guidelines have been met with a fair amount of skepticism.8 Much of the criticism is based on the industry sponsorship of the 2004 version, the lack of transparency regarding potential conflicts of interest of the committee members, and that the bundle recommendations largely were based on only one trial and, therefore, not evidenced-based.9 The 2012 SSC committee seems to have addressed these issues as the guidelines are free of commercial sponsorship in the 2008 and current versions. They also rigorously applied the GRADE system to methodically assess the strength and quality of supporting evidence. The result is a set of guidelines that are partially evidence-based and partially based on expert opinion, but this is clearly delineated in these newest guidelines. This provides clinicians with a clear and concise recommended approach to the patient with severe sepsis and septic shock.

The guidelines continue to place a heavy emphasis on three- and six-hour treatment bundles, and with the assistance of the Institute for Health Care Improvement efforts to improve implementation of the bundle, they are already are widespread with an eye to expand across the country. The components of the three-hour treatment bundle (lactate measurement, blood cultures prior to initiation of antibiotics, broad-spectrum antibiotics, and IV crystalloids for hypotension or for a lactate of >4 mmol/L) recommended by the SSC have not changed substantially since 2008. The one exception is the rate at which IV crystalloid should be administered of 30 mL/kg, which is up from 20 mL/kg. Only time will tell how this change will affect bundle compliance or reduce mortality. But this does pose a significant challenge to quality and performance improvement groups accustomed to tracking compliance with IV fluid administration under the old standard and the educational campaigns associated with a change.

It appears that the SSC is here to stay, now in its third iteration. The lasting legacy of the SSC guidelines might not rest with the content of the guidelines, per se, but in raising awareness of severe sepsis and septic shock in a way that had not previously been considered.

HM Takeaways

The revised 2012 SCC updates bring some new tools to the clinician for early recognition and effective management of patients with sepsis. The push for institutions to adopt screening and performance measures reflects a general trend in health care to create high-performance systems. As these new guidelines are put into practice, there are several changes that might require augmentation of quality metrics being tracked at institutions nationally and internationally.

Dr. Pendharker is assistant professor of medicine in the division of hospital medicine at the University of California San Francisco and San Francisco General Hospital. Dr. Gomez is assistant professor of medicine in the division of pulmonary and critical care medicine at UCSF and San Francisco General Hospital.

References

1. Hall MJ, Williams SN, DeFrances CJ, et al. Inpatient care for septicemia or sepsis: a challenge for patients and hospitals. NCHS Data Brief. 2011:1-8.

2. Dellinger RP, Levy MM, Rhodes A, et al. Surviving Sepsis Campaign: international guidelines for management of severe sepsis and septic shock: 2012. Crit Care Med. 2013;41:580-637.

3. Jansen TC, van Bommel J, Schoonderbeek FJ, et al. Early lactate-guided therapy in intensive care unit patients: a multicenter, open-label, randomized controlled trial. Am J Respir Crit Care Med. 2010;182:752-761.

4. Jones AE, Shapiro NI, Trzeciak S, et al. Lactate clearance vs central venous oxygen saturation as goals of early sepsis therapy: a randomized clinical trial. JAMA. 2010;303:739-746.

5. Levy MM, Dellinger RP, Townsend SR, et al. The Surviving Sepsis Campaign: results of an international guideline-based performance improvement program targeting severe sepsis. Crit Care Med. 2010;38:367-374.

6. Guidet B, Martinet O, Boulain T, et al. Assessment of hemodynamic efficacy and safety of 6% hydroxyethylstarch 130/0.4 vs. 0.9% NaCl fluid replacement in patients with severe sepsis: The CRYSTMAS study. Crit Care. 2012;16:R94.

7. Perner A, Haase N, Guttormsen AB, et al. Hydroxyethyl starch 130/0.42 versus Ringer’s acetate in severe sepsis. N Engl J Med. 2012;367:124-134.

8. Marik PE. Surviving sepsis: going beyond the guidelines. Ann Intensive Care. 2011;1:17.

9. Rivers E, Nguyen B, Havstad S, et al. Early goal-directed therapy in the treatment of severe sepsis and septic shock. N Engl J Med. 2001;345:1368-1377.

Background

Sepsis is a clinical syndrome with systemic effects that can progress to severe sepsis and/or septic shock. The incidence of severe sepsis and septic shock is rising in the United States, and these syndromes are associated with significant morbidity and a mortality rate as high as 25% to 35%.1 In fact, sepsis is one of the 10 leading causes of death in the U.S., accounting for 2% of hospital admissions but 17% of in-hospital deaths.1

The main principles of effective treatment for severe sepsis and septic shock are timely recognition and early aggressive therapy. Launched in 2002, the Surviving Sepsis Campaign (SSC) was the result of a collaboration of three professional societies. The goal of the SSC collaborative was to reduce mortality from severe sepsis and septic shock by 25%. To that end, the SSC convened representatives from several international societies to develop a set of evidence-based guidelines as a means of guiding clinicians in optimizing management of patients with severe sepsis and septic shock. Since the original publication of the SSC guidelines in 2004, there have been two updates—one in 2008 and one in February 2013.2

Guideline Updates

Quantitative, protocol-driven initial resuscitation in the first six hours for patients with severe sepsis and septic shock remains a high-level recommendation, but the SSC has added normalization of the lactate level as a resuscitation goal. This new suggestion is based on two studies published since the 2008 SSC guidelines that showed noninferiority to previously established goals and absolute mortality benefit.3,4

There is a new focus on screening for sepsis and on the use of hospital-based performance-improvement programs, neither of which was addressed in the 2008 SSC guidelines. Seriously ill patients with suspected infection should be screened in order to identify sepsis early in the hospital course. Additionally, it is recommended that hospitals implement performance-improvement measures by which multidisciplinary teams can address treatment of sepsis by improving compliance with the SSC bundles. The committee cites its own data as the model but ultimately leaves this recommendation ungraded with regard to the quality of available supporting evidence.5

Cultures drawn before antibiotics and early imaging to confirm potential sources are still recommended, but the committee has added the use of the 1,3 beta-D-glucan assay and the mannan antigen and anti-mannan antibody assays when invasive candidiasis is being considered as the infective agent. The committee notes the known risk of false-positive results with these assays and warns that they should be used with caution.

Early, broad-spectrum antibiotic administration within the first hour of presentation was upgraded for severe sepsis and downgraded for septic shock. Double coverage for suspected gram-negative infection is not specifically recommended but can be considered when highly antibiotic-resistant pathogens are potentially present. Daily assessment of the appropriate antibiotic regimen remains an important tenet, and the use of low procalcitonin levels as a tool to assist in the decision to discontinue antibiotics has been introduced. Source control is still strongly recommended in the first 12 hours of treatment.

The SSC 2012 guidelines specifically address the rate of fluid administered and the type of fluid that should be used. It is now recommended that a fluid challenge of 30 mL/kg be used for initial resuscitation, but the guidelines leave it up to the clinician to give more fluid if needed. There is a strong push for use of crystalloids rather than colloids during initial resuscitation and thereafter. Disfavor for colloids stemmed from trials showing increased mortality when comparing resuscitation with hydroxyethyl starch versus crystalloid for patients in septic shock.6,7 Albumin, on the other hand, is recommended to resuscitate patients with severe sepsis and septic shock in cases for which large amounts of crystalloid are required.

The 2012 SSC guidelines recommend norepinephrine (NE) alone as the first-line vasopressor in sepsis and no longer include dopamine in this category. In fact, the use of dopamine in septic shock has been downgraded and should be considered only in patients at low risk of tachyarrhythmia and in bradycardia syndromes. Epinephrine is now favored as the second agent or as a substitute for NE. Phenylephrine is no longer recommended unless there is a contraindication to using NE, the patient has a high cardiac output, or it is used as salvage therapy. Vasopressin is considered only an adjunctive agent to NE and should never be used alone.

Recommendations regarding corticosteroid therapy remain largely unchanged from the 2008 SSC guidelines, which support their use only when adequate volume resuscitation and vasopressor support have failed to achieve hemodynamic stability. Glucose control is recommended but at a new target level of <180 mg/dL, up from the previous target of <150 mg/dL.

Notably, recombinant human activated protein C was completely omitted from the 2012 guidelines, prompted by the manufacturer's voluntary removal of the drug after it failed to show benefit. Use of selenium and intravenous immunoglobulin received comment, but there is insufficient evidence supporting their benefit at the current time. The guidelines also encourage clinicians to incorporate goals of care and end-of-life issues into the treatment plan and to discuss these with patients and/or surrogates early in treatment.

Guideline Analysis

Prior versions of the SSC guidelines have been met with a fair amount of skepticism.8 Much of the criticism centers on the industry sponsorship of the 2004 version, the lack of transparency regarding potential conflicts of interest among committee members, and the fact that the bundle recommendations were based largely on a single trial and were therefore not evidence-based.9 The 2012 SSC committee seems to have addressed these issues: both the 2008 and current versions are free of commercial sponsorship, and the committee rigorously applied the GRADE system to assess the strength and quality of supporting evidence. The result is a set of guidelines that is partly evidence-based and partly based on expert opinion, with the distinction clearly delineated. This provides clinicians with a clear and concise recommended approach to the patient with severe sepsis and septic shock.

How Should Common Symptoms at the End of Life be Managed?

Case

A 58-year-old male with colon cancer metastatic to the liver and lungs presents with vomiting, dyspnea, and abdominal pain. His disease has progressed through third-line chemotherapy and his care is now focused entirely on symptom management. He has not had a bowel movement in five days and he began vomiting two days ago.

Overview

The majority of patients in the United States die in acute-care hospitals. The Study to Understand Prognosis and Preferences for Outcomes and Risks of Treatments (SUPPORT), which evaluated the courses of close to 10,000 hospitalized patients with serious and life-limiting illnesses, illustrated that patients’ end-of-life (EOL) experiences often are characterized by poor symptom management and invasive care that is not congruent with the patients’ overall goals of care.1 Studies of factors identified as priorities in EOL care have consistently shown that excellent pain and symptom management are highly valued by patients and families. As the hospitalist movement continues to grow, hospitalists will play a large role in caring for patients at EOL and will need to know how to provide adequate pain and symptom management so that high-quality care can be achieved.

Pain: A Basic Tenet

A basic tenet of palliative medicine is to evaluate and treat all types of suffering.2 Physical pain at EOL is frequently accompanied by other types of pain, such as psychological, social, religious, or existential pain. However, this review will focus on the pharmacologic management of physical pain.

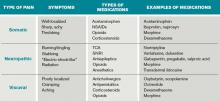

Pain management must begin with a thorough evaluation of the severity, location, and characteristics of the discomfort to assess which therapies are most likely to be beneficial (see Table 1).3 The consistent use of one scale of pain severity (such as 0-10, or mild/moderate/severe) assists in the choice of initial dose of pain medication, in determining the response to the medication, and in assessing the need for change in dose.4