Long-term behavioral follow-up of children exposed to mood stabilizers and antidepressants: A look forward

Much of the focus of reproductive psychiatry over the last 1 to 2 decades has been on issues regarding risk of fetal exposure to psychiatric medications in the context of the specific risk for teratogenesis or organ malformation. Concerns and questions are mostly focused on exposure to any number of medications that women take during the first trimester, as it is during that period that the major organs are formed.

More recently, there has been appropriate interest in the effect of fetal exposure to psychiatric medications with respect to risk for obstetrical and neonatal complications. This has particularly been the case with antidepressants: fetal exposure to these medications, while associated with symptoms of transient jitteriness and irritability about 20% of the time, has not been associated with symptoms requiring frank clinical intervention.

Concerning mood stabilizers, the risk for organ dysgenesis following fetal exposure to sodium valproate has been very well established, and we’ve known for over a decade about the adverse effects of fetal exposure to sodium valproate on behavioral outcomes (Lancet Neurol. 2013 Mar;12[3]:244-52). We also now have ample data on lamotrigine, one of the medicines most widely used by reproductive-age women for treatment of bipolar disorder, that support the absence of a risk of organ malformation with first-trimester exposure.

Most recently, a study of 292 children of women with epilepsy evaluated outcomes when mothers were treated with more modern anticonvulsants such as lamotrigine and levetiracetam, alone or as polytherapy. The results showed no difference in language, motor, cognitive, social, emotional, and general adaptive functioning in children exposed to either lamotrigine or levetiracetam relative to unexposed children of women with epilepsy. However, the researchers found that an increase in antiepileptic drug plasma level appeared to be associated with decreased motor and sensory function. These are reassuring data that confirm earlier work, which failed to reveal a signal of concern for lamotrigine, and they now provide some of the first data on levetiracetam, which is widely used by reproductive-age women with epilepsy (JAMA Neurol. 2021 Aug 1;78[8]:927-936). While one caveat of the study is a short follow-up of 2 years, the absence of a signal of concern is reassuring. With more and more data demonstrating that bipolar disorder is an illness that requires chronic treatment for many people, and that discontinuation is associated with high risk for relapse, it is an advance in the field to have data on risk for teratogenesis and data on longer-term neurobehavioral outcomes.

There is vast information regarding reproductive safety, organ malformation, and acute neonatal outcomes for antidepressants. The last decade has brought interest in and analysis of specific reports of increased risk of both autism spectrum disorder (ASD) and attention-deficit/hyperactivity disorder (ADHD) following fetal exposure to antidepressants. What can be said based on reviews of pooled meta-analyses is that the risk for ASD and ADHD has been put to rest for most clinicians and patients (J Clin Psychiatry. 2020 May 26;81[3]:20f13463). With other neurodevelopmental disorders, results have been somewhat inconclusive. Over the last 5-10 years, there have been sporadic reports of concerns about problems in a specific domain of neurodevelopment in offspring of women who have used antidepressants during pregnancy, whether it be speech, language, or motor functioning, but no signal of concern has been consistent.

In a previous column, I addressed a Danish study that showed no increased risk of longer-term sequelae after fetal exposure to antidepressants. Now, a new study has examined 1.93 million pregnancies in the Medicaid Analytic eXtract and 1.25 million pregnancies in the IBM MarketScan Research Database, with follow-up of up to 14 years of age. The specific interval for fetal exposure was from 19 weeks of gestation to delivery, as that is the period that corresponds most to synaptogenesis in the brain. The researchers examined a spectrum of neurodevelopmental disorders such as developmental speech issues, ADHD, ASD, dyslexia, and learning disorders, among others. They found a twofold increased risk for neurodevelopmental disorders in the unadjusted models that flattened to no finding when factoring in environmental and genetic risk variables, highlighting the importance of dealing appropriately with confounders when performing these analyses. The confounders examined include the mother’s use of alcohol and tobacco, her body mass index, and her overall general health (JAMA Intern Med. 2022;182[11]:1149-60).

Given the consistency of these results with earlier data, patients can be increasingly comfortable as they weigh the benefits and risks of antidepressant use during pregnancy, factoring in the risk of fetal exposure with added data on long-term neurobehavioral sequelae. With that said, we need to remember the importance of initiatives to address alcohol consumption, poor nutrition, tobacco use, elevated BMI, and general health during pregnancy. These are modifiable risks that we as clinicians should focus on in order to optimize outcomes during pregnancy.

We have come so far in knowledge about fetal exposure to antidepressants relative to other classes of medications women take during pregnancy, about which, frankly, we are still starved for data. As use of psychiatric medications during pregnancy continues to grow, we can rest a bit more comfortably. But we should also address some of the other behaviors that have adverse effects on maternal and child well-being.

Dr. Cohen is the director of the Ammon-Pinizzotto Center for Women’s Mental Health at Massachusetts General Hospital (MGH) in Boston, which provides information resources and conducts clinical care and research in reproductive mental health. He has been a consultant to manufacturers of psychiatric medications. Email Dr. Cohen at [email protected].

Staving off holiday weight gain

Five pounds of weight gain during the holidays is a disproven myth that pops up annually like holiday lights. But before you do a happy dance and pile that extra whipped cream on your pie, you should know two things. One, people do gain weight during the holidays. Two, the extra pounds tend to stick around because most people never lose their holiday weight. Over time, these extra pounds can lead to obesity and weight-related conditions such as diabetes and hypertension.

Let’s be clear. Your weight is one of many markers of your wellness and metabolic health. However, weight changes can indicate that your health is off balance. Holiday weight gain often comes from indulging in increased rich foods, less physical activity, higher stress levels, and sleep disruption.

Optimizing lifestyle factors and trying to lose weight is challenging any time of the year. However, the holiday bustle makes losing weight during this time even more challenging for most people. But maintaining your weight and overall wellness is manageable with three simple shifts in mindset, mindful eating, and meal strategy. Let’s discuss each.

Mindset

From personal and professional experience, I see two primary attitudes regarding holiday eating. They are either “I’ll wait till January to go on a diet” or “I’m on a diet, so I can’t eat anything I like during the holidays.” Both attitude extremes prevent enjoyable and healthy eating during the holidays because they place the focus on food. With both mindsets, food is in control, which leaves you feeling out of control. Rather than having an “all or none” mindset during the holidays, I encourage you to ask yourself:

- “What matters most to me during the holidays?” In a recent survey, 72% of Americans said they look forward to time with family during the holidays. Although food often accompanies family celebrations, it’s the time with family that matters most. Choose to savor sweet time spent with loved ones instead of stuffing yourself with excess sugary sweets.

- “How can I enjoy myself without food or alcoholic beverages?” So often, we eat or drink certain foods out of habit. Shift your mindset from “we always do this” to “what could we do instead?” Asking this question may be the doorway to creating new, non–food-centered traditions.

- “How can I have the foods I love during the holidays and still meet my weight and wellness goals?” This question helps you create opportunities instead of depriving yourself. For example, you could cut back on snacking or reduce your sugar intake elsewhere, or add an extra workout session or stress reduction practice during the holidays.

Mindful eating

The purpose of mindful eating isn’t weight loss, although some studies suggest it may help maintain weight. More importantly, mindfulness can improve your relationship with food and promote wellness. Traditional tips for mindful eating include being present in the moment, not judging your food, slowing down, and savoring the taste of your food as you eat. During the holidays, asking additional questions may enhance mindful eating. For instance:

- “Am I eating to avoid uncomfortable emotions?” The holidays can trigger emotions such as grief, sadness, and anxiety. Also, preexisting can worsen. Decadent foods become a quick fix leading to more emotional eating during this season. Addressing these emotions can help you avoid overeating during the holidays. For mental health resources, visit the

- “What food or drink do I most enjoy during the holidays?” Trying to resist your favorite holiday treats can be an exhausting test of “willpower.” Eventually, and psychological reasons, and you “cheat” on your plan to not eat holiday treats. To prevent this painful battle of treat versus cheat, plan to eat your “indulgence food” in moderation. Savor the foods you enjoy. Then cut out the rest of the food you don’t like or feel you must eat because “Aunty Sarah will feel bad.”

Meal strategy

Many holiday treats and parties are unavoidable unless you plan to hide in a cave for the next few weeks. Rather than torturing yourself nibbling on celery and sipping on sparkling water during your holiday event, create a strategy. For 8 years, I’ve been on my weight loss and wellness journey. I have a holiday strategy that helps my patients, clients, and me maintain our weight and wellness during the holidays. One critical part of the strategy is to anticipate indulgence events. Specifically, look at all the planned holiday events and choose three indulgence events. The rest of the time, do your best to stay on your plan. Knowing your indulgence events to look forward to gives you a sense of control over when you indulge. On non-indulgent days, think, “I can eat it but choose not to” instead of the limiting thought, “I can’t eat that.” Choice is a powerful tool. Once at an indulgence event, I focus on mindful eating and enjoying people around me, which cuts down on overeating just because “I can.”

This holiday season is a reunion time for many people, after enduring long separations from family and friends due to the pandemic. Relishing time with loved ones should be your focus during the holidays – not eating yourself into worse health or worrying about dieting. Even if you choose not to make all the shifts in mindset, mindful eating, and meal strategy mentioned, choosing even one change to focus on can help you both enjoy the holidays and have increased control over your weight and wellness. Whatever you do, may you and your loved ones have a safe, healthy, and enjoyable holiday season.

Sylvia Gonsahn-Bollie, MD, DipABOM, is an integrative obesity specialist who specializes in individualized solutions for emotional and biological overeating. She is CEO and lead physician at Embrace You Weight and Wellness, Telehealth & Virtual Counseling. She has disclosed having no relevant financial relationships. A version of this article first appeared on Medscape.com.

Five pounds of weight gain during the holidays is a disproven myth that pops up annually like holiday lights. But before you do a happy dance and pile that extra whipped cream on your pie, you should know two things. One, people do gain weight during the holidays. Two, the extra pounds tend to stick around because most people never lose their holiday weight. Over time, these extra pounds can lead to obesity and weight-related conditions such as diabetes and hypertension.

Let’s be clear. Your weight is one of many markers of your wellness and metabolic health. However, weight changes can indicate that your health is off balance. Holiday weight gain often comes from indulging in increased rich foods, less physical activity, higher stress levels, and sleep disruption.

Optimizing lifestyle factors and trying to lose weight is challenging any time of the year. However, the holiday bustle makes losing weight during this time even more challenging for most people. But maintaining your weight and overall wellness is manageable with three simple shifts in mindset, mindful eating, and meal strategy. Let’s discuss each.

Mindset

From personal and professional experience, I see two primary attitudes regarding holiday eating. They are either “I’ll wait till January to go on a diet” or “I’m on a diet, so I can’t eat anything I like during the holidays.” Both attitude extremes prevent enjoyable and healthy eating during the holidays because they place the focus on food. With both mindsets, food is in control, which leaves you feeling out of control. Rather than having an “all or none” mindset during the holidays, I encourage you to ask yourself:

- “What matters most to me during the holidays?” In a recent survey, 72% of Americans said they look forward to during the holidays. Although food often accompanies family celebrations, it’s the time with family that matters most. Choose to savor sweet time spent with loved ones instead of stuffing yourself with excess sugary sweets.

- “How can I enjoy myself without food or alcoholic beverages?” So often, we eat or drink certain foods out of habit. Shift your mindset from “we always do this” to “what could we do instead?” Asking this question may be the doorway to creating new, non–food-centered traditions.

- “How can I have the foods I love during the holidays and still meet my weight and wellness goals?” This question helps you create opportunities instead of depriving yourself. Rather than depriving yourself, you could cut back on snacking or reduce your sugar intake elsewhere. Or add an extra workout session or stress reduction practice during the holidays.

Mindful eating

The purpose of mindful eating isn’t weight loss. Some studies suggest it may help maintain weight. More importantly, mindfulness can improve your relationship with food and promote wellness. Traditional tips for mindful eating include doing the following as you eat: Being present in the moment, not judging your food, slowing down, and savoring the taste of your food. During the holidays, asking additional questions may enhance mindful eating. For instance:

- “Am I eating to avoid uncomfortable emotions?” The holidays can trigger emotions such as grief, sadness, and anxiety. Also, preexisting can worsen. Decadent foods become a quick fix leading to more emotional eating during this season. Addressing these emotions can help you avoid overeating during the holidays. For mental health resources, visit the

- “What food or drink do I most enjoy during the holidays?” Trying to resist your favorite holiday treats can be an exhausting test of “willpower.” Eventually, and psychological reasons, and you “cheat” on your plan to not eat holiday treats. To prevent this painful battle of treat versus cheat, plan to eat your “indulgence food” in moderation. Savor the foods you enjoy. Then cut out the rest of the food you don’t like or feel you must eat because “Aunty Sarah will feel bad.”

Meal strategy

Many holiday treats and parties are unavoidable unless you plan to hide in a cave for the next few weeks. Rather than torturing yourself nibbling on celery and sipping on sparkling water during your holiday event, create a strategy. For 8 years, I’ve been on my weight loss and wellness journey. I have a holiday strategy that helps my patients, clients, and me maintain our weight and wellness during the holidays. One critical part of the strategy is to anticipate indulgence events. Specifically, look at all the planned holiday events and choose three indulgence events. The rest of the time, do your best to stay on your plan. Knowing your indulgence events to look forward to gives you a sense of control over when you indulge. On non-indulgent days, think, “I can eat it but choose not to” instead of the limiting thought, “I can’t eat that.” Choice is a powerful tool. Once at an indulgence event, I focus on mindful eating and enjoying people around me, which cuts down on overeating just because “I can.”

This holiday season is a reunion time for many people, after enduring long separations from family and friends due to the pandemic. Relishing time with loved ones should be your focus during the holidays – not eating yourself into worse health or worrying about dieting. Even if you choose not to make all the shifts in mindset, mindful eating, and meal strategy mentioned, choosing even one change to focus on can help you both enjoy the holidays and have increased control over your weight and wellness. Whatever you do, may you and your loved ones have a safe, healthy, and enjoyable holiday season.

Sylvia Gonsahn-Bollie, MD, DipABOM, is an integrative obesity specialist who specializes in individualized solutions for emotional and biological overeating. She is CEO and lead physician at Embrace You Weight and Wellness, Telehealth & Virtual Counseling. She has disclosed having no relevant financial relationships. A version of this article first appeared on Medscape.com.

Five pounds of weight gain during the holidays is a disproven myth that pops up annually like holiday lights. But before you do a happy dance and pile that extra whipped cream on your pie, you should know two things. One, people do gain weight during the holidays. Two, the extra pounds tend to stick around because most people never lose their holiday weight. Over time, these extra pounds can lead to obesity and weight-related conditions such as diabetes and hypertension.

Let’s be clear. Your weight is one of many markers of your wellness and metabolic health. However, weight changes can indicate that your health is off balance. Holiday weight gain often comes from indulging in increased rich foods, less physical activity, higher stress levels, and sleep disruption.

Optimizing lifestyle factors and trying to lose weight is challenging any time of the year. However, the holiday bustle makes losing weight during this time even more challenging for most people. But maintaining your weight and overall wellness is manageable with three simple shifts in mindset, mindful eating, and meal strategy. Let’s discuss each.

Mindset

From personal and professional experience, I see two primary attitudes regarding holiday eating. They are either “I’ll wait till January to go on a diet” or “I’m on a diet, so I can’t eat anything I like during the holidays.” Both attitude extremes prevent enjoyable and healthy eating during the holidays because they place the focus on food. With both mindsets, food is in control, which leaves you feeling out of control. Rather than having an “all or none” mindset during the holidays, I encourage you to ask yourself:

- “What matters most to me during the holidays?” In a recent survey, 72% of Americans said they look forward to time with family during the holidays. Although food often accompanies family celebrations, it’s the time with family that matters most. Choose to savor sweet time spent with loved ones instead of stuffing yourself with excess sugary sweets.

- “How can I enjoy myself without food or alcoholic beverages?” So often, we eat or drink certain foods out of habit. Shift your mindset from “we always do this” to “what could we do instead?” Asking this question may be the doorway to creating new, non–food-centered traditions.

- “How can I have the foods I love during the holidays and still meet my weight and wellness goals?” This question helps you create opportunities instead of depriving yourself. For example, you could cut back on snacking or reduce your sugar intake elsewhere, or add an extra workout session or stress reduction practice during the holidays.

Mindful eating

The purpose of mindful eating isn’t weight loss. Some studies suggest it may help maintain weight. More importantly, mindfulness can improve your relationship with food and promote wellness. Traditional tips for mindful eating include doing the following as you eat: Being present in the moment, not judging your food, slowing down, and savoring the taste of your food. During the holidays, asking additional questions may enhance mindful eating. For instance:

- “Am I eating to avoid uncomfortable emotions?” The holidays can trigger emotions such as grief, sadness, and anxiety. Also, preexisting mental health conditions can worsen. Decadent foods become a quick fix, leading to more emotional eating during this season. Addressing these emotions can help you avoid overeating during the holidays. For mental health resources, visit the

- “What food or drink do I most enjoy during the holidays?” Trying to resist your favorite holiday treats can be an exhausting test of “willpower.” Eventually, willpower gives out, for psychological and other reasons, and you “cheat” on your plan to not eat holiday treats. To prevent this painful battle of treat versus cheat, plan to eat your “indulgence food” in moderation. Savor the foods you enjoy. Then cut out the rest of the food you don’t like or feel you must eat because “Aunty Sarah will feel bad.”

Meal strategy

Many holiday treats and parties are unavoidable unless you plan to hide in a cave for the next few weeks. Rather than torturing yourself nibbling on celery and sipping on sparkling water during your holiday event, create a strategy. For 8 years, I’ve been on my weight loss and wellness journey. I have a holiday strategy that helps my patients, clients, and me maintain our weight and wellness during the holidays. One critical part of the strategy is to anticipate indulgence events. Specifically, look at all the planned holiday events and choose three indulgence events. The rest of the time, do your best to stay on your plan. Knowing your indulgence events to look forward to gives you a sense of control over when you indulge. On non-indulgent days, think, “I can eat it but choose not to” instead of the limiting thought, “I can’t eat that.” Choice is a powerful tool. Once at an indulgence event, I focus on mindful eating and enjoying people around me, which cuts down on overeating just because “I can.”

This holiday season is a reunion time for many people, after enduring long separations from family and friends due to the pandemic. Relishing time with loved ones should be your focus during the holidays – not eating yourself into worse health or worrying about dieting. Even if you choose not to make all the shifts in mindset, mindful eating, and meal strategy mentioned, choosing even one change to focus on can help you both enjoy the holidays and have increased control over your weight and wellness. Whatever you do, may you and your loved ones have a safe, healthy, and enjoyable holiday season.


Update on high-grade vulvar intraepithelial neoplasia

Vulvar squamous cell carcinomas (VSCC) comprise approximately 90% of all vulvar malignancies. Unlike cervical SCC, which are predominantly human papillomavirus (HPV) positive, only a minority of VSCC are HPV positive – on the order of 15%-25% of cases. Most cases occur in the setting of lichen sclerosus and are HPV negative.

Lichen sclerosus (LS) is a chronic inflammatory dermatitis typically involving the anogenital area, which in some cases can become seriously distorted (e.g., atrophy of the labia minora, clitoral phimosis, and introital stenosis). Although most cases are diagnosed in postmenopausal women, LS can affect women of any age. The true prevalence of lichen sclerosus is unknown. Recent studies have shown a prevalence of 1 in 60; among older women, it can even be as high as 1 in 30. While lichen sclerosus is a pruritic condition, it is often asymptomatic. It is not considered a premalignant condition. The diagnosis is clinical; however, suspicious lesions (erosions/ulcerations, hyperkeratosis, pigmented areas, ecchymosis, warty or papular lesions), particularly when recalcitrant to adequate first-line therapy, should be biopsied.

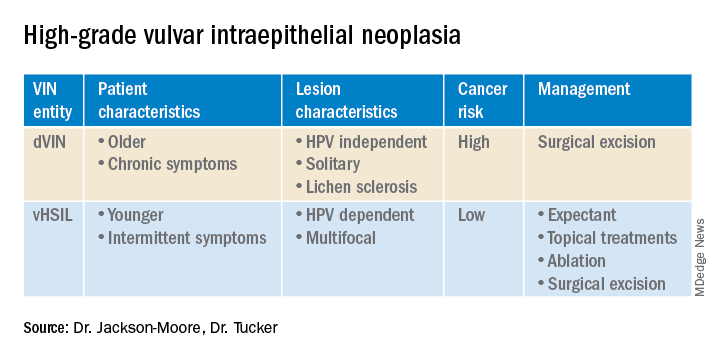

VSCC arises from precursor lesions or high-grade vulvar intraepithelial neoplasia (VIN). The 2015 International Society for the Study of Vulvovaginal Disease nomenclature classifies high-grade VIN into high-grade squamous intraepithelial lesion (HSIL) and differentiated VIN (dVIN). Most patients with high-grade VIN are diagnosed with HSIL or usual type VIN. A preponderance of these lesions (75%-85%) are HPV positive, predominantly HPV 16. Vulvar HSIL (vHSIL) lesions affect younger women. The lesions tend to be multifocal and extensive. On the other hand, dVIN typically affects older women and commonly develops as a solitary lesion. While dVIN accounts for only a small subset of patients with high-grade VIN, these lesions are HPV negative and associated with lichen sclerosus.

Both disease entities, vHSIL and dVIN, are increasing in incidence. There is a higher risk and shortened period of progression to cancer in patients with dVIN compared with vHSIL. The cancer risk of vHSIL is relatively low. The 10-year cumulative VSCC risk reported in the literature is 10.3% overall: 9.7% for vHSIL and 50% for dVIN. Patients with vHSIL could benefit from less aggressive treatment modalities.

Patients present with a constellation of symptoms such as itching, pain, burning, bleeding, and discharge. Chronic symptoms portend HPV-independent lesions associated with lichen sclerosus, while episodic symptoms are suggestive of HPV-positive lesions.

The recurrence risk of high-grade VIN is 46%-70%. Risk factors for recurrence include age greater than 50, immunosuppression, metasynchronous HSIL, and multifocal lesions. Recurrences occur in up to 50% of women who have undergone surgery. For those who undergo surgical treatment for high-grade VIN, recurrence is more common in the setting of positive margins, underlying lichen sclerosus, persistent HPV infection, and immunosuppression.

Management of high-grade VIN is determined by the lesion characteristics, patient characteristics, and medical expertise. Given the risk of progression of high-grade VIN to cancer and risk of underlying cancer, surgical therapy is typically recommended. The treatment of choice is surgical excision in cases of dVIN. Surgical treatments include CO2 laser ablation, wide local excision, and vulvectomy. Women who undergo surgical treatment for vHSIL have about a 50% chance of the condition recurring 1 year later, irrespective of whether treatment is by surgical excision or laser vaporization.

Since surgery can be associated with disfigurement and sexual dysfunction, alternatives to surgery should be considered in cases of vHSIL. The potential for effect on sexual function should be part of preoperative counseling and treatment. Women treated for VIN often experience increased inhibition of sexual excitement and increased inhibition of orgasm. One study found that in women undergoing vulvar excision for VIN, the impairment was psychological in nature. Overall, the studies of sexual effect from treatment of VIN have found that women do not return to their pretreatment sexual function. However, the optimal management of vHSIL has not been determined. Nonsurgical options include topical therapies (imiquimod, 5-fluorouracil, cidofovir, and interferon) and nonpharmacologic treatments, such as photodynamic therapy.

Imiquimod, a topical immune modulator, is the most studied pharmacologic treatment of vHSIL. The drug induces secretion of cytokines, creating an immune response that clears the HPV infection. Imiquimod is safe and well tolerated. The clinical response rate varies between 35% and 81%. A recent study demonstrated the efficacy of imiquimod, and the treatment was found to be noninferior to surgery. Adverse events differed, with local pain following surgical treatment and local pruritus and erythema associated with imiquimod use. Some patients did not respond to imiquimod; the study authors suggested that specific immunological factors affect the clinical response.

In conclusion, high-grade VIN is a heterogeneous disease made up of two distinct disease entities with rising incidence. In contrast to dVIN, the cancer risk is low for patients with vHSIL. Treatment should be driven by the clinical characteristics of the vulvar lesions, patients’ preferences, sexual activity, and compliance. Future directions include risk stratification of patients with vHSIL who are most likely to benefit from topical treatments, thus reducing overtreatment. Molecular biomarkers that could identify dVIN at an early stage are needed.

Dr. Jackson-Moore is associate professor in gynecologic oncology at the University of North Carolina at Chapel Hill. Dr. Tucker is assistant professor of gynecologic oncology at the university.

References

Cendejas BR et al. Am J Obstet Gynecol. 2015 Mar;212(3):291-7.

Lebreton M et al. J Gynecol Obstet Hum Reprod. 2020 Nov;49(9):101801.

Thuijs NB et al. Int J Cancer. 2021 Jan 1;148(1):90-8. doi: 10.1002/ijc.33198.

Trutnovsky G et al. Lancet. 2022 May 7;399(10337):1790-8. Erratum in: Lancet. 2022 Oct 8;400(10359):1194.

Vulvar squamous cell carcinomas (VSCC) comprise approximately 90% of all vulvar malignancies. Unlike cervical SCC, which are predominantly human papilloma virus (HPV) positive, only a minority of VSCC are HPV positive – on the order of 15%-25% of cases. Most cases occur in the setting of lichen sclerosus and are HPV negative.

Lichen sclerosus is a chronic inflammatory dermatitis typically involving the anogenital area, which in some cases can become seriously distorted (e.g. atrophy of the labia minora, clitoral phimosis, and introital stenosis). Although most cases are diagnosed in postmenopausal women, LS can affect women of any age. The true prevalence of lichen sclerosus is unknown. Recent studies have shown a prevalence of 1 in 60; among older women, it can even be as high as 1 in 30. While lichen sclerosus is a pruriginous condition, it is often asymptomatic. It is not considered a premalignant condition. The diagnosis is clinical; however, suspicious lesions (erosions/ulcerations, hyperkeratosis, pigmented areas, ecchymosis, warty or papular lesions), particularly when recalcitrant to adequate first-line therapy, should be biopsied.

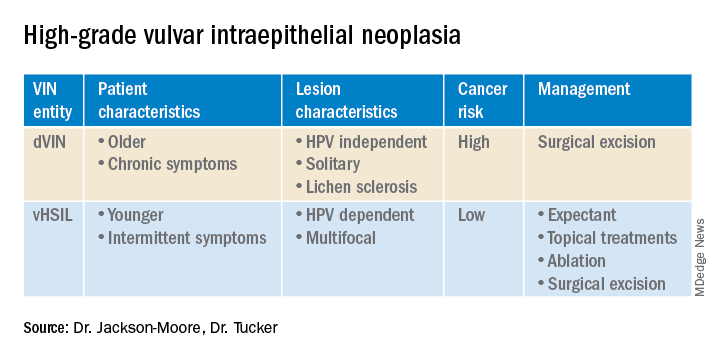

VSCC arises from precursor lesions or high-grade vulvar intraepithelial neoplasia (VIN). The 2015 International Society for the Study of Vulvovaginal Disease nomenclature classifies high-grade VIN into high-grade squamous intraepithelial lesion (HSIL) and differentiated VIN (dVIN). Most patients with high-grade VIN are diagnosed with HSIL or usual type VIN. A preponderance of these lesions (75%-85%) are HPV positive, predominantly HPV 16. Vulvar HSIL (vHSIL) lesions affect younger women. The lesions tend to be multifocal and extensive. On the other hand, dVIN typically affects older women and commonly develops as a solitary lesion. While dVIN accounts for only a small subset of patients with high-grade VIN, these lesions are HPV negative and associated with lichen sclerosus.

Both disease entities, vHSIL and dVIN, are increasing in incidence. There is a higher risk and shortened period of progression to cancer in patients with dVIN compared to HSIL. The cancer risk of vHSIL is relatively low. The 10-year cumulative VSCC risk reported in the literature is 10.3%; 9.7% for vHSIL and 50% for dVIN. Patients with vHSIL could benefit from less aggressive treatment modalities.

Patients present with a constellation of signs such as itching, pain, burning, bleeding, and discharge. Chronic symptoms portend HPV-independent lesions associated with lichen sclerosus while episodic signs are suggestive of HPV-positive lesions.

The recurrence risk of high-grade VIN is 46%-70%. Risk factors for recurrence include age greater than 50, immunosuppression, metasynchronous HSIL, and multifocal lesions. Recurrences occur in up to 50% of women who have undergone surgery. For those who undergo surgical treatment for high-grade VIN, recurrence is more common in the setting of positive margins, underlying lichen sclerosis, persistent HPV infection, and immunosuppression.

Management of high-grade VIN is determined by the lesion characteristics, patient characteristics, and medical expertise. Given the risk of progression of high-grade VIN to cancer and risk of underlying cancer, surgical therapy is typically recommended. The treatment of choice is surgical excision in cases of dVIN. Surgical treatments include CO2 laser ablation, wide local excision, and vulvectomy. Women who undergo surgical treatment for vHSIL have about a 50% chance of the condition recurring 1 year later, irrespective of whether treatment is by surgical excision or laser vaporization.

Since surgery can be associated with disfigurement and sexual dysfunction, alternatives to surgery should be considered in cases of vHSIL. The potential for effect on sexual function should be part of preoperative counseling and treatment. Women treated for VIN often experience increased inhibition of sexual excitement and increased inhibition of orgasm. One study found that in women undergoing vulvar excision for VIN, the impairment was found to be psychological in nature. Overall, the studies of sexual effect from treatment of VIN have found that women do not return to their pretreatment sexual function. However, the optimal management of vHSIL has not been determined. Nonsurgical options include topical therapies (imiquimod, 5-fluorouracil, cidofovir, and interferon) and nonpharmacologic treatments, such as photodynamic therapy.

Imiquimod, a topical immune modulator, is the most studied pharmacologic treatment of vHSIL. The drug induces secretion of cytokines, creating an immune response that clears the HPV infection. Imiquimod is safe and well tolerated. The clinical response rate varies between 35% and 81%. A recent study demonstrated the efficacy of imiquimod and the treatment was found to be noninferior to surgery. Adverse events differed, with local pain following surgical treatment and local pruritus and erythema associated with imiquimod use. Some patients did not respond to imiquimod; it was thought by the authors of the study that specific immunological factors affect the clinical response.

In conclusion, high-grade VIN is a heterogeneous disease made up of two distinct disease entities with rising incidence. In contrast to dVIN, the cancer risk is low for patients with vHSIL. Treatment should be driven by the clinical characteristics of the vulvar lesions, patients’ preferences, sexual activity, and compliance. Future directions include risk stratification of patients with vHSIL who are most likely to benefit from topical treatments, thus reducing overtreatment. Molecular biomarkers that could identify dVIN at an early stage are needed.

Dr. Jackson-Moore is associate professor in gynecologic oncology at the University of North Carolina at Chapel Hill. Dr. Tucker is assistant professor of gynecologic oncology at the university.

References

Cendejas BR et al. Am J Obstet Gynecol. 2015 Mar;212(3):291-7.

Lebreton M et al. J Gynecol Obstet Hum Reprod. 2020 Nov;49(9):101801.

Thuijs NB et al. Int J Cancer. 2021 Jan 1;148(1):90-8. doi: 10.1002/ijc.33198. .

Trutnovsky G et al. Lancet. 2022 May 7;399(10337):1790-8. Erratum in: Lancet. 2022 Oct 8;400(10359):1194.

Vulvar squamous cell carcinomas (VSCC) comprise approximately 90% of all vulvar malignancies. Unlike cervical SCC, which are predominantly human papilloma virus (HPV) positive, only a minority of VSCC are HPV positive – on the order of 15%-25% of cases. Most cases occur in the setting of lichen sclerosus and are HPV negative.

Lichen sclerosus is a chronic inflammatory dermatitis typically involving the anogenital area, which in some cases can become seriously distorted (e.g. atrophy of the labia minora, clitoral phimosis, and introital stenosis). Although most cases are diagnosed in postmenopausal women, LS can affect women of any age. The true prevalence of lichen sclerosus is unknown. Recent studies have shown a prevalence of 1 in 60; among older women, it can even be as high as 1 in 30. While lichen sclerosus is a pruriginous condition, it is often asymptomatic. It is not considered a premalignant condition. The diagnosis is clinical; however, suspicious lesions (erosions/ulcerations, hyperkeratosis, pigmented areas, ecchymosis, warty or papular lesions), particularly when recalcitrant to adequate first-line therapy, should be biopsied.

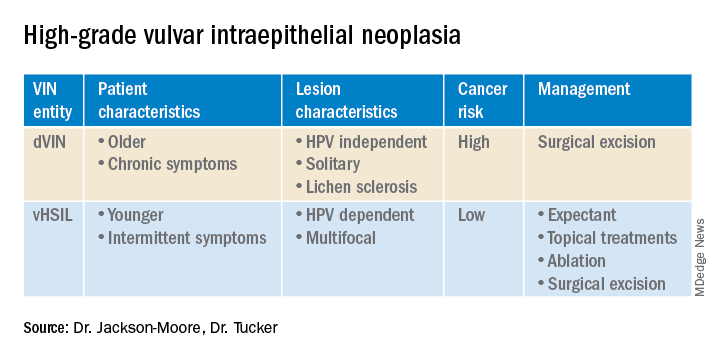

VSCC arises from precursor lesions or high-grade vulvar intraepithelial neoplasia (VIN). The 2015 International Society for the Study of Vulvovaginal Disease nomenclature classifies high-grade VIN into high-grade squamous intraepithelial lesion (HSIL) and differentiated VIN (dVIN). Most patients with high-grade VIN are diagnosed with HSIL or usual type VIN. A preponderance of these lesions (75%-85%) are HPV positive, predominantly HPV 16. Vulvar HSIL (vHSIL) lesions affect younger women. The lesions tend to be multifocal and extensive. On the other hand, dVIN typically affects older women and commonly develops as a solitary lesion. While dVIN accounts for only a small subset of patients with high-grade VIN, these lesions are HPV negative and associated with lichen sclerosus.

Both disease entities, vHSIL and dVIN, are increasing in incidence. There is a higher risk and shortened period of progression to cancer in patients with dVIN compared to HSIL. The cancer risk of vHSIL is relatively low. The 10-year cumulative VSCC risk reported in the literature is 10.3%; 9.7% for vHSIL and 50% for dVIN. Patients with vHSIL could benefit from less aggressive treatment modalities.

Patients present with a constellation of signs such as itching, pain, burning, bleeding, and discharge. Chronic symptoms portend HPV-independent lesions associated with lichen sclerosus while episodic signs are suggestive of HPV-positive lesions.

The recurrence risk of high-grade VIN is 46%-70%. Risk factors for recurrence include age greater than 50, immunosuppression, metasynchronous HSIL, and multifocal lesions. Recurrences occur in up to 50% of women who have undergone surgery. For those who undergo surgical treatment for high-grade VIN, recurrence is more common in the setting of positive margins, underlying lichen sclerosis, persistent HPV infection, and immunosuppression.

Management of high-grade VIN is determined by the lesion characteristics, patient characteristics, and medical expertise. Given the risk of progression of high-grade VIN to cancer and risk of underlying cancer, surgical therapy is typically recommended. The treatment of choice is surgical excision in cases of dVIN. Surgical treatments include CO2 laser ablation, wide local excision, and vulvectomy. Women who undergo surgical treatment for vHSIL have about a 50% chance of the condition recurring 1 year later, irrespective of whether treatment is by surgical excision or laser vaporization.

Since surgery can be associated with disfigurement and sexual dysfunction, alternatives to surgery should be considered in cases of vHSIL. The potential for effect on sexual function should be part of preoperative counseling and treatment. Women treated for VIN often experience increased inhibition of sexual excitement and increased inhibition of orgasm. One study found that in women undergoing vulvar excision for VIN, the impairment was found to be psychological in nature. Overall, the studies of sexual effect from treatment of VIN have found that women do not return to their pretreatment sexual function. However, the optimal management of vHSIL has not been determined. Nonsurgical options include topical therapies (imiquimod, 5-fluorouracil, cidofovir, and interferon) and nonpharmacologic treatments, such as photodynamic therapy.

Imiquimod, a topical immune modulator, is the most studied pharmacologic treatment of vHSIL. The drug induces secretion of cytokines, creating an immune response that clears the HPV infection. Imiquimod is safe and well tolerated. The clinical response rate varies between 35% and 81%. A recent study demonstrated the efficacy of imiquimod and the treatment was found to be noninferior to surgery. Adverse events differed, with local pain following surgical treatment and local pruritus and erythema associated with imiquimod use. Some patients did not respond to imiquimod; it was thought by the authors of the study that specific immunological factors affect the clinical response.

In conclusion, high-grade VIN is a heterogeneous disease made up of two distinct disease entities with rising incidence. In contrast to dVIN, the cancer risk is low for patients with vHSIL. Treatment should be driven by the clinical characteristics of the vulvar lesions, patients’ preferences, sexual activity, and compliance. Future directions include risk stratification of patients with vHSIL who are most likely to benefit from topical treatments, thus reducing overtreatment. Molecular biomarkers that could identify dVIN at an early stage are needed.

Dr. Jackson-Moore is associate professor in gynecologic oncology at the University of North Carolina at Chapel Hill. Dr. Tucker is assistant professor of gynecologic oncology at the university.

References

Cendejas BR et al. Am J Obstet Gynecol. 2015 Mar;212(3):291-7.

Lebreton M et al. J Gynecol Obstet Hum Reprod. 2020 Nov;49(9):101801.

Thuijs NB et al. Int J Cancer. 2021 Jan 1;148(1):90-8. doi: 10.1002/ijc.33198. .

Trutnovsky G et al. Lancet. 2022 May 7;399(10337):1790-8. Erratum in: Lancet. 2022 Oct 8;400(10359):1194.

The importance of connection and community

You only are free when you realize you belong no place – you belong every place – no place at all. The price is high. The reward is great. ~ Maya Angelou

At 8 o’clock, every weekday morning, for years and years now, two friends appear in my kitchen for coffee, and so one identity I carry includes being part of the “coffee ladies.” While this is one of the smaller and more intimate groups to which I belong, I am also a member (“distinguished,” no less) of a slightly larger group: the American Psychiatric Association, and being part of both groups is meaningful to me in more ways than I can describe.

When I think back over the years, I – like most people – have belonged to many people and places, either officially or unofficially. It is these connections that define us, fill our time, give us meaning and purpose, and anchor us. We belong to our families and friends, but we also belong to our professional and community groups, our institutions – whether they are hospitals, schools, religious centers, country clubs, or charitable organizations – as well as interest and advocacy groups. And finally, we belong to our coworkers and to our patients, and they to us, especially if we see the same people over time. Being a psychiatrist can be a solitary career, and it can take a little effort to be a part of larger worlds, especially for those who find solace in more individual activities.

As I’ve gotten older, I’ve noticed that I belong to fewer of these groups. I’m no longer a little league or field hockey mom, nor a member of the neighborhood babysitting co-op, and I’ve exhausted the gamut of council and leadership positions in my APA district branch. I’ve joined organizations only to pay the membership fee, and then never gone to their meetings or events. The pandemic has accounted for some of this: I still belong to my book club, but I often read the book and don’t go to the Zoom meetings as I miss the real-life aspect of getting together. Being boxed on a screen is not the same as the one-on-one conversations before the formal book discussion. And while I still carry a host of identities, I imagine it is not unusual to belong to fewer organizations as time passes. It’s not all bad; there is something good to be said for living life at a less frenetic pace as fewer entities lay claim to my time.

In psychiatry, our patients span the range of human experience: Some are very engaged with their worlds, while others struggle to make even the most basic of connections. Their lives may seem disconnected – empty, even – and I find myself encouraging people to reach out, to find activities that will ease their loneliness and integrate a feeling of belonging in a way that adds meaning and purpose. For some people, this may be as simple as asking a friend to have lunch, but even that can be an overwhelming obstacle for someone who is depressed, or for someone who has no friends.

Patients may counter my suggestions with a host of reasons as to why they can’t connect. Perhaps their friend is too busy with work or his family, the lunch would cost too much, there’s no transportation, or no restaurant that could meet their dietary needs. Or perhaps they are just too fearful of being rejected.

Psychiatric disorders, by their nature, can be very isolating. Depressed and anxious people often find it a struggle just to get through their days; adding new people and activities is not something that brings joy. For people suffering with psychosis, their internal realities are often all-consuming and there may be no room for accommodating others. And finally, what I hear over and over, is that people are afraid of what others might think of them, and this fear is paralyzing. I try to suggest that we never really know or control what others think of us, but obviously, this does not reassure most patients as they are also bewildered by their irrational fear. To go to an event unaccompanied, or even to a party to which they have been invited, is a hurdle they won’t (or can’t) attempt.

The pandemic, with its initial months of shutdown, and then with years of fear of illness, has created new ways of connecting. Our “Zoom” world can be very convenient – in many ways it has opened up aspects of learning and connection for people who are short on time, or struggle with transportation. In the comfort of our living rooms, in pajamas and slippers, we can take classes, join clubs, attend Alcoholics Anonymous meetings, go to conferences or religious services, and be part of any number of organizations without flying or searching for parking. I love that, with 1 hour and a single click, I can now attend my department’s weekly Grand Rounds. But for many who struggle with using technology, or who don’t feel the same benefits from online encounters, the pandemic has been an isolating and lonely time.

It should not be assumed that isolation has been a negative experience for everyone. For many who struggle with interpersonal relationships, for children who are bullied or teased at school or who feel self-conscious sitting alone at lunch, there may not be the presumed “fear of missing out.” As one adult patient told me: “You know, I do ‘alone’ well.” For some, it has been a relief to be freed of the pressure to socialize, attend parties, or pursue online dating – a process I think of as “people-shopping,” which looks so different from the old days of organic interactions that led to romantic relationships over time.

Community, connection, and belonging are not inconsequential things, however. They are part of what adds to life’s richness, and they are associated with good health and longevity. The Harvard Study of Adult Development, begun in 1938, has been tracking two groups of Boston teenagers – and now their wives and children – for 84 years. Tracking one group of Harvard students and another group of teens from poorer areas in Boston, the project is now on its 4th director.

George Vaillant, MD, author of “Aging Well: Surprising Guideposts to a Happier Life from the Landmark Harvard Study of Adult Development” (New York: Little, Brown Spark, 2002) was the program’s director from 1972 to 2004. “When the study began, nobody cared about empathy or attachment. But the key to healthy aging is relationships, relationships, relationships,” Dr. Vaillant said in an interview in the Harvard Gazette.

Susan Pinker is a social psychologist and author of “The Village Effect: How Face-to-Face Contact Can Make Us Healthier and Happier” (Toronto: Random House Canada, 2014). In her 2017 TED talk, she notes that in all developed countries, women live 6-8 years longer than men, and are half as likely to die at any age. She is underwhelmed by digital relationships, and says that real life relationships affect our physiological states differently and in more beneficial ways. “Building your village and sustaining it is a matter of life and death,” she states at the end of her TED talk.


You only are free when you realize you belong no place – you belong every place – no place at all. The price is high. The reward is great. ~ Maya Angelou

At 8 o’clock, every weekday morning, for years and years now, two friends appear in my kitchen for coffee, and so one identity I carry includes being part of the “coffee ladies.” While this is one of the smaller and more intimate groups to which I belong, I am also a member (“distinguished,” no less) of a slightly larger group: the American Psychiatric Association, and being part of both groups is meaningful to me in more ways than I can describe.

When I think back over the years, I – like most people – have belonged to many people and places, either officially or unofficially. It is these connections that define us, fill our time, give us meaning and purpose, and anchor us. We belong to our families and friends, but we also belong to our professional and community groups, our institutions – whether they are hospitals, schools, religious centers, country clubs, or charitable organizations – as well as interest and advocacy groups. And finally, we belong to our coworkers and to our patients, and they to us, especially if we see the same people over time. Being a psychiatrist can be a solitary career, and it can take a little effort to be a part of larger worlds, especially for those who find solace in more individual activities.

As I’ve gotten older, I’ve noticed that I belong to fewer of these groups. I’m no longer a Little League or field hockey mom, nor a member of the neighborhood babysitting co-op, and I’ve exhausted the gamut of council and leadership positions in my APA district branch. I’ve joined organizations only to pay the membership fee, and then never gone to their meetings or events. The pandemic has accounted for some of this: I still belong to my book club, but I often read the book and don’t go to the Zoom meetings as I miss the real-life aspect of getting together. Being boxed on a screen is not the same as the one-on-one conversations before the formal book discussion. And while I still carry a host of identities, I imagine it is not unusual to belong to fewer organizations as time passes. It’s not all bad; there is something good to be said for living life at a less frenetic pace as fewer entities lay claim to my time.

In psychiatry, our patients span the range of human experience: Some are very engaged with their worlds, while others struggle to make even the most basic of connections. Their lives may seem disconnected – empty, even – and I find myself encouraging people to reach out, to find activities that will ease their loneliness and integrate a feeling of belonging in a way that adds meaning and purpose. For some people, this may be as simple as asking a friend to have lunch, but even that can be an overwhelming obstacle for someone who is depressed, or for someone who has no friends.

Patients may counter my suggestions with a host of reasons as to why they can’t connect. Perhaps their friend is too busy with work or his family, the lunch would cost too much, there’s no transportation, or no restaurant that could meet their dietary needs. Or perhaps they are just too fearful of being rejected.

Psychiatric disorders, by their nature, can be very isolating. Depressed and anxious people often find it a struggle just to get through their days; adding new people and activities is not something that brings joy. For people suffering with psychosis, their internal realities are often all-consuming and there may be no room for accommodating others. And finally, what I hear over and over is that people are afraid of what others might think of them, and this fear is paralyzing. I try to suggest that we never really know or control what others think of us, but obviously, this does not reassure most patients as they are also bewildered by their irrational fear. To go to an event unaccompanied, or even to a party to which they have been invited, is a hurdle they won’t (or can’t) attempt.

The pandemic, with its initial months of shutdown, and then with years of fear of illness, has created new ways of connecting. Our “Zoom” world can be very convenient – in many ways it has opened up aspects of learning and connection for people who are short on time or who struggle with transportation. In the comfort of our living rooms, in pajamas and slippers, we can take classes, join clubs, attend Alcoholics Anonymous meetings, go to conferences or religious services, and be part of any number of organizations without flying or searching for parking. I love that, with 1 hour and a single click, I can now attend my department’s weekly Grand Rounds. But for many who struggle with using technology, or who don’t feel the same benefits from online encounters, the pandemic has been an isolating and lonely time.

It should not be assumed that isolation has been a negative experience for everyone. For many who struggle with interpersonal relationships, for children who are bullied or teased at school or who feel self-conscious sitting alone at lunch, there may not be the presumed “fear of missing out.” As one adult patient told me: “You know, I do ‘alone’ well.” For some, it has been a relief to be freed from the pressure to socialize, attend parties, or pursue online dating – a process I think of as “people-shopping,” which looks so different from the old days of organic interactions that led to romance over time. Many have found relief without the pressures of social interactions.

Community, connection, and belonging are not inconsequential things, however. They are part of what adds to life’s richness, and they are associated with good health and longevity. The Harvard Study of Adult Development, begun in 1938, has been tracking two groups of Boston teenagers – and now their wives and children – for 84 years. Tracking one group of Harvard students and another group of teens from poorer areas in Boston, the project is now on its fourth director.

George Vaillant, MD, author of “Aging Well: Surprising Guideposts to a Happier Life from the Landmark Harvard Study of Adult Development” (New York: Little, Brown Spark, 2002) was the program’s director from 1972 to 2004. “When the study began, nobody cared about empathy or attachment. But the key to healthy aging is relationships, relationships, relationships,” Dr. Vaillant said in an interview in the Harvard Gazette.

Susan Pinker is a social psychologist and author of “The Village Effect: How Face-to-Face Contact Can Make Us Healthier and Happier” (Toronto: Random House Canada, 2014). In her 2017 TED talk, she notes that in all developed countries, women live 6-8 years longer than men, and are half as likely to die at any age. She is underwhelmed by digital relationships, and says that real-life relationships affect our physiological states differently and in more beneficial ways. “Building your village and sustaining it is a matter of life and death,” she states at the end of her TED talk.

I spoke with Ms. Pinker about her thoughts on how our personal villages change over time. She was quick to tell me that she is not against digital communities. “I’m not a Luddite. As a writer, I probably spend as much time facing a screen as anyone else. But it’s important to remember that digital communities can amplify existing relationships, and don’t replace in-person social contact. A lot of people have drunk the Kool-Aid about virtual experiences, even though they are not the same as real-life interactions.”

“Loneliness takes on a U-shaped function across adulthood,” she explained with regard to how age impacts our social connections. “People are lonely when they first leave home or when they finish college and go out into the world. Then they settle into new situations; they can make friends at work, through their children, in their neighborhood, or by belonging to organizations. As people settle into their adult lives, there are increased opportunities to connect in person. But loneliness increases again in late middle age.” She explained that everyone loses people: children move away, friends move, couples divorce, or a spouse dies.

“Attrition of our social face-to-face networks is an ugly feature of aging,” Ms. Pinker said. “Some people are good at replacing the vacant spots; they sense that it is important to invest in different relationships as you age. It’s like a garden that you need to tend by replacing the perennials that die off in the winter.” The United States, she pointed out, has a culture that is particularly difficult for people in their later years.

My world is a little quieter than it once was, but collecting and holding on to people is important to me. The organizations and affiliations change over time, as does the brand of coffee. So I try to inspire some of my more isolated patients to prioritize their relationships, to let go of their grudges, and to tolerate the temporary discomfort of leaving their places of comfort and reaching out, in the service of achieving a less solitary, more purposeful, and healthier life. When it doesn’t come naturally, it can be hard work.

Dr. Miller is a coauthor of “Committed: The Battle Over Involuntary Psychiatric Care” (Johns Hopkins University Press, 2016). She has a private practice and is assistant professor of psychiatry and behavioral sciences at Johns Hopkins University, Baltimore. She has disclosed no relevant financial relationships.
