FDA strengthens mammography regulations: Final rule

A final rule, updating the regulations issued under the Mammography Quality Standards Act of 1992, requires that mammography facilities notify patients about the density of their breasts, strengthens the FDA’s oversight of facilities, and provides guidance to help physicians better categorize and assess mammograms, according to a March 9 press release.

The rule requires implementation of the changes within 18 months.

According to the final rule document, the updates are “intended to improve the delivery of mammography services” in ways that reflect changes in mammography technology, quality standards, and the way results are categorized, reported, and communicated to patients and providers.

For instance, mammography reports must include an assessment of breast density to provide greater detail on the potential limitations of the mammogram results and allow patients and physicians to make more informed decisions, such as the possibility of additional imaging for women with dense breast tissue.

“Today’s action represents the agency’s broader commitment to support innovation to prevent, detect and treat cancer,” said Hilary Marston, MD, MPH, FDA’s chief medical officer, in the agency’s press release. The FDA remains “committed to advancing efforts to improve the health of women and strengthen the fight against breast cancer.”

A version of this article first appeared on Medscape.com.

Telehealth doctor indicted on health care fraud, opioid distribution charges

Sangita Patel, MD, 50, practiced at Advance Medical Home Physicians in Troy.

According to court documents, between July 2020 and June 2022, Dr. Patel was responsible for submitting Medicare claims for improper telehealth visits that she did not conduct herself.

Dr. Patel, who accepted patients who paid in cash as well as those with Medicare and Medicaid coverage, billed approximately $3.4 million to Medicare between 2018 and 2022, according to court documents. An unusual number of these visits were billed using complex codes, an indication of health care fraud. The investigation also found that on many days, Dr. Patel billed for more than 24 hours of services. During this period, according to the document, 76% of Dr. Patel’s Medicare reimbursements were for telehealth.

Prosecutors say that Dr. Patel prescribed Schedule II controlled substances to more than 90% of the patients in these telehealth visits. She delegated her prescription authority to an unlicensed medical assistant. Through undercover visits and cell site search warrant data, the investigation found that Dr. Patel directed patients to contact, via cell phone, this assistant, who then entered electronic prescriptions into the electronic medical records system. Dr. Patel then signed the prescriptions and sent them to the pharmacies without ever interacting with the patients. Prosecutors also used text messages, obtained by search warrant, between Dr. Patel and her assistant and between the assistant and undercover informers to build their case.

Dr. Patel is also accused of referring patients to other providers, who in turn billed Medicare for claims associated with those patients. Advance Medical received $143,000 from these providers, potentially in violation of anti-kickback laws, according to bank records obtained by subpoena.

If convicted, Dr. Patel could be sentenced to up to 10 years in federal prison.

A version of this article first appeared on Medscape.com.

Specialty and age may contribute to suicidal thoughts among physicians

A physician’s specialty can make a difference when it comes to having suicidal thoughts. Doctors who specialize in family medicine, obstetrics-gynecology, and psychiatry reported suicidal thoughts at double the rate of doctors in oncology, rheumatology, and pulmonary medicine, according to Doctors’ Burden: Medscape Physician Suicide Report 2023.

“The specialties with the highest reporting of physician suicidal thoughts are also those with the greatest physician shortages, based on the number of job openings posted by recruiting sites,” said Peter Yellowlees, MD, professor of psychiatry and chief wellness officer at UC Davis Health.

Doctors in those specialties are overworked, which can lead to burnout, he said.

There’s also a generational divide among physicians who reported suicidal thoughts. Millennials (age 27-41) and Gen-X physicians (age 42-56) were more likely to report these thoughts than were Baby Boomers (age 57-75) and the Silent Generation (age 76-95).

“Younger physicians are more burned out – they may have less control over their lives and less meaning than some older doctors who can do what they want,” said Dr. Yellowlees.

One millennial respondent commented that being on call and being required to chart detailed notes in the EHR has contributed to her burnout. “I’m more impatient and make less time and effort to see my friends and family.”

One Silent Generation respondent commented, “I am semi-retired, I take no call, I work no weekends, I provide anesthesia care in my area of special expertise, I work clinically about 46 days a year. Life is good, particularly compared to my younger colleagues who are working 60-plus hours a week with evening work, weekend work, and call. I feel really sorry for them.”

When young people enter medical school, they’re quite healthy, with low rates of depression and burnout, said Dr. Yellowlees. Yet, studies have shown that rates of burnout and suicidal thoughts increased within 2 years. “That reflects what happens when a group of idealistic young people hit a horrible system,” he said.

Who’s responsible?

Millennials were three times as likely as Baby Boomers to say that a medical school or health care organization should be responsible when a student or physician commits suicide.

“Young physicians may expect more of their employers than my generation did, which we see in residency programs that have unionized,” said Dr. Yellowlees, a Baby Boomer.

“As more young doctors are employed by health care organizations, they also may expect more resources to be available to them, such as wellness programs,” he added.

Younger doctors also focus more on work-life balance than older doctors, including time off and having hobbies, he said. “They are much more rational in terms of their overall beliefs and expectations than the older generation.”

Whom doctors confide in

Nearly 60% of physician-respondents with suicidal thoughts said they confided in a professional or someone they knew. Men were just as likely as women to reach out to a therapist (38%), whereas men were slightly more likely to confide in a family member and women were slightly more likely to confide in a colleague.

“It’s interesting that women are more active in seeking support at work – they often have developed a network of colleagues to support each other’s careers and whom they can confide in,” said Dr. Yellowlees.

He emphasized that 40% of physicians said they didn’t confide in anyone when they had suicidal thoughts. Of those, just over half said they could cope without professional help.

One respondent commented, “It’s just a thought; nothing I would actually do.” Another commented, “Mental health professionals can’t fix the underlying reason for the problem.”

Many doctors were concerned about risking disclosure to their medical boards (42%); that it would show up on their insurance records (33%); and that their colleagues would find out (25%), according to the report.

One respondent commented, “I don’t trust doctors to keep it to themselves.”

Another barrier doctors mentioned was a lack of time to seek help. One commented, “Time. I have none, when am I supposed to find an hour for counseling?”

A version of this article originally appeared on Medscape.com.

Popular book by USC oncologist pulled because of plagiarism

The Los Angeles Times reported earlier this week that it identified at least 95 instances of plagiarism by author David B. Agus, MD, in “The Book of Animal Secrets: Nature’s Lessons for a Long and Happy Life.”

According to the LA Times, Dr. Agus copied passages from numerous sources, including The New York Times, National Geographic, Wikipedia, and smaller niche sites. Some instances involved a sentence or two; others involved multiparagraph, word-for-word copying without attribution.

The book by Dr. Agus – who interviews celebrities for a health-related miniseries on Paramount Plus – had reached the top spot on Amazon’s list of best-selling books about animals a week before its planned March 7 release.

Publisher Simon & Schuster released a statement announcing a recall of the book at Dr. Agus’ expense “until a fully revised and corrected edition can be released.”

Dr. Agus included his own statement apologizing “to the scientists and writers whose work or words were used or not fully attributed,” and said he will “rewrite the passages in question with new language, will provide proper and full attribution, and when ready will announce a new publication date.”

“Writers should always be credited for their work, and I deeply regret these mistakes and the lack of rigor in finalizing the book,” he stated, adding that “[t]his book contains important lessons, messages, and guidance about health that I wanted to convey to the readers. I do not want these mistakes to interfere with that effort.”

A version of this article first appeared on Medscape.com.

HER2-low breast cancer is not a separate clinical entity: Study

Much attention has been focused recently on the idea that breast cancer with a low expression of HER2 can be treated with HER2-targeted agents. Not surprisingly, manufacturers of these drugs have pounced on this idea, as it opens up a whole new patient population: previously these drugs were only for tumors with a high HER2 expression.

There is a large potential market at stake: HER2-low (also referred to as ERBB2-low), defined by an immunohistochemistry (IHC) score of 1+, or 2+ with a negative in situ hybridization test, is seen in approximately 50%-60% of all breast cancers.

However, the authors of a new analysis argued that HER2-low actually represents a series of biological differences from HER2-negative disease that do not have a strong bearing on outcomes.

The analysis was published online in JAMA Oncology.

For the study, researchers from the University of Chicago examined data on more than 1.1 million breast cancer patients recorded as HER2-negative in the U.S. National Cancer Database, and re-classified almost two thirds as HER2-low on further analysis.

They found that HER2-low status was associated with higher estrogen receptor (ER) expression, as well as a lower rate of pathologic complete response, compared with HER2-negative disease. It was also linked to an improvement in overall survival on multivariate analysis of up to 9% in advanced-stage triple-negative tumors.

“However, the clinical significance of these differences is questionable,” the researchers commented.

HER2-low status alone “should not influence neoadjuvant treatment decisions with currently approved regimens,” they added.

These results “do not support classification of HER2-low breast cancer as a distinct clinical subtype,” the team concluded.

Not necessarily, according to Giuseppe Curigliano, MD, PhD, director of the new drugs and early drug development for innovative therapies division at the European Institute of Oncology, Milan. He argued the opposite case, that HER2-low is a separate clinical entity, in a recent debate on the topic held at the 2022 San Antonio Breast Cancer Symposium.

In a comment, Dr. Curigliano said that a “major strength” of the current study is its large patient cohort, which reflects the majority of cancer diagnoses in the United States, but that it nevertheless has “important limitations that should be considered when interpreting the results.”

The inclusion of only overall survival in the dataset limits the ability to make associations between HER2-low status and cancer-specific prognosis, as “survival may lag years behind recurrence.”

The lack of centralized assessment of IHC results is also an issue, as “some of the results may be associated with regional variation in practice of classifying cases as HER2 0 versus HER2 1+.”

“In my opinion, this is a great limitation,” he said, in being able to conclude that there is “no prognostic difference between ERBB2-low and -negative patients.”

He also noted that, from a molecular point of view, “the key determinant of the gene expression profile is the expression of hormone receptors, [and] if we perform a correction for hormone receptor expression, only marginal differences in gene expression are found” between HER2-low and HER2-negative tumors.

“Similarly, large genomic studies have identified no specific and consistent difference in genomic profiles,” Dr. Curigliano said, and so, HER2-low disease, “as currently defined, should not be considered a distinct molecular entity, but rather a heterogeneous group of tumors, with biology primarily driven by hormone receptor expression.”

Analysis quoted by both sides

Senior author of the new analysis, Frederick M. Howard, MD, from the section of hematology-oncology in the department of medicine at the University of Chicago, said that his team’s work was quoted by both sides of the debate at SABCS 2022.

This reflects the fact that, while differences between ERBB2-low and -negative are present, it is “questionable how clinically significant those differences are,” he said in an interview. It’s a matter of “the eye of the beholder.”

Dr. Howard does not think that clinicians are going to modify standard treatment regimens based solely on HER2-low status, and he said that low expression of the protein “is probably just a reflection of some underlying biologic processes.”

Dr. Howard agreed with Dr. Curigliano that a “caveat” of their study is that the IHC analyses were performed locally, especially as it has been shown that there can be discordance between pathologists in around 40% of cases, and that the associations they found might therefore be “strengthened” by more precise quantification of HER2 expression.

“But, even then,” Dr. Howard continued, “I doubt that [ERBB2-low status] is going to be that strong a prognostic factor.”

He believes that advances in analytic techniques will, in the future, allow tumors to be characterized more precisely, and it may be that HER2-low tumors end up being called something else in 5 years.

Renewed interest in this subgroup

In their paper, Dr. Howard and colleagues pointed out that, as a group, HER2-low tumors are heterogeneous, with HER2-low found in both hormone receptor–positive and triple-negative breast cancers. Also, various studies have come to different conclusions about what HER2-low status means prognostically, with conclusions ranging from negative to neutral to positive prognoses.

However, new research has shown that patients with HER2-low tumors can benefit from HER2-targeted drugs. In particular, the recent report that the antibody-drug conjugate trastuzumab deruxtecan doubled progression-free survival versus chemotherapy in ERBB2-low tumors led to “renewed interest in the subgroup,” the researchers noted.

To examine this subgroup further, they embarked on their analysis. Examining the National Cancer Database, they gathered information on more than 1.1 million U.S. patients diagnosed with HER2-negative invasive breast cancer in the 10-year period 2010-2019, and for whom IHC results were available.

The patients were reclassified as having HER2-low disease if they had an IHC score of 1+, or 2+ with a negative in situ hybridization test, while those with an IHC score of 0 were deemed to be HER2-negative. They were followed up until Nov. 30, 2022.

These patients had a mean age of 62.4 years, and 99.1% were female. The majority (78.6%) were non-Hispanic White. HER2-low was identified in 65.5% of the cohort, while 34.5% were HER2-negative.

The proportion of HER2-low disease was lower in non-Hispanic Black (62.8%) and Hispanic (61%) patients than in non-Hispanic White patients (66.1%).

HER2-low disease was also more common in hormone receptor–positive than in triple-negative tumors. It was found in just 51.5% of triple-negative tumors, rising to 58.6% of progesterone receptor (PR)–positive, ER-negative tumors and 69.1% of PR-positive, ER-positive tumors.

Multivariate logistic regression analysis taking into account age, sex, race and ethnicity, comorbidity score, and treatment facility type, among other factors, revealed that the likelihood of HER2-low disease was significantly associated with increased ER expression, at an adjusted odds ratio of 1.15 for each 10% increase (P < .001).

Non-Hispanic Black, Asian, and Pacific Islander patients had similar rates of HER2-low disease as non-Hispanic White patients after adjustment, whereas Native American patients had an increased rate, at an aOR of 1.22 (P < .001), and Hispanic patients had a lower rate, at an aOR of 0.85 (P < .001).

HER2-low status was associated with a slightly reduced likelihood of having a pathologic complete response (aOR, 0.89; P < .001), with similar results when restricting the analysis to patients with triple-negative or hormone receptor–positive tumors.

After a median follow-up of 54 months, HER2-low disease was associated with a minor improvement in survival on multivariate analysis, at an adjusted hazard ratio for death of 0.98 (P < .001).

The greatest improvement in survival was seen in patients with stage III and IV triple-negative breast cancer, at HRs of 0.92 and 0.91, respectively, although the researchers noted that this represented only a 2% and 0.4% improvement in 5-year overall survival, respectively.

The study was supported by the Breast Cancer Research Foundation, the American Society of Clinical Oncology/Conquer Cancer Foundation, the Department of Defense, the National Institutes of Health/National Cancer Institute, Susan G. Komen, and the University of Chicago Elwood V. Jensen Scholars Program. Dr. Howard reported no relevant financial relationships. Dr. Curigliano has relationships with Pfizer, Novartis, Lilly, Roche, Seattle Genetics, Celltrion, Veracyte, Daiichi Sankyo, AstraZeneca, Merck, Seagen, Exact Sciences, Gilead, Bristol-Myers Squibb, Scientific Affairs Group, and Ellipsis.

A version of this article first appeared on Medscape.com.

Much attention has been focused recently on the idea that breast cancer with a low expression of HER2 can be treated with HER2-targeted agents. Not surprisingly, manufacturers of these drugs have pounced on this idea, as it opens up a whole new patient population: previously these drugs were only for tumors with a high HER2 expression.

There is a large potential market at stake: HER2-low (also referred to as ERBB2-low), as defined by an immunohistochemistry (IHC) score of 1+, or 2+ with a negative in situ hybridization test, is seen in approximately 50%-60% of all breast cancers.

However, the authors argued that it actually represents a series of biological differences from HER2-negative disease that do not have a strong bearing on outcomes.

The analysis was published online in JAMA Oncology.

For the study, researchers from the University of Chicago examined data on more than 1.1 million breast cancer patients recorded as HER2-negative in the U.S. National Cancer Database, and re-classified almost two thirds as HER2-low on further analysis.

They found that HER2-low status was associated with higher estrogen receptor (ER) expression, as well as a lower rate of pathologic complete response, compared with HER2-negative disease. It was also linked to an improvement in overall survival on multivariate analysis of up to 9% in advanced-stage triple-negative tumors.

“However, the clinical significance of these differences is questionable,” the researchers commented.

HER2-low status alone “should not influence neoadjuvant treatment decisions with currently approved regimens,” they added.

These results “do not support classification of HER2-low breast cancer as a distinct clinical subtype,” the team concluded.

Not necessarily, according to Giuseppe Curigliano, MD, PhD, director of the new drugs and early drug development for innovative therapies division at the European Institute of Oncology, Milan. He argued the opposite case, that HER2-low is a separate clinical entity, in a recent debate on the topic held at the 2022 San Antonio Breast Cancer Symposium.

FROM JAMA ONCOLOGY

FDA expands abemaciclib use in high-risk early breast cancer

Abemaciclib was previously approved for this group of high-risk patients with the requirement that they have a Ki-67 score of at least 20%. The new expansion removes the Ki-67 testing requirement, meaning more patients are now eligible to receive this drug. High-risk patients eligible for the CDK4/6 inhibitor can now be identified solely on the basis of nodal status, tumor size, and tumor grade.

The FDA’s decision to expand the approval was based on 4-year data from the phase 3 monarchE trial of adjuvant abemaciclib, which showed benefit in invasive disease-free survival beyond the 2-year treatment course.

At 4 years, 85.5% of patients remained recurrence free with abemaciclib plus endocrine therapy, compared with 78.6% who received endocrine therapy alone, an absolute difference in invasive disease-free survival of 6.9%.

“The initial Verzenio FDA approval in early breast cancer was practice changing and now, through this indication expansion, we have the potential to reduce the risk of breast cancer recurrence for many more patients, relying solely on commonly utilized clinicopathologic features to identify them,” Erika P. Hamilton, MD, an investigator on the monarchE clinical trial, said in a press release.

A version of this article first appeared on Medscape.com.

Med center and top cardio surgeon must pay $8.5 million for fraud, concurrent surgeries

the Department of Justice (DOJ) announced.

The lawsuit alleges that James L. Luketich, MD, the longtime chair of the school’s cardiothoracic surgery department, regularly performed up to three complex surgical procedures simultaneously, moving among multiple operating rooms and attending to matters other than patient care. The investigation began after Jonathan D’Cunha, MD, a former UPMC surgeon, raised concerns about his colleague’s surgical scheduling and billing practices.

Dr. Luketich’s overbooking of procedures led to patients enduring hours of medically unnecessary anesthesia time and risking surgical complications, according to court documents.

In addition, the complaint states that these practices violated the False Claims Act, which prohibits “teaching physicians” like Dr. Luketich from billing Medicare and other government health plans for “concurrent surgeries” – regulations federal authorities say UPMC leadership were aware of and the University of Pittsburgh Physicians (UPP), also named in the suit, permitted Dr. Luketich to skirt.

The whistleblower provision of the False Claims Act allows private parties to file an action on behalf of the United States and receive a portion of the recovery to help deter health care fraud, says the DOJ.

The defendants previously asked the court to dismiss the case, but a judge denied the request in June 2022.

Paul Wood, vice president and chief communications officer for UPMC, told this news organization that the lawsuit pertained to Dr. Luketich’s “most complicated, team-based surgical procedures.”

“At issue was compliance with the Centers for Medicare & Medicaid Services’ (CMS’s) Teaching Physician Regulation and related billing guidance as well as with UPMC’s internal surgical policies,” he said.

“While UPMC continues to believe Dr. Luketich’s surgical practice complies with CMS requirements, it has agreed to [the settlement] to avoid the distraction and expense of further litigation,” said Mr. Wood, adding that all parties agree that UPMC can seek clarity from CMS regarding future billing of these surgeries.

Efrem Grail, JD, Dr. Luketich’s attorney, said in an interview that he and Dr. Luketich are pleased that the settlement puts an end to the case and that he hopes the United States will issue “authoritative guidance” on billing regulations for teaching physicians, something medical schools and hospitals have sought for years.

Dr. Luketich, UPMC, and UPP face more legal challenges from a separate medical malpractice lawsuit. In March 2018, Bernadette Fedorka underwent a lung transplant at UPMC. Although Dr. Luketich did not perform the surgery, Ms. Fedorka alleges that his poor leadership caused understaffing of the lung transplant program and contributed to surgical complications, including a 4-inch piece of wire left in her neck.

Ms. Fedorka claims that suboxone impaired Dr. Luketich’s decision-making. He began taking the drug in 2008 to manage the pain from a slipped disc injury after a history of prescription drug abuse. Both UPMC and Dr. Luketich have denied the validity of Ms. Fedorka’s claims.

The malpractice suit centers on a recording of a conversation between Dr. Luketich and David Wilson, MD, who prescribed the suboxone and treated the surgeon’s opioid use disorder for several years. Dr. Luketich has accused former colleagues, Dr. D’Cunha and Lara Schaheen, MD, of illegally recording the private conversation that discussed Dr. Luketich’s suboxone prescription – something both physicians deny.

For the billing fraud case, Dr. Luketich has agreed to complete a corrective action plan and submit to a third-party audit of his Medicare billings for 1 year.

“This is an important settlement and a just conclusion to the United States’ investigation into Dr. Luketich’s surgical and billing practices and UPMC and UPP’s acceptance of those practices,” Acting U.S. Attorney Troy Rivetti said in a statement, adding that “no medical provider – however renowned – is excepted from scrutiny or above the law.”

A version of this article first appeared on Medscape.com.

Bridging the Digital Divide in Teledermatology Usage: A Retrospective Review of Patient Visits

Teledermatology is an effective patient care model for the delivery of high-quality dermatologic care.1 Teledermatology can occur using synchronous, asynchronous, and hybrid models of care. In asynchronous visits (AVs), patients or health professionals submit photographs and information for dermatologists to review and provide treatment recommendations. With synchronous visits (SVs), patients have a visit with a dermatology health professional in real time via live video conferencing software. Hybrid models incorporate asynchronous strategies for patient intake forms and skin photograph submissions as well as synchronous methods for live video consultation in a single visit.1 However, remarkable inequities in internet access limit telemedicine usage among medically marginalized patient populations, including racialized, elderly, and low socioeconomic status groups.2

Synchronous visits, a relatively new teledermatology format, allow for communication with dermatology professionals from the convenience of a patient’s selected location. The live interaction of SVs allows dermatology professionals to answer questions, provide treatment recommendations, and build therapeutic relationships with patients. Concerns about dermatologist reimbursement, malpractice/liability, and technological challenges stalled large-scale uptake of teledermatology platforms.3 The COVID-19 pandemic led to a drastic increase in teledermatology usage of approximately 587.2%, largely due to public safety measures and Medicaid reimbursement parity between SVs and in-office visits (IVs).3,4

With the implementation of SVs as a patient care model, we investigated the demographics of patients who utilized SVs, AVs, or IVs, and we propose strategies to promote equity in dermatologic care access.

Methods

This study was approved by the University of Pittsburgh institutional review board (STUDY20110043). We performed a retrospective electronic medical record review of deidentified data from the University of Pittsburgh Medical Center, a tertiary care center in Allegheny County, Pennsylvania, with an established asynchronous teledermatology program. Hybrid SVs were integrated into the University of Pittsburgh Medical Center patient care visit options in March 2020. Patients were instructed to upload photographs of their skin conditions prior to SV appointments. The study included visits occurring between July and December 2020. Visit types included SVs, AVs, and IVs.

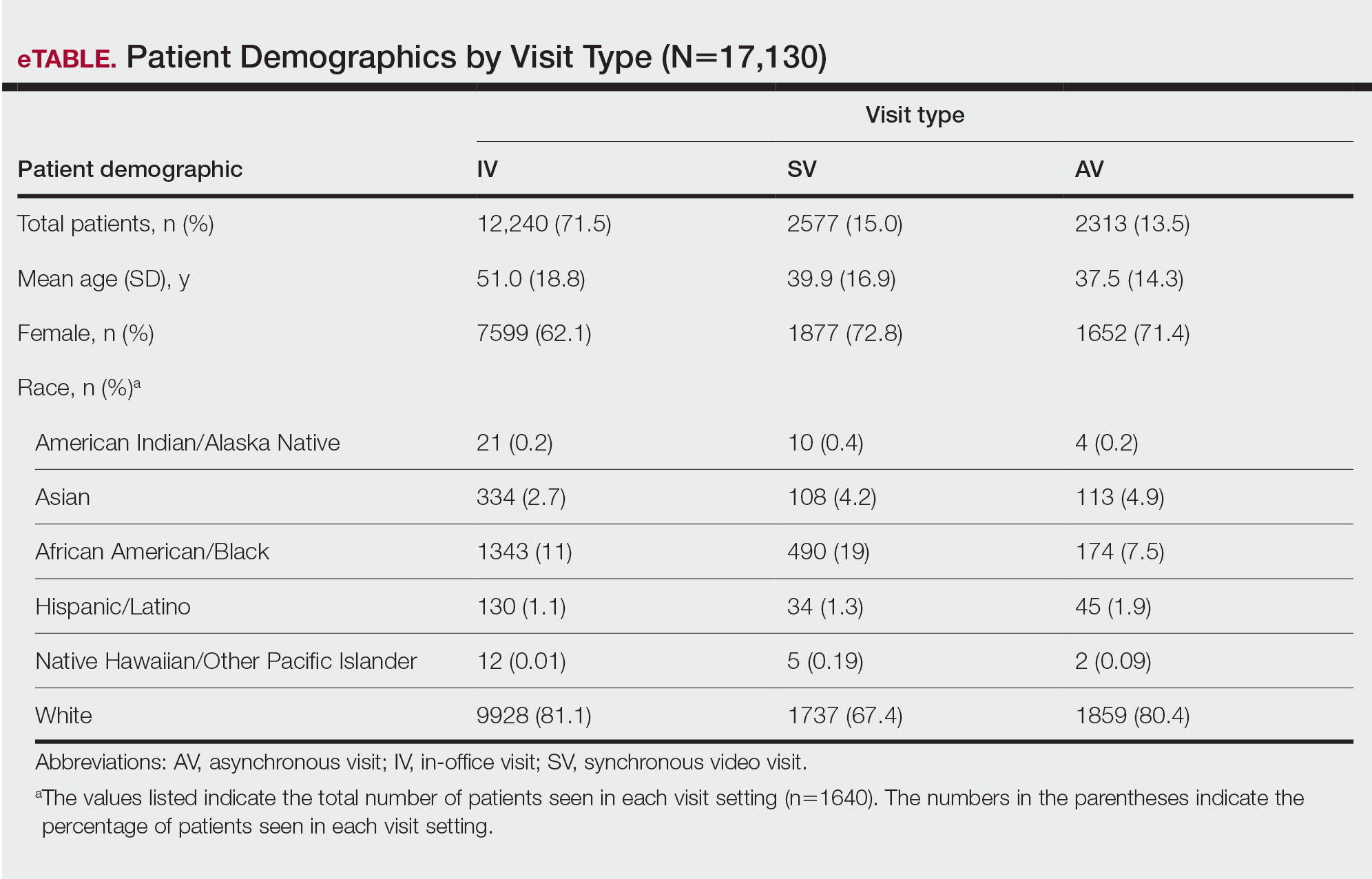

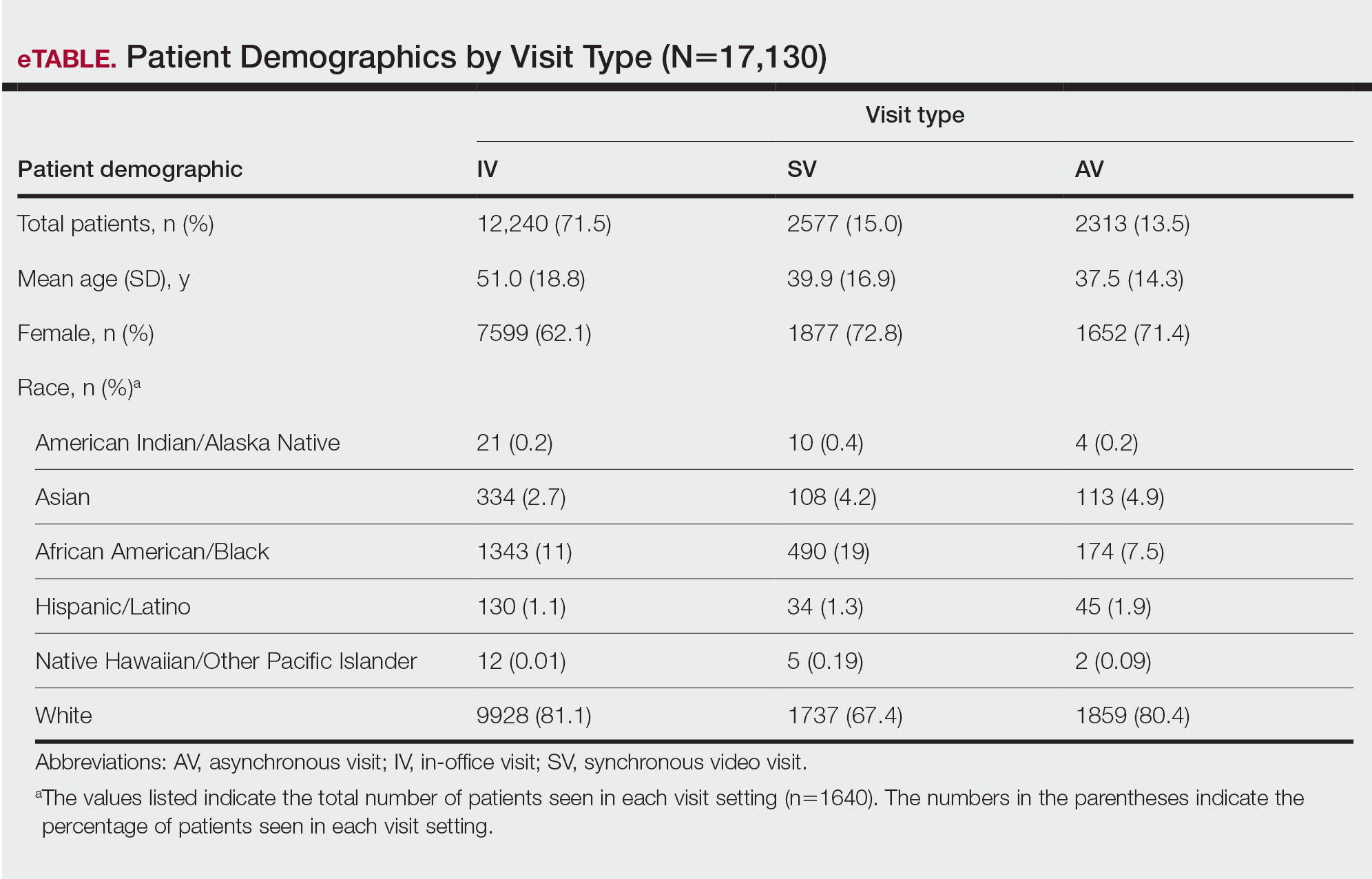

We analyzed the initial dermatology visits of 17,130 patients aged 17.5 years and older. Recorded data included diagnosis, age, sex, race, ethnicity, and insurance type for each visit type. Patients without a reported race (990 patients) or ethnicity (1712 patients) were excluded from analysis of race/ethnicity data. Patient zip codes were compared with the zip codes of Allegheny County municipalities as reported by the Allegheny County Elections Division.

Statistical Analysis—Descriptive statistics were calculated; frequency with percentage was used to report categorical variables, and the mean (SD) was used for normally distributed continuous variables. Univariate analysis was performed using the χ2 test for categorical variables. One-way analysis of variance was used to compare age among visit types. Statistical significance was defined as P<.05. IBM SPSS Statistics for Windows, Version 24 (IBM Corp) was used for all statistical analyses.

Results

In our study population, 81.2% (13,916) of patients were residents of Allegheny County, where 51.6% of residents are female and 81.4% are older than 18 years according to data from 2020.5 The racial and ethnic demographics of Allegheny County were 13.4% African American/Black, 0.2% American Indian/Alaska Native, 4.2% Asian, 2.3% Hispanic/Latino, and 79.6% White. The percentage of residents who identified as Native Hawaiian/Pacific Islander was reported to be greater than 0% but less than 0.5%.5

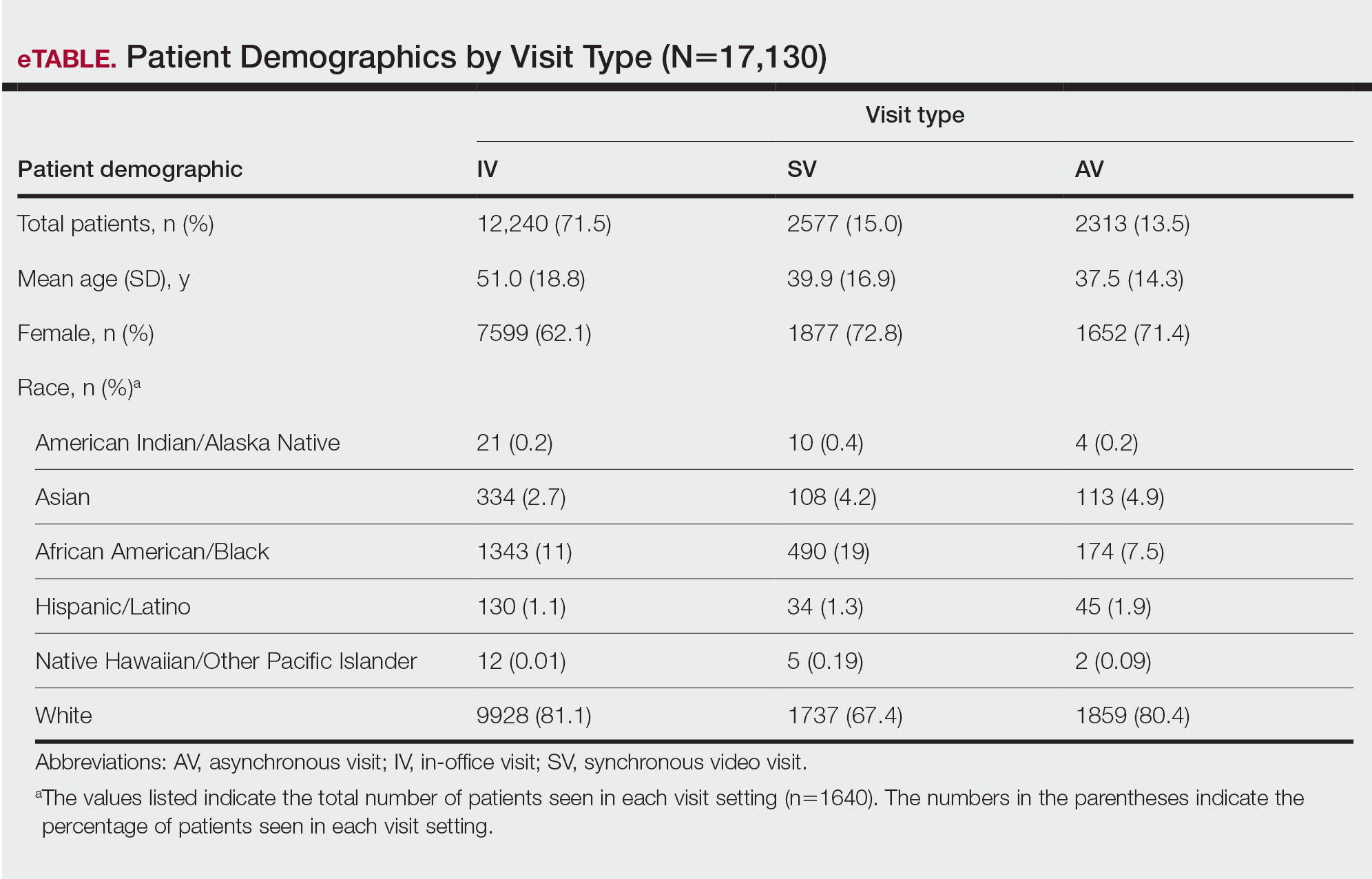

In our analysis, IVs were the most utilized visit type, accounting for 71.5% (12,240) of visits, followed by 15.0% (2577) for SVs and 13.5% (2313) for AVs. The mean age (SD) of IV patients was 51.0 (18.8) years compared with 39.9 (16.9) years for SV patients and 37.5 (14.3) years for AV patients (eTable). The majority of patients for all visit types were female: 62.1% (7599) for IVs, 71.4% (1652) for AVs, and 72.8% (1877) for SVs. White patients were the largest racial or ethnic group for all visit types (83.8% [13,524] of all patients), followed by Black (12.4% [2007]), Asian (3.4% [555]), Hispanic/Latino (1.4% [209]), American Indian/Alaska Native (0.2% [35]), and Native Hawaiian/Other Pacific Islander patients (0.1% [19]).

Asian patients, who comprised 4.2% of Allegheny County residents,5 accounted for 2.7% (334) of IVs, 4.9% (113) of AVs, and 4.2% (108) of SVs. Black patients, who were reported as 13.4% of the Allegheny County population,5 were more likely to utilize SVs (19% [490]) compared with AVs (7.5% [174]) and IVs (11% [1343]). Hispanic/Latino patients had a disproportionately lower utilization of dermatologic care in all settings, comprising 1.4% (209) of all patients in our study compared with 2.3% of Allegheny County residents.5 White patients, who comprised 79.6% of Allegheny County residents, accounted for 81.1% (9928) of IVs, 67.4% (1737) of SVs, and 80.4% (1859) of AVs. There was no significant difference in the percentage of American Indian/Alaska Native and Native Hawaiian/Other Pacific Islander patients among visit types.
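The Methods name the χ2 test for comparing categorical variables. As a rough illustration only, the sketch below applies Pearson's χ2 test to the Black and White patient counts reported above across the three visit types. The two-row table, the variable names, and the helper `pearson_chi_square` are assumptions made for this example; the authors ran their analyses in SPSS, and their exact contingency tables are not given in the text.

```python
import math

# Counts reported in the Results; columns are IV, SV, AV.
observed = {
    "White": [9928, 1737, 1859],
    "Black": [1343, 490, 174],
}


def pearson_chi_square(table):
    """Return (statistic, degrees of freedom) for an r x c table of counts."""
    rows = list(table.values())
    row_totals = [sum(row) for row in rows]
    col_totals = [sum(col) for col in zip(*rows)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for row, row_total in zip(rows, row_totals):
        for count, col_total in zip(row, col_totals):
            # Expected count under independence of race and visit type.
            expected = row_total * col_total / grand_total
            statistic += (count - expected) ** 2 / expected
    dof = (len(rows) - 1) * (len(col_totals) - 1)
    return statistic, dof


chi2, dof = pearson_chi_square(observed)
assert dof == 2  # the closed form below only holds for 2 degrees of freedom
# With 2 degrees of freedom the chi-square upper tail is exp(-x / 2),
# so no statistics library is needed for the P value.
p_value = math.exp(-chi2 / 2)
print(f"chi2 = {chi2:.1f}, dof = {dof}, p = {p_value:.3g}")
```

A P value below the study's .05 threshold here would only indicate that the distribution of visit types differs between these two groups in this simplified table; it does not reproduce the authors' full analysis.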

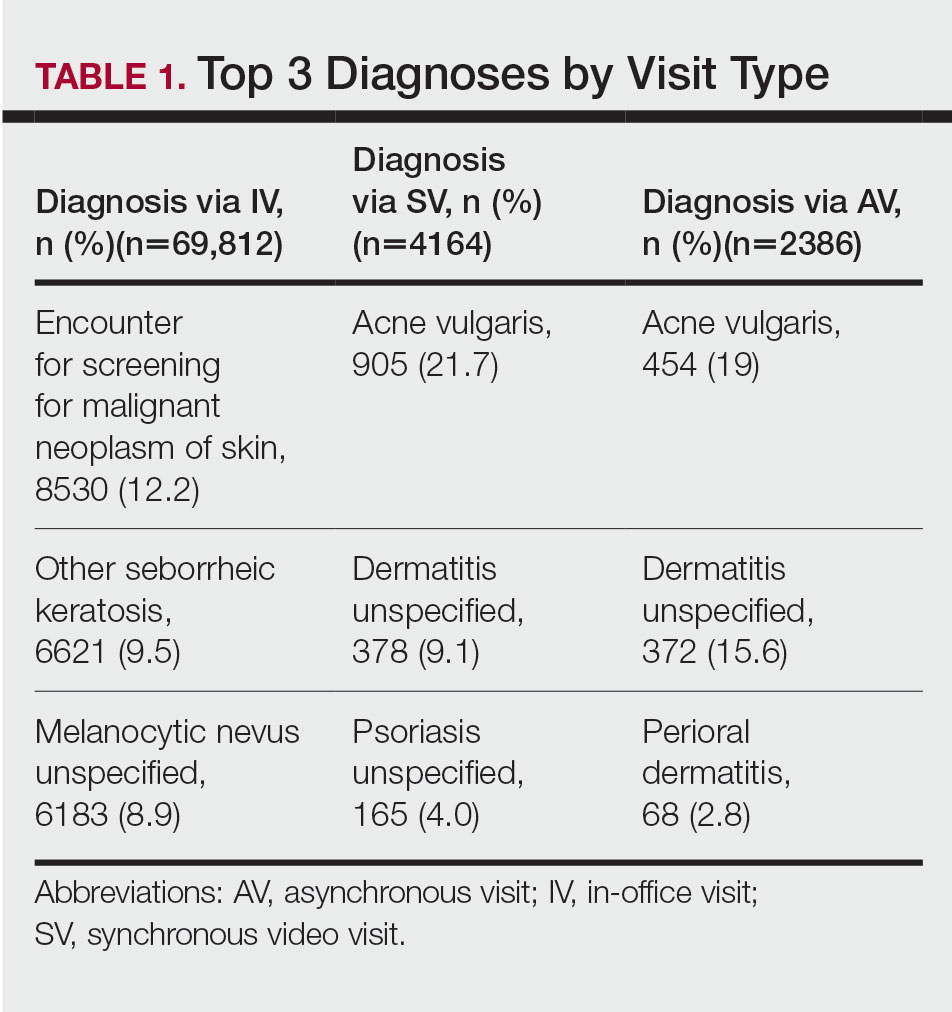

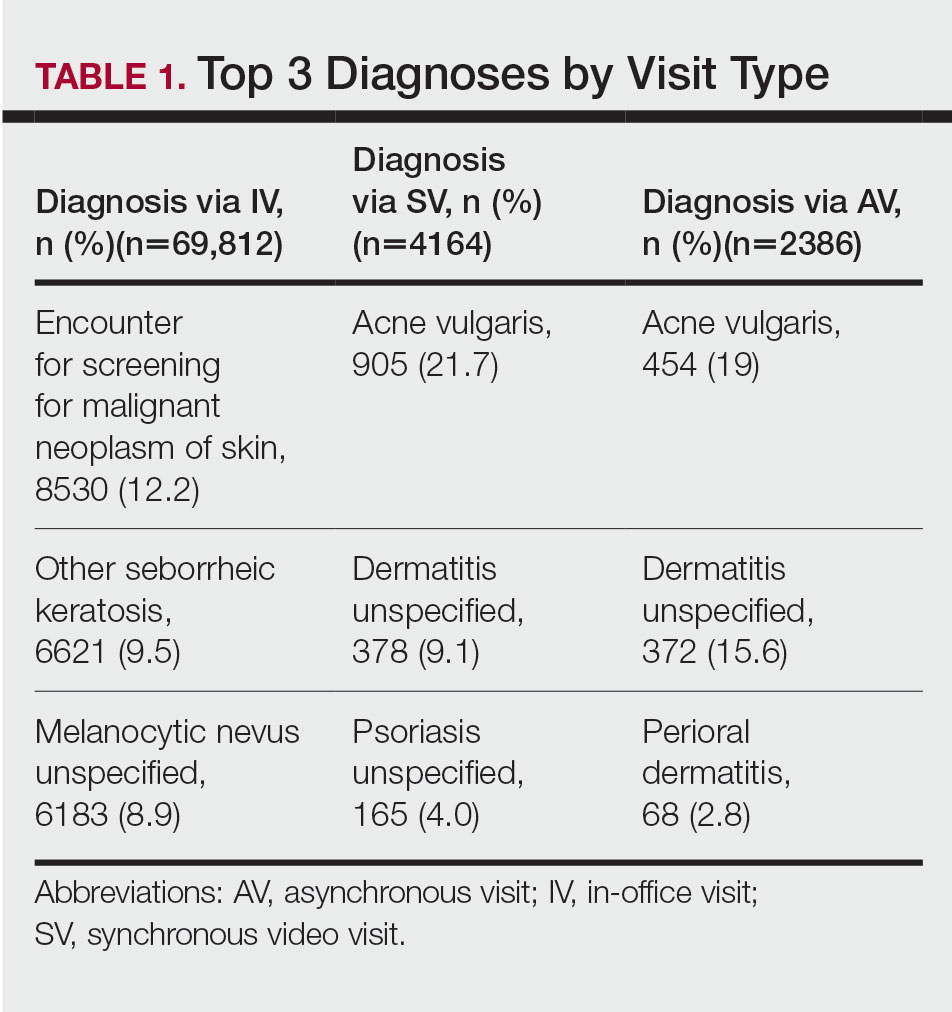

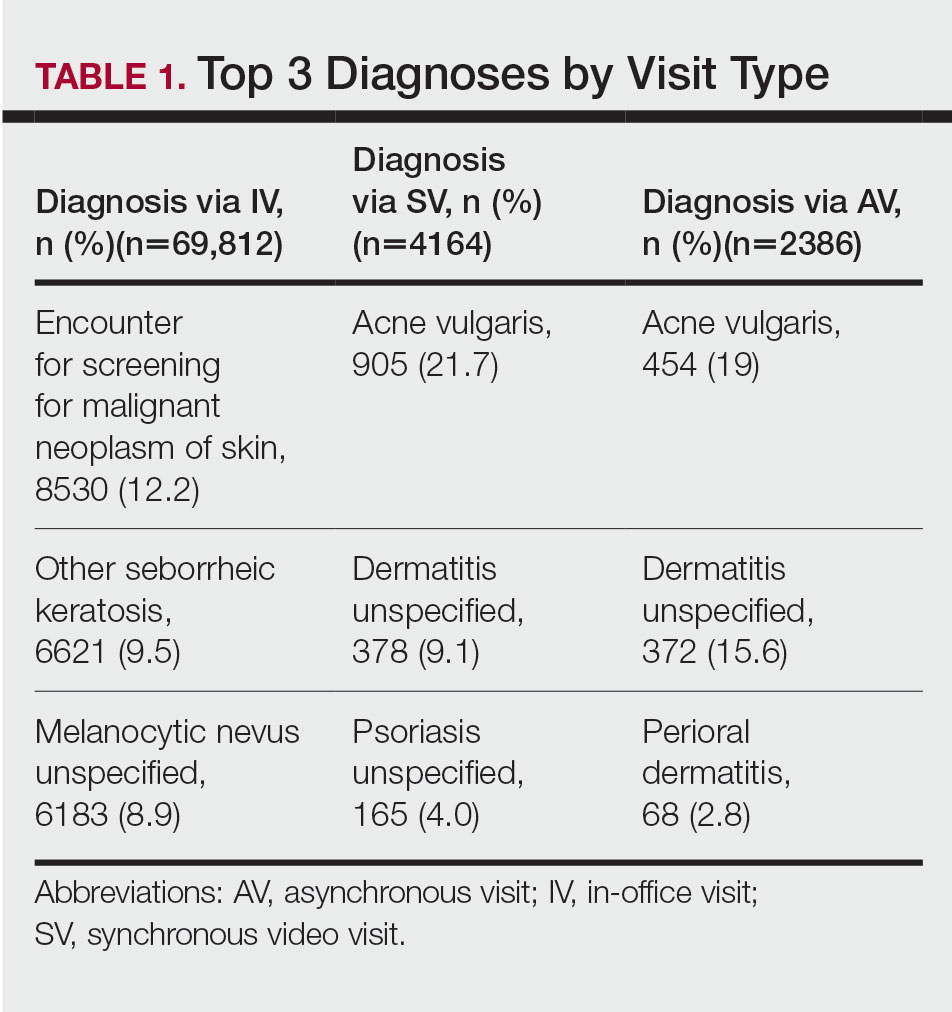

The 3 most common diagnoses for IVs were skin cancer screening, seborrheic keratosis, and melanocytic nevus (Table 1). Skin cancer screening was the most common diagnosis, accounting for 12.2% (8530) of 69,812 IVs. The 3 most common diagnoses for SVs were acne vulgaris, dermatitis, and psoriasis. The 3 most common diagnoses for AVs were acne vulgaris, dermatitis, and perioral dermatitis.

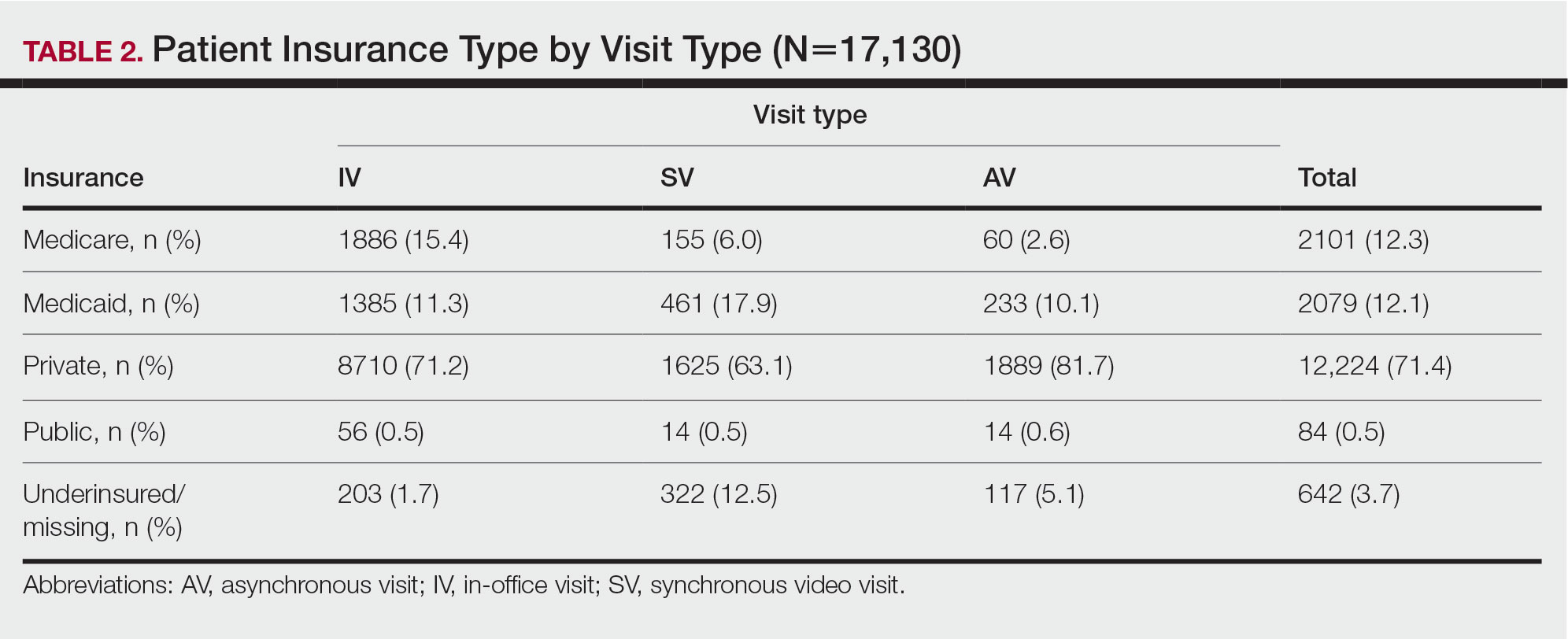

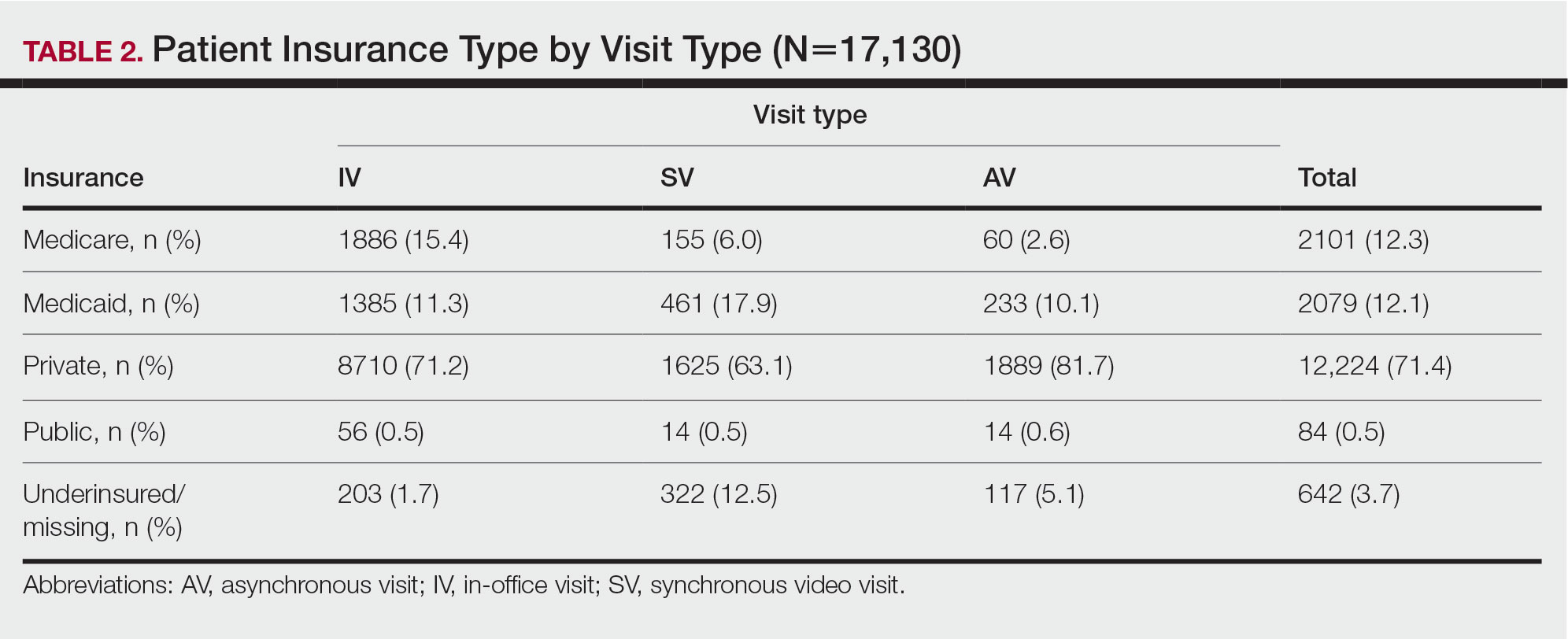

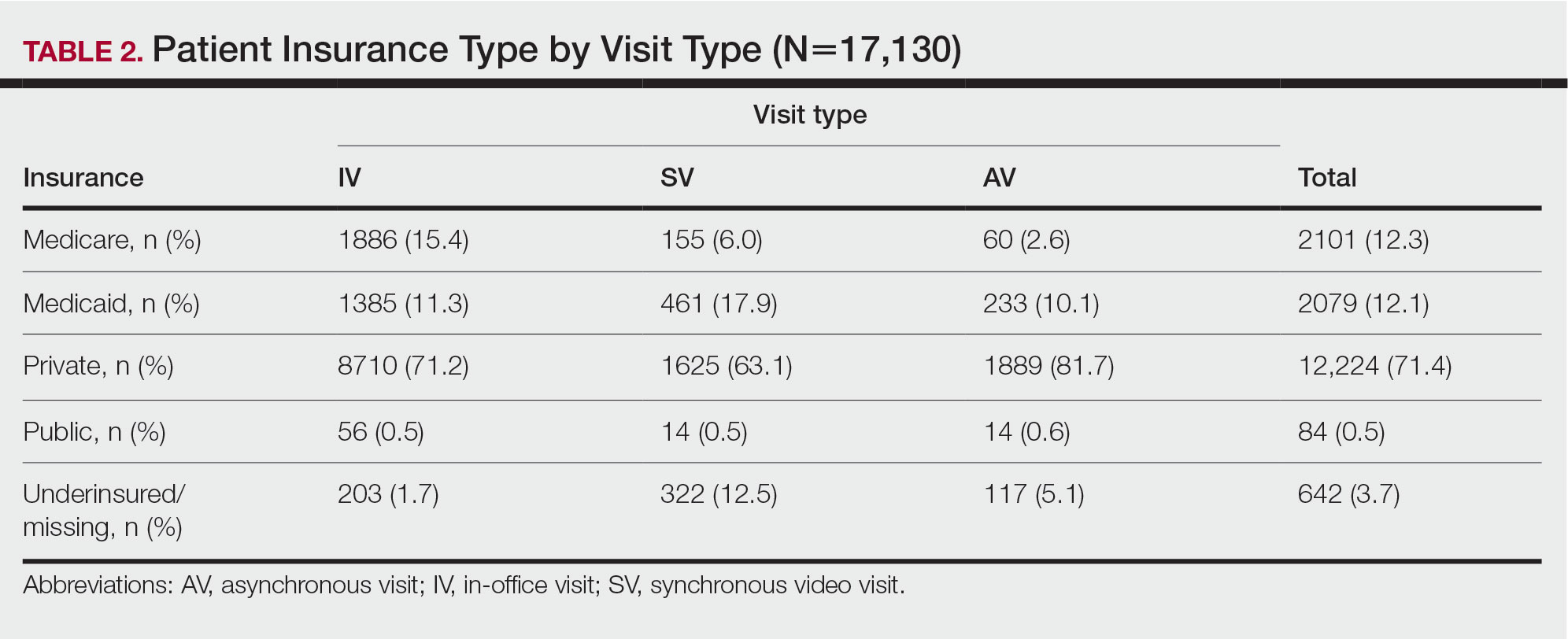

Private insurance was the most common insurance type among all patients (71.4% [12,224]) (Table 2). A higher percentage of patients with Medicaid insurance (17.9% [461]) utilized SVs compared with AVs (10.1% [233]) and IVs (11.3% [1385]). Similarly, a higher percentage of patients with no insurance or no insurance listed were seen via SVs (12.5% [322]) compared with AVs (5.1% [117]) and IVs (1.7% [203]). Patients with Medicare insurance used IVs (15.4% [1886]) more than SVs (6.0% [155]) or AVs (2.6% [60]). There was no significant difference among visit type usage for patients with public insurance.
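The percentages in this paragraph are each insurance group's share of a given visit type. The short sketch below recomputes them from the reported counts and visit totals, which makes the denominators explicit. The dictionary layout and the `share` helper are illustrative assumptions, not part of the study's analysis.

```python
# Visit totals and insurance counts as reported in the Results.
visit_totals = {"IV": 12240, "SV": 2577, "AV": 2313}
insurance_counts = {
    "Medicaid": {"IV": 1385, "SV": 461, "AV": 233},
    "Medicare": {"IV": 1886, "SV": 155, "AV": 60},
    "None/not listed": {"IV": 203, "SV": 322, "AV": 117},
}


def share(count, total):
    """Percentage of `total` represented by `count`, rounded to 1 decimal."""
    return round(100 * count / total, 1)


# For each insurance group, its percentage share of each visit type.
insurance_share = {
    plan: {visit: share(n, visit_totals[visit]) for visit, n in counts.items()}
    for plan, counts in insurance_counts.items()
}

for plan, shares in insurance_share.items():
    print(plan, shares)
```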

Comment

Teledermatology Benefits—In this retrospective review of medical records of patients who obtained dermatologic care after the implementation of SVs at our institution, we found a proportionally higher use of SVs among Black patients, patients with Medicaid, and patients who are underinsured. Benefits of teledermatology include decreases in patient transportation and associated costs, time away from work or home, and need for childcare.6 The SV format provides the additional advantage of direct live interaction and the development of a patient-physician or patient–physician assistant relationship. Although the prerequisite technology, internet, and broadband connectivity preclude use of teledermatology for many vulnerable patients,2 its convenience ultimately may reduce inequities in access.