Excess Thrombotic Risk in RA Has No Clear Driving Factor

LIVERPOOL, ENGLAND — People with rheumatoid arthritis (RA) have a consistently higher risk for venous thromboembolism (VTE) than the general population, but the reasons for this remain unclear, research presented at the annual meeting of the British Society for Rheumatology (BSR) reaffirmed.

Regardless of age, sex, body mass index (BMI), duration of disease, use of estrogen-based oral contraceptives, or hormone replacement therapy (HRT), people with RA are more likely to experience a pulmonary embolism or deep vein thrombosis than those without RA.

However, “these are rare events,” James Galloway, MBChB, PhD, professor of rheumatology and deputy head of the Centre for Rheumatic Diseases at King’s College London in England, said at the meeting.

In one analysis of data from 117,050 individuals living in England and Wales that are held within a large primary care practice database, Dr. Galloway and colleagues found that the unadjusted incidence of VTE in people diagnosed with RA (n = 23,410) was 0.44% vs 0.26% for matched controls within the general population (n = 93,640).

RA and VTE Risk

The overall risk for VTE was 46% higher among people with RA than among those without, although the absolute difference was small, Dr. Galloway reported.
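The gap between the relative and absolute figures can be made concrete with a little arithmetic. The sketch below uses only the unadjusted incidences reported above and assumes both percentages were measured on the same basis over the same follow-up. The variable names are illustrative, and the crude ratio it produces is not the adjusted estimate behind the 46% figure.

```python
# Unadjusted VTE incidence as reported in the article (percent of each cohort).
ra_incidence_pct = 0.44
control_incidence_pct = 0.26

# Absolute difference in percentage points (assumes a shared measurement basis).
abs_diff_pp = round(ra_incidence_pct - control_incidence_pct, 2)

# The same difference expressed as excess cases per 10,000 people.
excess_per_10k = round(abs_diff_pp * 100)

# Crude (unadjusted) ratio of the two incidences; NOT the adjusted hazard ratio.
crude_ratio = round(ra_incidence_pct / control_incidence_pct, 2)

print(abs_diff_pp)     # 0.18
print(excess_per_10k)  # 18
print(crude_ratio)     # 1.69
```

On these numbers, a relative increase of roughly one half corresponds to about 18 additional cases per 10,000 people, which is why the events remain rare in absolute terms.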

“RA is associated with an increased risk of VTE; that’s been well described over the years,” Dr. Galloway told this news organization. Past research into why there is an elevated risk for VTE in patients with RA has often focused on the role of disease activity and inflammation.

“In the last few years, a new class of drugs, the JAK [Janus kinase] inhibitors, have emerged in which we have seen a signal of increased VTE risk from a number of studies. And I think that puts a spotlight on our understanding of VTE risk,” Dr. Galloway said.

He added, “JAK inhibitors are very powerful at controlling inflammation, but if you take away inflammation, there is still an excess risk. What else could be driving that?”

To examine the excess risk for VTE seen in people with RA, Dr. Galloway and colleagues performed three separate analyses using data collected between January 1999 and December 2018 by the Royal College of General Practitioners Research and Surveillance Center.

One analysis looked at VTE risk according to age, sex, and BMI; another looked at the effect of the duration of RA; and a third analysis focused on the use of estrogen-based oral contraceptives or HRT.

For all three analyses, each person with RA was matched to four people from the general population without RA on the basis of current age, sex, calendar time, and years since registration at the primary care practice.
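As a quick arithmetic check on the matching, the cohort sizes reported earlier work out to four matched controls for each person with RA. The variable names below are illustrative only.

```python
# Cohort sizes as reported in the article.
ra_n = 23_410
control_n = 93_640

# Total study population and number of matched controls per person with RA.
total_n = ra_n + control_n
controls_per_case = control_n / ra_n

print(total_n)            # 117050
print(controls_per_case)  # 4.0
```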

Observational Data Challenged

“These are observational data, so it’s important to weigh up the strengths and limitations,” Dr. Galloway acknowledged. Strengths are the large sample size and long follow-up provided by the database, which assesses and monitors more than 2000 primary care practices in England and Wales.

Confounding is still possible despite adjustment for multiple factors, including sociodemographic factors; clinical features; and VTE risk factors such as smoking status, alcohol use, thrombophilia, reduced mobility, lower limb fracture, and a family history of VTE, where data were available. There was no information on disease activity, for example, so disease duration was used as a surrogate marker for this.

Sitting in the audience, Marwan Bukhari, MBBS, PhD, challenged the population-matching process.

“Do you think maybe it was the matching that was the problem?” asked Dr. Bukhari, who is a consultant rheumatologist at University Hospitals of Morecambe Bay NHS Foundation Trust and an honorary senior lecturer at the University of Manchester, both in England.

“They’re not entirely matched completely, correctly. Even if it is 4:1, there’s a difference between the populations,” he said.

Age, Sex, and Bodyweight

Over an average of 8.2 years’ follow-up, the adjusted hazard ratios (aHRs) comparing VTE risk in women and men with and without RA were 1.62 and 1.52, respectively. The corresponding aHRs for VTE according to different age groups were 2.13 for age 18-49 years, 1.57 for age 50-69 years, and 1.34 for age 70 years and older.

“The highest excess risk was in the youngest age group,” Dr. Galloway pointed out, “but all age groups showing a significant increased risk of venous thromboembolism.”

Similar findings were seen across different BMI categories, with the highest risk occurring in those in the lowest BMI group. The aHRs were 1.66, 1.60, and 1.41 for the BMI categories of less than 25 kg/m², 25-30 kg/m², and more than 30 kg/m², respectively.

Duration of RA

As for disease duration, nearly two thirds (63.9%) of the 23,410 adults with RA included in this analysis were included at or within 2 years of a diagnosis of RA, 7.8% within 2-5 years of diagnosis, 9.8% within 5-10 years of diagnosis, and 18.5% at 10 or more years after diagnosis.

The aHRs for VTE in people with RA vs the control group ranged from 1.49 at 0-2 years since diagnosis to 1.63 at more than 10 years since diagnosis.

“We could see no evidence that the VTE excess risk in rheumatoid arthritis was with a specific time since diagnosis,” Dr. Galloway said in the interview. “It appears that the risk is increased in people with established RA, whether you’ve had the disease for 2 years or 10 years.”

Similar findings were also seen when they looked at aHRs for pulmonary embolism (1.46-2.02) and deep vein thrombosis (1.43-1.89) separately.

Oral Contraceptives and HRT

Data on the use of estrogen-based oral contraceptives or HRT were detailed in a virtual poster presentation. In this analysis, there were 16,664 women with and 65,448 without RA, and the average follow-up was 8.3 years.

“The number of people available for this analysis was small, and bigger studies are needed,” Dr. Galloway said in the interview. Indeed, in the RA group, just 3.3% had used an estrogen-based oral contraceptive and 4.5% had used HRT compared with 3.9% and 3.8% in the control group, respectively.

The overall VTE risk was 52% higher in women with RA than in those without RA.

Risk for VTE was higher among women with RA whether or not they used estrogen-based oral contraceptives (aHRs, 1.43 with use and 1.52 without) and whether or not they used HRT (aHRs, 2.32 with use and 1.51 without).

Assess and Monitor

Together, these data increase understanding of how age, sex, BMI, duration of disease, and use of estrogen-based contraception and HRT may affect someone’s VTE risk.

“In all people with RA, we observe an increased risk of venous thromboembolism, and that is both relevant in a contemporary era when we think about prescribing and the different risks of drugs we use for therapeutic strategies,” Dr. Galloway said.

The overall take-home message, he said, is that VTE risk should be considered in everyone with RA and assessed and monitored accordingly. This includes those who may have traditionally been thought of as having a lower risk than others, such as men vs women, younger vs older individuals, and those who may have had RA for a few years.

The research was funded by Pfizer. Dr. Galloway reported receiving honoraria from Pfizer, AbbVie, Biovitrum, Bristol Myers Squibb, Celgene, Chugai, Galapagos, Janssen, Lilly, Novartis, Roche, Sanofi, Sobi, and UCB. Two coauthors of the work were employees of Pfizer. Dr. Bukhari had no conflicts of interest and was not involved in the research.

A version of this article appeared on Medscape.com.

FROM BSR 2024

Clinical Manifestation of Degos Disease: Painful Penile Ulcers

To the Editor:

A 56-year-old man was referred to our Grand Rounds by another dermatologist in our health system for evaluation of a red scaly rash on the trunk that had been present for more than a year. More recently, over the course of approximately 9 months he experienced recurrent painful penile ulcers that lasted for approximately 4 weeks and then self-resolved. He had a medical history of central retinal vein occlusion, primary hyperparathyroidism, and nonspecific colitis. A family history was notable for lung cancer in the patient’s father and myelodysplastic syndrome and breast cancer in his mother; however, there was no family history of a similar rash. A bacterial culture of the penile ulcer was negative. Testing for antibodies against HIV and herpes simplex virus (HSV) types 1 and 2 was negative. Results of a serum VDRL test were nonreactive, which ruled out syphilis. The patient was treated by the referring dermatologist with azithromycin for possible chancroid without relief.

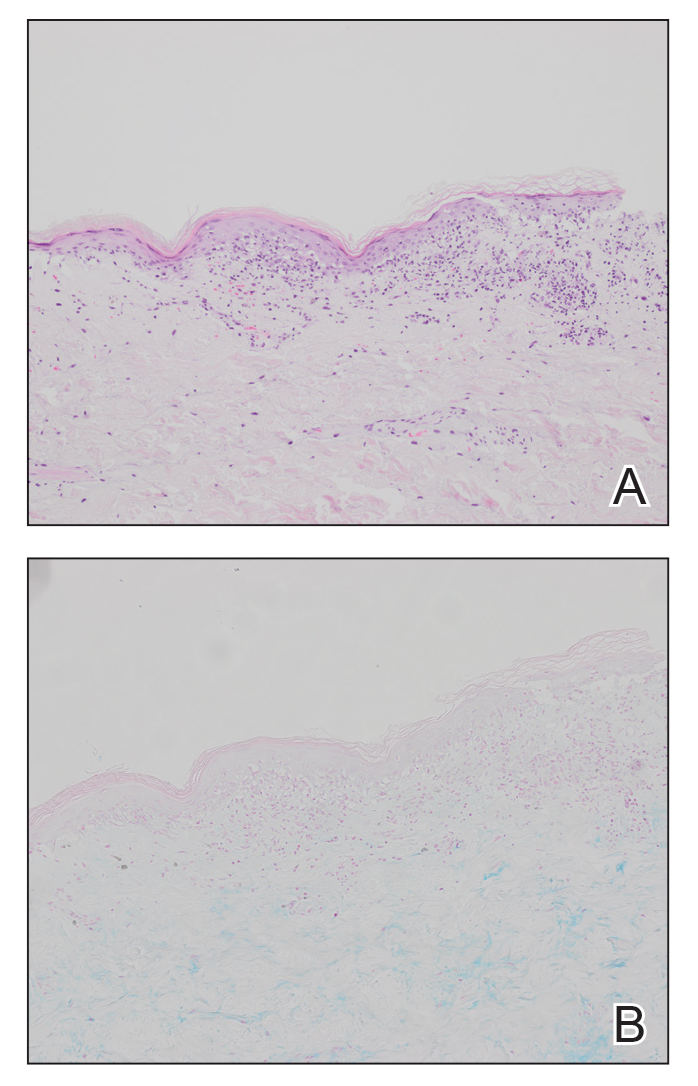

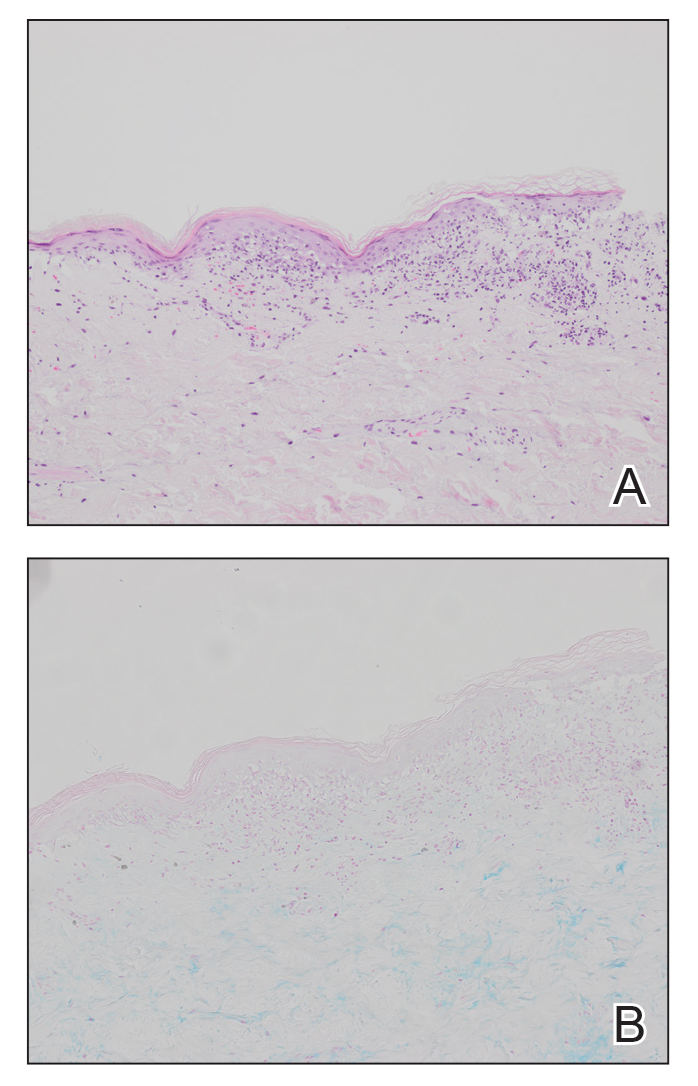

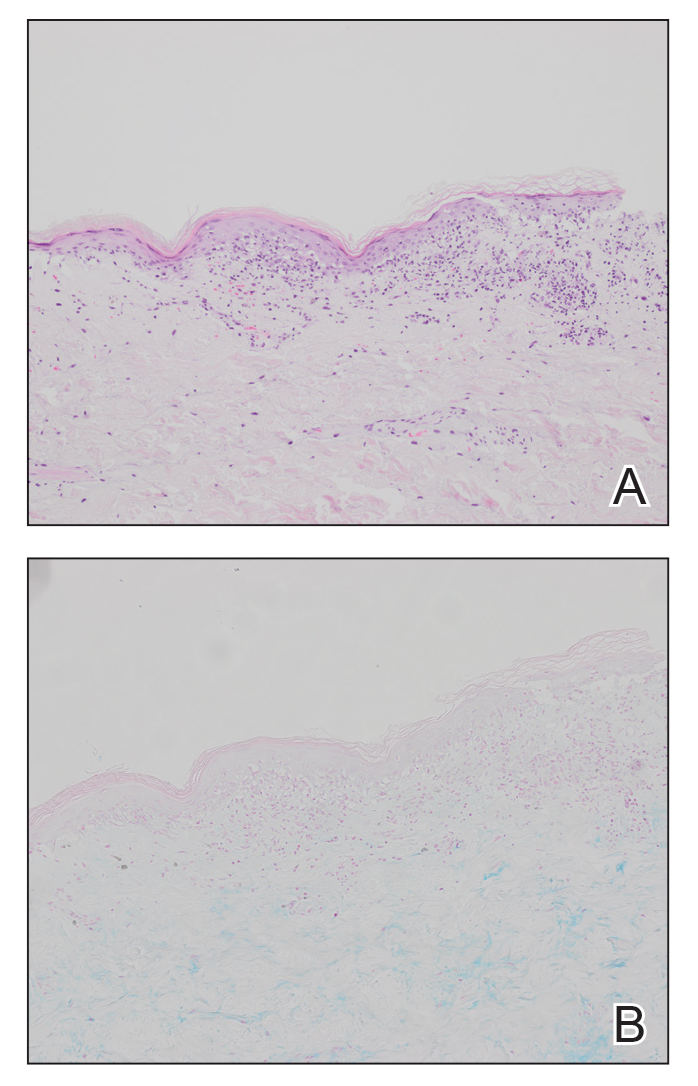

The patient was being followed by the referring dermatologist who initially was concerned for Degos disease based on clinical examination findings, prompting biopsy of a lesion on the back, which revealed vacuolar interface dermatitis, a sparse superficial perivascular lymphocytic infiltrate, and increased mucin—all highly suspicious for connective tissue disease (Figure 1). An antinuclear antibody test was positive, with a titer of 1:640. The patient was started on prednisone and referred to rheumatology; however, further evaluation by rheumatology for an autoimmune process—including anticardiolipin antibodies—was unremarkable. A few months prior to the current presentation, he also had mildly elevated liver function test results. A colonoscopy was performed, and a biopsy revealed nonspecific colitis. A biopsy of the penile ulcer also was nonspecific, showing only ulceration and acute and chronic inflammation. No epidermal interface change was seen. Results from a Grocott-Gomori methenamine-silver stain, Treponema pallidum immunostain, and HSV polymerase chain reaction were negative for fungal organisms, spirochetes, and HSV, respectively. The differential diagnosis included trauma, aphthous ulceration, and Behçet disease. Behçet disease was suspected by the referring dermatologist, and the patient was treated with colchicine, prednisone, pimecrolimus cream, and topical lidocaine; however, the lesions persisted, and he was subsequently referred to our Grand Rounds for further evaluation.

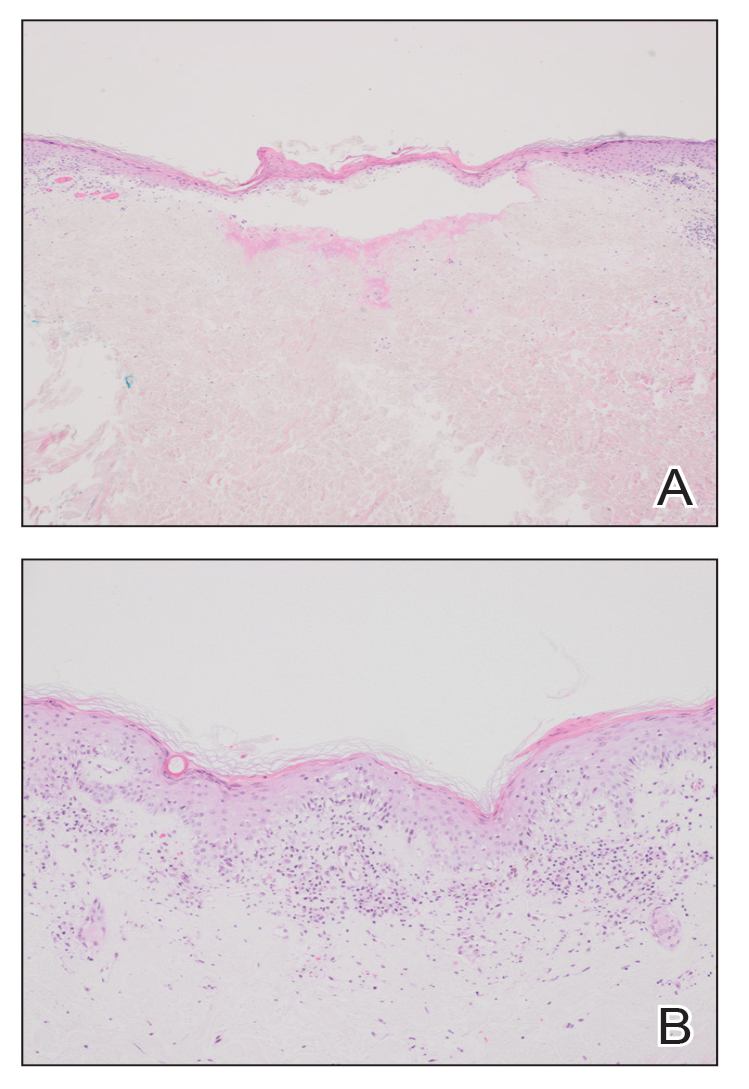

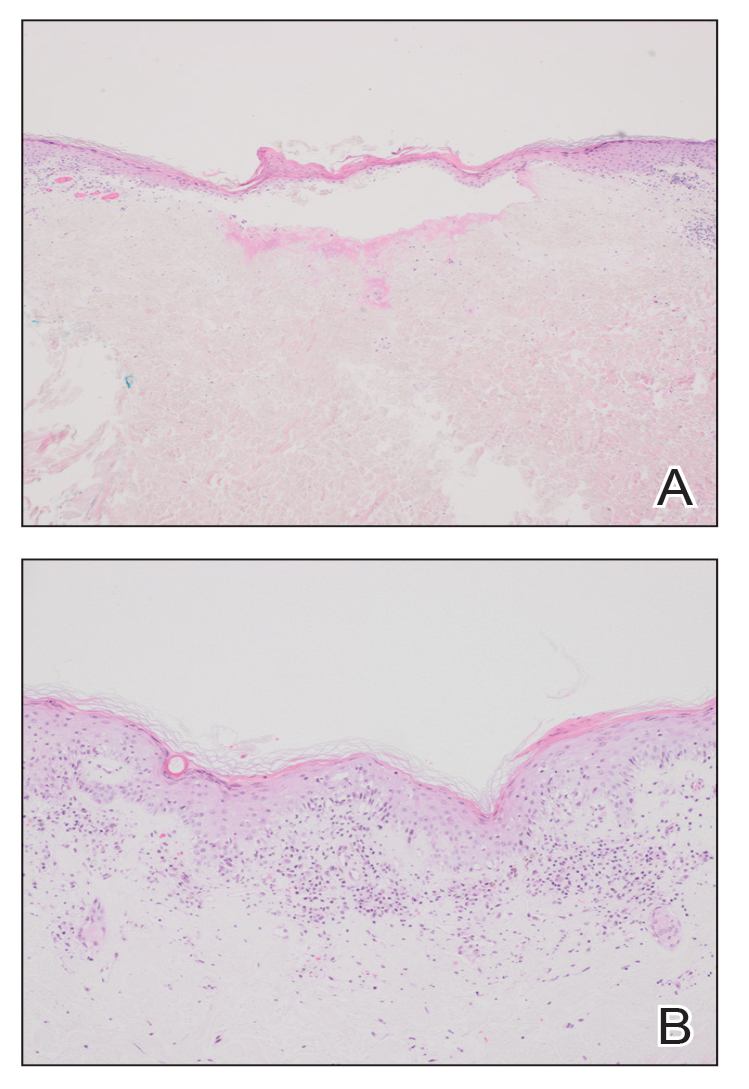

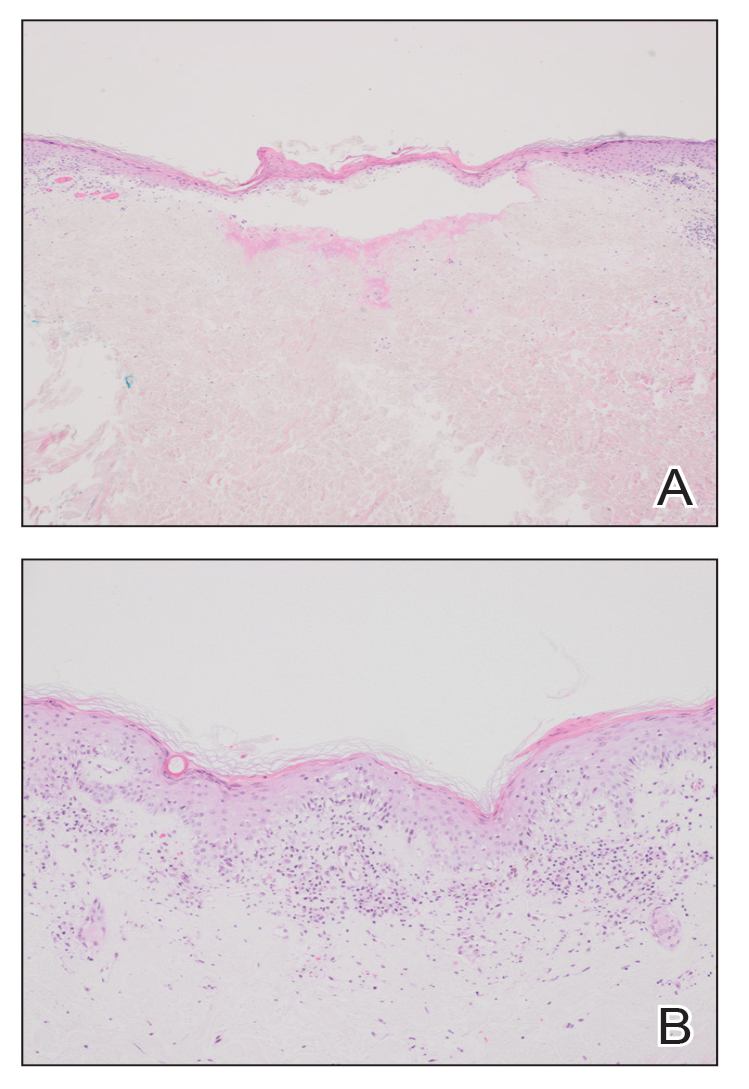

At the current presentation, physical examination revealed several small papules with white atrophic centers and erythematous rims on the trunk and extremities (Figure 2A). An ulceration was noted on the penile shaft (Figure 2B). Further evaluation for Behçet disease, including testing for pathergy and HLA-B51, was negative. Degos disease was strongly suspected clinically, and a repeat biopsy was performed of a lesion on the abdomen, which revealed central epidermal necrosis, atrophy, and parakeratosis with an underlying wedge-shaped dermal infarct surrounded by multiple small occluded dermal vessels, perivascular inflammation, and dermal edema (Figure 3). Direct immunofluorescence was performed using antibodies against IgG, IgA, IgM, fibrinogen, albumin, and C3, which was negative. These findings from direct immunofluorescence and histopathology as well as the clinical presentation were considered compatible with Degos disease. The patient was started on aspirin and pentoxifylline. Pentoxifylline 400 mg twice daily appeared to lessen some of the pain. Pain management specialists started the patient on gabapentin.

Approximately 4 months after the Grand Rounds evaluation, during which time he continued treatment with pentoxifylline, he was admitted to the hospital for intractable nausea and vomiting. His condition acutely declined due to bowel perforation, and he was started on eculizumab 1200 mg every 14 days. Because of an increased risk for meningococcal meningitis while on this medication, he also was given erythromycin 500 mg twice daily prophylactically. He was being followed by hematology for the vasculopathy, and they were planning to monitor for any disease changes with computed tomography of the chest, abdomen, and pelvis every 3 months, as well as echocardiogram every 6 months for any development of pericardial or pleural fibrosis. Approximately 1 month later, the patient was admitted to the hospital again but died after 1 week from gastrointestinal complications (approximately 22 months after the onset of the rash).

Degos disease (atrophic papulosis) is a rare small vessel vasculopathy of unknown etiology, but complement-mediated endothelial injury plays a role.1,2 It typically occurs in the fourth decade of life, with a slight female predominance.3,4 The skin lesions are characteristic and described as 5- to 10-mm papules with atrophic white centers and erythematous telangiectatic rims, most commonly on the upper body and typically sparing the head, palms, and soles.1 Penile ulceration is an uncommon cutaneous feature, with only a few cases reported in the literature.5,6 Approximately one-third of patients will have only skin lesions, but two-thirds will develop systemic involvement 1 to 2 years after onset, with the gastrointestinal tract and central nervous system most commonly involved. For those with systemic involvement, the 5-year survival rate is approximately 55%, and the most common causes of death are bowel perforation, peritonitis, and stroke.3,4 Because some patients appear to never develop systemic complications, Theodoridis et al4 proposed that the disease be classified as either malignant atrophic papulosis or benign atrophic papulosis to indicate the malignant systemic form and the benign cutaneous form, respectively.

The histopathology of Degos disease changes as the lesions evolve.7 Early lesions show a superficial and deep perivascular and periadnexal lymphocytic infiltrate, possible interface dermatitis, and dermal mucin resembling lupus. The more fully developed lesions show a greater degree of inflammation and interface change as well as lymphocytic vasculitis. This stage also may have epidermal atrophy and early papillary dermal sclerosis resembling lichen sclerosus. The late-stage lesions, clinically observed as papules with atrophic white centers and surrounding erythema, show the classic pathology of wedge-shaped dermal sclerosis and central epidermal atrophy with surrounding hyperkeratosis. Interface dermatitis and dermal mucin can be seen in all stages, though mucin is diminished in the later stage.

Effective treatment options are limited; however, antithrombotics or compounds that facilitate blood perfusion, such as aspirin or pentoxifylline, initially can be used.1 Eculizumab, a humanized monoclonal antibody that prevents the cleavage of C5, has been used for salvage therapy,8 as in our case. Treprostinil, a prostacyclin analog that causes arterial vasodilation and inhibition of platelet aggregation, has been reported to improve bowel and cutaneous lesions, functional status, and neurologic symptoms.9

Our case highlights important features of Degos disease. First, it is important for both the clinician and the pathologist to recognize that the histopathology of Degos disease changes as the lesions evolve. In our case, although the lesions were characteristic of Degos disease clinically, the initial biopsy was suspicious for connective tissue disease, which led to an autoimmune evaluation that ultimately was unremarkable. Recognizing that early lesions of Degos disease can resemble connective tissue disease histologically could have prevented this delay in diagnosis. However, Degos disease has been reported in association with autoimmune diseases.10 Second, although penile ulceration is uncommon, it can be a prominent cutaneous manifestation of the disease. Finally, eculizumab and treprostinil are therapeutic options that have shown some efficacy in improving symptoms and cutaneous lesions.8,9

1. Theodoridis A, Makrantonaki E, Zouboulis CC. Malignant atrophic papulosis (Köhlmeier-Degos disease)—a review. Orphanet J Rare Dis. 2013;8:10. doi:10.1186/1750-1172-8-10

2. Magro CM, Poe JC, Kim C, et al. Degos disease: a C5b-9/interferon-α-mediated endotheliopathy syndrome. Am J Clin Pathol. 2011;135:599-610. doi:10.1309/AJCP66QIMFARLZKI

3. Hu P, Mao Z, Liu C, et al. Malignant atrophic papulosis with motor aphasia and intestinal perforation: a case report and review of published works. J Dermatol. 2018;45:723-726. doi:10.1111/1346-8138.14280

4. Theodoridis A, Konstantinidou A, Makrantonaki E, et al. Malignant and benign forms of atrophic papulosis (Köhlmeier-Degos disease): systemic involvement determines the prognosis. Br J Dermatol. 2014;170:110-115. doi:10.1111/bjd.12642

5. Thomson KF, Highet AS. Penile ulceration in fatal malignant atrophic papulosis (Degos’ disease). Br J Dermatol. 2000;143:1320-1322. doi:10.1046/j.1365-2133.2000.03911.x

6. Aydogan K, Alkan G, Karadogan Koran S, et al. Painful penile ulceration in a patient with malignant atrophic papulosis. J Eur Acad Dermatol Venereol. 2005;19:612-616. doi:10.1111/j.1468-3083.2005.01227.x

7. Harvell JD, Williford PL, White WL. Benign cutaneous Degos’ disease: a case report with emphasis on histopathology as papules chronologically evolve. Am J Dermatopathol. 2001;23:116-123. doi:10.1097/00000372-200104000-00006

8. Oliver B, Boehm M, Rosing DR, et al. Diffuse atrophic papules and plaques, intermittent abdominal pain, paresthesias, and cardiac abnormalities in a 55-year-old woman. J Am Acad Dermatol. 2016;75:1274-1277. doi:10.1016/j.jaad.2016.09.015

9. Shapiro LS, Toledo-Garcia AE, Farrell JF. Effective treatment of malignant atrophic papulosis (Köhlmeier-Degos disease) with treprostinil—early experience. Orphanet J Rare Dis. 2013;8:52. doi:10.1186/1750-1172-8-52

10. Burgin S, Stone JH, Shenoy-Bhangle AS, et al. Case records of the Massachusetts General Hospital. Case 18-2014. A 32-year-old man with a rash, myalgia, and weakness. N Engl J Med. 2014;370:2327-2337. doi:10.1056/NEJMcpc1304161

PRACTICE POINTS

- Papules with atrophic white centers and erythematous telangiectatic rims are the characteristic skin lesions found in Degos disease.

- A painful penile ulceration also may occur in Degos disease, though it is uncommon.

- The histopathology of skin lesions changes as the lesions evolve. Early lesions may resemble connective tissue disease. Late lesions show the classic pathology of wedge-shaped dermal sclerosis.

Mandatory DMV Reporting Tied to Dementia Underdiagnosis

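Mandatory clinician reporting of dementia diagnoses to state departments of motor vehicles (DMVs) is linked to underdiagnosis of dementia, new research suggests.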

Investigators found that primary care physicians (PCPs) in states with clinician reporting mandates had a 59% higher probability of underdiagnosing dementia compared with their counterparts in states that require patients to self-report or that have no reporting mandates.

“Our findings in this cross-sectional study raise concerns about potential adverse effects of mandatory clinician reporting for dementia diagnosis and underscore the need for careful consideration of the effect of such policies,” wrote the investigators, led by Soeren Mattke, MD, DSc, director of the USC Brain Health Observatory and research professor of economics at the University of Southern California, Los Angeles.

The study was published online in JAMA Network Open.

Lack of Guidance

As the US population ages, the number of older drivers is increasing, with 55.8 million drivers 65 years old or older. Approximately 7 million people in this age group have dementia — an estimate that is expected to increase to nearly 12 million by 2040.

The aging population raises a “critical policy question” about how to ensure road safety. Although the American Medical Association’s Code of Ethics outlines a physician’s obligation to identify drivers with medical impairments that impede safe driving, guidance restricting cognitively impaired drivers from driving is lacking.

In addition, evidence as to whether cognitive impairment indeed poses a threat to driving safety is mixed and has led to a lack of uniform policies with respect to reporting dementia.

Four states explicitly require clinicians to report dementia diagnoses to the DMV, which will then determine the patient’s fitness to drive, whereas 14 states require people with dementia to self-report. The remaining states have no explicit reporting requirements.

The issue of mandatory reporting is controversial, the researchers noted. On the one hand, physicians could protect patients and others by reporting potentially unsafe drivers.

On the other hand, evidence that mandatory reporting is associated with lower accident risk among patients with dementia is sparse, and mandatory reporting may adversely affect physician-patient relationships. Empirical evidence for unintended consequences of reporting laws is lacking.

To examine the potential link between dementia underdiagnosis and mandatory reporting policies, the investigators analyzed 100% of the data from the Medicare fee-for-service program and Medicare Advantage plans from 2017 to 2019, which included 223,036 PCPs with a panel of 25 or more Medicare patients.

The researchers examined dementia diagnosis rates in the patient panel of PCPs, rather than neurologists or gerontologists, regardless of who documented the diagnosis. Dr. Mattke said that it is possible that the diagnosis was established after referral to a specialist.

Each physician’s expected number of dementia cases was estimated using a predictive model based on patient characteristics. The researchers then compared the estimate with observed dementia diagnoses, thereby identifying clinicians who underdiagnosed dementia after sampling errors were accounted for.
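The comparison of expected and observed diagnoses can be illustrated with a minimal sketch. The article does not say which statistical test the investigators used to account for sampling error, so the one-sided Poisson test, the 0.05 threshold, and the function names below are assumptions chosen purely for illustration, not the study's method.

```python
import math


def poisson_cdf(k: int, lam: float) -> float:
    """Probability of observing k or fewer events when lam are expected."""
    term = math.exp(-lam)  # P(X = 0)
    total = term
    for i in range(1, k + 1):
        term *= lam / i  # P(X = i) from P(X = i - 1)
        total += term
    return total


def flags_underdiagnosis(observed: int, expected: float, alpha: float = 0.05) -> bool:
    """Hypothetical rule: flag a clinician only if the observed count is
    below the expected count by more than chance alone would explain."""
    return observed < expected and poisson_cdf(observed, expected) < alpha


# Illustrative numbers only, not taken from the study.
print(flags_underdiagnosis(observed=2, expected=10.0))  # far below expectation
print(flags_underdiagnosis(observed=8, expected=10.0))  # within sampling noise
```

Under this assumed rule, a clinician with a small shortfall is not flagged, which reflects the idea of identifying underdiagnosis only after sampling error is accounted for.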

‘Heavy-Handed Interference’

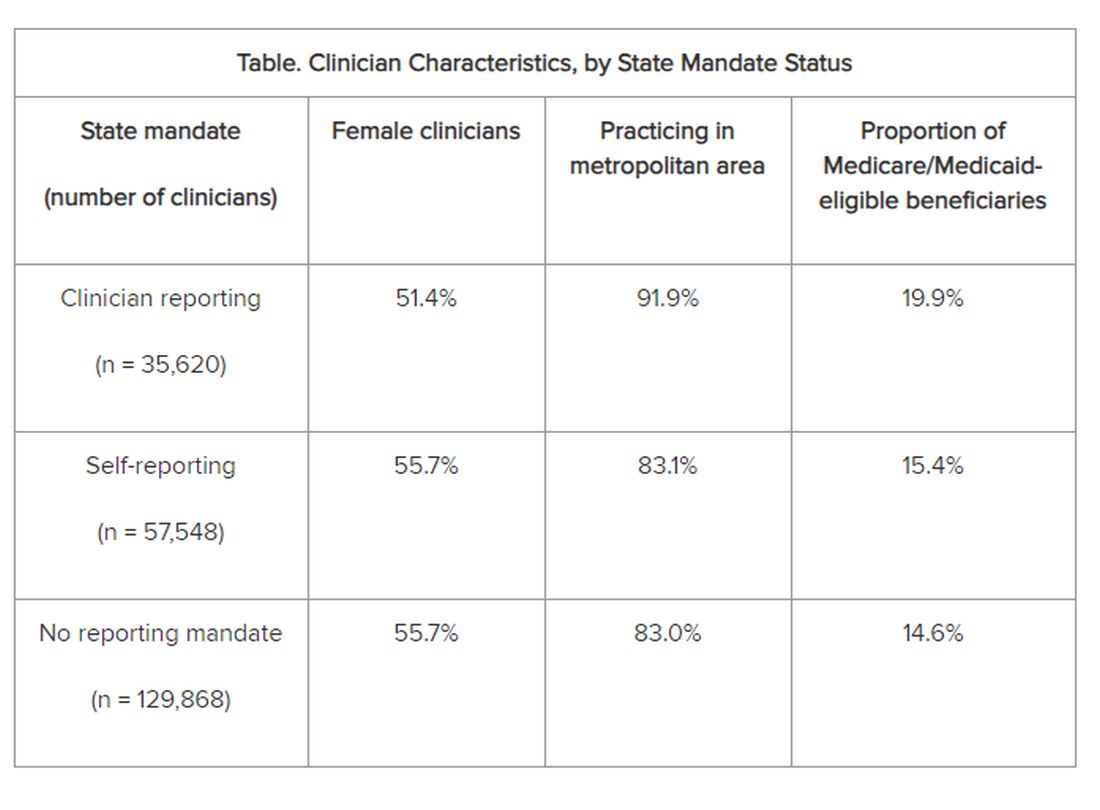

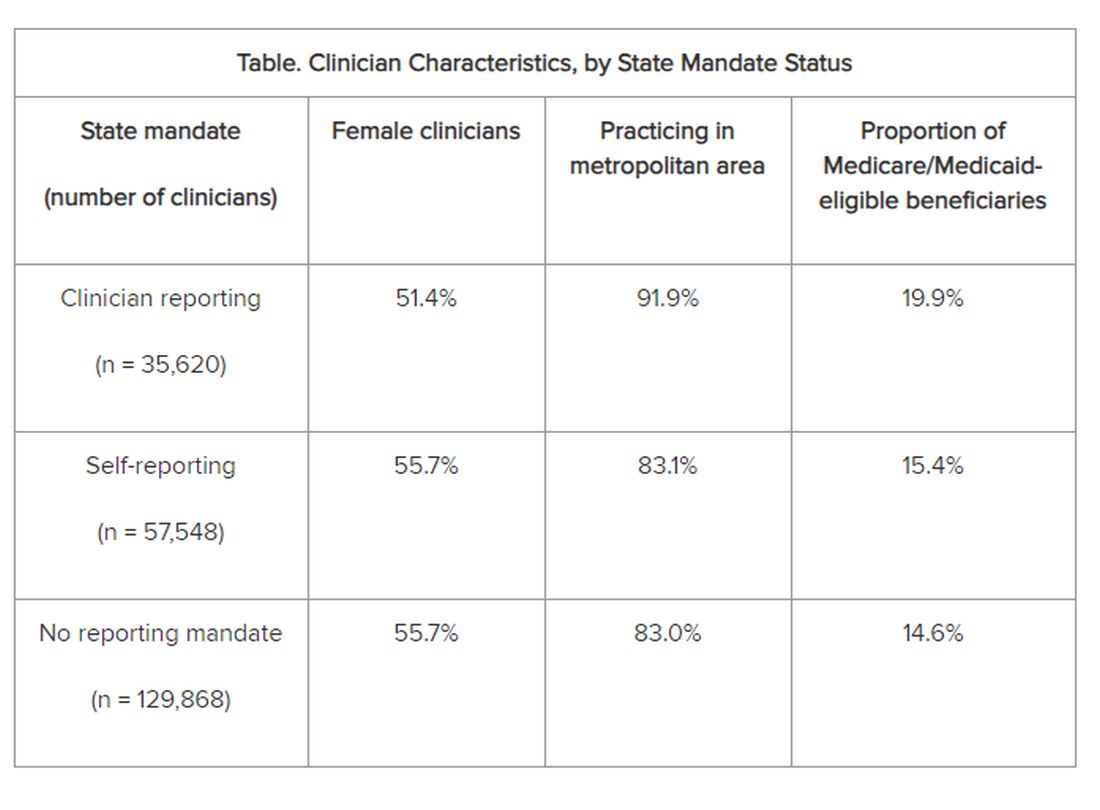

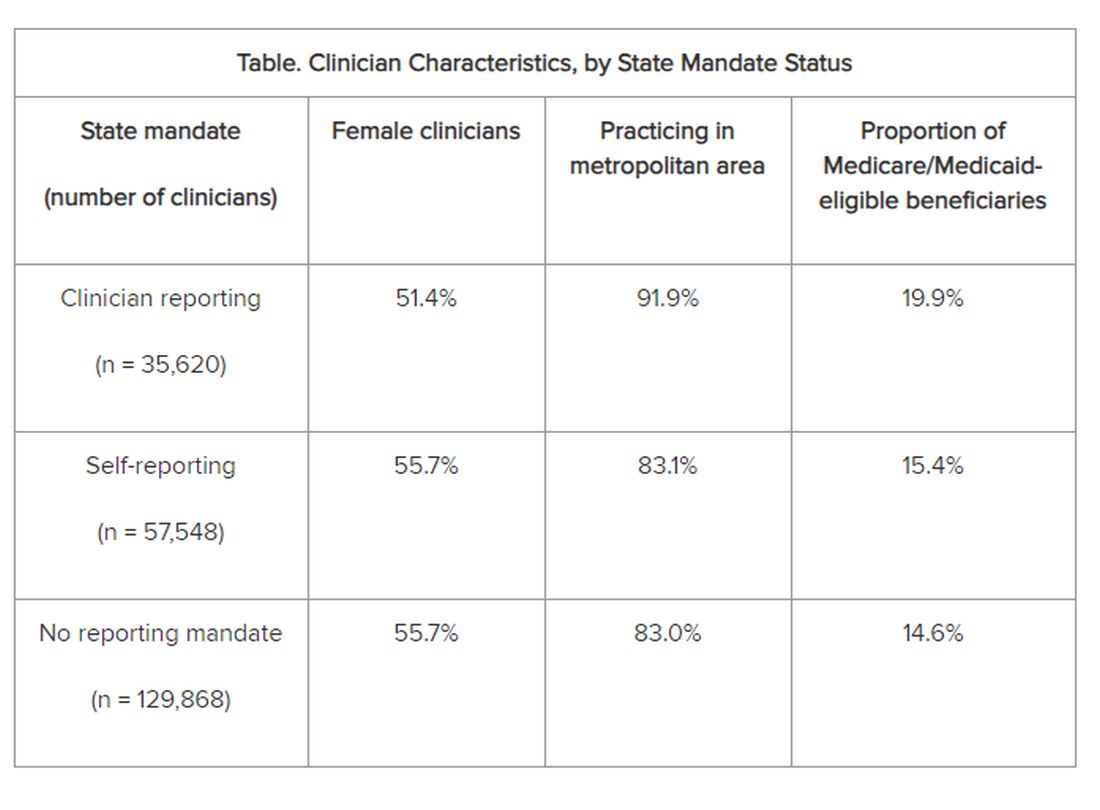

The researchers adjusted for several covariates potentially associated with a clinician’s probability of underdiagnosing dementia. These included sex, office location, practice specialty, racial/ethnic composition of the patient panel, and percentage of patients dually eligible for Medicare and Medicaid. The table shows PCP characteristics.

Adjusted results showed that PCPs practicing in states with clinician reporting mandates had a 12.4% (95% confidence interval [CI], 10.5%-14.2%) probability of underdiagnosing dementia versus 7.8% (95% CI, 6.9%-8.7%) in states with self-reporting and 7.7% (95% CI, 6.9%-8.4%) in states with no mandates, translating into a 4–percentage point difference (P < .001).
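The absolute and relative differences implied by these adjusted probabilities can be checked with a few lines of arithmetic. This is only a sketch using the three point estimates quoted above; the variable names are illustrative, and the assumption that the 59% figure is the ratio of 12.4% to 7.8% is an inference from the numbers rather than something the article states.

```python
def relative_increase(rate: float, reference: float) -> float:
    """Percentage by which `rate` exceeds `reference`."""
    return (rate / reference - 1.0) * 100.0


# Adjusted probabilities of underdiagnosing dementia, in percent.
clinician_mandate = 12.4
self_report = 7.8
no_mandate = 7.7

# Absolute differences in percentage points.
abs_vs_self_report = round(clinician_mandate - self_report, 1)
abs_vs_no_mandate = round(clinician_mandate - no_mandate, 1)

# Relative differences in percent.
rel_vs_self_report = round(relative_increase(clinician_mandate, self_report))
rel_vs_no_mandate = round(relative_increase(clinician_mandate, no_mandate))

print(abs_vs_self_report, rel_vs_self_report)
print(abs_vs_no_mandate, rel_vs_no_mandate)
```

The ratio against the self-reporting states works out to roughly a 59% relative increase, which matches the headline figure, while the absolute gap is between 4 and 5 percentage points.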

“Our study is the first to provide empirical evidence for the potential adverse effects of reporting policies,” the researchers noted. “Although we found that some clinicians underdiagnosed dementia regardless of state mandates, the key finding of this study reveals that primary care clinicians who practice in states with clinician reporting mandates were 59% more likely to do so…compared with those states with no reporting requirements…or driver self-reporting requirements.”

The investigators suggested that one potential explanation for underdiagnosis is patient resistance to cognitive testing. If patients were aware that the clinician was obligated by law to report their dementia diagnosis to the DMV, “they might be more inclined to conceal their symptoms or refuse further assessments, in addition to the general stigma and resistance to a formal assessment after a positive dementia screening result.”

“The findings suggest that policymakers might want to rethink those physician reporting mandates, since we also could not find conclusive evidence that they improve road safety,” Dr. Mattke said. “Maybe patients and their physicians can arrive at a sensible approach to determine driving fitness without such heavy-handed interference.”

However, he cautioned that the findings are not definitive and that further study is needed before firm recommendations can be made either for or against mandatory reporting.

In addition, the researchers noted several study limitations. One is that dementia underdiagnosis may also be associated with factors not captured in their model, including physician-patient relationships, health literacy, or language barriers.

However, Dr. Mattke noted, “my sense is that those unobservable factors are not systematically related to state reporting policies and having omitted them would therefore not bias our results.”

Experts Weigh In

Commenting on the research, Morgan Daven, MA, the Alzheimer’s Association vice president of health systems, said that dementia is widely and significantly underdiagnosed, and not only in the states with dementia reporting mandates. Many factors may contribute to underdiagnosis, and although the study shows an association between reporting mandates and underdiagnosis, it does not demonstrate causation.

That said, Mr. Daven added, “fear and stigma related to dementia may inhibit the clinician, the patient, and their family from pursuing detection and diagnosis for dementia. As a society, we need to address dementia fear and stigma for all parties.”

He noted that useful tools include healthcare policies, workforce training, public awareness and education, and public policies to mitigate fear and stigma and their negative effects on diagnosis, care, support, and communication.

A potential study limitation is that it relied only on diagnoses by PCPs. Mr. Daven noted that the diagnosis of Alzheimer’s disease — the most common cause of dementia — is confirmation of amyloid buildup via a biomarker test, using PET or cerebrospinal fluid analysis.

“Both of these tests are extremely limited in their use and accessibility in a primary care setting. Inclusion of diagnoses by dementia specialists would provide a more complete picture,” he said.

Mr. Daven added that the Alzheimer’s Association encourages families to proactively discuss driving and other disease-related safety concerns as soon as possible. The Alzheimer’s Association Dementia and Driving webpage offers tips and strategies to discuss driving concerns with a family member.

In an accompanying editorial, Donald Redelmeier, MD, MS(HSR), and Vidhi Bhatt, BSc, both of the Department of Medicine, University of Toronto, differentiate the mandate for physicians to warn patients with dementia about traffic safety from the mandate for reporting child maltreatment, gunshot victims, or communicable diseases. They noted that mandated warnings “are not easy, can engender patient dissatisfaction, and need to be handled with tact.”

Yet, they pointed out, “breaking bad news is what practicing medicine entails.” They emphasized that, regardless of government mandates, “counseling patients for more road safety is an essential skill for clinicians in diverse states who hope to help their patients avoid becoming more traffic statistics.”

Research reported in this publication was supported by Genentech, a member of the Roche Group, and a grant from the National Institute on Aging of the National Institutes of Health. Dr. Mattke reported receiving grants from Genentech for a research contract with USC during the conduct of the study; personal fees from Eisai, Biogen, C2N, Novo Nordisk, Novartis, and Roche Genentech; and serving on the Senscio Systems board of directors, ALZpath scientific advisory board, AiCure scientific advisory board, and Boston Millennia Partners scientific advisory board outside the submitted work. The other authors’ disclosures are listed on the original paper. The editorial was supported by the Canada Research Chair in Medical Decision Sciences, the Canadian Institutes of Health Research, Kimel-Schatzky Traumatic Brain Injury Research Fund, and the Graduate Diploma Program in Health Research at the University of Toronto. The editorial authors report no other relevant financial relationships.

A version of this article appeared on Medscape.com.

, new research suggests.

Investigators found that primary care physicians (PCPs) in states with clinician reporting mandates had a 59% higher probability of underdiagnosing dementia compared with their counterparts in states that require patients to self-report or that have no reporting mandates.

“Our findings in this cross-sectional study raise concerns about potential adverse effects of mandatory clinician reporting for dementia diagnosis and underscore the need for careful consideration of the effect of such policies,” wrote the investigators, led by Soeren Mattke, MD, DSc, director of the USC Brain Health Observatory and research professor of economics at the University of Southern California, Los Angeles.

The study was published online in JAMA Network Open.

Lack of Guidance

As the US population ages, the number of older drivers is increasing, with 55.8 million drivers 65 years old or older. Approximately 7 million people in this age group have dementia — an estimate that is expected to increase to nearly 12 million by 2040.

The aging population raises a “critical policy question” about how to ensure road safety. Although the American Medical Association’s Code of Ethics outlines a physician’s obligation to identify drivers with medical impairments that impede safe driving, guidance restricting cognitively impaired drivers from driving is lacking.

In addition, evidence as to whether cognitive impairment indeed poses a threat to driving safety is mixed and has led to a lack of uniform policies with respect to reporting dementia.

Four states explicitly require clinicians to report dementia diagnoses to the DMV, which will then determine the patient’s fitness to drive, whereas 14 states require people with dementia to self-report. The remaining states have no explicit reporting requirements.

The issue of mandatory reporting is controversial, the researchers noted. On the one hand, physicians could protect patients and others by reporting potentially unsafe drivers.

On the other hand, evidence that mandatory reporting is associated with lower accident risk in patients with dementia is sparse, and such reporting may adversely affect physician-patient relationships. Empirical evidence for unintended consequences of reporting laws is lacking.

To examine the potential link between dementia underdiagnosis and mandatory reporting policies, the investigators analyzed 100% of the data from the Medicare fee-for-service program and Medicare Advantage plans from 2017 to 2019, which included 223,036 PCPs with a panel of 25 or more Medicare patients.

The researchers examined dementia diagnosis rates in the patient panel of PCPs, rather than neurologists or gerontologists, regardless of who documented the diagnosis. Dr. Mattke said that it is possible that the diagnosis was established after referral to a specialist.

Each physician’s expected number of dementia cases was estimated using a predictive model based on patient characteristics. The researchers then compared the estimate with observed dementia diagnoses, thereby identifying clinicians who underdiagnosed dementia after sampling errors were accounted for.
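The comparison of expected and observed cases can be illustrated with a minimal sketch. The article does not describe the statistical test the investigators used, so the Poisson model, the 0.05 threshold, and the function names below are assumptions made purely for illustration, not the study's method.

```python
import math


def poisson_cdf(k, mu):
    """Probability of seeing k or fewer cases when mu cases are expected.

    Assumes (for illustration only) that case counts follow a Poisson
    distribution; the study's actual model is not given in the article.
    """
    term = math.exp(-mu)  # P(X = 0)
    total = term
    for i in range(1, k + 1):
        term *= mu / i    # P(X = i) from P(X = i - 1)
        total += term
    return total


def is_underdiagnosing(observed, expected, alpha=0.05):
    """Flag a clinician whose observed count is too far below the expected
    count to be plausibly explained by sampling error alone.

    alpha is a hypothetical cutoff chosen for this sketch.
    """
    return poisson_cdf(observed, expected) < alpha


# Hypothetical panels: 10 expected cases in each.
print(is_underdiagnosing(observed=2, expected=10))
print(is_underdiagnosing(observed=8, expected=10))
```

Under these assumptions, a panel with far fewer diagnoses than predicted is flagged, whereas a modest shortfall is treated as within sampling error.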

‘Heavy-Handed Interference’

The researchers adjusted for several covariates potentially associated with a clinician’s probability of underdiagnosing dementia. These included sex, office location, practice specialty, racial/ethnic composition of the patient panel, and percentage of patients dually eligible for Medicare and Medicaid. The table shows PCP characteristics.

Adjusted results showed that PCPs practicing in states with clinician reporting mandates had a 12.4% (95% confidence interval [CI], 10.5%-14.2%) probability of underdiagnosing dementia versus 7.8% (95% CI, 6.9%-8.7%) in states with self-reporting and 7.7% (95% CI, 6.9%-8.4%) in states with no mandates, translating into a 4–percentage point difference (P < .001).

“Our study is the first to provide empirical evidence for the potential adverse effects of reporting policies,” the researchers noted. “Although we found that some clinicians underdiagnosed dementia regardless of state mandates, the key finding of this study reveals that primary care clinicians who practice in states with clinician reporting mandates were 59% more likely to do so…compared with those states with no reporting requirements…or driver self-reporting requirements.”
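The two ways of expressing the gap, in percentage points and as a relative increase, can be checked with a short calculation. The sketch below uses only the adjusted probabilities quoted above; the function names are illustrative, and the choice of the self-reporting states as the comparator for the 59% figure is an assumption, since the article does not say which baseline was used.

```python
# Adjusted probabilities of underdiagnosis quoted in the article (percent).
CLINICIAN_MANDATE = 12.4
SELF_REPORT = 7.8
NO_MANDATE = 7.7


def absolute_difference(a, b):
    """Difference in percentage points."""
    return a - b


def relative_increase(a, b):
    """How much higher a is than b, as a percentage of b."""
    return (a - b) / b * 100


for label, baseline in [("self-report", SELF_REPORT), ("no mandate", NO_MANDATE)]:
    points = absolute_difference(CLINICIAN_MANDATE, baseline)
    relative = relative_increase(CLINICIAN_MANDATE, baseline)
    print(f"vs {label}: {points:.1f} percentage points, {relative:.0f}% higher")
```

The distinction matters for interpretation: a relative increase of more than half corresponds to an absolute gap of fewer than 5 percentage points.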

The investigators suggested that one potential explanation for underdiagnosis is patient resistance to cognitive testing. If patients were aware that the clinician was obligated by law to report their dementia diagnosis to the DMV, “they might be more inclined to conceal their symptoms or refuse further assessments, in addition to the general stigma and resistance to a formal assessment after a positive dementia screening result.”

“The findings suggest that policymakers might want to rethink those physician reporting mandates, since we also could not find conclusive evidence that they improve road safety,” Dr. Mattke said. “Maybe patients and their physicians can arrive at a sensible approach to determine driving fitness without such heavy-handed interference.”

However, he cautioned that the findings are not definitive and that further study is needed before firm recommendations can be made either for or against mandatory reporting.

In addition, the researchers noted several study limitations. One is that dementia underdiagnosis may also be associated with factors not captured in their model, including physician-patient relationships, health literacy, or language barriers.

However, Dr. Mattke noted, “my sense is that those unobservable factors are not systematically related to state reporting policies and having omitted them would therefore not bias our results.”

Experts Weigh In

Commenting on the research, Morgan Daven, MA, the Alzheimer’s Association vice president of health systems, said that dementia is widely and significantly underdiagnosed, and not only in the states with dementia reporting mandates. Many factors may contribute to underdiagnosis, and although the study shows an association between reporting mandates and underdiagnosis, it does not demonstrate causation.

That said, Mr. Daven added, “fear and stigma related to dementia may inhibit the clinician, the patient, and their family from pursuing detection and diagnosis for dementia. As a society, we need to address dementia fear and stigma for all parties.”

He noted that useful tools include healthcare policies, workforce training, public awareness and education, and public policies to mitigate fear and stigma and their negative effects on diagnosis, care, support, and communication.

A potential study limitation is that it relied only on diagnoses by PCPs. Mr. Daven noted that the diagnosis of Alzheimer’s disease, the most common cause of dementia, rests on confirmation of amyloid buildup via a biomarker test, using PET or cerebrospinal fluid analysis.

“Both of these tests are extremely limited in their use and accessibility in a primary care setting. Inclusion of diagnoses by dementia specialists would provide a more complete picture,” he said.

Mr. Daven added that the Alzheimer’s Association encourages families to proactively discuss driving and other disease-related safety concerns as soon as possible. The Alzheimer’s Association Dementia and Driving webpage offers tips and strategies to discuss driving concerns with a family member.

In an accompanying editorial, Donald Redelmeier, MD, MS(HSR), and Vidhi Bhatt, BSc, both of the Department of Medicine, University of Toronto, differentiate the mandate for physicians to warn patients with dementia about traffic safety from the mandate for reporting child maltreatment, gunshot victims, or communicable diseases. They noted that mandated warnings “are not easy, can engender patient dissatisfaction, and need to be handled with tact.”

Yet, they pointed out, “breaking bad news is what practicing medicine entails.” They emphasized that, regardless of government mandates, “counseling patients for more road safety is an essential skill for clinicians in diverse states who hope to help their patients avoid becoming more traffic statistics.”

Research reported in this publication was supported by Genentech, a member of the Roche Group, and a grant from the National Institute on Aging of the National Institutes of Health. Dr. Mattke reported receiving grants from Genentech for a research contract with USC during the conduct of the study; personal fees from Eisai, Biogen, C2N, Novo Nordisk, Novartis, and Roche Genentech; and serving on the Senscio Systems board of directors, ALZpath scientific advisory board, AiCure scientific advisory board, and Boston Millennia Partners scientific advisory board outside the submitted work. The other authors’ disclosures are listed on the original paper. The editorial was supported by the Canada Research Chair in Medical Decision Sciences, the Canadian Institutes of Health Research, Kimel-Schatzky Traumatic Brain Injury Research Fund, and the Graduate Diploma Program in Health Research at the University of Toronto. The editorial authors report no other relevant financial relationships.

A version of this article appeared on Medscape.com.
