Understanding Psychopathology of PNES

Patients who experience altered responsiveness during psychogenic nonepileptic seizures (PNES) may have an underlying psychological vulnerability, suggests a recent analysis of patients with video-EEG-confirmed PNES.

- Among 77 patients with confirmed PNES, 47 (61%) were found to have altered responsiveness.

- This group was more likely to display experiential avoidance, the tendency to avoid thoughts, feelings, memories, and related internal experiences.

- A review of patients’ demographics, clinical histories, and questionnaire responses also found that patients with altered responsiveness during PNES had more affect intolerance, that is, greater difficulty tolerating emotions.

- The same group was also more likely to report a family history of seizures, headaches, and loss of consciousness during traumatic brain injury.

- Researchers suggested that these patients may benefit from a treatment plan that concentrates on emotion management.

Baslet G, Tolchin B, Dworetzky BA. Altered responsiveness in psychogenic nonepileptic seizures and its implication to underlying psychopathology. Seizure. 2017;52:162-168.

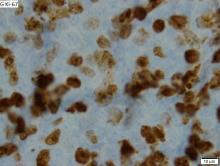

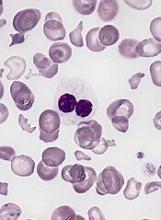

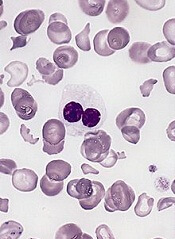

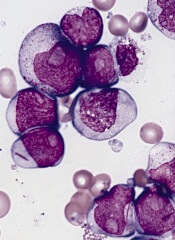

Rituximab key to survival after transplant for mantle cell lymphoma

The study, which reviews outcomes for patients with mantle cell lymphoma (MCL) who underwent autologous stem cell transplantation (ASCT) across a variety of treatment patterns at City of Hope National Medical Center, Duarte, Calif., between January 1997 and November 2013, suggests a “large benefit” of adding rituximab, wrote Matthew G. Mei, MD, of the center’s department of hematology and hematopoietic cell transplantation, and his colleagues. Further, maintenance rituximab was associated with improved survival outcomes in patients with positron emission tomography (PET)-negative status at first complete remission.

The benefit of rituximab “stands out, and adds to the increasing body of evidence supporting this practice for all MCL patients after ASCT, regardless of age and frontline induction regimens,” wrote Dr. Mei and his colleagues (Biol Blood Marrow Transplant 2017 November. doi: 10.1016/j.bbmt.2017.07.006). The benefit held even as early diagnosis and supportive care improved and novel agents such as bortezomib, lenalidomide, and ibrutinib were incorporated, they wrote, noting significantly better outcomes for patients who underwent ASCT after 2007.

In multivariate analysis, maintenance rituximab therapy after ASCT was the single most important factor associated with improvement in progression-free survival (relative risk [RR], .25; 95% confidence interval, .14-.44) and overall survival (RR, .17; 95% CI, .07-.38).

Positron emission tomography scans were done prior to ASCT for 133 patients; 105 (79%) were found to be in PET-negative complete remission at the time of ASCT. All but one of the patients with PET-negative disease received rituximab before ASCT. For that PET-negative subset, maintenance rituximab was significantly associated with improvements in progression-free survival (RR, .20; 95% CI, .09-.43) and overall survival (RR, .17; 95% CI, .05-.59).

This study represents one of the largest single-center reports to date on MCL patients who have undergone ASCT, according to the authors. “This study also sets the stage for prospective investigation aiming at optimization of maintenance therapy following ASCT.”

Dr. Mei reported no disclosures, and senior author Lihua E. Budde, MD, PhD, reported being a member of the Lymphoma Research Foundation MCL consortium. The study was supported by research funding from the National Cancer Institute.

This study confirms the value of maintenance rituximab for a large cohort of patients with mantle cell lymphoma who have undergone high-dose chemotherapy and autologous stem cell transplantation outside of clinical trials.

The findings also affirm results of a recent phase 3 randomized trial (LyMa) suggesting that in previously untreated MCL patients who have undergone ASCT, rituximab maintenance is superior to observation in improving overall survival and progression-free survival.

However, the most interesting aspect of this study is the positron emission tomography data. Namely, the benefit of rituximab maintenance was apparent in patients regardless of whether they were in a PET-positive or PET-negative first complete remission at ASCT. “This important finding implies that the benefit of rituximab maintenance after ASCT is present for low- and high-risk MCL patients.”

Despite these confirmatory findings, the treatment landscape for MCL has changed significantly in recent years, particularly with the introduction of treatments such as ibrutinib.

In a clinical trial currently underway, the European Mantle Cell Lymphoma Network is evaluating ibrutinib as an upfront treatment for young and fit patients. Specifically, the study compares first-line ASCT with rituximab maintenance, ASCT with ibrutinib maintenance, and a transplant-free approach with ibrutinib and chemotherapy.

Unless and until the data from this study “redefine the value of ASCT in the ibrutinib era, ASCT and rituximab maintenance should be recommended as the standard treatment after ASCT for transplant-eligible patients with MCL.”

Tobias Roider, MD, and Sascha Dietrich, MD, are with the Department of Medicine V, University of Heidelberg, Germany. Their comments are in an editorial (Biol Blood Marrow Transplant 2017 November. doi: 10.1016/j.bbmt.2017.09.008). The authors reported no financial disclosures or conflicts of interest.

FROM BIOLOGY OF BLOOD AND MARROW TRANSPLANTATION

Key clinical point: Across many treatment patterns over time, rituximab maintenance therapy stood out as the most prominent factor influencing survival in patients with mantle cell lymphoma who undergo autologous stem cell transplant.

Major finding: Maintenance rituximab was significantly associated with superior progression-free survival (relative risk, .25; 95% confidence interval, .14-.44) and overall survival (RR, .17; 95% CI, .07-.38).

Data source: Retrospective analysis of data for 191 patients with MCL who underwent ASCT at a medical center in California between January 1997 and November 2013.

Disclosures: The study was supported by research funding from the National Cancer Institute. Senior author Lihua E. Budde, MD, PhD, reported being a member of the Lymphoma Research Foundation MCL consortium.

Summary of guidelines for DMARDs for elective surgery

Clinical question: What is the best perioperative management of disease-modifying antirheumatic drugs (DMARDs) in patients with rheumatoid arthritis (RA), ankylosing spondylitis, psoriatic arthritis, juvenile idiopathic arthritis, or systemic lupus erythematosus (SLE) undergoing elective total knee arthroplasty (TKA) or total hip arthroplasty (THA)?

Background: Limited data are available to weigh the risk of flare when DMARDs are stopped against the risk of infection when they are continued perioperatively for elective TKA or THA, procedures this patient population frequently requires.

Study design: Multistep systematic literature review.

Setting: Collaboration between the American College of Rheumatology and the American Association of Hip and Knee Surgeons.

Synopsis: Based on the literature review and a required 80% agreement by the panel, seven recommendations were developed. Continue methotrexate, leflunomide, hydroxychloroquine, and sulfasalazine. Hold biologic agents, scheduling surgery at the end of the dosing cycle, and restart them once the wound is healed, sutures/staples are removed, and there are no signs of infection (about 14 days). Hold tofacitinib for 1 week in all conditions except SLE. In severe SLE, continue mycophenolate mofetil, azathioprine, cyclosporine, or tacrolimus; in nonsevere SLE, hold these agents for 1 week. For patients taking less than 20 mg/day of glucocorticoids, continue the current dose rather than giving stress-dose steroids.

Limitations include the small number of studies conducted in the perioperative period, existing data based on lower dosages, and uncertainty about whether the results can be extrapolated to surgical procedures other than TKA and THA. Further studies are also needed on glucocorticoid management and biologic agents.

Bottom line: Perioperative management of DMARDs is complex and understudied, but this guideline provides an evidence-based approach for patients undergoing TKA or THA.

Citation: Goodman SM, Springer B, Gordon G, et al. 2017 American College of Rheumatology/American Association of Hip and Knee Surgeons Guideline for the Perioperative Management of Antirheumatic Medication in Patients With Rheumatic Diseases Undergoing Elective Total Hip or Total Knee Arthroplasty. Arthritis Care Res. 2017 Aug;69(8):1111-24.

Dr. Kochar is a hospitalist and assistant professor of medicine, Icahn School of Medicine of the Mount Sinai Health System.

VIDEO: Outpatient hysterectomies offer advantages for surgeons, patients

NATIONAL HARBOR, MD. – Moving hysterectomy and advanced gynecologic procedures to the ambulatory surgical environment is better for patients, surgeons, and the health care system, Richard B. Rosenfield, MD, who is in private practice in Portland, Ore., said at the AAGL Global Congress.

“We’ve been basically proving this model over the last decade by performing advanced laparoscopic surgery in the outpatient environment, and we do this for a number of reasons,” Dr. Rosenfield said in an interview. “The patients get to go home the same day, which they typically enjoy, we avoid the hospital-acquired infections, which is great, and in addition to that, the physicians tend to really appreciate the efficiency of an outpatient center.”

But with the focus on value-based payment under federal health programs, there should also be a greater effort to steer more procedures to high-volume surgeons, he said. The idea is to reduce the hospital readmissions, complications, and infections that can follow procedures performed by less experienced surgeons, and to redirect the cost savings toward payments for surgeons with better outcomes, Dr. Rosenfield said. But this should be coupled with training and mentoring for lower-volume surgeons, he said.

Dr. Rosenfield reported having no relevant financial disclosures.

On Twitter @eaztweets

AT AAGL 2017

Is It Safe to Manage Thyroid Cancer by Surveillance?

Research groups have suggested that small papillary thyroid cancers (PTCs) (< 2 cm) have been overdiagnosed and overtreated. That raises the question of how to manage patients with small PTCs and with the even smaller (< 1 cm) papillary microcarcinomas (PMCs).

In 1993, physicians at Kuma Hospital, Kobe, Japan, began an active surveillance trial for patients with low-risk PMCs without worrisome features. They observed that only a minority of patients showed disease progression, and those patients were successfully treated with rescue surgery. The Cancer Institute Hospital in Tokyo reported similarly promising data from a trial it began 2 years later. The 2015 American Thyroid Association guidelines now acknowledge that active surveillance can be an alternative to immediate surgery in patients with low-risk PMCs.

At Kuma Hospital, the researchers noted that over time disease progression differed according to patient age. Using data on 1,211 patients in their surveillance program from 1993 to 2011, they estimated the lifetime probabilities of disease progression during active surveillance.

The estimated trend curves of disease progression “varied markedly,” the researchers found, depending on the patient’s age at presentation. Patients in their 20s and 30s had a steep increase for the first 10 to 20 years, with a gradual increase thereafter. Patients in their 40s or older showed a milder increase. The estimated lifetime probability of disease progression fell with each decade of age at presentation.

The researchers propose that surveillance continue for the patient’s lifetime because tumors progress in a “small but noteworthy” percentage of patients. Patients with low-risk PMCs who are treated surgically at presentation should also be followed after surgery: two-thirds of the patients who underwent immediate surgery needed L-thyroxine and will most likely need it for life, the researchers note. However, PMCs that progress after 10 years of active surveillance would be expected to progress only very mildly, the researchers conclude, and the outcome of surgery for them should be excellent.

Source:

Miyauchi A, Kudo T, Ito Y, et al. Surgery. 2017. http://dx.doi.org/10.1016/j.surg.2017.03.028. In press.

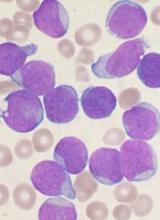

Rigosertib produces better OS in MDS than tAML

Rigosertib has demonstrated activity and tolerability in patients with myelodysplastic syndromes (MDS) and acute myeloid leukemia transformed from MDS (tAML), according to researchers.

In a phase 1/2 study, rigosertib produced responses in about a quarter of MDS/tAML patients, and another quarter had stable disease.

Overall survival (OS) was about a year longer for responders than for non-responders.

MDS patients were more likely than tAML patients to respond to rigosertib, and they had longer OS.

Overall, rigosertib was considered well-tolerated. There were no treatment-related deaths, though 18% of patients experienced treatment-related serious adverse events (AEs).

Lewis Silverman, MD, of Icahn School of Medicine at Mount Sinai in New York, New York, and his colleagues described these results in Leukemia Research.

The study was sponsored by Onconova Therapeutics, Inc., the company developing rigosertib.

Rigosertib is an inhibitor of Ras-effector pathways that interacts with the Ras binding domains common to several signaling proteins, including Raf and PI3 kinase.

Dr Silverman and his colleagues tested intravenous rigosertib in a dose-escalation, phase 1/2 study of 22 patients. Patients had tAML (n=13), high-risk MDS (n=6), intermediate-2-risk MDS (n=2), or chronic myelomonocytic leukemia (n=1).

All patients had relapsed or were refractory to standard therapy and had no approved second-line treatment options. The patients’ median age was 78 (range, 59-84), and 90% were male.

Patients received 3- to 7-day continuous infusions of rigosertib at doses ranging from 650 mg/m2/day to 1700 mg/m2/day in 14-day cycles.

The mean number of treatment cycles was 5.6 ± 5.8 (range, 1-23). The maximum tolerated dose of rigosertib was 1700 mg/m2/day, and the recommended phase 2 dose was 1375 mg/m2/day.

Safety

All patients had at least 1 AE. The most common AEs of any grade were fatigue (n=16, 73%), diarrhea (n=12, 55%), pyrexia (n=12, 55%), dyspnea (n=11, 50%), insomnia (n=11, 50%), anemia (n=10, 45%), constipation (n=9, 41%), nausea (n=9, 41%), cough (n=9, 41%), and decreased appetite (n=9, 41%).

The most common grade 3 or higher AEs were anemia (n=9, 41%), thrombocytopenia (n=5, 23%), pneumonia (n=5, 23%), hypoglycemia (n=4, 18%), hyponatremia (n=4, 18%), and hypophosphatemia (n=4, 18%).

Four patients (18%) had treatment-related serious AEs. These included hematuria and pollakiuria (n=1), dysuria and pollakiuria (n=1), asthenia (n=1), and dyspnea (n=1). Thirteen patients (59%) discontinued treatment because of AEs.

Ten patients, who remained on study from 1 to 19 months, died within 30 days of stopping rigosertib. There were no treatment-related deaths.

Efficacy

Nineteen patients were evaluable for efficacy.

Five patients responded to treatment. Four patients with MDS had a marrow complete response, and 1 with tAML had a marrow partial response. Two of the patients with marrow complete response also had hematologic improvements.

Five patients had stable disease, 3 with MDS and 2 with tAML.

The median OS was 15.7 months for responders and 2.0 months for non-responders (P=0.0070). The median OS was 12.0 months for MDS patients and 2.0 months for tAML patients (P<0.0001).
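The percentages in this report are simple proportions: adverse-event rates use all 22 treated patients as the denominator, while response and stable-disease rates use the 19 patients evaluable for efficacy. A minimal Python sketch that recomputes a few of them from the reported counts (the helper name `percent` is illustrative):

```python
# Reported counts from the phase 1/2 rigosertib study
TREATED = 22
EVALUABLE = 19

counts_treated = {
    "fatigue (any grade)": 16,
    "thrombocytopenia (grade 3 or higher)": 5,
    "treatment-related serious AE": 4,
    "stopped treatment due to AEs": 13,
}
counts_evaluable = {
    "responders": 5,
    "stable disease": 5,
}


def percent(n: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number (halves round up)."""
    return int(100 * n / denominator + 0.5)


for label, n in counts_treated.items():
    print(f"{label}: {n}/{TREATED} = {percent(n, TREATED)}%")
for label, n in counts_evaluable.items():
    print(f"{label}: {n}/{EVALUABLE} = {percent(n, EVALUABLE)}%")
```

Responders and patients with stable disease each come to about 26% of the evaluable group, which is the "quarter" described at the start of the report.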

“The publication of results from this historical study provides support of the relationship between bone marrow blast response and improvement in overall survival in this group of patients with MDS and acute myeloid leukemia for whom no FDA-approved treatments are currently available,” said Ramesh Kumar, president and chief executive officer of Onconova Therapeutics, Inc.

He added that these data are “fundamental to the rationale” of ongoing studies of rigosertib in high-risk MDS patients.

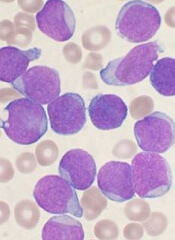

Drug can treat severely ill SCD patients, case suggests

ATLANTA—Results of a case study suggest voxelotor (previously GBT440) can be effective in severely ill patients with sickle cell disease (SCD).

Voxelotor is currently under investigation in the phase 3 HOPE study, which includes SCD patients age 12 and older.

A 67-year-old male SCD patient could not participate in the study due to severe, transfusion-refractory anemia, so he received voxelotor via compassionate access.

This patient’s results were presented at the Sickle Cell Disease Association of America (SCDAA) 45th Annual National Convention.

The patient had the HbSS genotype with severe anemia that was refractory to transfusion. The patient had developed red cell antibodies after receiving multiple transfusions, and these antibodies prevented further transfusions to correct his anemia.

The patient also had moderate chronic obstructive pulmonary disease requiring supplemental oxygen therapy, recurrent and frequent pain exacerbations, extreme fatigue, and clinical depression.

The patient received voxelotor at 900 mg orally once daily. He responded within 1 to 2 weeks, with improvements in pain, fatigue, and overall mental health (as measured by the Patient Health Questionnaire-9 [PHQ-9] score).

The patient’s hemoglobin levels rose quickly, to approximately 1.5 g/dL above baseline, with a sustained increase over 66 weeks in the range of 1 to 1.5 g/dL.

There were reductions in reticulocyte count and bilirubin as well, both consistent with diminished hemolysis.

On the standard walk test, the patient’s blood oxygen saturation improved from 86% at baseline to 96% at 65 weeks, and he discontinued continuous oxygen supplementation.

The patient has not been hospitalized due to sickle cell pain since he started taking voxelotor.

He has experienced one treatment-related side effect, grade 2 diarrhea. It occurred 9 weeks after he started voxelotor, when the dose was increased to 1500 mg daily, and it resolved when the dose was returned to 900 mg. The patient has experienced no other treatment-related side effects.

Clinical and laboratory improvements have continued for more than 17 months, and the patient remains on treatment today under compassionate use access.

“This severely ill SCD patient’s clinical response, assessed by both objective and subjective measures, illustrates why we are encouraged by the voxelotor program,” said Ted W. Love, MD, president and chief executive officer of Global Blood Therapeutics, the company developing voxelotor.

“We plan to present additional data from other severely ill sickle cell patients who have received voxelotor via single-patient compassionate access treatment at FSCDR [the Foundation for Sickle Cell Disease Research] at an upcoming medical meeting. Of course, controlled clinical trials are needed to assess the efficacy and safety of voxelotor in SCD patients, including those with severe anemia.”

FDA approves generic clofarabine

The US Food and Drug Administration (FDA) has approved Dr. Reddy’s Laboratories Ltd.’s Clofarabine Injection, a therapeutically equivalent generic version of Clolar® (clofarabine) Injection.

The generic drug is approved to treat patients aged 1 to 21 years who have relapsed or refractory acute lymphoblastic leukemia and have received at least 2 prior treatment regimens.

Dr. Reddy’s Clofarabine Injection is available in single-dose 20 mL flint vials containing 20 mg of clofarabine in 20 mL of solution (1 mg/mL).

Generic azacitidine approved in Canada

Health Canada has approved Dr. Reddy’s Laboratories Ltd.’s Azacitidine for Injection 100 mg/vial, a bioequivalent generic version of VIDAZA® (azacitidine for injection).

The generic drug is approved for the same indications as VIDAZA®.

This includes treating adults with intermediate-2 or high-risk myelodysplastic syndromes (according to the International Prognostic Scoring System) who are not eligible for hematopoietic stem cell transplant.

It also includes treating adults who have acute myeloid leukemia with 20% to 30% blasts and multi-lineage dysplasia (according to World Health Organization classification) who are not eligible for hematopoietic stem cell transplant.

“The approval and launch of Azacitidine for Injection is an important milestone for Dr. Reddy’s in Canada,” said Vinod Ramachandran, PhD, country manager, Dr. Reddy’s Canada.

“The launch of the first generic azacitidine for injection is another step in our long-term commitment to bring more cost-effective options to Canadian patients.”

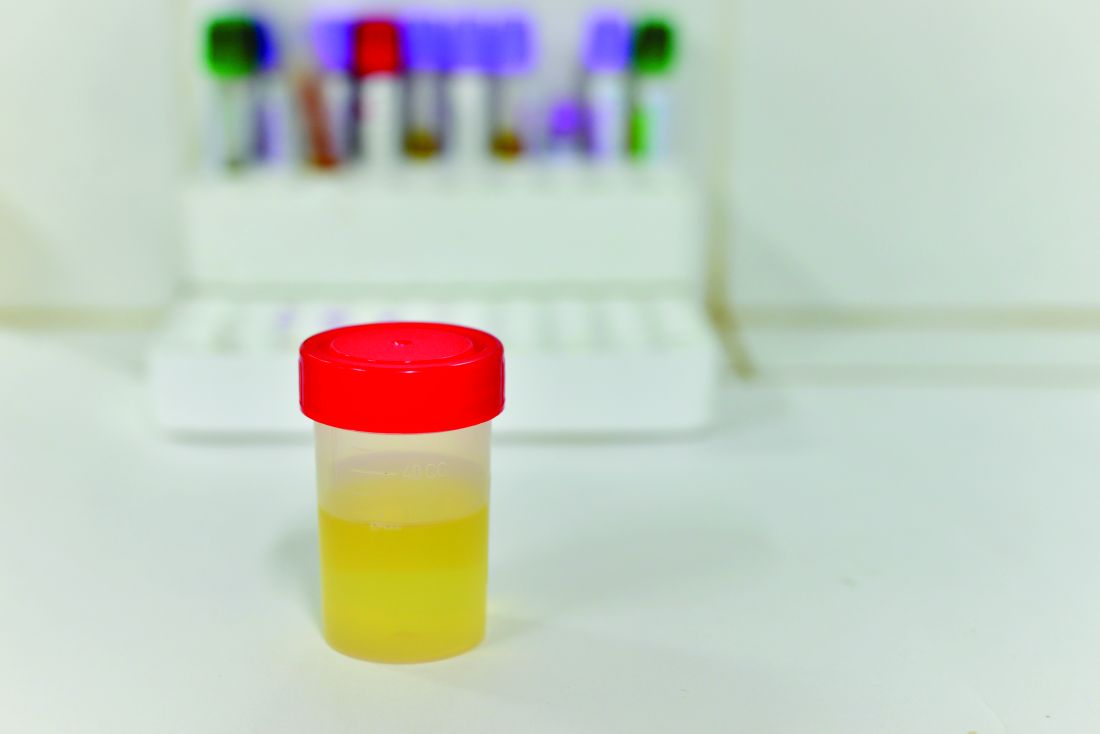

Novel biomarker test appears accurate for UTI diagnosis in febrile children under 2 years

A novel test for the urinary biomarker neutrophil gelatinase–associated lipocalin (uNGAL) has good sensitivity and specificity for identifying urinary tract infection (UTI) in febrile infants and children younger than 2 years, according to Tamar R. Lubell, MD, and her associates at Columbia University, New York.

“NGAL is a protein expressed in neutrophils and several other human tissues, including alpha-intercalated cells in the collecting duct of the kidney,” the researchers explained. “The urine and serum contain very low levels of NGAL protein at steady state, with expression of NGAL rising rapidly in response to cell damage caused by ischemia-reperfusion injury, presence of cytotoxins, and sepsis.”

Of the 260 children whose catheterized urine was analyzed, 14% had UTIs. The median uNGAL concentration was 215.1 ng/mL in the UTI group, compared with 4.4 ng/mL in the culture-negative group. Urinalysis and Gram stain also were performed.

“uNGAL had higher sensitivity than [urinalysis], with similar specificity,” the investigators said. “Gram stain had a somewhat lower sensitivity than uNGAL, but with high specificity.”

The researchers identified a uNGAL cutoff of 39.1 ng/mL. A previous case-control study of 108 infants with UTI reported a cutoff of 38 ng/mL, with a sensitivity of 93% and a specificity of 95%. Most urine samples in that study were obtained by clean catch rather than by catheterization, which was used in the current study.
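Sensitivity and specificity at a given cutoff come from comparing the test with urine culture in a 2×2 table: sensitivity is the share of culture-positive children the test flags, and specificity is the share of culture-negative children it correctly clears. The minimal Python sketch below applies the 39.1 ng/mL cutoff; the sample values are made up for illustration, and counting a value exactly at the cutoff as positive is an assumption.

```python
from typing import Sequence, Tuple

CUTOFF_NG_PER_ML = 39.1  # uNGAL cutoff reported in the study


def sensitivity_specificity(
    ungal: Sequence[float],
    culture_positive: Sequence[bool],
    cutoff: float = CUTOFF_NG_PER_ML,
) -> Tuple[float, float]:
    """Compare a uNGAL cutoff against urine culture (the reference standard)."""
    tp = fn = tn = fp = 0
    for value, has_uti in zip(ungal, culture_positive):
        # Assumption: a value at or above the cutoff counts as a positive test
        test_positive = value >= cutoff
        if has_uti and test_positive:
            tp += 1  # true positive: UTI correctly flagged
        elif has_uti:
            fn += 1  # false negative: UTI missed
        elif test_positive:
            fp += 1  # false positive: no UTI but test flagged
        else:
            tn += 1  # true negative: no UTI and test negative
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical samples in ng/mL (not study data) with culture results
ungal = [215.1, 120.0, 30.0, 4.4, 2.0, 50.0, 8.0, 1.5]
uti = [True, True, True, False, False, False, False, False]
sens, spec = sensitivity_specificity(ungal, uti)
print(f"sensitivity {sens:.2f}, specificity {spec:.2f}")
```

Moving the cutoff trades one measure against the other: a lower cutoff flags more true UTIs but also more culture-negative children.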

“Further studies will need to both confirm our findings and determine the benefit and cost effectiveness of uNGAL testing, compared with [urinalysis],” Dr. Lubell and her associates said.

Read more in Pediatrics (2017 Nov 16. doi: 10.1542/peds.2017-1090).

FROM PEDIATRICS