
Safety Standards a Top Priority for ASLMS President

Arisa E. Ortiz, MD, began her term as president of the American Society for Laser Medicine and Surgery (ASLMS) during the organization’s annual meeting in April 2024.

After earning her medical degree from Albany Medical College, Albany, New York, Dr. Ortiz, a native of Los Angeles, completed her dermatology residency training at the University of California, Irvine, and the university’s Beckman Laser Institute. Next, she completed a laser and cosmetic dermatology fellowship at Massachusetts General Hospital, Harvard Medical School, and the Wellman Center for Photomedicine, all in Boston, and acquired additional fellowship training in Mohs micrographic surgery at the University of California, San Diego (UCSD). Dr. Ortiz is currently director of laser and cosmetic dermatology and a clinical professor of dermatology at UCSD.

She has authored more than 60 publications on innovations in cutaneous surgery and is a frequent speaker at meetings of the American Academy of Dermatology, the American Society for Dermatologic Surgery (ASDS), and ASLMS, and she cochairs the annual Masters of Aesthetics Symposium in San Diego. Dr. Ortiz has received several awards, including the 2024 Castle Connolly Top Doctor Award and the Exceptional Women in Medicine Award; Newsweek America’s Best Dermatologists; the ASLMS Dr. Horace Furumoto Young Investigator Award; the ASLMS Best of Session Award for Cutaneous Applications; and the ASDS President’s Outstanding Service Award. Her primary research focuses on the laser treatment of nonmelanoma skin cancer.

In an interview, Dr. Ortiz spoke about her goals as ASLMS president and other topics related to dermatology.

Who inspired you most to become a doctor?

I’ve wanted to become a doctor for as long as I can remember. My fascination with science and the idea of helping people improve their health were driving forces. However, my biggest influence early on was my uncle, who was a pediatrician. His dedication and passion for medicine deeply inspired me and solidified my desire to pursue a career in healthcare.

I understand that a bout with chickenpox as a teenager influenced your decision to specialize in dermatology.

It’s an interesting and somewhat humorous story. When I was 18, I contracted chickenpox and ended up with scars on my face. It was a tough experience as a teenager, but it’s fascinating how such events can shape your life. In my quest for help, I opened the Yellow Pages and randomly chose a dermatologist nearby, who turned out to be Gary Lask, MD, director of lasers at UCLA [University of California, Los Angeles]. During our visit, I mentioned that I was premed, and he encouraged me to consider dermatology. About 6 years later, as a second-year medical student, I realized my passion for dermatology. I reached out to Dr. Lask and told him: “You were right. I want to be a dermatologist. Now, you have to help me get in!” Today, he remains my mentor, and I am deeply grateful for his guidance and support on this journey.

One of the initiatives for your term as ASLMS president includes a focus on safety standards for lasers and energy-based devices. Why is this important now?

Working at the university, I frequently encounter severe complications arising from the improper use of lasers and energy-based devices. As these procedures gain popularity, more providers are offering them, yet often without adequate training. As the world’s premier laser society, it is our duty to ensure patient safety. In the ever-evolving field of laser medicine, it is crucial that we continually strive to enhance the regulation of laser usage, ensuring that patients receive the highest standard of care with minimal risk.

One of the suggestions you have for the safety initiative is to offer a rigorous laser safety certification course with continuing education opportunities as a way to foster a culture of heightened safety standards. Please explain what would be included in such a course and how it would align with current efforts to report adverse events such as the ASDS-Northwestern University Cutaneous Procedures Adverse Events Reporting (CAPER) registry and the Food and Drug Administration’s MedWatch Program.

A laser safety certification task force has been established to determine the best approach for developing a comprehensive course. The task force aims to assess the necessity of a formal safety certification in our industry, identify the resources needed to support such a certification, establish general safety protocols to form the content foundation, address potential legal concerns, and outline the process for formal certification program recognition. This exploratory work is expected to conclude by the end of the year. The proposed course may include modules on the fundamentals of laser physics, safe operation techniques, patient selection and management, and emergency protocols. Continuing education opportunities would be considered to keep practitioners updated on the latest advancements and safety protocols in laser medicine, thereby fostering a culture of heightened safety standards.

Another initiative for your term is the rollout of a tattoo removal program for former gang members based on the UCSD Clean Slate Tattoo Removal Program. Please tell us more about your vision for this national program.

UCSD Dermatology, in collaboration with UCSD Global Health, has been involved in the Clean Slate Tattoo Removal Program for the past decade. This initiative supports and rehabilitates former gang members by offering laser tattoo removal, helping them reintegrate into society. My vision is to equip our members with the necessary protocols to implement this outreach initiative in their own communities. By providing opportunities for reform and growth, we aim to foster safer and more inclusive communities nationwide.

You were one of the first clinicians to use a laser to treat basal cell carcinoma (BCC). Who are the ideal candidates for this procedure? Is the technique ready for wide clinical adoption? If not, what kind of studies are needed to make it so?

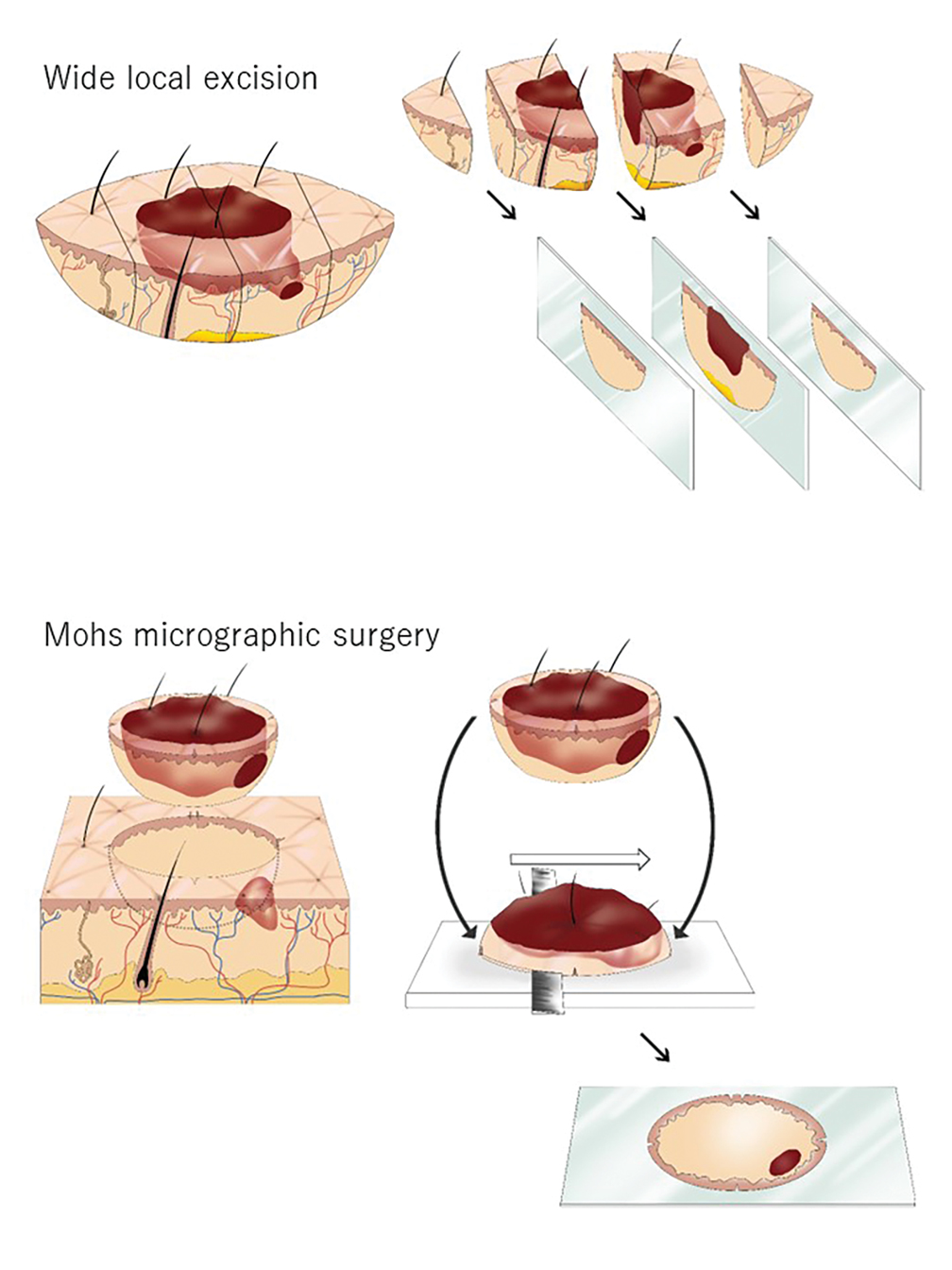

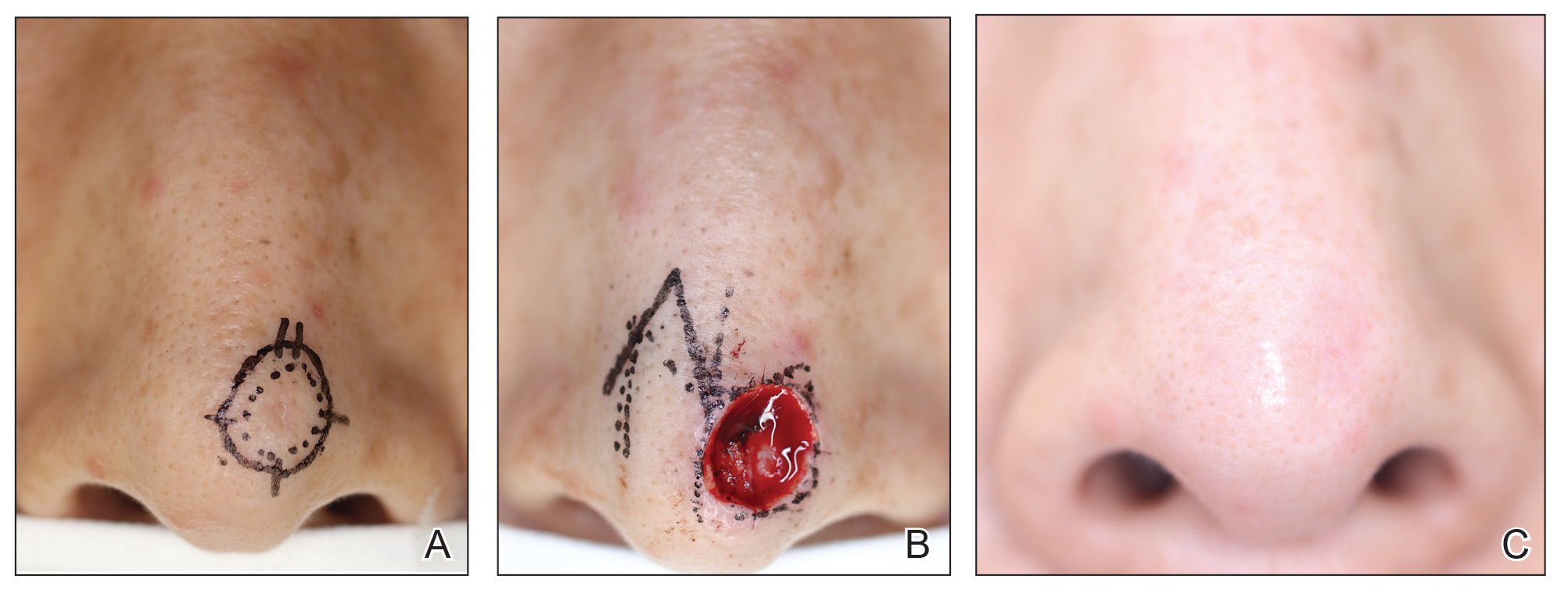

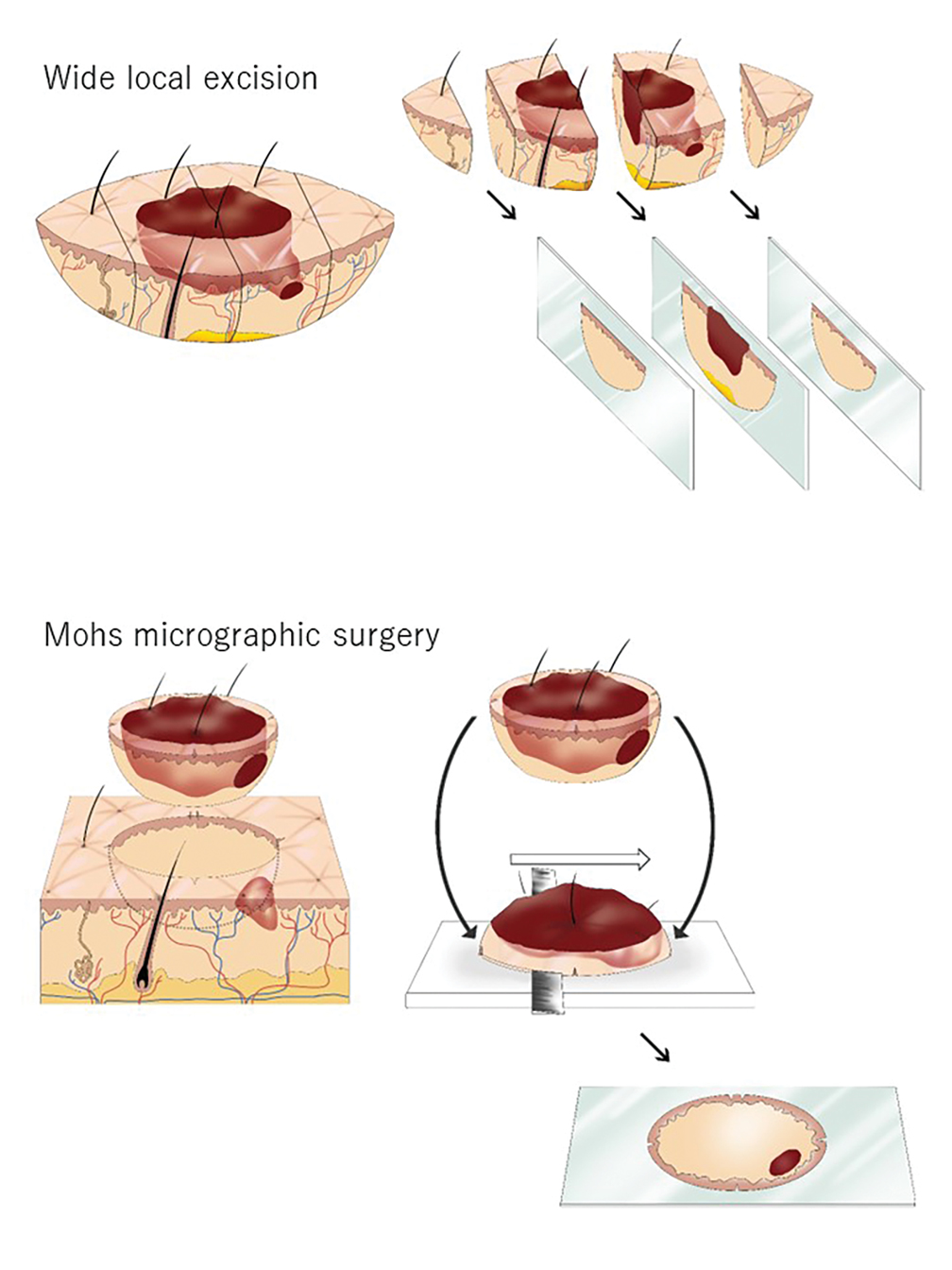

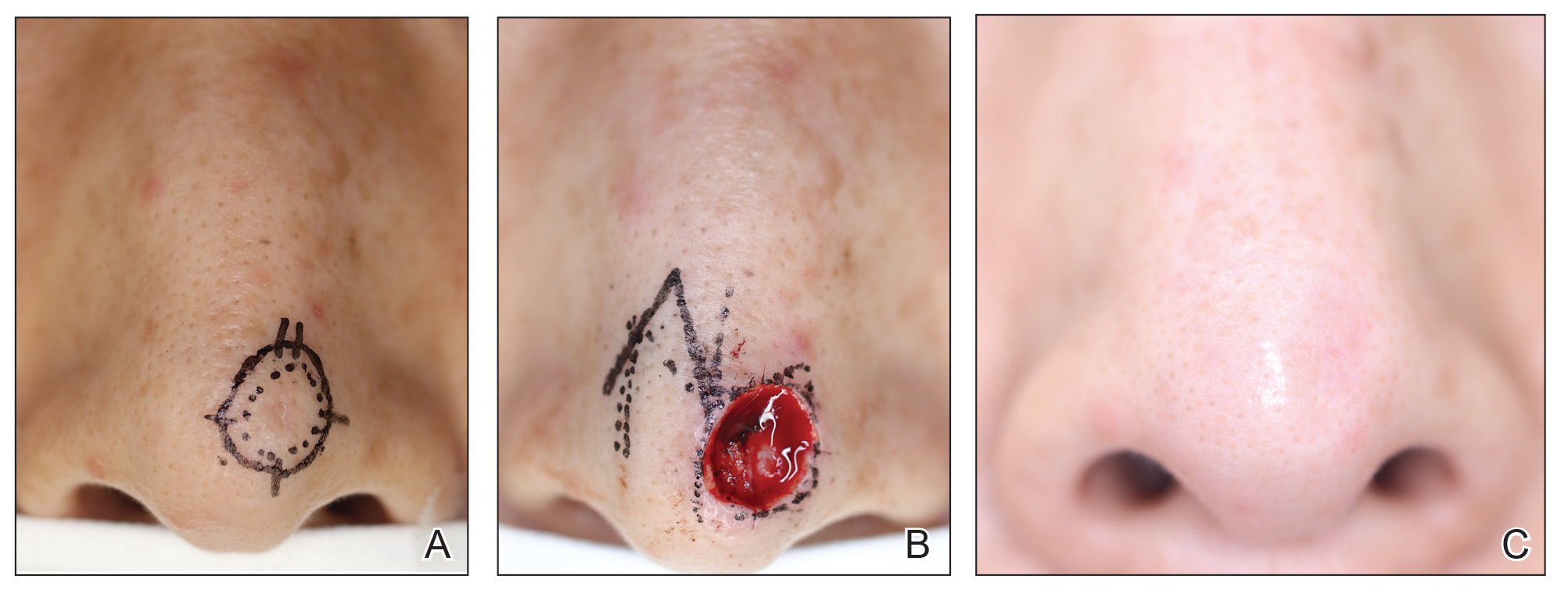

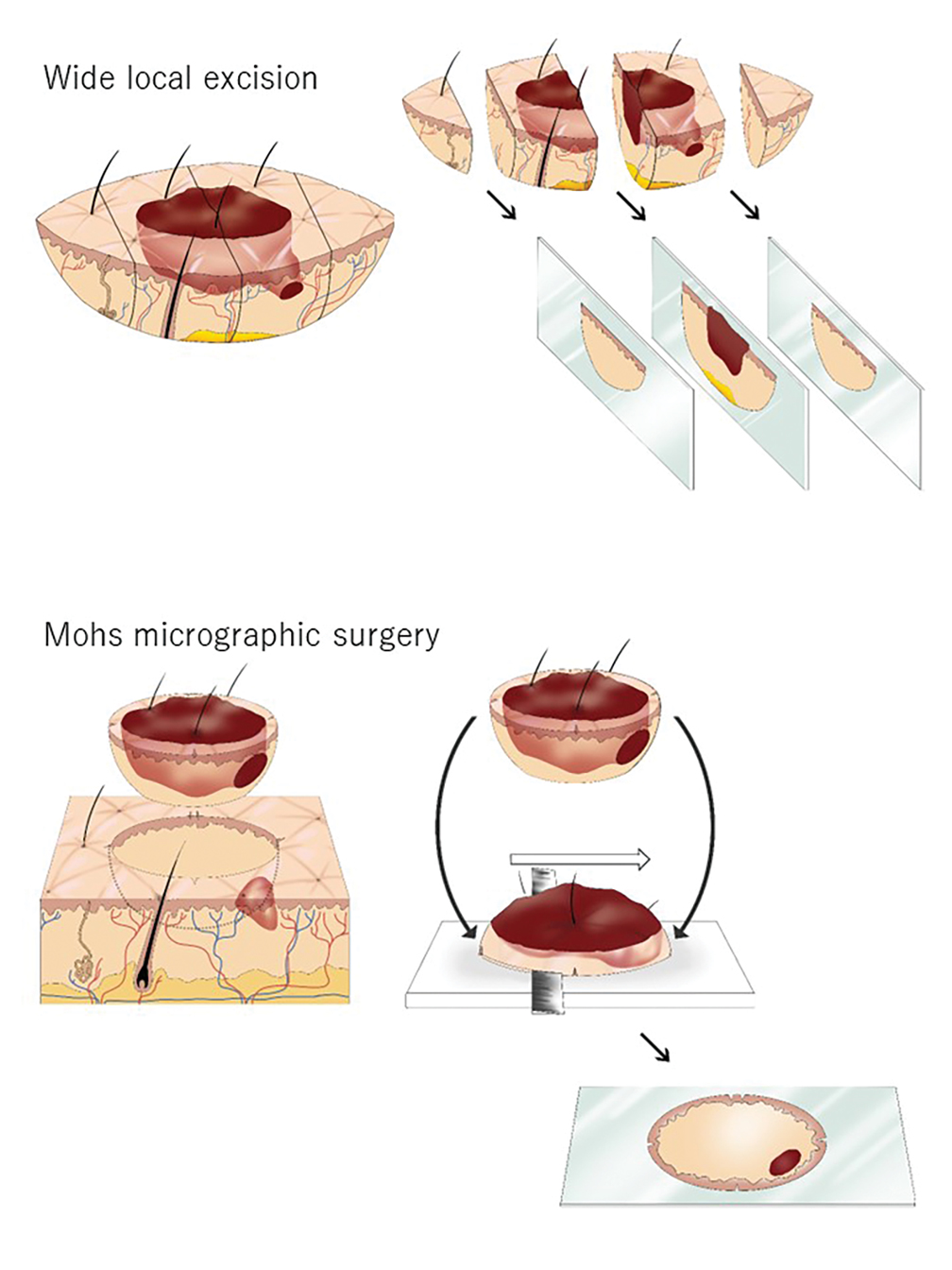

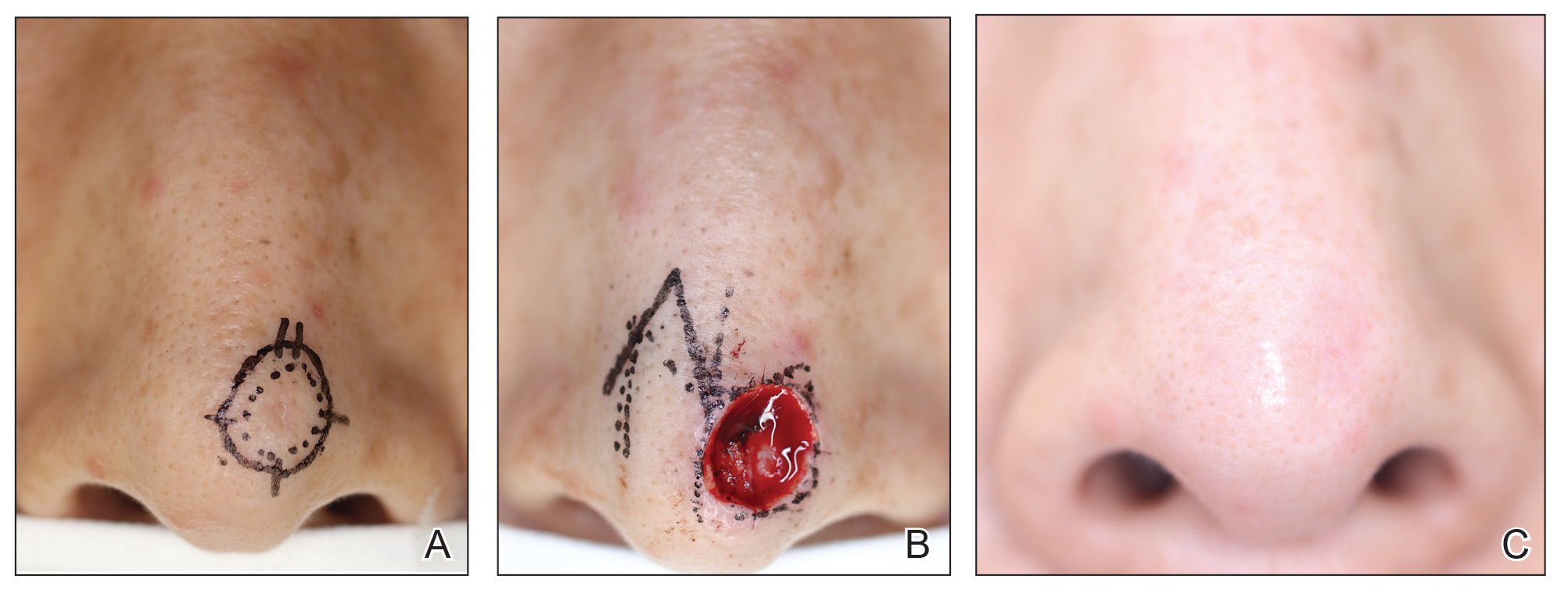

My research passion lies in optimizing laser treatments for BCC. During my fellowship with R. Rox Anderson, MD, and Mathew Avram, MD, at the MGH Wellman Center for Photomedicine, we conducted a pilot study using the 1064-nm Nd:YAG laser, achieving a 92% clearance rate after one treatment. Inspired by these results, we conducted a larger multicenter study, which demonstrated a 90% clearance rate after a single treatment. I now incorporate this technique into my daily practice. The ideal candidates for this procedure are patients with BCC that do not meet the Mohs Appropriate Use Criteria, such as those with nodular or superficial BCC subtypes on the body, individuals who are poor surgical candidates, or those who are surgically exhausted. However, I do not recommend this treatment for patients who are primarily concerned about facial scarring, particularly younger individuals; in such cases, Mohs surgery still remains the preferred option. While I believe this technique is ready for broader clinical adoption, it requires an understanding of laser endpoints. We are also exploring antibody-targeted gold nanorods to enhance the selectivity and standardization of the treatment.

Who inspires you most in your work today?

My patients are my greatest inspiration. Their trust and dedication motivate me to stay at the forefront of dermatologic advancements, ensuring I provide the most cutting-edge and safe treatments possible. Their commitment drives my relentless pursuit of continuous learning and innovation in the field.

What’s the best advice you can give to female dermatologists seeking leadership positions at the local, state, or national level?

My best advice is to have the courage to ask for what you seek. Societies are always looking for members who are eager to participate and contribute. If you express your interest in becoming more involved, there is likely a position available for you. The more you are willing to contribute to a society, the more likely you will be noticed and excel into higher leadership positions. Take initiative, show your commitment, and don’t hesitate to step forward when opportunities arise.

What’s the one tried-and-true laser- or energy-based procedure that you consider a “must” for your dermatology practice? And why?

Determining a single “must-have” laser- or energy-based procedure is a challenging question as it greatly depends on the specific needs of your patient population. However, one of the most common concerns among patients involves issues like redness and pigmentation. Therefore, having a versatile laser or an intense pulsed light device that effectively targets both red and brown pigmentation is indispensable for most practices.

In your view, what are the top three trends in aesthetic dermatology?

Over the years, I have observed several key trends in aesthetic dermatology:

- Minimally invasive procedures. There is a growing preference for less invasive treatments. Patients increasingly desire minimal downtime while still achieving significant results.

- Advancements in laser and energy-based devices for darker skin. There have been substantial advancements in technologies that are safer and more effective for darker skin tones. These developments play a crucial role in addressing diverse patient needs and providing inclusive dermatologic care.

- Natural aesthetic. I am hopeful that the trend toward an overdone appearance is fading. There seems to be a shift back towards a more natural and conservative aesthetic, emphasizing subtle enhancements over dramatic changes.

What development in dermatology are you most excited about in the next 5 years?

I am most excited to see how artificial intelligence and robotics play a role in energy-based devices.

Dr. Ortiz disclosed having financial relationships with several pharmaceutical and device companies. She is also cochair of the Masters of Aesthetics Symposium (MOAS).

Pilot Study Finds Experimental CBD Cream Decreases UVA Skin Damage

Topical application of an experimental cannabidiol (CBD) cream decreased UVA-induced skin damage, results from a small prospective pilot study showed.

“This study hopefully reinvigorates interest in the utilization of whether it be plant-based, human-derived, or synthetic cannabinoids in the management of dermatologic disease,” one of the study investigators, Adam Friedman, MD, professor and chair of dermatology at George Washington University, Washington, DC, told this news organization. The study was published in the Journal of the American Academy of Dermatology.

For the prospective, single-center, pilot trial, which is believed to be the first of its kind, 19 volunteers aged 22-65 with Fitzpatrick skin types I-III applied either a nano-encapsulated CBD cream or a vehicle cream to blind spots on the skin of the buttocks twice daily for 14 days. Next, researchers applied a minimal erythema dose of UV radiation to the treated skin areas for 30 minutes. After 24 hours, they visually inspected the treated areas to clinically compare the erythema. They also performed five 4-mm punch biopsies from UVA- and non-UVA–exposed treatment sites on each buttock, as well as from an untreated control site that was at least 5 cm away from the treated left buttock.

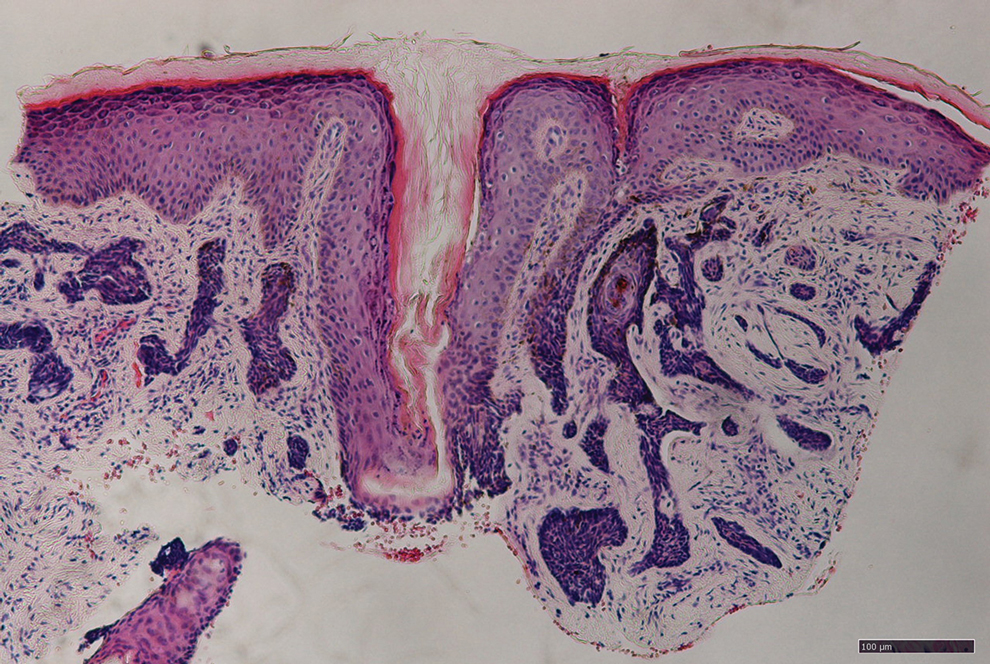

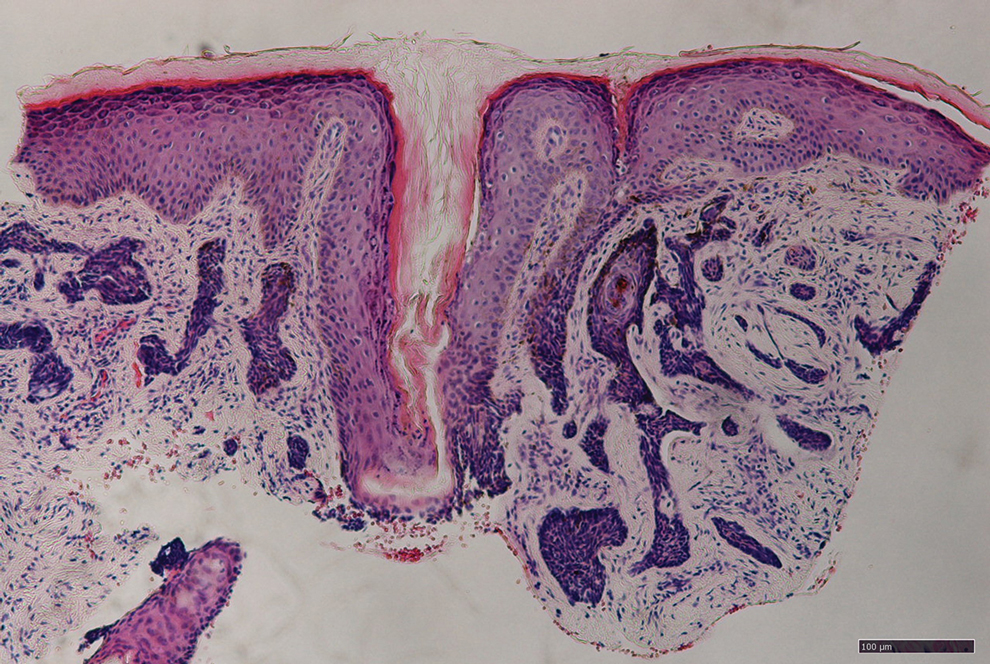

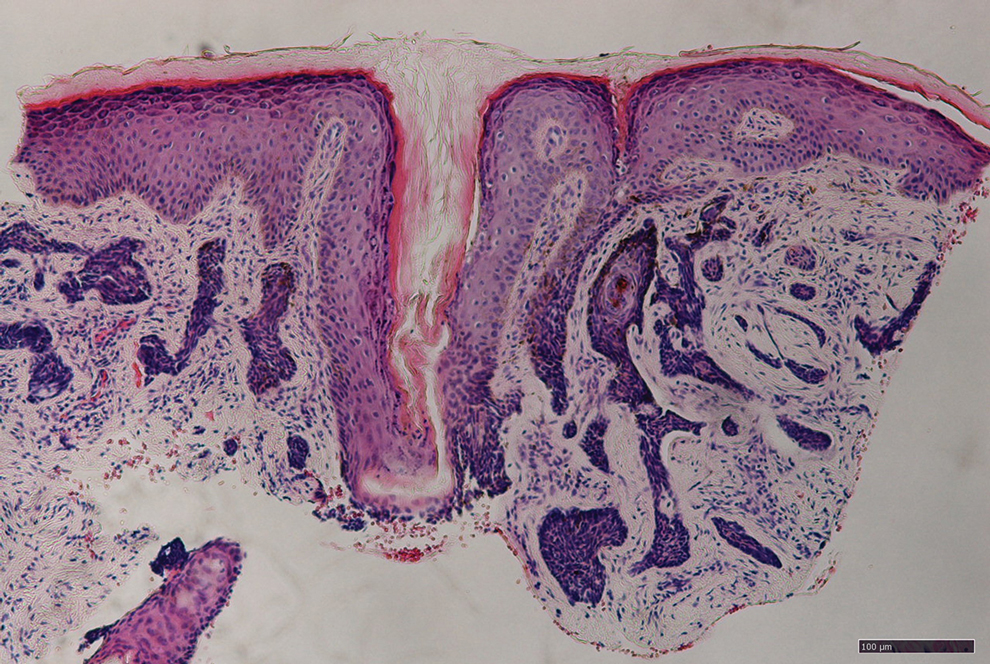

At 24 hours, 21% of study participants showed less redness on CBD-treated skin compared with control-treated skin, while histology showed that CBD-treated skin demonstrated reduced UVA-induced epidermal hyperplasia compared with control-treated skin (a mean 11.3% change from baseline vs 28.7%, respectively; P = .01). In other findings, application of CBD cream reduced DNA damage and DNA mutations associated with UVA-induced skin aging/damage and ultimately skin cancer.

In addition, the CBD-treated skin samples had a reduction in the UVA-associated increase in the premutagenic marker 8-oxoguanine DNA glycosylase 1 and a reduction of two major UVA-induced mitochondrial DNA deletions associated with skin photoaging.

The research, Dr. Friedman noted, “took a village of collaborators and almost 3 years to pull together,” including collaborating with his long-standing mentor, Brian Berman, MD, PhD, professor emeritus of dermatology and dermatologic surgery at the University of Miami, Coral Gables, Florida, and a study coauthor. The study “demonstrated that purposeful delivery of CBD using an established nanoparticle platform ... can have a quantifiable impact on preventing the expected DNA damage and cellular injury one should see from UVA exposure,” said Dr. Friedman, who codeveloped the nanoparticle platform with his father, Joel M. Friedman, MD, PhD, professor of microbiology and immunology at Albert Einstein College of Medicine, New York City.

“Never before has a dermatologic study on topical cannabinoids dove so deeply into the biological impact of this natural ingredient to highlight its potential, here, as a mitigation strategy for unprotected exposure to prevent the downstream sequelae of UV radiation,” Dr. Friedman said.

In the paper, he and his coauthors acknowledged certain limitations of their study, including its small sample size and the single-center design.

Dr. Friedman disclosed that he coinvented the nanoparticle technology used in the trial. Dr. Berman is a consultant at MINO Labs, which funded the study. The remaining authors had no disclosures. The study was done in collaboration with the Center for Clinical and Cosmetic Research in Aventura, Florida.

A version of this article first appeared on Medscape.com.

FROM THE JOURNAL OF THE AMERICAN ACADEMY OF DERMATOLOGY

Study Finds Varying Skin Cancer Rates Based on Sexual Orientation

Addressing the dynamics of each sexual minority (SM) subgroup will require increasingly tailored prevention, screening, and research efforts, the study authors said.

“We identified specific subgroups within the sexual minority community who are at higher risk for skin cancer, specifically White gay males and Hispanic and non-Hispanic Black SM men and women — particularly individuals who identify as bisexual,” senior author Matthew Mansh, MD, said in an interview. He is an assistant professor of dermatology at the University of California, San Francisco. The study was published online in JAMA Dermatology.

Using Behavioral Risk Factor Surveillance System data on adults in the US general population from January 2014 to December 2021, investigators included more than 1.5 million respondents. The proportions of SM women and men (who self-identified as bisexual, lesbian, gay, “something else,” or other) were 2.6% and 2.0%, respectively.

Lifetime skin cancer prevalence was higher among SM men than among heterosexual men (7.4% vs 6.8%; adjusted odds ratio [aOR], 1.16). In analyses stratified by racial and ethnic group, aORs for non-Hispanic Black and Hispanic SM men vs their heterosexual counterparts were 2.18 and 3.81, respectively. The corresponding figures for non-Hispanic Black and Hispanic SM women were 2.33 and 2.46, respectively.
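For readers less familiar with odds ratios, the following minimal sketch computes the crude (unadjusted) odds ratio implied by the two prevalences reported for men (7.4% vs 6.8%). It is only an illustration: the function names are hypothetical, and the study's adjusted figure of 1.16 comes from a model that accounts for covariates not described here, so the crude value is not expected to match it.

```python
def odds(prevalence: float) -> float:
    """Convert a prevalence (a proportion between 0 and 1) into odds."""
    if not 0.0 < prevalence < 1.0:
        raise ValueError("prevalence must be strictly between 0 and 1")
    return prevalence / (1.0 - prevalence)


def crude_odds_ratio(p_group: float, p_reference: float) -> float:
    """Unadjusted odds ratio of one group relative to a reference group."""
    return odds(p_group) / odds(p_reference)


# Prevalences reported in the article for SM men and heterosexual men.
sm_men = 0.074
heterosexual_men = 0.068

crude = crude_odds_ratio(sm_men, heterosexual_men)
print(f"crude odds ratio = {crude:.2f}")  # about 1.10, unadjusted
```

The gap between this crude value and the reported aOR of 1.16 reflects the adjustment in the study's analysis, which cannot be reproduced from the summary percentages alone.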

When investigators combined all minority respondents along gender lines, lifetime skin cancer prevalence was higher in bisexual men (aOR, 3.94), bisexual women (aOR, 1.51), and women identifying as something else or other (aOR, 2.70) than in their heterosexual peers.

“I wasn’t expecting that Hispanic or non-Hispanic Black SMs would be at higher risk for skin cancer,” Dr. Mansh said. Even if these groups have more behavioral risk factors for UV radiation (UVR) exposure, he explained, UVR exposure is less strongly linked with skin cancer in darker skin than in lighter skin. Reasons for the counterintuitive finding could include different screening habits among SM people of different racial and ethnic groups, he said, and analyzing such factors will require further research.

Although some effect sizes were modest, the authors wrote, their findings may have important implications for population-based research and public health efforts aimed at early skin cancer detection and prevention. Presently, the United States lacks established guidelines for skin cancer screening. In a 2023 statement published in JAMA, the US Preventive Services Task Force said that there is insufficient evidence to determine the benefit-harm balance of skin cancer screening in asymptomatic people.

“So there has been a lot of recent talk and a need to identify which subset groups of patients might be higher risk for skin cancer and might benefit from more screening,” Dr. Mansh said in an interview. “Understanding more about the high-risk demographic and clinical features that predispose someone to skin cancer helps identify these high-risk populations that could be used to develop better screening guidelines.”

Identifying groups at a higher risk for skin cancer also allows experts to design more targeted counseling or public health interventions focused on these groups, Dr. Mansh added. Absent screening guidelines, experts emphasize changing modifiable risk factors such as UVR exposure, smoking, and alcohol use. “And we know that the message that might change behaviors in a cisgender heterosexual man might be different than in a gay White male or a Hispanic bisexual male.”

A 2017 review showed that interventions to reduce behaviors involving UVR exposure, such as indoor tanning, among young cisgender women focused largely on aging and appearance-based concerns. A 2019 study showed that messages focused on avoiding skin cancer may help motivate SM men to reduce tanning behaviors.

Furthermore, said Dr. Mansh, all electronic health record products available in the United States must provide data fields for sexual orientation. “I don’t believe many dermatologists, depending on the setting, collect that information routinely. Integrating sexual orientation and/or gender identity data into patient intake forms so that it can be integrated into the electronic health record is probably very helpful, not only for your clinical practice but also for future research studies.”

Asked to comment on the results, Rebecca I. Hartman, MD, MPH, who was not involved with the study, said that its impact on clinical practice will be challenging to ascertain. She is chief of dermatology with the VA Boston Healthcare System, assistant professor of dermatology at Harvard Medical School, and director of melanoma epidemiology at Brigham and Women’s Hospital, all in Boston, Massachusetts.

“The study found significant adjusted odds ratios,” Dr. Hartman explained, “but for some of the different populations, the overall lifetime rate of skin cancer is still quite low.” She cited, for example, a rate of 1.0% for SM non-Hispanic Black men and a difference of 2.1% vs 1.8% in SM Hispanic women. “Thus, I am not sure specific screening recommendations are warranted, although some populations, such as Hispanic sexual minority males, seemed to have a much higher risk (3.8-fold on adjusted analysis) that warrants further investigation.”

For now, she advised assessing patients’ risks for skin cancer based on well-established risk factors such as sun exposure/indoor tanning, skin phototype, immunosuppression, and age.

Dr. Mansh reported no relevant conflicts or funding sources for the study. Dr. Hartman reported no relevant conflicts.

A version of this article appeared on Medscape.com.

FROM JAMA DERMATOLOGY

Dermatoporosis in Older Adults: A Condition That Requires Holistic, Creative Management

WASHINGTON — and conveys the skin’s vulnerability to serious medical complications, said Adam Friedman, MD, at the ElderDerm conference on dermatology in the older patient.

Key features of dermatoporosis include atrophic skin, solar purpura, white pseudoscars, easily acquired skin lacerations and tears, bruises, and delayed healing. “We’re going to see more of this, and it will more and more be a chief complaint of patients,” said Dr. Friedman, professor and chair of dermatology at George Washington University (GWU) in Washington, and co-chair of the meeting. GWU hosted the conference, describing it as a first-of-its-kind meeting dedicated to improving dermatologic care for older adults.

Dermatoporosis was described in the literature in 2007 by dermatologists at the University of Geneva in Switzerland. “It is not only a cosmetic problem,” Dr. Friedman said. “This is a medical problem ... which can absolutely lead to comorbidities [such as deep dissecting hematomas] that are a huge strain on the healthcare system.”

Dermatologists can meet the moment with holistic, creative combination treatment and counseling approaches aimed at improving the mechanical strength of skin and preventing potential complications in older patients, Dr. Friedman said at the meeting.

He described the case of a 76-year-old woman who presented with dermatoporosis on her arms involving pronounced skin atrophy, solar purpura, and a small covered laceration. “This was a patient who was both devastated by the appearance” and impacted by the pain and burden of dressing frequent wounds, said Dr. Friedman, who is also the director of the Residency Program, of Translational Research, and of Supportive Oncodermatology, all within the Department of Dermatology at GWU.

After 11 months of treatment, which included daily topical application of calcipotriene 0.05% ointment, nightly application of tazarotene 0.045% lotion, and oral supplementation with 1000 mg of vitamin C twice daily and 1000 mg of citrus bioflavonoid complex daily, with no changes to the medications she took for various comorbidities, the solar purpura improved significantly and “we made a huge difference in the integrity of her skin,” he said.

Dr. Friedman also described this case in a recently published article in the Journal of Drugs in Dermatology titled “What’s Old Is New: An Emerging Focus on Dermatoporosis”.

Likely Pathophysiology

Advancing age and chronic ultraviolet (UV) radiation exposure are the chief drivers of dermatoporosis. In addition to UVA and UVB light, secondary drivers include genetic susceptibility, topical and systemic corticosteroid use, and anticoagulant treatment.

Its pathogenesis is not well described in the literature but is easy to envision, Dr. Friedman said. For one, both advancing age and exposure to UV light lead to a reduction in hygroscopic glycosaminoglycans, including hyaluronate (HA), and the impact of this diminishment is believed to go “beyond [the loss of] buoyancy,” he noted. Researchers have “been showing these are not just water-loving molecules, they also have some biologic properties” relating to keratinocyte production and epidermal turnover that appear to be intricately linked to the pathogenesis of dermatoporosis.

HAs have been shown to interact with the cell surface receptor CD44 to stimulate keratinocyte proliferation, and low levels of CD44 have been reported in skin with dermatoporosis compared with a younger control population. (A newly characterized organelle, the hyaluronosome, serves as an HA factory and contains CD44 and heparin-binding epidermal growth factor, Dr. Friedman noted. Inadequate functioning may be involved in skin atrophy.)

Advancing age also brings an increase in matrix metalloproteinases (MMPs)–1, –2, and –3, which are “the demolition workers of the skin,” and downregulation of a tissue inhibitor of MMPs, he said.

Adding insult to injury, dermis-penetrating UVA also activates MMPs, “obliterating collagen and elastin.” UVB generates DNA photoproducts and oxidative stress, damaging skin cell DNA. “That UV light induces breakdown [of the skin] through different mechanisms and inhibits buildup is a simple concept I think our patients can understand,” Dr. Friedman said.

Multifaceted Treatment

For an older adult, “there is never a wrong time to start sun-protective measures” to prevent or try to halt the progression of dermatoporosis, Dr. Friedman said, noting that “UV radiation is an immunosuppressant, so there are many good reasons to start” if the adult is not already taking measures on a regular basis.

Potential treatments for the syndrome of dermatoporosis are backed by few clinical studies, but dermatologists are skilled at translating the use of products from one disease state to another based on understandings of pathophysiology and mechanistic pathways, Dr. Friedman commented in an interview after the meeting.

For instance, “from decades of research, we know what retinoids will do to the skin,” he said in the interview. “We know they will turn on collagen-1 and -3 genes in the skin, and that they will increase the production of glycosaminoglycans ... By understanding the biology, we can translate this to dermatoporosis.” These changes were demonstrated, for instance, in a small study of topical retinol in older adults.

Studies of topical alpha hydroxy acid (AHA), moreover, have demonstrated epidermal thickening and firmness, and “some studies show they can limit steroid-induced atrophy,” Dr. Friedman said at the meeting. “And things like lactic acid and urea are super accessible.”

Topical dehydroepiandrosterone is backed by even less data than retinoids or AHAs are, “but it’s still something to consider” as part of a multimechanistic approach to dermatoporosis, Dr. Friedman shared, noting that a small study demonstrated beneficial effects on epidermal atrophy in aging skin.

The use of vitamin D analogues such as calcipotriene, which is approved for the treatment of psoriasis, may also be promising. “One concept is that [vitamin D analogues] increase calcium concentrations in the epidermis, and calcium is so central to keratinocyte differentiation” and epidermal function that calcipotriene in combination with topical steroid therapy has been shown to limit skin atrophy, he noted.

Nutritionally, low protein intake is a known problem in the older population and is associated with increased skin fragility and poorer healing. From a prevention and treatment standpoint, therefore, patients can be counseled to be attentive to their diets, Dr. Friedman said. Experts have recommended a higher protein intake for older adults than for younger adults; in 2013, an international group recommended a protein intake of 1-1.5 g/kg/d for healthy older adults and more for those with acute or chronic illness.
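As a worked illustration of the 1-1.5 g/kg/d recommendation for healthy older adults, the sketch below converts a body weight into a daily protein range. The function name and the 70-kg example weight are hypothetical and chosen only to show the arithmetic; the article notes that adults with acute or chronic illness may need more.

```python
def daily_protein_range_g(weight_kg: float,
                          low_g_per_kg: float = 1.0,
                          high_g_per_kg: float = 1.5) -> tuple[float, float]:
    """Return the (low, high) daily protein intake in grams for a body weight.

    The default bounds are the 1-1.5 g/kg/d range cited in the article
    for healthy older adults.
    """
    if weight_kg <= 0:
        raise ValueError("weight_kg must be positive")
    return (low_g_per_kg * weight_kg, high_g_per_kg * weight_kg)


# Hypothetical example: an older adult weighing 70 kg.
low, high = daily_protein_range_g(70)
print(f"{low:.0f}-{high:.0f} g of protein per day")  # 70-105 g of protein per day
```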

“Patients love talking about diet and skin disease ... and they love over-the-counter nutraceuticals as well because they want something natural,” Dr. Friedman said. “I like using bioflavonoids in combination with vitamin C, which can be effective especially for solar purpura.”

A 6-week randomized, placebo-controlled, double-blind trial involving 67 patients with purpura associated with aging found a 50% reduction in purpura lesions among those took a particular citrus bioflavonoid blend twice daily. “I thought this was a pretty well-done study,” he said, noting that patient self-assessment and investigator global assessment were utilized.

Skin Injury and Wound Prevention

In addition to recommending gentle skin cleansers and daily moisturizing, dermatologists should talk to their older patients with dermatoporosis about their home environments. “What is it like? Is there furniture with sharp edges?” Dr. Friedman advised. If so, could they use sleeves or protectors on their arms or legs “to protect against injury?”

In a later meeting session about lower-extremity wounds on geriatric patients, Michael Stempel, DPM, assistant professor of medicine and surgery and chief of podiatry at GWU, said that he was happy to hear the term dermatoporosis being used because like diabetes, it’s a risk factor for developing lower-extremity wounds and poor wound healing.

He shared the case of an older woman with dermatoporosis who “tripped and skinned her knee against a step and then self-treated it for over a month by pouring hydrogen peroxide over it and letting air get to it.” The wound developed into “full-thickness tissue loss,” said Dr. Stempel, also medical director of the Wound Healing and Limb Preservation Center at GWU Hospital.

Misperceptions are common among older patients about how a simple wound should be managed; for instance, the adage “just let it get air” is not uncommon. This makes anticipatory guidance about basic wound care — such as the importance of a moist and occlusive environment and the safe use of hydrogen peroxide — especially important for patients with dermatoporosis, Dr. Friedman commented after the meeting.

Dermatoporosis is quantifiable, Dr. Friedman said during the meeting, with a scoring system having been developed by the researchers in Switzerland who originally coined the term. Its use in practice is unnecessary, but its existence is “nice to share with patients who feel bothered because oftentimes, patients feel it’s been dismissed by other providers,” he said. “Telling your patients there’s an actual name for their problem, and that there are ways to quantify and measure changes over time, is validating.”

Its recognition as a medical condition, Dr. Friedman added, also enables the dermatologist to bring it up and counsel appropriately — without a patient feeling shame — when it is identified in the context of a skin excision, treatment of a primary inflammatory skin disease, or management of another dermatologic problem.

Dr. Friedman disclosed that he is a consultant/advisory board member for L’Oréal, La Roche-Posay, Galderma, and other companies; a speaker for Regeneron/Sanofi, Incyte, BMD, and Janssen; and has grants from Pfizer, Lilly, Incyte, and other companies. Dr. Stempel reported no disclosures.

A version of this article first appeared on Medscape.com.

WASHINGTON — The term dermatoporosis conveys the skin’s vulnerability to serious medical complications, said Adam Friedman, MD, at the ElderDerm conference on dermatology in the older patient.

Key features of dermatoporosis include atrophic skin, solar purpura, white pseudoscars, easily acquired skin lacerations and tears, bruises, and delayed healing. “We’re going to see more of this, and it will more and more be a chief complaint of patients,” said Dr. Friedman, professor and chair of dermatology at George Washington University (GWU) in Washington, and co-chair of the meeting. GWU hosted the conference, describing it as a first-of-its-kind meeting dedicated to improving dermatologic care for older adults.

Dermatoporosis was described in the literature in 2007 by dermatologists at the University of Geneva in Switzerland. “It is not only a cosmetic problem,” Dr. Friedman said. “This is a medical problem ... which can absolutely lead to comorbidities [such as deep dissecting hematomas] that are a huge strain on the healthcare system.”

Dermatologists can meet the moment with holistic, creative combination treatment and counseling approaches aimed at improving the mechanical strength of skin and preventing potential complications in older patients, Dr. Friedman said at the meeting.

He described the case of a 76-year-old woman who presented with dermatoporosis on her arms involving pronounced skin atrophy, solar purpura, and a small covered laceration. “This was a patient who was both devastated by the appearance” and impacted by the pain and burden of dressing frequent wounds, said Dr. Friedman, who is also the director of the Residency Program, of Translational Research, and of Supportive Oncodermatology, all within the Department of Dermatology at GWU.

With 11 months of treatment that included daily application of calcipotriene 0.05% ointment, nightly application of tazarotene 0.045% lotion, and oral supplementation with 1000 mg of vitamin C twice daily and 1000 mg of citrus bioflavonoid complex daily, and with no changes to the medications she took for various comorbidities, the solar purpura improved significantly and “we made a huge difference in the integrity of her skin,” he said.

Dr. Friedman also described this case in a recently published article in the Journal of Drugs in Dermatology titled “What’s Old Is New: An Emerging Focus on Dermatoporosis.”

Likely Pathophysiology

Advancing age and chronic ultraviolet (UV) radiation exposure are the chief drivers of dermatoporosis. Beyond UVA and UVB light, secondary drivers include genetic susceptibility, topical and systemic corticosteroid use, and anticoagulant treatment.

Its pathogenesis is not well described in the literature but is easy to envision, Dr. Friedman said. For one, both advancing age and exposure to UV light lead to a reduction in hygroscopic glycosaminoglycans, including hyaluronate (HA), and the impact of this diminishment is believed to go “beyond [the loss of] buoyancy,” he noted. Researchers have “been showing these are not just water-loving molecules, they also have some biologic properties” relating to keratinocyte production and epidermal turnover that appear to be intricately linked to the pathogenesis of dermatoporosis.

HA has been shown to interact with the cell surface receptor CD44 to stimulate keratinocyte proliferation, and low levels of CD44 have been reported in skin with dermatoporosis compared with skin from a younger control population. (A newly characterized organelle, the hyaluronosome, serves as an HA factory and contains CD44 and heparin-binding epidermal growth factor, Dr. Friedman noted. Inadequate functioning of this organelle may be involved in skin atrophy.)

Advancing age also brings an increase in matrix metalloproteinases (MMPs)–1, –2, and –3, which are “the demolition workers of the skin,” and downregulation of a tissue inhibitor of MMPs, he said.

Adding insult to injury, dermis-penetrating UVA also activates MMPs, “obliterating collagen and elastin.” UVB generates DNA photoproducts and oxidative stress, damaging skin cell DNA. “That UV light induces breakdown [of the skin] through different mechanisms and inhibits buildup is a simple concept I think our patients can understand,” Dr. Friedman said.

Multifaceted Treatment

For an older adult, “there is never a wrong time to start sun-protective measures” to prevent or try to halt the progression of dermatoporosis, Dr. Friedman said, noting that “UV radiation is an immunosuppressant, so there are many good reasons to start” if the adult is not already taking measures on a regular basis.

Potential treatments for the syndrome of dermatoporosis are backed by few clinical studies, but dermatologists are skilled at translating the use of products from one disease state to another based on understandings of pathophysiology and mechanistic pathways, Dr. Friedman commented in an interview after the meeting.

For instance, “from decades of research, we know what retinoids will do to the skin,” he said in the interview. “We know they will turn on collagen-1 and -3 genes in the skin, and that they will increase the production of glycosaminoglycans ... By understanding the biology, we can translate this to dermatoporosis.” These changes were demonstrated in a small study of topical retinol in older adults.

Studies of topical alpha hydroxy acid (AHA), moreover, have demonstrated epidermal thickening and firmness, and “some studies show they can limit steroid-induced atrophy,” Dr. Friedman said at the meeting. “And things like lactic acid and urea are super accessible.”

Topical dehydroepiandrosterone is backed by even less data than retinoids or AHAs are, “but it’s still something to consider” as part of a multimechanistic approach to dermatoporosis, Dr. Friedman shared, noting that a small study demonstrated beneficial effects on epidermal atrophy in aging skin.

The use of vitamin D analogues such as calcipotriene, which is approved for the treatment of psoriasis, may also be promising. “One concept is that [vitamin D analogues] increase calcium concentrations in the epidermis, and calcium is so central to keratinocyte differentiation” and epidermal function that calcipotriene in combination with topical steroid therapy has been shown to limit skin atrophy, he noted.

Nutritionally, low protein intake is a known problem in the older population and is associated with increased skin fragility and poorer healing. From a prevention and treatment standpoint, therefore, patients can be counseled to be attentive to their diets, Dr. Friedman said. Experts have recommended a higher protein intake for older adults than for younger adults; in 2013, an international group recommended a protein intake of 1-1.5 g/kg/d for healthy older adults and more for those with acute or chronic illness.

“Patients love talking about diet and skin disease ... and they love over-the-counter nutraceuticals as well because they want something natural,” Dr. Friedman said. “I like using bioflavonoids in combination with vitamin C, which can be effective especially for solar purpura.”

A 6-week randomized, placebo-controlled, double-blind trial involving 67 patients with purpura associated with aging found a 50% reduction in purpura lesions among those who took a particular citrus bioflavonoid blend twice daily. “I thought this was a pretty well-done study,” he said, noting that patient self-assessment and investigator global assessment were utilized.

Skin Injury and Wound Prevention

In addition to recommending gentle skin cleansers and daily moisturizing, dermatologists should talk to their older patients with dermatoporosis about their home environments. “What is it like? Is there furniture with sharp edges?” Dr. Friedman advised. If so, could they use sleeves or protectors on their arms or legs “to protect against injury?”

In a later meeting session about lower-extremity wounds in geriatric patients, Michael Stempel, DPM, assistant professor of medicine and surgery and chief of podiatry at GWU, said that he was happy to hear the term dermatoporosis being used because, like diabetes, it is a risk factor for developing lower-extremity wounds and poor wound healing.

He shared the case of an older woman with dermatoporosis who “tripped and skinned her knee against a step and then self-treated it for over a month by pouring hydrogen peroxide over it and letting air get to it.” The wound developed into “full-thickness tissue loss,” said Dr. Stempel, also medical director of the Wound Healing and Limb Preservation Center at GWU Hospital.

Misperceptions are common among older patients about how a simple wound should be managed; for instance, the adage “just let it get air” is not uncommon. This makes anticipatory guidance about basic wound care — such as the importance of a moist and occlusive environment and the safe use of hydrogen peroxide — especially important for patients with dermatoporosis, Dr. Friedman commented after the meeting.

Dermatoporosis is quantifiable, Dr. Friedman said during the meeting, with a scoring system having been developed by the researchers in Switzerland who originally coined the term. Its use in practice is unnecessary, but its existence is “nice to share with patients who feel bothered because oftentimes, patients feel it’s been dismissed by other providers,” he said. “Telling your patients there’s an actual name for their problem, and that there are ways to quantify and measure changes over time, is validating.”

Its recognition as a medical condition, Dr. Friedman added, also enables the dermatologist to bring it up and counsel appropriately — without a patient feeling shame — when it is identified in the context of a skin excision, treatment of a primary inflammatory skin disease, or management of another dermatologic problem.

Dr. Friedman disclosed that he is a consultant/advisory board member for L’Oréal, La Roche-Posay, Galderma, and other companies; is a speaker for Regeneron/Sanofi, Incyte, BMD, and Janssen; and has grants from Pfizer, Lilly, Incyte, and other companies. Dr. Stempel reported no disclosures.

A version of this article first appeared on Medscape.com.
