FDA to add myocarditis warning to mRNA COVID-19 vaccines

The Food and Drug Administration is adding a warning to mRNA COVID-19 vaccines’ fact sheets as medical experts continue to investigate cases of heart inflammation, which are rare but are more likely to occur in young men and teen boys.

Doran Fink, MD, PhD, deputy director of the FDA’s division of vaccines and related products applications, told a Centers for Disease Control and Prevention expert panel on June 23 that the FDA is finalizing language on a warning statement for health care providers, vaccine recipients, and parents or caregivers of teens.

The incidents are more likely to follow the second dose of the Pfizer or Moderna vaccine, with chest pain and other symptoms occurring within several days to a week, the warning will note.

“Based on limited follow-up, most cases appear to have been associated with resolution of symptoms, but limited information is available about potential long-term sequelae,” Dr. Fink said, describing the statement to the Advisory Committee on Immunization Practices, independent experts who advise the CDC.

“Symptoms suggestive of myocarditis or pericarditis should result in vaccine recipients seeking medical attention,” he said.

Benefits outweigh risks

Although no formal vote occurred after the meeting, the ACIP members delivered a strong endorsement for continuing to vaccinate 12- to 29-year-olds with the Pfizer and Moderna vaccines despite the warning.

“To me it’s clear, based on current information, that the benefits of vaccine clearly outweigh the risks,” said ACIP member Veronica McNally, president and CEO of the Franny Strong Foundation in Bloomfield, Mich., a sentiment echoed by other members.

As ACIP was meeting, leaders of the nation’s major physician, nurse, and public health associations issued a statement supporting continued vaccination: “The facts are clear: this is an extremely rare side effect, and only an exceedingly small number of people will experience it after vaccination.

“Importantly, for the young people who do, most cases are mild, and individuals recover often on their own or with minimal treatment. In addition, we know that myocarditis and pericarditis are much more common if you get COVID-19, and the risks to the heart from COVID-19 infection can be more severe.”

ACIP heard the evidence behind that claim. According to the Vaccine Safety Datalink, which contains data from more than 12 million medical records, myocarditis or pericarditis occurs in 12- to 39-year-olds at a rate of 8 per 1 million after the second Pfizer dose and 19.8 per 1 million after the second Moderna dose.

The CDC continues to investigate the link between the mRNA vaccines and heart inflammation, including any differences between the vaccines.

Most of the symptoms resolved quickly, said Tom Shimabukuro, deputy director of CDC’s Immunization Safety Office. Of 323 cases analyzed by the CDC, 309 were hospitalized, 295 were discharged, and 218, or 79%, had recovered from symptoms.

“Most postvaccine myocarditis has been responding to minimal treatment,” pediatric cardiologist Matthew Oster, MD, MPH, from Children’s Healthcare of Atlanta, told the panel.

COVID ‘risks are higher’

Overall, the CDC has reported 2,767 COVID-19 deaths among people aged 12-29 years, and there have been 4,018 reported cases of the COVID-linked inflammatory disorder MIS-C since the beginning of the pandemic.

That amounts to 1 MIS-C case in every 3,200 COVID infections – 36% of them among teens aged 12-20 years and 62% among children who are Hispanic or non-Hispanic Black, according to a CDC presentation.

The CDC estimated that every 1 million second-dose COVID vaccines administered to 12- to 17-year-old boys could prevent 5,700 cases of COVID-19, 215 hospitalizations, 71 ICU admissions, and 2 deaths. There could also be 56-69 myocarditis cases.
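To make the scale of those estimates easier to compare, the figures can be put on a common per-100,000-doses basis. The short Python sketch below does only that arithmetic; the function and variable names are illustrative assumptions, and the inputs are just the numbers quoted above, not an independent analysis.

```python
# CDC estimates quoted above, per 1 million second doses given to boys aged 12-17.
DOSES = 1_000_000

# Outcomes the CDC estimated could be prevented.
PREVENTED = {
    "COVID-19 cases": 5700,
    "hospitalizations": 215,
    "ICU admissions": 71,
    "deaths": 2,
}

# Estimated myocarditis cases that could occur (low and high ends of the range).
MYOCARDITIS_RANGE = (56, 69)


def per_100k(count, doses=DOSES):
    """Rescale a count per `doses` doses to a rate per 100,000 doses."""
    return count * 100_000 / doses


def summarize():
    """Return every quoted figure expressed per 100,000 second doses."""
    rows = {f"{name} prevented": per_100k(n) for name, n in PREVENTED.items()}
    low, high = MYOCARDITIS_RANGE
    rows["myocarditis cases (low estimate)"] = per_100k(low)
    rows["myocarditis cases (high estimate)"] = per_100k(high)
    return rows


if __name__ == "__main__":
    for label, rate in summarize().items():
        print(f"{label}: {rate:g} per 100,000 second doses")
```

This is a unit conversion only; it says nothing about how the CDC derived the estimates or how severe each outcome is.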

The emergence of new variants in the United States and the skewed pattern of vaccination around the country also may increase the risk to unvaccinated young people, noted Grace Lee, MD, MPH, chair of the ACIP’s COVID-19 Vaccine Safety Technical Subgroup and a pediatric infectious disease physician at Stanford (Calif.) Children’s Health.

“If you’re in an area with low vaccination, the risks are higher,” she said. “The benefits [of the vaccine] are going to be far, far greater than any risk.”

Individuals, parents, and their clinicians should consider the full scope of risk when making decisions about vaccination, she said.

As the pandemic evolves, medical experts have to balance the known risks and benefits while they gather more information, said William Schaffner, MD, an infectious disease physician at Vanderbilt University, Nashville, Tenn., and medical director of the National Foundation for Infectious Diseases.

“The story is not over,” Dr. Schaffner said in an interview. “Clearly, we are still working in the face of a pandemic, so there’s urgency to continue vaccinating. But they would like to know more about the long-term consequences of the myocarditis.”

Booster possibilities

Meanwhile, ACIP began conversations on the parameters for a possible vaccine booster. For now, there are simply questions: Would a third vaccine help the immunocompromised gain protection? Should people get a different type of vaccine – mRNA versus adenovirus vector – for their booster? Most important, how long do antibodies last?

“Prior to going around giving everyone boosters, we really need to improve the overall vaccination coverage,” said Helen Keipp Talbot, MD, associate professor of medicine at Vanderbilt University. “That will protect everyone.”

A version of this article first appeared on Medscape.com.

Gray hair goes away and squids go to space

Goodbye stress, goodbye gray hair

Last year was a doozy, so it wouldn’t be too surprising if we all had a few new gray strands in our hair. But what if we told you that you don’t need to start dyeing them or plucking them out? What if they could magically go back to the way they were? Well, it may be possible, sans magic and sans stress.

Investigators recently discovered that the age-old belief that stress will permanently turn your hair gray may not be true after all. There’s a strong possibility that it could turn back to its original color once the stressful agent is eliminated.

“Understanding the mechanisms that allow ‘old’ gray hairs to return to their ‘young’ pigmented states could yield new clues about the malleability of human aging in general and how it is influenced by stress,” said senior author Martin Picard, PhD, of Columbia University, New York.

For the study, 14 volunteers were asked to keep a stress diary and review their levels of stress throughout the week. The researchers used a new method of viewing and capturing the images of tiny parts of the hairs to see how much graying took place in each part of the strand. And what they found – some strands naturally turning back to the original color – had never been documented before.

How did it happen? Our good friends the mitochondria. We haven’t really heard that word since eighth-grade biology, but they’re actually the key link between stress hormones and hair pigmentation. Think of them as little radars picking up all different kinds of signals in your body, like mental/emotional stress. They get a big enough alert and they’re going to react, thus gray hair.

So that’s all it takes? Cut the stress and a full head of gray can go back to brown? Not exactly. The researchers said there may be a “threshold because of biological age and other factors.” They believe that hair in middle age sits near that threshold: Stress could easily push it over into gray, and it could potentially go back once the stress is gone. But if you’ve been rocking the salt and pepper or silver fox for a number of years and are looking for change, you might want to just eliminate the stress and pick up a bottle of dye.

One small step for squid

Space does a number on the human body. Forget the obvious like going for a walk outside without a spacesuit, or even the well-known risks like the degradation of bone in microgravity; there are numerous smaller but still important changes to the body during spaceflight, like the disruption of the symbiotic relationship between gut bacteria and the human body. This causes the immune system to lose the ability to recognize threats, and illnesses spread more easily.

Naturally, if astronauts are going to undertake years-long journeys to Mars and beyond, a thorough understanding of this disturbance is necessary, and that’s why NASA has sent a bunch of squid to the International Space Station.

When it comes to animal studies, squid aren’t the usual culprits, but there’s a reason NASA chose calamari over the alternatives: The Hawaiian bobtail squid has a symbiotic relationship with bacteria that regulate their bioluminescence in much the same way that we have a symbiotic relationship with our gut bacteria, but the squid is a much simpler animal. If the bioluminescence-regulating bacteria are disturbed during their time in space, it will be much easier to figure out what’s going wrong.

The experiment is ongoing, but we should salute the brave squid who have taken a giant leap for squidkind. Though if NASA didn’t send them up in a giant bubble, we’re going to be very disappointed.

Less plastic, more vanilla

Have you been racked by guilt over the number of plastic water bottles you use? What about the amount of ice cream you eat? Well, this one’s for you.

Plastic isn’t the first thing you think about when you open up a pint of vanilla ice cream and catch the sweet, spicy vanilla scent, or when you smell those fresh vanilla scones coming out of the oven at the coffee shop, but a new study shows that the flavor of vanilla can come from water bottles.

Here’s the deal. A compound called vanillin is responsible for the scent of vanilla, and it can come naturally from the bean or it can be made synthetically. Believe it or not, 85% of vanillin is made synthetically from fossil fuels!

We’ve definitely grown accustomed to our favorite vanilla scents, foods, and cosmetics. In 2018, the global demand for vanillin was about 40,800 tons and is expected to grow to 65,000 tons by 2025, which far exceeds the supply of natural vanilla.

So what can we do? Well, we can use genetically engineered bacteria to turn plastic water bottles into vanillin, according to a study published in the journal Green Chemistry.

The plastic can be broken down into terephthalic acid, which is very similar, chemically speaking, to vanillin. Similar enough that a bit of bioengineering produced Escherichia coli that could convert the acid into the tasty treat, according to researchers at the University of Edinburgh.

A perfect solution? Decreasing plastic waste while producing a valued food product? The thought of consuming plastic isn’t appetizing, so just eat your ice cream and try to forget about it.

No withdrawals from this bank

Into each life, some milestones must fall: High school graduation, birth of a child, first house, 50th wedding anniversary, COVID-19. One LOTME staffer got really excited – way too excited, actually – when his Nissan Sentra reached 300,000 miles.

Well, there are milestones, and then there are milestones. “1,000 Reasons for Hope” is a report celebrating the first 1,000 brains donated to the VA-BU-CLF Brain Bank. For those of you keeping score at home, that would be the Department of Veterans Affairs, Boston University, and the Concussion Legacy Foundation.

The Brain Bank, created in 2008 to study concussions and chronic traumatic encephalopathy, is the brainchild – yes, we went there – of Chris Nowinski, PhD, a former professional wrestler, and Ann McKee, MD, an expert on neurodegenerative disease. “Our discoveries have already inspired changes to sports that will prevent many future cases of CTE in the next generation of athletes,” Dr. Nowinski, the CEO of CLF, said in a written statement.

Data from the first thousand brains show that 706 men, including 305 former NFL players, had football as their primary exposure to head impacts. Women were underrepresented, making up only 2.8% of brain donations, so recruiting females is a priority. Anyone interested in pledging can go to PledgeMyBrain.org or call 617-992-0615 for the 24-hour emergency donation pager.

LOTME wanted to help, so we called the Brain Bank to find out about donating. They asked a few questions and we told them what we do for a living. “Oh, you’re with LOTME? Yeah, we’ve … um, seen that before. It’s, um … funny. Can we put you on hold?” We’re starting to get a little sick of the on-hold music by now.

Goodbye stress, goodbye gray hair

Last year was a doozy, so it wouldn’t be too surprising if we all had a few new gray strands in our hair. But what if we told you that you don’t need to start dying them or plucking them out? What if they could magically go back to the way they were? Well, it may be possible, sans magic and sans stress.

Investigators recently discovered that the age-old belief that stress will permanently turn your hair gray may not be true after all. There’s a strong possibility that it could turn back to its original color once the stressful agent is eliminated.

“Understanding the mechanisms that allow ‘old’ gray hairs to return to their ‘young’ pigmented states could yield new clues about the malleability of human aging in general and how it is influenced by stress,” said senior author Martin Picard, PhD, of Columbia University, New York.

For the study, 14 volunteers were asked to keep a stress diary and review their levels of stress throughout the week. The researchers used a new method of viewing and capturing the images of tiny parts of the hairs to see how much graying took place in each part of the strand. And what they found – some strands naturally turning back to the original color – had never been documented before.

How did it happen? Our good friend the mitochondria. We haven’t really heard that word since eighth-grade biology, but it’s actually the key link between stress hormones and hair pigmentation. Think of them as little radars picking up all different kinds of signals in your body, like mental/emotional stress. They get a big enough alert and they’re going to react, thus gray hair.

So that’s all it takes? Cut the stress and a full head of gray can go back to brown? Not exactly. The researchers said there may be a “threshold because of biological age and other factors.” They believe middle age is near that threshold and it could easily be pushed over due to stress and could potentially go back. But if you’ve been rocking the salt and pepper or silver fox for a number of years and are looking for change, you might want to just eliminate the stress and pick up a bottle of dye.

One small step for squid

Space does a number on the human body. Forget the obvious like going for a walk outside without a spacesuit, or even the well-known risks like the degradation of bone in microgravity; there are numerous smaller but still important changes to the body during spaceflight, like the disruption of the symbiotic relationship between gut bacteria and the human body. This causes the immune system to lose the ability to recognize threats, and illnesses spread more easily.

Naturally, if astronauts are going to undertake years-long journeys to Mars and beyond, a thorough understanding of this disturbance is necessary, and that’s why NASA has sent a bunch of squid to the International Space Station.

When it comes to animal studies, squid aren’t the usual culprits, but there’s a reason NASA chose calamari over the alternatives: The Hawaiian bobtail squid has a symbiotic relationship with bacteria that regulate their bioluminescence in much the same way that we have a symbiotic relationship with our gut bacteria, but the squid is a much simpler animal. If the bioluminescence-regulating bacteria are disturbed during their time in space, it will be much easier to figure out what’s going wrong.

The experiment is ongoing, but we should salute the brave squid who have taken a giant leap for squidkind. Though if NASA didn’t send them up in a giant bubble, we’re going to be very disappointed.

Less plastic, more vanilla

Have you been racked by guilt over the number of plastic water bottles you use? What about the amount of ice cream you eat? Well, this one’s for you.

Plastic isn’t the first thing you think about when you open up a pint of vanilla ice cream and catch the sweet, spicy vanilla scent, or when you smell those fresh vanilla scones coming out of the oven at the coffee shop, but a new study shows that the flavor of vanilla can come from water bottles.

Here’s the deal. A compound called vanillin is responsible for the scent of vanilla, and it can come naturally from the bean or it can be made synthetically. Believe it or not, 85% of vanillin is made synthetically from fossil fuels!

We’ve definitely grown accustomed to our favorite vanilla scents, foods, and cosmetics. In 2018, the global demand for vanillin was about 40,800 tons and is expected to grow to 65,000 tons by 2025, which far exceeds the supply of natural vanilla.

So what can we do? Well, we can use genetically engineered bacteria to turn plastic water bottles into vanillin, according to a study published in the journal Green Chemistry.

The plastic can be broken down into terephthalic acid, which is very similar, chemically speaking, to vanillin. Similar enough that a bit of bioengineering produced Escherichia coli that could convert the acid into the tasty treat, according to researchers at the University of Edinburgh.

A perfect solution? Decreasing plastic waste while producing a valued food product? The thought of consuming plastic isn’t appetizing, so just eat your ice cream and try to forget about it.

No withdrawals from this bank

Into each life, some milestones must fall: High school graduation, birth of a child, first house, 50th wedding anniversary, COVID-19. One LOTME staffer got really excited – way too excited, actually – when his Nissan Sentra reached 300,000 miles.

Well, there are milestones, and then there are milestones. “1,000 Reasons for Hope” is a report celebrating the first 1,000 brains donated to the VA-BU-CLF Brain Bank. For those of you keeping score at home, that would be the Department of Veterans Affairs, Boston University, and the Concussion Legacy Foundation.

The Brain Bank, created in 2008 to study concussions and chronic traumatic encephalopathy, is the brainchild – yes, we went there – of Chris Nowinski, PhD, a former professional wrestler, and Ann McKee, MD, an expert on neurogenerative disease. “Our discoveries have already inspired changes to sports that will prevent many future cases of CTE in the next generation of athletes,” Dr. Nowinski, the CEO of CLF, said in a written statement.

Data from the first thousand brains show that 706 men, including 305 former NFL players, had football as their primary exposure to head impacts. Women were underrepresented, making up only 2.8% of brain donations, so recruiting females is a priority. Anyone interested in pledging can go to PledgeMyBrain.org or call 617-992-0615 for the 24-hour emergency donation pager.

LOTME wanted to help, so we called the Brain Bank to find out about donating. They asked a few questions and we told them what we do for a living. “Oh, you’re with LOTME? Yeah, we’ve … um, seen that before. It’s, um … funny. Can we put you on hold?” We’re starting to get a little sick of the on-hold music by now.

Goodbye stress, goodbye gray hair

Last year was a doozy, so it wouldn’t be too surprising if we all had a few new gray strands in our hair. But what if we told you that you don’t need to start dying them or plucking them out? What if they could magically go back to the way they were? Well, it may be possible, sans magic and sans stress.

Investigators recently discovered that the age-old belief that stress will permanently turn your hair gray may not be true after all. There’s a strong possibility that it could turn back to its original color once the stressful agent is eliminated.

“Understanding the mechanisms that allow ‘old’ gray hairs to return to their ‘young’ pigmented states could yield new clues about the malleability of human aging in general and how it is influenced by stress,” said senior author Martin Picard, PhD, of Columbia University, New York.

For the study, 14 volunteers were asked to keep a stress diary and review their levels of stress throughout the week. The researchers used a new method of viewing and capturing the images of tiny parts of the hairs to see how much graying took place in each part of the strand. And what they found – some strands naturally turning back to the original color – had never been documented before.

How did it happen? Our good friend the mitochondria. We haven’t really heard that word since eighth-grade biology, but it’s actually the key link between stress hormones and hair pigmentation. Think of them as little radars picking up all different kinds of signals in your body, like mental/emotional stress. They get a big enough alert and they’re going to react, thus gray hair.

So that’s all it takes? Cut the stress and a full head of gray can go back to brown? Not exactly. The researchers said there may be a “threshold because of biological age and other factors.” They believe hair in middle age sits near that threshold: Stress can easily push it over into gray, and it could potentially go back once the stress is gone. But if you’ve been rocking the salt and pepper or silver fox for a number of years and are looking for change, you might want to just eliminate the stress and pick up a bottle of dye.

One small step for squid

Space does a number on the human body. Forget the obvious like going for a walk outside without a spacesuit, or even the well-known risks like the degradation of bone in microgravity; there are numerous smaller but still important changes to the body during spaceflight, like the disruption of the symbiotic relationship between gut bacteria and the human body. This causes the immune system to lose the ability to recognize threats, and illnesses spread more easily.

Naturally, if astronauts are going to undertake years-long journeys to Mars and beyond, a thorough understanding of this disturbance is necessary, and that’s why NASA has sent a bunch of squid to the International Space Station.

When it comes to animal studies, squid aren’t the usual culprits, but there’s a reason NASA chose calamari over the alternatives: The Hawaiian bobtail squid has a symbiotic relationship with bacteria that regulate their bioluminescence in much the same way that we have a symbiotic relationship with our gut bacteria, but the squid is a much simpler animal. If the bioluminescence-regulating bacteria are disturbed during their time in space, it will be much easier to figure out what’s going wrong.

The experiment is ongoing, but we should salute the brave squid who have taken a giant leap for squidkind. Though if NASA didn’t send them up in a giant bubble, we’re going to be very disappointed.

Less plastic, more vanilla

Have you been racked by guilt over the number of plastic water bottles you use? What about the amount of ice cream you eat? Well, this one’s for you.

Plastic isn’t the first thing you think about when you open up a pint of vanilla ice cream and catch the sweet, spicy vanilla scent, or when you smell those fresh vanilla scones coming out of the oven at the coffee shop, but a new study shows that the flavor of vanilla can come from water bottles.

Here’s the deal. A compound called vanillin is responsible for the scent of vanilla, and it can come naturally from the bean or it can be made synthetically. Believe it or not, 85% of vanillin is made synthetically from fossil fuels!

We’ve definitely grown accustomed to our favorite vanilla scents, foods, and cosmetics. In 2018, the global demand for vanillin was about 40,800 tons and is expected to grow to 65,000 tons by 2025, which far exceeds the supply of natural vanilla.

So what can we do? Well, we can use genetically engineered bacteria to turn plastic water bottles into vanillin, according to a study published in the journal Green Chemistry.

The plastic can be broken down into terephthalic acid, which is very similar, chemically speaking, to vanillin. Similar enough that a bit of bioengineering produced Escherichia coli that could convert the acid into the tasty treat, according to researchers at the University of Edinburgh.

A perfect solution? Decreasing plastic waste while producing a valued food product? The thought of consuming plastic isn’t appetizing, so just eat your ice cream and try to forget about it.

Scaly beard rash

Waxy loose scale with associated erythema on the face and scalp is a classic sign of seborrheic dermatitis (SD).

SD is caused by inflammation related to the presence of Malassezia, which proliferates on sebum-rich areas of skin. Malassezia is normally present on the skin, but some individuals have a heightened sensitivity to it, leading to erythema and scale. It is prudent to examine the scalp, nasolabial folds, and around the ears where it often occurs concomitantly.

There are multiple topical and systemic options that treat the fungal involvement, calm the subsequent inflammation, and reduce the scale (1). Topical azole antifungals are effective for reducing the amount of Malassezia present. Topical steroids work well to reduce the erythema. Fortunately, low-potency steroids, including hydrocortisone and desonide, are adequate. This is important since SD frequently involves the face and higher-potency steroids can cause skin atrophy or rebound erythema.

Salicylic acid products exfoliate the scale and topical tar products suppress the scale, both leading to clinical improvement. Sunlight and narrowband UVB light therapy are also effective treatments. As was true with this patient, SD often improves during the summer months (when there is more sunlight) and when patients shave, as this allows for additional sun exposure to the skin.

The patient in this case was told to use ketoconazole shampoo for his scalp, beard, and mustache. He was instructed to use it at least 3 times per week, applying it to the scalp as the first part of his bathing routine and then waiting until the end to rinse it off. This technique maximizes the antifungal shampoo’s contact time on the skin. He was also given a prescription for ketoconazole cream to apply twice daily to the areas of facial erythema and scale. He was counseled that shaving his beard and mustache might help reduce the SD in those areas.

Photo and text courtesy of Daniel Stulberg, MD, FAAFP, Department of Family and Community Medicine, University of New Mexico School of Medicine, Albuquerque

1. Borda LJ, Perper M, Keri JE. Treatment of seborrheic dermatitis: a comprehensive review. J Dermatolog Treat. 2019;30:158-169. doi: 10.1080/09546634.2018.1473554

Performance matters in adenoma detection

Low adenoma detection rates (ADRs) were associated with a greater risk of death in colorectal cancer (CRC) patients, especially among those with high-risk adenomas, based on a review of more than 250,000 colonoscopies.

“Both performance quality of the endoscopist as well as specific characteristics of resected adenomas at colonoscopy are associated with colorectal cancer mortality,” but the impact of these combined factors on colorectal cancer mortality has not been examined on a large scale, according to Elisabeth A. Waldmann, MD, of the Medical University of Vienna and colleagues.

In a study published in Clinical Gastroenterology & Hepatology, the researchers reviewed 259,885 colonoscopies performed by 361 endoscopists. Over an average follow-up period of 59 months, 165 CRC-related deaths occurred.

Across all risk groups, CRC mortality was higher among patients whose colonoscopies were performed by endoscopists with an ADR of less than 25%, although this was not statistically significant in all groups.

The researchers then stratified patients into those with a negative colonoscopy, those with low-risk adenomas (one to two adenomas less than 10 mm), and those with high-risk adenomas (advanced adenomas or at least three adenomas), with the negative colonoscopy group used as the reference group for comparisons. The average age of the patients was 61 years, and approximately half were women.

Endoscopists were classified as having an ADR of less than 25% or an ADR of 25% or higher.
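To make the two classifications concrete, here is a minimal Python sketch; it is not the researchers' code. It assumes that ADR is the share of an endoscopist's colonoscopies in which at least one adenoma was found (the usual definition, which the article does not spell out). The function names and the `advanced` flag are hypothetical, and the article does not say how an advanced adenoma was determined, so that judgment is left to the caller.

```python
ADR_THRESHOLD = 0.25  # the 25% cutoff reported in the article


def adenoma_detection_rate(with_adenoma: int, total: int) -> float:
    """Share of colonoscopies that found at least one adenoma (assumed definition)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return with_adenoma / total


def adr_group(adr: float) -> str:
    """Place an endoscopist in one of the two ADR groups used in the study."""
    return "ADR >= 25%" if adr >= ADR_THRESHOLD else "ADR < 25%"


def patient_risk_group(n_adenomas: int, advanced: bool) -> str:
    """Sort a patient into the three groups described in the article.

    `advanced` is a hypothetical caller-supplied flag; adenomas of 10 mm or
    more are assumed to be flagged as advanced by the caller.
    """
    if n_adenomas == 0:
        return "negative"
    if advanced or n_adenomas >= 3:
        return "high-risk"
    return "low-risk"  # one to two adenomas, none advanced


if __name__ == "__main__":
    adr = adenoma_detection_rate(224, 1000)  # illustrative counts only
    print(adr_group(adr), patient_risk_group(2, advanced=False))
```

The counts in the usage line are made up for illustration; they simply mirror the 22.4% average ADR reported in the study.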

Among individuals with low-risk adenomas, CRC mortality was similar whether the endoscopist’s ADR was less than 25% or 25% or higher (adjusted hazard ratios, 1.25 and 1.22, respectively). CRC mortality also remained unaffected by ADR in patients with negative colonoscopies (aHR, 1.27).

By contrast, individuals with high-risk adenomas had a significantly increased risk of CRC death if their colonoscopy was performed by an endoscopist with an ADR of less than 25%, compared with those whose endoscopists had ADRs of 25% or higher (aHR, 2.25 and 1.35, respectively).

“Our study demonstrated that adding ADR to the risk stratification model improved risk assessment in all risk groups,” the researchers noted. “Importantly, stratification improved most for individuals with high-risk adenomas, the group demanding most resources in health care systems.”

The study findings were limited by several factors, including the focus on screening and surveillance colonoscopies to the exclusion of diagnostic colonoscopies and the inability to adjust for comorbidities and lifestyle factors that might affect CRC mortality, the researchers noted. The 22.4% average ADR in the current study was low, compared with other studies, and could be a limitation as well, although previous guidelines recommend a target ADR of at least 20%.

“Despite the extensive body of literature supporting the importance of ADR in terms of CRC prevention, its implementation into clinical surveillance is challenging,” as physicians under pressure might try to game their ADRs, the researchers wrote.

The findings support the value of mandatory assessment of performance quality, the researchers added. However, “because of the potential possibility of gaming one’s ADR one conclusion drawn by the study results should be that endoscopists’ quality parameters should be monitored and those not meeting the standards trained to improve rather than requiring minimum ADRs as premise for offering screening colonoscopy.”

Improve performance, but don’t discount patient factors

The study is important at this time because colorectal cancer is the third-leading cause of cancer death in the United States, Atsushi Sakuraba, MD, of the University of Chicago said in an interview.

“Screening colonoscopy has been shown to decrease CRC mortality, but factors influencing outcomes after screening colonoscopies remain to be determined,” he said.

“It was expected that high-quality colonoscopy performed by an endoscopist with ADR of 25% or greater was associated with a lower risk for CRC death,” Dr. Sakuraba said. “The strength of the study is that the authors demonstrated that high-quality colonoscopy was more important in individuals with high-risk adenomas, such as advanced adenomas or at least three adenomas.”

The study findings have implications for practice in that they show the importance of monitoring performance quality in screening colonoscopy, Dr. Sakuraba said, “especially when patients have high-risk adenomas.” However, “the authors included only age and sex as variables, but the influence of other factors, such as smoking, [body mass index], and race, need to be studied.”

The researchers and Dr. Sakuraba had no financial conflicts to disclose.

Help your patients understand colorectal cancer prevention and screening options by sharing AGA’s patient education from the GI Patient Center: www.gastro.org/CRC.

FROM CLINICAL GASTROENTEROLOGY & HEPATOLOGY

HMAs benefit children with relapsed/refractory AML

Hypomethylating agents are generally considered to be agents of choice for older adults with acute myeloid leukemia who cannot tolerate the rigors of more intensive therapies, but HMAs also can serve as a bridge to transplant for children and young adults with relapsed or refractory acute myeloid leukemia.

That’s according to Himalee S. Sabnis, MD, MSc, and colleagues at Emory University and the Aflac Cancer and Blood Disorders Center at Children’s Healthcare of Atlanta.

In a scientific poster presented during the annual meeting of the American Society of Pediatric Hematology/Oncology, the investigators reported results of a retrospective study of HMA use in patients with relapsed or refractory pediatric AML treated in their center.

Curative intent and palliation

They identified 25 patients (15 boys) with a median age of 8.3 years (range 1.4 to 21 years) with relapsed/refractory AML who received HMAs with curative intent prior to hematopoietic stem cell transplant (HSCT), for palliation, or in combination with donor leukocyte infusion (DLI).

Of the 21 patients with relapsed disease, 16 were in first relapse and 5 were in second relapse or greater. Four of the patients had primary refractory disease. The cytogenetic and molecular features were KMT2A rearrangements in six patients, monosomy 7/deletion 7q in four patients, 8;21 translocation in three patients, and FLT3-ITD mutations in four patients.

The patients received a median of 5.3 HMA cycles each. Of the 133 total HMA cycles, 87 were with azacitidine, and 46 were with decitabine.

HMAs were used as monotherapy in 62% of cycles, and in combination with other therapies in 38%. Of the combinations, 16 were with donor leukocyte infusion, and 9 were with gemtuzumab ozogamicin (Mylotarg).

Of the 13 patients for whom HMAs were used as part of a treatment plan with curative intent, 5 proceeded to HSCT, and 8 did not. Of the 5 patients who underwent transplant, 1 died from transplant-related causes, and 4 were alive post transplant. Of the 8 patients who did not undergo transplant, 1 had chimeric antigen receptor T-cell (CAR T) therapy, and 7 experienced disease progression.

The mean duration of palliative care was 144 days, with patients receiving from one to nine cycles with an HMA, and no treatment interruptions due to toxicity.

Of 5 patients who received donor leukocyte infusions, 3 reached minimal residual disease negativity; all 3 of these patients had late relapses but remained long-term survivors, the investigators reported.

They concluded that “hypomethylating agents can be used effectively as a bridge to transplantation in relapsed and refractory AML with gemtuzumab ozogamicin being the most common agent for combination therapy. Palliation with HMAs is associated with low toxicity and high tolerability in relapsed/refractory AML. Use of HMAs with DLI can induce sustained remissions in some patients.”

The authors propose prospective clinical trials using HMAs in the relapsed/refractory pediatric AML setting in combination with gemtuzumab ozogamicin, alternative targeted agents, and chemotherapy.

HMAs in treatment-related AML

Shilpa Shahani, MD, a pediatric oncologist and assistant clinical professor of pediatrics at City of Hope in Duarte, Calif., who was not involved in the study, has experience administering HMAs primarily in the adolescent and young adult population with AML.

“Azacitidine and decitabine are good for treatment-related leukemias,” she said in an interview. “They can be used otherwise for people who have relapsed disease and are trying to navigate other options.”

Although they are not standard first-line agents in younger patients, HMAs can play a useful role in therapy for relapsed or refractory disease, she said.

The authors and Dr. Shahani reported having no conflicts of interest to disclose.

Hypomethylating agents are generally considered to be agents of choice for older adults with acute myeloid leukemia who cannot tolerate the rigors of more intensive therapies, but HMAs also can serve as a bridge to transplant for children and young adults with relapsed or refractory acute myeloid leukemia.

That’s according to Himalee S. Sabnis, MD, MSc and colleagues at Emory University and the Aflac Cancer and Blood Disorders Center at Children’s Healthcare of Atlanta.

In a scientific poster presented during the annual meeting of the American Society of Pediatric Hematology/Oncology, the investigators reported results of a retrospective study of HMA use in patients with relapsed or refractory pediatric AML treated in their center.

Curative intent and palliation

They identified 25 patients (15 boys) with a median age of 8.3 years (range 1.4 to 21 years) with relapsed/refractory AML who received HMAs for curative intent prior to hematopoietic stem cell transplant (HSCT), palliation, or in combination with donor leukocyte infusion (DLI).

Of the 21 patients with relapsed disease, 16 were in first relapse and 5 were in second relapse or greater. Four of the patients had primary refractory disease. The cytogenetic and molecular features were KMT2A rearrangements in six patients, monosomy 7/deletion 7q in four patients, 8;21 translocation in three patients, and FLT3-ITD mutations in four patients.

The patients received a median of 5.3 HMA cycles each. Of the 133 total HMA cycles, 87 were with azacitidine, and 46 were with decitabine.

HMAs were used as monotherapy in 62% of cycles, and in combination with other therapies in 38%. Of the combinations, 16 were with donor leukocyte infusion, and 9 were with gemtuzumab ozogamicin (Mylotarg).

Of the 13 patients for whom HMAs were used as part of a treatment plan with curative intent, 5 proceeded to HSCT, and 8 did not. Of the 5 patients who underwent transplant, 1 died from transplant-related causes, and 4 were alive post transplant. Of the 8 patients who did not undergo transplant, 1 had chimeric antigen receptor T-cell (CAR T) therapy, and 7 experienced disease progression.

The mean duration of palliative care was 144 days, with patients receiving from one to nine cycles with an HMA, and no treatment interruptions due to toxicity.

Of 5 patients who received donor leukocyte infusions, 3 reached minimal residual disease negativity; all 3 of these patients had late relapses but remained long-term survivors, the investigators reported.

They concluded that “hypomethylating agents can be used effectively as a bridge to transplantation in relapsed and refractory AML with gemtuzumab ozogamicin being the most common agent for combination therapy. Palliation with HMAs is associated with low toxicity and high tolerability in relapsed/refractory AML. Use of HMAs with DLI can induce sustained remissions in some patients.”

The authors propose prospective clinical trials using HMAs in the relapsed/refractory pediatric AML setting in combination with gemtuzumab ozogamicin, alternative targeted agents, and chemotherapy.

HMAs in treatment-related AML

Shilpa Shahani, MD, a pediatric oncologist and assistant clinical professor of pediatrics at City of Hope in Duarte, Calif., who was not involved in the study, has experience administering HMAs primarily in the adolescent and young adult population with AML.

“Azacitidine and decitabine are good for treatment-related leukemias,” she said in an interview. “They can be used otherwise for people who have relapsed disease and are trying to navigate other options.”

Although they are not standard first-line agents in younger patients, HMAs can play a useful role in therapy for relapsed or refractory disease, she said.

The authors and Dr. Shahani reported having no conflicts of interest to disclose.

FROM THE 2021 ASPHO CONFERENCE

Restricted dietary acid load may reduce odds of migraine

Key clinical point: High dietary acid load was associated with higher odds of migraine. Restricting dietary acid load could therefore reduce the odds of migraine in susceptible patients.

Major finding: The odds of migraine were higher among individuals in the highest vs. lowest tertile of dietary acid load measures, including the potential renal acid load score (odds ratio [OR], 7.208; 95% confidence interval [CI], 3.33-15.55), the net endogenous acid production score (OR, 4.10; 95% CI, 1.92-8.77), and the protein/potassium ratio (OR, 4.12; 95% CI, 1.93-8.81; all P for trend less than .001).

Study details: Findings are from a case-control study of 1,096 participants including those with migraine (n=514) and healthy volunteers (n=582).

Disclosures: The study was supported by the Iranian Centre of Neurological Research, Neuroscience Institute. All authors declared no conflicts of interest.

Source: Mousavi M et al. Neurol Ther. 2021 Apr 24. doi: 10.1007/s40120-021-00247-2.
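For readers who want to sanity-check the reported intervals, the short Python sketch below uses only the three odds ratios and confidence limits quoted above. It assumes, as is common but not stated in the summary, that each interval is a Wald interval that is symmetric on the log scale with a critical value of 1.96. Under that assumption the geometric mean of the limits should land close to the reported odds ratio, and the standard error of the log odds ratio can be backed out from the interval width. The function name `log_scale_summary` is made up for this illustration.

```python
import math


def log_scale_summary(lower, upper, z=1.96):
    """Return (geometric midpoint, SE of log OR) for a CI assumed symmetric on the log scale."""
    log_lower, log_upper = math.log(lower), math.log(upper)
    midpoint = math.exp((log_lower + log_upper) / 2)  # geometric mean of the limits
    standard_error = (log_upper - log_lower) / (2 * z)  # half-width divided by z
    return midpoint, standard_error


# Values copied from the "Major finding" line: (reported OR, lower limit, upper limit).
reported = {
    "potential renal acid load": (7.208, 3.33, 15.55),
    "net endogenous acid production": (4.10, 1.92, 8.77),
    "protein/potassium ratio": (4.12, 1.93, 8.81),
}

for name, (odds_ratio, lower, upper) in reported.items():
    midpoint, standard_error = log_scale_summary(lower, upper)
    print(f"{name}: reported OR {odds_ratio}, "
          f"log-scale midpoint {midpoint:.2f}, SE(log OR) {standard_error:.3f}")
```

The wide intervals translate into fairly large standard errors on the log scale, which is worth keeping in mind when comparing the three measures.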

Migraine linked to increased hypertension risk in menopausal women

Key clinical point: Menopausal women with migraine are at a higher risk for incident hypertension.

Major finding: Migraine was associated with an increased risk for incident hypertension (hazard ratio for migraine, 1.29; 95% confidence interval, 1.24-1.35) in menopausal women.

Study details: Findings are from a longitudinal cohort study of 56,202 menopausal women free of hypertension or cardiovascular disease at the age of menopause who participated in the French E3N cohort.

Disclosures: The authors reported no targeted funding. CJ MacDonald and T Kurth received funding and/or honoraria from multiple sources. Other authors had no disclosures relevant to the manuscript.

Source: MacDonald CJ et al. Neurology. 2021 Apr 21. doi: 10.1212/WNL.0000000000011986.

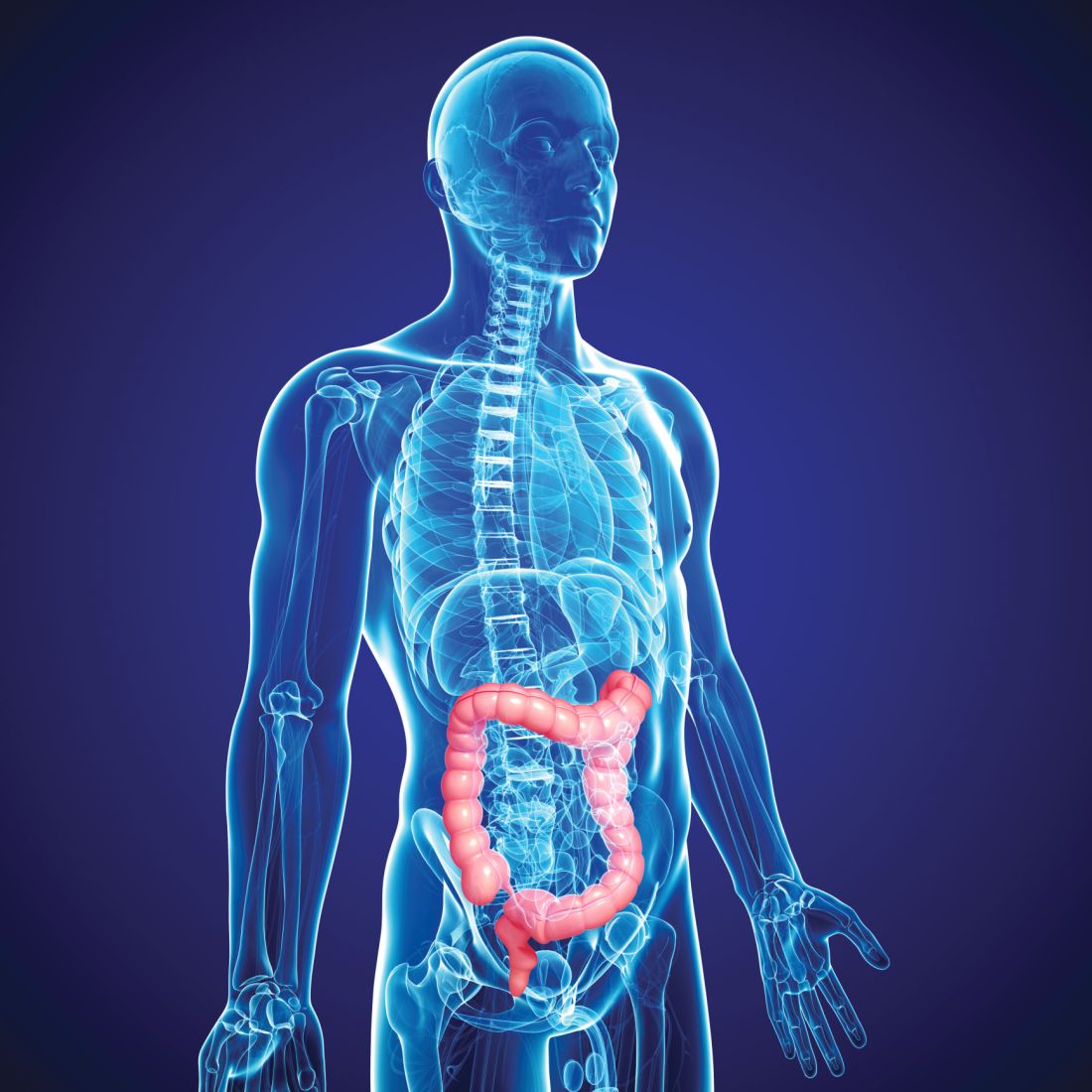

Algorithms for Prediction of Clinical Deterioration on the General Wards: A Scoping Review

The early identification of clinical deterioration among adult hospitalized patients remains a challenge.1 Delayed identification is associated with increased morbidity and mortality, unplanned intensive care unit (ICU) admissions, prolonged hospitalization, and higher costs.2,3 Earlier detection of deterioration using predictive algorithms of vital sign monitoring might avoid these negative outcomes.4 In this scoping review, we summarize current algorithms and their evidence.

Vital signs provide the backbone for detecting clinical deterioration. Early warning scores (EWS) and outreach protocols were developed to bring structure to the assessment of vital signs. Most EWS claim to predict clinical end points such as unplanned ICU admission up to 24 hours in advance.5,6 Reviews of EWS showed a positive trend toward reduced length of stay and mortality. However, conclusions about general efficacy could not be generated because of case heterogeneity and methodologic shortcomings.4,7 Continuous automated vital sign monitoring of patients on the general ward can now be accomplished with wearable devices.8 The first reports on continuous monitoring showed earlier detection of deterioration but not improved clinical end points.4,9 Since then, different reports on continuous monitoring have shown positive effects but concluded that unprocessed monitoring data per se falls short of generating actionable alarms.4,10,11
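To make the idea of an aggregate early warning score concrete, the following Python sketch assigns points to each vital sign according to how far it falls outside a normal band and then sums the points. Every name and number in it (`ILLUSTRATIVE_BANDS`, `MAX_POINTS`, `ESCALATION_THRESHOLD`, and all cutoffs) is a placeholder invented for illustration. It does not reproduce any published or validated score and should not be read as clinical guidance.

```python
# Hypothetical bands, ordered from narrowest to widest: (low, high, points).
# The cutoffs are placeholders for illustration only, not a validated clinical score.
ILLUSTRATIVE_BANDS = {
    "heart_rate_bpm": [(50, 100, 0), (40, 120, 1), (30, 140, 2)],
    "resp_rate_per_min": [(10, 20, 0), (8, 24, 1), (6, 30, 2)],
    "spo2_percent": [(95, 100, 0), (92, 100, 1), (88, 100, 2)],
}
MAX_POINTS = 3            # assigned when a value falls outside every band
ESCALATION_THRESHOLD = 5  # hypothetical trigger for calling an outreach team


def points_for(value, bands):
    """Return the points of the first (narrowest) band that contains the value."""
    for low, high, points in bands:
        if low <= value <= high:
            return points
    return MAX_POINTS


def aggregate_score(vitals):
    """Sum the per-parameter points for one set of vital sign measurements."""
    return sum(points_for(vitals[name], bands)
               for name, bands in ILLUSTRATIVE_BANDS.items())


def should_escalate(vitals):
    """Flag the observation when the aggregate score reaches the threshold."""
    return aggregate_score(vitals) >= ESCALATION_THRESHOLD


stable = {"heart_rate_bpm": 80, "resp_rate_per_min": 16, "spo2_percent": 98}
unwell = {"heart_rate_bpm": 130, "resp_rate_per_min": 26, "spo2_percent": 90}
print(aggregate_score(stable), should_escalate(stable))
print(aggregate_score(unwell), should_escalate(unwell))
```

A threshold rule of this kind looks at one set of observations at a time, which is one reason it is natural to ask whether algorithms that learn from trends in continuously monitored data could do better.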

Predictive algorithms, which often use artificial intelligence (AI), are increasingly employed to recognize complex patterns or abnormalities and support predictions of events in big data sets.12,13 Especially when combined with continuous vital sign monitoring, predictive algorithms have the potential to expedite detection of clinical deterioration and improve patient outcomes. Predictive algorithms using vital signs in the ICU have shown promising results.14 The impact of predictive algorithms on the general wards, however, is unclear.