Unhealthy Alcohol Use May Increase in the Years After Bariatric Surgery

After bariatric surgery, patients have a “much higher” risk of unhealthy alcohol use, even if they had no documented unhealthy drinking at baseline, according to researchers from the Durham Veterans Affairs (VA) Medical Center in North Carolina.

Based on their findings, the researchers estimate that for every 21 patients who undergo laparoscopic sleeve gastrectomy (LSG) or Roux-en-Y gastric bypass (RYGB), on average one from each group will develop unhealthy alcohol use.

The researchers collected electronic health record (EHR) data from 2,608 veterans who underwent LSG or RYGB at any bariatric center in the VA health system between 2008 and 2016, and compared that group with a nonsurgical control group.

Nearly all the patients screened negative for unhealthy alcohol use in the 2-year baseline period; however, their mean AUDIT-C scores and the probability of unhealthy alcohol use both increased significantly 3 to 8 years after surgery when compared with the control group. Eight years after an LSG, the probability was 3.4 percentage points higher (7.9% vs 4.5%). Eight years after an RYGB, the probability was 9.2% vs 4.4%, a difference of 4.8 percentage points.
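The differences quoted above follow directly from the reported probabilities. A minimal Python sketch of that arithmetic, using only the figures in this article (the function name `risk_difference` is illustrative, not something from the study):

```python
def risk_difference(surgical_pct: float, control_pct: float) -> float:
    """Absolute difference between two probabilities, in percentage points."""
    return round(surgical_pct - control_pct, 1)

# Probability of unhealthy alcohol use 8 years after surgery
# (surgical group, control group), as reported in the article.
reported = {
    "LSG": (7.9, 4.5),
    "RYGB": (9.2, 4.4),
}

differences = {name: risk_difference(s, c) for name, (s, c) in reported.items()}
for name, diff in differences.items():
    print(f"{name}: {diff} percentage points higher than controls")
```

Expressing the gap in percentage points keeps it distinct from a relative increase, which would be considerably larger for both procedures.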

The estimated prevalence of unhealthy alcohol use 8 years after bariatric surgery was higher for patients with unhealthy drinking at baseline (30%-40%) than it was for those without baseline unhealthy drinking (5%-10%). However, the probability was significantly higher for patients who had an RYGB than it was for nonsurgical control patients after 8 years, which might reflect changes in alcohol pharmacokinetics, the researchers say.

Not drinking alcohol is the safest option after bariatric surgery, the researchers say, given that blood alcohol concentration peaks at higher levels after the operation. They advise monitoring patients long-term, using the three-item AUDIT-C scale. And, importantly, they advise cautioning patients undergoing bariatric surgery that drinking alcohol can escalate, even if they have had no history of drinking above recommended limits.

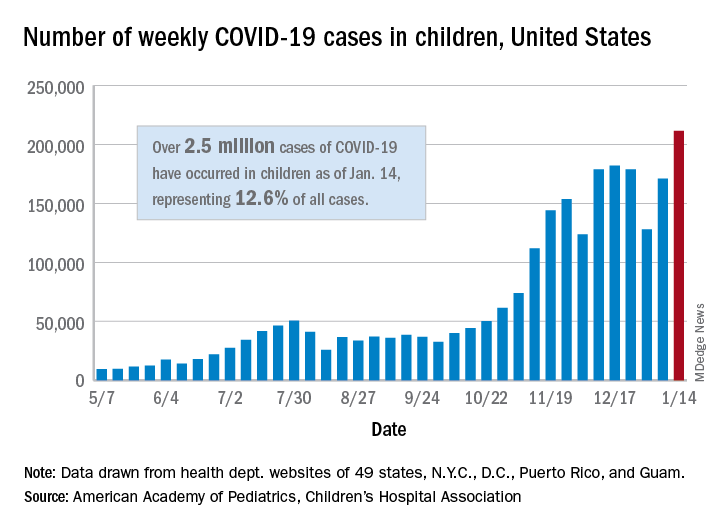

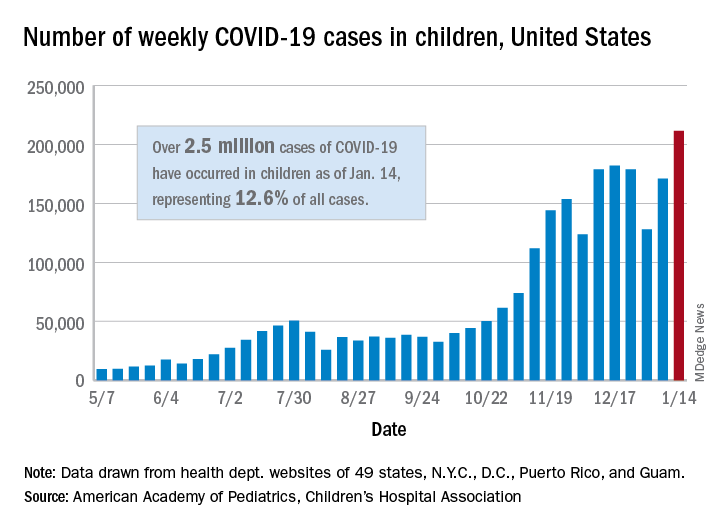

COVID-19 in children: Latest weekly increase is largest yet

The latest weekly increase in COVID-19 cases among children is the largest yet, according to a report from the American Academy of Pediatrics and the Children’s Hospital Association.

There were 211,466 new cases reported in children during the week of Jan. 8-14, topping the previous high (Dec. 11-17) by almost 30,000. Those new cases bring the total for the pandemic to over 2.5 million children infected with the coronavirus, which represents 12.6% of all reported cases, the AAP and the CHA said Jan. 19 in their weekly COVID-19 report.

The rise in cases also brought an increase in the proportion reported among children. The week before (Jan. 1-7), cases in children were 12.9% of all cases reported, but the most recent week saw that number rise to 14.5% of all cases, the highest it’s been since early October, based on data collected from the health department websites of 49 states (excluding New York), the District of Columbia, New York City, Puerto Rico, and Guam.

The corresponding figures for severe illness continue to be low: Children represent 1.8% of all hospitalizations from COVID-19 in 24 states and New York City and 0.06% of all deaths in 43 states and New York City. Three deaths were reported for the week of Jan. 8-14, making for a total of 191 since the pandemic started, the AAP and CHA said in their report.

Among the states, California has the most overall cases at just over 350,000, Wyoming has the highest proportion of cases in children (20.3%), and North Dakota has the highest rate of infection (over 8,100 per 100,000 children). The infection rate for the nation is now above 3,300 per 100,000 children, and 11 states reported rates over 5,000, according to the AAP and the CHA.

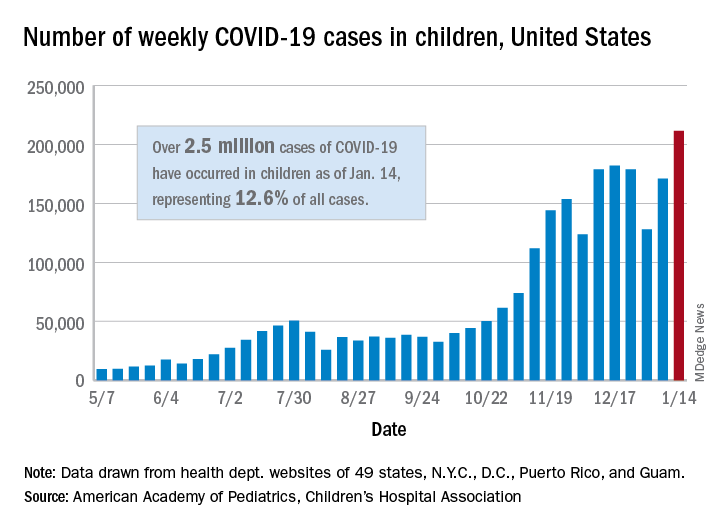

Gut microbiome may predict nivolumab efficacy in gastric cancer

Researchers have demonstrated bacterial invasion of the epithelial cell pathway in the gut microbiome and suggest that this could potentially become a novel biomarker.

“In addition, we found gastric cancer–specific gut microbiome predictive of responses to immune checkpoint inhibitors,” said study author Yu Sunakawa, MD, PhD, an associate professor in the department of clinical oncology at St. Marianna University, Kawasaki, Japan.

Dr. Sunakawa presented the study’s results at the 2021 Gastrointestinal Cancers Symposium.

The gut microbiome holds great interest as a potential biomarker for response. Previous studies suggested that it may hold the key to immunotherapy responses. The concept has been demonstrated in several studies involving patients with melanoma, but this is the first study in patients with gastric cancer.

Nivolumab monotherapy has been shown to provide a survival benefit with a manageable safety profile in previously treated patients with gastric cancer or gastroesophageal junction (GEJ) cancer, Dr. Sunakawa noted. However, fewer than half of patients responded to therapy.

“The disease control rate was about 40%, and many patients did not experience any tumor degradation,” he said. “About 60% of the patients did not respond to nivolumab as a late-line therapy.”

In the observational/translational DELIVER trial, investigators enrolled 501 patients with recurrent or metastatic adenocarcinoma of the stomach or GEJ. The patients were recruited from 50 sites in Japan.

The primary endpoint was the relationship between the genomic pathway in the gut microbiome and the efficacy of nivolumab, defined by whether or not there was progressive disease at the first evaluation, as determined in accordance with Response Evaluation Criteria in Solid Tumors (RECIST).

Genomic data were measured by shotgun genome sequencing at a central laboratory. Biomarkers were analyzed by Wilcoxon rank sum test in the first 200 patients, who constituted the training cohort. The top 30 biomarker candidates were validated in the last 300 patients (the validation cohort) using the Bonferroni method.

Clinical and genomic data were available for 437 patients (87%). Of this group, 180 constituted the training cohort, and 257, the validation cohort.

The phylogenetic composition of common bacterial taxa was similar for both cohorts.

In the training cohort, 62.2% of patients had progressive disease, as did 53.2% in the validation cohort. The microbiome was more diverse among the patients who did not have progressive disease than among those who did have progressive disease.

The authors noted that, although there was no statistically significant pathway to be validated for a primary endpoint using the Bonferroni method, bacterial invasion of epithelial cells in the KEGG pathway was associated with clinical outcomes in both the training cohort (P = .057) and the validation cohort (P = .014). However, these pathways were not significantly associated with progressive disease after Bonferroni correction, a conservative test that adjusts for multiple comparisons.
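To see why a P value of .014 can fall short after Bonferroni correction, consider a minimal Python sketch. It assumes a conventional family-wise significance level of 0.05, which the article does not state, and it assumes the correction was applied across the 30 candidate biomarkers carried into validation.

```python
ALPHA = 0.05        # assumed family-wise significance level (not given in the article)
N_COMPARISONS = 30  # top biomarker candidates tested in the validation cohort

def bonferroni_threshold(alpha: float, n_comparisons: int) -> float:
    """Per-test significance threshold under Bonferroni correction."""
    return alpha / n_comparisons

def is_significant(p_value: float, alpha: float = ALPHA, n: int = N_COMPARISONS) -> bool:
    """True if the P value clears the Bonferroni-corrected threshold."""
    return p_value < bonferroni_threshold(alpha, n)

threshold = bonferroni_threshold(ALPHA, N_COMPARISONS)
print(f"Per-test threshold: {threshold:.4f}")
print("Validation cohort P = .014 significant?", is_significant(0.014))
```

Under these assumptions each individual test must reach roughly P < .0017, so a nominal P of .014 does not survive the correction.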

An exploratory genus-level analysis showed that Odoribacter and Veillonella species were associated with tumor response to nivolumab in both cohorts.

Dr. Sunakawa noted that biomarker analyses are ongoing. The researchers are investigating the relationships between microbiome and survival times, as well as other endpoints.

Still some gaps

In a discussion of the study, Jonathan Yeung, MD, PhD, of Princess Margaret Cancer Center, Toronto, congratulated the investigators on their study, noting that “the logistical hurdles must have been tremendous to obtain these data.”

However, Dr. Yeung pointed out some limitations and gaps in the data that were presented. For example, he found that the ratio of the training set to the validation set was unusual. “The training set is usually larger and usually an 80/20 ratio,” he said. “In their design, the validation set is larger, and I’m quite curious about their rationale.

“The conclusion of the study is that a more diverse microbiome was observed in patients with a tumor response than in those without a response,” he continued, “but they don’t actually show the statistical test used to make this conclusion. There is considerable overlap between the groups, and more compelling data are needed to make that conclusion.”

Another limitation was the marked imbalance in the number of patients whose condition responded to nivolumab in comparison with those whose condition did not (20 vs. 417 patients). This could have affected the statistical power of the study.

But overall, Dr. Yeung congratulated the authors for presenting a very impressive dataset. “The preliminary data are very interesting, and I look forward to the final results,” he said.

The study was funded by Ono Pharmaceutical and Bristol-Myers Squibb, which markets nivolumab. Dr. Sunakawa has received honoraria from Bayer Yakuhin, Bristol-Myers Squibb Japan, Chugai, Kyowa Hakko Kirin, Lilly Japan, Nippon Kayaku, Sanofi, Taiho, Takeda, and Yakult Honsha. He has held a consulting or advisory role for Bristol-Myers Squibb Japan, Daiichi Sankyo, and Takeda and has received research funding from Chugai Pharma, Daiichi Sankyo, Lilly Japan, Sanofi, Taiho Pharmaceutical, and Takeda. The Gastrointestinal Cancers Symposium is sponsored by the American Gastroenterological Association, the American Society of Clinical Oncology, the American Society for Radiation Oncology, and the Society of Surgical Oncology.

A version of this article first appeared on Medscape.com.

Von Willebrand disease guidelines address women’s bleeding concerns

New guidelines issued jointly by four major international hematology groups focus on the management of patients with von Willebrand disease (VWD), the most common bleeding disorder in the world.

The evidence-based guidelines, published in Blood Advances, were developed in collaboration by the American Society of Hematology (ASH), the International Society on Thrombosis and Haemostasis, the National Hemophilia Foundation, and the World Federation of Hemophilia. They outline key recommendations spanning the care of patients with a broad range of therapeutic needs.

“We addressed some of the questions that were most important to the community, but certainly there are a lot of areas that we couldn’t cover,” said coauthor Veronica H. Flood, MD, of the Medical College of Wisconsin in Milwaukee.

The guidelines process began with a survey sent to the von Willebrand disease community, including patients, caregivers, nurses, physicians, and scientists. The respondents were asked to prioritize issues that they felt should be addressed in the guidelines.

“Interestingly, some of the issues were the same between patients and caregivers and physicians, and some were different, but there were obviously some areas that we just couldn’t cover,” she said in an interview.

One of the areas of greatest concern for respondents was bleeding in women, and many of the recommendations include specific considerations for management of gynecologic and obstetric patients, Dr. Flood said.

“We also tried to make the questions applicable to as many patients with von Willebrand disease as possible,” she added.

Some of the questions, such as recommendation 1, regarding prophylaxis, are geared toward management of patients with severe disease, while others, such as recommendations for treatment of menstrual bleeding, are more suited for patients with milder VWD.

All of the recommendations in the guidelines are “conditional” (suggested), due to very low certainty in the evidence of effects, the authors noted.

Prophylaxis

The guidelines suggest long-term prophylaxis for patients with a history of severe and frequent bleeds, with periodic assessment of the need for prophylaxis.

Desmopressin

For patients who may benefit from desmopressin, primarily those with type 1 VWD, and who have a baseline von Willebrand factor (VWF) level below 0.30 IU/mL, the panel conditionally recommends a desmopressin trial, with treatment based on the patient’s results, over not performing a trial and treating with tranexamic acid or factor concentrate. The guidelines also advise against treating with desmopressin in the absence of a trial. In a section of “good practice statements,” the guidelines indicate that desmopressin is generally contraindicated in patients with type 2B VWD because of the risk of thrombocytopenia as a result of increased platelet binding. In addition, desmopressin is generally contraindicated in patients with active cardiovascular disease, patients with seizure disorders, patients younger than 2 years, and patients with type 1C VWD in the setting of surgery.

Antithrombotic therapy

The guideline panelists conditionally recommend antithrombotic therapy with either antiplatelet agents or anticoagulants, with an emphasis on reassessing bleeding risk throughout the course of treatment.

An accompanying good practice statement calls for individualized assessments of risks and benefits of specific antithrombotic therapies by a multidisciplinary team including hematologists, cardiovascular specialists, and the patient.

Major surgery

This section includes a recommendation for targeting both factor VIII and VWF activity levels to a minimum of 0.50 IU/mL for at least 3 days after surgery, and a suggestion against using factor VIII target levels alone.

Minor surgery/invasive procedures

The panelists suggest increasing VWF activity levels to a minimum of 0.50 IU/mL with desmopressin or factor concentrate with the addition of tranexamic acid over raising VWF levels to at least 0.50 IU/mL with desmopressin or factor concentrate alone.

In addition, the panelists suggest “giving tranexamic acid alone over increasing VWF activity levels to a minimum threshold of 0.50 IU/mL with any intervention in patients with type 1 VWD with baseline VWF activity levels of >0.30 IU/mL and a mild bleeding phenotype undergoing minor mucosal procedures.”

Heavy menstrual bleeding

In women with heavy menstrual bleeding who do not plan to conceive, the panel suggests either hormonal therapy (combined hormonal therapy or a levonorgestrel-releasing intrauterine system) or tranexamic acid over desmopressin.

In women who wish to conceive, the panel suggests using tranexamic acid over desmopressin.

Neuraxial anesthesia during labor

For women in labor for whom neuraxial anesthesia is considered, the guidelines suggest targeting a VWF activity level from 0.50 to 1.50 IU/mL over targeting a level above 1.50 IU/mL.

Postpartum management

“The guideline panel suggests the use of tranexamic acid over not using it in women with type 1 VWD or low VWF levels (and this may also apply to types 2 and 3 VWD) during the postpartum period,” the guidelines say.

An accompanying good practice statement says that tranexamic acid can be provided orally or intravenously. The oral dose is 25 mg/kg three times daily for 10-14 days, or longer if blood loss remains heavy.

Dr. Flood said that the guidelines were developed under the assumption that they would apply to care of patients in regions with a high or moderately high degree of clinical resources.

“We recognize that this eliminates a great deal of the globe, and our hope is that ASH and the other sponsoring organizations are going to let us revise this and do a version for lower-resourced settings,” she said.

FROM BLOOD ADVANCES

Topical tranexamic acid for melasma

By addressing the vascular component of melasma, off-label use of oral tranexamic acid has been a beneficial adjunct for this difficult-to-treat condition. In its on-label uses, treating menorrhagia (the oral form) and short-term prophylaxis of bleeding in hemophilia patients undergoing dental procedures (the injectable form), tranexamic acid acts as an antifibrinolytic.

According to a 2018 review article, by inhibiting plasminogen activation, “tranexamic acid mitigates UV radiation–induced melanogenesis and neovascularization,” both of which are exhibited in the clinical manifestations of melasma.1 In addition to inhibiting fibrinolysis, tranexamic acid has direct effects on UV-induced pigmentation, “via its inhibitory effects on UV light–induced plasminogen activator on keratinocytes and [subsequent] plasmin activity,” the article states. “Plasminogen activator induces tyrosinase activity, resulting in increased melanin synthesis. The presence of plasmin [which dissolves clots by degrading fibrin] results in increased production of both arachidonic acid and fibroblast growth factor, which stimulate melanogenesis and neovascularization, respectively.”

With oral use, the risk of clot formation should be weighed, especially in those who smoke or who have a history of blood clots, clotting disorders (such as factor V Leiden), or other hypercoagulability risks.

Topical tranexamic acid used locally mitigates systemic risk and, according to published studies, has been found to be efficacious for hemostasis in knee and hip arthroplasty surgery and for epistaxis. For melasma, however, clinical outcomes with topical treatment have largely not been on par with those of oral tranexamic acid.

In my experience, topical tranexamic acid applied immediately after fractional 1927-nm diode laser treatment not only has been noted by patients to feel soothing, but anecdotally has been found to improve pigmentation.

Moreover, there are now several peer-reviewed studies showing some benefit for treating pigmentation from photodamage or melasma with laser-assisted delivery of topical tranexamic acid. Treatment of these conditions may also benefit from nonablative 1927-nm laser alone.

In one recently published study, 10 female melasma patients, Fitzpatrick skin types II-IV, underwent five full-face low-energy, low-density (power 4-5 W, fluence 2-8 mJ, 2-8 passes) 1927-nm fractional thulium fiber laser treatments.2 Topical tranexamic acid was applied immediately after laser treatment and continued twice daily for 7 days. Seven patients completed the study. Based on the Global Aesthetics Improvement Scale (GAIS) ratings, all seven patients noted improvement at day 180, at which time six of the patients were considered to have improved from baseline, according to the investigator GAIS ratings. Using the Melasma Area Severity Index (MASI) score, the greatest degree of improvement was seen at day 90; there were three recurrences of melasma with worsening of the MASI score between day 90 and day 180.

In a split-face, double-blind, randomized controlled study, 46 patients with recalcitrant melasma and Fitzpatrick skin types III-V received four weekly treatments of full-face fractional 1927-nm thulium laser; immediately after treatment, topical tranexamic acid was applied to one side of the face and normal saline to the other side, under occlusion.3 At 3 months, significant improvements from baseline were seen in Melanin Index (MI) and modified MASI (mMASI) scores for both the sides treated with tranexamic acid and the control sides, with no statistically significant differences between the two. However, at month 6, among the 29 patients available for follow-up, significant differences in MI and mMASI scores from baseline were still evident, with the exception of MI scores on the control sides.

No adverse events from using topical tranexamic acid with laser were noted in either study. Split-face randomized controlled studies of topical tranexamic acid after fractional 1927-nm diode laser, in comparison with fractional 1927-nm thulium laser, would also be notable in this vascular and heat-sensitive condition.

Dr. Wesley and Dr. Talakoub are cocontributors to this column. Dr. Wesley practices dermatology in Beverly Hills, Calif. Dr. Talakoub is in private practice in McLean, Va. This month’s column is by Dr. Wesley. Write to them at [email protected]. They had no relevant disclosures.

References

1. Sheu SL. Cutis. 2018 Feb;101(2):E7-E8.

2. Wang JV et al. J Cosmet Dermatol. 2021 Jan;20(1):105-9.

3. Wanitphakdeedecha R et al. Lasers Med Sci. 2020 Dec;35(9):2015-21.

Schools, COVID-19, and Jan. 6, 2021

The first weeks of 2021 have us considering how best to face compound challenges, and we expect parents will be looking to their pediatricians for guidance. There are daily stories of the COVID-19 death toll, an abstraction made real by tragic stories of shattered families. Most families are approaching the first anniversary of their children being in virtual school, with growing concerns about the quality of virtual education, loss of socialization and group activities, and additional risks facing poor and vulnerable children. There are real concerns about the future impact of children spending so much time every day on their screens for school, extracurricular activities, social time, and relaxation. While the COVID-19 vaccines promise a return to “normal” sometime in 2021, in-person school may not return until late in the spring or next fall.

After the events of Jan. 6, families face an additional challenge: discussing the violent invasion of the U.S. Capitol by the president’s supporters. This event was shocking, frightening, and confusing for most, and continues to be heavily covered in the news and online. There is a light in all this darkness. We have the opportunity to talk with our children – and to share explanations, perspectives, values, and even the discomfort of the unknowns – about COVID-19, use of the Internet, and the violence of Jan. 6. We will consider how parents can approach this challenge for three age groups. With each group, parents will need to be calm and curious and will need time to give their children their full attention. We are all living through history. When parents can be fully present with their children, even for short periods at meals or at bedtime, it will help all to get their balance back and start to make sense of the extraordinary events we have been facing.

The youngest children (aged 3-6 years), those who were in preschool or kindergarten before the pandemic, need the most from their parents during this time. If they are attending school virtually, their online school days are likely short and challenging. Children at this age are mastering behavior rather than cognitive tasks. They are learning how to manage their bodies in space (stay in their seats!), how to be patient and kind (take turns!), and how to manage frustration (math is hard, try again!). Without the physical presence of their teacher and classmates, these lessons are tougher to internalize. Given their age-appropriate short attention spans, they often walk away from a screen, even if it’s class time. They are more likely to be paying attention to their parents, responding to the emotional climate at home. Even if they are not watching news websites themselves, they are likely to have overheard or noticed the news about recent events. Parents of young children should take care to turn off the television or their own computer, as repeated frightening videos of the insurrection can cause their children to worry that these events continue to unfold. These children need their parents’ undivided attention, even just for a little while. Play a board game with them (good chance to stay in their seats, take turns, and manage losing). Or get them outside for some physical play. While playing, parents can ask what they have seen, heard, or understand about what happened in the Capitol. Then they can correct misperceptions that might be frightening and offer reasonable reassurances in language these young children can understand. They might tell their children that sometimes people get angry when they have lost, and even adults can behave badly and make mistakes. They can focus on who the helpers are, and what the children themselves could do to help. They could write letters of appreciation to their elected officials or to the Capitol police who were so brave in protecting others. If their children are curious, parents can find books or videos that are age appropriate about the Constitution and how elections work in a democracy. Parents don’t need to be able to answer every question; watching “Schoolhouse Rock” videos on YouTube together is a wonderful way to complement their online school and support their healthy development.

School-aged children (7-12 years) are developmentally focused on mastery experiences, whether they are social, academic, or athletic. They may be better equipped to pay attention and do homework than their younger siblings, but they will miss building friendships and having a real audience for their efforts as they build emotional maturity. They are prone to worry and distress about the big events that they can understand, at least in concrete terms, but have never faced before. These children usually have been able to use social media and online games to stay connected to friends, but they are less likely than their older siblings to independently exercise or explore new interests without a parent or teacher to guide and support them. These children are likely to be spending a lot of their time online on websites their parents don’t know about, and most likely to be curious about the events of Jan. 6. Parents should close their own device and invite their school-age children to show them what they are working on in school. Be curious about all of it, even how they are doing gym or music class. Then ask about what they have seen or heard about the election and its aftermath at school, from friends, or on their own. Let them be the teachers about what happened and how they learned about it. Parents can correct misinformation or offer reliable sources of information they can investigate together. What they will need is validation of the difficult feelings that events like these can cause; that is, acknowledgment, acceptance, and understanding of big feelings, without trying to just make those feelings go away. Parents might help them to be curious about what can make people get angry, break laws, and even hurt others, and how we protest injustices in a democracy. These children may be ready to take a deeper dive into history, via a good film or documentary, with their parents’ company for discussion afterward. Be their audience and model curiosity and patience, all the while validating the feelings that might arise.

Teenagers are developmentally focused on building their own identities, cultivating independence, and forming deeper relationships beyond their family. While they may be well equipped to manage online learning and to stay connected to their friends and teachers through electronic means, they are also facing considerable challenges, as their ability to explore new interests, build new relationships, and be meaningfully independent has been profoundly restrained over the past year. And they are facing other losses, as milestones like proms, performances, and competitions have been altered or missed. Parents still know when their teenager is most likely to talk, and they should check in with them during those times. They can ask which classes are working online and which ones aren’t, and what extracurriculars are still possible. They should not be discouraged if their teenager offers only cursory responses; it matters that they are showing up and showing interest. The election and its aftermath provide a meaningful matter to discuss; parents can find out if it is being discussed by any teachers or friends. What do they think triggered the events of Jan. 6? Who should be held responsible? How can people reasonably protest injustice? What does a society do when citizens can’t agree on facts? More than offering reassurance, parents should be curious about their adolescent’s developing identity and values, and about how they are thinking through complex issues around free speech and justice. It is a wonderful opportunity for parents to learn about their adolescent’s emerging identity, to be tolerant of their autonomy, and to offer their own perspective and values.

At every age, parents need to be present by listening and drawing their children out without distraction. Now is a time to build relationships and to use the difficult events of the day to shed light on deeper issues and values. This is hard, but far better than having children deal with these issues in darkness or alone.

Dr. Swick is physician in chief at Ohana, Center for Child and Adolescent Behavioral Health, Community Hospital of the Monterey (Calif.) Peninsula. Dr. Jellinek is professor emeritus of psychiatry and pediatrics at Harvard Medical School, Boston. Email them at [email protected].

The first weeks of 2021 have us considering how best to face compound challenges, and we expect parents will be looking to their pediatricians for guidance. There are daily stories of the COVID-19 death toll, an abstraction made real by tragic stories of shattered families. Most families are approaching the first anniversary of their children being in virtual school, with growing concerns about the quality of virtual education, loss of socialization and group activities, and additional risks facing poor and vulnerable children. There are real concerns about the future impact of children spending so much time every day on their screens for school, extracurricular activities, social time, and relaxation. While the COVID-19 vaccines promise a return to “normal” sometime in 2021, in-person school may not return until late in the spring or next fall.

After the events of Jan. 6, families face an additional challenge: discussing the violent invasion of the U.S. Capitol by the president’s supporters. This event was shocking, frightening, and confusing for most, and it continues to be heavily covered in the news and online. There is a light in all this darkness. We have the opportunity to talk with our children – and to share explanations, perspectives, values, and even the discomfort of the unknowns – about COVID-19, use of the Internet, and the violence of Jan. 6. We will consider how parents can approach this challenge for three age groups. With each group, parents will need to be calm and curious and will need time to give their children their full attention. We are all living through history. When parents can be fully present with their children, even for short periods at meals or at bedtime, it will help everyone regain their balance and start to make sense of the extraordinary events we have been facing.

The youngest children (aged 3-6 years), those who were in preschool or kindergarten before the pandemic, need the most from their parents during this time. If they are attending school virtually, their online school days are likely short and challenging. Children at this age are mastering behavior rather than cognitive tasks. They are learning how to manage their bodies in space (stay in their seats!), how to be patient and kind (take turns!), and how to manage frustration (math is hard, try again!). Without the physical presence of their teacher and classmates, these lessons are tougher to internalize. Given their age-appropriate short attention spans, they often walk away from a screen, even if it’s class time. They are more likely to be paying attention to their parents, responding to the emotional climate at home. Even if they are not watching news websites themselves, they are likely to have overheard or noticed the news about recent events. Parents of young children should take care to turn off the television or their own computer, as repeated frightening videos of the insurrection can cause their children to worry that these events continue to unfold. These children need their parents’ undivided attention, even just for a little while. Play a board game with them (good chance to stay in their seats, take turns, and manage losing). Or get them outside for some physical play. While playing, parents can ask what they have seen, heard, or understand about what happened in the Capitol. Then they can correct misperceptions that might be frightening and offer reasonable reassurances in language these young children can understand. They might tell their children that sometimes people get angry when they have lost, and even adults can behave badly and make mistakes. They can focus on who the helpers are, and what they could do to help also. 
