New NIH consortium aims to coordinate pediatric research programs across its institutes and centers

Almost all of the 27 institutes and centers of the NIH fund at least some kind of child health research, totaling more than $4 billion in the 2017 fiscal year, according to an NIH statement. “The new consortium aims to harmonize these activities, explore gaps and opportunities in the overall pediatric research portfolio, and set priorities.”

Research funded by NIH “has resulted in tremendous advances against diseases and conditions that affect child health and well-being, including asthma, cancer, autism, obesity, and intellectual and developmental disabilities,” explained Diana W. Bianchi, MD, in the statement. Dr. Bianchi is director of the Eunice Kennedy Shriver National Institute of Child Health and Human Development, the lead NIH institute for the consortium.

The new consortium, which will be led by the NICHD director, will meet several times a year.

RBC transfusions with surgery may increase VTE risk

In patients undergoing surgery, RBC transfusions may be associated with the development of new or progressive venous thromboembolism within 30 days of the procedure, results of a recent registry study suggest.

Patients who received perioperative RBC transfusions had significantly higher odds of developing postoperative venous thromboembolism (VTE) overall, as well as higher odds specifically for deep venous thrombosis (DVT) and pulmonary embolism (PE), according to results published in JAMA Surgery.

“In a subset of patients receiving perioperative RBC transfusions, a synergistic and incremental dose-related risk for VTE development may exist,” Dr. Goel and her coauthors wrote.

The analysis was based on prospectively collected North American registry data on 750,937 patients who underwent a surgical procedure in 2014, of whom 47,410 (6.3%) received one or more perioperative RBC transfusions. VTE occurred in 6,309 patients (0.8%), including 4,336 cases of DVT (0.6%) and 2,514 cases of PE (0.3%).
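As a quick arithmetic check, the reported percentages can be reproduced from the reported counts. The sketch below uses only the figures quoted above; the variable and function names are illustrative and do not come from the study.

```python
# Counts as reported in the article for the 2014 registry cohort.
TOTAL_PATIENTS = 750_937
COUNTS = {
    "transfused": 47_410,
    "vte": 6_309,
    "dvt": 4_336,
    "pe": 2_514,
}


def percent_of_cohort(count: int, total: int = TOTAL_PATIENTS) -> float:
    """Return count as a percentage of the cohort, rounded to one decimal."""
    return round(100 * count / total, 1)


percentages = {name: percent_of_cohort(n) for name, n in COUNTS.items()}

# DVT and PE counts together exceed the VTE total; the excess is the
# number of patients who would have to be counted under both headings.
overlap = COUNTS["dvt"] + COUNTS["pe"] - COUNTS["vte"]

for name, pct in percentages.items():
    print(f"{name}: {pct}%")
print(f"counted as both DVT and PE: {overlap}")
```

The DVT and PE counts sum to more than the VTE total, which is consistent with some patients having had both events.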

The patients who received perioperative RBC transfusions had significantly increased odds of developing VTE in the 30-day postoperative period (adjusted odds ratio, 2.1; 95% confidence interval, 2.0-2.3) versus those who had no transfusions, according to results of a multivariable analysis adjusting for age, sex, length of hospital stay, use of mechanical ventilation, and other potentially confounding factors.
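An odds ratio is not the same as a risk ratio, although the two are close when the outcome is rare. The sketch below converts an odds ratio into an implied absolute risk for a given baseline risk. The baseline of 0.8% is an assumption made for illustration (it is the overall cohort VTE rate, not the rate in untransfused patients, which the article does not report), and applying an adjusted odds ratio to a crude baseline is a simplification.

```python
def risk_from_odds_ratio(baseline_risk: float, odds_ratio: float) -> float:
    """Convert a baseline risk and an odds ratio into the implied risk."""
    baseline_odds = baseline_risk / (1 - baseline_risk)
    exposed_odds = baseline_odds * odds_ratio
    return exposed_odds / (1 + exposed_odds)


# Assumption for illustration only: overall cohort VTE rate of 0.8%.
ASSUMED_BASELINE_RISK = 0.008

# Adjusted odds ratio and 95% CI bounds as reported in the article.
REPORTED_AOR = {"lower": 2.0, "estimate": 2.1, "upper": 2.3}

implied = {
    label: risk_from_odds_ratio(ASSUMED_BASELINE_RISK, aor)
    for label, aor in REPORTED_AOR.items()
}

for label, risk in implied.items():
    ratio = risk / ASSUMED_BASELINE_RISK
    print(f"{label}: implied risk {risk:.2%}, risk ratio {ratio:.2f}")
```

Under that assumed baseline, an odds ratio of 2.1 corresponds to an absolute risk of roughly 1.7%, so the implied risk ratio stays close to the odds ratio because VTE is uncommon in this cohort.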

Similarly, the researchers found that transfused patients had higher odds of both DVT (aOR, 2.2; 95% CI, 2.1-2.4) and PE (aOR, 1.9; 95% CI, 1.7-2.1).

Odds of VTE rose significantly with the number of perioperative RBC transfusions, from an aOR of 2.1 for patients with one transfusion to 4.5 for those with three or more transfusion events (P < .001 for trend), a dose-response analysis showed.

The association between RBC transfusions perioperatively and VTE postoperatively remained robust after propensity score matching and was statistically significant in all surgical subspecialties, the researchers reported.

However, they also noted that these results will require validation in prospective cohort studies and randomized clinical trials. “If proven, they underscore the continued need for more stringent and optimal perioperative blood management practices in addition to rigorous VTE prophylaxis in patients undergoing surgery.”

The study was funded in part by grants from the National Institutes of Health and Cornell University. The researchers reported having no conflicts of interest.

SOURCE: Goel R et al. JAMA Surg. 2018 Jun 13. doi: 10.1001/jamasurg.2018.1565.

FROM JAMA SURGERY

Key clinical point:

Major finding: Patients who received perioperative RBC transfusions had significantly increased odds of developing VTE in the 30-day postoperative period (adjusted odds ratio, 2.1; 95% confidence interval, 2.0-2.3), compared with patients who did not receive transfusions.

Study details: An analysis of prospectively collected North American registry data including 750,937 patients who underwent a surgical procedure in 2014.

Disclosures: The study was funded in part by grants from the National Institutes of Health and Cornell University, New York. The researchers reported having no conflicts of interest.

Source: Goel R et al. JAMA Surg. 2018 Jun 13. doi: 10.1001/jamasurg.2018.1565.

Risk of Recurrent ICH Is Higher Among Blacks and Hispanics

The increased severity of hypertension among minorities does not fully account for their increased risk.

Compared with their white peers, black and Hispanic patients with intracerebral hemorrhage (ICH) have a higher risk of recurrence, according to data published online ahead of print June 6 in Neurology. Although black and Hispanic patients have more severe hypertension than whites do, severity of hypertension does not fully account for this increased risk. Future studies should examine whether novel biologic, socioeconomic, or cultural factors play a role, said the researchers.

The scientific literature indicates that blacks and Hispanics have a higher risk of first ICH than whites do. Alessandro Biffi, MD, head of the Aging and Brain Health Research group at Massachusetts General Hospital in Boston, and colleagues hypothesized that hypertension among these populations might contribute toward this increased risk. Because the subject had not been explored previously, Dr. Biffi and colleagues investigated the role of blood pressure and its variability in determining the risk of recurrent ICH among nonwhites.

An Analysis of Two Studies

The authors examined data from a longitudinal study of ICH conducted by Massachusetts General Hospital and from the Ethnic/Racial Variations of Intracerebral Hemorrhage (ERICH) study. They included patients who were 18 or older with a diagnosis of acute primary ICH in their analysis.

At enrollment, participants reported their race or ethnicity during a structured interview and underwent blood pressure measurement. The investigators performed follow-up through phone calls and reviews of medical records. Every six months, investigators recorded at least one blood-pressure measurement and quantified blood-pressure variability. Dr. Biffi and colleagues used Cox regression survival analysis to identify risk factors for ICH recurrence.

Systolic Blood Pressure Was Associated With Recurrence

Of the 2,291 patients included in the analysis, 1,121 were white, 529 were black, 605 were Hispanic, and 36 were of other race or ethnicity. The median systolic blood pressure during follow-up was 149 mm Hg for black participants, 146 mm Hg for Hispanic participants, and 141 mm Hg for white participants. Systolic blood pressure variability also was higher for black participants (median, 3.5%), compared with white participants (median

In all, 26 (1.7%) white participants had ICH recurrence, compared with 35 (6.6%) black participants and 37 (6.1%) Hispanic participants. In univariable analyses, higher systolic blood pressure and greater systolic blood pressure variability were associated with increased ICH recurrence risk. Diastolic blood pressure and diastolic blood pressure variability, however, were not associated with ICH recurrence risk.

In multivariable analyses, black and Hispanic race or ethnicity remained independently associated with increased ICH recurrence risk in both studies, even after adjustment for systolic blood pressure and systolic blood pressure variability. Exposure to antihypertensive agents during follow-up was not associated with ICH recurrence and did not affect the results significantly. The associations were consistent in both studies.

Suggested Reading

Rodriguez-Torres A, Murphy M, Kourkoulis C, et al. Hypertension and intracerebral hemorrhage recurrence among white, black, and Hispanic individuals. Neurology. 2018 Jun 6 [Epub ahead of print].

Giving hospitalists a larger clinical footprint

Sneak Peek: The Hospital Leader blog

“We are playing the same sport, but a different game,” the wise, thoughtful emergency medicine attending physician once told me. “I am playing speed chess – I need to make a move quickly, or I lose – no matter what. My moves have to be right, but they don’t always necessarily need to be the optimal one. I am not always thinking five moves ahead. You guys [in internal medicine] are playing master chess. You have more time, but that means you are trying to always think about the whole game and make the best move possible.”

The pendulum has swung quickly from, “problem #7, chronic anemia: stable but I am not sure it has been worked up before, so I ordered a smear, retic count, and iron panel,” to “problem #1, acute blood loss anemia: now stable after transfusion, seems safe for discharge and GI follow-up.” (NOTE: “Acute blood loss anemia” is a phrase I learned from our “clinical documentation integrity specialist” – I think it gets me “50 CDI points” or something.)

Our job is not merely to work shifts and stabilize patients – there already is a specialty for that, and it is not the one we chose.

Clearly the correct balance is somewhere between the two extremes of “working up everything” and “deferring (nearly) everything to the outpatient setting.”

There are many forces that are contributing to current hospitalist work styles. As the work continues to become more exhaustingly intense and the average number of patients seen by a hospitalist grows impossibly upward, the duration of on-service stints has shortened.

In most settings, long gone are the days of the month-long teaching attending rotation. By day 12, I feel worn and ragged. For “nonteaching” services, hospitalists seem to increasingly treat each day as a separate shift to be covered, oftentimes handing the service back-and-forth every few days, or a week at most. With this structure, who can possibly think about the “whole patient”? Whose patient is this anyways?

Read the full post at hospitalleader.org.

Also on The Hospital Leader …

- How Hospitalists See the Forgotten Victims of Gun Violence by Vineet Arora, MD, MAPP, MHM

- Hospitals, Hospice and SNFs: The Big Deceit by Brad Flansbaum, DO, MPH, MHM

- But He’s a Good Doctor by Leslie Flores, MHA, SFHM

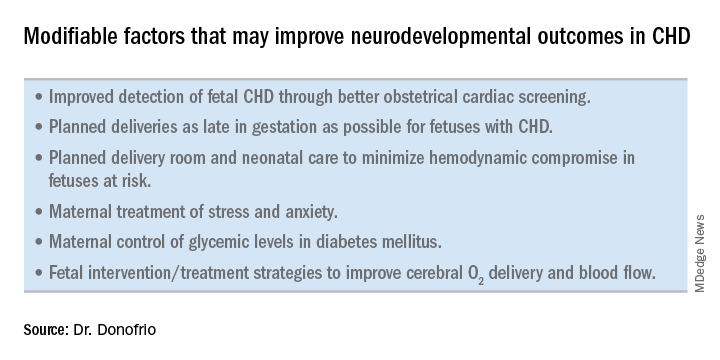

How congenital heart disease affects brain development

Congenital heart disease (CHD) is the most common congenital anomaly, with an estimated incidence of 6-12 per 1,000 live births. It is also the congenital anomaly that most often leads to death or significant morbidity. Advances in surgical procedures and operating room care as well as specialized care in the ICU have led to significant improvements in survival over the past 10-20 years – even for the most complex cases of CHD. We now expect the majority of newborns with CHD not only to survive, but to grow up into adulthood.

The focus of clinical research has thus transitioned from survival to issues of long-term morbidity and outcomes, and the more recent literature has clearly shown us that children with CHD are at high risk of learning disabilities and other neurodevelopmental abnormalities. The prevalence of impairment rises with the complexity of CHD, from a prevalence of approximately 20% in mild CHD to as much as 75% in severe CHD. Almost all neonates and infants who undergo palliative surgical procedures have neurodevelopmental impairments.

The neurobehavioral “signature” of CHD includes cognitive defects (usually mild), short attention span, fine and gross motor delays, speech and language delays, and deficits in visual motor integration and executive function. Executive function deficits and attention deficits are among the problems that often do not present until children reach middle school and beyond, when they are expected to learn more complicated material and handle more complex tasks. Long-term surveillance and care have thus become a major focus at our institution and others throughout the country.

At the same time, evidence has increased in the past 5-10 years that adverse neurodevelopmental outcomes in children with complex CHD may stem from genetic factors as well as compromise to the brain in utero because of altered blood flow, compromise at the time of delivery, and insults during and after corrective or palliative surgery. Surgical strategies and operating room teams have become significantly better at protecting the brain, and new research now is directed toward understanding the neurologic abnormalities that are present in newborns prior to surgical intervention.

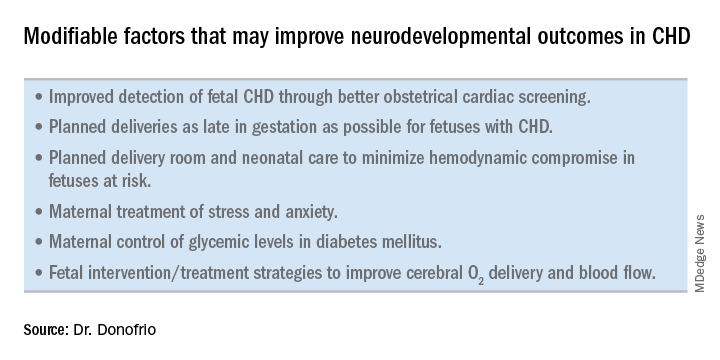

Researchers are increasingly focused on the in utero origins of brain impairments in children with CHD and on understanding specific prenatal causes, mechanisms, and potentially modifiable factors. We’re asking what we can do during pregnancy to improve neurodevelopmental outcomes.

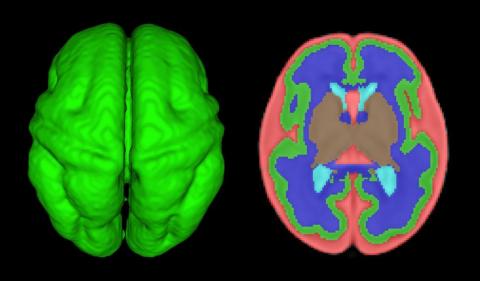

Impaired brain growth

The question of how CHD affects blood flow to the fetal brain is an important one. We found some time ago in a study using Doppler ultrasound that 44% of fetuses with CHD had blood flow abnormalities in the middle cerebral artery at some point in the late second or third trimester, suggesting that the blood vessels had dilated to allow more cerebral perfusion. This phenomenon, termed “brain sparing,” is believed to be an autoregulatory mechanism that occurs as a result of diminished oxygen delivery or inadequate blood flow to the brain (Pediatr Cardiol. 2003 Jan;24[5]:436-43).

Subsequent studies have similarly documented abnormal cerebral blood flow in fetuses with various types of congenital heart lesions. What is left to be determined is whether this autoregulatory mechanism is adequate to maintain perfusion in the presence of specific, high-risk CHD.

Abnormalities were more often seen in CHD with obstructed aortic flow, such as hypoplastic left heart syndrome (HLHS) in which the aorta is perfused retrograde through the fetal ductus arteriosus (Circulation. 2010 Jan 4;121:26-33).

Other fetal imaging studies have similarly demonstrated a progressive third-trimester decrease in both cortical gray and white matter and in gyrification (cortical folding) (Cereb Cortex. 2013;23:2932-43), as well as decreased cerebral oxygen delivery and consumption (Circulation. 2015;131:1313-23) in fetuses with severe CHD. It appears that the brain may start out normal in size, but in the third trimester, the accelerated metabolic demands that come with rapid growth and development are not sufficiently met by the fetal cardiovascular circulation in CHD.

In the newborn with CHD, preoperative brain imaging studies have demonstrated structural abnormalities suggesting delayed development (for example, microcephaly and a widened operculum), microstructural abnormalities suggesting abnormal myelination and neuroaxonal development, and lower brain maturity scores (a composite score that combines multiple factors, such as myelination and cortical in-folding, to represent “brain age”).

Moreover, some of the newborn brain imaging studies have correlated brain MRI findings with neonatal neurodevelopmental assessments. For instance, investigators found that full-term newborns with CHD had decreased gray matter brain volume and increased cerebrospinal fluid volume and that these impairments were associated with poor behavioral state regulation and poor visual orienting (J Pediatr. 2014;164:1121-7).

Interestingly, it has been found that the full-term baby with specific complex CHD, including newborns with single ventricle CHD or transposition of the great arteries, is more likely to have a brain maturity score that is equivalent to that of a baby born at 35 weeks’ gestation. This means that, in some infants with CHD, the brain has lagged in growth by about a month, resulting in a pattern of disturbed development and subsequent injury that is similar to that of premature infants.

It also means that infants with CHD and an immature brain are especially vulnerable to brain injury when open-heart surgery is needed. In short, we now appreciate that the brain in patients with CHD is likely more fragile than we previously thought – and that this fragility is prenatal in its origins.

Delivery room planning

Ideally, our goal is to find ways of changing the circulation in utero to improve cerebral oxygenation and blood flow, and, consequently, improve brain development and long-term neurocognitive function. Despite significant efforts in this area, we’re not there yet.

Examples of strategies that are being tested include catheter intervention to open the aortic valve in utero for fetuses with critical aortic stenosis. This procedure currently is being performed to try to prevent progression of the valve abnormality to HLHS, but it has not been determined whether the intervention affects cerebral blood flow. Maternal oxygen therapy has been shown to change cerebral blood flow in the short term for fetuses with HLHS, but its long-term use has not been studied.

At the time of birth, to prevent injury in the potentially more fragile brain of the newborn with CHD, what we can do is identify those fetuses who are more likely to be at risk for hypoxia, low cardiac output, and hemodynamic compromise in the delivery room, and plan for specialized delivery room and perinatal management beyond standard neonatal care.

Most newborns with CHD are assigned to Level 1; they have no predicted risk of compromise in the delivery room – or even in the first couple of weeks of life – and can be delivered at a local hospital with neonatal evaluation, followed by consultation with a pediatric cardiologist. Defects include shunt lesions such as septal defects or mild valve abnormalities.

Patients assigned to Level 2 have minimal risk of compromise in the delivery room but are expected to require postnatal surgery, cardiac catheterization, or another procedure before going home. They can be stabilized by the neonatologist, usually with initiation of a prostaglandin infusion, before transfer to the cardiac center for the planned intervention. Defects include single ventricle CHD and severe tetralogy of Fallot.

Fetuses assigned to Level 3 and Level 4 are expected to have hemodynamic instability at cord clamping, requiring immediate specialty care in the delivery room that is likely to include urgent cardiac catheterization or surgical intervention. These defects are rare and include diagnoses such as transposition of the great arteries, HLHS with a restrictive or closed foramen ovale, and CHD with associated heart failure and hydrops.
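For readers who track delivery plans in a registry or spreadsheet, the level-of-care assignments described above can be written down as a simple lookup table. The following Python sketch is illustrative only: the names `CareLevel`, `DeliveryPlan`, `PLANS`, and `plan_for` are hypothetical, the entries merely restate the descriptions in this article, and Levels 3 and 4 are grouped because the text describes them together. It is not a clinical decision tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class CareLevel(Enum):
    """Hypothetical labels for the levels described in the text."""
    LEVEL_1 = "Level 1"
    LEVEL_2 = "Level 2"
    LEVEL_3_4 = "Level 3/4"  # grouped here because the article describes them together


@dataclass(frozen=True)
class DeliveryPlan:
    delivery_room_risk: str
    expected_care: str
    example_defects: Tuple[str, ...]


# Each entry paraphrases the article; nothing here comes from another source.
PLANS = {
    CareLevel.LEVEL_1: DeliveryPlan(
        delivery_room_risk="none predicted",
        expected_care=("delivery at a local hospital with neonatal evaluation, "
                       "then pediatric cardiology consultation"),
        example_defects=("septal defects", "mild valve abnormalities"),
    ),
    CareLevel.LEVEL_2: DeliveryPlan(
        delivery_room_risk="minimal",
        expected_care=("stabilization by the neonatologist, usually with a "
                       "prostaglandin infusion, then transfer to the cardiac "
                       "center for the planned intervention"),
        example_defects=("single ventricle CHD", "severe tetralogy of Fallot"),
    ),
    CareLevel.LEVEL_3_4: DeliveryPlan(
        delivery_room_risk="hemodynamic instability expected at cord clamping",
        expected_care=("immediate specialty care in the delivery room, likely "
                       "including urgent cardiac catheterization or surgery"),
        example_defects=(
            "transposition of the great arteries",
            "HLHS with a restrictive or closed foramen ovale",
            "CHD with associated heart failure and hydrops",
        ),
    ),
}


def plan_for(level: CareLevel) -> DeliveryPlan:
    """Return the summary plan recorded for a given level."""
    return PLANS[level]
```

A call such as `plan_for(CareLevel.LEVEL_2).expected_care` returns the stabilization-and-transfer summary. Assigning a fetus to a level remains a judgment made from the fetal echocardiogram, which this table does not attempt to model.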

We have found that fetal echocardiography accurately predicts postnatal risk and the need for specialized delivery room care in newborns diagnosed in utero with CHD and that level-of-care protocols ensure safe delivery and optimize fetal outcomes (J Am Soc Echocardiogr. 2015;28:1339-49; Am J Cardiol. 2013;111:737-47).

Such delivery planning, which is coordinated between obstetric, neonatal, cardiology, and surgical services with specialty teams as needed (for example, cardiac intensive care, interventional cardiology, and cardiac surgery), is recommended in a 2014 AHA statement on the diagnosis and treatment of fetal cardiac disease. In recent years it has become the standard of care in many health systems (Circulation. 2014;129[21]:2183-242).

The effect of maternal stress on the in utero environment is also getting increased attention in pediatric cardiology. Alterations in neurocognitive development and fetal and child cardiovascular health are likely to be associated with maternal stress during pregnancy, and studies have shown that maternal stress is high with prenatal diagnoses of CHD. We have to ask: Is stress a modifiable risk factor? There must be ways in which we can do better with prenatal counseling and support after a fetal diagnosis of CHD.

Screening for CHD

Initiating strategies to improve neurodevelopmental outcomes in infants with CHD rests partly on identifying babies with CHD before birth through improved fetal cardiac screening. Research cited in the 2014 AHA statement indicates that nearly all women giving birth to babies with CHD in the United States have obstetric ultrasound examinations in the second or third trimesters, but that only about 30% of the fetuses are diagnosed prenatally.

Current indications for referral for a fetal echocardiogram – in addition to suspicion of a structural heart abnormality on obstetric ultrasound – include maternal factors, such as diabetes mellitus, that raise the risk of CHD above the baseline population risk for low-risk pregnancies.

Women with pregestational diabetes mellitus have a nearly fivefold increase in the risk of fetal CHD (to 3%-5%), compared with the general population, and should be referred for fetal echocardiography. Women with gestational diabetes mellitus have no or minimally increased risk for fetal CHD, but there is an increased risk for cardiac hypertrophy – particularly late in gestation – if glycemic levels are poorly controlled. The 2014 AHA guidelines recommend that fetal echocardiographic evaluation be considered in those who have HbA1c levels greater than 6% in the second half of pregnancy.
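The diabetes-related referral logic in the preceding paragraph can be summarized in a few lines of Python. This is a minimal sketch that encodes only the two statements made above; the function name, parameter names, and return strings are hypothetical, and the sketch ignores every other indication for fetal echocardiography. It is meant as a reading aid, not as clinical guidance.

```python
from typing import Optional


def fetal_echo_recommendation(
    pregestational_dm: bool,
    gestational_dm: bool,
    hba1c_percent_second_half: Optional[float] = None,
) -> str:
    """Summarize the diabetes-related statements from the text.

    Returns one of three hypothetical labels:
    "refer", "consider", or "no diabetes-related indication".
    """
    # Pregestational diabetes: the text says these women should be referred.
    if pregestational_dm:
        return "refer"
    # Gestational diabetes: evaluation is considered only when HbA1c in the
    # second half of pregnancy is greater than 6% (strictly greater).
    if (
        gestational_dm
        and hba1c_percent_second_half is not None
        and hba1c_percent_second_half > 6.0
    ):
        return "consider"
    return "no diabetes-related indication"
```

For example, `fetal_echo_recommendation(False, True, 6.4)` yields `"consider"`, whereas a value of exactly 6.0 does not meet the "greater than 6%" wording and yields `"no diabetes-related indication"`.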

Dr. Mary T. Donofrio is a pediatric cardiologist and director of the fetal heart program and critical care delivery program at Children’s National Medical Center, Washington. She reported that she has no disclosures relevant to this article.

How better imaging technology for prenatal diagnoses can improve outcomes

We live during an unprecedented time in the history of ob.gyn. practice. Only a relatively short time ago, the only way ob.gyns. could assess the health of the fetus was through the invasive and risky procedures of the amniocentesis and, later, chorionic villus sampling. A woman who might eventually have had a baby with a congenital abnormality would not have known of her fetus’s defect until after birth, when successful intervention might have been extremely difficult to achieve or even too late. At the time, in utero evaluation could be done only by static, low-resolution sonographic images of the fetus. By today’s standards of imaging technology, these once-revolutionary pictures are almost tantamount to cave paintings.

Therefore, while it is imperative that we employ all available technologies and techniques possible to detect and diagnose potential fetal developmental defects, we must also bear in mind that no test is ever infallible. It is our obligation to provide the very best information based on expert and thorough review.

This month we have invited Mary Donofrio, MD, director of the fetal heart program at Children’s National Medical Center, Washington, to discuss how the latest advances in imaging technology have enabled us to screen for and diagnose congenital heart diseases, and improve outcomes for mother and baby.

Dr. Reece, who specializes in maternal-fetal medicine, is vice president for medical affairs at the University of Maryland, Baltimore, as well as the John Z. and Akiko K. Bowers Distinguished Professor and dean of the school of medicine. Dr. Reece said he had no relevant financial disclosures. He is the medical editor of this column.

Functional disability prevails despite rheumatoid arthritis treatment

AMSTERDAM – Functional disability remains a significant problem for people with rheumatoid arthritis, with the prevalence remaining at least 15% higher over time than in individuals without the disease.

“We found a higher prevalence of functional disability in patients with RA versus non-RA,” the presenting study investigator Elena Myasoedova, MD, PhD, said at the European Congress of Rheumatology.

Dr. Myasoedova, who is a clinical fellow in rheumatology at the Mayo Clinic in Rochester, Minn., added that the increase in prevalence over time was significantly higher in subjects with RA than in those without RA (P = .003), but that there was no difference in the pace of this increase with adjustment for the duration of RA disease (P = .51).

There was also no difference in functional disability between the two groups of patients by about the 8th or 9th decade.

RA remains one of the most common conditions associated with functional disability, Dr. Myasoedova said, with several risk factors for physical impairment identified, including female sex, older age, smoking, and the use of certain medications (glucocorticoids and antidepressants), as well as sociodemographic factors.

A discrepancy between improved RA disease control and persistent impairment in physical function has been noted in prior studies, but there are few data on how this might change over time. Dr. Myasoedova and her associates investigated this by analyzing data from the Rochester Epidemiology Project, which collects medical data on individuals living in Olmsted County, Minnesota. They identified two populations of adults aged 18 and older: one diagnosed with RA according to 1987 American College of Rheumatology criteria between 1999 and 2013, and one without RA but who were of a similar age and sex and enrolled in the project around the same time.

As part of the project, participants completed an annual questionnaire asking about their health and ability to perform six activities of daily living (ADLs). These include the ability to wash, dress, feed, and toilet oneself without assistance, as well as perform normal household chores and walk unaided. Over the course of the study, participants completed 7,466 questionnaires, and functional disability was defined as having difficulty with at least one of these six ADLs, Dr. Myasoedova explained.

At baseline, subjects with and without RA were aged a mean of 55 and 56 years, respectively, and 70% in both groups were female. Similar percentages were current (about 15%), former (about 30%), or never smokers (about 55%), and about 40% were obese.

Just under two-thirds (64.4%) of patients in the RA cohort were positive for rheumatoid factor (RF) or anti–cyclic citrullinated peptide (CCP) antibodies. While there was a similar prevalence of functional disability in RA patients who were or were not RF or CCP positive (both 25%, P = .67), there was an increasing prevalence noted in those who were positive versus those who were negative over time (P = .027).

Although the investigators did not conduct an objective assessment for functional disability, these findings highlight the need for vigilant management of patients with RA, Dr. Myasoedova proposed.

“Early and aggressive treatment regimens aimed at tight inflammation control can help prevent the disabling effects of high disease activity and joint damage, thereby lowering functional disability,” she said in an interview ahead of the congress.

Future work, she observed, should look at how the pattern of functional disability changes and should use transition modeling to understand the bidirectional pattern of potential change and accumulation of functional disability in RA. The investigators also plan to look at risk factors for persistent and worsening functional disability and how treatment – including “treat to target” and biologics – might affect this.

The National Institute of Arthritis and Musculoskeletal and Skin Diseases supported the study. Dr. Myasoedova had no conflicts of interest.

SOURCE: Myasoedova E et al. Ann Rheum Dis. 2018;77(Suppl 2):54. Abstract OP0009.
