Trauma surgeons can safely manage many TBI patients

SAN DIEGO – Many patients with traumatic brain injury (TBI) can be safely managed by trauma surgeons or intensive care physicians if a guideline-based individualized protocol is followed. In a recent single-center study using such a protocol, charges fell, repeat imaging decreased, and patient outcomes did not suffer when neurosurgery consults were reserved for patients with more severe brain injuries.

Every year, emergency departments see 2.5 million visits for traumatic brain injuries ranging from concussions to devastating open injuries, and 11% of those seen are hospitalized. Still, only 10% of patients with TBI will require neurosurgical intervention, Dr. Bellal Joseph of the University of Arizona said at the annual meeting of the American Surgical Association.

Finding a way to conserve resources is important, said Dr. Joseph, since the total number of emergency department visits for TBI is increasing, but resources remain constrained: neurosurgeons are in shorter supply than ever. Further, TBI management may not be changed by numerous repeat head CTs, which are costly and can expose patients to significant amounts of radiation.

Dr. Joseph and his coinvestigators at the University of Arizona had previously developed Brain Injury Guidelines (BIG), which would mandate repeat head CTs and neurosurgery consults only for larger intracranial bleeds and displaced skull fractures. The guidelines are used as part of an individualized protocol that includes overall clinical assessment and patient-specific factors, such as anticoagulation status and whether the patient was intoxicated at the time of injury.

After a period of education regarding the guidelines, the University of Arizona’s Level I trauma center – the only one in the state – implemented BIG in 2012. Over the 5-year period from 2009 to 2014, which encompassed implementation of the guidelines, investigators followed all patients admitted for TBI and tracked use of hospital resources and patient outcomes.

A total of 2,184 patients with TBI were included in the study, divided into five cohorts by year of admission, and stratified by severity of brain injury. Patients were included if they were admitted for TBI from the emergency department and the initial head CT found a skull fracture or intracranial hemorrhage. Dr. Joseph and his colleagues collected data regarding the number of neurosurgery consults, repeat head CTs, and patient demographic and injury characteristics. They tracked patient outcomes including in-hospital mortality, any progression on repeat head CT, and patient disposition on discharge.

TBIs were classified by Glasgow Coma Scale score (13-15 for mild TBI, 9-12 for moderate, and 8 or lower for severe).
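As a rough illustration of the severity bands above, the sketch below maps a Glasgow Coma Scale total to a label. The function name `classify_tbi` is hypothetical, and the code assumes the conventional reading in which a score of 8 counts as severe; it is not the investigators' own tooling.

```python
def classify_tbi(gcs: int) -> str:
    """Map a Glasgow Coma Scale total (3-15) to a TBI severity band."""
    if not 3 <= gcs <= 15:
        raise ValueError("GCS total must be between 3 and 15")
    if gcs >= 13:
        return "mild"
    if gcs >= 9:
        return "moderate"
    # Assumption: a total of 8 or lower is treated as severe.
    return "severe"


for score in (15, 10, 8):
    print(score, classify_tbi(score))
```

In the study as described, only the mild and moderate bands saw fewer repeat CTs and neurosurgery consults after the guidelines were introduced.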

Over time, the proportion of patients with severe brain injury who received repeat head CTs did not change significantly. However, scans for those with less severe injury declined significantly, with a marked drop in repeat head CTs seen at the time of implementation of the BIG guidelines (P < .001 for mild and P = .012 for moderate brain injuries).

Similarly, 100% of patients with severe TBI received a neurosurgical consult in each year of the study period, but the number of consults declined significantly for those with mild and moderate injuries (P < .001 for both).

Hospital length of stay decreased from a mean 6.2 days to 4.7 at the end of the study period (P = .028), and total hospital costs fell by nearly half, from a total $8.1 million for the 2009-2010 cohort to $4.3 million for the 2013-2014 cohort (P < .001).
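To make "nearly half" concrete, the relative reductions can be worked out directly from the reported figures. This is a minimal sketch; the helper name `percent_reduction` is hypothetical, and the inputs are only the rounded values quoted in the text.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative drop from `before` to `after`, expressed in percent."""
    return (before - after) / before * 100


# Total hospital costs, in millions of dollars (2009-2010 vs 2013-2014 cohorts).
cost_drop = percent_reduction(8.1, 4.3)
# Mean hospital length of stay, in days.
los_drop = percent_reduction(6.2, 4.7)

print(f"Cost reduction: {cost_drop:.1f}%")            # about 46.9%
print(f"Length-of-stay reduction: {los_drop:.1f}%")   # about 24.2%
```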

Mortality, discharge score on the Glasgow Coma Scale, and the proportion of patients discharged to home after their hospital stay did not change significantly over the study period.

Study limitations included potential lack of generalizability to smaller or more rural centers, and the potential for confounding by changes in other institutional factors over the study period. The study did not track long-term neurologic or quality of life outcomes.

Discussant Dr. Karen Brasel of Oregon Health & Science University, Portland, said that the study is the latest in a series of reports in the TBI field that speak to the need to avoid “knee-jerk use of resources based on diagnosis alone.” She cautioned that it is still important to examine individual outcomes for the few patients who did not receive a neurosurgery consult but then deteriorated, to better evaluate who is at greatest risk for poor outcomes.

Still, said Dr. Joseph, a “guideline-based individualized protocol for traumatic brain injury can help reduce the burden on neurological services. Life changes, and so does medicine.”

The authors reported no conflicts of interest.

The complete manuscript of this study and its presentation at the American Surgical Association’s 135th Annual Meeting, April 2015, in San Diego, California, are anticipated to be published in the Annals of Surgery pending editorial review.

AT THE ASA ANNUAL MEETING

Key clinical point: Patients with less severe TBIs can be safely managed by intensivists or trauma surgeons.

Major finding: For TBI patients, hospital length of stay decreased from a mean 6.2 days to 4.7 (P = .028), and total hospital costs fell by nearly half, from $8.1 million for the 2009-2010 cohort to $4.3 million for the 2013-2014 cohort (P < .001).

Data source: Prospective single-center 5-year database of all TBI patients with positive imaging findings.

Disclosures: The authors reported no conflicts of interest.

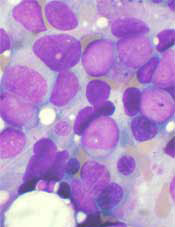

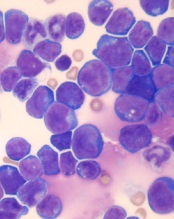

How a vaccine may reduce the risk of ALL

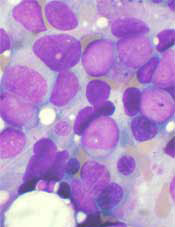

Photo by Petr Kratochvil

Researchers believe they have discovered how a commonly administered vaccine protects children from developing acute lymphoblastic leukemia (ALL).

The Haemophilus influenzae Type b (Hib) vaccine is part of the standard vaccination schedule recommended for children by the US Centers for Disease Control and Prevention. The vaccine is routinely given in 4 doses before children reach 15 months of age.

The Hib vaccine prevents ear infections and meningitis caused by the Hib bacterium, but it may also protect against ALL.

This protection has been suggested in previous studies, but it is not well known among the public at large, and the mechanism underlying the effect has been poorly understood.

Now, researchers have shown that recurrent Hib infections can shift certain genes into overdrive, converting pre-leukemic cells into full-blown cancer. The team described this work in Nature Immunology.

“These experiments help explain why the incidence of leukemia has been dramatically reduced since the advent of regular vaccinations during infancy,” said study author Markus Müschen, MD, PhD, of the University of California San Francisco.

“Hib and other childhood infections can cause recurrent and vehement immune responses, which we have found could lead to leukemia, but infants that have received vaccines are largely protected and acquire long-term immunity through very mild immune reactions.”

For this study, Dr Müschen and his colleagues tested the idea that chronic inflammation caused by recurrent infections might cause additional genetic lesions in blood cells already carrying an oncogene, promoting their transformation to overt disease.

The team conducted experiments in mice and discovered that the enzymes AID and RAG1-RAG2 drive this process. AID and RAG1-RAG2 introduce mutations in DNA that allow immune cells to adapt to infectious challenges, and these enzymes are necessary for a normal and efficient immune response.

The researchers found that, in the presence of chronic infection, AID and RAG1-RAG2 were hyperactivated, randomly cutting and mutating genes.

Additional experiments revealed that cooperation between AID and RAG1-RAG2 is critical for introducing the additional lesions that result in ALL.

Though the researchers focused on a bacterial infection in this study, they believe the same mechanisms may be at work in viral infections.

The team is currently conducting experiments to determine whether vaccines against viral infections, such as the measles-mumps-rubella vaccine, also provide protection against leukemia.

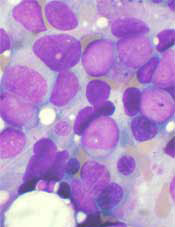

Photo by Petr Kratochvil

Researchers believe they have discovered how a commonly administered vaccine protects children from developing acute lymphoblastic leukemia (ALL).

The Haemophilus influenzae Type b (Hib) vaccine is part of the standard vaccination schedule recommended for children by the US Centers for Disease Control and Prevention. The vaccine is routinely given in 4 doses before children reach 15 months of age.

The Hib vaccine prevents ear infections and meningitis caused by the Hib bacterium, but it may also protect against ALL.

This protection has been suggested in previous studies, but it is not well-known among the public at large, and the mechanism underlying this effect has been poorly understood.

Now, researchers have shown that recurrent Hib infections can shift certain genes into overdrive, converting pre-leukemic cells into full-blown cancer. The team described this work in Nature Immunology.

“These experiments help explain why the incidence of leukemia has been dramatically reduced since the advent of regular vaccinations during infancy,” said study author Markus Müschen, MD, PhD, of the University of California San Francisco.

“Hib and other childhood infections can cause recurrent and vehement immune responses, which we have found could lead to leukemia, but infants that have received vaccines are largely protected and acquire long-term immunity through very mild immune reactions.”

For this study, Dr Müschen and his colleagues tested the idea that chronic inflammation caused by recurrent infections might cause additional genetic lesions in blood cells already carrying an oncogene, promoting their transformation to overt disease.

The team conducted experiments in mice and discovered that the enzymes AID and RAG1-RAG2 drive this process. AID and RAG1-RAG2 introduce mutations in DNA that allow immune cells to adapt to infectious challenges, and these enzymes are necessary for a normal and efficient immune response.

The researchers found that, in the presence of chronic infection, AID and RAG1-RAG2 were hyperactivated, randomly cutting and mutating genes.

Additional experiments revealed that AID and RAG1-RAG2 working together is critical to introduce the additional lesions that result in ALL.

Though the researchers focused on a bacterial infection in this study, they believe the same mechanisms may be at work in viral infections.

The team is currently conducting experiments to determine if protection against leukemia is provided by vaccines against viral infections, such as the measles-mumps-rubella vaccine. ![]()

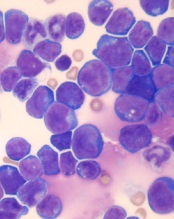

Photo by Petr Kratochvil

Researchers believe they have discovered how a commonly administered vaccine protects children from developing acute lymphoblastic leukemia (ALL).

The Haemophilus influenzae Type b (Hib) vaccine is part of the standard vaccination schedule recommended for children by the US Centers for Disease Control and Prevention. The vaccine is routinely given in 4 doses before children reach 15 months of age.

The Hib vaccine prevents ear infections and meningitis caused by the Hib bacterium, but it may also protect against ALL.

This protection has been suggested in previous studies, but it is not well-known among the public at large, and the mechanism underlying this effect has been poorly understood.

Now, researchers have shown that recurrent Hib infections can shift certain genes into overdrive, converting pre-leukemic cells into full-blown cancer. The team described this work in Nature Immunology.

“These experiments help explain why the incidence of leukemia has been dramatically reduced since the advent of regular vaccinations during infancy,” said study author Markus Müschen, MD, PhD, of the University of California San Francisco.

“Hib and other childhood infections can cause recurrent and vehement immune responses, which we have found could lead to leukemia, but infants that have received vaccines are largely protected and acquire long-term immunity through very mild immune reactions.”

For this study, Dr Müschen and his colleagues tested the idea that chronic inflammation caused by recurrent infections might cause additional genetic lesions in blood cells already carrying an oncogene, promoting their transformation to overt disease.

The team conducted experiments in mice and discovered that the enzymes AID and RAG1-RAG2 drive this process. AID and RAG1-RAG2 introduce mutations in DNA that allow immune cells to adapt to infectious challenges, and these enzymes are necessary for a normal and efficient immune response.

The researchers found that, in the presence of chronic infection, AID and RAG1-RAG2 were hyperactivated, randomly cutting and mutating genes.

Additional experiments revealed that AID and RAG1-RAG2 working together is critical to introduce the additional lesions that result in ALL.

Though the researchers focused on a bacterial infection in this study, they believe the same mechanisms may be at work in viral infections.

The team is currently conducting experiments to determine if protection against leukemia is provided by vaccines against viral infections, such as the measles-mumps-rubella vaccine. ![]()

Drug on fast track to treat β-thalassemia

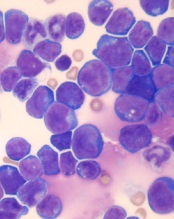

Image courtesy of NHLBI

The US Food and Drug Administration (FDA) has granted fast track designation to luspatercept for the treatment of patients with transfusion-dependent (TD) or non-transfusion-dependent (NTD) β-thalassemia.

Luspatercept is a modified activin receptor type IIB fusion protein that acts as a ligand trap for members of the transforming growth factor-β (TGF-β) superfamily involved in the late stages of erythropoiesis.

The drug regulates late-stage erythrocyte precursor differentiation and maturation. This mechanism of action is distinct from that of erythropoietin, which stimulates the proliferation of early stage erythrocyte precursor cells.

Luspatercept is currently in phase 2 trials in patients with β-thalassemia and individuals with myelodysplastic syndromes (MDS). Data from the trial in β-thalassemia were presented at the 2014 ASH Annual Meeting, and results from the MDS trial were recently presented at the 13th International Symposium on Myelodysplastic Syndromes.

Acceleron Pharma Inc. and Celgene Corporation are jointly developing luspatercept.

About fast track designation

The FDA’s fast track program is designed to facilitate the development of new drugs that are intended to treat serious or life-threatening conditions and that demonstrate the potential to address unmet medical needs.

The designation provides pharmaceutical companies with the opportunity for more frequent interaction with the FDA while developing a drug. It also allows a sponsor to submit sections of a biologics license application on a rolling basis, as they are finalized.

“The FDA’s fast track designation for the luspatercept development program recognizes the serious unmet medical needs of patients with β-thalassemia and the potential for luspatercept in this area,” said Jacqualyn A. Fouse, president of hematology/oncology for Celgene.

“Celgene and Acceleron are working diligently to initiate a phase 3 clinical program in 2015 to treat patients with β-thalassemia, and we look forward to continuing to work closely with health authorities and other important stakeholders to advance this program.”

Phase 2 trial in β-thalassemia

At ASH 2014, researchers presented results of a phase 2 trial in which they investigated whether luspatercept could increase hemoglobin levels and decrease transfusion dependence.

The goal was to increase hemoglobin levels 1.5 g/dL or more for at least 2 weeks in NTD patients and decrease the transfusion burden by 20% or more over 12 weeks in TD patients.
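The two response targets above can be written as simple threshold checks. The sketch below is illustrative only: the function names and parameters are hypothetical, and it assumes that the baseline and on-treatment transfusion counts cover comparable 12-week windows, which the text does not spell out.

```python
def ntd_responder(hb_increase_g_dl: float, weeks_sustained: float) -> bool:
    """NTD target: hemoglobin up by at least 1.5 g/dL for at least 2 weeks."""
    return hb_increase_g_dl >= 1.5 and weeks_sustained >= 2


def td_responder(baseline_units: float, on_treatment_units: float) -> bool:
    """TD target: transfusion burden down by at least 20% over 12 weeks."""
    if baseline_units <= 0:
        raise ValueError("baseline transfusion burden must be positive")
    reduction = (baseline_units - on_treatment_units) / baseline_units
    return reduction >= 0.20


print(ntd_responder(1.8, 3))   # meets both the size and duration thresholds
print(td_responder(10, 4))     # a 60% reduction, well above the 20% target
```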

Thirty patients, 7 TD and 23 NTD, received an injection of luspatercept every 3 weeks for 3 months at doses ranging from 0.2 mg/kg to 1.0 mg/kg.

Three-quarters of patients who received 0.8 mg/kg to 1.0 mg/kg of luspatercept experienced an increase in their hemoglobin levels or a reduction in their transfusion burden.

Of the NTD patients, 8 of 12 with iron overload at baseline experienced a reduction in liver iron concentration of 1 mg or more at 16 weeks.

TD patients had a more than 60% reduction in transfusion burden over 12 weeks. This included 2 patients with β0 β0 genotype, who experienced a 79% and 75% reduction.

Luspatercept did not cause any treatment-related serious or severe adverse events. The most common adverse events were bone pain (20%), headache (17%), myalgia (13%), and asthenia (10%).

There was 1 grade 3 dose-limiting toxicity of worsening lumbar spine bone pain. Three patients discontinued treatment early, 1 each because of occipital headache, ankle pain, and back pain.

Image courtesy of NHLBI

The US Food and Drug Administration (FDA) has granted fast track designation to luspatercept for the treatment of patients with transfusion-dependent (TD) or non-transfusion-dependent (NTD) β-thalassemia.

Luspatercept is a modified activin receptor type IIB fusion protein that acts as a ligand trap for members of the transforming growth factor-β (TGF-β) superfamily involved in the late stages of erythropoiesis.

The drug regulates late-stage erythrocyte precursor differentiation and maturation. This mechanism of action is distinct from that of erythropoietin, which stimulates the proliferation of early stage erythrocyte precursor cells.

Luspatercept is currently in phase 2 trials in patients with β-thalassemia and individuals with myelodysplastic syndromes (MDS). Data from the trial in β-thalassemia were presented at the 2014 ASH Annual Meeting, and results from the MDS trial were recently presented at the 13th International Symposium on Myelodysplastic Syndromes.

Acceleron Pharma Inc. and Celgene Corporation are jointly developing luspatercept.

About fast track designation

The FDA’s fast track program is designed to facilitate the development of new drugs that are intended to treat serious or life-threatening conditions and that demonstrate the potential to address unmet medical needs.

The designation provides pharmaceutical companies with the opportunity for more frequent interaction with the FDA while developing a drug. It also allows a sponsor to submit sections of a biologics license application on a rolling basis, as they are finalized.

“The FDA’s fast track designation for the luspatercept development program recognizes the serious unmet medical needs of patients with β-thalassemia and the potential for luspatercept in this area,” said Jacqualyn A. Fouse, president of hematology/oncology for Celgene.

“Celgene and Acceleron are working diligently to initiate a phase 3 clinical program in 2015 to treat patients with β-thalassemia, and we look forward to continuing to work closely with health authorities and other important stakeholders to advance this program.”

Phase 2 trial in β-thalassemia

At ASH 2014, researchers presented results of a phase 2 trial in which they investigated whether luspatercept could increase hemoglobin levels and decrease transfusion dependence.

The goal was to increase hemoglobin levels 1.5 g/dL or more for at least 2 weeks in NTD patients and decrease the transfusion burden by 20% or more over 12 weeks in TD patients.

Thirty patients, 7 TD and 23 NTD, received an injection of luspatercept every 3 weeks for 3 months at doses ranging from 0.2 mg/kg to 1.0 mg/kg.

Three-quarters of patients who received 0.8 mg/kg to 1.0 mg/kg of luspatercept experienced an increase in their hemoglobin levels or a reduction in their transfusion burden.

Of the NTD patients, 8 of 12 with iron overload at baseline experienced a reduction in liver iron concentration of 1 mg or more at 16 weeks.

TD patients had a more than 60% reduction in transfusion burden over 12 weeks. This included 2 patients with β0 β0 genotype, who experienced a 79% and 75% reduction.

Luspatercept did not cause any treatment-related serious or severe adverse events. The most common adverse events were bone pain (20%), headache (17%), myalgia (13%), and asthenia (10%).

There was 1 grade 3 dose-limiting toxicity of worsening lumbar spine bone pain. And 3 patients discontinued treatment early, 1 each with occipital headache, ankle pain, and back pain. ![]()

LMWH doesn’t pose increased bleeding risk

Cancer patients with brain metastases who develop venous thromboembolism can safely receive the anticoagulant enoxaparin without further increasing their risk of intracranial hemorrhage, according to a study published in Blood.

Cancer patients with brain metastases are known to have an increased risk of intracranial hemorrhage, but it has not been clear whether receiving anticoagulant therapy further increases that risk.

So a group of researchers set out to assess the risk of intracranial hemorrhage in cancer patients with brain metastases who received the low-molecular-weight heparin (LMWH) enoxaparin.

Jeffrey Zwicker, MD, of Harvard Medical School in Boston, Massachusetts, and his colleagues studied the medical records of 293 cancer patients with brain metastases, 104 of whom had received enoxaparin and 189 of whom had not.

The researchers matched the patients in each group by the year of brain metastases diagnosis, tumor type, age, and gender. The team conducted a blinded review of radiographic imaging and categorized intracranial hemorrhages as “trace,” “measurable,” and “significant.”

At 1 year of follow-up, there was no significant difference between the treatment groups regarding the incidence of intracranial hemorrhage.

Nineteen percent of patients in the enoxaparin arm had measurable intracranial hemorrhages, compared to 21% of patients in the control arm (P=0.97). And 21% of patients in the enoxaparin arm had significant intracranial hemorrhages, compared to 22% of patients in the control arm (P=0.87).

The cumulative incidence of intracranial hemorrhage was 44% in the enoxaparin arm and 37% in the control arm (P=0.13).

In addition, there was no significant difference in overall survival between the treatment arms. The median overall survival was 8.4 months in the enoxaparin arm and 9.7 months in the control arm (P=0.65).

“While it is a very common clinical scenario to treat a patient with a metastatic brain tumor who also develops a blood clot, before this study, there was very little data to inform the difficult decision of whether or not to anticoagulate these patients,” Dr Zwicker said.

“Our findings, which demonstrate that current practice is safe, should reassure physicians that anticoagulants can be safely administered to patients with brain metastases and a history of blood clots.”

FDA grants inhibitor fast track designation for AML

The US Food and Drug Administration (FDA) has granted fast track designation to AG-120 for the treatment of patients with acute myelogenous leukemia (AML) who harbor an isocitrate dehydrogenase-1 (IDH1) mutation.

AG-120 is an oral, selective inhibitor of the mutated IDH1 protein that is under investigation in two phase 1 clinical trials, one in hematologic malignancies and one in advanced solid tumors.

Data from the phase 1 trial in hematologic malignancies were previously presented at the 26th EORTC-NCI-AACR Symposium on Molecular Targets and Cancer Therapeutics in November 2014.

Updated data from this trial are scheduled to be presented at the 20th Annual Congress of the European Hematology Association (EHA) next month.

About fast track designation

The FDA’s fast track drug development program is designed to expedite clinical development and submission of new drug applications for medicines with the potential to treat serious or life-threatening conditions and address unmet medical needs.

Fast track designation facilitates frequent interactions with the FDA review team, including meetings to discuss all aspects of development to support a drug’s approval, and also provides the opportunity to submit sections of a new drug application on a rolling basis as data become available.

“We are pleased that now both AG-120 and AG-221 have been granted fast track designation, demonstrating the FDA’s commitment to facilitate the development and expedite the review of our lead IDH programs as important new therapies for people with AML who carry these mutations,” said Chris Bowden, MD, chief medical officer of Agios Pharmaceuticals, Inc., the company developing AG-120 in cooperation with Celgene Corporation.

Phase 1 results

At the EORTC-NCI-AACR symposium, researchers presented results from the ongoing phase 1 trial of AG-120 in hematologic malignancies. The data included 17 patients with relapsed and/or refractory AML who had received a median of 2 prior treatments.

The patients were scheduled to receive AG-120 in 1 of 4 dose groups: 100 mg twice a day, 300 mg once a day, 500 mg once a day, or 800 mg once a day, over continuous 28-day cycles.

Of the 14 patients evaluable for response, 7 responded. Four patients achieved a complete response, 2 had a complete response in the marrow, and 1 had a partial response.
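The response figures above can be tallied in a few lines. This is only an illustrative sketch: the variable names and the label "overall response rate" are assumptions for the example, not terms taken from the trial report.

```python
# Response counts as reported for the 14 evaluable patients.
responses = {
    "complete response": 4,
    "complete response in the marrow": 2,
    "partial response": 1,
}
evaluable_patients = 14

# Total responders should match the 7 responders stated in the text.
total_responders = sum(responses.values())

# "Overall response rate" is a label assumed here for responders / evaluable.
overall_response_rate = total_responders / evaluable_patients

print(f"{total_responders} of {evaluable_patients} responded "
      f"({overall_response_rate:.0%})")
```

With so few evaluable patients, a rate like this is best read as a rough early signal rather than a precise estimate.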

Responses occurred at all the dose levels tested. The maximum-tolerated dose was not reached. All responding patients were still on AG-120 at the time of presentation, and 1 patient with stable disease remained on the drug.

Researchers said AG-120 was generally well tolerated. The majority of adverse events were grade 1 or 2. The most common of these were nausea, fatigue, and dyspnea.

Eight patients experienced serious adverse events, but these were primarily related to disease progression.

One patient experienced a dose-limiting toxicity of asymptomatic grade 3 QT prolongation at the highest dose tested, which improved to grade 1 with dose reduction. The patient was in complete remission and remained on AG-120 at the time of presentation.

There were 6 patient deaths, all unrelated to AG-120. Five deaths occurred after patients discontinued treatment due to progressive disease, and 1 patient died due to disease-related intracranial hemorrhage while on treatment.

“We look forward to presenting new data from the ongoing phase 1 study at the EHA Annual Congress next month,” Dr Bowden said, “and remain on track to initiate a global, registration-enabling, phase 3 study in collaboration with Celgene in AML patients who harbor an IDH1 mutation in the first half of 2016.”

DDW: Menopausal hormone therapy increases major GI bleed risk

Menopausal hormone therapy is associated with an increased risk of major gastrointestinal bleeding, particularly in the lower gastrointestinal tract, and the risk rises with duration of use, a study has found.

Analysis of data from 73,863 women enrolled in the Nurses’ Health Study II in 1989 showed that current users of menopausal hormone therapy had a 46% increase in the risk of a major gastrointestinal bleed and a more than twofold increase in the risk of a lower GI bleed or ischemic colitis, compared with never users, said Dr. Prashant Singh of Massachusetts General Hospital, Boston.

Past users showed a much smaller increase in bleeding risk, while increasing duration of hormone therapy was significantly associated with increasing risk of major and lower gastrointestinal bleeding.

“Although our findings show that menopausal hormone therapy may increase the risk of major GI bleeding, especially in the lower GI tract, it is important for these patients to know that this therapy is still an effective treatment; however, both clinician and patient should be more cautious in using this therapy in some cases, such as with patients who have a history of ischemic colitis,” Dr. Singh said at the annual Digestive Disease Week.

Dr. Singh does not have any relevant financial or other relationship with any manufacturer or provider of commercial products or services that he discussed during the presentation.

FROM DDW 2015

Key clinical point: Menopausal hormone therapy is associated with an increased risk of major gastrointestinal bleeding, particularly in the lower gastrointestinal tract.

Major finding: Current users of menopausal hormone therapy had a 46% increase in the risk of a major gastrointestinal bleed and a more than twofold increase in the risk of a lower GI bleed or ischemic colitis.

Data source: Analysis of data from 73,863 women enrolled in the Nurses’ Health Study II.

Disclosures: No conflicts of interest were disclosed.

DDW: Urinary enzymes hint at gastric cancer

WASHINGTON – A simple urine test could detect gastric cancer even at an early stage, the test’s developers say.

The test, which looks for the presence of two metalloprotease enzymes labeled ADAM 12 and MMP-9/NGAL, had 77.1% sensitivity and 82.9% specificity for gastric cancer when tested in 35 patients with the malignancy and an equal number of healthy controls, reported Dr. Takaya Shimura from the department of surgery at Boston Children’s Hospital and Harvard Medical School in Boston.

“This study represents the first demonstration of the presence of ADAM 12 and MMP-9/NGAL complex in the urine of gastric cancer patients,” he said at the annual Digestive Disease Week.

Dr. Stephen J. Meltzer of Johns Hopkins University, Baltimore, commented in an interview that the findings are convincing but preliminary.

A randomized clinical trial enrolling a larger number of patients and controls would be required before he would consider screening patients for the enzymes, said Dr. Meltzer, who was not involved in the study and comoderated the meeting session where the results were presented.

ADAM 12 (a disintegrin and metalloprotease 12) and MMP-9 (matrix metalloprotease 9) are both members of a family of enzymes involved in cellular adhesion, invasion, growth, and angiogenesis, Dr. Shimura explained. MMP-9, when complexed with NGAL (neutrophil gelatinase-associated lipocalin), is protected from autodegradation.

The investigators, from the lab of Dr. Marsha A. Moses at Boston Children’s Hospital, and their collaborators in Japan had previously reported that MMPs in urine were independent predictors of both organ-confined and metastatic cancer.

Urinary assays are noninvasive, using easily accessed tissues that can be handled simply and inexpensively, making them ideal for cancer detection, Dr. Shimura said.

Current tests for gastric cancer, such as carcinoembryonic antigen (CEA) and cancer antigens (CA) 19-9 and 72-4, have poor sensitivity for detecting advanced disease, and are even worse at spotting early disease, he noted.

To see whether they could improve on the current lot of tests, the investigators enrolled 106 patients in a case-control study, settling eventually, after age and sex matching, on a cohort of 70 patients: 35 with primarily early-stage gastric cancer, and 35 healthy controls.

After screening the urine of participants for about 50 different antigenic proteins, they found that the patients with gastric cancer had significantly higher levels in their urine of both ADAM 12 (P < .001) and the MMP-9/NGAL complex (P = .020).

In a multivariate analysis, they showed that both enzymes were strong, independent predictors of gastric cancer, with an odds ratio for urinary MMP-9/NGAL of 6.71 (P = .002), and an OR of 15.4 for ADAM 12 (P = .002). In contrast, Helicobacter pylori infection was associated with a nonsignificant OR of 2.54.

In a receiver operating characteristic (ROC) analysis, they also found that MMP-9/NGAL was associated with an area under the curve (AUC) of 0.657 (P = .024), ADAM 12 was associated with an AUC of 0.757 (P < .001), and that the two combined had an AUC of 0.825 (P < .001).

As noted before, the sensitivity of the combined enzymes was 77%, and the specificity was 83%.
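For readers less familiar with these measures, the sketch below shows how sensitivity and specificity are derived from a 2x2 table. The individual counts (27 true positives, 29 true negatives) are assumptions: the report does not list them, and they were chosen only because they reproduce the stated 77.1% and 82.9% with 35 cases and 35 controls. The function name is likewise hypothetical.

```python
def sensitivity_specificity(true_pos, false_neg, true_neg, false_pos):
    """Return (sensitivity, specificity) as fractions between 0 and 1."""
    # Sensitivity: share of patients with the disease who test positive.
    sensitivity = true_pos / (true_pos + false_neg)
    # Specificity: share of healthy controls who test negative.
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


# Assumed counts, not taken from the study: 35 cases and 35 controls,
# split so that the reported percentages are reproduced.
sens, spec = sensitivity_specificity(
    true_pos=27, false_neg=8, true_neg=29, false_pos=6
)
print(f"sensitivity = {sens:.1%}, specificity = {spec:.1%}")
# prints "sensitivity = 77.1%, specificity = 82.9%"
```

With only 35 participants per group, a shift of a single patient moves either figure by almost 3 percentage points, which is one reason a larger study would be needed before the test could be used for screening.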

Finally, using immunohistochemical analysis, the investigators were able to show that gastric cancer tissues had high levels of coexpression of MMP-9 and NGAL (P < .001) and high expression levels of ADAM 12 (P < .001), compared with adjacent normal tissues.

WASHINGTON – A simple urine test could detect gastric cancer even at an early stage, the test’s developers say.

The test, which looks for the presence of two metalloprotease enzymes labeled ADAM 12 and MMP-9/NGAL had 77.1% sensitivity and 82.9% specificity for gastric cancer when tested in 35 patients with the malignancy and an equal number of healthy controls, reported Dr. Takaya Shimura from the department of surgery at Boston Children’s Hospital and Harvard Medical School in Boston.

“This study represents the first demonstration of the presence of ADAM 12 and MMO-9/NGAL complex in the urine of gastric cancer patients,” he said at the annual Digestive Disease Week.

Dr. Stephen J. Meltzer of Johns Hopkins University, Baltimore, commented in an interview that the findings are convincing but preliminary.

A randomized clinical trial enrolling a larger number of patients and controls would be required before he would consider screening patients for the enzymes, said Dr. Meltzer, who was not involved in the study and comoderated the meeting session where the results were presented.

WASHINGTON – A simple urine test could detect gastric cancer even at an early stage, the test’s developers say.

The test, which looks for the presence of two metalloprotease enzymes labeled ADAM 12 and MMP-9/NGAL, had 77.1% sensitivity and 82.9% specificity for gastric cancer when tested in 35 patients with the malignancy and an equal number of healthy controls, reported Dr. Takaya Shimura from the department of surgery at Boston Children’s Hospital and Harvard Medical School in Boston.

“This study represents the first demonstration of the presence of ADAM 12 and MMP-9/NGAL complex in the urine of gastric cancer patients,” he said at the annual Digestive Disease Week.

Dr. Stephen J. Meltzer of Johns Hopkins University, Baltimore, commented in an interview that the findings are convincing but preliminary.

A randomized clinical trial enrolling a larger number of patients and controls would be required before he would consider screening patients for the enzymes, said Dr. Meltzer, who was not involved in the study and comoderated the meeting session where the results were presented.

ADAM 12 (a disintegrin and metalloprotease 12) and MMP-9 (matrix metalloprotease 9) are both members of a family of enzymes involved in cellular adhesion, invasion, growth, and angiogenesis, Dr. Shimura explained. MMP-9, when complexed with NGAL (neutrophil gelatinase-associated lipocalin), is protected from autodegradation.

The investigators, from the lab of Dr. Marsha A. Moses at Boston Children’s Hospital, and their collaborators in Japan had previously reported that MMPs in urine were independent predictors of both organ-confined and metastatic cancer.

Urinary assays are noninvasive, using easily accessed tissues that can be handled simply and inexpensively, making them ideal for cancer detection, Dr. Shimura said.

Current tests for gastric cancer, such as carcinoembryonic antigen (CEA) and cancer antigens (CA) 19-9 and 72-4, have poor sensitivity for detecting advanced disease, and are even worse at spotting early disease, he noted.

To see whether they could improve on the current lot of tests, the investigators enrolled 106 patients in a case-control study, settling eventually, after age and sex matching, on a cohort of 70 patients: 35 with primarily early-stage gastric cancer, and 35 healthy controls.

After screening the urine of participants for about 50 different antigenic proteins, they found that the patients with gastric cancer had significantly higher levels in their urine of both ADAM 12 (P < .001) and the MMP-9/NGAL complex (P = .020).

In a multivariate analysis, they showed that both enzymes were strong, independent predictors of gastric cancer, with an odds ratio for urinary MMP-9/NGAL of 6.71 (P = .002), and an OR of 15.4 for ADAM 12 (P = .002). In contrast, Helicobacter pylori infection was associated with a nonsignificant OR of 2.54.

In a receiver operating characteristic (ROC) analysis, they also found that MMP-9/NGAL was associated with an area under the curve (AUC) of 0.657 (P = .024), that ADAM 12 was associated with an AUC of 0.757 (P < .001), and that the two combined had an AUC of 0.825 (P < .001).

As noted before, the sensitivity of the combined enzymes was 77%, and the specificity was 83%.
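The reported percentages can be checked against the group sizes with a short calculation. The sketch below is illustrative only: the article gives 35 patients and 35 controls, but the counts of 27 correctly identified patients and 29 correctly identified controls are assumptions back-calculated from the reported 77.1% and 82.9%, not figures stated by the investigators.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of people with the disease whom the test flags as positive."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Fraction of people without the disease whom the test clears as negative."""
    return true_neg / (true_neg + false_pos)


# Group sizes reported in the article.
cases = 35
controls = 35

# ASSUMPTION: counts inferred from the reported percentages, not from the study.
true_pos = 27   # cancer patients with a positive urine test
true_neg = 29   # healthy controls with a negative urine test

sens = sensitivity(true_pos, cases - true_pos)
spec = specificity(true_neg, controls - true_neg)

print(f"sensitivity = {sens:.1%}, specificity = {spec:.1%}")
```

With groups this small, each additional misclassified participant shifts either figure by nearly 3 percentage points, which is one reason a larger study would be needed to confirm the estimates.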

Finally, using immunohistochemical analysis, the investigators were able to show that gastric cancer tissues had high levels of coexpression of MMP-9 and NGAL (P < .001) and high expression levels of ADAM 12 (P < .001), compared with adjacent normal tissues.

AT DDW 2015

Key clinical point: Urinary levels of two metalloproteases were significantly elevated in patients with gastric cancer, compared with controls.

Major finding: High expression of ADAM 12 and MMP-9/NGAL complex had a 77% sensitivity and 83% specificity for gastric cancer.

Data source: Case-control study of 35 patients with gastric cancer and 35 controls.

Disclosures: The study was supported by the Advanced Medical Research Foundation in the United States and the Research Fellowship of the Uehara Memorial Foundation, Japan. Dr. Shimura reported having no conflicts of interest.

DDW: Scheduled for a colonoscopy? Pass the pretzels!

WASHINGTON – A novel, edible colon preparation could make obsolete the fasting and large volume of salty liquid cleansing that keep many a patient from completing their colonoscopies.

A pilot study showed that all 10 patients who ate a series of nutritionally balanced meals, drinks, and snacks such as pretzels and pudding had a successful colon cleansing according to the endoscopist at the time of the procedure, Dr. L. Campbell Levy of Dartmouth-Hitchcock Medical Center in Lebanon, N.H., reported at the annual Digestive Disease Week.

The preparation, which is blended with polyethylene glycol 3350, sorbitol, and ascorbic acid, did not produce any significant changes in electrolytes or creatinine.

There were no adverse events and, equally important, all 10 patients said that they would follow the edible bowel regimen again for a subsequent procedure.

In a video interview, he discussed the small study’s results and the plans for larger, randomized studies.

Dr. Levy reported no relevant conflicts. The inventor of the diet and the founder of Colonary Concepts were involved in the study.

On Twitter @pwendl

AT DDW® 2015

Wells score not effective at inpatient DVT detection

While effective in outpatient settings, the Wells score was not effective at detecting deep vein thrombosis in an inpatient setting, according to Dr. Patricia Silveira and her associates from Harvard Medical School, Boston.

Of 1,135 patients included in the study, 137 had proximal DVT. DVT incidence in the low, medium, and high pretest probability groups was 5.9%, 9.5%, and 16.4%, respectively. Although the differences were statistically significant, this is a very narrow range, in contrast to findings in previous studies, where incidence among the three groups was 3.0%, 16.6%, and 74.6%, respectively.

The AUC for the accuracy of the Wells score was 0.6, only slightly better than chance. The failure rate in the low pretest group was 5.9%, and the efficiency was 11.9%.
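The failure rate and efficiency figures are simple proportions, which can be sketched as follows. This assumes the definitions commonly used for clinical decision rules (failure rate as the share of low-probability patients who turn out to have DVT, efficiency as the share of all patients classified as low probability). Only the total of 1,135 patients comes from the article; the low-probability group size of 135 and the 8 DVT cases within it are hypothetical values chosen to be consistent with the reported 11.9% and 5.9%.

```python
def failure_rate(dvt_in_low_group: int, low_group_size: int) -> float:
    """Share of patients scored as low probability who nonetheless had DVT."""
    return dvt_in_low_group / low_group_size


def efficiency(low_group_size: int, total_patients: int) -> float:
    """Share of all patients whom the score places in the low-probability group."""
    return low_group_size / total_patients


total_patients = 1135   # reported in the article

# ASSUMPTION: illustrative counts back-calculated from the reported percentages.
low_group_size = 135
dvt_in_low_group = 8

fail = failure_rate(dvt_in_low_group, low_group_size)
eff = efficiency(low_group_size, total_patients)

print(f"failure rate = {fail:.1%}, efficiency = {eff:.1%}")
```

Read together, the two numbers describe a rule that sorts few inpatients into the low-probability group and still misses a meaningful share of DVTs among them.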

“In inpatients, Wells DVT scores are inflated by comorbidities and nonspecific physical findings common among hospitalized patients, leaving very few patients in the low-probability Wells score category, and many patients without DVT in the moderate- and high-probability categories,” Dr. Erika Leemann Price and Dr. Tracy Minichiello wrote in a related editorial.

Find the full study and editorial in JAMA Internal Medicine (doi:10.1001/jamainternmed.2015.1687; doi:10.1001/jamainternmed.2015.1699).

MRD doesn’t suggest need for more treatment

© Hind Medyouf, German Cancer Research Center

A new study suggests that minimal residual disease (MRD) alone is not predictive of outcomes in children with T-cell acute lymphoblastic leukemia (T-ALL).

Study investigators analyzed a small group of T-ALL patients who received similar treatment regimens, comparing those with and without MRD after induction.

None of the MRD-positive patients relapsed within the follow-up period, despite not receiving intensified treatment to fully eradicate their disease.

These results, published in Pediatric Blood & Cancer, suggest T-ALL patients with MRD may not need intensified treatment and can therefore avoid additional toxicities.

“Until now, the dogma has been that, for patients with leukemia who have minimal residual disease at the end of induction, we need to intensify their treatment, which also increases side effects,” said study author Hisham Abdel-Azim, MD, of Children’s Hospital Los Angeles in California.

“We have found, for T-ALL, patients have excellent outcomes without therapy intensification and its associated toxicities.”

Dr Abdel-Azim and his colleagues studied 33 children (ages 1 to 21) with newly diagnosed T-ALL. Their treatment included induction, augmented consolidation, interim maintenance (high-dose [5 g/m²] or escalating-dose intravenous methotrexate), 1 delayed intensification, and maintenance. Twenty-one patients underwent cranial irradiation, and 1 received a transplant.

After induction, 19 of the 32 patients analyzed had MRD, which was defined as ≥ 0.01% residual leukemia cells. At the end of consolidation, 6 of the 11 patients tested were MRD-positive. And at the end of interim maintenance, 2 of the 4 patients tested were MRD-positive.

The 19 patients who were MRD-positive after induction were in continuous complete remission at a median follow-up of 4 years (range, 1.3-7.1 years). The same was true for 13 of the 14 MRD-negative patients. One of the MRD-negative patients relapsed 18 months after diagnosis but was still alive with refractory disease at last follow-up.