Team discovers target for antimalarial drugs

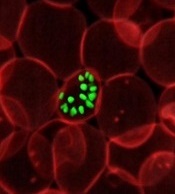

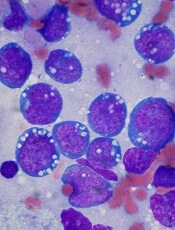

A red blood cell. Image courtesy of St. Jude Children’s Research Hospital

New research indicates that manipulating the permeability of parasitophorous vacuoles could defeat malaria parasites.

The researchers made this finding while studying how the Toxoplasma gondii parasite, which causes toxoplasmosis, and Plasmodium parasites, which cause malaria, access vital nutrients from their host cells.

The team described this work in Cell Host & Microbe.

Roughly a third of the world’s deadly infectious diseases are caused by pathogens that spend much of their life cycle inside parasitophorous vacuoles. This type of vacuole uses a membrane to separate the parasite from the host cytoplasm, protecting the parasite from the host cell’s defenses and providing an environment tailored to the parasite’s needs.

However, the vacuole membrane is also a barrier between the parasite and the host cell. It makes it harder for the parasite to export the proteins it uses to transform the host cell and spread disease, and harder for it to gain access to vital nutrients.

“Ultimately, what defines a parasite is that they require certain key nutrients from their host,” said study author Dan Gold, PhD, of the Massachusetts Institute of Technology in Cambridge.

“So they have had to evolve ways to get around their own barriers to gain access to these nutrients.”

Previous research suggested the vacuoles are selectively permeable to small molecules, allowing certain nutrients to pass through pores in the membrane. But until now, no one has been able to determine the molecular makeup of these pores or how they are formed.

While studying Toxoplasma, Dr Gold and his colleagues identified 2 proteins secreted by the parasite, GRA17 and GRA23, that are responsible for forming these pores in the vacuole membrane. The researchers uncovered the proteins’ roles by accident while investigating how the parasites release their own proteins into the host cell beyond the vacuole membrane.

Similar research into how the related Plasmodium parasites perform this trick revealed a protein export complex that transports parasite-encoded proteins into the host red blood cell, remodeling the cell in a way that is vital to the spread of malaria.

“The clinical symptoms of malaria are dependent on this process and this remodeling of the red blood cell that occurs,” Dr Gold said.

The researchers identified proteins secreted by Toxoplasma that appeared to be homologues of this protein export complex in Plasmodium. But when the team disrupted these proteins, export of proteins from the parasite beyond the vacuole was unaffected.

“We were left wondering what GRA17 and GRA23 actually do if they are not involved in protein export, and so we went back to look at this longstanding phenomenon of nutrient transport,” Dr Gold said.

When the researchers knocked out the 2 proteins and added dyes to the host cell, the dyes could no longer flow into the vacuole.

“That was our first indication that these proteins actually have a role in small-molecule transfer,” Dr Gold said.

When the researchers expressed a Plasmodium export complex gene in the modified Toxoplasma, they found the dyes were able to flow into the vacuole once again, suggesting this small-molecule transport function had been restored.

Because these proteins are found only in the parasite phylum Apicomplexa, to which both Toxoplasma and Plasmodium belong, they could serve as drug targets against the diseases these parasites cause, the researchers said.

“This very strongly suggests that you could find small-molecule drugs to target these pores, which would be very damaging to these parasites but likely wouldn’t have any interaction with any human molecules,” Dr Gold said. “So I think this is a really strong potential drug target for restricting the access of these parasites to a set of nutrients.”

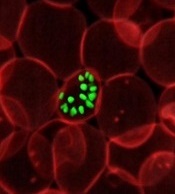

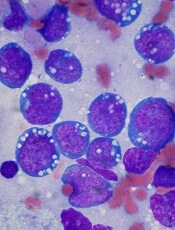

a red blood cell

Image courtesy of St. Jude

Children’s Research Hospital

New research indicates that manipulating the permeability of parasitophorous vacuoles could defeat malaria parasites.

The researchers unearthed this finding while studying the way in which the Toxoplasma gondii parasite, which causes toxoplasmosis, and Plasmodium parasites,

which cause malaria, access vital nutrients from their host cells.

The team described this work in Cell Host & Microbe.

Roughly a third of the world’s deadly infectious diseases are caused by pathogens that spend a large portion of their life inside parasitophorous vacuoles. This type of vacuole separates the host cytoplasm and the parasite by a membrane, protecting the parasite from the host cell’s defenses and providing an environment tailored to the parasite’s needs.

However, the membrane of the vacuole also acts as a barrier between the parasite and the host cell. This makes it more difficult for the parasite to release proteins involved in the transformation of the host cell beyond the membrane to spread the disease and for the pathogen to gain access to vital nutrients.

“Ultimately, what defines a parasite is that they require certain key nutrients from their host,” said study author Dan Gold, PhD, of the Massachusetts Institute of Technology in Cambridge.

“So they have had to evolve ways to get around their own barriers to gain access to these nutrients.”

Previous research suggested the vacuoles are selectively permeable to small molecules, allowing certain nutrients to pass through pores in the membrane. But until now, no one has been able to determine the molecular makeup of these pores or how they are formed.

When studying Toxoplasma, Dr Gold and his colleagues discovered 2 proteins secreted by the parasite, known as GRA17 and GRA23, which are responsible for forming these pores in the vacuole. The researchers discovered the proteins’ roles by accident while investigating how the parasites are able to release their own proteins out into the host cell beyond the vacuole membrane.

Similar research into how the related Plasmodium pathogens perform this trick revealed a protein export complex that transports encoded proteins from a parasite into its host red blood cell, which transforms the cell in a way that is vital to the spread of malaria.

“The clinical symptoms of malaria are dependent on this process and this remodeling of the red blood cell that occurs,” Dr Gold said.

The researchers identified proteins secreted by Toxoplasma that appeared to be homologues of this protein export complex in Plasmodium. But when they stopped these proteins from functioning, the team found it made no difference to the export of proteins from the parasite beyond the vacuole.

“We were left wondering what GRA17 and GRA23 actually do if they are not involved in protein export, and so we went back to look at this longstanding phenomenon of nutrient transport,” Dr Gold said.

When they added dyes to the host cell and knocked out the 2 proteins, the researchers found that it prevented the dyes flowing into the vacuole.

“That was our first indication that these proteins actually have a role in small-molecule transfer,” Dr Gold said.

When the researchers expressed a Plasmodium export complex gene in the modified Toxoplasma, they found the dyes were able to flow into the vacuole once again, suggesting this small-molecule transport function had been restored.

Since these proteins are only found in the parasite phylum Apicomplexa, to which both Toxoplasma and Plasmodium belong, they could be used as a drug target against the diseases they cause, the researchers said.

“This very strongly suggests that you could find small-molecule drugs to target these pores, which would be very damaging to these parasites but likely wouldn’t have any interaction with any human molecules,” Dr Gold said. “So I think this is a really strong potential drug target for restricting the access of these parasites to a set of nutrients.”![]()

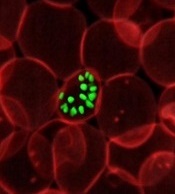

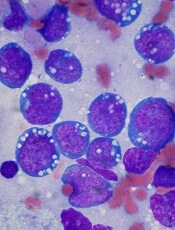

a red blood cell

Image courtesy of St. Jude

Children’s Research Hospital

New research indicates that manipulating the permeability of parasitophorous vacuoles could defeat malaria parasites.

The researchers unearthed this finding while studying the way in which the Toxoplasma gondii parasite, which causes toxoplasmosis, and Plasmodium parasites,

which cause malaria, access vital nutrients from their host cells.

The team described this work in Cell Host & Microbe.

Roughly a third of the world’s deadly infectious diseases are caused by pathogens that spend a large portion of their life inside parasitophorous vacuoles. This type of vacuole separates the host cytoplasm and the parasite by a membrane, protecting the parasite from the host cell’s defenses and providing an environment tailored to the parasite’s needs.

However, the membrane of the vacuole also acts as a barrier between the parasite and the host cell. This makes it more difficult for the parasite to release proteins involved in the transformation of the host cell beyond the membrane to spread the disease and for the pathogen to gain access to vital nutrients.

“Ultimately, what defines a parasite is that they require certain key nutrients from their host,” said study author Dan Gold, PhD, of the Massachusetts Institute of Technology in Cambridge.

“So they have had to evolve ways to get around their own barriers to gain access to these nutrients.”

Previous research suggested the vacuoles are selectively permeable to small molecules, allowing certain nutrients to pass through pores in the membrane. But until now, no one has been able to determine the molecular makeup of these pores or how they are formed.

When studying Toxoplasma, Dr Gold and his colleagues discovered 2 proteins secreted by the parasite, known as GRA17 and GRA23, which are responsible for forming these pores in the vacuole. The researchers discovered the proteins’ roles by accident while investigating how the parasites are able to release their own proteins out into the host cell beyond the vacuole membrane.

Similar research into how the related Plasmodium pathogens perform this trick revealed a protein export complex that transports encoded proteins from a parasite into its host red blood cell, which transforms the cell in a way that is vital to the spread of malaria.

“The clinical symptoms of malaria are dependent on this process and this remodeling of the red blood cell that occurs,” Dr Gold said.

The researchers identified proteins secreted by Toxoplasma that appeared to be homologues of this protein export complex in Plasmodium. But when they stopped these proteins from functioning, the team found it made no difference to the export of proteins from the parasite beyond the vacuole.

“We were left wondering what GRA17 and GRA23 actually do if they are not involved in protein export, and so we went back to look at this longstanding phenomenon of nutrient transport,” Dr Gold said.

When they added dyes to the host cell and knocked out the 2 proteins, the researchers found that it prevented the dyes flowing into the vacuole.

“That was our first indication that these proteins actually have a role in small-molecule transfer,” Dr Gold said.

When the researchers expressed a Plasmodium export complex gene in the modified Toxoplasma, they found the dyes were able to flow into the vacuole once again, suggesting this small-molecule transport function had been restored.

Since these proteins are only found in the parasite phylum Apicomplexa, to which both Toxoplasma and Plasmodium belong, they could be used as a drug target against the diseases they cause, the researchers said.

“This very strongly suggests that you could find small-molecule drugs to target these pores, which would be very damaging to these parasites but likely wouldn’t have any interaction with any human molecules,” Dr Gold said. “So I think this is a really strong potential drug target for restricting the access of these parasites to a set of nutrients.”![]()

AAN: Finding ways to improve door-to-needle times in stroke treatment

WASHINGTON – Two approaches to shortening the time it takes for patients with acute ischemic stroke to receive thrombolytic therapy were presented at the annual meeting of the American Academy of Neurology: a streamlined emergency care process and a low-cost, tablet-based mobile telestroke system.

American Heart Association/American Stroke Association guidelines recommend a door-to-needle (DTN) time of 60 minutes or less and set a goal for participating hospitals to administer tissue plasminogen activator (TPA) to at least 50% of their patients with acute ischemic stroke within 60 minutes of arriving at the hospital.

Dr. Judd Jensen described the efforts of Swedish Medical Center, Englewood, Colo., to streamline the emergency care of patients suspected of having an acute ischemic stroke after a task force determined that its previous “sequential, step-by-step process” wasted time. The median DTN time at the hospital’s stroke center had dropped from 46 minutes in 2010 to 39 minutes in 2013, which was better than the national average, “but we felt we could do better,” said Dr. Jensen, a neurologist at the hospital.

The process was modified so that more activities take place simultaneously, including sending patients for a CT scan immediately, before they enter the emergency department, and administering IV TPA to eligible patients in the CT area, he explained. Previously, patients were taken to an ED bed on arrival, registered, examined by the emergency physician and neurologist, transported for a CT scan, and then transported back to the ED, where TPA was administered, if indicated, only after several other steps were completed: interpreting the CT scan, deciding about treatment, obtaining consent, and contacting the pharmacy to mix the TPA.

The new process relies on increased prehospital notification by emergency medical services (EMS) and a “launchpad” area in the back of the ED where the stroke team assembles after EMS notification. On arrival, patients are taken directly to the CT room, where they are examined. The pharmacy is instructed to mix the TPA if an ischemic stroke is suspected, and the TPA is brought to the CT room, where a stroke neurologist reviews the CT scan and TPA is administered if indicated.

The impact of the revised process was evaluated in a prospective study of 262 patients with acute ischemic stroke who received IV TPA at the hospital between January 2010 and December 2014. Their mean age was 73 years, 44% were male, 84% were white, and their mean initial National Institutes of Health Stroke Scale (NIHSS) score was 12. The median DTN time dropped to 31 minutes in 2014, Dr. Jensen said.

In 2014, almost 50% of patients received TPA in 30 minutes or less, compared with about 25% in 2011, 2012, and 2013, he added, noting that the fastest DTN time in 2014 was 11 minutes. The proportion of patients with an excellent modified Rankin Scale (mRS) score (0 or 1) at discharge rose from 31% in 2010 and 30% in 2013 to 46% in 2014. During the study period, two patients had a symptomatic intracerebral hemorrhage, one in 2010 and one in 2012.

Dr. Jensen described the process as a multidisciplinary team effort, noting that it is important that emergency room physicians feel comfortable with the administration of TPA in the CT scan area, “because it is still their patient being administered a potentially fatal drug outside of the ED.”

At the meeting, Matthew Padrick, a medical student at the University of Virginia, Charlottesville, presented results of a pilot study that treated EMS transport time as an “untapped treatment window.” The study used a low-cost mobile telestroke system to evaluate patients in the ambulance on the way to the hospital, with the goal of shortening the time to thrombolytic treatment.

Because the catchment area covered by UVA includes a large rural area, transport times to the stroke center can be as long as 30 to 60 minutes, Mr. Padrick said.

In the “Improving Treatment with Rapid Evaluation of Acute Stroke via mobile Telemedicine” (iTREAT) study, he and his associates evaluated the feasibility and reliability of performing acute stroke assessments (with the NIHSS) in the ambulance. The iTREAT system consists of an Apple iPad with Retina display attached to the patient stretcher with an extendable clamp, a secure videoconferencing application, a high-speed 4G LTE modem, and a magnetic antenna on top of the ambulance, and it runs over the regional cellular network, “providing seamless connectivity,” he said. The system, which costs less than $2,000 in total, lets the neurologist evaluate the patient remotely via the iPad.

Three medical students acted as patients, each given two unique stroke scenarios with backstories and specific instructions. Vascular neurologists performed face-to-face assessments, as well as remote iTREAT assessments from the hospital, as the students traveled along the major routes to UVA Medical Center. NIHSS scores obtained in the ambulance with the iTREAT system correlated well with face-to-face scores, with an overall intraclass correlation of 0.98, Mr. Padrick reported.

Audio-video quality during the iTREAT evaluations was rated “good” or “excellent,” and both the NIHSS correlations and the audio-video quality ratings improved over time, he added.

“We currently have IRB approval to move forward with real, live patient encounters and we are currently outfitting and training our local EMS agencies” with the system, Mr. Padrick said in an interview after the meeting.

Mr. Padrick has received research support from the American Heart Association. Dr. Jensen had nothing to disclose.

AT THE AAN 2015 ANNUAL MEETING

Combo targets AML, BL in the same way

Image by Ed Uthman

Combining a cholesterol-lowering drug and a contraceptive steroid could be a safe, effective treatment for leukemias, lymphomas, and other malignancies, according to researchers.

Their work helps explain how this combination treatment, bezafibrate and medroxyprogesterone acetate (BaP), kills cancer cells.

The team discovered that BaP’s mechanism of action is the same in acute myeloid leukemia (AML) and Burkitt lymphoma (BL) cells.

The findings have been published in Cancer Research.

In early-stage trials, BaP prolonged survival in elderly AML patients compared with standard palliative care. BaP has also been used successfully alongside chemotherapy to treat children with BL.

However, it was unclear whether BaP’s activity against these 2 very different malignancies was mediated by a common mechanism or by different effects in each cancer.

To find out, Andrew Southam, PhD, of the University of Birmingham in the UK, and his colleagues investigated the drugs’ effects on the metabolism and chemical makeup of AML and BL cells.

They found that, in both cell types, BaP blocks stearoyl-CoA desaturase, an enzyme that converts saturated fatty acids into monounsaturated fatty acids, which cancer cells need to grow and multiply. The team also showed that inhibition of stearoyl-CoA desaturase was what triggered the cancer cells’ death.

“Developing drugs to target the fatty-acid building blocks of cancer cells has been a promising area of research in recent years,” Dr Southam said. “It is very exciting we have identified these non-toxic drugs already sitting on pharmacy shelves.”

He and his colleagues believe these findings also raise the possibility that BaP could be used to treat other cancers that rely on high levels of stearoyl-CoA desaturase to grow, including chronic lymphocytic leukemia and some types of non-Hodgkin lymphoma, as well as prostate, colon, and esophageal cancers.

“This drug combination shows real promise,” said Chris Bunce, PhD, also of the University of Birmingham.

“Affordable, effective, non-toxic treatments that extend survival, while offering a good quality of life, are in demand for almost all types of cancer.”


Consider laser ablation therapy for treatment of benign thyroid nodules

NASHVILLE – Ultrasound-guided laser ablation therapy was found to be a clinically safe, effective, and well-tolerated option for the treatment of benign thyroid nodules, both solid and mixed, in a retrospective, multicenter study presented at the annual meeting of the American Association of Clinical Endocrinologists.

“We know that image-guided laser ablation of solid thyroid nodules has demonstrated favorable results in several prospective randomized trials,” said Dr. Enrico Papini of Regina Apostolorum Hospital in Rome. “However, these results were obtained in selected patients, with single treatments and fixed modalities of treatment; so the question is, what happens in a real clinical practice?”

Dr. Papini explained that the aim of the study was to assess the clinical efficacy and side effects of laser ablation therapy (LAT) in a large series of unselected benign thyroid nodules of variable structure and size, using data from centers that use LAT as a standard technique. Patients with solid or mixed nodules (fluid component of up to 40%), benign cytological findings, and normal thyroid function were included.

Clinical records for 1,534 thyroid nodules in 1,531 patients, all treated within the previous 10 years, were collected from eight Italian thyroid referral centers. A total of 1,837 LAT procedures were performed; 1,280 of the 1,534 nodules (83%) were treated in a single session. No nodule required more than three consecutive sessions, and all were treated with a fixed output power of 3 watts. According to Dr. Papini, the laser is fired for no more than 10 minutes.

Mean nodule volume decreased significantly after LAT, from 27 ± 24 mL at baseline to 8 ± 8 mL at 12 months (P < .001), and the mean volume reduction was 72% ± 11% (range, 48%-100%). Mixed nodules shrank significantly more than solid ones: on average, mixed nodule volume decreased 79% ± 7%, versus 72% ± 11% for solid nodules (P < .001), a difference attributed to drainage of the fluid component before LAT.

Reported symptoms fell from 49% at baseline to 10% at 12 months post-treatment, and cosmetic signs showed a similarly robust reduction, from 86% to 8% over 12 months. Only 17 patients experienced a complication: 8 had a “major” complication (dysphonia, which resolved within 2-84 days), and 9 had “minor” complications, such as skin burn and hematoma.

“Laser ablation was performed in outpatient setting, with no hospital admission after treatment,” said Dr. Papini. “It was well-tolerated, and severe pain – requiring more than 3 days of analgesics – occurred in less than 2% of patients.”

Dr. Papini did not report any relevant financial disclosures.


AT AACE 2015

Key clinical point: Ultrasound-guided laser ablation therapy is a clinically effective and well-tolerated tool for treating benign solid and mixed thyroid nodules.

Major finding: In 1,837 treatments for 1,534 nodules, mean nodule volume decreased from 27 ± 24 mL at baseline to 8 ± 8 mL at 12 months after treatment (P < .001), and mean nodule volume reduction was 72% ± 11% (range 48%-100%).

Data source: Retrospective, multicenter study of 1,534 benign solid and mixed thyroid nodules.

Disclosures: Dr. Papini did not report any relevant financial disclosures.

Botox treatments improve urinary incontinence in neurogenic bladder dysfunction

NEW ORLEANS – Repeated injections of onabotulinumtoxinA significantly reduced urinary incontinence in patients with neurogenic detrusor overactivity over 4 years of follow-up, according to results from the extension of a randomized trial.

Incontinence episodes decreased from an average of four per day to one or less after each treatment, Dr. Eric Rovner said at the annual meeting of the American Urological Association.

Each treatment was effective for about 9 months, and the benefit remained consistent throughout the 4-year study, said Dr. Rovner of the Medical University of South Carolina, Charleston.

About 90% of patients had at least a 50% reduction in incontinence episodes, and more than half experienced a complete cessation of incontinence.

OnabotulinumtoxinA (Botox) was approved in 2011 as a treatment for neurogenic urinary incontinence. Each treatment consists of 20 injections delivered cystoscopically.

Dr. Rovner reported a post hoc analysis of 227 patients who completed 4 years of treatment: a 1-year placebo-controlled trial followed by a 3-year open-label extension, at a dose of 200 units of onabotulinumtoxinA.

Patients were relatively young (mean age, 45 years), and about half were male. A slight majority (53%) had multiple sclerosis; the remainder had a spinal cord injury that affected bladder function. Half were taking an anticholinergic medication but had not responded to it.

Most patients (71%) were already performing intermittent catheterization. Despite that, they had a mean of four incontinence episodes each day.

Over the entire 4 years, onabotulinumtoxinA was associated with significant and consistent improvements in incontinence, with mean decreases of up to 3.8 episodes per day in each year. Each year, about 90% experienced at least a 50% improvement. About half experienced a complete cessation of incontinence over the period.

Urinary tract infections occurred in 20% of patients in years 1 and 2 and in 18% in years 3 and 4, a difference that was not significant. Urinary retention was highest in year 1 (12%) and dropped to 2% by years 3 and 4.

In the first year, 39% of patients who were not performing intermittent catheterization at baseline had to begin doing so. The de novo catheterization rate was 11% in year 2 and 8% in year 3, and there were no new catheterizations in year 4.

These changes were not only statistically significant but also clinically important, Dr. Rovner said. On a secondary measure, the Incontinence Quality of Life Questionnaire (I-QOL), patients had a mean increase of more than 30 points in each study year; an 11-point change is usually considered clinically meaningful, he said.

“This was making a big difference for these patients.”

Dr. Rovner disclosed relationships with Allergan and a number of other pharmaceutical and medical device companies.

On Twitter @alz_gal


AT THE AUA ANNUAL MEETING

Key clinical point: OnabotulinumtoxinA injections produced a consistent and significant improvement in urinary incontinence in patients with neurogenic detrusor overactivity.

Major finding: Nearly 90% of patients had at least a 50% improvement in incontinence episodes, and almost half experienced a complete cessation.

Data source: A post hoc analysis of 4-year results from a 1-year, randomized, placebo-controlled trial with 3 years of open-label extension in 227 patients.

Disclosures: Dr. Rovner disclosed relationships with Allergan and a number of other pharmaceutical and medical device companies.

How mAbs produce lasting antitumor effects

Photo by Linda Bartlett

Results of preclinical research help explain how antitumor monoclonal antibodies (mAbs) fight lymphoma.

Researchers uncovered a 2-step process that revolves around 2 antibody-binding receptors found on different types of immune cells.

Experiments suggested that these Fc receptors are needed to eradicate lymphoma and ensure it doesn’t return.

The researchers reported these findings in an article published in Cell.

“These findings suggest ways current anticancer antibody treatments might be improved, as well as combined with other immune-system-stimulating therapies to help cancer patients,” said study author Jeffrey Ravetch, MD, PhD, of The Rockefeller University in New York, New York.

Previous research has shown that antitumor mAbs bind to Fc receptors on activated immune cells, prompting those immune cells to kill tumors.

However, it was unclear which Fc receptors were involved, or how tumor killing led the immune system to generate memory T cells against the same antigens, which would protect against the tumor should it return.

Dr Ravetch and David DiLillo, PhD, also of The Rockefeller University, investigated this process by injecting CD20-expressing lymphoma cells into mice with immune systems engineered to contain human Fc receptors, treating the mice with anti-CD20 mAbs, and then re-introducing lymphoma.

Wild-type C57BL/6 mice received syngeneic EL4 lymphoma cells expressing human CD20 (EL4-hCD20 cells). When these mice received treatment with an mIgG2a isotype anti-hCD20 mAb, they all survived.

Ninety days later, after the mAb had been cleared from their systems, the mice were re-challenged with EL4-hCD20 tumor cells, at a dose 10-fold greater than the initial challenge, but they did not receive any additional mAb.

All of these mice survived, but tumor/mAb-primed mice that were re-challenged with EL4-wild-type cells, which don’t express hCD20, had poor survival. Results were similar with a different anti-hCD20 mAb, clone 2B8.

The researchers also re-challenged tumor/mAb-primed mice with B6BL lymphoma cells that expressed either hCD20 or an irrelevant antigen, mCD20. All of the mice re-challenged with B6BL-mCD20 cells had died by day 31, but 80% of the mice re-challenged with B6BL-hCD20 cells survived at least 90 days.

Drs Ravetch and DiLillo said these results suggest an anti-hCD20 immune response is generated after the initial FcγR-mediated clearance of tumor cells by antibody-dependent cellular cytotoxicity.

The researchers then took a closer look at the role of Fc receptors, keeping in mind that different types of immune cells can express different receptors.

The researchers focused on the Fc receptors expressed by the cytotoxic immune cells they believed carried out the initial attack on tumors, as well as the Fc receptors found on dendritic cells, which are crucial to the formation of memory T cells.

To test the involvement of these receptors, the pair altered the mAbs delivered to the lymphoma-bearing mice to change their affinity for the receptors. They then looked for changes in the survival of the mice after the first and second challenges with lymphoma cells.

When they dissected this process, the researchers uncovered 2 steps. The Fc receptor FcγRIIIA, which is found on macrophages, responded to mAbs and prompted the macrophages to engulf and destroy the antibody-laden tumor cells.

These same antibodies, still attached to tumor antigens, activated a second receptor, FcγRIIA, on dendritic cells, which used the antigen to prime T cells. The result was the generation of a T-cell memory response that protected the mice against future lymphoma cells expressing CD20.

“By engineering the antibodies so as to increase their affinity for both FcγRIIIA and FcγRIIA, we were able to optimize both steps in this process,” Dr DiLillo said.

“Current antibody therapies are only engineered to improve the immediate killing of tumor cells but not the formation of immunological memory. We are proposing that an ideal antibody therapy would be engineered to take full advantage of both steps.”

Photo by Linda Bartlett

Results of preclinical research help explain how antitumor monoclonal antibodies (mAbs) fight lymphoma.

Researchers uncovered a 2-step process that revolves around 2 antibody-binding receptors found on different types of immune cells.

Experiments suggested that these Fc receptors are needed to eradicate lymphoma and ensure it doesn’t return.

The researchers reported these findings in an article published in Cell.

“These findings suggests ways current anticancer antibody treatments might be improved, as well as combined with other immune-system-stimulating therapies to help cancer patients,” said study author Jeffrey Ravetch, MD, PhD, of The Rockefeller University in New York, New York.

Previous research has shown that antitumor mAbs bind to Fc receptors on activated immune cells, prompting those immune cells to kill tumors.

However, it was unclear which Fc receptors are involved or how the tumor killing led the immune system to generate memory T cells against these same antigens, in case the tumor producing them should return.

Dr Ravetch and David DiLillo, PhD, also of The Rockefeller University, investigated this process by injecting CD20-expressing lymphoma cells into mice with immune systems engineered to contain human Fc receptors, treating the mice with anti-CD20 mAbs, and then re-introducing lymphoma.

Wild-type C57BL/6 mice received syngeneic EL4 lymphoma cells expressing human CD20 (EL4-hCD20 cells). When these mice received treatment with an mIgG2a isotype anti-hCD20 mAb, they all survived.

Ninety days later, after the mAb had been cleared from their systems, the mice were re-challenged with EL4-hCD20 tumor cells, at a dose 10-fold greater than the initial challenge, but they did not receive any additional mAb.

All of these mice survived, but tumor/mAb-primed mice that were re-challenged with EL4-wild-type cells, which don’t express hCD20, had poor survival. Results were similar with a different anti-hCD20 mAb, clone 2B8.

The researchers also re-challenged tumor/mAb-primed mice with B6BL lymphoma cells that expressed either hCD20 or an irrelevant antigen, mCD20. All of the mice re-challenged with B6BL-mCD20 cells had died by day 31, but 80% of the mice re-challenged with B6BL-hCD20 cells survived at least 90 days.

Drs Ravetch and DiLillo said these results suggest an anti-hCD20 immune response is generated after the initial FcγR-mediated clearance of tumor cells by antibody-dependent cellular cytotoxicity.

The researchers then took a closer look at the role of Fc receptors, keeping in mind that different types of immune cells can express different receptors.

Based on the cells the researchers thought were involved, they looked to the Fc receptors expressed by cytotoxic immune cells that carried out the initial attack on tumors, as well as the Fc receptors found on dendritic cells, which are crucial to the formation of memory T cells.

To test the involvement of these receptors, the pair altered the mAbs delivered to the lymphoma-infected mice so as to change their affinity for the receptors. Then, they looked for changes in the survival rate of the mice after the first and second challenges with lymphoma cells.

When they dissected this process, the researchers uncovered 2 steps. The Fc receptor FcγRIIIA, which is found on macrophages, responded to mAbs and prompted the macrophages to engulf and destroy the antibody-laden tumor cells.

These same antibodies, still attached to tumor antigens, activated a second receptor, FcγRIIA, on dendritic cells, which used the antigen to prime T cells. The result was the generation of a T-cell memory response that protected the mice against future lymphoma cells expressing CD20.

“By engineering the antibodies so as to increase their affinity for both FcγRIIIA and FcγRIIA, we were able to optimize both steps in this process,” Dr DiLillo said.

“Current antibody therapies are only engineered to improve the immediate killing of tumor cells but not the formation of immunological memory. We are proposing that an ideal antibody therapy would be engineered to take full advantage of both steps.” ![]()

Corrona Begins

Over the last 15 years, the treatment of psoriasis has been transformed by the advent of biologic agents, and a whole new generation of treatments is now emerging. With all of these therapeutic options, the dermatologic community needs more data to help us better understand both the therapies and the disease itself.

A new independent US psoriasis registry has been established as a collaboration between the National Psoriasis Foundation and Corrona, Inc (Consortium of Rheumatology Researchers of North America, Inc). Data will be gathered through comprehensive questionnaires completed by patients and their dermatologists during appointments.

The registry will collect and analyze clinical data, allowing investigators to (1) compare the safety and effectiveness of psoriasis treatments, (2) better understand psoriasis comorbidities, and (3) explore the natural history of the disease.

The registry will begin recruiting patients this year. Initially, it will track drug safety reporting for secukinumab, with the goal of enrolling at least 3000 patients with psoriasis who are taking secukinumab and following their treatment for at least 8 years.

In addition to studying the safety and effectiveness of therapeutics, the registry will also help identify potential etiologies of psoriasis, study the relationship between psoriasis and other health conditions, and examine the impact of the condition on quality of life, among other outcomes.

To become an investigator in the registry or learn more about it, visit www.psoriasis.org/corrona-registry.

What’s the issue?

This registry is a welcome addition to the study of psoriasis. It has the potential to add critical information in the years to come.

SAEM: STEMI in the ED: Will lower incidence threaten timely care?

SAN DIEGO – Although cardiovascular disease is on the rise, the incidence of ST-elevation myocardial infarction (STEMI) has steadily declined in recent years, with STEMI visits to emergency departments dropping by more than a quarter between 2006 and 2011 and STEMI-related hospitalizations falling as well.

The decline likely reflects better medical management of known cardiovascular disease, which leads to fewer STEMIs. It may also stem from emergency medical technicians bypassing emergency departments and taking patients straight to a catheterization lab when they detect a STEMI, said Dr. Michael J. Ward, an emergency care researcher at Vanderbilt University in Nashville, Tenn., speaking at the annual meeting of the Society for Academic Emergency Medicine.

But this trend, while good for most patients, poses potential pitfalls for emergency departments trying to deliver timely treatment, he said.

In a STEMI incidence study using data on about 1.43 million ED STEMI visits from the Nationwide Emergency Department Sample (NEDS) during 2006-2011, ED STEMI visits per 10,000 U.S. adults declined significantly, from 10.1 in 2006 to 7.3 in 2011. Declines were seen across all age groups and regions during the study period, Dr. Ward and colleagues found in their recently published study (Am. J. Cardiol. 2015;115:167-70).

In a separate analysis of the same data, the transfer rate of STEMI patients increased from 15% in 2006 to 20.6% in 2011. Patients without insurance were 64% (adjusted odds ratio, 1.64) more likely than patients with insurance to be transferred when presenting to an ED with STEMI, the investigators found.

Both trends – the decline in presentations to the ED and the increase in transfers – could mean higher risk for patients presenting to EDs with STEMI, Dr. Ward said in an interview.

“You basically have 90 minutes from the time a STEMI patient presents to get the vessel open,” Dr. Ward said. “There’s really very little margin for error. If you’re seeing fewer STEMIs, are you and your staff going to be less practiced? And what if patients present unusually? What if it’s not the older male with chest pain, but a younger female with back pain or just not feeling well?”

The finding of an increase in transfers is problematic as well, he said. “Only about a third of ED facilities have catheterization capabilities. As EDs see fewer and fewer STEMI patients, they may not be able to maintain their ability to recognize and care for them, or develop a lower threshold for transfer.”

Even after adjusting for confounders such as age, presentation at a rural facility, and presentation on a weekend, the likelihood of transfer among self-pay patients, compared with those with any form of insurance, including Medicare and Medicaid, was 64% higher, Dr. Ward reported.

The findings show that STEMI patients without insurance “are much more likely to be transferred, receiving less timely and therefore lower quality care for the most severe form of heart attack,” Dr. Ward said.

The reasons for this are unknown, he said. “One may be that patients without insurance are presenting to facilities that don’t have the ability to treat them: rural facilities, or facilities without the capability to treat this particular type of emergency. The other possibility is that they’re presenting to one that does have the capability, yet they’re still being transferred.”

Even if a patient with STEMI presents to a facility that cannot treat a STEMI and there is another facility next door that can, “it still introduces a significant delay,” and with it a higher risk, he said.

Dr. Ward’s research was funded by grants from the National Institutes of Health. He disclosed no conflicts of interest.
