
Furuncular Myiasis in 2 American Travelers Returning From Senegal

Case Reports

Patient 1

A 16-year-old adolescent boy presented to the emergency department with painful, pruritic, erythematous nodules on the bilateral legs of 1 week’s duration. The lesions had developed 1 week after he returned from a monthlong trip to Senegal with a volunteer youth group. He did not recall any painful insect bites or illnesses while traveling in Africa and first noticed the erythematous papules on his legs after returning home to the United States. After consulting his primary care physician and a local dermatologist, the patient began taking oral cephalexin for suspected bacterial furunculosis, without substantial improvement. Over the course of 1 week, the lesions became increasingly painful and pruritic, prompting the emergency department visit. Before his arrival, the patient reported squeezing a live worm from one of the lesions on the right ankle.

On presentation, the patient was afebrile (temperature, 36.7°C), and his vital signs were within normal limits. Physical examination revealed tender erythematous nodules on the bilateral heels, ankles, and shins, many with a pinpoint punctum at the center (Figure 1). The nodules were warm and indurated; no pulsatile movement was appreciated. The legs appeared well perfused, with intact sensation and motor function. The patient brought in the live, motile larva that he had extruded from the lesion on the right ankle. The infectious diseases and dermatology departments both were consulted, and a preliminary diagnosis of furuncular myiasis was made.

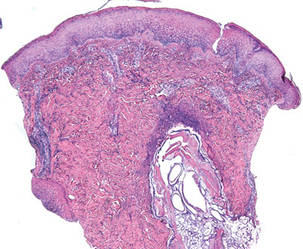

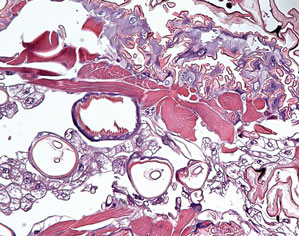

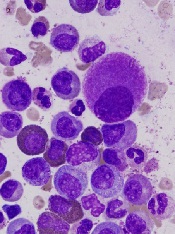

The lesions were occluded with petroleum jelly, and the patient was instructed to follow up with the dermatology department later that same day. At the dermatology clinic visit, the tips of intact larvae were visible at the central puncta of some of the lesions (Figure 2). Lidocaine adrenaline tetracaine gel was applied to the lesions on the legs for 40 minutes, after which lidocaine gel 1% was injected into each lesion. On injection, immobile larvae were ejected from the central puncta of most of the lesions; the larvae in the remaining lesions were extracted via 3-mm punch biopsy. Each nodule contained a single larva, and all of the larvae were dead at the time of removal (Figure 3). The wounds were left open, and the patient was instructed to continue cephalexin and to rest with the legs elevated. Pathologic examination of deep dermal skin sections revealed larval fragments encased by a thick chitinous cuticle with spines, consistent with furuncular myiasis (Figures 4 and 5). Based on the patient’s recent travel to Africa and the morphology of the extracted specimens, the larvae were identified as Cordylobia anthropophaga, a common cause of furuncular myiasis in that region.

Patient 2

The following week, a 17-year-old adolescent girl who had been on the same trip to Senegal as patient 1 presented with 2 similar erythematous nodules with central crusts on the left inner thigh and buttock. After noticing the lesions approximately 3 days before presentation, she had applied a topical antibiotic ointment to each nodule, which prompted the expulsion of white, tube-shaped structures that she brought in for examination. At presentation, the nodules were healing well. Given the patient’s travel history and physical examination findings, a presumptive diagnosis of furuncular myiasis from C anthropophaga also was made.


Comment

The term myiasis derives from the Greek word for fly (myia) and describes the infestation of living vertebrates by fly larvae.1 Myiasis has several clinical classifications, the 3 most common being furuncular, migratory, and wound myiasis, which are associated with different fly species found in distinct regions of the world. Furuncular myiasis is the most benign form, usually affecting only a localized area of skin; migratory myiasis is characterized by larvae traveling substantial distances from one anatomic site to another within the lower layers of the epidermis; and wound myiasis involves rapid reproduction of larvae in necrotic tissue with subsequent tissue destruction.2

The clinical presentation of the lesions in our patients suggested a diagnosis of furuncular myiasis, which commonly is caused by the larvae of Dermatobia hominis, C anthropophaga, Cuterebra species, Wohlfahrtia vigil, and Wohlfahrtia opaca.3 Dermatobia hominis, the most common cause of furuncular myiasis, usually is found in Central and South America. Our patients likely were infested with C anthropophaga (also known as the tumbu fly), a yellow-brown, 7- to 12-mm blowfly commonly found throughout tropical Africa.3 Although C anthropophaga historically has been limited to sub-Saharan Africa, a case acquired in Portugal has been reported.4

On review of the literature, C anthropophaga myiasis has been documented in Italian travelers returning from Senegal5-7; our cases are unique in that they involve North American travelers returning from Senegal with furuncular myiasis. Furuncular myiasis from C anthropophaga has been reported in travelers returning to North America from other African countries, including Angola,8 Tanzania,9-11 Kenya,9 Sierra Leone,12 and Ivory Coast.13 Several cases of ocular myiasis from D hominis and Oestrus ovis have been reported in European travelers returning from Tunisia.14,15

Tumbu fly infestations typically affect dogs and rodents but also can occur in humans.3 Children may be affected by C anthropophaga furuncular myiasis more often than adults because they have thinner skin and less immunity to the larvae.2


Infestation of human hosts by C anthropophaga can occur by 2 mechanisms. Most commonly, female flies lay eggs in shady areas on soil contaminated by feces or urine. The hatched larvae can survive in the ground for up to 2 weeks and attach to a host when prompted by heat or movement.3 Clothing set out to dry in such areas therefore may become contaminated by larvae from the soil. Alternatively, female flies can lay eggs directly on clothing contaminated by feces or urine; the larvae then hatch on the fabric and have easy access to the skin once the clothing is worn.2

Common penetration sites are the head, neck, and back, as well as areas covered by contaminated or infested clothing.2,3 Penetration of the skin is rapid and painless and rarely is noticed by the host.3 The larvae then develop in the skin for 8 to 12 days, producing a furuncle that occasionally secretes serous fluid.2 Within the first 2 days of infestation, the host may experience symptoms ranging from local pruritus to severe pain. Approximately 6 days after onset, an intense inflammatory response may result in local lymphadenopathy along with fever and fatigue.2 The larvae breathe through their posterior spiracles, which they keep positioned at an opening in the skin that serves as an air hole.3 On physical examination, the spiracles generally appear as 1- to 3-mm dark linear streaks within the furuncles, a finding that is important in the diagnosis of C anthropophaga furuncular myiasis.1,3 If spiracles are not appreciated on initial examination, the diagnosis can be made by applying water or saliva to the affected areas and looking for air bubbles arising from the central puncta of the lesions.1

In all forms of furuncular myiasis, each furuncle contains a single larva.16 Although most species that cause furuncular myiasis produce solitary lesions, C anthropophaga infestation often produces multiple furuncles that may coalesce into plaques.1,2 The differential diagnosis for C anthropophaga furuncular myiasis includes pyoderma, impetigo, staphylococcal furunculosis, cutaneous leishmaniasis, infected cyst, retained foreign body, and factitial disease.2,3 Dracunculiasis, which is acquired through ingestion of contaminated water, also may be considered.2 Ultrasonography may aid in the diagnosis of furuncular myiasis, as it can help identify foreign bodies, abscesses, and in some cases the larvae themselves.17 Definitive diagnosis of any type of myiasis requires extraction of the larva and identification of its family, genus, and species by a parasitologist.1 Some experts suggest keeping extracted larvae alive and rearing them on raw meat to the adult stage, because adult flies are more reliable than larvae for species identification.1

Treatment of furuncular myiasis involves occlusion followed by extraction of the larvae from the skin. Occluding the air holes with petroleum jelly, paraffin oil, bacon fat, glue, or other obstructive substances suffocates the larvae and forces them to emerge in search of oxygen, though immature larvae may be less likely to emerge than mature ones.2,3 Definitive treatment involves direct removal of the larvae by surgery or expulsion by pressure, and pretreatment of the lesions with occlusive techniques is recommended.1,3 Other reported extraction methods include injection of lidocaine and use of a commercial venom extractor.1 Rupture or incomplete extraction of larvae can lead to secondary infection and allergic reactions. Lesions can be pretreated with lidocaine gel prior to extraction, and antibiotics should be used in cases of secondary bacterial infection. Ivermectin also has been reported as a treatment for furuncular myiasis and other types of myiasis.1 Prevention of C anthropophaga infestation includes avoiding endemic areas, maintaining good hygiene, and ironing clothing or drying it in sunny locations.1,2 Overall, furuncular myiasis has a good prognosis, with rapid recovery and a low incidence of complications.1

Conclusion

We present 2 cases of C anthropophaga furuncular myiasis in travelers returning to North America from Senegal. A careful travel history, physical examination, and identification of the fly larvae are important for diagnosis. Individuals traveling to sub-Saharan Africa should avoid drying clothes in shady places and lying directly on the ground, and they should iron their clothing before wearing it.

1. Caissie R, Beaulieu F, Giroux M, et al. Cutaneous myiasis: diagnosis, treatment, and prevention. J Oral Maxillofac Surg. 2008;66:560-568.

2. McGraw TA, Turiansky GW. Cutaneous myiasis. J Am Acad Dermatol. 2008;58:907-926.

3. Robbins K, Khachemoune A. Cutaneous myiasis: a review of the common types of myiasis. Int J Dermatol. 2010;49:1092-1098.

4. Curtis SJ, Edwards C, Athulathmuda C, et al. Case of the month: cutaneous myiasis in a returning traveller from the Algarve: first report of tumbu maggots, Cordylobia anthropophaga, acquired in Portugal. Emerg Med J. 2006;23:236-237.

5. Veraldi S, Brusasco A, Süss L. Cutaneous myiasis caused by larvae of Cordylobia anthropophaga (Blanchard). Int J Dermatol. 1993;32:184-187.

6. Cultrera R, Dettori G, Calderaro A, et al. Cutaneous myiasis caused by Cordylobia anthropophaga (Blanchard 1872): description of 5 cases from coastal regions of Senegal [in Italian]. Parassitologia. 1993;35:47-49.

7. Fusco FM, Nardiello S, Brancaccio G, et al. Cutaneous myiasis from Cordylobia anthropophaga in a traveller returning from Senegal: a case study [in Italian]. Infez Med. 2005;13:109-111.

8. Lee EJ, Robinson F. Furuncular myiasis of the face caused by larva of the tumbu fly (Cordylobia anthropophaga) [published online ahead of print July 21, 2006]. Eye (Lond). 2007;21:268-269.

9. Rice PL, Gleason N. Two cases of myiasis in the United States by the African tumbu fly, Cordylobia anthropophaga (Diptera, Calliphoridae). Am J Trop Med Hyg. 1972;21:62-65.

10. March CH. A case of “ver du Cayor” in Manhattan. Arch Dermatol. 1964;90:32-33.

11. Schorr WF. Tumbu-fly myiasis in Marshfield, Wis. Arch Dermatol. 1967;95:61-62.

12. Potter TS, Dorman MA, Ghaemi M, et al. Inflammatory papules on the back of a traveling businessman. Tumbu fly myiasis. Arch Dermatol. 1995;131:951, 954.

13. Ockenhouse CF, Samlaska CP, Benson PM, et al. Cutaneous myiasis caused by the African tumbu fly (Cordylobia anthropophaga). Arch Dermatol. 1990;126:199-202.

14. Kaouech E, Kallel K, Belhadj S, et al. Dermatobia hominis furuncular myiasis in a man returning from Latin America: first imported case in Tunisia [in French]. Med Trop (Mars). 2010;70:135-136.

15. Zayani A, Chaabouni M, Gouiaa R, et al. Conjunctival myiasis. 23 cases in the Tunisian Sahel [in French]. Arch Inst Pasteur Tunis. 1989;66:289-292.

16. Latorre M, Ullate JV, Sanchez J, et al. A case of myiasis due to Dermatobia hominis. Eur J Clin Microbiol Infect Dis. 1993;12:968-969.

17. Mahal JJ, Sperling JD. Furuncular myiasis from Dermatobia hominis: a case of human botfly infestation [published online ahead of print February 1, 2010]. J Emerg Med. 2012;43:618-621.

Case Reports

Patient 1

A 16-year-old adolescent boy presented to the emergency department with painful, pruritic, erythematous nodules on the bilateral legs of 1 week’s duration. The lesions had developed 1 week after returning from a monthlong trip to Senegal with a volunteer youth group. He did not recall sustaining any painful insect bites or illnesses while traveling in Africa and only noticed the erythematous papules on the legs when he returned home to the United States. After consulting with his primary care physician and a local dermatologist, the patient began taking oral cephalexin for suspected bacterial furunculosis with no considerable improvement. Over the course of 1 week, the lesions became increasingly painful and pruritic, prompting a visit to the emergency department. Prior to his arrival, the patient reported squeezing a live worm from one of the lesions on the right ankle.

On presentation, the patient was afebrile (temperature, 36.7°C) and his vital signs revealed no abnormalities. Physical examination revealed tender erythematous nodules on the bilateral heels, ankles, and shins with pinpoint puncta noted at the center of many of the lesions (Figure 1). The nodules were warm and indurated and no pulsatile movement was appreciated. The legs appeared to be well perfused with intact sensation and motor function. The patient brought in the live mobile larva that he extruded from the lesion on the right ankle. Both the departments of infectious diseases and dermatology were consulted and a preliminary diagnosis of furuncular myiasis was made.

The lesions were occluded with petroleum jelly and the patient was instructed to follow-up with the dermatology department later that same day. On follow-up in the dermatology clinic, the tips of intact larvae were appreciated at the central puncta of some of the lesions (Figure 2). Lidocaine adrenaline tetracaine gel was applied to lesions on the legs for 40 minutes, then lidocaine gel 1% was injected into each lesion. On injection, immobile larvae were ejected from the central puncta of most of the lesions; the remaining lesions were treated via 3-mm punch biopsy as a means of extraction. Each nodule contained only a single larva, all of which were dead at the time of removal (Figure 3). The wounds were left open and the patient was instructed to continue treatment with cephalexin with leg elevation and rest. Pathologic examination of deep dermal skin sections revealed larval fragments encased by a thick chitinous cuticle with spines that were consistent with furuncular myiasis (Figures 4 and 5). Given the patient’s recent history of travel to Africa along with the morphology of the extracted specimens, the larvae were identified as Cordylobia anthropophaga, a common cause of furuncular myiasis in that region.

Patient 2

The next week, a 17-year-old adolescent girl who had been on the same trip to Senegal as patient 1 presented with 2 similar erythematous nodules with central crusts on the left inner thigh and buttock. On noticing the lesions approximately 3 days prior to presentation, the patient applied topical antibiotic ointment to each nodule, which incited the evacuation of white tube-shaped structures that were presented for examination. On presentation, the nodules were healing well. Given the patient’s travel history and physical examination, a presumptive diagnosis of furuncular myiasis from C anthropophaga also was made.

|

Comment

The term myiasis stems from the Greek term for fly and is used to describe the infestation of fly larvae in living vertebrates.1 Myiasis has many classifications, the 3 most common being furuncular, migratory, and wound myiasis, which are differentiated by the different fly species found in distinct regions of the world. Furuncular myiasis is the most benign form, usually affecting only a localized region of the skin; migratory myiasis is characterized by larvae traveling substantial distances from one anatomic site to another within the lower layers of the epidermis; and wound myiasis involves rapid reproduction of larvae in necrotic tissue with subsequent tissue destruction.2

The clinical presentation of the lesions noted in our patients suggested a diagnosis of furuncular myiasis, which commonly is caused by Dermatobia hominis, C anthropophaga, Cuterebra species, Wohlfahrtia vigil, and Wohlfahrtia opaca larvae.3Dermatobia hominis is the most common cause of furuncular myiasis and usually is found in Central and South America. Our patients likely developed an infestation of C anthropophaga (also known as the tumbu fly), a yellow-brown, 7- to 12-mm blowfly commonly found throughout tropical Africa.3 Although C anthropophaga is historically limited to sub-Saharan Africa, there has been a report of a case acquired in Portugal.4

In a review of the literature, C anthropophaga myiasis was documented in Italian travelers returning from Senegal5-7; our cases are unique because they represent North American travelers returning from Senegal with furuncular myiasis. Furuncular myiasis from C anthropophaga has been reported in travelers returning to North America from other African countries, including Angola,8 Tanzania,9-11 Kenya,9 Sierra Leone,12 and Ivory Coast.13 Several cases of ocular myiasis from D hominis and Oestrus ovis have been reported in European travelers returning from Tunisia.14,15

Tumbu fly infestations typically affect dogs and rodents but can arise in human hosts.3 Children may be affected by C anthropophaga furuncular myiasis more often than adults because they have thinner skin and less immunity to the larvae.2

|

There are 2 mechanisms by which infestation of human hosts by C anthropophaga can occur. Most commonly, female flies lay eggs in shady areas in soil that is contaminated by feces or urine. The hatched larvae can survive in the ground for up to 2 weeks and later attach to a host when prompted by heat or movement.3 Therefore, clothing set out to dry may be contaminated by this soil. Alternatively, female flies can lay eggs directly onto clothing that is contaminated by feces or urine and the larvae subsequently hatch outside the soil with easy access to human skin once the clothing is worn.2

Common penetration sites are the head, neck, and back, as well as areas covered by contaminated or infested clothing.2,3 Penetration of the human skin occurs instantly and is a painless process that is rarely noticed by the human host.3 The larvae burrow into the skin for 8 to 12 days, resulting in a furuncle that occasionally secretes a serous fluid.2 Within the first 2 days of infestation, the host may experience symptoms ranging from local pruritus to severe pain. Six days following initial onset, an intense inflammatory response may result in local lymphadenopathy along with fever and fatigue.2 The larvae use their posterior spiracles to create openings in the skin to create air holes that allow them to breathe.3 On physical examination, the spiracles generally appear as 1- to 3-mm dark linear streaks within furuncles, which is important in the diagnosis of C anthropophaga furuncular myiasis.1,3 If spiracles are not appreciated on initial examination, diagnosis can be made by submerging the affected areas in water or saliva to look for air bubbles arising from the central puncta of the lesions.1

All causes of furuncular myiasis are characterized by a ratio of 1 larva to 1 furuncle.16 Although most of these types of larvae that can cause furuncular myiasis result in single lesions, C anthropophaga infestation often produces several furuncles that may coalesce into plaques.1,2 The differential diagnosis for C anthropophaga furuncular myiasis includes pyoderma, impetigo, staphylococcal furunculosis, cutaneous leishmaniasis, infected cyst, retained foreign body, and facticial disease.2,3 Dracunculiasis also may be considered, which occurs after ingestion of contaminated water.2 Ultrasonography may be helpful for the diagnosis of furuncular myiasis, as it can facilitate identification of foreign bodies, abscesses, and even larvae in some cases.17 Definitive diagnosis of any type of myiasis involves extraction of the larva and identification of the family, genus, and species by a parasitologist.1 Some experts suggest rearing preserved live larvae with raw meat after extraction because adult specimens are more reliable than larvae for species diagnosis.1

Treatment of furuncular myiasis involves occlusion and extraction of the larvae from the skin. Suffocation of the larvae by occlusion of air holes with petroleum jelly, paraffin oil, bacon fat, glue, and other obstructing substances forces the larvae to emerge in search of oxygen, though immature larvae may be more reluctant than mature ones.2,3 Definitive treatment involves the direct removal of the larvae by surgery or expulsion by pressure, though it is recommended that lesions are pretreated with occlusive techniques.1,3 Other reported methods of extraction include injection of lidocaine and the use of a commercial venom extractor.1 It should be noted that rupture and incomplete extraction of larvae can lead to secondary infections and allergic reactions. Lesions can be pretreated with lidocaine gel prior to extraction, and antibiotics should be used in cases of secondary bacterial infection. Ivermectin also has been reported as a treatment of furuncular myiasis and other types of myiasis.1 Prevention of infestation by C anthropophaga includes avoidance of endemic areas, maintaining good hygiene, and ironing clothing or drying it in sunny locations.1,2 Overall, furuncular myiasis has a good prognosis with rapid recovery and a low incidence of complications.1

Conclusion

We present 2 cases of travelers returning to North America from Senegal with C anthropophaga furuncular myiasis. Careful review of travel history, physical examination, and identification of fly larvae are important for diagnosis. Individuals traveling to sub-Saharan Africa should avoid drying clothes in shady places and lying on the ground. They also are urged to iron their clothing before wearing it.

Case Reports

Patient 1

A 16-year-old adolescent boy presented to the emergency department with painful, pruritic, erythematous nodules on the bilateral legs of 1 week’s duration. The lesions had developed 1 week after returning from a monthlong trip to Senegal with a volunteer youth group. He did not recall sustaining any painful insect bites or illnesses while traveling in Africa and only noticed the erythematous papules on the legs when he returned home to the United States. After consulting with his primary care physician and a local dermatologist, the patient began taking oral cephalexin for suspected bacterial furunculosis with no considerable improvement. Over the course of 1 week, the lesions became increasingly painful and pruritic, prompting a visit to the emergency department. Prior to his arrival, the patient reported squeezing a live worm from one of the lesions on the right ankle.

On presentation, the patient was afebrile (temperature, 36.7°C) and his vital signs revealed no abnormalities. Physical examination revealed tender erythematous nodules on the bilateral heels, ankles, and shins with pinpoint puncta noted at the center of many of the lesions (Figure 1). The nodules were warm and indurated and no pulsatile movement was appreciated. The legs appeared to be well perfused with intact sensation and motor function. The patient brought in the live mobile larva that he extruded from the lesion on the right ankle. Both the departments of infectious diseases and dermatology were consulted and a preliminary diagnosis of furuncular myiasis was made.

The lesions were occluded with petroleum jelly and the patient was instructed to follow-up with the dermatology department later that same day. On follow-up in the dermatology clinic, the tips of intact larvae were appreciated at the central puncta of some of the lesions (Figure 2). Lidocaine adrenaline tetracaine gel was applied to lesions on the legs for 40 minutes, then lidocaine gel 1% was injected into each lesion. On injection, immobile larvae were ejected from the central puncta of most of the lesions; the remaining lesions were treated via 3-mm punch biopsy as a means of extraction. Each nodule contained only a single larva, all of which were dead at the time of removal (Figure 3). The wounds were left open and the patient was instructed to continue treatment with cephalexin with leg elevation and rest. Pathologic examination of deep dermal skin sections revealed larval fragments encased by a thick chitinous cuticle with spines that were consistent with furuncular myiasis (Figures 4 and 5). Given the patient’s recent history of travel to Africa along with the morphology of the extracted specimens, the larvae were identified as Cordylobia anthropophaga, a common cause of furuncular myiasis in that region.

Patient 2

The next week, a 17-year-old adolescent girl who had been on the same trip to Senegal as patient 1 presented with 2 similar erythematous nodules with central crusts on the left inner thigh and buttock. On noticing the lesions approximately 3 days prior to presentation, the patient applied topical antibiotic ointment to each nodule, which incited the evacuation of white tube-shaped structures that were presented for examination. On presentation, the nodules were healing well. Given the patient’s travel history and physical examination, a presumptive diagnosis of furuncular myiasis from C anthropophaga also was made.

|

Comment

The term myiasis stems from the Greek term for fly and is used to describe the infestation of fly larvae in living vertebrates.1 Myiasis has many classifications, the 3 most common being furuncular, migratory, and wound myiasis, which are differentiated by the different fly species found in distinct regions of the world. Furuncular myiasis is the most benign form, usually affecting only a localized region of the skin; migratory myiasis is characterized by larvae traveling substantial distances from one anatomic site to another within the lower layers of the epidermis; and wound myiasis involves rapid reproduction of larvae in necrotic tissue with subsequent tissue destruction.2

The clinical presentation of the lesions noted in our patients suggested a diagnosis of furuncular myiasis, which commonly is caused by Dermatobia hominis, C anthropophaga, Cuterebra species, Wohlfahrtia vigil, and Wohlfahrtia opaca larvae.3Dermatobia hominis is the most common cause of furuncular myiasis and usually is found in Central and South America. Our patients likely developed an infestation of C anthropophaga (also known as the tumbu fly), a yellow-brown, 7- to 12-mm blowfly commonly found throughout tropical Africa.3 Although C anthropophaga is historically limited to sub-Saharan Africa, there has been a report of a case acquired in Portugal.4

In a review of the literature, C anthropophaga myiasis was documented in Italian travelers returning from Senegal5-7; our cases are unique because they represent North American travelers returning from Senegal with furuncular myiasis. Furuncular myiasis from C anthropophaga has been reported in travelers returning to North America from other African countries, including Angola,8 Tanzania,9-11 Kenya,9 Sierra Leone,12 and Ivory Coast.13 Several cases of ocular myiasis from D hominis and Oestrus ovis have been reported in European travelers returning from Tunisia.14,15

Tumbu fly infestations typically affect dogs and rodents but can arise in human hosts.3 Children may be affected by C anthropophaga furuncular myiasis more often than adults because they have thinner skin and less immunity to the larvae.2

|

There are 2 mechanisms by which infestation of human hosts by C anthropophaga can occur. Most commonly, female flies lay eggs in shady areas in soil that is contaminated by feces or urine. The hatched larvae can survive in the ground for up to 2 weeks and later attach to a host when prompted by heat or movement.3 Therefore, clothing set out to dry may be contaminated by this soil. Alternatively, female flies can lay eggs directly onto clothing that is contaminated by feces or urine and the larvae subsequently hatch outside the soil with easy access to human skin once the clothing is worn.2

Common penetration sites are the head, neck, and back, as well as areas covered by contaminated or infested clothing.2,3 Penetration of the human skin occurs instantly and is a painless process that is rarely noticed by the human host.3 The larvae burrow into the skin for 8 to 12 days, resulting in a furuncle that occasionally secretes a serous fluid.2 Within the first 2 days of infestation, the host may experience symptoms ranging from local pruritus to severe pain. Six days following initial onset, an intense inflammatory response may result in local lymphadenopathy along with fever and fatigue.2 The larvae use their posterior spiracles to create openings in the skin to create air holes that allow them to breathe.3 On physical examination, the spiracles generally appear as 1- to 3-mm dark linear streaks within furuncles, which is important in the diagnosis of C anthropophaga furuncular myiasis.1,3 If spiracles are not appreciated on initial examination, diagnosis can be made by submerging the affected areas in water or saliva to look for air bubbles arising from the central puncta of the lesions.1

All causes of furuncular myiasis are characterized by a ratio of 1 larva to 1 furuncle.16 Although most of these types of larvae that can cause furuncular myiasis result in single lesions, C anthropophaga infestation often produces several furuncles that may coalesce into plaques.1,2 The differential diagnosis for C anthropophaga furuncular myiasis includes pyoderma, impetigo, staphylococcal furunculosis, cutaneous leishmaniasis, infected cyst, retained foreign body, and facticial disease.2,3 Dracunculiasis also may be considered, which occurs after ingestion of contaminated water.2 Ultrasonography may be helpful for the diagnosis of furuncular myiasis, as it can facilitate identification of foreign bodies, abscesses, and even larvae in some cases.17 Definitive diagnosis of any type of myiasis involves extraction of the larva and identification of the family, genus, and species by a parasitologist.1 Some experts suggest rearing preserved live larvae with raw meat after extraction because adult specimens are more reliable than larvae for species diagnosis.1

Treatment of furuncular myiasis involves occlusion and extraction of the larvae from the skin. Suffocation of the larvae by occlusion of air holes with petroleum jelly, paraffin oil, bacon fat, glue, and other obstructing substances forces the larvae to emerge in search of oxygen, though immature larvae may be more reluctant than mature ones.2,3 Definitive treatment involves the direct removal of the larvae by surgery or expulsion by pressure, though it is recommended that lesions are pretreated with occlusive techniques.1,3 Other reported methods of extraction include injection of lidocaine and the use of a commercial venom extractor.1 It should be noted that rupture and incomplete extraction of larvae can lead to secondary infections and allergic reactions. Lesions can be pretreated with lidocaine gel prior to extraction, and antibiotics should be used in cases of secondary bacterial infection. Ivermectin also has been reported as a treatment of furuncular myiasis and other types of myiasis.1 Prevention of infestation by C anthropophaga includes avoidance of endemic areas, maintaining good hygiene, and ironing clothing or drying it in sunny locations.1,2 Overall, furuncular myiasis has a good prognosis with rapid recovery and a low incidence of complications.1

Conclusion

We present 2 cases of travelers returning to North America from Senegal with C anthropophaga furuncular myiasis. Careful review of travel history, physical examination, and identification of fly larvae are important for diagnosis. Individuals traveling to sub-Saharan Africa should avoid drying clothes in shady places and lying on the ground. They also are urged to iron their clothing before wearing it.

1. Caissie R, Beaulieu F, Giroux M, et al. Cutaneous myiasis: diagnosis, treatment, and prevention. J Oral Maxillofac Surg. 2008;66:560-568.

2. McGraw TA, Turiansky GW. Cutaneous myiasis. J Am Acad Dermatol. 2008;58:907-926.

3. Robbins K, Khachemoune A. Cutaneous myiasis: a review of the common types of myiasis. Int J Dermatol. 2010;49:1092-1098.

4. Curtis SJ, Edwards C, Athulathmuda C, et al. Case of the month: cutaneous myiasis in a returning traveller from the Algarve: first report of tumbu maggots, Cordylobia anthropophaga, acquired in Portugal. Emerg Med J. 2006;23:236-237.

5. Veraldi S, Brusasco A, Süss L. Cutaneous myiasis caused by larvae of Cordylobia anthropophaga (Blanchard). Int J Dermatol. 1993;32:184-187.

6. Cultrera R, Dettori G, Calderaro A, et al. Cutaneous myiasis caused by Cordylobia anthropophaga (Blanchard 1872): description of 5 cases from coastal regions of Senegal [in Italian]. Parassitologia. 1993;35:47-49.

7. Fusco FM, Nardiello S, Brancaccio G, et al. Cutaneous myiasis from Cordylobia anthropophaga in a traveller returning from Senegal: a case study [in Italian]. Infez Med. 2005;13:109-111.

8. Lee EJ, Robinson F. Furuncular myiasis of the face caused by larva of the tumbu fly (Cordylobia anthropophaga) [published online ahead of print July 21, 2006]. Eye (Lond). 2007;21:268-269.

9. Rice PL, Gleason N. Two cases of myiasis in the United States by the African tumbu fly, Cordylobia anthropophaga (Diptera, Calliphoridae). Am J Trop Med Hyg. 1972;21:62-65.

10. March CH. A case of “ver du Cayor” in Manhattan. Arch Dermatol. 1964;90:32-33.

11. Schorr WF. Tumbu-fly myiasis in Marshfield, Wis. Arch Dermatol. 1967;95:61-62.

12. Potter TS, Dorman MA, Ghaemi M, et al. Inflammatory papules on the back of a traveling businessman. Tumbu fly myiasis. Arch Dermatol. 1995;131:951, 954.

13. Ockenhouse CF, Samlaska CP, Benson PM, et al. Cutaneous myiasis caused by the African tumbu fly (Cordylobia anthropophaga). Arch Dermatol. 1990;126:199-202.

14. Kaouech E, Kallel K, Belhadj S, et al. Dermatobia hominis furuncular myiasis in a man returning from Latin America: first imported case in Tunisia [in French]. Med Trop (Mars). 2010;70:135-136.

15. Zayani A, Chaabouni M, Gouiaa R, et al. Conjunctival myiasis. 23 cases in the Tunisian Sahel [in French]. Arch Inst Pasteur Tunis. 1989;66:289-292.

16. Latorre M, Ullate JV, Sanchez J, et al. A case of myiasis due to Dermatobia hominis. Eur J Clin Microbiol Infect Dis. 1993;12:968-969.

17. Mahal JJ, Sperling JD. Furuncular myiasis from Dermatobia hominis: a case of human botfly infestation [published online ahead of print February 1, 2010]. J Emerg Med. 2012;43:618-621.

Practice Points

- Cutaneous myiasis is caused by an infestation of fly larvae and can present as furuncles (furuncular myiasis), migratory inflammatory linear plaques (migratory myiasis), and worsening tissue destruction in existing wounds (wound myiasis).

- Furuncular myiasis should be included in the differential diagnosis in patients with furuncular skin lesions who have recently traveled to Central America, South America, or sub-Saharan Africa.

- Furuncular myiasis may be treated by both occlusive and extraction techniques.

Reduced resident duty hours haven’t changed patient outcomes

Patient mortality and morbidity outcomes have not changed since the most recent round of reforms to medical residents’ duty hours in 2011, according to two of the first nationwide studies to assess these “improvements,” which both were published online Dec. 9 in JAMA.

In addition, one of the studies found no difference between pre-reform and post-reform scores or pass rates on oral or written national in-training and board certification examinations.

Thus, two separate studies involving millions of hospitalized patients across the country have both found that these reforms had no discernible effect on patient care. However, both groups of researchers cautioned that their studies were observational and therefore subject to potential biases, and that they covered only the first 2 years that the duty-hour reforms had been in place.

The 2011 requirements expanded on those enacted in 2003 by further restricting residents’ duty hours, in the hope of reducing medical errors attributed to exhausted residents. The hours of continuous in-hospital duty were reduced from 30 to 16 for first-year residents and to 24 for upper-year residents, and the interval between shifts was increased to at least 8 hours off for first-year residents and at least 14 hours off for upper-year residents.

“Duty hour reform is arguably one of the largest efforts ever undertaken to improve the quality and safety of patient care in teaching hospitals,” said Dr. Mitesh S. Patel of the University of Pennsylvania and the Veterans Affairs Hospital Center for Health Equity Research and Promotion, both in Philadelphia, and his associates.

They assessed 30-day mortality and readmissions among 2,790,356 Medicare patients who were treated either for acute MI, stroke, gastrointestinal bleeding, or heart failure, or who underwent general, orthopedic, or vascular surgery, at 3,104 hospitals between 2009 and 2012. The investigators found no significant associations, either positive or negative, between the reforms to residents’ duty hours and any patient outcomes. Sensitivity analyses confirmed the results of the primary data analyses.

“Our findings suggest that ... the goals of improving the quality and safety of patient care ... were not being achieved. Conversely, concerns that outcomes might actually worsen because of decreased continuity of care have not been borne out,” Dr. Patel and his associates said (JAMA 2014 Dec. 9 [doi:10.1001/jama.2014.15273]).

The investigators noted that their study was limited in that it could not take into account hospitals’ adherence to the new requirements. Their study also did not assess other outcomes such as patient safety indicators or complication rates, which “may better elucidate the relative effects of decreased resident fatigue and increased patient hand offs.” And their study couldn’t address any possible confounding effects from other concurrent policy initiatives aimed at improving care for Medicare beneficiaries, such as the Hospital Readmissions Reduction Program.

In the other study, a separate group of researchers used data from the American College of Surgeons National Surgical Quality Improvement Program to assess outcomes for 535,499 patients who underwent general surgery at 131 hospitals during the 2 years before and the 2 years after the reforms to residents’ duty hours were implemented. This included 23 teaching hospitals in which residents were involved in at least 95% of general surgeries, said Dr. Ravi Rajaram of the division of research and optimal patient care, American College of Surgeons, and the Institute for Public Health and Medicine at Northwestern University, both in Chicago, and his associates.

The reforms were not associated with any change in rates of patient mortality or serious morbidity, either in the study population as a whole or in the subgroups of high-risk and low-risk patients. They also had no effect on secondary outcomes such as surgical-site infection or sepsis. These results remained consistent across several sensitivity analyses.

Neither mean scores for in-training, written board, and oral board examinations nor pass rates for those examinations showed any significant changes during the study period.

“Moreover, first-year trainees, who were most directly affected by the 2011 reforms, did not improve their ABSITE [American Board of Surgery In-Training Examination] scores, despite presumably more free time to prepare,” Dr. Rajaram and his associates said (JAMA 2014 Dec. 9 [doi:10.1001/JAMA.2014.15277]).

They cautioned that their study assessed only the first 2 years following duty-hour reform, and “there may be differences in patient care or resident examination performance that are evident only several years after implementation and adoption of new duty-hour requirements.” In addition, a retrospective observational study such as this one could not produce the high-level evidence needed to guide policy decisions. “To that end, a national multicenter cluster-randomized trial is being conducted (the Flexibility In duty hour Requirements for Surgical Trainees [FIRST] trial), comparing current duty-hour requirements with flexible duty hours to assess the effects of this intervention on patient outcomes and resident well-being. This trial may further inform the debate of how to optimally structure postgraduate training,” they said.

The results of these two large studies are aligned with those of most previous research into the effects of duty hour requirements on patient outcomes. There is a consistent theme: a lack of a major beneficial effect.

Complex problems often demand complex answers. The goal is for the medical profession to move forward with more comprehensive and nuanced approaches to help fulfill its responsibility to provide trainees with the necessary skills to manage fatigue and allow the safest environment for quality care.

Dr. James A. Arrighi is chair of the Accreditation Council for Graduate Medical Education (ACGME) residency review committee for internal medicine. Dr. James C. Hebert is chair of the ACGME Council of Review Committee Chairs. They made these remarks in an editorial accompanying the studies.

Key clinical point: The newest (2011) reforms to resident duty hours haven’t changed patient mortality or morbidity outcomes.

Major finding: 30-day mortality and readmissions among almost 3 million Medicare patients at 3,104 hospitals did not change between 2009 and 2012.

Data source: Two observational cohort studies of millions of hospitalized adults across the country, comparing patient outcomes before with those after the 2011 reforms in duty hours for residents.

Disclosures: Dr. Patel’s study was funded in part by the National Heart, Lung, and Blood Institute, the Department of Veterans Affairs, and the Robert Wood Johnson Foundation. Dr. Rajaram’s study was supported by the Agency for Healthcare Research and Quality, the American College of Surgeons, and Merck. All of the investigators reported having no relevant financial conflicts of interest.

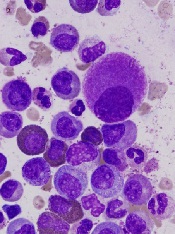

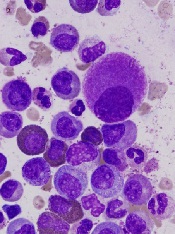

Investigational sotatercept improves heme parameters in MDS

SAN FRANCISCO – A first-in-class investigational agent called sotatercept appears to be safe and to improve hematologic parameters in patients with lower-risk myelodysplastic syndrome or nonproliferative chronic myelomonocytic leukemia and anemia requiring transfusion, a study showed.

In the open-label phase II dose-finding study of sotatercept in patients with myelodysplastic syndrome (MDS) or nonproliferative chronic myelomonocytic leukemia (CMML), hematologic improvement according to International Working Group (IWG) 2006 criteria was seen in 24 of 53 evaluable patients, said Dr. Rami Komrokji of the Moffitt Cancer Center, Tampa.

The patients were all refractory to, or were deemed to have a low chance of responding to, an erythropoiesis-stimulating agent (ESA), Dr. Komrokji said at the annual meeting of the American Society of Hematology.

“A medication like sotatercept would probably have a role in the management of anemia in lower-risk MDS patients. The treatment is administered every 3 weeks, which makes it also logistically easier for the patients to get the treatment. I don’t think we have seen any safety concern, at least at this point, about the chronic use of this medication,” he said in an interview.

Sotatercept (ACE-011) is an activin type IIA receptor fusion protein that acts on late-stage erythropoiesis to increase the release of mature erythrocytes into circulation. Its mechanism of action is distinct from that of erythropoietins such as epoetin alfa (Procrit, Epogen) or darbepoetin alfa (Aranesp).

In clinical trials with healthy volunteers, sotatercept has been shown to increase hemoglobin levels, suggesting that it could help to reduce anemia and perhaps lessen dependence on transfusions among patients with lower-risk MDS, Dr. Komrokji said.

He and his colleagues at centers in the United States and France enrolled patients with low-risk or intermediate-1–risk MDS as defined by the International Prognostic Scoring System (IPSS), or nonproliferative CMML (fewer than 13,000 white blood cells per microliter). The patients had to have anemia requiring at least 2 red blood cell (RBC) transfusions in the 12 weeks before enrollment for hemoglobin levels below 9.0 g/dL, and no response, loss of response, or a low chance of response to an ESA. Those patients with serum erythropoietin levels greater than 500 mIU/mL were considered to have a low chance of responding to an ESA.

The patients received subcutaneous injections of sotatercept at doses of 0.1, 0.3, 0.5, or 1.0 mg/kg once every 3 weeks.

As noted, the rate of overall hematologic improvement by IWG 2006 criteria was 45%, occurring in 24 of 53 patients available for evaluation. Five of 44 patients with a high transfusion burden (4 or more RBC units required within 8 weeks) remained free of RBC transfusions for at least 8 weeks, as did 5 of 9 with a low transfusion burden (fewer than 4 RBC units over a period of 8 weeks).

Looking at the efficacy in patients with a high transfusion burden, the investigators found that 4 of 6 assigned to the 0.3-mg/kg dose group and 8 of 14 assigned to the 1-mg/kg dose group had a reduction in transfusion burden. The median duration of effect was 106 days, with the longest response lasting for 150 days.

There were no major adverse events in the study, and no apparent increase in risk for thrombosis, as had been seen in some studies of ESAs. Another theoretical risk with this type of agent is hypertension, but there was only one grade 3 case and no grade 4 cases of hypertension in the study, Dr. Komrokji said.

Sotatercept is currently in phase II trials for anemia related to hematologic malignancies and other diseases.

SAN FRANCISCO – A first-in-class investigational agent called sotatercept appears to be safe and to improve hematologic parameters in patients with lower-risk myelodysplastic syndrome or nonproliferative chronic myelomonocytic leukemia and anemia requiring transfusion, a study showed.

In the open-label phase II dose-finding study of sotatercept in patients with myelodysplastic syndrome (MDS) or nonproliferative chronic myelomonocytic leukemia (CMML), hematologic improvement according to International Working Group (IWG) 2006 criteria was seen in 24 of 53 evaluable patients, said Dr. Rami Komrokji of the Moffitt Cancer Center,Tampa.

The patients were all refractory to, or were deemed to have a low chance of responding to, an erythropoiesis-stimulating agent (ESA), Dr. Komrokji said at the annual meeting of the American Society of Hematology.

“A medication like sotatercept would probably have a role in the management of anemia in lower-risk MDS patients. The treatment is administered every 3 weeks, which makes it also logistically easier for the patients to get the treatment. I don’t think we have seen any safety concern, at least at this point, about the chronic use of this medication,” he said in an interview.

Sotatercept (ACE-011) is an activin type IIA receptor fusion protein that acts on late-stage erythropoiesis to increase the release of mature erythrocytes into circulation. The mechanism of action is distinct from that of erythropoietins such as epoetin alfa (Procrit, Epogen) or darbapoietin alfa (Aranesp).

In clinical trials with healthy volunteers, sotatercept has been shown to increase hemoglobin levels, suggesting that it could help to reduce anemia and perhaps lessen dependence on transfusions among patients with lower-risk MDS, Dr. Komrokji said.

He and his colleagues at centers in the United States and France enrolled patients with low-risk or intermediate-1–risk MDS as defined by the International Prognostic Scoring System (IPSS), or nonproliferative CMML (fewer than 13,000 white blood cells per microliter). The patients had to have anemia requiring at least 2 red blood cell (RBC) transfusions in the 12 weeks before enrollment for hemoglobin levels below 9.0 g/dL, and no response, loss of response, or a low chance of response to an ESA. Those patients with serum erythropoietin levels greater than 500 mIU/mL were considered to have a low chance of responding to an ESA.

The patients received subcutaneous injections of sotatercept at doses of 0.1, 0.3, 0.5, or 1.0 mg/kg once every 3 weeks.

As noted, the rate of overall hematologic improvement by IWG 2006 criteria was 45% (24 of 53 evaluable patients). Five of 44 patients with a high transfusion burden (4 or more RBC units within 8 weeks) became free of RBC transfusions for at least 8 weeks, as did 5 of 9 patients with a low transfusion burden (fewer than 4 RBC units within 8 weeks).

Among patients with a high transfusion burden, the investigators found a reduction in transfusion burden in 4 of 6 patients in the 0.3-mg/kg dose group and in 8 of 14 patients in the 1.0-mg/kg dose group. The median duration of effect was 106 days, and the longest response lasted 150 days.
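The percentages implied by these counts can be checked with simple arithmetic. A minimal sketch follows; the `transfusion_burden` helper encodes the 8-week cutoff described above, and both function names are illustrative rather than taken from the study. Only the 45% figure is reported in the article; the other percentages are simply the quotients of the reported counts.

```python
def transfusion_burden(rbc_units_in_8_weeks: int) -> str:
    """High burden: 4 or more RBC units within 8 weeks; low burden: fewer than 4."""
    return "high" if rbc_units_in_8_weeks >= 4 else "low"


def percent(responders: int, patients: int) -> int:
    """Response rate as a whole-number percentage."""
    return round(100 * responders / patients)


# (responders, patients) pairs as reported in the article
reported = {
    "hematologic improvement, all evaluable": (24, 53),
    "transfusion-free >= 8 weeks, high burden": (5, 44),
    "transfusion-free >= 8 weeks, low burden": (5, 9),
    "reduced burden, high burden, 0.3 mg/kg": (4, 6),
    "reduced burden, high burden, 1.0 mg/kg": (8, 14),
}

# The two burden subgroups together make up the 53 evaluable patients.
assert 44 + 9 == 53

for label, (k, n) in reported.items():
    print(f"{label}: {k}/{n} = {percent(k, n)}%")
```

Rounding to whole numbers reproduces the 45% quoted for overall hematologic improvement; the dose-group subsets are small, so their percentages should be read loosely.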

There were no major adverse events in the study and no apparent increase in the risk of thrombosis, which had been seen in some studies of ESAs. Hypertension is another theoretical risk with this type of agent, but there was only one grade 3 case and no grade 4 cases of hypertension in the study, Dr. Komrokji said.

Sotatercept is currently in phase II trials for anemia related to hematologic malignancies and other diseases.

Key clinical point: Sotatercept is a first-in-class agent that stimulates erythropoiesis through a mechanism different from that of erythropoietins.

Major finding: The rate of overall hematologic improvement by IWG 2006 criteria was 45%, occurring in 24 of 53 patients available for evaluation.

Data source: An ongoing phase II study with data available on 53 patients with MDS or nonproliferative CMML.

Disclosures: The study is sponsored by Celgene. Dr. Komrokji reported consulting for and receiving research funding from the company.

Nilotinib plus chemotherapy pays off for older patients with Ph+ ALL

SAN FRANCISCO – The study was small but encouraging: Among 47 older patients with newly diagnosed acute lymphoblastic leukemia positive for the Philadelphia chromosome, 41 had a complete hematologic response to a combination of chemotherapy and the targeted agent nilotinib (Tasigna), report investigators from a European consortium.

“The data I have presented show that the combination of nilotinib with this age-adapted chemotherapy is highly effective. We do have quite a reasonable overall survival estimate at 2 years of just more than 70%,” said Dr. Oliver Ottmann of Goethe University in Frankfurt, on behalf of colleagues in the European Working Group for Adult ALL (EWALL).

The study also shows that although some centers are reluctant to offer allogeneic stem cell transplantation (SCT) to older patients, it is still a viable treatment option in this population, Dr. Ottmann said at a briefing at the annual meeting of the American Society of Hematology.

Although older patients with newly diagnosed Philadelphia-positive (Ph+) ALL have a high complete hematologic response (CHR) rate with imatinib (Gleevec), their prognosis is generally poor because of a high rate of relapse.

Because nilotinib, a potent inhibitor of the ABL kinase, is effective in the chronic and accelerated phases of Ph+ chronic myeloid leukemia, the EWALL investigators initiated a study to evaluate it in combination with chemotherapy in the front-line setting.

Eligible patients were adults aged 55 years and older with ALL positive for the Philadelphia chromosome and/or the BCR-ABL1 fusion who were treatment-naive or had received no therapy other than corticosteroids, a single dose of vincristine, or three doses of cyclophosphamide.

Details of the combination regimen are available online.

Briefly, after a prephase with dexamethasone and optional cyclophosphamide, patients receive nilotinib 400 mg twice daily from the start of induction and continuously thereafter. During induction, nilotinib is given with intravenous vincristine and dexamethasone for 4 weeks, followed by consolidation with nilotinib, methotrexate, asparaginase, and cytarabine. Maintenance consists of nilotinib, 6-mercaptopurine, and methotrexate once weekly for 1 month and then every other month, plus dexamethasone and vincristine at 2-month intervals, for up to 24 months.

The data Dr. Ottmann reported come from an interim analysis of the ongoing study. As of August 2014, data on 47 of 56 patients were available for efficacy analysis. As noted, the CHR rate was 87% (41 of 47 patients). Two patients had a partial response or no response, and one patient died during induction. In addition, three patients discontinued therapy early and were not included in the assessment, although at least one of them later had a complete response, Dr. Ottmann noted.
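The response figures can be reconciled with a short tally. This sketch assumes, as the counts suggest but the article does not state outright, that the three patients who stopped early are counted in the 47-patient denominator as non-responders; the category labels are illustrative.

```python
# Interim efficacy population (as of August 2014), per the article
evaluable = 47

outcomes = {
    "complete hematologic response": 41,
    "partial or no response": 2,
    "death during induction": 1,
    # Assumption: early discontinuations sit inside the 47 and count as non-CHR.
    "discontinued early, not assessed": 3,
}

# Under that assumption, every evaluable patient falls into exactly one category.
assert sum(outcomes.values()) == evaluable

chr_rate = 100 * outcomes["complete hematologic response"] / evaluable
print(f"CHR rate: {chr_rate:.1f}%")  # 41/47 -> 87.2%
```

Treating the early discontinuations as non-responders is the conservative choice; it yields the 87% rate reported above.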

The median time to complete response (CR) was 41 days, with CRs occurring as early as 25 days and as late as 62 days after the start of therapy. Remissions appeared durable at the time of data cutoff, but follow-up is still short, he said.

At a median follow-up of 8.6 months for all patients, estimated overall survival at 30 months was 72.7% for patients who did not undergo SCT (which was allowed under the protocol) and 67.1% for those who did. The difference was not significant, but only nine patients had undergone transplantation at the time of data cutoff.

“It will be interesting to see how this will proceed if the transplant-free patients will do as well as the others,” Dr. Ottmann said.

An analysis of molecular response by minimal residual disease (MRD) time point showed a significant further increase in response with consolidation chemotherapy plus the kinase inhibitor. “Continuing the treatment in this form emphasizes the depth of response. If we then look at the rate of MRD negativity using high quality assays, then a quarter of the patients have undetectable polymerase chain reaction during the consolidation cycles, and approximately 80% achieves something that we call a major molecular response,” he said.

Dr. Ottmann did not present updated safety data, but as of the data cutoff, 34 serious adverse events had been reported: 11 during induction, 16 during consolidation, 6 during maintenance, and 1 after discontinuation. The most common were infections and neutropenic fevers. Isolated serious adverse events included metabolic, cardiovascular, neurologic, renal, and hepatic events.
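The phase-by-phase counts of serious adverse events add up to the reported total. A minimal check follows; the dictionary labels are illustrative, and the per-phase shares it prints are simply each count divided by the total, not figures reported by the investigators. Note that these are event counts, not patient counts.

```python
# Serious adverse events reported at the data cutoff, by treatment phase
sae_by_phase = {
    "induction": 11,
    "consolidation": 16,
    "maintenance": 6,
    "after discontinuation": 1,
}

total = sum(sae_by_phase.values())
assert total == 34  # matches the reported total

for phase, count in sae_by_phase.items():
    share = 100 * count / total
    print(f"{phase}: {count} events ({share:.0f}% of all serious adverse events)")
```

Consolidation accounts for the largest share, which is consistent with infections and neutropenic fevers being the most common events during intensive chemotherapy, although the article does not break down event types by phase.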

The trial is expected to be completed in the next few months.
