What explains poor adherence to eosinophilic esophagitis therapy?

Almost half of adult patients with eosinophilic esophagitis (EoE) reported poor adherence to long-term medical and dietary therapy, with age younger than 40 years and low necessity beliefs being the strongest predictors, a new study finds.

Clinicians need to spend more time discussing the need for EoE therapy with their patients, especially if they are younger, according to lead author Maria L. Haasnoot, MD, of Amsterdam University Medical Center (UMC), the Netherlands, and colleagues.

“Chronic treatment is necessary to maintain suppression of the inflammation and prevent negative outcomes in the long-term,” they write.

Until the recent approval of dupilumab (Dupixent) by the U.S. Food and Drug Administration, patients with EoE relied upon off-label options, including proton pump inhibitors and swallowed topical steroids, as well as dietary interventions for ongoing suppression of inflammation. But only about 1 in 6 patients achieve complete remission at 5 years, according to Dr. Haasnoot and colleagues.

“It is uncertain to what degree limited adherence to treatment [plays] a role in the limited long-term effects of treatment,” they write.

The findings were published online in the American Journal of Gastroenterology.

Addressing a knowledge gap

The cross-sectional study involved 177 adult patients with EoE treated at Amsterdam UMC, who were prescribed dietary or medical maintenance therapy. Of note, some patients were treated with budesonide, which is approved for EoE in Europe but not in the United States.

Median participant age was 43 years, with a male-skewed distribution (71% men). Patients had been on EoE treatment for 2-6 years. Most (76%) were on medical treatments. Nearly half were on diets that avoided one to five food groups, with some on both medical treatments and elimination diets.

Using a link sent by mail, participants completed the online Medication Adherence Rating Scale, along with several other questionnaires, such as the Beliefs about Medicine Questionnaire, to measure secondary outcomes, including a patient’s view of how necessary or disruptive maintenance therapy is in their life.

The overall prevalence of poor adherence to therapy was high (41.8%), with no significant difference in adherence between medical and dietary therapies.

“It might come as a surprise that dietary-treated patients are certainly not less adherent to treatment than medically treated patients,” the authors write, noting that the opposite is usually true.

Multivariate logistic regression showed that patients younger than 40 years had more than twice the odds of poor adherence (odds ratio, 2.571; 95% confidence interval, 1.195-5.532). Those with low necessity beliefs had more than four times the odds of poor adherence (OR, 4.423; 95% CI, 2.169-9.016). Other factors linked to poor adherence were longer disease duration and more severe symptoms.
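
The reported odds ratios and confidence intervals can be sanity-checked with a short calculation. The sketch below assumes the intervals are conventional Wald intervals, which are symmetric on the log-odds scale; the article does not state how they were computed, so this is an assumption. The function name `implied_estimate_and_se` is hypothetical and used only for illustration.

```python
import math


def implied_estimate_and_se(lower, upper, z=1.96):
    """Return the point estimate and log-scale standard error implied by a CI.

    Assumes a Wald interval, exp(beta +/- z * SE), so the point estimate is
    the geometric mean of the two bounds.
    """
    log_lower = math.log(lower)
    log_upper = math.log(upper)
    point = math.exp((log_lower + log_upper) / 2)
    se = (log_upper - log_lower) / (2 * z)
    return point, se


# Figures as reported in the article: (label, reported OR, CI lower, CI upper)
reported = [
    ("age < 40 years", 2.571, 1.195, 5.532),
    ("low necessity beliefs", 4.423, 2.169, 9.016),
]

for label, odds_ratio, lower, upper in reported:
    point, se = implied_estimate_and_se(lower, upper)
    print(f"{label}: reported OR {odds_ratio}, implied OR {point:.3f}, log-scale SE {se:.3f}")
```

Under that assumption, the implied point estimates land within rounding of the reported odds ratios, which suggests the figures are internally consistent. Both intervals exclude 1, but they are wide, so the size of each effect is estimated imprecisely.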

“Clinicians should pay more attention to treatment adherence, particularly in younger patients,” the authors conclude. “The necessity of treatment should be actively discussed, and efforts should be done to take doubts away, as this may improve treatment adherence and subsequently may improve treatment effects and long-term outcomes.”

More patient education needed

According to Jennifer L. Horsley-Silva, MD, of Mayo Clinic, Scottsdale, Ariz., “This study is important, as it is one of the first studies to investigate the rate of treatment adherence in EoE patients and attempts to identify factors associated with adherence both in medically and dietary treated patients.”

Dr. Horsley-Silva commented that the findings align with recent research she and her colleagues conducted at the Mayo Clinic, where few patients successfully completed a six-food elimination diet, even when paired with a dietitian. As with the present study, success trended lower among younger adults. “These findings highlight the need for physicians treating EoE to motivate all patients, but especially younger patients, by discussing disease pathophysiology and explaining the reason for maintenance treatment early on,” Dr. Horsley-Silva said.

Conversations should also address the discordance between symptoms and histologic disease, patient doubts and concerns, and other barriers to adherence, she noted.

“Shared decisionmaking is of utmost importance when deciding upon a maintenance treatment strategy and should be readdressed continually,” she added.

Gary W. Falk, MD, of Penn Medicine, Philadelphia, said that patients with EoE may be poorly adherent because therapies tend to be complicated and people often forget to take their medications, especially when their symptoms improve, even though symptom improvement is a poor indicator of underlying disease. The discordance between symptoms and histology is “not commonly appreciated by the EoE GI community,” he noted.

Patients may benefit from knowing that untreated or undertreated EoE increases the risk for strictures and stenoses, need for dilation, and frequency of food bolus impactions, Dr. Falk said.

“The other thing we know is that once someone is induced into remission, and they stay on therapy ... long-term remission can be maintained,” he added.

The impact of dupilumab

John Leung, MD, of Boston Food Allergy Center, also cited the complexities of EoE therapies as a reason for poor adherence, though he believes this paradigm will shift now that dupilumab has been approved. Dupilumab injections are “just once a week, so it’s much easier in terms of frequency,” Dr. Leung said. “I would expect that the compliance [for dupilumab] will be better” than for older therapies.

Dr. Leung, who helped conduct the dupilumab clinical trials contributing to its approval for EoE and receives speaking honoraria from manufacturer Regeneron/Sanofi, said that dupilumab also overcomes the challenges with elimination diets while offering relief for concomitant conditions, such as “asthma, eczema, food allergies, and seasonal allergies.”

But Dr. Falk, who also worked on the dupilumab clinical trials, said the situation is “not straightforward,” even with FDA approval.

“There are going to be significant costs with [prescribing dupilumab], because it’s a biologic,” Dr. Falk said.

Dr. Falk also pointed out that prior authorization will be required, and until more studies can be conducted, the true impact of once-weekly dosing versus daily dosing remains unknown.

“I would say [dupilumab] has the potential to improve adherence, but we need to see if that’s going to be the case or not,” Dr. Falk said.

The authors disclosed relationships with Dr. Falk Pharma, AstraZeneca, and Sanofi/Regeneron (the manufacturers of Dupixent [dupilumab]), among others. Dr. Horsley-Silva, Dr. Falk, and Dr. Leung conducted clinical trials for dupilumab on behalf of Sanofi/Regeneron, with Dr. Leung also disclosing speaking honoraria from Sanofi/Regeneron. Dr. Horsley-Silva has acted as a clinical trial site principal investigator for Allakos and Celgene/Bristol-Myers Squibb.

A version of this article first appeared on Medscape.com.

Telemedicine and Home Pregnancy Testing for iPLEDGE: A Survey of Clinician Perspectives

To the Editor:

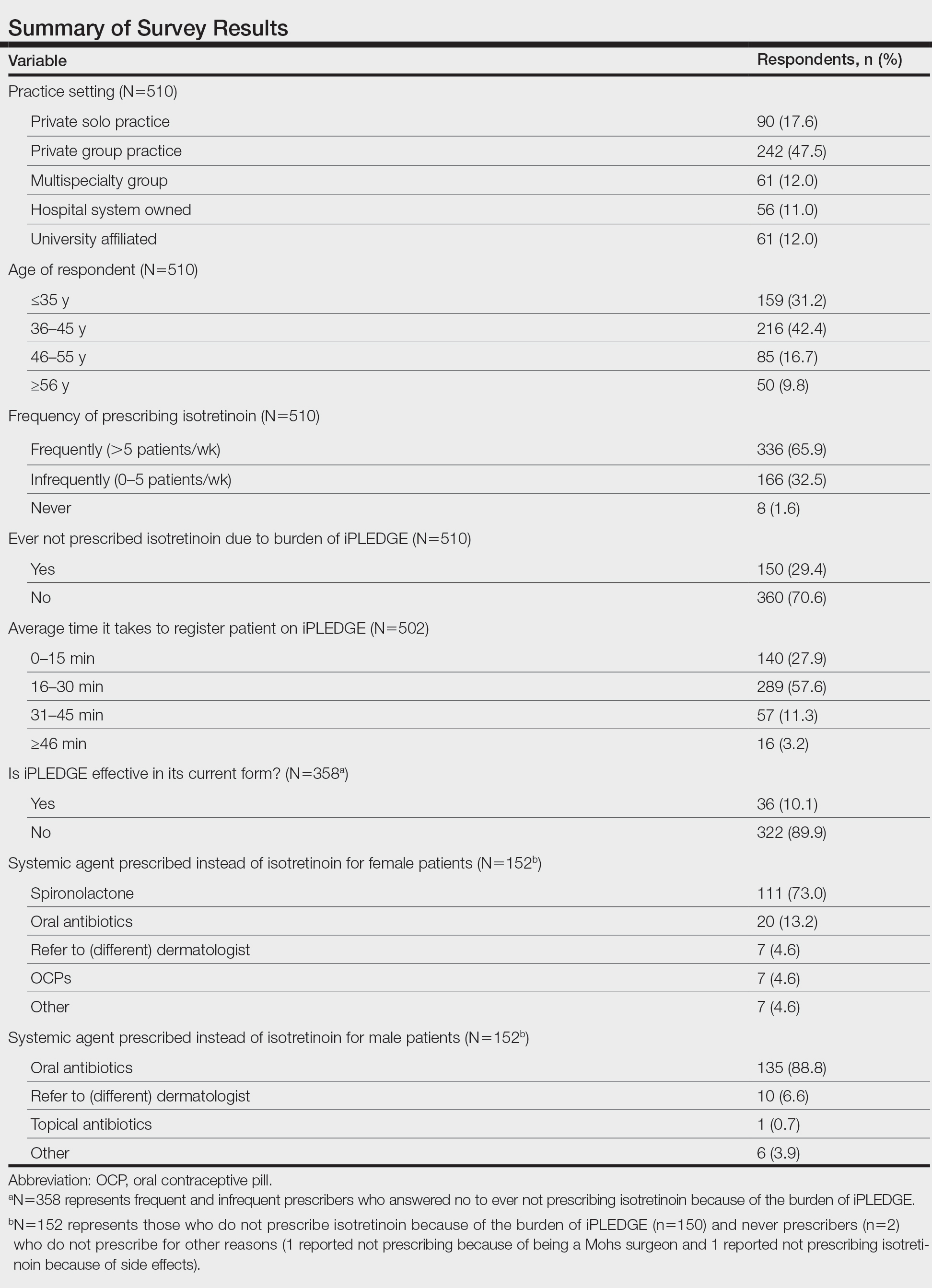

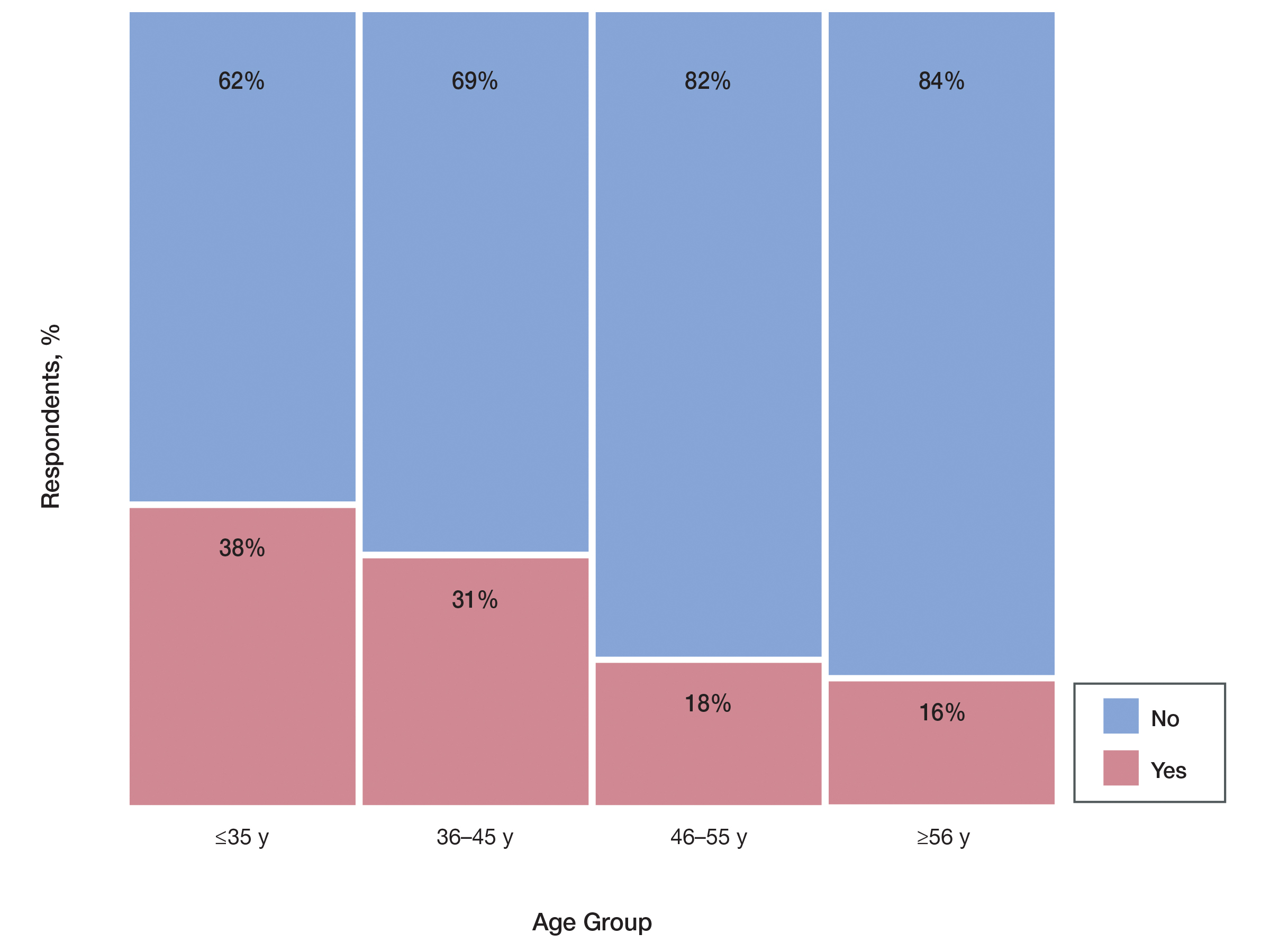

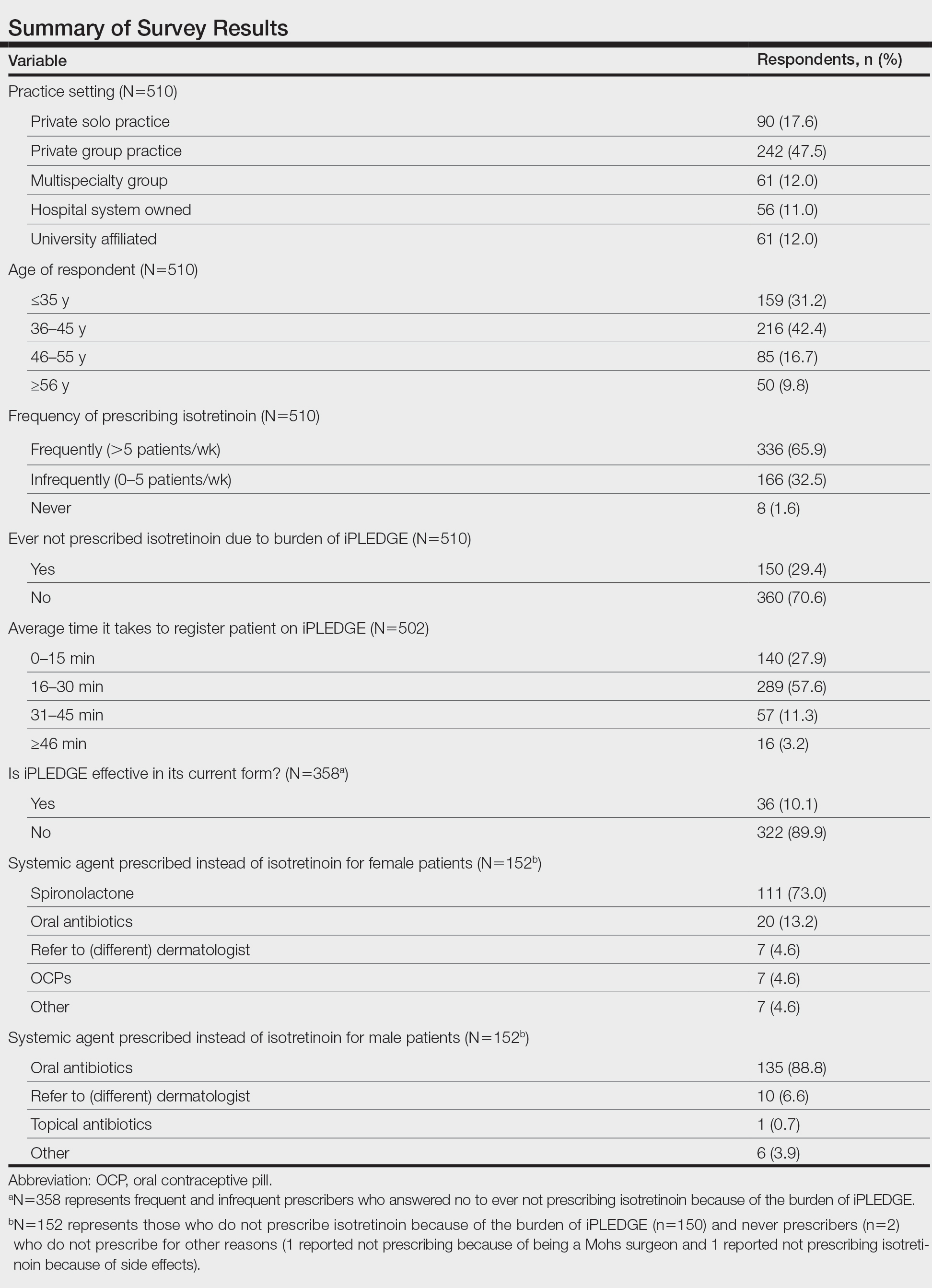

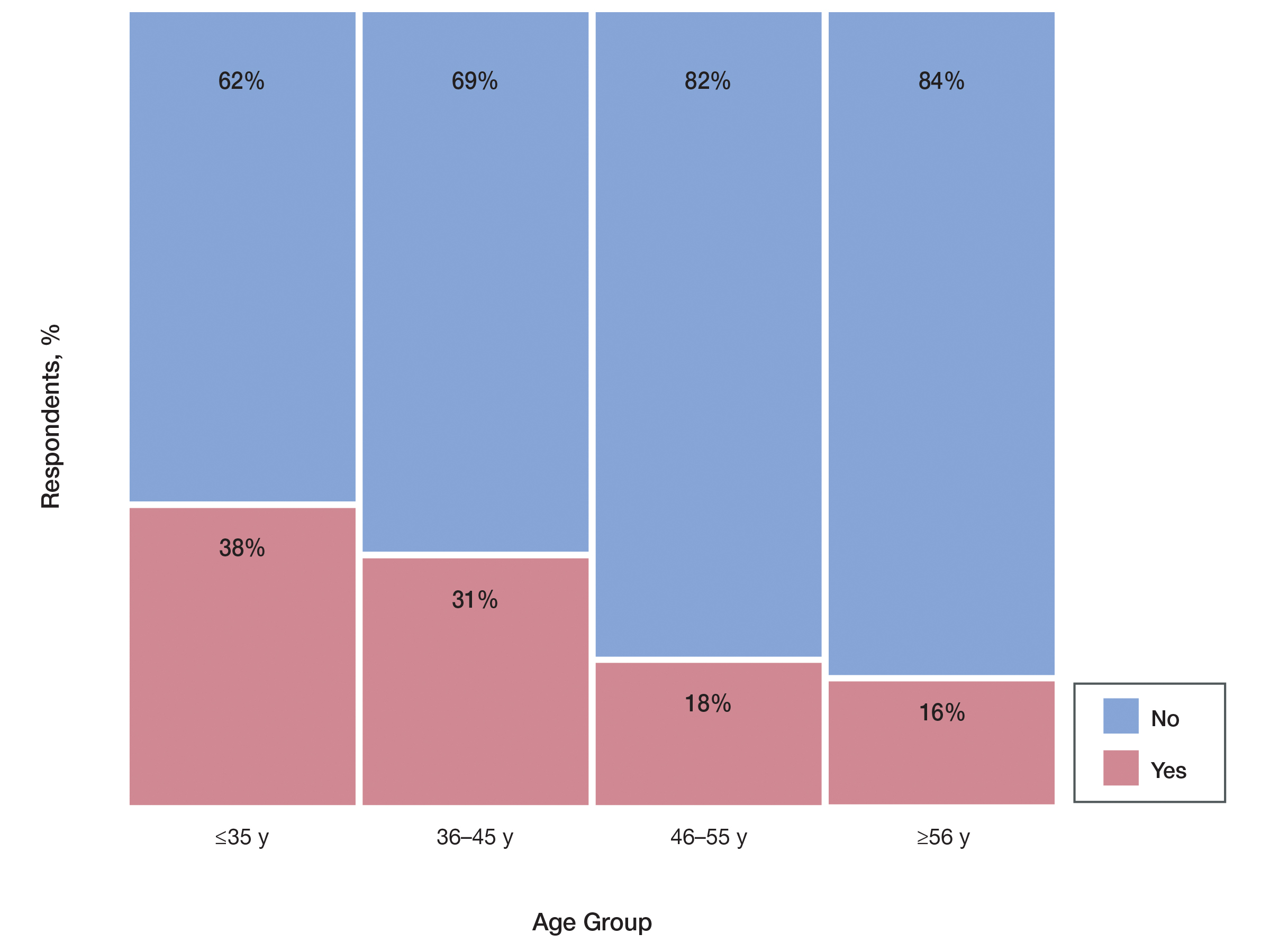

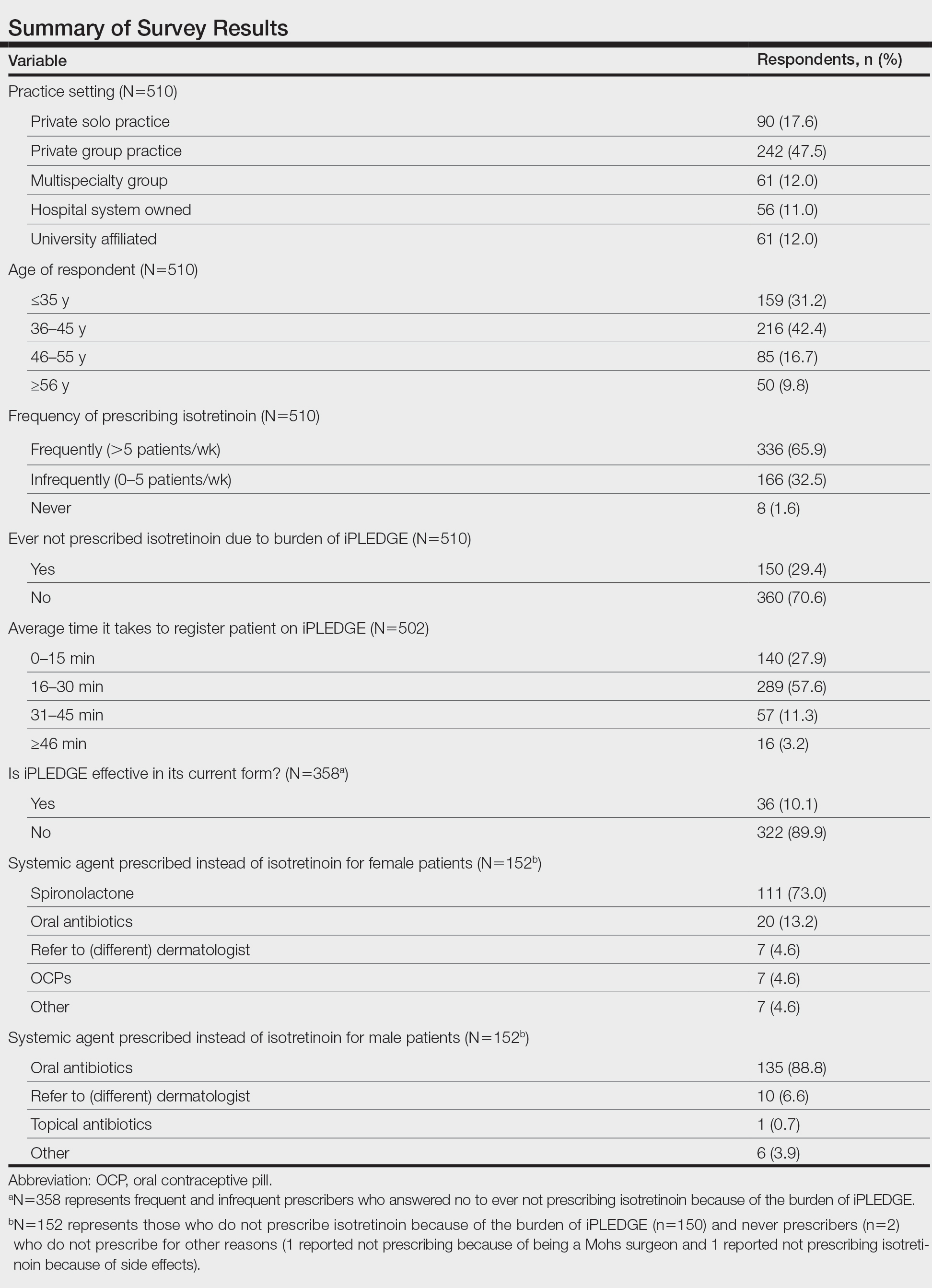

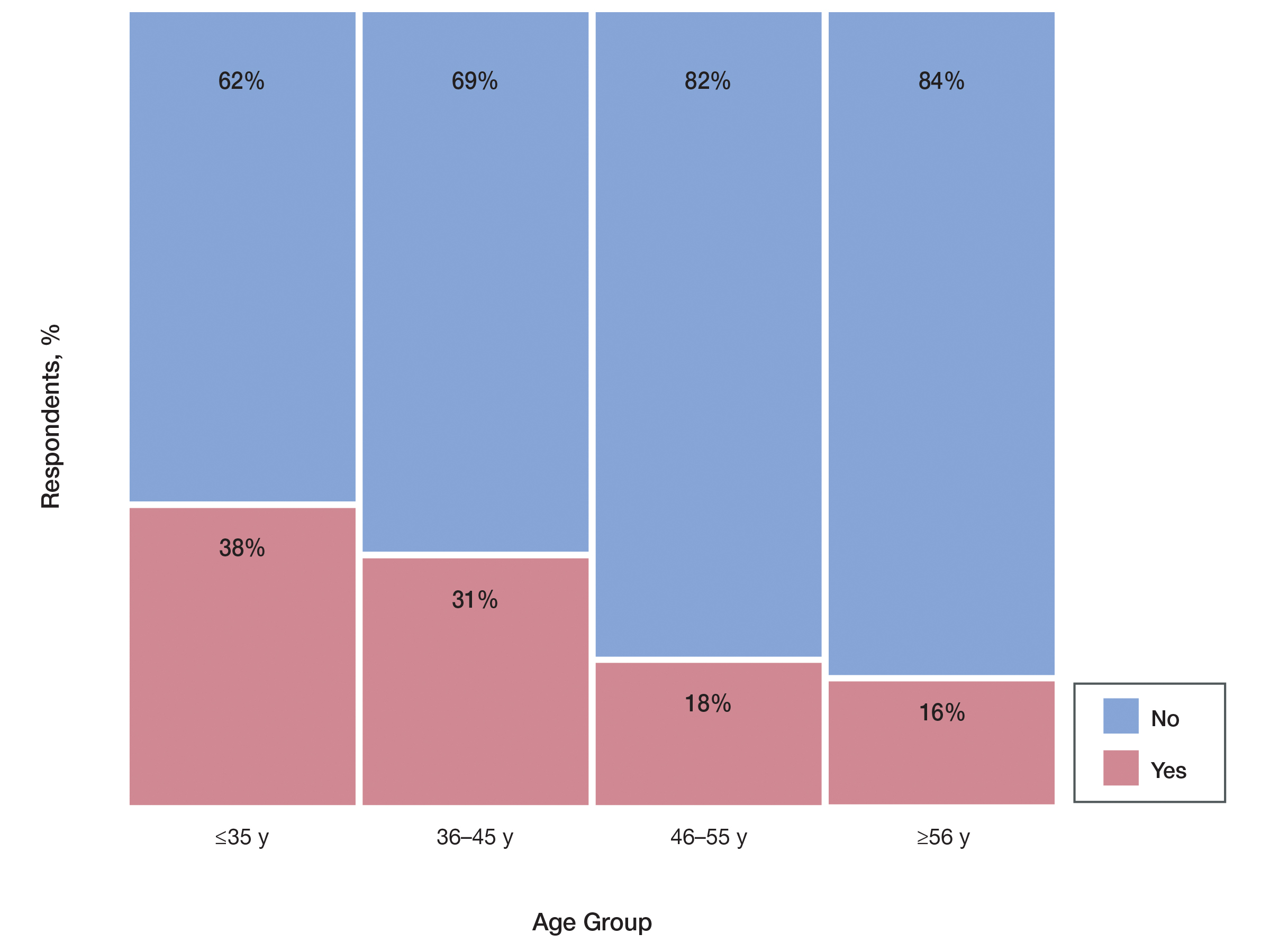

In response to the challenges of the COVID-19 pandemic, iPLEDGE announced that they would accept results from home pregnancy tests and explicitly permit telemedicine.1 Given the financial and logistical burdens associated with iPLEDGE, these changes have the potential to increase access.2 However, it is unclear whether these modifications will be allowed to continue. We sought to evaluate clinician perspectives on the role of telemedicine and home pregnancy testing for iPLEDGE.

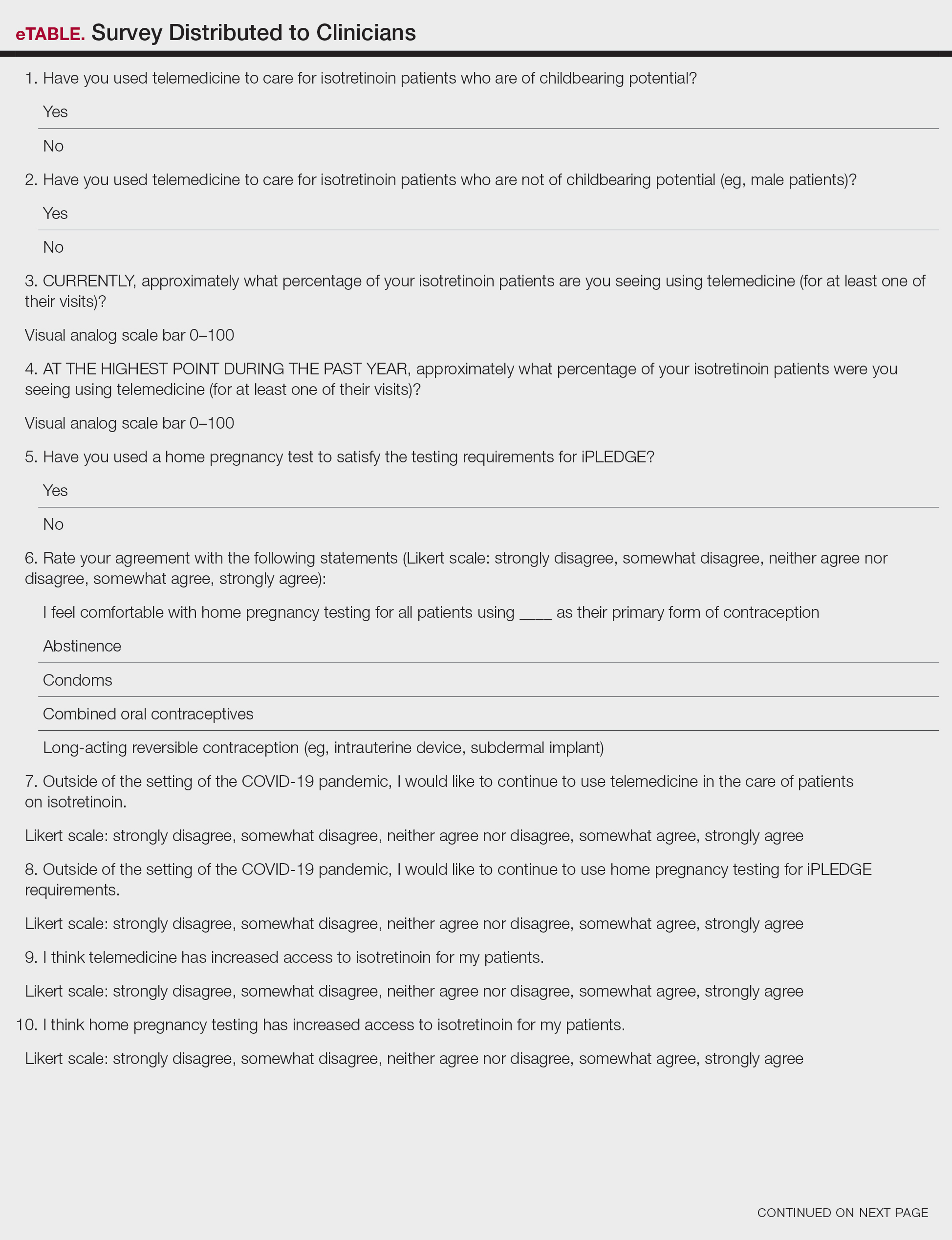

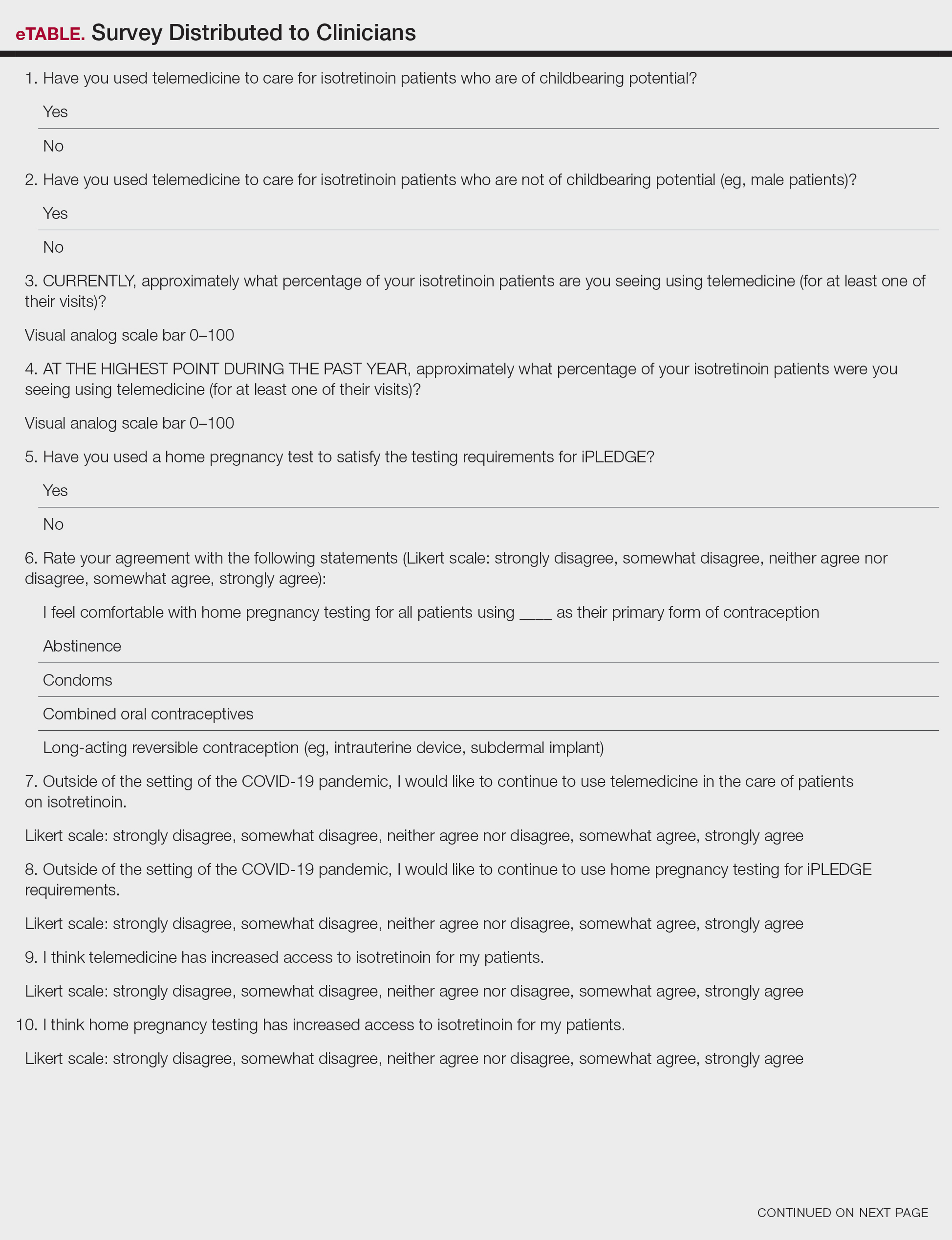

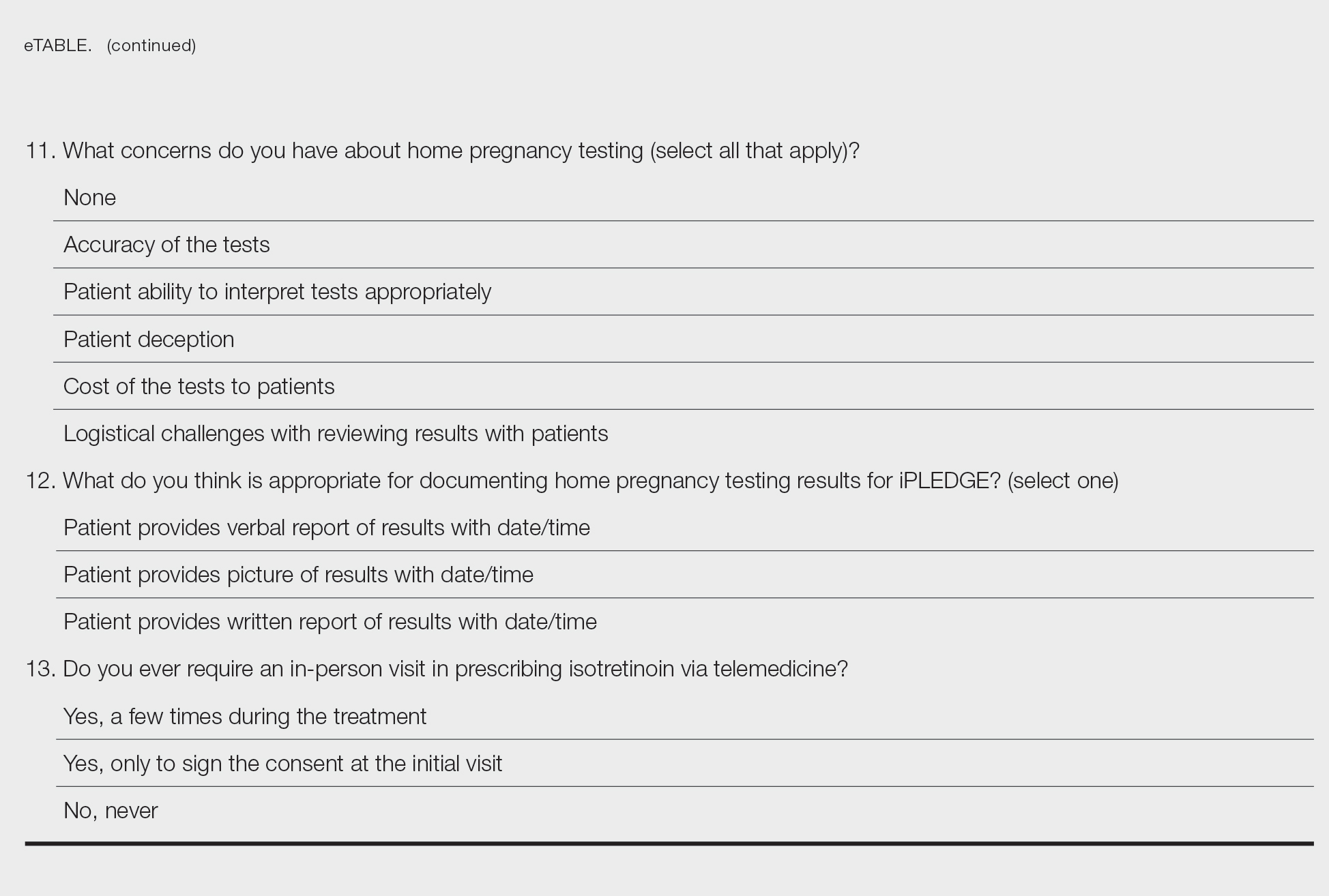

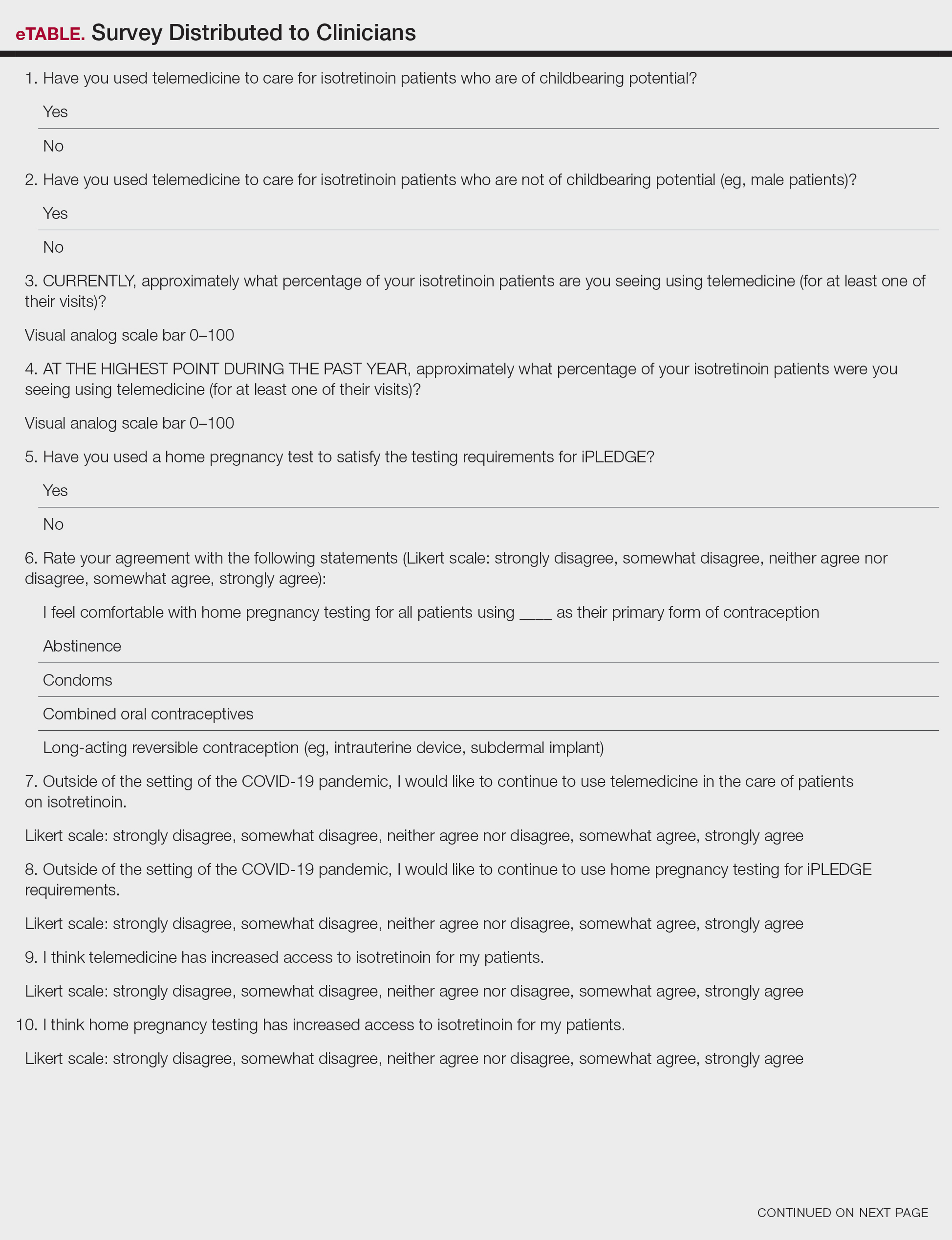

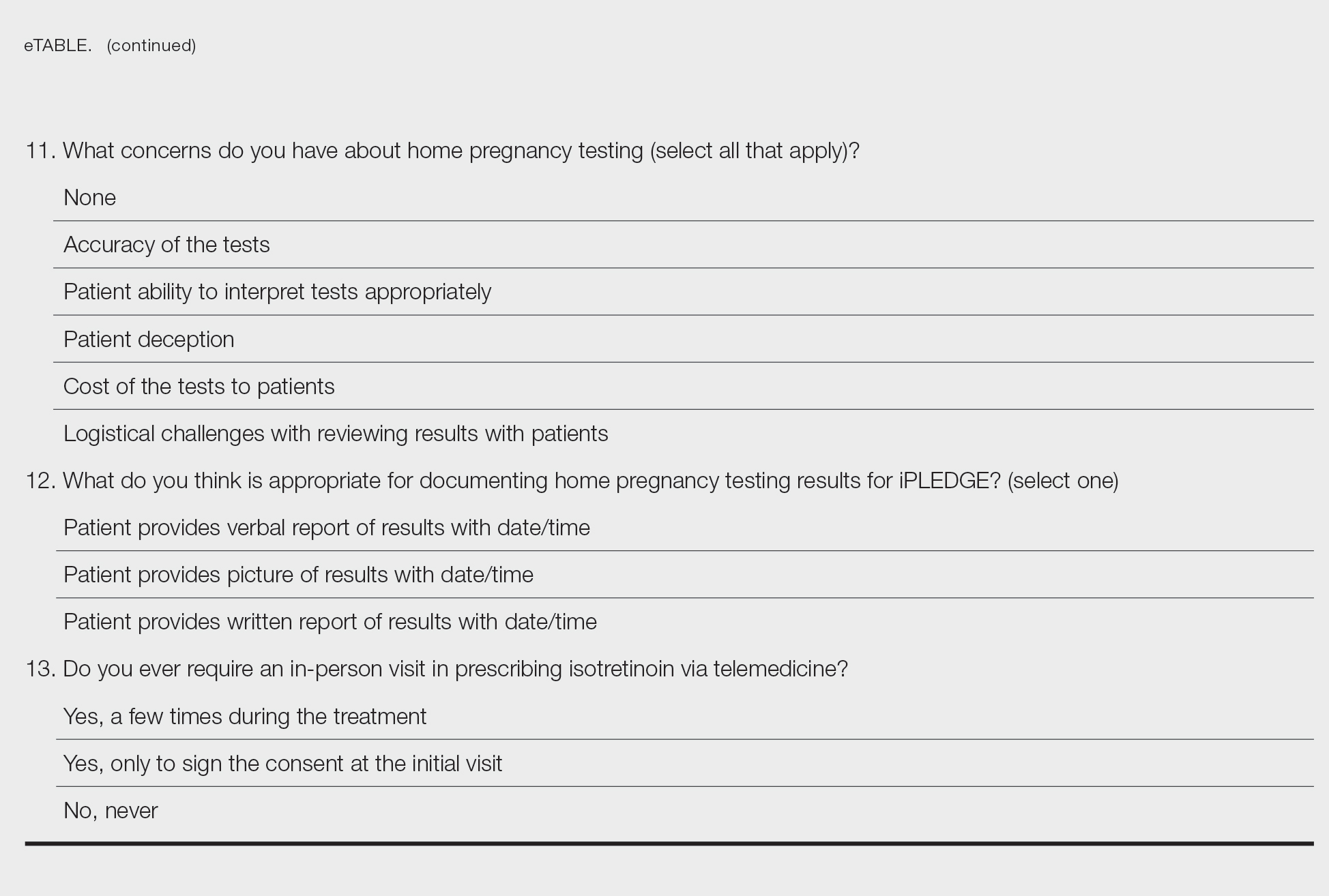

After piloting among several clinicians, a 13-question survey was distributed using the Qualtrics platform to members of the American Acne & Rosacea Society between April 14, 2021, and June 14, 2021. This survey consisted of items addressing provider practices and perspectives on telemedicine and home pregnancy testing for patients taking isotretinoin (eTable). Respondents were asked whether they think telemedicine and home pregnancy testing have improved access to care and whether they would like to continue these practices going forward. In addition, participants were asked about their concerns with home pregnancy testing and how comfortable they feel with home pregnancy testing for various contraceptive strategies (abstinence, condoms, combined oral contraceptives, and long-acting reversible contraception). This study was deemed exempt (category 2) by the University of Pennsylvania (Philadelphia, Pennsylvania) institutional review board (Protocol #844549).

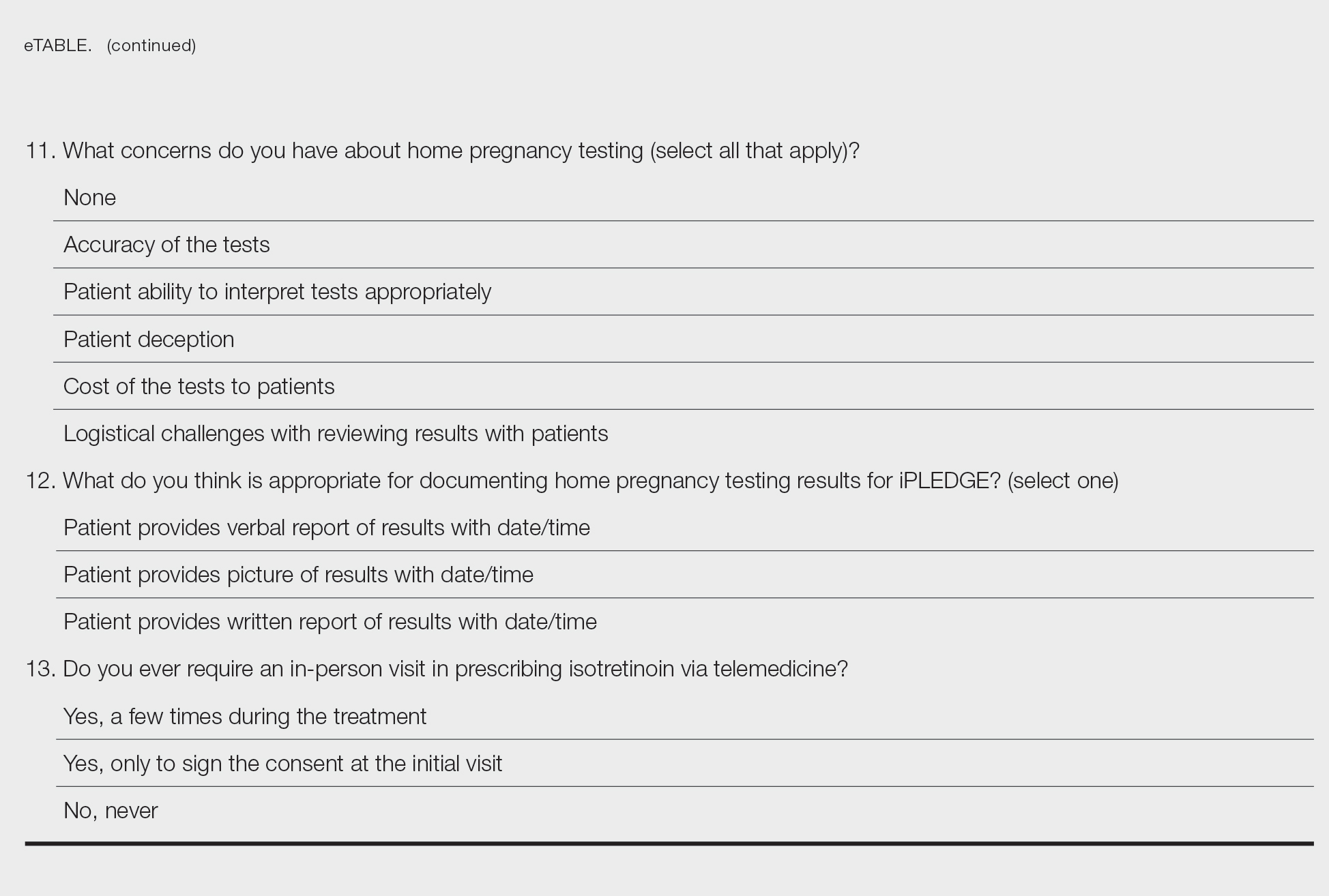

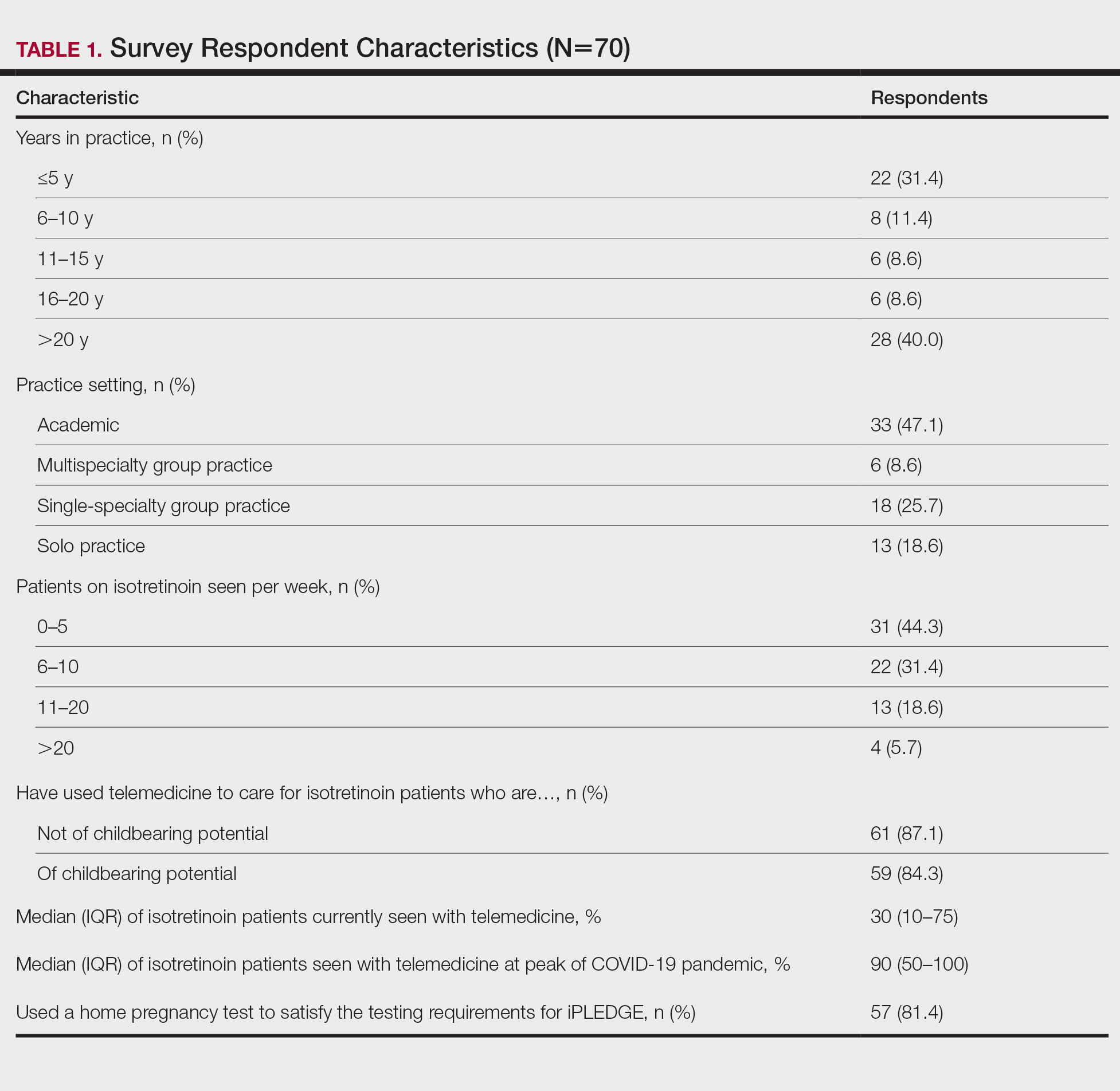

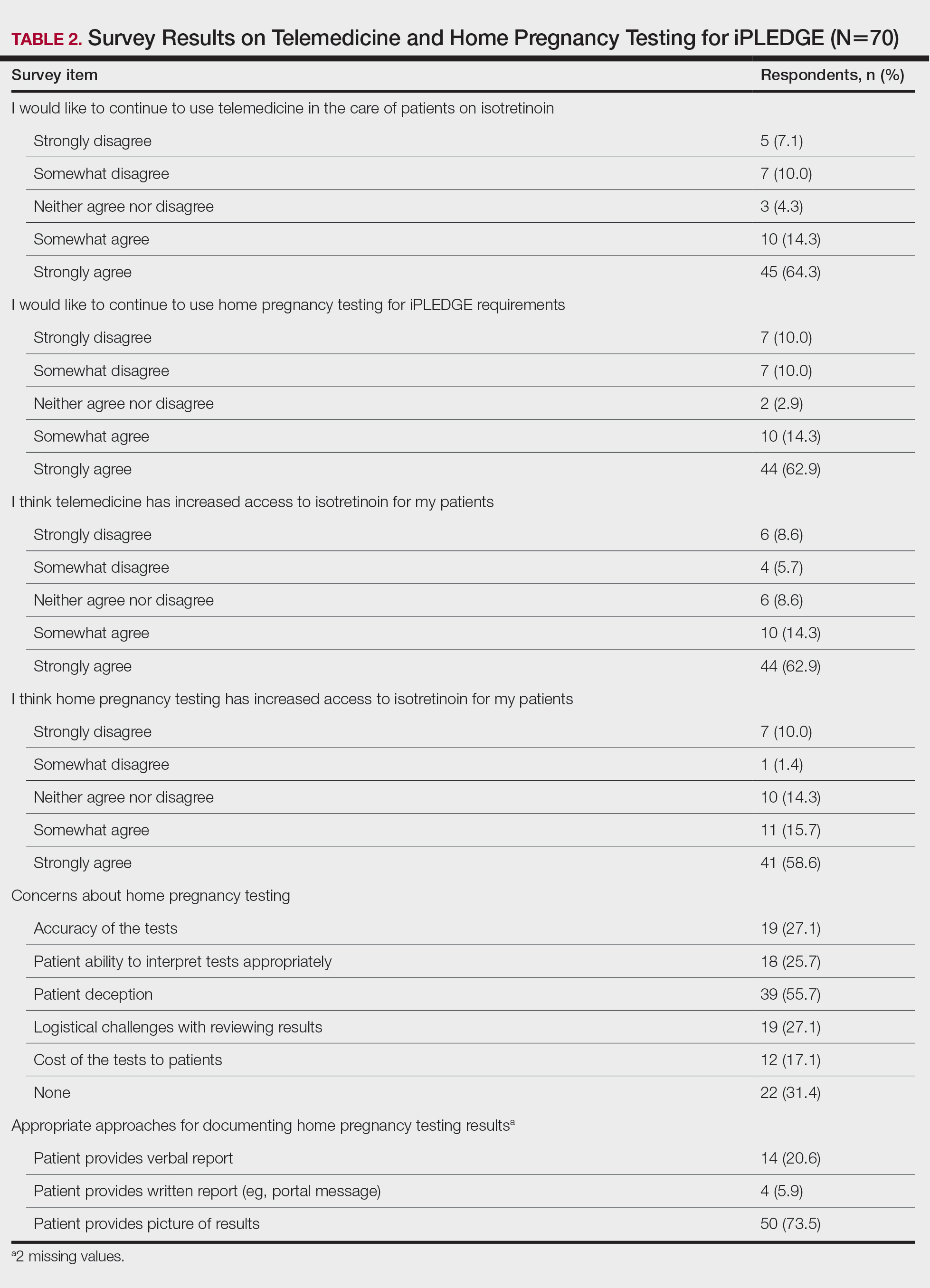

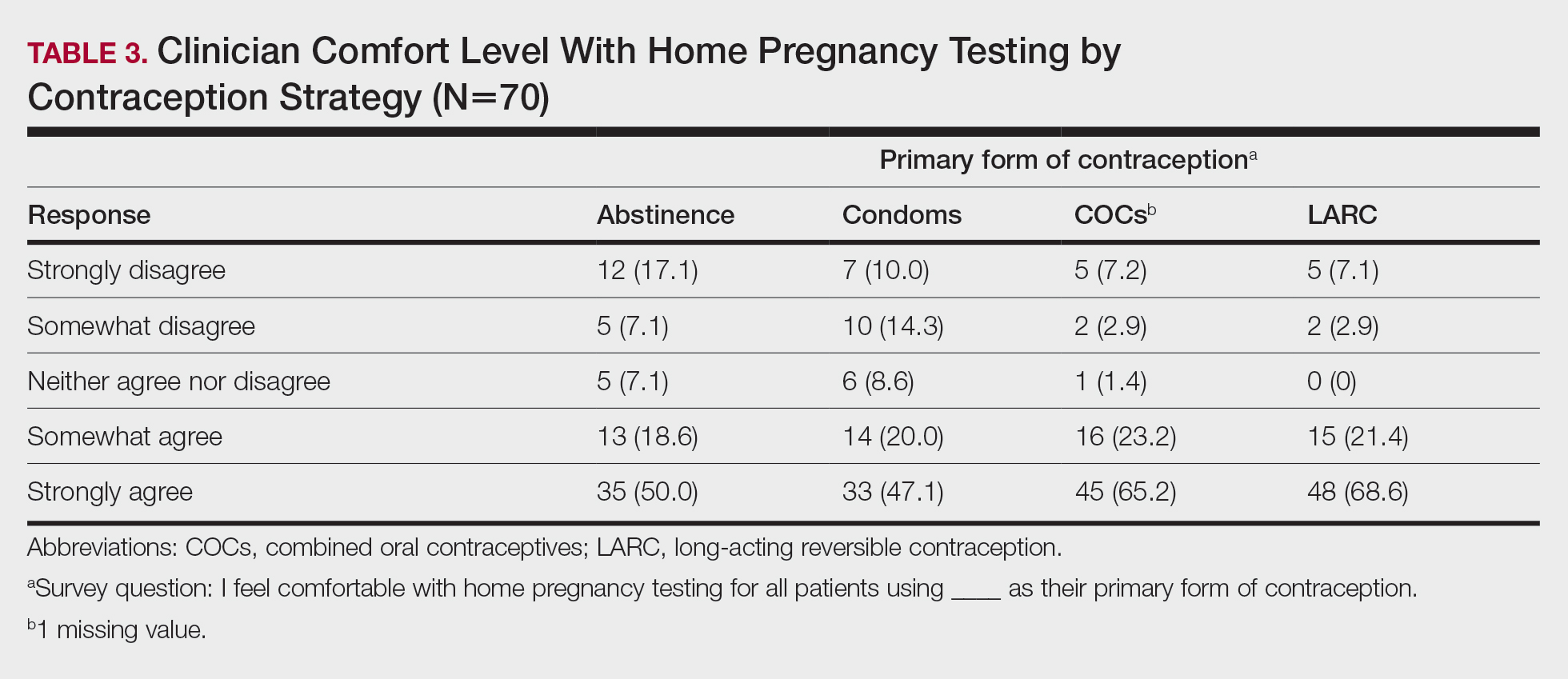

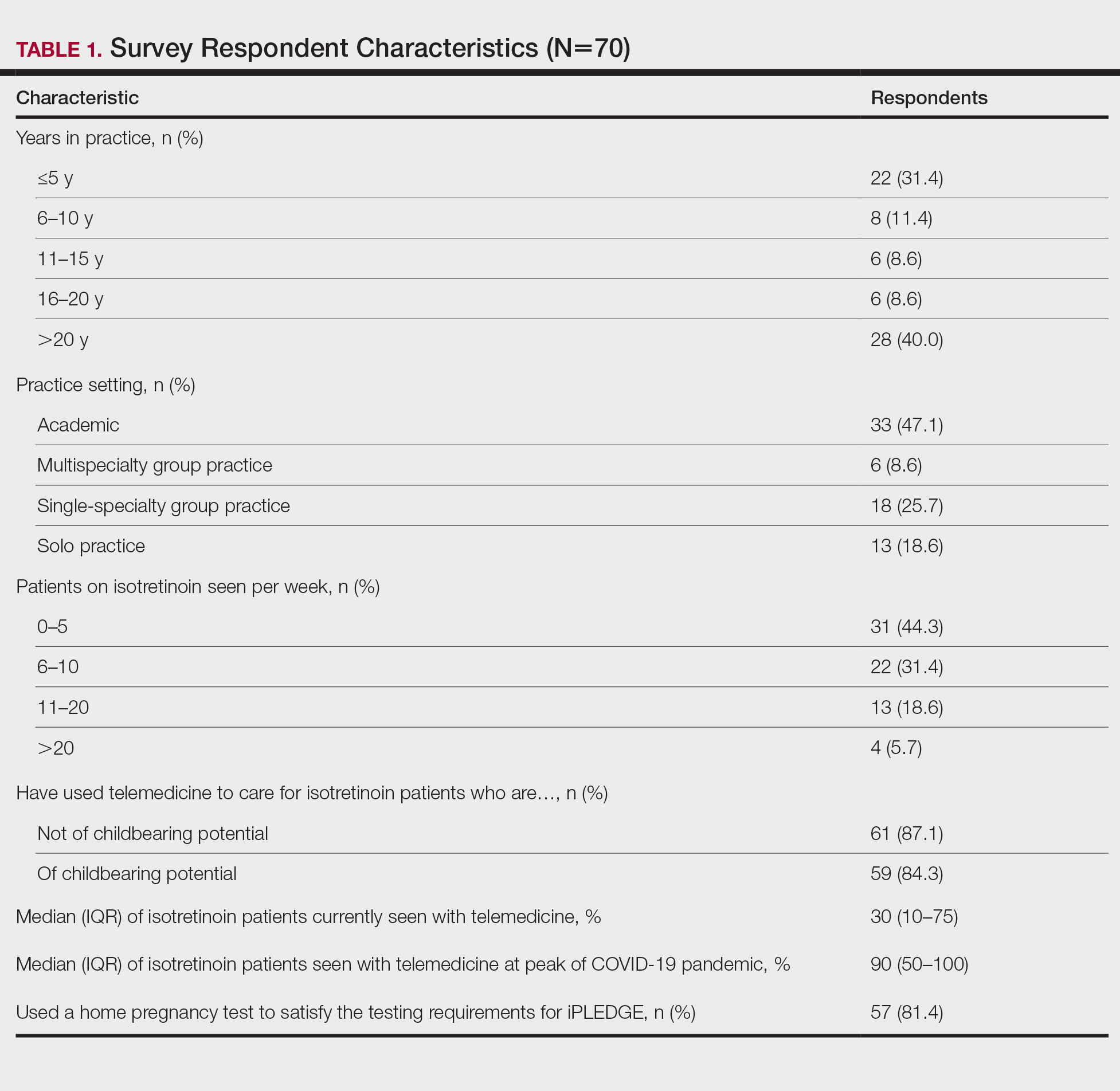

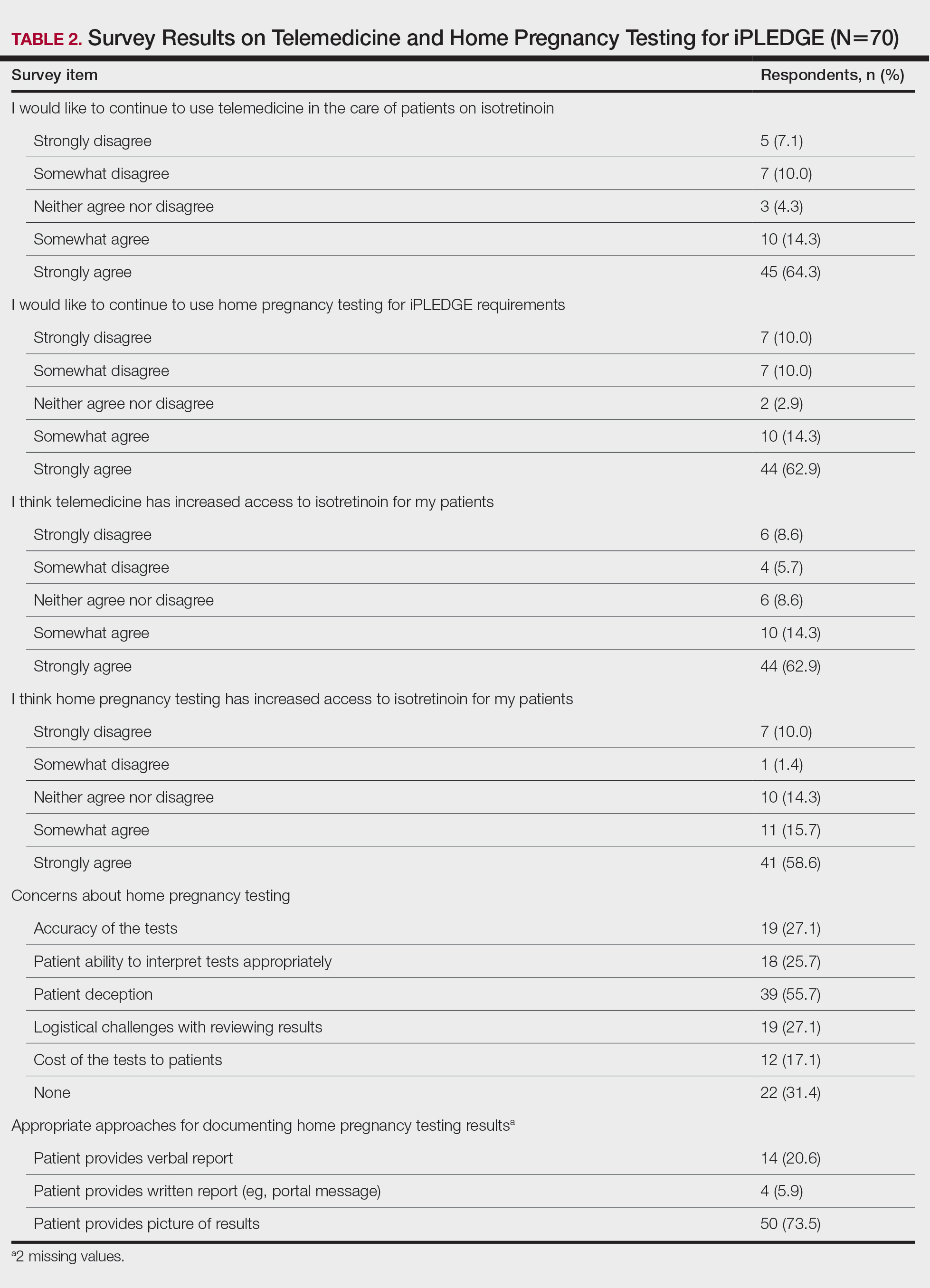

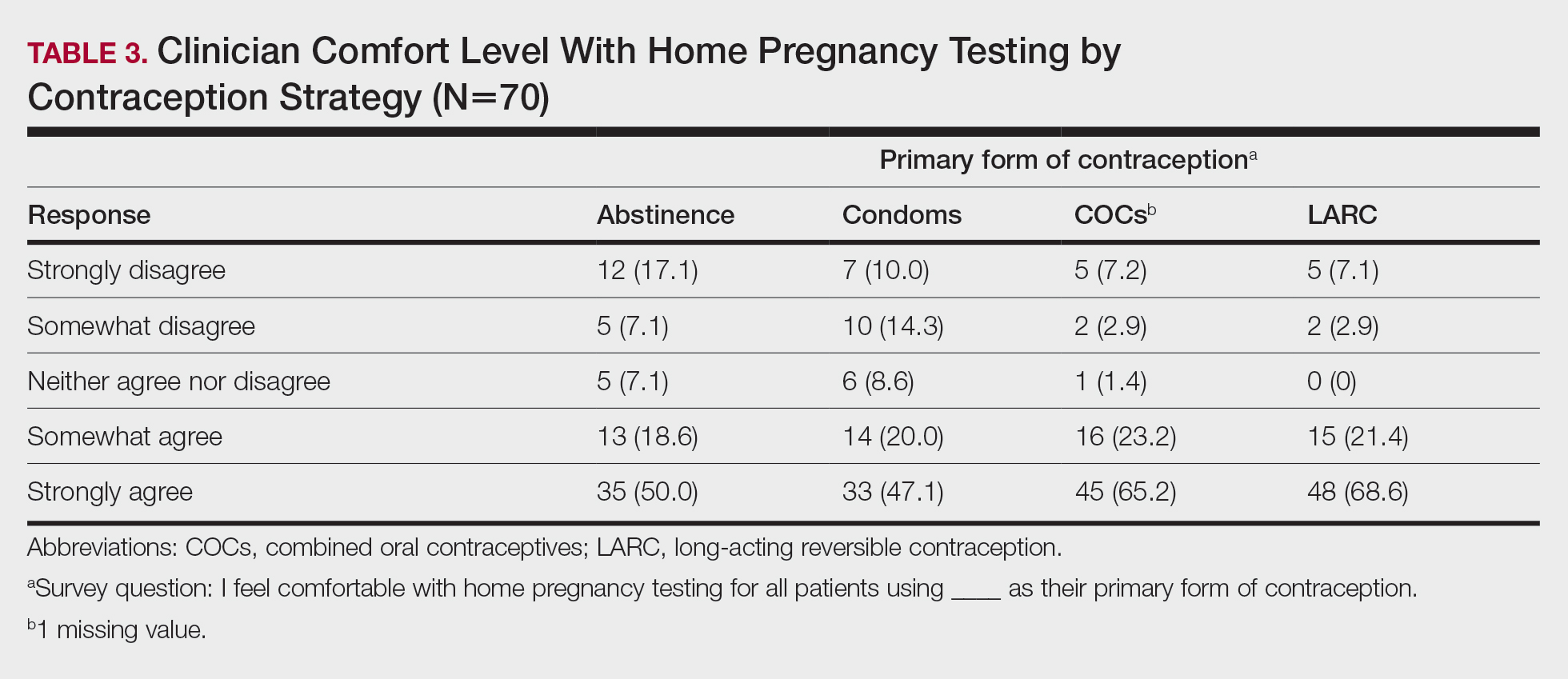

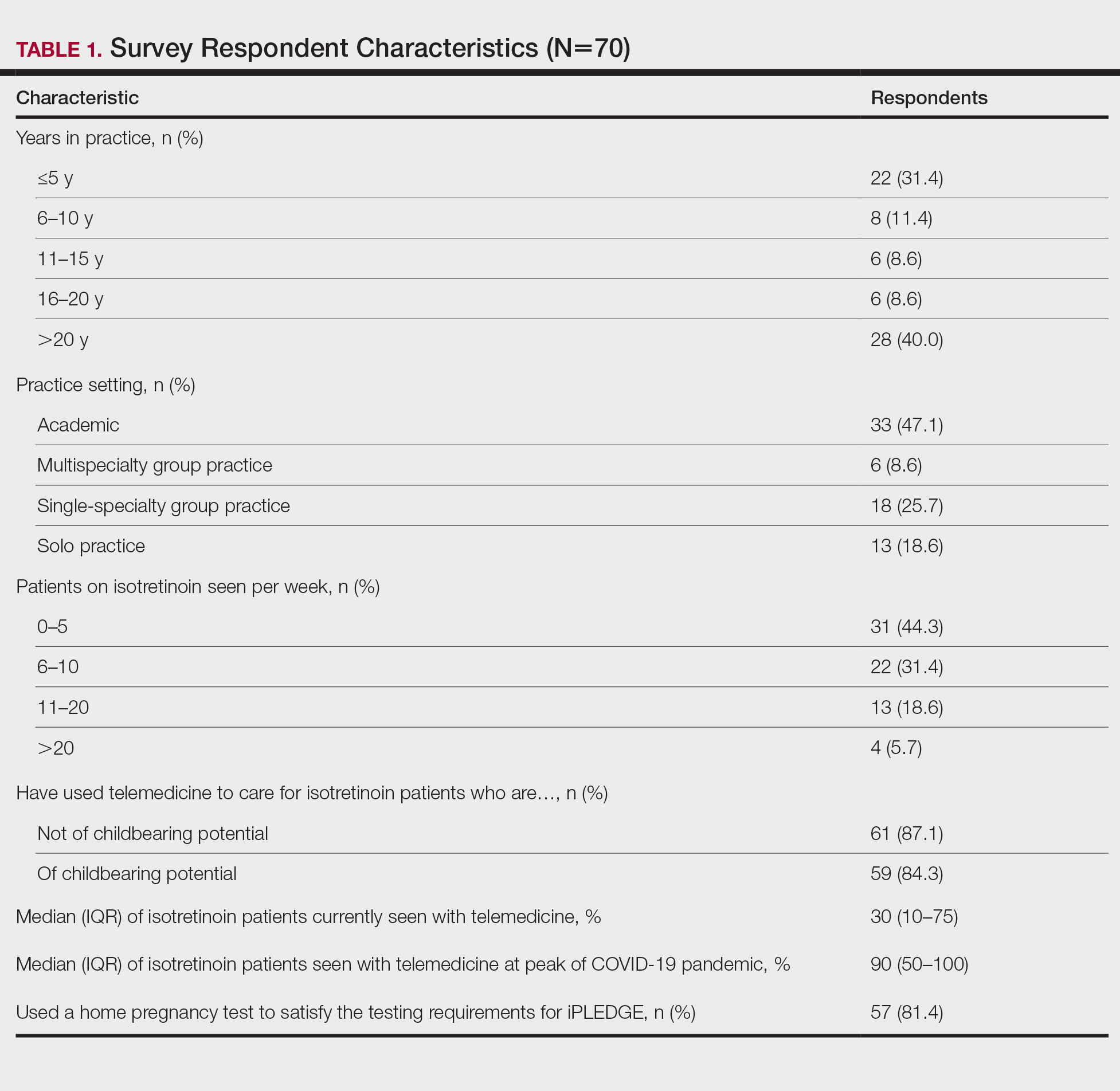

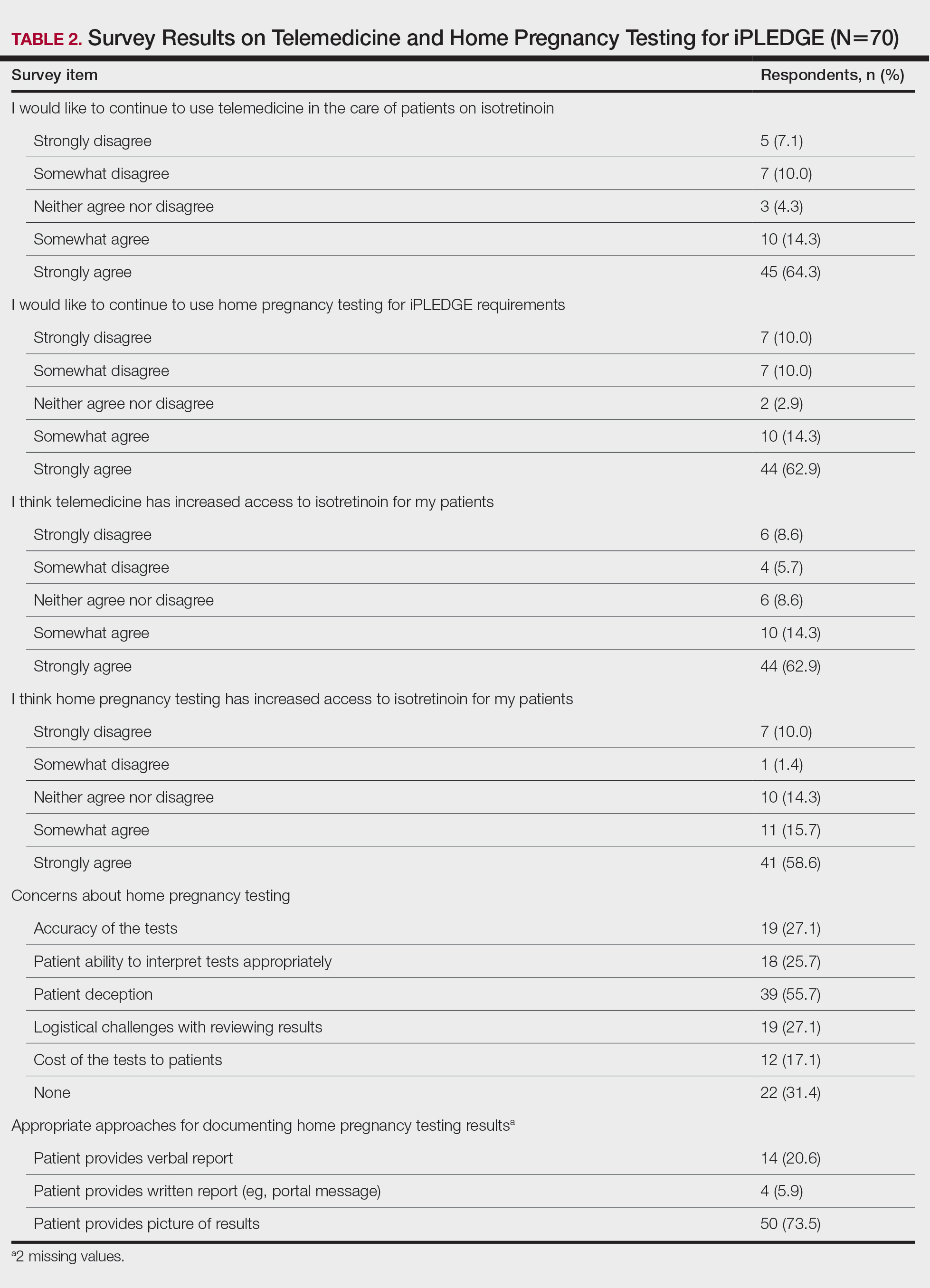

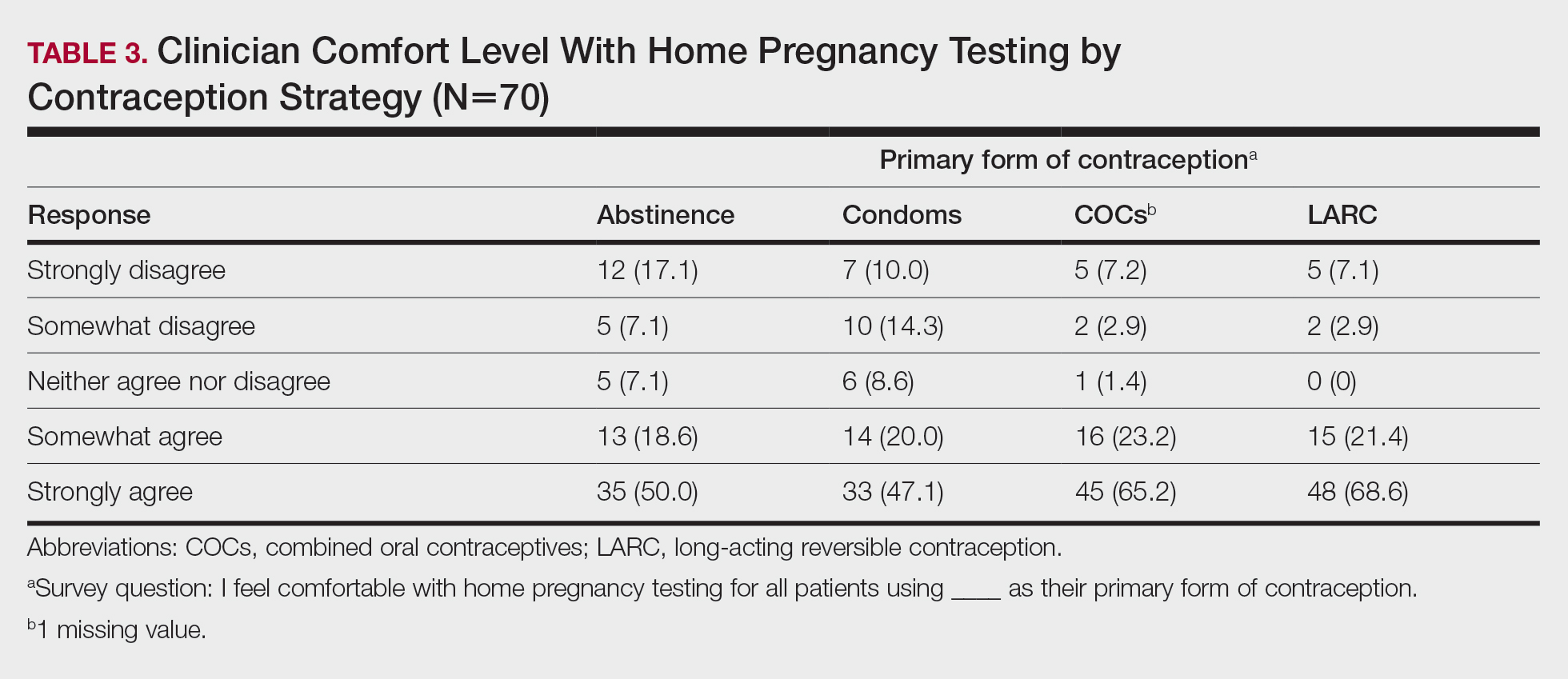

Among 70 clinicians who completed the survey (response rate, 6.4%), 33 (47.1%) practiced in an academic setting. At the peak of the COVID-19 pandemic, clinicians reported using telemedicine for a median of 90% (IQR=50%–100%) of their patients on isotretinoin, and 57 respondents (81.4%) reported having patients use a home pregnancy test for iPLEDGE (Table 1). More than 75% (55/70) agreed that they would like to continue to use telemedicine for patients on isotretinoin, and more than 75% (54/70) agreed that they would like to continue using home pregnancy testing for patients outside the setting of the COVID-19 pandemic. More than 75% (54/70) agreed that telemedicine has increased access for their patients, and more than 70% (52/70) agreed that home pregnancy testing has increased access (Table 2). Clinicians agreed that they would be comfortable using home pregnancy testing for patients choosing long-acting reversible contraception (63/70 [90.0%]), combined oral contraceptives (61/69 [88.4%]), condoms (47/70 [67.1%]), or abstinence (48/70 [68.6%]) (Table 3).
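
The proportions in this paragraph can be recomputed directly from the reported counts. The following is a minimal sketch for readers who want to verify the arithmetic; the dictionary labels and the helper name `percent` are illustrative and do not come from the survey instrument.

```python
def percent(numerator, denominator):
    """Return a proportion as a percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# Counts as reported in the text: (number agreeing, number responding)
agreement_counts = {
    "continue telemedicine": (55, 70),
    "continue home pregnancy testing": (54, 70),
    "telemedicine increased access": (54, 70),
    "home testing increased access": (52, 70),
    "comfortable: long-acting reversible contraception": (63, 70),
    "comfortable: combined oral contraceptives": (61, 69),
    "comfortable: condoms": (47, 70),
    "comfortable: abstinence": (48, 70),
}

for item, (agreed, responded) in agreement_counts.items():
    print(f"{item}: {agreed}/{responded} = {percent(agreed, responded)}%")
```

Note that the denominator for combined oral contraceptives is 69 rather than 70, which indicates that one respondent did not answer that item.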

The most common concerns about home pregnancy testing were patient deception (39/70 [55.7%]), logistical challenges with reviewing results (19/70 [27.1%]), accuracy of the tests (19/70 [27.1%]), and patient ability to interpret tests appropriately (18/70 [25.7%]). To document testing results, 50 respondents (73.5%) would require a picture of results, 4 (5.9%) would accept a written report from the patient, and 14 (20.6%) would accept a verbal report from the patient (Table 2).

In this survey, clinicians expressed interest in continuing to use telemedicine and home pregnancy testing to care for patients with acne treated with isotretinoin. More than 75% agreed that these changes have increased access, which is notable, as several studies have identified that female and minority patients may face iPLEDGE-associated access barriers.3,4 Continuing to allow home pregnancy testing and explicitly permitting telemedicine can enable clinicians to provide patient-centered care.2

Although clinicians felt comfortable with a variety of contraceptive strategies, particularly those with high reported effectiveness,5 there were concerns about deception and interpretation of test results. Future studies are needed to identify optimal workflows for home pregnancy testing and whether patients should be required to provide a photograph of the results.

This survey study is limited by the possibility of sampling and response bias due to the low response rate. Although national listservs were used to maximize the generalizability of the results, future studies are needed to evaluate whether these findings generalize to other settings, given the response rate. In addition, given iPLEDGE-associated access barriers, further research is needed to examine how changes such as telemedicine and home pregnancy testing influence both access to isotretinoin and pregnancy prevention.

Acknowledgments—We would like to thank Stacey Moore (Montclair, New Jersey) and the American Acne & Rosacea Society for their help distributing the survey.

1. Kane S, Admani S. COVID-19 pandemic leading to the accelerated development of a virtual health model for isotretinoin. J Dermatol Nurses Assoc. 2021;13:54-57.

2. Barbieri JS, Frieden IJ, Nagler AR. Isotretinoin, patient safety, and patient-centered care-time to reform iPLEDGE. JAMA Dermatol. 2020;156:21-22.

3. Barbieri JS, Shin DB, Wang S, et al. Association of race/ethnicity and sex with differences in health care use and treatment for acne. JAMA Dermatol. 2020;156:312-319.

4. Charrow A, Xia FD, Lu J, et al. Differences in isotretinoin start, interruption, and early termination across race and sex in the iPLEDGE era. PLoS One. 2019;14:e0210445.

5. Barbieri JS, Roe AH, Mostaghimi A. Simplifying contraception requirements for iPLEDGE: a decision analysis. J Am Acad Dermatol. 2020;83:104-108.

To the Editor:

In response to the challenges of the COVID-19 pandemic, iPLEDGE announced that they would accept results from home pregnancy tests and explicitly permit telemedicine.1 Given the financial and logistical burdens associated with iPLEDGE, these changes have the potential to increase access.2 However, it is unclear whether these modifications will be allowed to continue. We sought to evaluate clinician perspectives on the role of telemedicine and home pregnancy testing for iPLEDGE.

After piloting among several clinicians, a 13-question survey was distributed using the Qualtrics platform to members of the American Acne & Rosacea Society between April 14, 2021, and June 14, 2021. This survey consisted of items addressing provider practices and perspectives on telemedicine and home pregnancy testing for patients taking isotretinoin (eTable). Respondents were asked whether they think telemedicine and home pregnancy testing have improved access to care and whether they would like to continue these practices going forward. In addition, participants were asked about their concerns with home pregnancy testing and how comfortable they feel with home pregnancy testing for various contraceptive strategies (abstinence, condoms, combined oral contraceptives, and long-acting reversible contraception). This study was deemed exempt (category 2) by the University of Pennsylvania (Philadelphia, Pennsylvania) institutional review board (Protocol #844549).

Among 70 clinicians who completed the survey (response rate, 6.4%), 33 (47.1%) practiced in an academic setting. At the peak of the COVID-19 pandemic, clinicians reported using telemedicine for a median of 90% (IQR=50%–100%) of their patients on isotretinoin, and 57 respondents (81.4%) reported having patients use a home pregnancy test for iPLEDGE (Table 1). More than 75% (55/70) agreed that they would like to continue to use telemedicine for patients on isotretinoin, and more than 75% (54/70) agreed that they would like to continue using home pregnancy testing for patients outside the setting of the COVID-19 pandemic. More than 75% (54/70) agreed that telemedicine has increased access for their patients, and more than 70% (52/70) agreed that home pregnancy testing has increased access (Table 2). Clinicians agreed that they would be comfortable using home pregnancy testing for patients choosing long-acting reversible contraception (63/70 [90.0%]), combined oral contraceptives (61/69 [88.4%]), condoms (47/70 [67.1%]), or abstinence (48/70 [68.6%])(Table 3).

The most common concerns about home pregnancy testing were patient deception (39/70 [55.7%]), logistical challenges with reviewing results (19/70 [27.1%]), accuracy of the tests (19/70 [27.1%]), and patient ability to interpret tests appropriately (18/70 [25.7%]). To document testing results, 50 respondents (73.5%) would require a picture of results, 4 (5.9%) would accept a written report from the patient, and 14 (20.6%) would accept a verbal report from the patient (Table 2).

In this survey, clinicians expressed interest in continuing to use telemedicine and home pregnancy testing to care for patients with acne treated with isotretinoin. More than 75% agreed that these changes have increased access, which is notable, as several studies have identified that female and minority patients may face iPLEDGE-associated access barriers.3,4 Continuing to allow home pregnancy testing and explicitly permitting telemedicine can enable clinicians to provide patient-centered care.2

Although clinicians felt comfortable with a variety of contraceptive strategies, particularly those with high reported effectiveness,5 there were concerns about deception and interpretation of test results. Future studies are needed to identify optimal workflows for home pregnancy testing and whether patients should be required to provide a photograph of the results.

This survey study is limited by the possibility of sampling and response bias due to the low response rate. Although the use of national listservs was employed to maximize the generalizability of the results, given the response rate, future studies are needed to evaluate whether these findings generalize to other settings. In addition, given iPLEDGE-associated access barriers, further research is needed to examine how changes such as telemedicine and home pregnancy testing influence both access to isotretinoin and pregnancy prevention.

Acknowledgments—We would like to thank Stacey Moore (Montclair, New Jersey) and the American Acne & Rosacea Society for their help distributing the survey.

1. Kane S, Admani S. COVID-19 pandemic leading to the accelerated development of a virtual health model for isotretinoin. J Dermatol Nurses Assoc. 2021;13:54-57.

2. Barbieri JS, Frieden IJ, Nagler AR. Isotretinoin, patient safety, and patient-centered care-time to reform iPLEDGE. JAMA Dermatol. 2020;156:21-22.

3. Barbieri JS, Shin DB, Wang S, et al. Association of race/ethnicity and sex with differences in health care use and treatment for acne. JAMA Dermatol. 2020;156:312-319.

4. Charrow A, Xia FD, Lu J, et al. Differences in isotretinoin start, interruption, and early termination across race and sex in the iPLEDGE era. PLoS One. 2019;14:e0210445.

5. Barbieri JS, Roe AH, Mostaghimi A. Simplifying contraception requirements for iPLEDGE: a decision analysis. J Am Acad Dermatol. 2020;83:104-108.

PRACTICE POINTS

- The majority of clinicians report that the use of telemedicine and home pregnancy testing for iPLEDGE has improved access to care and that they would like to continue these practices.

- Continuing to allow home pregnancy testing and explicitly permitting telemedicine can enable clinicians to provide patient-centered care for patients treated with isotretinoin.

The toll of the unwanted pregnancy

In the wake of the Supreme Court’s June decision to repeal a federal right to abortion, many women will now be faced with the prospect of carrying an unwanted pregnancy to term.

One group of researchers has studied the fate of these women and their families for the last decade. Their findings show that women who were denied an abortion are worse off physically, mentally, and economically than those who underwent the procedure.

“There has been much hypothesizing about harms from abortion without considering what the consequences are when someone wants an abortion and can’t get one,” said Diana Greene Foster, PhD, professor of obstetrics and gynecology at University of California, San Francisco.

Dr. Foster leads the Turnaway Study, one of the first efforts to examine the physical and mental health effects of receiving or being denied abortions. The ongoing research also charts the economic and social outcomes of women and their families in either circumstance.

Dr. Foster and her colleagues have followed women through childbirth, examining their well-being through phone interviews months to years after the initial interviews.

The economic consequences of carrying an unwanted pregnancy are clear. Women who did not receive a wanted abortion were more likely to live under the poverty line and struggle to cover basic living expenses like food, housing, and transportation.

The physical toll is also significant.

A 2019 analysis from the Turnaway Study found that eight out of 1,132 participants died, two after delivery, during the five-year follow-up period – a far greater proportion than would be expected among women of reproductive age. The researchers also found that women who carry unwanted pregnancies have more comorbid conditions before and after delivery than other women.

Lauren J. Ralph, PhD, MPH, an epidemiologist and member of the Turnaway Study team, examined the physical well-being of women after delivering their unwanted pregnancies.

“They reported more chronic pain, more gestational hypertension, and were more likely to rate their health as fair or poor,” Dr. Ralph said. “Somewhat to our surprise, we also found that two women denied abortions died due to pregnancy-related causes. This is my biggest concern with the loss of abortion access, as all scientific evidence indicates we will see a rise in maternal deaths as a result.”

At least one preliminary study, released as a preprint and not yet peer reviewed, estimates that the number of women who will die each year from pregnancy complications will rise by 24%. For Black women, mortality could jump from 18% to 39% in every state, according to the researchers from the University of Colorado, Boulder.

State of denial

Regulations in each state determine who is able to obtain an abortion through a “gestational age limit” – how far along a woman is in her pregnancy, conventionally counted from the first day of her last menstrual period. Some of the women from the Turnaway Study were unable to receive an abortion because of how far along they were. Others were granted the abortion because they were just under their state’s limit.

Before the latest Supreme Court ruling, this limit was 20 weeks in most states. Now, the cutoff can be as little as 6 weeks – before many women know they are pregnant – or zero weeks, under the most restrictive laws.

Over 70% of women who are denied an abortion carry the pregnancy to term, according to Dr. Foster’s analysis.

Interviews with nearly 1,000 women – in both the first and second trimester of pregnancy – in the Turnaway Study who sought abortions at 30 abortion clinics around the country revealed the main reasons for seeking the procedure were (a) not being able to afford a child; (b) the pregnancy coming at the wrong time in life; or (c) the partner involved not being suitable.

According to the U.S. Centers for Disease Control and Prevention, 59.7% of women seeking an abortion in the United States are already mothers. Having an unplanned child results in dramatically worse economic circumstances for their other children, who become nearly four times more likely to live below the poverty line than their peers. They also experience slower physical and mental development as a result of the arrival of the new sibling.

The latest efforts by states to ban abortion could make the situation much worse, said Liza Fuentes, DrPH, senior research scientist at the Guttmacher Institute. “We will need further research on what it means for women to be denied care in the context of the new restrictions,” Dr. Fuentes told this news organization.

Researchers cannot yet predict how many women will be unable to obtain an abortion in the coming months. But John Donahue, PhD, JD, an economist and professor of law at Stanford (Calif.) University, estimated that state laws would prevent roughly one-third of the 1 million abortions per year based on 2021 figures.

Dr. Ralph and her colleagues with the Turnaway Study know that restricting access to abortions will not make the need for abortions disappear. Rather, women will be forced to travel, potentially long distances at significant cost, for the procedure or will seek medication abortion by mail through virtual clinics.

But Dr. Ralph said she’s concerned about women who live in areas where telehealth abortions are banned, or who discover their pregnancies late, as medical abortions are only recommended for women who are 10 weeks pregnant or less.

“They may look to self-source the medications online or elsewhere, potentially putting themself at legal risk,” she said. “And, as my research has shown, others may turn to self-managing an abortion with herbs, other drugs or medications, or physical methods like hitting themselves in the abdomen; with this they put themselves at both legal and potentially medical risk.”

Constance Bohon, MD, an ob.gyn. in Washington, D.C., said further research should track what happens to women if they’re forced to leave a job to care for another child.

“Many of these women live paycheck to paycheck and cannot afford the cost of an additional child,” Dr. Bohon said. “They may also need to rely on social service agencies to help them find food and housing.”

Dr. Fuentes said she hopes the Turnaway Study will inspire other researchers to examine laws that outlaw abortion and the corresponding long-term effects on women.

“From a medical and a public health standpoint, these laws are unjust,” Dr. Fuentes said in an interview. “They’re not grounded in evidence, and they incur great costs not just to pregnant people but their families and their communities as well.”

A version of this article first appeared on Medscape.com.

No adverse impact of obesity in biologic-treated IBD

Patients with both inflammatory bowel disease (IBD) and obesity starting on new biologic therapies do not face an increased risk for hospitalization, IBD-related surgery, or serious infection, reveals a multicenter U.S. study published online in American Journal of Gastroenterology.

“Our findings were a bit surprising, since prior studies had suggested higher clinical disease activity and risk of flare and lower rates of endoscopic remission in obese patients treated with biologics,” Siddharth Singh, MD, MS, director of the IBD Center at the University of California, San Diego, told this news organization.

“However, in this study we focused on harder outcomes, including risk of hospitalization and surgery, and did not observe any detrimental effect,” he said.

Based on the findings, Dr. Singh believes that biologics are “completely safe and effective to use in obese patients.”

He clarified, however, that “examining the overall body of evidence, I still think obesity results in more rapid clearance of biologics, which negatively impacts the likelihood of achieving symptomatic and endoscopic remission.”

“Hence, there should be a low threshold to monitor and optimize biologic drug concentrations in obese patients. I preferentially use biologics that are dosed based on body weight in patients with class II or III obesity,” he said.

Research findings

Dr. Singh and colleagues write that, given that between 15% and 45% of patients with IBD are obese and a further 20%-40% are overweight, obesity is an “increasingly important consideration” in its management.

It is believed that obesity, largely via visceral adiposity, has a negative impact on IBD via increased production of adipokines, chemokines, and cytokines, such as tumor necrosis factor (TNF) alpha and interleukin-6, thus affecting treatment response as well as increasing the risk for complications and infections.

However, studies of the association between obesity and poorer treatment response, both large and small, have yielded conflicting results, potentially owing to methodological limitations.

To investigate further, Dr. Singh and colleagues gathered electronic health record data from five health systems in California on adults with IBD who were new users of TNF-alpha antagonists, or the monoclonal antibodies vedolizumab or ustekinumab, between Jan. 1, 2010, and June 30, 2017.

World Health Organization definitions were used to classify the patients as having normal BMI, overweight, or obesity, and the risk for all-cause hospitalization, IBD-related surgery, or serious infection was compared between the groups.

The team reviewed the cases of 3,038 patients with IBD, of whom 31.1% had ulcerative colitis. Among the participants, 28.2% were classified as overweight and 13.7% as obese. TNF-alpha antagonists were used by 76.3% of patients.

Patients with obesity were significantly older, were more likely to be of Hispanic ethnicity, had a higher burden of comorbidities, and were more likely to have elevated C-reactive protein levels at baseline.

However, there were no significant differences between obese and nonobese patients in terms of IBD type, class of biologic prescribed, prior surgery, or prior biologic exposure.

Within 1 year of starting a new biologic therapy, 22.9% of patients required hospitalization, whereas 3.3% required surgery and 5.8% were hospitalized with a serious infection.

Cox proportional hazard analyses showed that obesity was not associated with an increased risk for hospitalization versus normal body mass index (adjusted hazard ratio, 0.90; 95% confidence interval, 0.72-1.13), nor was it associated with IBD-related surgery (aHR, 0.62; 95% CI, 0.31-1.22) or serious infection (aHR, 1.11; 95% CI, 0.73-1.71).
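One way to read these estimates is to check whether each 95% confidence interval includes 1, the value indicating no difference in hazard. The sketch below uses only the figures reported above; the function names are hypothetical, and the back-calculated standard error assumes the usual normal approximation on the log scale, which the article does not state.

```python
from math import log, sqrt

# (adjusted hazard ratio, lower 95% bound, upper 95% bound) as reported
results = {
    "all-cause hospitalization": (0.90, 0.72, 1.13),
    "IBD-related surgery": (0.62, 0.31, 1.22),
    "serious infection": (1.11, 0.73, 1.71),
}


def includes_null(lower, upper):
    """True when the interval contains 1, i.e. no detectable difference in hazard."""
    return lower <= 1.0 <= upper


def se_log_hr(lower, upper, z=1.96):
    """Approximate standard error of log(HR), assuming a symmetric interval on the log scale."""
    return (log(upper) - log(lower)) / (2 * z)


def geometric_midpoint(lower, upper):
    """Midpoint of the interval on the log scale; should sit close to the point estimate."""
    return sqrt(lower * upper)


for outcome, (hr, lower, upper) in results.items():
    print(f"{outcome}: aHR {hr:.2f}, CI includes 1: {includes_null(lower, upper)}, "
          f"SE(log HR) ~ {se_log_hr(lower, upper):.2f}, "
          f"log-scale midpoint ~ {geometric_midpoint(lower, upper):.2f}")
```

All three intervals span 1, which is why the authors describe obesity as not associated with these outcomes; the comparatively wide interval for surgery reflects how few surgical events occurred.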

The results were similar when the patients were stratified by IBD type and index biologic therapy, the researchers write.

When analyzed as a continuous variable, BMI was associated with a lower risk for hospitalization (aHR, 0.98 per 1 kg/m²; P = .044) but not with IBD-related surgery or serious infection.
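A per-unit hazard ratio compounds multiplicatively across larger differences in BMI. The short sketch below illustrates that arithmetic; it assumes the log-linear relationship implied by a Cox model with BMI as a continuous covariate, and the function name is hypothetical.

```python
def hr_for_difference(hr_per_unit, units):
    """Hazard ratio implied for a difference of `units`, assuming a log-linear effect."""
    return hr_per_unit ** units


# Reported estimate: aHR 0.98 per 1 kg/m2 for hospitalization.
for bmi_difference in (1, 5, 10):
    implied = hr_for_difference(0.98, bmi_difference)
    print(f"{bmi_difference} kg/m2 higher BMI: implied aHR ~ {implied:.2f}")
```

Under that assumption, a 5 kg/m² difference corresponds to an implied hazard ratio of about 0.90, which matches the point estimate reported for obesity versus normal BMI.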

Reassuring results for the standard of care

Discussing their findings, the authors note that “the discrepancy among studies potentially reflects the shortcomings of overall obesity measured using BMI to capture clinically meaningful adiposity.”

“A small but growing body of literature suggests visceral adipose tissue is a potentially superior prognostic measure of adiposity and better predicts adverse outcomes in IBD.”

Dr. Singh said that it would be “very interesting” to examine the relationship between visceral adiposity, as inferred from waist circumference, and IBD outcomes.

Approached for comment, Stephen B. Hanauer, MD, Clifford Joseph Barborka Professor, Northwestern University Feinberg School of Medicine, Chicago, said, “At the present time, there are no new clinical implications based on this study.”

He said in an interview that it “does not require any change in the current standard of care but rather attempts to reassure that the standard of care does not change for obese patients.”

“With that being said, the standard of care may require dosing adjustments for patients based on weight, as is already the case for infliximab/ustekinumab, and monitoring to treat to target in obese patients as well as in normal or underweight patients,” Dr. Hanauer concluded.

The study was supported by the ACG Junior Faculty Development Award and the Crohn’s and Colitis Foundation Career Development Award to Dr. Singh. Dr. Singh is supported by the National Institute of Diabetes and Digestive and Kidney Diseases and reports relationships with AbbVie, Janssen, and Pfizer. The other authors report numerous financial relationships. Dr. Hanauer reports no relevant financial relationships.

A version of this article first appeared on Medscape.com.

Patients with both inflammatory bowel disease (IBD) and obesity starting on new biologic therapies do not face an increased risk for hospitalization, IBD-related surgery, or serious infection, reveals a multicenter U.S. study published online in American Journal of Gastroenterology.

“Our findings were a bit surprising, since prior studies had suggested higher clinical disease activity and risk of flare and lower rates of endoscopic remission in obese patients treated with biologics,” Siddharth Singh, MD, MS, director of the IBD Center at the University of California, San Diego, told this news organization.

“However, in this study we focused on harder outcomes, including risk of hospitalization and surgery, and did not observe any detrimental effect,” he said.

Based on the findings, Dr. Singh believes that biologics are “completely safe and effective to use in obese patients.”

He clarified, however, that “examining the overall body of evidence, I still think obesity results in more rapid clearance of biologics, which negatively impacts the likelihood of achieving symptomatic and endoscopic remission.”

“Hence, there should be a low threshold to monitor and optimize biologic drug concentrations in obese patients. I preferentially use biologics that are dosed based on body weight in patients with class II or III obesity,” he said.

Research findings

Dr. Singh and colleagues write that, given that between 15% and 45% of patients with IBD are obese and a further 20%-40% are overweight, obesity is an “increasingly important consideration” in IBD management.

It is believed that obesity, largely via visceral adiposity, has a negative impact on IBD via increased production of adipokines, chemokines, and cytokines, such as tumor necrosis factor (TNF) alpha and interleukin-6, thus affecting treatment response as well as increasing the risk for complications and infections.

However, studies of the association between obesity and poorer treatment response, both large and small, have yielded conflicting results, potentially owing to methodological limitations.

To investigate further, Dr. Singh and colleagues gathered electronic health record data from five health systems in California on adults with IBD who were new users of TNF-alpha antagonists, or the monoclonal antibodies vedolizumab or ustekinumab, between Jan. 1, 2010, and June 30, 2017.

World Health Organization definitions were used to classify the patients as having normal BMI, overweight, or obesity, and the risk for all-cause hospitalization, IBD-related surgery, or serious infection was compared between the groups.
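The WHO grouping described above can be sketched in a few lines of Python. This is an illustration only, not the study's code: the function name `who_bmi_category` and the example weights and heights are hypothetical, and the cutoffs are the standard WHO adult thresholds of 18.5, 25, and 30 kg/m².

```python
def who_bmi_category(weight_kg: float, height_m: float) -> str:
    """Classify an adult by BMI using the standard WHO cutoffs (hypothetical helper)."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    bmi = weight_kg / height_m ** 2  # BMI in kg/m^2
    if bmi < 18.5:
        return "underweight"
    if bmi < 25:
        return "normal"
    if bmi < 30:
        return "overweight"
    return "obesity"


# Hypothetical example patients, all 1.75 m tall
for weight in (70, 80, 95):
    print(weight, "kg ->", who_bmi_category(weight, 1.75))
```

The study compared outcomes across the normal, overweight, and obesity groups; the underweight branch is included here only so the function covers every input.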

The team reviewed the cases of 3,038 patients with IBD, of whom 31.1% had ulcerative colitis. Among the participants, 28.2% were classified as overweight and 13.7% as obese. TNF-alpha antagonists were used by 76.3% of patients.

Patients with obesity were significantly older, were more likely to be of Hispanic ethnicity, had a higher burden of comorbidities, and were more likely to have elevated C-reactive protein levels at baseline.

However, there were no significant differences between obese and nonobese patients in terms of IBD type, class of biologic prescribed, prior surgery, or prior biologic exposure.

Within 1 year of starting a new biologic therapy, 22.9% of patients required hospitalization, whereas 3.3% required surgery and 5.8% were hospitalized with a serious infection.

Cox proportional hazard analyses showed that obesity was not associated with an increased risk for hospitalization versus normal body mass index (adjusted hazard ratio, 0.90; 95% confidence interval, 0.72-1.13), nor was it associated with IBD-related surgery (aHR, 0.62; 95% CI, 0.31-1.22) or serious infection (aHR, 1.11; 95% CI, 0.73-1.71).
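One way to read these intervals: when a 95% confidence interval for a hazard ratio spans 1.0, the estimate cannot be distinguished from no effect at the conventional 5% level. The sketch below applies that check to the figures reported in this article; the helper name `ci_includes_null` is hypothetical and not taken from the study.

```python
def ci_includes_null(lower: float, upper: float, null_value: float = 1.0) -> bool:
    """Return True if the confidence interval contains the null value (HR = 1)."""
    return lower <= null_value <= upper


# 95% confidence intervals for obesity vs. normal BMI, as reported in the article
reported = {
    "hospitalization": (0.72, 1.13),
    "IBD-related surgery": (0.31, 1.22),
    "serious infection": (0.73, 1.71),
}

for outcome, (lower, upper) in reported.items():
    verdict = "not significant" if ci_includes_null(lower, upper) else "significant"
    print(f"{outcome}: 95% CI {lower}-{upper} -> {verdict}")
```

All three intervals contain 1.0, which is consistent with the authors' conclusion that obesity was not associated with these outcomes.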

The results were similar when the patients were stratified by IBD type and index biologic therapy, the researchers write.

When analyzed as a continuous variable, BMI was associated with a lower risk for hospitalization (aHR, 0.98 per 1 kg/m²; P = .044) but not with IBD-related surgery or serious infection.
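To put the per-unit estimate in perspective, a hazard ratio for a continuous covariate compounds multiplicatively across units. The sketch below assumes BMI entered the Cox model as a single linear term, which the article does not state explicitly; the function name and the 5-unit difference are hypothetical choices for illustration.

```python
def hazard_ratio_for_difference(hr_per_unit: float, difference: float) -> float:
    """Scale a per-unit hazard ratio to a larger covariate difference.

    Assumes a log-linear effect, so the ratio compounds: HR ** difference.
    """
    return hr_per_unit ** difference


# Reported aHR of 0.98 per 1 kg/m^2, applied to a hypothetical 5 kg/m^2 difference
hr_5_units = hazard_ratio_for_difference(0.98, 5)
print(f"aHR for a 5 kg/m^2 higher BMI: {hr_5_units:.3f}")
```

Under that assumption, a 5 kg/m² higher BMI corresponds to a hazard ratio of roughly 0.90 for hospitalization, a modest association.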

Reassuring results for the standard of care

Discussing their findings, the authors note that “the discrepancy among studies potentially reflects the shortcomings of overall obesity measured using BMI to capture clinically meaningful adiposity.”

“A small but growing body of literature suggests visceral adipose tissue is a potentially superior prognostic measure of adiposity and better predicts adverse outcomes in IBD.”

Dr. Singh said that it would be “very interesting” to examine the relationship between visceral adiposity, as inferred from waist circumference, and IBD outcomes.

Approached for comment, Stephen B. Hanauer, MD, Clifford Joseph Barborka Professor, Northwestern University Feinberg School of Medicine, Chicago, said, “At the present time, there are no new clinical implications based on this study.”

He said in an interview that it “does not require any change in the current standard of care but rather attempts to reassure that the standard of care does not change for obese patients.”

“With that being said, the standard of care may require dosing adjustments for patients based on weight, as is already the case for infliximab/ustekinumab, and monitoring to treat to target in obese patients as well as in normal or underweight patients,” Dr. Hanauer concluded.

The study was supported by the ACG Junior Faculty Development Award and the Crohn’s and Colitis Foundation Career Development Award to Dr. Singh. Dr. Singh is supported by the National Institute of Diabetes and Digestive and Kidney Diseases and reports relationships with AbbVie, Janssen, and Pfizer. The other authors report numerous financial relationships. Dr. Hanauer reports no relevant financial relationships.

A version of this article first appeared on Medscape.com.

What’s Diet Got to Do With It? Basic and Clinical Science Behind Diet and Acne

The current understanding of the pathogenesis of acne includes altered keratinization, follicular obstruction, overproduction of sebum, and microbial colonization (Cutibacterium acnes) of the pilosebaceous unit resulting in perifollicular inflammation. 1 A deeper dive into the hormonal and molecular drivers of acne has implicated insulin, insulinlike growth factor 1 (IGF-1), corticotropin-releasing hormone, the phosphoinositide 3-kinase/Akt pathway, the mitogen-activated protein kinase pathway, and the nuclear factor κB pathway. 2-4 A Western diet composed of high glycemic index foods, carbohydrates, and dairy enhances the production of insulin and IGF-1. A downstream effect of excess insulin and IGF-1 is overactivity of the mammalian target of rapamycin complex 1 (mTORC1), a major promoter of cellular growth and proliferation that primarily is regulated through nutrient availability. 5 This article will review our understanding of the impact of the Western diet on acne pathogenesis and highlight the existing evidence behind the contributions of the mTORC1 pathway in this process. Although quality randomized controlled trials analyzing these effects are limited, dermatologists should understand the existing evidence supporting the potential impacts of diet on acne.

The Western Diet