Commentary: Migraine and the relationship to gynecologic conditions, May 2023

The theme of this month's round-up is women's health, specifically as it relates to migraine. Three recent studies have highlighted the connection between estrogen and migraine, in terms of the potential increase in risk for certain conditions, such as gestational hypertension and endometriosis, and the use of potential therapies, such as calcitonin gene-related peptide (CGRP) antagonist medications to treat menstrual migraine.

Although most practitioners know that there is a deep connection between vascular risk and migraine, most are unfamiliar with the specific interplay between these two conditions. Antihypertensive medications are common preventive treatments for migraine, and migraine itself has been associated with an increased risk for specific vascular issues in pregnancy, most notably venous sinus thrombosis. Crowe and colleagues specifically looked at whether women with migraine experience a higher risk for hypertensive disorders of pregnancy.

This was a UK-based prospective cohort study using a large longitudinal database called the Clinical Practice Research Datalink. Over 1 million live-born or stillborn deliveries were analyzed from 1993 through 2020. The data were linked to diagnosis and prescription codes for migraine that were filled or documented before 20 weeks of gestation and compared with diagnosis codes for hypertensive disorders that occurred from week 20 through the pregnancy and delivery. Regression models were then used to estimate risk ratios and confidence intervals (CIs). Only singleton pregnancies were counted because multiple pregnancies are already associated with a higher risk for most other vascular conditions.

Any history of migraine prior to pregnancy was associated with an increased risk for gestational hypertension, eclampsia, and preeclampsia (relative risk 1.17). The risk was greatest with higher migraine frequency. Migraine that persisted into the first trimester was associated with a relative risk of 1.84. The use of migraine medications, especially vasoconstrictive-type medications, was also associated with a higher risk compared with women without migraine.

Women with migraine frequently present before family planning to discuss the potential risks and options of migraine treatment during pregnancy. In addition to discussing the most appropriate preventive and acute medications, it would be appropriate also to discuss any potential red flag symptoms that may occur during pregnancy. This discussion should include hypertensive disorders of pregnancy as per this study.

Since the advent of CGRP antagonist treatments for migraine, many practitioners and patients have been curious to know whether specific features of migraine are better treated with this class of medication. There are now both acute and preventive CGRP antagonists, both as small molecule agents and monoclonal antibodies (mAb). Menstrual migraines specifically can be a more difficult-to-treat subtype, and often when other triggers are negated, hormonal fluctuation can still be a significant problem for many patients. Verhagen and colleagues set out to determine whether CGRP mAb are more or less effective for menstrually associated migraine.

This analysis was post hoc, using data from a single-arm study investigating the efficacy of two of the CGRP mAb medications: erenumab and fremanezumab. Patients were included if they had a history of migraine with > 8 monthly migraine days and at least one antihypertensive or antiepileptic preventive treatment for migraine had previously failed. Any other prophylactic medications were tapered before starting this trial; patients were given a validated electronic diary, and adherence to this diary had to be > 80%. Women were also excluded if they did not have regular menses (for instance, if they were on continuous hormonal contraception) or they were postmenopausal. Logistic regression was used to compare the preventive effect of these medications on perimenstrual and non-perimenstrual migraine attacks.

A total of 45 women were included in this analysis. The relative reduction in total monthly migraine days was 31.4%: 28% for days during and around menses and 32% for days at other times of the menstrual cycle. Sensitivity analysis showed no significant difference between these two periods of time, and the ratio remained statistically similar as well.

It appears that the relative reduction in monthly migraine days did not fluctuate when the patient was treated with a CGRP antagonist mAb. Although other classes of preventive medication, specifically onabotulinumtoxinA (Botox), may affect menstrually associated migraine less potently, it appears that the CGRP antagonist class may be just as effective regardless of the underlying migraine trigger. It would definitely be worth considering a CGRP antagonist trial, or the addition of a CGRP mAb, if menstrual migraine remains significant despite otherwise effective preventive treatment.

Migraine is strongly affected by fluctuations in estrogen, and women with endometriosis often experience headaches associated with their severe attacks. Pasquini and colleagues specifically looked to see if the headache associated with endometriosis could be better diagnosed. Specifically, were these women experiencing migraine or another headache disorder?

This was a consecutive case-control series of 131 women admitted to a specialty endometriosis clinic. They were given a validated headache questionnaire that was reviewed by a neurologist to determine a diagnosis of migraine vs a diagnosis of another headache disorder. The case group included women with a history of endometriosis who were previously diagnosed with migraine, while the control group consisted of women with endometriosis only who did not have a history of headache.

Diagnosis of migraine was made in 53.4% of all patients: 18.6% of those experienced pure menstrual migraine (defined as migraine only occurring perimenstrually), 46% had some menstrually associated migraine symptoms, and 36% had purely non-menstrual migraine. Painful periods and dysuria were more frequent in patients with endometriosis and migraine compared with those without migraine. Other menstrually related factors, including the duration of endometriosis, the phenotype of endometriosis, the presence of other systemic comorbidities, and heavy menstrual bleeding, did not seem to differ significantly between the migraine and non-migraine groups.

Women of reproductive age are consistently the group seen most often for migraine and other headache conditions. Much of this is related to menstrual migraine and the effect that hormonal fluctuation has on migraine frequency and severity. Most practitioners work closely with their patients' gynecologists to determine which hormonal treatments and migraine treatments are most appropriate and safe for each individual situation. This study sheds light on the particular phenotypes of headache pain and the specific headache diagnosis that most women with endometriosis experience.

Commentary: New genetic information and treatments for DLBCL, May 2023

Diffuse large B-cell lymphoma (DLBCL) is both a clinically and molecularly heterogeneous disease. The International Prognostic Index (IPI), which is based on clinical and laboratory variables, is still used to delineate risk at the time of diagnosis. DLBCL can be further classified into either germinal center B-cell (GCB) or activated B-cell (ABC) subtype, also known as the cell-of-origin (COO) classification, which has been prognostic in prior studies.1 COO is based on gene expression profiling (GEP), though it can be estimated by immunohistochemistry.

Although these classifications are available, treatment of DLBCL has largely remained uniform over the past few decades. Despite encouraging preclinical data and early trials, large randomized studies had not demonstrated an advantage of rituximab, cyclophosphamide, doxorubicin hydrochloride, vincristine sulfate, and prednisone (R-CHOP) plus X over R-CHOP alone.2,3 The REMoDL-B trial included 801 adult patients with DLBCL, including patients with ABC, GCB, or molecular high grade (MHG) classification by GEP. Patients received one cycle of R-CHOP and were randomly assigned to R-CHOP alone (n = 407) or bortezomib–R-CHOP (n = 394) for cycles 2-6. Initial reports did not demonstrate any clear benefit of the addition of bortezomib.4 More recently, however, 5-year follow-up data demonstrate that the addition of bortezomib confers an advantage over R-CHOP in patients with ABC and MHG DLBCL (Davies et al). Bortezomib–R-CHOP vs R-CHOP significantly improved 60-month progression-free survival (PFS) in the ABC (adjusted hazard ratio [aHR] 0.65; P = .041) and MHG (aHR 0.46; P = .011) groups and overall survival (OS) in the ABC group (aHR 0.58; P = .032). The GCB group showed no significant difference in PFS or OS.

Despite the results of REMoDL-B, it is unlikely that this study will change practice. GEP is not readily available, and with the approval of polatuzumab (pola)–R-CHP, based on the results of the POLARIX trial, there is a new option available for patients with newly diagnosed DLBCL with a high IPI. A recent meta-analysis of 12 randomized controlled trials (Sheng et al) involved 8376 patients with previously untreated ABC- or GCB-type DLBCL who received pola–R-CHP or other regimens. This study showed that pola–R-CHP prolonged PFS in patients with ABC-type DLBCL compared with bortezomib–R-CHOP (hazard ratio [HR] 0.52; P = .02); ibrutinib–R-CHOP (HR 0.43; P = .001); lenalidomide–R-CHOP (HR 0.51; P = .009); obinutuzumab–CHOP (HR 0.46; P = .008); R-CHOP (HR 0.40; P < .001); and bortezomib, rituximab, and cyclophosphamide (HR 0.44; P = .07). Pola–R-CHP had no PFS benefit in patients with GCB-type DLBCL. Although it is difficult to directly compare trials, these data suggest that pola–R-CHP is active in ABC subtype DLBCL.

Together, these trials suggest that there still may be a role for more personalized therapy in DLBCL, though there may be room for improvement. Recent studies have suggested more complex genomic underpinnings in DLBCL beyond COO, which will hopefully be studied in the context of DLBCL trials.5

In the second-line setting, patients with primary refractory or early relapse of DLBCL now have the option of anti-CD19 chimeric antigen receptor (CAR) T-cell therapy, based on the results of the ZUMA-7 and TRANSFORM studies.6,7 Lisocabtagene maraleucel (liso-cel) was also found to have a manageable safety profile in older patients with large B-cell lymphoma who were not transplant candidates in the PILOT study, leading to approval in this setting.8 More recently, axicabtagene ciloleucel (axi-cel) was found to be an effective second-line therapy with a manageable safety profile for patients aged ≥ 65 years as well (Westin et al). These findings are from a preplanned analysis of 109 patients aged ≥ 65 years from ZUMA-7 who were randomly assigned to receive second-line axi-cel (n = 51) or standard of care (SOC) (n = 58; two or three cycles of chemoimmunotherapy followed by high-dose chemotherapy with autologous stem-cell transplantation). At a median follow-up of 24.3 months, the median event-free survival was significantly longer with axi-cel vs SOC (21.5 vs 2.5 months; HR 0.276; descriptive P < .0001). Rates of grade 3 or higher treatment-emergent adverse events were 94% and 82% with axi-cel and SOC, respectively. Although these patients were considered transplant eligible, this study demonstrates that axi-cel can be safely administered to older patients.

Additional References

1. Rosenwald A, Wright G, Chan WC, et al; Lymphoma/Leukemia Molecular Profiling Project. The use of molecular profiling to predict survival after chemotherapy for diffuse large-B-cell lymphoma. N Engl J Med. 2002;346:1937-1947. doi: 10.1056/NEJMoa012914

2. Younes A, Sehn LH, Johnson P, et al; PHOENIX investigators. Randomized phase III trial of ibrutinib and rituximab plus cyclophosphamide, doxorubicin, vincristine, and prednisone in non-germinal center B-cell diffuse large B-cell lymphoma. J Clin Oncol. 2019;37:1285-1295. doi: 10.1200/JCO.18.02403

3. Nowakowski GS, Chiappella A, Gascoyne RD, et al; ROBUST Trial Investigators. ROBUST: a phase III study of lenalidomide plus R-CHOP versus placebo plus R-CHOP in previously untreated patients with ABC-type diffuse large B-cell lymphoma. J Clin Oncol. 2021;39:1317-1328. doi: 10.1200/JCO.20.01366

4. Davies A, Cummin TE, Barrans S, et al. Gene-expression profiling of bortezomib added to standard chemoimmunotherapy for diffuse large B-cell lymphoma (REMoDL-B): an open-label, randomised, phase 3 trial. Lancet Oncol. 2019;20:649-662. doi: 10.1016/S1470-2045(18)30935-5

5. Crombie JL, Armand P. Diffuse large B-cell lymphoma's new genomics: the bridge and the chasm. J Clin Oncol. 2020;38:3565-3574. doi: 10.1200/JCO.20.01501

6. Locke FL, Miklos DB, Jacobson CA, et al; All ZUMA-7 Investigators and Contributing Kite Members. Axicabtagene ciloleucel as second-line therapy for large B-cell lymphoma. N Engl J Med. 2022;386:640-654. doi: 10.1056/NEJMoa2116133

7. Abramson JS, Solomon SR, Arnason JE, et al; TRANSFORM Investigators. Lisocabtagene maraleucel as second-line therapy for large B-cell lymphoma: primary analysis of phase 3 TRANSFORM study. Blood. 2023;141:1675-1684. doi: 10.1182/blood.2022018730

8. Sehgal A, Hoda D, Riedell PA, et al. Lisocabtagene maraleucel as second-line therapy in adults with relapsed or refractory large B-cell lymphoma who were not intended for haematopoietic stem cell transplantation (PILOT): an open-label, phase 2 study. Lancet Oncol. 2022;23:1066-1077. doi: 10.1016/S1470-2045(22)00339-4

Diffuse large B-cell lymphoma (DLBCL) is both a clinically and molecularly heterogenous disease. The International Prognostic Index (IPI), which is based on clinical and laboratory variables, is still currently used to delineate risk at the time of diagnosis. Diffuse large B-cell lymphoma can also further be classified into either germinal center B-cell (GCB) or activated B-cell (ABC) subtype, also known as the cell-of-origin classification (COO), which has been prognostic in prior studies.1 COO is based on gene expression profiling (GEP), though it can be estimated by immunohistochemistry.

Although these classifications are available, treatment of DLBCL has largely remained uniform over the past few decades. Despite encouraging preclinical data and early trials, large randomized studies had not demonstrated an advantage of rituximab, cyclophosphamide, doxorubicin hydrochloride, vincristine sulfate, and prednisone (R-CHOP) plus X over R-CHOP alone.2,3 The REMoDL-B trial, which included 801 adult patients with DLBCL, including patients with ABC, GCB, or molecular high grade (MHG) classification by GEP. Patients received one cycle of R-CHOP and were randomly assigned to R-CHOP (n = 407) alone or bortezomib–R-CHOP (n = 394) for cycles 2-6. Initial reports did not demonstrate any clear benefit of the addition of bortezomib.4 More recently, however, 5-year follow-up data demonstrate that the addition of bortezomib confers an advantage over R-CHOP in patients with ABC and MHG DLBCL (Davies et al). Bortezomib–R-CHOP vs R-CHOP significantly improved 60-month progression-free survival (PFS) in the ABC (adjusted hazard ratio [aHR] 0.65; P = .041) and MHG (aHR 0.46; P = .011) groups and overall survival (OS) in the ABC group (aHR 0.58; P = .032). The GCB group showed no significant difference in PFS or OS.

Despite the results of REMoDL-B, it is unlikely that this study will change practice. GEP is not readily available and with the approval of polatuzumab (pola)–R-CHP, based on the results of POLARIX trial, there is new option available for patients with newly diagnosed DLBCL with a high IPI. A recent meta-analysis of 12 randomized controlled trials (Sheng et al) involving 8376 patients with previously untreated ABC- or GCB-type DLBCL who received pola–R-CHP or other regimens was also recently performed. This study showed that pola–R-CHP prolonged PFS in patients with ABC-type DLBCL compared with bortezomib–R-CHOP (hazard ratio [HR] 0.52; P = .02); ibrutinib–R-CHOP (HR 0.43; P = .001); lenalidomide–R-CHOP (HR 0.51; P = .009); Obinutuzumab–CHOP (HR 0.46; P = .008); R-CHOP (HR 0.40; P < .001); and bortezomib, rituximab, and cyclophosphamide (HR 0.44; P = .07). Pola–R-CHP had no PFS benefit in patients with GCB-type DLBCL. Although it is difficult to directly compare trials, these data suggest that pola–R-CHP is active in ABC subtype DLBCL.

Together, these trials suggest that there still may be a role for more personalized therapy in DLBCL, though there may be room for improvement. Recent studies have suggested more complex genomic underpinnings in DLBCL beyond COO, which will hopefully be studied in the context of DLBCL trials.5

In the second line, patients with primary refractory or early relapse of DLBCL now have the option of anti-CD19 chimeric antigen receptor (CAR) T-cell therapy, based on the results of the ZUMA-7 and TRANSFORM studies.6,7 Lisocabtagene maraleucel (liso-cel) was also found to have a manageable safety profile in older patients with large B-cell lymphoma who were not transplant candidates in the PILOT study, leading to approval in this setting.8 More recently, axicabtagene ciloleucel (axi-cel) was found to be an effective second-line therapy with a manageable safety profile for patients aged ≥ 65 years as well (Westin et al). These findings are from a preplanned analysis of 109 patients aged ≥ 65 years from ZUMA-7 who were randomly assigned to receive second-line axi-cel (n = 51) or standard of care (SOC) (n = 58; two or three cycles of chemoimmunotherapy followed by high-dose chemotherapy with autologous stem-cell transplantation). At a median follow-up of 24.3 months, the median event-free survival was significantly longer with axi-cel vs SOC; 21.5 vs 2.5 months; HR, 0.276; descriptive P < .0001). Rates of grade 3 or higher treatment-emergent adverse events were 94% and 82% with axi-cel and SOC, respectively. Although these patients were considered transplant eligible, this study demonstrates that axi-cel can be safely administered to older patients.

Additional References

1. Rosenwald A, Wright G, Chan WC, et al; Lymphoma/Leukemia Molecular Profiling Project. The use of molecular profiling to predict survival after chemotherapy for diffuse large-B-cell lymphoma. N Engl J Med. 2002;346:1937-1947. doi: 10.1056/NEJMoa012914

2. Younes A, Sehn LH, Johnson P, et al; PHOENIX investigators. Randomized phase III trial of ibrutinib and rituximab plus cyclophosphamide, doxorubicin, vincristine, and prednisone in non-germinal center B-cell diffuse large B-cell lymphoma. J Clin Oncol. 2019;37:1285-1295. doi: 10.1200/JCO.18.02403

3. Nowakowski GS, Chiappella A, Gascoyne RD, et al; ROBUST Trial Investigators. ROBUST: a phase III study of lenalidomide plus R-CHOP versus placebo plus R-CHOP in previously untreated patients with ABC-type diffuse large B-cell lymphoma. J Clin Oncol. 2021;39:1317-1328. doi: 10.1200/JCO.20.01366

4. Davies A, Cummin TE, Barrans S, et al. Gene-expression profiling of bortezomib added to standard chemoimmunotherapy for diffuse large B-cell lymphoma (REMoDL-B): an open-label, randomised, phase 3 trial. Lancet Oncol. 2019;20:649-662. doi: 10.1016/S1470-2045(18)30935-5

5. Crombie JL, Armand P. Diffuse large B-cell lymphoma's new genomics: the bridge and the chasm. J Clin Oncol. 2020;38:3565-3574. doi: 10.1200/JCO.20.01501

6. Locke FL, Miklos DB, Jacobson CA, et al; All ZUMA-7 Investigators and Contributing Kite Members. Axicabtagene ciloleucel as second-line therapy for large B-cell lymphoma. N Engl J Med. 2022;386:640-654. doi: 10.1056/NEJMoa2116133

7. Abramson JS, Solomon SR, Arnason JE, et al; TRANSFORM Investigators. Lisocabtagene maraleucel as second-line therapy for large B-cell lymphoma: primary analysis of phase 3 TRANSFORM study. Blood. 2023;141:1675-1684. doi: 10.1182/blood.2022018730

8. Sehgal A, Hoda D, Riedell PA, et al. Lisocabtagene maraleucel as second-line therapy in adults with relapsed or refractory large B-cell lymphoma who were not intended for haematopoietic stem cell transplantation (PILOT): an open-label, phase 2 study. Lancet Oncol. 2022;23:1066-1077. doi: 10.1016/S1470-2045(22)00339-4

Surprising brain activity moments before death

This transcript has been edited for clarity.

Welcome to Impact Factor, your weekly dose of commentary on a new medical study. I’m Dr. F. Perry Wilson of the Yale School of Medicine.

All the participants in the study I am going to tell you about this week died. And three of them died twice. But their deaths provide us with a fascinating window into the complex electrochemistry of the dying brain. What we might be looking at, indeed, is the physiologic correlate of the near-death experience.

The concept of the near-death experience is culturally ubiquitous. And though the content seems to track along culture lines – Western Christians are more likely to report seeing guardian angels, while Hindus are more likely to report seeing messengers of the god of death – certain factors seem to transcend culture: an out-of-body experience; a feeling of peace; and, of course, the light at the end of the tunnel.

As a materialist, I won’t discuss the possibility that these commonalities reflect some metaphysical structure to the afterlife. More likely, it seems to me, is that the commonalities result from the fact that the experience is mediated by our brains, and our brains, when dying, may be more alike than different.

We are talking about this study, appearing in the Proceedings of the National Academy of Sciences, by Jimo Borjigin and her team.

Dr. Borjigin studies the neural correlates of consciousness, perhaps one of the biggest questions in all of science today. To wit,

The study in question follows four unconscious patients – comatose patients, really – as life-sustaining support was withdrawn, up until the moment of death. Three had suffered severe anoxic brain injury in the setting of prolonged cardiac arrest. Though the heart was restarted, the brain damage was severe. The fourth had a large brain hemorrhage. All four patients were thus comatose and, though not brain-dead, unresponsive – with the lowest possible Glasgow Coma Scale score. No response to outside stimuli.

The families had made the decision to withdraw life support – to remove the breathing tube – but agreed to enroll their loved one in the study.

The team applied EEG leads to the head, EKG leads to the chest, and other monitoring equipment to observe the physiologic changes that occurred as the comatose and unresponsive patient died.

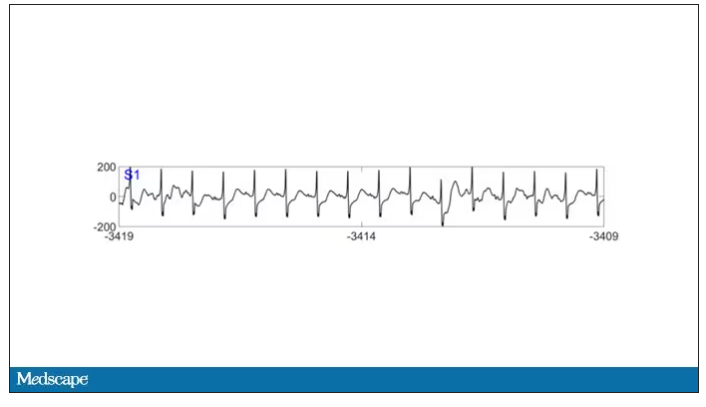

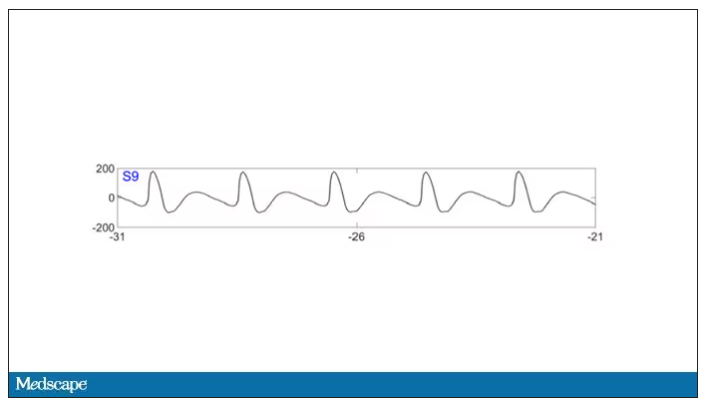

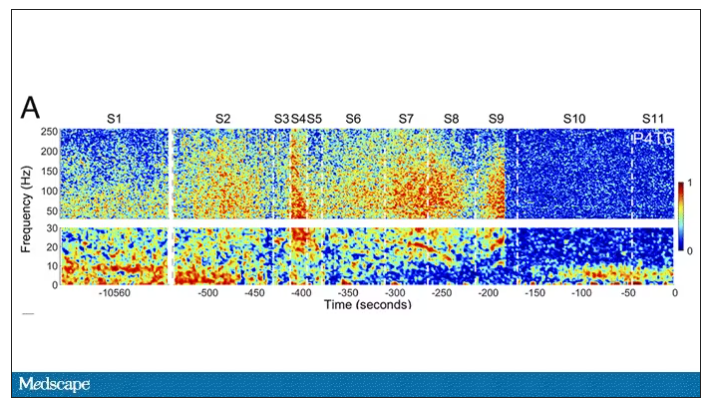

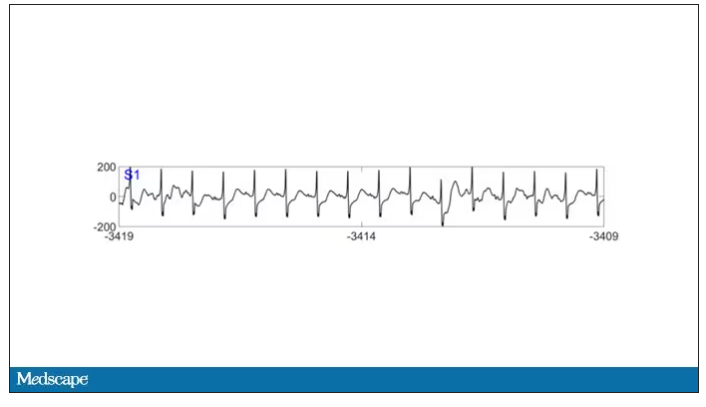

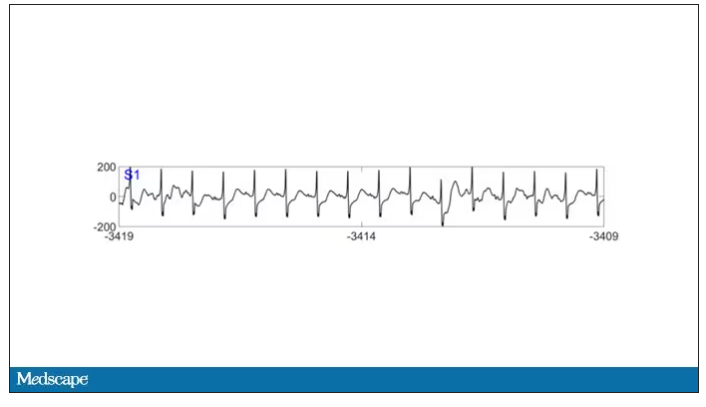

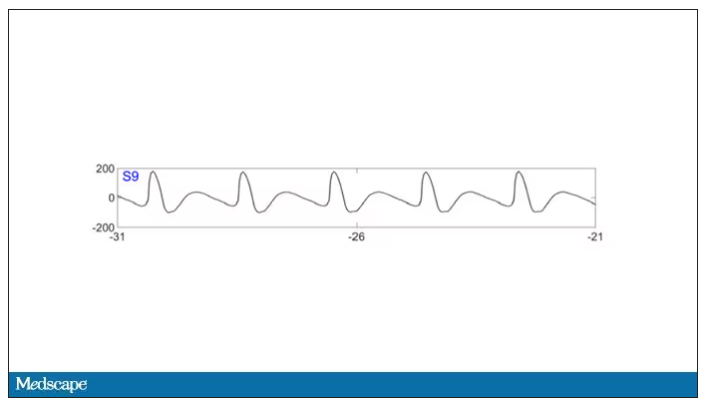

As the heart rhythm evolved from this:

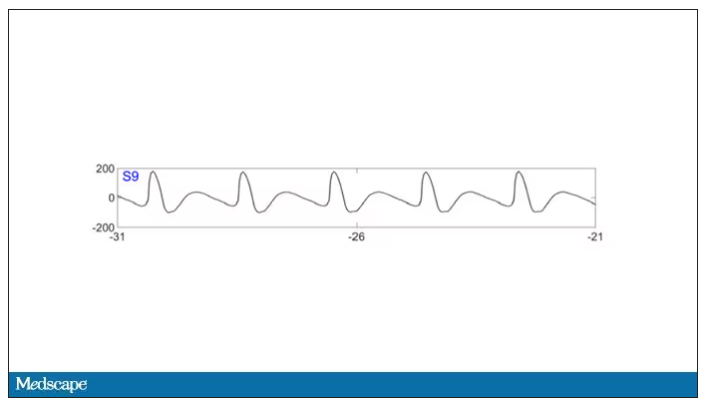

To this:

And eventually stopped.

But this is a study about the brain, not the heart.

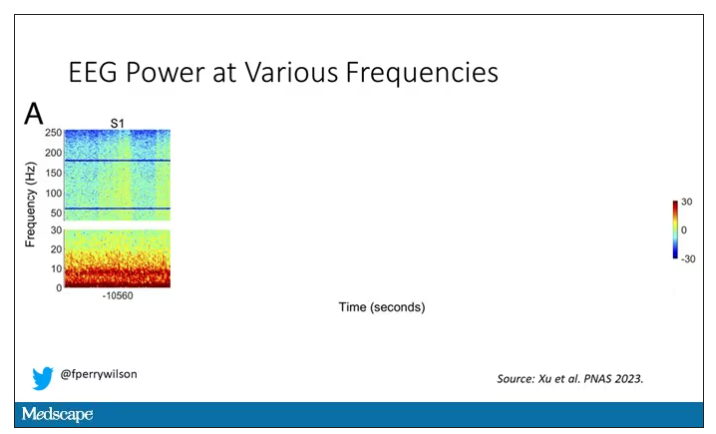

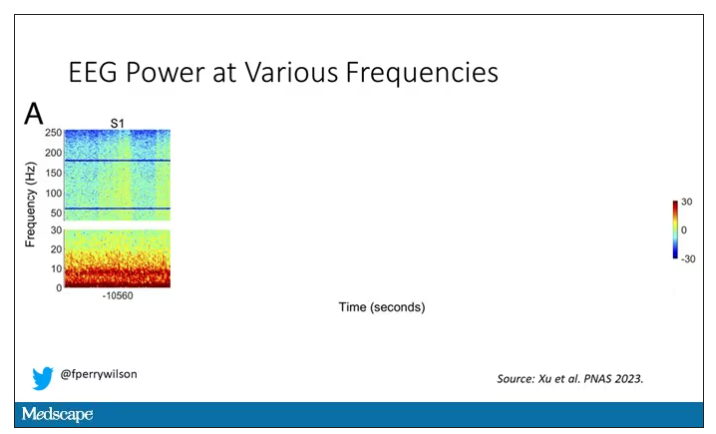

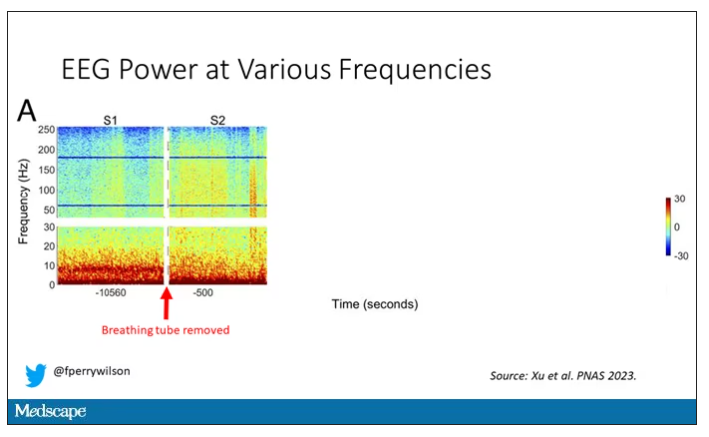

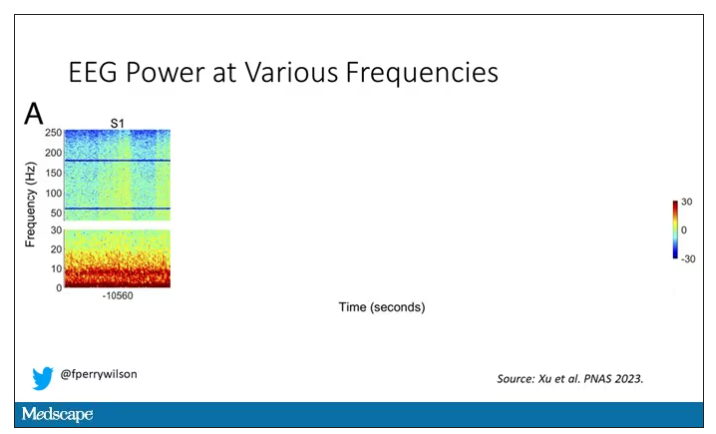

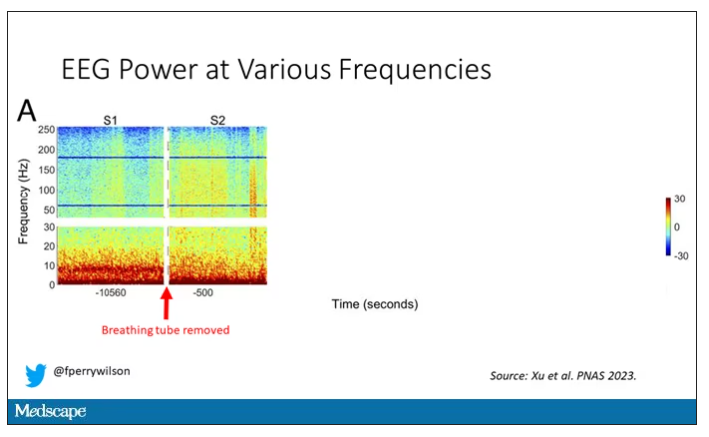

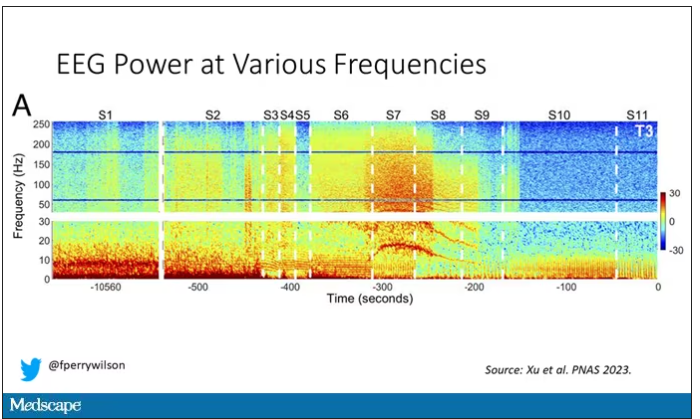

Prior to the withdrawal of life support, the brain electrical signals looked like this:

What you see is the EEG power at various frequencies, with red being higher. All the red was down at the low frequencies. Consciousness, at least as we understand it, is a higher-frequency phenomenon.

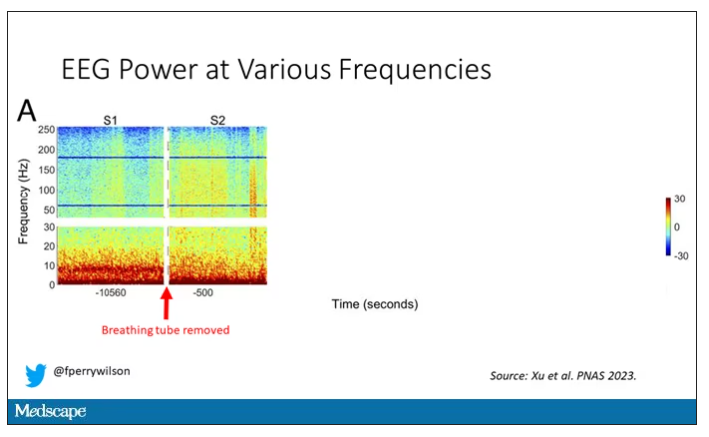

Right after the breathing tube was removed, the power didn’t change too much, but you can see some increased activity at the higher frequencies.

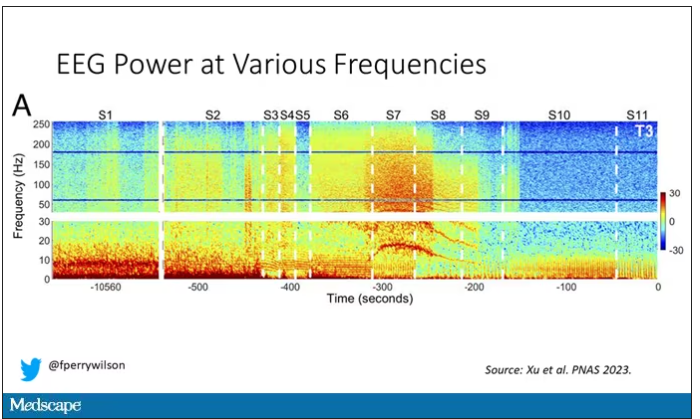

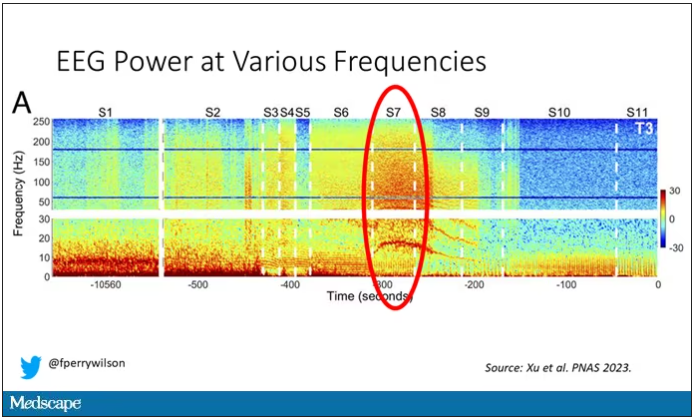

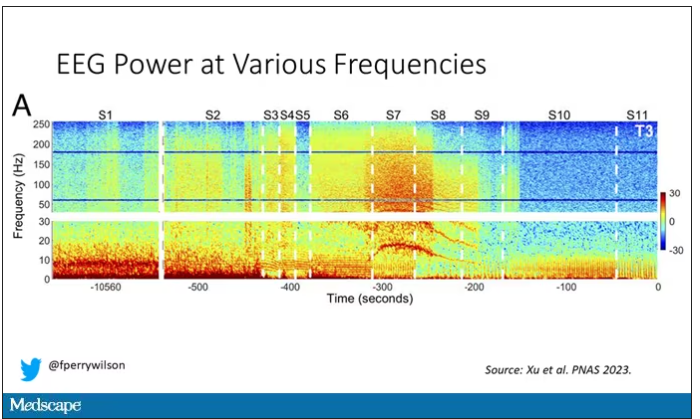

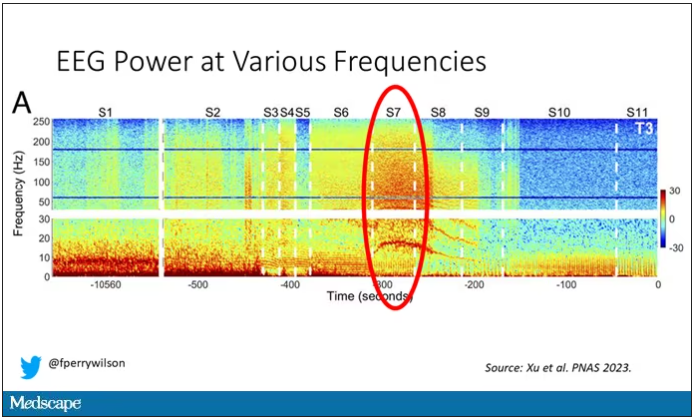

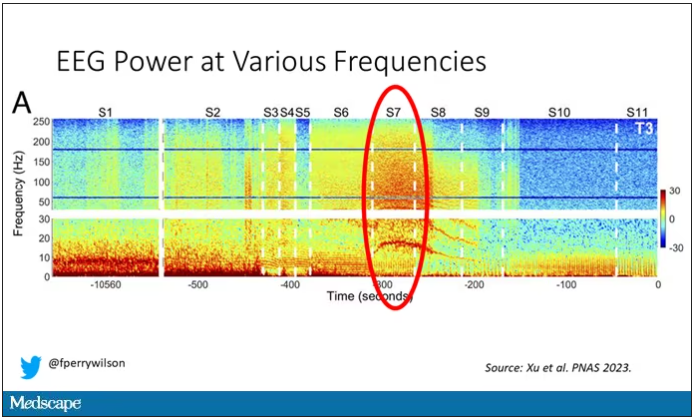

But in two of the four patients, something really surprising happened. Watch what happens as the brain gets closer and closer to death.

Here, about 300 seconds before death, there was a power surge at the high gamma frequencies.

This spike in power occurred in the somatosensory cortex and the dorsolateral prefrontal cortex, areas that are associated with conscious experience. It seems that this patient, 5 minutes before death, was experiencing something.

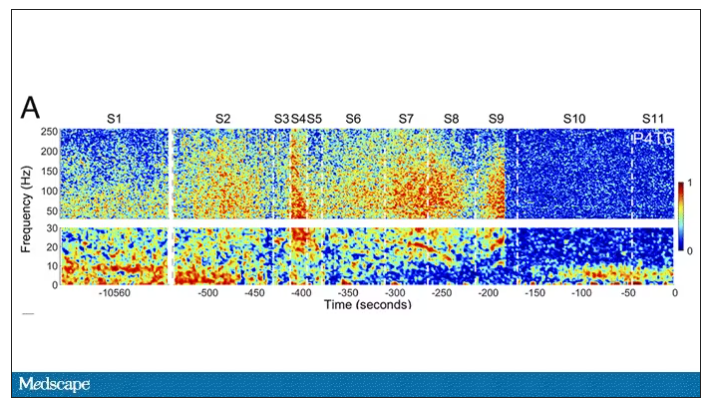

But I know what you’re thinking. This is a brain that is not receiving oxygen. Cells are going to become disordered quickly and start firing randomly – a last gasp, so to speak, before the end. Meaningless noise.

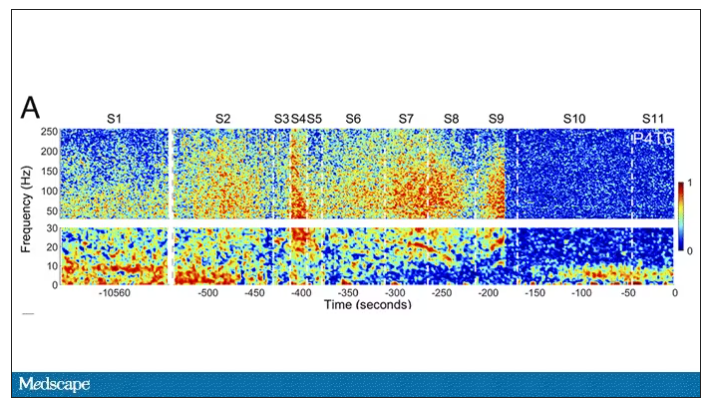

But connectivity mapping tells a different story. The signals seem to have structure.

Those high-frequency power surges increased connectivity in the posterior cortical “hot zone,” an area of the brain many researchers feel is necessary for conscious perception. This figure is not a map of raw brain electrical output like the one I showed before, but of coherence between brain regions in the consciousness hot zone. Those red areas indicate cross-talk – not the disordered scream of dying neurons, but a last set of messages passing back and forth from the parietal and posterior temporal lobes.

In fact, the electrical patterns of the brains in these patients looked very similar to the patterns seen in dreaming humans, as well as in patients with epilepsy who report sensations of out-of-body experiences.

It’s critical to realize two things here. First, these signals of consciousness were not present before life support was withdrawn. These comatose patients had minimal brain activity; there was no evidence that they were experiencing anything before the process of dying began. These brains are behaving fundamentally differently near death.

But second, we must realize that, although the brains of these individuals, in their last moments, appeared to be acting in a way that conscious brains act, we have no way of knowing if the patients were truly having a conscious experience. As I said, all the patients in the study died. Short of those metaphysics I alluded to earlier, we will have no way to ask them how they experienced their final moments.

Let’s be clear: This study doesn’t answer the question of what happens when we die. It says nothing about life after death or the existence or persistence of the soul. But what it does do is shed light on an incredibly difficult problem in neuroscience: the problem of consciousness. And as studies like this move forward, we may discover that the root of consciousness comes not from the breath of God or the energy of a living universe, but from very specific parts of the very complicated machine that is the brain, acting together to produce something transcendent. And to me, that is no less sublime.

Dr. Wilson is an associate professor of medicine and director of Yale’s Clinical and Translational Research Accelerator, Yale University, New Haven, Conn. His science communication work can be found in the Huffington Post, on NPR, and on Medscape. He tweets @fperrywilson and his new book, How Medicine Works and When It Doesn’t, is available now. Dr. Wilson has disclosed no relevant financial relationships.

Cancer pain declines with cannabis use

Medical cannabis use was associated with declines in cancer pain in a study.

Physician-prescribed cannabis, particularly cannabinoids, has been shown to ease cancer-related pain in adult cancer patients, who often find inadequate pain relief from medications including opioids, Saro Aprikian, MSc, a medical student at the Royal College of Surgeons, Dublin, and colleagues wrote in their paper.

However, real-world data on the safety and effectiveness of cannabis in the cancer population and the impact on use of other medications are lacking, the researchers said.

In the study, published in BMJ Supportive & Palliative Care, the researchers reviewed data from 358 adults with cancer who were part of a multicenter cannabis registry in Canada between May 2015 and October 2018.

The average age of the patients was 57.6 years, and 48% were men. The top three cancer diagnoses in the study population were genitourinary, breast, and colorectal.

Pain was the most common reason for obtaining a medical cannabis prescription, cited by 72.4% of patients.

Data were collected at follow-up visits conducted every 3 months over 1 year. Pain was assessed via the Brief Pain Inventory (BPI) and revised Edmonton Symptom Assessment System (ESAS-r) questionnaires and compared to baseline values. Patients rated their pain intensity on a sliding scale of 0 (none) to 10 (worst possible). Pain relief was rated on a scale of 0% (none) to 100% (complete).

Compared to baseline scores, patients showed significant decreases at 3, 6, and 9 months for BPI worst pain (5.5 at baseline, 3.6 at each of 3, 6, and 9 months), average pain (4.1 at baseline, 2.4, 2.3, and 2.7 at 3, 6, and 9 months, respectively), overall pain severity (2.7 at baseline, 2.3, 2.3, and 2.4 at 3, 6, and 9 months, respectively), and pain interference with daily life (4.3 at baseline, 2.4, 2.2, and 2.4 at 3, 6, and 9 months, respectively; P < .01 for all four pain measures).
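To put those scores on a common footing, the reported means can be converted to percent change from baseline. The short Python sketch below does only that arithmetic on the figures quoted above; the variable and function names are illustrative, and the calculation is not part of the researchers' analysis. Because many patients were lost to follow-up, the means at each visit may come from different subsets of patients, so the percentages are rough descriptive figures only.

```python
# Mean BPI scores as quoted in the article: baseline, then 3, 6, and 9 months.
# Names here are illustrative; this is not the researchers' analysis code.
bpi_scores = {
    "worst pain": (5.5, [3.6, 3.6, 3.6]),
    "average pain": (4.1, [2.4, 2.3, 2.7]),
    "overall pain severity": (2.7, [2.3, 2.3, 2.4]),
    "pain interference": (4.3, [2.4, 2.2, 2.4]),
}


def percent_change(baseline, value):
    """Relative change from baseline, in percent (negative means a decrease)."""
    return 100.0 * (value - baseline) / baseline


changes = {
    measure: [round(percent_change(baseline, v), 1) for v in follow_ups]
    for measure, (baseline, follow_ups) in bpi_scores.items()
}

for measure, values in changes.items():
    print(f"{measure}: {values} (% change at 3, 6, 9 months)")
```

On these figures, overall pain severity shows a smaller relative decrease than the other three measures.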

“Pain severity as reported in the ESAS-r decreased significantly at 3-month, 6-month and 9-month follow-ups,” the researchers noted.

In addition, total medication burden based on the medication quantification scale (MQS) and morphine equivalent daily dose (MEDD) were recorded at 3, 6, 9, and 12 months. MQS scores decreased compared to baseline at 3, 6, 9, and 12 months in 10%, 23.5%, 26.2%, and 31.6% of patients, respectively. Also compared with baseline, 11.1%, 31.3%, and 14.3% of patients reported decreases in MEDD scores at 3, 6, and 9 months, respectively.

Overall, products with equal amounts of active ingredients tetrahydrocannabinol (THC) and cannabidiol (CBD) were more effective than were those with a predominance of either THC or CBD, the researchers wrote.

Medical cannabis was well tolerated; a total of 15 moderate to severe side effects were reported by 11 patients, 13 of which were minor. The most common side effects were sleepiness and fatigue, and five patients discontinued their medical cannabis because of side effects. The two serious side effects reported during the study period – pneumonia and a cardiovascular event – were deemed unlikely to be related to the patients’ medicinal cannabis use.

The findings were limited by several factors, including the observational design, which prevented conclusions about causality, the researchers noted. Other limitations included the loss of many patients to follow-up and incomplete data on other prescription medications in many cases.

The results support the use of medical cannabis by cancer patients as an adjunct pain relief strategy and a way to potentially reduce the use of other medications such as opioids, the authors concluded.

The study was supported by the Canadian Consortium for the Investigation of Cannabinoids, Collège des Médecins du Québec, and the Canopy Growth Corporation. The researchers had no financial conflicts to disclose.

in a study.

Physician-prescribed cannabis, particularly cannabinoids, has been shown to ease cancer-related pain in adult cancer patients, who often find inadequate pain relief from medications including opioids, Saro Aprikian, MSc, a medical student at the Royal College of Surgeons, Dublin, and colleagues, wrote in their paper.

However, real-world data on the safety and effectiveness of cannabis in the cancer population and the impact on use of other medications are lacking, the researchers said.

In the study, published in BMJ Supportive & Palliative Care, the researchers reviewed data from 358 adults with cancer who were part of a multicenter cannabis registry in Canada between May 2015 and October 2018.

The average age of the patients was 57.6 years, and 48% were men. The top three cancer diagnoses in the study population were genitourinary, breast, and colorectal.

Pain was the most common reason for obtaining a medical cannabis prescription, cited by 72.4% of patients.

Data were collected at follow-up visits conducted every 3 months over 1 year. Pain was assessed via the Brief Pain Inventory (BPI) and revised Edmonton Symptom Assessment System (ESAS-r) questionnaires and compared to baseline values. Patients rated their pain intensity on a sliding scale of 0 (none) to 10 (worst possible). Pain relief was rated on a scale of 0% (none) to 100% (complete).

Compared with baseline scores, patients showed significant decreases at 3, 6, and 9 months for BPI worst pain (5.5 at baseline, 3.6 at 3, 6, and 9 months), average pain (4.1 at baseline, 2.4, 2.3, and 2.7 at 3, 6, and 9 months, respectively), overall pain severity (2.7 at baseline, 2.3, 2.3, and 2.4 at 3, 6, and 9 months, respectively), and pain interference with daily life (4.3 at baseline, 2.4, 2.2, and 2.4 at 3, 6, and 9 months, respectively; P < .01 for all four pain measures).
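To put these scores in proportion, the short sketch below converts the 3-month BPI values quoted above into a percent reduction from baseline. The function name and the dictionary layout are illustrative assumptions; the study itself reported the raw scores and P values, not these percentages.

```python
def percent_reduction(baseline: float, follow_up: float) -> float:
    """Percent decrease from baseline to follow-up (positive means improvement)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - follow_up) / baseline

# (baseline, 3-month) BPI scores as quoted in the text.
bpi_scores = {
    "worst pain": (5.5, 3.6),
    "average pain": (4.1, 2.4),
    "overall pain severity": (2.7, 2.3),
    "pain interference": (4.3, 2.4),
}

# Derived illustration only: one rounded percentage per BPI measure.
reductions = {
    measure: round(percent_reduction(base, month3), 1)
    for measure, (base, month3) in bpi_scores.items()
}

for measure, pct in reductions.items():
    print(f"{measure}: {pct}% lower at 3 months")
```

On these figures, the reduction in overall pain severity is noticeably smaller than the reductions in the other three measures.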

“Pain severity as reported in the ESAS-r decreased significantly at 3-month, 6-month and 9-month follow-ups,” the researchers noted.

FROM BMJ SUPPORTIVE & PALLIATIVE CARE

New outbreaks of Marburg virus disease: What clinicians need to know

What do green monkeys, fruit bats, and python caves all have in common? All have been implicated in outbreaks as transmission sources of the rare but deadly Marburg virus. Marburg virus is in the same Filoviridae family of highly pathogenic RNA viruses as Ebola virus, and similarly can cause a rapidly progressive and fatal viral hemorrhagic fever.

In the first reported Marburg outbreak in 1967, laboratory workers in Marburg and Frankfurt, Germany, and in Belgrade, Yugoslavia, developed severe febrile illnesses with massive hemorrhage and multiorgan system dysfunction after contact with infected African green monkeys imported from Uganda.

The majority of Marburg virus disease (MVD) outbreaks have occurred in sub-Saharan Africa, and primarily in three African countries: Angola, the Democratic Republic of Congo, and Uganda. In sub-Saharan Africa, these sporadic outbreaks have had high case fatality rates (up to 80%-90%) and been linked to human exposure to the oral secretions or urinary/fecal droppings of Egyptian fruit bats (Rousettus aegyptiacus), the animal reservoir for Marburg virus. These exposures have primarily occurred among miners or tourists frequenting bat-infested mines or caves, including Uganda’s python cave, where Centers for Disease Control and Prevention investigators have conducted ecological studies on Marburg-infected bats. Person-to-person transmission occurs from direct contact with the blood or bodily fluids of an infected person or contact with a contaminated object (for example, unsterilized needles and syringes in a large nosocomial outbreak in Angola).

On April 6, 2023, the CDC issued a Health Advisory for U.S. clinicians and public health departments regarding two separate MVD outbreaks in Equatorial Guinea and Tanzania. These first-ever MVD outbreaks in both West and East African countries appear to be epidemiologically unrelated. As of March 24, 2023, in Equatorial Guinea, a total of 15 confirmed cases, including 11 deaths, and 23 probable cases, all deceased, have been identified in multiple districts since the outbreak declaration in February 2023. In Tanzania, a total of eight cases, including five deaths, have been reported among villagers in a northwest region since the outbreak declaration in March 2023. While so far cases in the Tanzania MVD outbreak have been epidemiologically linked, in Equatorial Guinea some cases have no identified epidemiological links, raising concern for ongoing community spread.
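As a rough illustration of the arithmetic behind these counts, the sketch below computes a crude case fatality proportion (deaths divided by cases) from the figures quoted above. The function name and the decision to show a pooled confirmed-plus-probable figure are assumptions made for illustration, not a CDC method, and crude proportions from an ongoing outbreak are provisional.

```python
def crude_cfr(deaths: int, cases: int) -> float:
    """Crude case fatality proportion, as a percentage: 100 * deaths / cases."""
    if cases <= 0:
        raise ValueError("cases must be positive")
    if not 0 <= deaths <= cases:
        raise ValueError("deaths must be between 0 and cases")
    return 100.0 * deaths / cases

# Counts quoted in the text above.
eg_confirmed = crude_cfr(deaths=11, cases=15)          # confirmed cases only
eg_pooled = crude_cfr(deaths=11 + 23, cases=15 + 23)   # adds 23 probable cases, all deceased
tanzania = crude_cfr(deaths=5, cases=8)

print(f"Equatorial Guinea (confirmed only): {eg_confirmed:.1f}%")
print(f"Equatorial Guinea (confirmed + probable): {eg_pooled:.1f}%")
print(f"Tanzania: {tanzania:.1f}%")
```

Because every probable case in Equatorial Guinea was identified after death, pooling them raises the crude figure substantially, which is one reason early outbreak estimates should be read with caution.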

To date, no cases in these outbreaks have been reported in the United States or outside the affected countries. Overall, the risk of MVD in nonendemic countries, like the United States, is low but there is still a risk of importation. As of May 2, 2023, CDC has issued a Level 2 travel alert (practice enhanced precautions) for Marburg in Equatorial Guinea and a Level 1 travel watch (practice usual precautions) for Marburg in Tanzania. Travelers to these countries are advised to avoid nonessential travel to areas with active outbreaks and practice preventative measures, including avoiding contact with sick people, blood and bodily fluids, dead bodies, fruit bats, and nonhuman primates. International travelers returning to the United States from these countries are advised to self-monitor for Marburg symptoms during travel and for 21 days after country departure. Travelers who develop signs or symptoms of MVD should immediately self-isolate and contact their local health department or clinician.

So, how should clinicians manage such return travelers? In the setting of these new MVD outbreaks in sub-Saharan Africa, what do U.S. clinicians need to know? Clinicians should consider MVD in the differential diagnosis of ill patients with a compatible exposure history and clinical presentation. A detailed exposure history should be obtained to determine if patients have been to an area with an active MVD outbreak during their incubation period (in the past 21 days), had concerning epidemiologic risk factors (for example, presence at funerals, health care facilities, in mines/caves) while in the affected area, and/or had contact with a suspected or confirmed MVD case.
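The 21-day window mentioned above is simple date arithmetic, sketched below. The function names and the example departure date are hypothetical, and this is only an illustration of the calculation, not a screening or clinical decision tool.

```python
from datetime import date, timedelta

MONITORING_DAYS = 21  # window stated in the text

def monitoring_end(departure: date) -> date:
    """Last day of the 21-day window after leaving the affected area."""
    return departure + timedelta(days=MONITORING_DAYS)

def within_window(departure: date, assessed: date) -> bool:
    """True if the assessment date falls within 21 days of departure."""
    return departure <= assessed <= monitoring_end(departure)

# Hypothetical traveler who left an affected area on April 1, 2023.
example_departure = date(2023, 4, 1)
example_end = monitoring_end(example_departure)

print(f"Window ends: {example_end.isoformat()}")
print(within_window(example_departure, date(2023, 4, 15)))
print(within_window(example_departure, date(2023, 4, 23)))
```

A date inside the window indicates only that the timing is compatible with exposure; the exposure history and clinical presentation described above still determine whether MVD belongs in the differential.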

Clinical diagnosis of MVD is challenging because the initial dry symptoms of infection are nonspecific (fever, influenza-like illness, malaise, anorexia, etc.) and can resemble other febrile infectious illnesses. Alternative or concurrent infections that present similarly, particularly in febrile return travelers, include malaria, Lassa fever, typhoid, and measles. From these nonspecific symptoms, patients with MVD can then progress to the more severe wet symptoms (for example, vomiting, diarrhea, and bleeding). Common clinical features of MVD have been described based on the clinical presentation and course of cases in MVD outbreaks. Notably, in the original Marburg outbreak, maculopapular rash and conjunctival injection were early patient symptoms and most patient deaths occurred during the second week of illness progression.

Supportive care, including aggressive fluid replacement, is the mainstay of therapy for MVD. Currently, there are no Food and Drug Administration–approved antiviral treatments or vaccines for Marburg virus. Despite their viral similarities, vaccines against Ebola virus have not been shown to be protective against Marburg virus. Marburg virus vaccine development is ongoing, with a few promising candidate vaccines in early phase 1 and 2 clinical trials. In 2022, in response to MVD outbreaks in Ghana and Guinea, the World Health Organization convened an international Marburg virus vaccine consortium which is working to promote global research collaboration for more rapid vaccine development.

In the absence of definitive therapies, early identification of patients with suspected MVD is critical for preventing the spread of infection to close contacts. Like Ebola virus–infected patients, only symptomatic MVD patients are infectious and all patients with suspected MVD should be isolated in a private room and cared for in accordance with infection control procedures. As MVD is a nationally notifiable disease, suspected cases should be reported to local or state health departments as per jurisdictional requirements. Clinicians should also consult with their local or state health department and CDC for guidance on testing patients with suspected MVD and consider prompt evaluation for other infectious etiologies in the patient’s differential diagnosis. Comprehensive guidance for clinicians on screening and diagnosing patients with MVD is available on the CDC website at https://www.cdc.gov/vhf/marburg/index.html.

Dr. Appiah (she/her) is a medical epidemiologist in the division of global migration and quarantine at the CDC. Dr. Appiah holds an adjunct faculty appointment in the division of infectious diseases at Emory University, Atlanta. She also holds a commission in the U.S. Public Health Service and is a resident advisor, Uganda, U.S. President’s Malaria Initiative, at the CDC.
