Focusing on Inattention: The Diagnostic Accuracy of Brief Measures of Inattention for Detecting Delirium

Delirium is an acute neurocognitive disorder1 that affects up to 25% of older emergency department (ED) and hospitalized patients.2-4 The relationship between delirium and adverse outcomes is well documented.5-7 Delirium is a strong predictor of increased length of mechanical ventilation, longer intensive care unit and hospital stays, increased risk of falls, long-term cognitive impairment, and mortality.8-13 Delirium is frequently missed by healthcare professionals2,14-16 and goes undetected in up to 3 out of 4 patients by bedside nurses and medical practitioners in many hospital settings.14,17-22 A significant barrier to recognizing delirium is the absence of brief delirium assessments.

There has been a concerted effort to develop and validate brief delirium assessments to improve delirium recognition in the acute care setting. To address this unmet need, the 4 ‘A’s Test (4AT), the Brief Confusion Assessment Method (bCAM), and the 3-minute diagnostic assessment for CAM-defined delirium (3D-CAM) were developed; these 1- to 3-minute delirium assessments were validated in acutely ill older patients.23 However, 1 to 3 minutes may still be too long in busy clinical environments, and briefer (<30 seconds) delirium assessments may be needed.

One potentially more rapid method to screen for delirium is to specifically test for the presence of inattention, which is a cardinal feature of delirium.24,25 Inattention can be ascertained by having the patient recite the months backwards, recite the days of the week backwards, or spell a word backwards.26 Recent studies have evaluated the diagnostic accuracy of reciting the months of the year backwards for delirium. O’Regan et al.27 evaluated the diagnostic accuracy of the months of the year backwards from December to July (MOTYB-6) and observed that this task was 84% sensitive and 90% specific for delirium in older patients. However, they performed the reference standard delirium assessments in patients who had a positive MOTYB-6, which can overestimate sensitivity and underestimate specificity (verification bias).28 Fick et al.29 examined the diagnostic accuracy of 20 individual elements of the 3D-CAM and observed that reciting the months of the year backwards from December to January (MOTYB-12) was 83% sensitive and 69% specific for delirium. However, this was an exploratory study that was designed to identify an element of the 3D-CAM that had the best diagnostic accuracy.

To address these limitations, we sought to evaluate the diagnostic performance of the MOTYB-6 and MOTYB-12 for delirium as diagnosed by a reference standard. We also explored other brief tests of inattention: spelling a word (“LUNCH”) backwards, reciting the days of the week backwards, the 10-letter vigilance “A” task, and the 5-picture recognition task.

METHODS

Study Design and Setting

This was a preplanned secondary analysis of a prospective observational study that validated 3 delirium assessments.30,31 This study was conducted at a tertiary care, academic ED. The local institutional review board (IRB) reviewed and approved this study. Informed consent from the patient or an authorized surrogate was obtained whenever possible. Because this was an observational study and posed minimal risk to the patient, the IRB granted a waiver of consent for patients who were both unable to provide consent and without an authorized surrogate available in the ED or by phone.

Selection of Participants

We enrolled a convenience sample of patients between June 2010 and February 2012 Monday through Friday from 8

Research assistants approached patients who met inclusion criteria and determined if any exclusion criteria were present. If none of the exclusion criteria were present, then the research assistant reviewed the informed consent document with the patient or authorized surrogate if the patient was not capable of providing consent. If a patient was not capable of providing consent and no authorized surrogate was available, then the patient was enrolled (under the waiver of consent) as long as the patient assented to be a part of the study. Once the patient was enrolled, the research assistant contacted the physician rater and reference standard psychiatrists to approach the patient.

Measures of Inattention

An emergency physician (JHH) who had no formal training in the mental status assessment of elders administered a cognitive battery to the patient, including tests of inattention. The following inattention tasks were administered:

- Spell the word “LUNCH” backwards.30 Patients were initially allowed to spell the word “LUNCH” forwards. Patients who were unable to perform the task were assigned 5 errors.

- Recite the months of the year backwards from December to July.23,26,27,30,32 Patients who were unable to perform the task were assigned 6 errors.

- Recite the days of the week backwards.23,26,33 Patients who were unable to perform the task were assigned 7 errors.

- Ten-letter vigilance “A” task.34 The patient was given a series of 10 letters (“S-A-V-E-A-H-A-A-R-T”) every 3 seconds and was asked to squeeze the rater’s hand every time the patient heard the letter “A.” Patients who were unable to perform the task were assigned 10 errors.

- Five picture recognition task.34 Patients were shown 5 objects on picture cards. Afterwards, patients were shown 10 pictures with the previously shown objects intermingled. The patient had to identify which objects were seen previously in the first 5 pictures. Patients who were unable to perform the task were assigned 10 errors.

- Recite the months of the year backwards from December to January.29 Patients who were unable to perform the task were assigned 12 errors.
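The scoring rules listed above can be sketched in a few lines of Python. This is an illustrative sketch, not the study's data-collection instrument: the task keys and function names are hypothetical, and the way vigilance “A” errors are counted (one error for each missed “A” and one for each squeeze on a letter other than “A”) is an assumption, because the text does not spell out that rule.

```python
from typing import Optional, Sequence

# Maximum error counts per task, as stated in the task descriptions.
# The dictionary keys are hypothetical labels chosen for this sketch.
MAX_ERRORS = {
    "lunch_backwards": 5,
    "motyb_6": 6,
    "days_backwards": 7,
    "vigilance_a": 10,
    "picture_recognition": 10,
    "motyb_12": 12,
}

# The 10-letter series used for the vigilance "A" task.
VIGILANCE_LETTERS = "SAVEAHAART"


def score_task(task: str, observed_errors: Optional[int]) -> int:
    """Return the error count for a task.

    Pass None when the patient was unable to perform the task; the
    maximum number of errors for that task is then assigned.
    """
    max_errors = MAX_ERRORS[task]
    if observed_errors is None:
        return max_errors
    if not 0 <= observed_errors <= max_errors:
        raise ValueError(f"{task}: errors must be between 0 and {max_errors}")
    return observed_errors


def vigilance_errors(squeezes: Sequence[bool]) -> int:
    """Count vigilance "A" errors from one squeeze flag per letter.

    Assumption: a missed "A" and a squeeze on a non-"A" letter each
    count as one error.
    """
    if len(squeezes) != len(VIGILANCE_LETTERS):
        raise ValueError("expected one response per letter")
    return sum(
        (letter == "A") != squeezed
        for letter, squeezed in zip(VIGILANCE_LETTERS, squeezes)
    )


def screen_positive(task: str, observed_errors: Optional[int], cutoff: int = 1) -> bool:
    """Dichotomize a task at 'cutoff or more errors'."""
    return score_task(task, observed_errors) >= cutoff
```

For example, `screen_positive("motyb_12", None)` treats a patient who could not attempt the task as having 12 errors, which is positive at any cutoff up to 12.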

Reference Standard for Delirium

A comprehensive consultation-liaison psychiatrist assessment was the reference standard for delirium; the diagnosis of delirium was based on Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition, Text Revision (DSM-IV-TR) criteria.35 Three psychiatrists who each had an average of 11 years of clinical experience and regularly diagnosed delirium as part of their daily clinical practice were available to perform these assessments. To arrive at the diagnosis of delirium, they interviewed those who best understood the patient’s mental status (eg, the patient’s family members or caregivers, physician, and nurses). They also reviewed the patient’s medical record and radiology and laboratory test results. They performed bedside cognitive testing that included, but was not limited to, the Mini-Mental State Examination, Clock Drawing Test, Luria hand sequencing task, and tests for verbal fluency. A focused neurological examination was also performed (eg, screening for paraphasic errors, tremors, tone, asterixis, and frontal release signs), and they also evaluated the patient for affective lability, hallucinations, and level of alertness. If the presence of delirium was still questionable, then confrontational naming, proverb interpretation or similarities, and assessments for apraxias were performed at the discretion of the psychiatrist. The psychiatrists were blinded to the physician’s assessments, and the assessments were conducted within 3 hours of each other.

Additional Variables Collected

Using medical record review, comorbidity burden, severity of illness, and premorbid cognition were ascertained. The Charlson Comorbidity Index, a weighted index that takes into account the number and seriousness of 19 preexisting comorbid conditions, was used to quantify comorbidity burden; higher scores indicate higher comorbid burden.36,37 The Acute Physiology Score of the Acute Physiology and Chronic Health Evaluation II was used to quantify severity of illness.38 This score is based upon the initial values of 12 routine physiologic measurements such as vital sign and laboratory abnormalities; higher scores represent higher severities of illness.38 The medical record was reviewed to ascertain the presence of premorbid cognitive impairment; any documentation of dementia in the patient’s clinical problem list or physician history and physical examination from the outpatient or inpatient settings was considered positive. The medical record review was performed by a research assistant and was double-checked for accuracy by one of the investigators (JHH).

Data Analyses

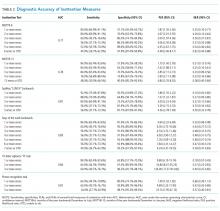

Measures of central tendency and dispersion for continuous variables were reported as medians and interquartile ranges. Categorical variables were reported as proportions. Receiver operating characteristic curves were constructed for each inattention task. Area under the receiver operating characteristic curves (AUC) was reported to provide a global measure of diagnostic accuracy. Sensitivities, specificities, positive likelihood ratios (PLRs), and negative likelihood ratios (NLRs) with their 95% CIs were calculated using the psychiatrist’s assessment as the reference standard.39 Cut-points with PLRs greater than 10 (strongly increased the likelihood of delirium) or NLRs less than 0.1 (strongly decreased the likelihood of delirium) were preferentially reported whenever possible.
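The accuracy measures named above follow directly from a 2×2 table of the inattention task against the reference standard. The Python sketch below is illustrative only: the study itself used R, SAS, and Excel, the function names are hypothetical, the counts in the usage note are made up for demonstration, and confidence intervals are omitted. The AUC is computed with the rank-based (Mann-Whitney) formulation, which is one standard way to obtain it and is not necessarily the routine the authors used.

```python
from typing import Dict, Sequence


def diagnostic_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Sensitivity, specificity, PLR, and NLR from 2x2 counts.

    tp/fn: delirious patients (per reference standard) with a positive/negative task.
    fp/tn: non-delirious patients with a positive/negative task.
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    false_positive_rate = fp / (fp + tn)
    # PLR = sensitivity / (1 - specificity); undefined (infinite) with no false positives.
    plr = sensitivity / false_positive_rate if fp > 0 else float("inf")
    # NLR = (1 - sensitivity) / specificity; undefined (infinite) with no true negatives.
    nlr = (fn / (tp + fn)) / specificity if tn > 0 else float("inf")
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "plr": plr,
        "nlr": nlr,
    }


def auc(errors_delirium: Sequence[float], errors_no_delirium: Sequence[float]) -> float:
    """Area under the ROC curve for an error count used as the test score.

    Equals the probability that a randomly chosen delirious patient made
    more errors than a randomly chosen non-delirious patient, with ties
    counted as one half.
    """
    wins = 0.0
    for d in errors_delirium:
        for n in errors_no_delirium:
            if d > n:
                wins += 1.0
            elif d == n:
                wins += 0.5
    return wins / (len(errors_delirium) * len(errors_no_delirium))
```

With hypothetical counts `diagnostic_metrics(tp=40, fp=20, fn=10, tn=80)`, sensitivity and specificity are both 0.80, the PLR is about 4, and the NLR is about 0.25, so this made-up test would meet neither the PLR > 10 nor the NLR < 0.1 threshold.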

All statistical analyses were performed with open source R statistical software version 3.0.1 (http://www.r-project.org/), SAS 9.4 (SAS Institute, Cary, NC), and Microsoft Excel 2010 (Microsoft Inc., Redmond, WA).

RESULTS

DISCUSSION

Delirium is frequently missed by healthcare providers because it is not routinely screened for in the acute care setting. To help address this deficiency of care, we evaluated several brief measures of inattention that take less than 30 seconds to complete. We observed that any errors made on the MOTYB-6 and MOTYB-12 tasks had very good sensitivities (80% and 84%) but were limited by their modest specificities (approximately 50%) for delirium. As a result, these assessments have limited clinical utility as standalone delirium screens. We also explored other commonly used brief measures of inattention at a variety of error cutoffs. Reciting the days of the week backwards appeared to best balance sensitivity and specificity. None of the inattention measures could convincingly rule out delirium (NLR < 0.10), but the vigilance “A” and picture recognition tasks may have clinical utility in ruling in delirium (PLR > 10). Overall, all the inattention tasks, including MOTYB-6 and MOTYB-12, had very good diagnostic performances based upon their AUC. However, high sensitivity often came at the expense of specificity, and high specificity at the expense of sensitivity.

Inattention has been shown to be the cardinal feature of delirium,40 and its assessment using cognitive testing has been recommended to help identify the presence of delirium according to an expert consensus panel.26 The diagnostic performance of the MOTYB-12 observed in our study is similar to that in a study by Fick et al., who reported that MOTYB-12 had very good sensitivity (83%) but modest specificity (69%) with a cutoff of 1 or more errors. Hendry et al. observed that the MOTYB-12 was 91% sensitive and 50% specific using a cutoff of 4 or more errors. With regard to the MOTYB-6, our reported specificity was different from what was observed by O’Regan et al.27 Using 1 or more errors as a cutoff, they observed a much higher specificity for delirium than we did (90% vs 57%). Discordant observations regarding the diagnostic accuracy for other inattention tasks also exist. We observed that making any error on the days of the week backwards task was 84% sensitive and 82% specific for delirium, whereas Fick et al. observed a sensitivity and specificity of 50% and 94%, respectively. For the vigilance “A” task, we observed that making 2 or more errors over a series of 10 letters was 64.0% sensitive and 91.4% specific for delirium, whereas Pompei et al.41 observed that making 2 or more errors over a series of 60 letters was 51% sensitive and 77% specific for delirium.
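To make the likelihood ratio thresholds concrete, the sketch below converts a sensitivity and specificity pair into likelihood ratios and then into a post-test probability. It is an illustration and not part of the study's analysis. The function names are hypothetical, the inputs are the rounded percentages quoted in the paragraph above (so the results may differ slightly from ratios computed on the raw counts), and the 25% pretest probability is an assumption borrowed from the upper-bound prevalence cited in the introduction.

```python
from typing import Tuple


def likelihood_ratios(sensitivity: float, specificity: float) -> Tuple[float, float]:
    """Return (PLR, NLR) for a test with the given sensitivity and specificity."""
    if not (0.0 < specificity < 1.0 and 0.0 <= sensitivity <= 1.0):
        raise ValueError("sensitivity must be in [0, 1] and specificity in (0, 1)")
    plr = sensitivity / (1.0 - specificity)
    nlr = (1.0 - sensitivity) / specificity
    return plr, nlr


def post_test_probability(pretest_probability: float, likelihood_ratio: float) -> float:
    """Apply a likelihood ratio to a pretest probability via odds."""
    pretest_odds = pretest_probability / (1.0 - pretest_probability)
    post_test_odds = pretest_odds * likelihood_ratio
    return post_test_odds / (1.0 + post_test_odds)


# Assumed pretest probability of delirium (illustrative, not a study result).
PRETEST = 0.25

# Rounded values quoted in the text for this study.
days_plr, days_nlr = likelihood_ratios(0.84, 0.82)       # days of the week, any error
vigil_plr, vigil_nlr = likelihood_ratios(0.640, 0.914)   # vigilance "A", 2 or more errors

for name, plr, nlr in [
    ("days of the week backwards", days_plr, days_nlr),
    ("vigilance A (>= 2 errors)", vigil_plr, vigil_nlr),
]:
    print(
        f"{name}: PLR={plr:.2f}, NLR={nlr:.2f}, "
        f"post-test if positive={post_test_probability(PRETEST, plr):.2f}, "
        f"if negative={post_test_probability(PRETEST, nlr):.2f}"
    )
```

From these rounded inputs, the days of the week task gives a PLR of about 4.7 and an NLR of about 0.20, and the vigilance “A” task at 2 or more errors gives a PLR of about 7.4 and an NLR of about 0.39. At the cutoffs quoted in this paragraph, neither reaches a PLR above 10 or an NLR below 0.1.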

The abovementioned discordant findings may be driven by spectrum bias, wherein the sensitivities and specificities for each inattention task may differ in different subgroups. As a result, differences in the age distribution, proportion of college graduates, history of dementia, and susceptibility to delirium can influence overall sensitivity and specificity. Objective measures of delirium, including the inattention screens studied, are particularly prone to spectrum bias.31,34 However, the strength of this approach is that the assessment of inattention becomes less reliant upon clinical judgment and allows it to be used by raters from a wide range of clinical backgrounds. On the other hand, a subjective interpretation of these inattention tasks may allow the rater to capture the subtleties of inattention (eg, decreased speed of performance in a highly intelligent and well-educated patient without dementia). The disadvantage of this approach, however, is that it is more dependent on clinical judgment and may have decreased diagnostic accuracy in those with less clinical experience or with limited training.14,42,43 These factors must be carefully considered when determining which delirium assessment to use.

Additional research is required to determine the clinical utility of these brief inattention assessments. These findings need to be further validated in larger studies, and the optimal cutoff of each task for different subgroups of patients (eg, demented vs nondemented) needs to be further clarified. It is not completely clear whether these inattention tests can serve as standalone assessments. Depending on the cutoff used, some of these assessments may have unacceptable false negative or false positive rates that may lead to increased adverse patient outcomes or increased resource utilization, respectively. Additional components or assessments may be needed to improve the diagnostic accuracy of these assessments. In addition to understanding these inattention assessments’ diagnostic accuracies, their ability to predict adverse outcomes also needs to be investigated. While a previous study observed that making any error on the MOTYB-12 task was associated with increased physical restraint use and prolonged hospital length of stay,44 these assessments’ ability to prognosticate long-term outcomes such as mortality or long-term cognition or function needs to be studied. Lastly, studies should also evaluate how easily implementable these assessments are and whether improved delirium recognition leads to improved patient outcomes.

This study has several notable limitations. Though planned a priori, this was a secondary analysis of a larger investigation designed to validate 3 delirium assessments. Our sample size was also relatively small, causing our 95% CIs to overlap in most cases and limiting the statistical power to truly determine whether one measure is better than the other. We also asked the patient to recite the months backwards from December to July as well as recite the months backwards from December to January. It is possible that the patient may have performed better at going from December to January because of a learning effect. Our reference standard for delirium was based upon DSM-IV-TR criteria. The new DSM-5 criteria may be more restrictive and may slightly change the sensitivities and specificities of the inattention tasks. We enrolled a convenience sample and enrolled patients who were more likely to be male, have cardiovascular chief complaints, and be admitted to the hospital; as a result, selection bias may have been introduced. Lastly, this study was conducted in a single center and enrolled patients who were 65 years and older. Our findings may not be generalizable to other settings or to those who are younger than 65 years of age.

CONCLUSIONS

The MOTYB-6 and MOTYB-12 tasks had very good sensitivities but modest specificities (approximately 50%) using any error made as a cutoff; increasing the cutoff to 2 errors and 3 errors, respectively, improved their specificities (approximately 70%) with minimal impact on their sensitivities. Reciting the days of the week backwards, spelling the word “LUNCH” backwards, and the 10-letter vigilance “A” task appeared to perform the best in ruling out delirium but only moderately decreased the likelihood of delirium. The 10-letter Vigilance “A” and picture recognition task

Disclosure

This study was funded by the Emergency Medicine Foundation Career Development Award, National Institutes of Health K23AG032355, and National Center for Research Resources, Grant UL1 RR024975-01. The authors report no financial conflicts of interest.

1. American Psychiatric Association. Diagnostic and statistical manual of mental disorders: DSM-5. Washington, DC: American Psychiatric Association; 2013.

33. Hamrick I, Hafiz R, Cummings DM. Use of days of the week in a modified mini-mental state exam (M-MMSE) for detecting geriatric cognitive impairment. J Am Board Fam Med. 2013;26(4):429-435.

Delirium is an acute neurocognitive disorder1 that affects up to 25% of older emergency department (ED) and hospitalized patients.2-4 The relationship between delirium and adverse outcomes is well documented.5-7 Delirium is a strong predictor of increased length of mechanical ventilation, longer intensive care unit and hospital stays, increased risk of falls, long-term cognitive impairment, and mortality.8-13 Delirium is frequently missed by healthcare professionals2,14-16 and goes undetected in up to 3 out of 4 patients by bedside nurses and medical practitioners in many hospital settings.14,17-22 A significant barrier to recognizing delirium is the absence of brief delirium assessments.

In an effort to improve delirium recognition in the acute care setting, there has been a concerted effort to develop and validate brief delirium assessments. To address this unmet need, 4 ‘A’s Test (4AT), the Brief Confusion Assessment Method (bCAM), and the 3-minute diagnostic assessment for CAM-defined delirium (3D-CAM) are 1- to 3-minute delirium assessments that were validated in acutely ill older patients.23 However, 1 to 3 minutes may still be too long in busy clinical environments, and briefer (<30 seconds) delirium assessments may be needed.

One potential more-rapid method to screen for delirium is to specifically test for the presence of inattention, which is a cardinal feature of delirium.24,25 Inattention can be ascertained by having the patient recite the months backwards, recite the days of the week backwards, or spell a word backwards.26 Recent studies have evaluated the diagnostic accuracy of reciting the months of the year backwards for delirium. O’Regan et al.27 evaluated the diagnostic accuracy of the month of the year backwards from December to July (MOTYB-6) and observed that this task was 84% sensitive and 90% specific for delirium in older patients. However, they performed the reference standard delirium assessments in patients who had a positive MOTYB-6, which can overestimate sensitivity and underestimate specificity (verification bias).28 Fick et al.29 examined the diagnostic accuracy of 20 individual elements of the 3D-CAM and observed that reciting the months of the year backwards from December to January (MOTYB-12) was 83% sensitive and 69% specific for delirium. However, this was an exploratory study that was designed to identify an element of the 3D-CAM that had the best diagnostic accuracy.

To address these limitations, we sought to evaluate the diagnostic performance of the MOTYB-6 and MOTYB-12 for delirium as diagnosed by a reference standard. We also explored other brief tests of inattention such as spelling a word (“LUNCH”) backwards, reciting the days of the week backwards, 10-letter vigilance “A” task, and 5 picture recognition task.

METHODS

Study Design and Setting

This was a preplanned secondary analysis of a prospective observational study that validated 3 delirium assessments.30,31 This study was conducted at a tertiary care, academic ED. The local institutional review board (IRB) reviewed and approved this study. Informed consent from the patient or an authorized surrogate was obtained whenever possible. Because this was an observational study and posed minimal risk to the patient, the IRB granted a waiver of consent for patients who were both unable to provide consent and were without an authorized surrogate available in the ED or by phone.

Selection of Participants

We enrolled a convenience sample of patients between June 2010 and February 2012 Monday through Friday from 8

Research assistants approached patients who met inclusion criteria and determined if any exclusion criteria were present. If none of the exclusion criteria were present, then the research assistant reviewed the informed consent document with the patient or authorized surrogate if the patient was not capable of providing consent. If a patient was not capable of providing consent and no authorized surrogate was available, then the patient was enrolled (under the waiver of consent) as long as the patient assented to be a part of the study. Once the patient was enrolled, the research assistant contacted the physician rater and reference standard psychiatrists to approach the patient.

Measures of Inattention

An emergency physician (JHH) who had no formal training in the mental status assessment of elders administered a cognitive battery to the patient, including tests of inattention. The following inattention tasks were administered:

- Spell the word “LUNCH” backwards.30 Patients were initially allowed to spell the word “LUNCH” forwards. Patients who were unable to perform the task were assigned 5 errors.

- Recite the months of the year backwards from December to July.23,26,27,30,32 Patients who were unable to perform the task were assigned 6 errors.

- Recite the days of the week backwards.23,26,33 Patients who were unable to perform the task were assigned 7 errors.

- Ten-letter vigilance “A” task.34 The patient was given a series of 10 letters (“S-A-V-E-A-H-A-A-R-T”) every 3 seconds and was asked to squeeze the rater’s hand every time the patient heard the letter “A.” Patients who were unable to perform the task were assigned 10 errors.

- Five picture recognition task.34 Patients were shown 5 objects on picture cards. Afterwards, patients were shown 10 pictures with the previously shown objects intermingled. The patient had to identify which objects were seen previously in the first 5 pictures. Patients who were unable to perform the task were assigned 10 errors.

- Recite the months of the year backwards from December to January.29 Patients who were unable to perform the task were assigned 12 errors.

Reference Standard for Delirium

A comprehensive consultation-liaison psychiatrist assessment was the reference standard for delirium; the diagnosis of delirium was based on Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition, Text Revision (DSM-IV-TR) criteria.35 Three psychiatrists who each had an average of 11 years of clinical experience and regularly diagnosed delirium as part of their daily clinical practice were available to perform these assessments. To arrive at the diagnosis of delirium, they interviewed those who best understood the patient’s mental status (eg, the patient’s family members or caregivers, physician, and nurses). They also reviewed the patient’s medical record and radiology and laboratory test results. They performed bedside cognitive testing that included, but was not limited to, the Mini-Mental State Examination, Clock Drawing Test, Luria hand sequencing task, and tests for verbal fluency. A focused neurological examination was also performed (ie, screening for paraphasic errors, tremors, tone, asterixis, frontal release signs, etc.), and they also evaluated the patient for affective lability, hallucinations, and level of alertness. If the presence of delirium was still questionable, then confrontational naming, proverb interpretation or similarities, and assessments for apraxias were performed at the discretion of the psychiatrist. The psychiatrists were blinded to the physician’s assessments, and the assessments were conducted within 3 hours of each other.

Additional Variables Collected

Using medical record review, comorbidity burden, severity of illness, and premorbid cognition were ascertained. The Charlson Comorbidity Index, a weighted index that takes into account the number and seriousness of 19 preexisting comorbid conditions, was used to quantify comorbidity burden; higher scores indicate higher comorbid burden.36,37 The Acute Physiology Score of the Acute Physiology and Chronic Health Evaluation II was used to quantify severity of illness.38 This score is based upon the initial values of 12 routine physiologic measurements such as vital sign and laboratory abnormalities; higher scores represent higher severities of illness.38 The medical record was reviewed to ascertain the presence of premorbid cognitive impairment; any documentation of dementia in the patient’s clinical problem list or physician history and physical examination from the outpatient or inpatient settings was considered positive. The medical record review was performed by a research assistant and was double-checked for accuracy by one of the investigators (JHH).

Data Analyses

Measures of central tendency and dispersion for continuous variables were reported as medians and interquartile ranges. Categorical variables were reported as proportions. Receiver operating characteristic curves were constructed for each inattention task. Area under the receiver operating characteristic curves (AUC) was reported to provide a global measure of diagnostic accuracy. Sensitivities, specificities, positive likelihood ratios (PLRs), and negative likelihood ratios (NLRs) with their 95% CIs were calculated using the psychiatrist’s assessment as the reference standard.39 Cut-points with PLRs greater than 10 (strongly increased the likelihood of delirium) or NLRs less than 0.1 (strongly decreased the likelihood of delirium) were preferentially reported whenever possible.

All statistical analyses were performed with open source R statistical software version 3.0.1 (http://www.r-project.org/), SAS 9.4 (SAS Institute, Cary, NC), and Microsoft Excel 2010 (Microsoft Inc., Redmond, WA).

RESULTS

DISCUSSION

Delirium is frequently missed by healthcare providers because it is not routinely screened for in the acute care setting. To help address this deficiency of care, we evaluated several brief measures of inattention that take less than 30 seconds to complete. We observed that any errors made on the MOTYB-6 and MOTYB-12 tasks had very good sensitivities (80% and 84%) but were limited by their modest specificities (approximately 50%) for delirium. As a result, these assessments have limited clinical utility as standalone delirium screens. We also explored other commonly used brief measures of inattention and at a variety of error cutoffs. Reciting the days of the week backwards appeared to best balance sensitivity and specificity. None of the inattention measures could convincingly rule out delirium (NLR < 0.10), but the vigilance “A” and picture recognition tasks may have clinical utility in ruling in delirium (PLR > 10). Overall, all the inattention tasks, including MOTYB-6 and MOTYB-12, had very good diagnostic performances based upon their AUC. However, achieving a high sensitivity often had to be sacrificed for specificity or, alternatively, achieving a high specificity had to be sacrificed for sensitivity.

Inattention has been shown to be the cardinal feature for delirium,40 and its assessment using cognitive testing has been recommended to help identify the presence of delirium according to an expert consensus panel.26 The diagnostic performance of the MOTYB-12 observed in our study is similar to a study by Fick et al., who reported that MOTYB-12 had very good sensitivity (83%) but had modest specificity (69%) with a cutoff of 1 or more errors. Hendry et al. observed that the MOTYB-12 was 91% sensitive and 50% specific using a cutoff of 4 or more errors. With regard to the MOTYB-6, our reported specificity was different from what was observed by O’Regan et al.27 Using 1 or more errors as a cutoff, they observed a much higher specificity for delirium than we did (90% vs 57%). Discordant observations regarding the diagnostic accuracy for other inattention tasks also exist. We observed that making any error on the days of the week backwards task was 84% sensitive and 82% specific for delirium, whereas Fick et al. observed a sensitivity and specificity of 50% and 94%, respectively. For the vigilance “A” task, we observed that making 2 or more errors over a series of 10 letters was 64.0% sensitive and 91.4% specific for delirium, whereas Pompei et al.41 observed that making 2 or more errors over a series of 60 letters was 51% sensitive and 77% specific for delirium.

The abovementioned discordant findings may be driven by spectrum bias, wherein the sensitivities and specificities for each inattention task may differ in different subgroups. As a result, differences in the age distribution, proportion of college graduates, history of dementia, and susceptibility to delirium can influence overall sensitivity and specificity. Objective measures of delirium, including the inattention screens studied, are particularly prone to spectrum bias.31,34 However, the strength of this approach is that the assessment of inattention becomes less reliant upon clinical judgment and allows it to be used by raters from a wide range of clinical backgrounds. On the other hand, a subjective interpretation of these inattention tasks may allow the rater to capture the subtleties of inattention (ie, decreased speed of performance in a highly intelligent and well-educated patient without dementia). The disadvantage of this approach, however, is that it is more dependent on clinical judgment and may have decreased diagnostic accuracy in those with less clinical experience or with limited training.14,42,43 These factors must be carefully considered when determining which delirium assessment to use.

Additional research is required to determine the clinical utility of these brief inattention assessments. These findings need to be further validated in larger studies, and the optimal cutoff of each task for different subgroup of patients (eg, demented vs nondemented) needs to be further clarified. It is not completely clear whether these inattention tests can serve as standalone assessments. Depending on the cutoff used, some of these assessments may have unacceptable false negative or false positive rates that may lead to increased adverse patient outcomes or increased resource utilization, respectively. Additional components or assessments may be needed to improve the diagnostic accuracy of these assessments. In addition to understanding these inattention assessments’ diagnostic accuracies, their ability to predict adverse outcomes also needs to be investigated. While a previous study observed that making any error on the MOTYB-12 task was associated with increased physical restraint use and prolonged hospital length of stay,44 these assessments’ ability to prognosticate long-term outcomes such as mortality or long-term cognition or function need to be studied. Lastly, studies should also evaluate how easily implementable these assessments are and whether improved delirium recognition leads to improved patient outcomes.

This study has several notable limitations. Though planned a priori, this was a secondary analysis of a larger investigation designed to validate 3 delirium assessments. Our sample size was also relatively small, causing our 95% CIs to overlap in most cases and limiting the statistical power to truly determine whether one measure is better than the other. We also asked the patient to recite the months backwards from December to July as well as recite the months backwards from December to January. It is possible that the patient may have performed better at going from December to January because of learning effect. Our reference standard for delirium was based upon DSM-IV-TR criteria. The new DSM-V criteria may be more restrictive and may slightly change the sensitivities and specificities of the inattention tasks. We enrolled a convenience sample and enrolled patients who were more likely to be male, have cardiovascular chief complaints, and be admitted to the hospital; as a result, selection bias may have been introduced. Lastly, this study was conducted in a single center and enrolled patients who were 65 years and older. Our findings may not be generalizable to other settings and in those who are less than 65 years of age.

CONCLUSIONS

The MOTYB-6 and MOTYB-12 tasks had very good sensitivities but modest specificities (approximately 50%) using any error made as a cutoff; increasing the cutoff to 2 errors and 3 errors, respectively, improved their specificities (approximately 70%) with minimal impact on their sensitivities. Reciting the days of the week backwards, spelling the word “LUNCH” backwards, and the 10-letter vigilance “A” task appeared to perform the best in ruling out delirium but only moderately decreased the likelihood of delirium. The 10-letter Vigilance “A” and picture recognition task

Disclosure

This study was funded by the Emergency Medicine Foundation Career Development Award, National Institutes of Health K23AG032355, and National Center for Research Resources, Grant UL1 RR024975-01. The authors report no financial conflicts of interest.

To address these limitations, we sought to evaluate the diagnostic performance of the MOTYB-6 and MOTYB-12 for delirium as diagnosed by a reference standard. We also explored other brief tests of inattention such as spelling a word (“LUNCH”) backwards, reciting the days of the week backwards, the 10-letter vigilance “A” task, and the 5-picture recognition task.

METHODS

Study Design and Setting

This was a preplanned secondary analysis of a prospective observational study that validated 3 delirium assessments.30,31 This study was conducted at a tertiary care, academic ED. The local institutional review board (IRB) reviewed and approved this study. Informed consent from the patient or an authorized surrogate was obtained whenever possible. Because this was an observational study and posed minimal risk to the patient, the IRB granted a waiver of consent for patients who were both unable to provide consent and were without an authorized surrogate available in the ED or by phone.

Selection of Participants

We enrolled a convenience sample of patients between June 2010 and February 2012 Monday through Friday from 8

Research assistants approached patients who met inclusion criteria and determined if any exclusion criteria were present. If none of the exclusion criteria were present, then the research assistant reviewed the informed consent document with the patient or authorized surrogate if the patient was not capable of providing consent. If a patient was not capable of providing consent and no authorized surrogate was available, then the patient was enrolled (under the waiver of consent) as long as the patient assented to be a part of the study. Once the patient was enrolled, the research assistant contacted the physician rater and reference standard psychiatrists to approach the patient.

Measures of Inattention

An emergency physician (JHH) who had no formal training in the mental status assessment of elders administered a cognitive battery to the patient, including tests of inattention. The following inattention tasks were administered:

- Spell the word “LUNCH” backwards.30 Patients were initially allowed to spell the word “LUNCH” forwards. Patients who were unable to perform the task were assigned 5 errors.

- Recite the months of the year backwards from December to July.23,26,27,30,32 Patients who were unable to perform the task were assigned 6 errors.

- Recite the days of the week backwards.23,26,33 Patients who were unable to perform the task were assigned 7 errors.

- Ten-letter vigilance “A” task.34 The patient was given a series of 10 letters (“S-A-V-E-A-H-A-A-R-T”) every 3 seconds and was asked to squeeze the rater’s hand every time the patient heard the letter “A.” Patients who were unable to perform the task were assigned 10 errors.

- Five picture recognition task.34 Patients were shown 5 objects on picture cards. Afterwards, patients were shown 10 pictures with the previously shown objects intermingled. The patient had to identify which objects were seen previously in the first 5 pictures. Patients who were unable to perform the task were assigned 10 errors.

- Recite the months of the year backwards from December to January.29 Patients who were unable to perform the task were assigned 12 errors.
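The scoring rules in the list above can be sketched in code. This is a minimal illustration, not the study's instrument: the task keys, function names, and the rule for counting vigilance “A” errors (a missed squeeze on “A” or a squeeze on any other letter each counting as one error) are assumptions made for this sketch; only the maximum error counts and the letter series come from the text.

```python
# Maximum errors per task, as listed above. A patient who is unable to
# perform a task is assigned the maximum. The keys are hypothetical labels.
MAX_ERRORS = {
    "lunch_backwards": 5,
    "motyb_6": 6,
    "days_backwards": 7,
    "vigilance_a": 10,
    "picture_recognition": 10,
    "motyb_12": 12,
}

VIGILANCE_LETTERS = "SAVEAHAART"  # the 10-letter series given in the text


def vigilance_a_errors(squeezes):
    """Count errors on the vigilance "A" task.

    `squeezes` holds one boolean per letter (True = hand squeezed).
    Assumed rule: a missed squeeze on "A" and a squeeze on any other
    letter each count as one error.
    """
    if len(squeezes) != len(VIGILANCE_LETTERS):
        raise ValueError("expected one response per letter")
    return sum(
        bool(squeezed) != (letter == "A")
        for letter, squeezed in zip(VIGILANCE_LETTERS, squeezes)
    )


def task_errors(task, errors=None):
    """Return the error count, or the task maximum when `errors` is None
    (patient unable to perform the task)."""
    maximum = MAX_ERRORS[task]
    if errors is None:
        return maximum
    if not 0 <= errors <= maximum:
        raise ValueError(f"{task}: errors must be between 0 and {maximum}")
    return errors


def screen_positive(task, errors, cutoff):
    """A screen is positive when the error count meets or exceeds the cutoff."""
    return task_errors(task, errors) >= cutoff
```

For example, `screen_positive("motyb_12", None, 3)` treats a patient who could not attempt the task as having made 12 errors, so the screen is positive at any cutoff.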

Reference Standard for Delirium

A comprehensive consultation-liaison psychiatrist assessment was the reference standard for delirium; the diagnosis of delirium was based on Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition, Text Revision (DSM-IV-TR) criteria.35 Three psychiatrists who each had an average of 11 years of clinical experience and regularly diagnosed delirium as part of their daily clinical practice were available to perform these assessments. To arrive at the diagnosis of delirium, they interviewed those who best understood the patient’s mental status (eg, the patient’s family members or caregivers, physician, and nurses). They also reviewed the patient’s medical record and radiology and laboratory test results. They performed bedside cognitive testing that included, but was not limited to, the Mini-Mental State Examination, Clock Drawing Test, Luria hand sequencing task, and tests for verbal fluency. A focused neurological examination was also performed (eg, screening for paraphasic errors, tremors, tone, asterixis, and frontal release signs), and they also evaluated the patient for affective lability, hallucinations, and level of alertness. If the presence of delirium was still questionable, then confrontational naming, proverb interpretation or similarities, and assessments for apraxias were performed at the discretion of the psychiatrist. The psychiatrists were blinded to the physician’s assessments, and the assessments were conducted within 3 hours of each other.

Additional Variables Collected

Using medical record review, comorbidity burden, severity of illness, and premorbid cognition were ascertained. The Charlson Comorbidity Index, a weighted index that takes into account the number and seriousness of 19 preexisting comorbid conditions, was used to quantify comorbidity burden; higher scores indicate higher comorbid burden.36,37 The Acute Physiology Score of the Acute Physiology and Chronic Health Evaluation II was used to quantify severity of illness.38 This score is based upon the initial values of 12 routine physiologic measurements such as vital sign and laboratory abnormalities; higher scores represent higher severities of illness.38 The medical record was reviewed to ascertain the presence of premorbid cognitive impairment; any documentation of dementia in the patient’s clinical problem list or physician history and physical examination from the outpatient or inpatient settings was considered positive. The medical record review was performed by a research assistant and was double-checked for accuracy by one of the investigators (JHH).

Data Analyses

Measures of central tendency and dispersion for continuous variables were reported as medians and interquartile ranges. Categorical variables were reported as proportions. Receiver operating characteristic curves were constructed for each inattention task. Area under the receiver operating characteristic curves (AUC) was reported to provide a global measure of diagnostic accuracy. Sensitivities, specificities, positive likelihood ratios (PLRs), and negative likelihood ratios (NLRs) with their 95% CIs were calculated using the psychiatrist’s assessment as the reference standard.39 Cut-points with PLRs greater than 10 (strongly increased the likelihood of delirium) or NLRs less than 0.1 (strongly decreased the likelihood of delirium) were preferentially reported whenever possible.
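As an illustration of how these measures relate, the following sketch derives sensitivity, specificity, PLR, and NLR from a 2 × 2 table at a chosen error cutoff. It is not the authors' analysis code (the study used R, SAS, and Excel), the function names are hypothetical, the example data are invented, and confidence intervals are omitted.

```python
import math


def two_by_two(errors, delirium, cutoff):
    """Cross-tabulate a screen (errors >= cutoff) against the reference standard.

    Returns (tp, fp, fn, tn).
    """
    tp = fp = fn = tn = 0
    for n_errors, has_delirium in zip(errors, delirium):
        positive = n_errors >= cutoff
        if positive and has_delirium:
            tp += 1
        elif positive:
            fp += 1
        elif has_delirium:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def diagnostic_accuracy(tp, fp, fn, tn):
    """Sensitivity, specificity, and likelihood ratios from 2 x 2 counts."""
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one patient with and one without delirium")
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    # PLR = sensitivity / (1 - specificity); NLR = (1 - sensitivity) / specificity.
    plr = math.inf if fp == 0 else sensitivity / (1 - specificity)
    nlr = math.inf if tn == 0 else (1 - sensitivity) / specificity
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "plr": plr,
        "nlr": nlr,
    }


# Invented example data: error counts and reference standard diagnoses.
example_errors = [0, 1, 3, 6, 0, 2, 4, 0]
example_delirium = [False, False, True, True, False, True, False, True]
example_result = diagnostic_accuracy(
    *two_by_two(example_errors, example_delirium, cutoff=2)
)
```

Repeating the calculation over every possible cutoff gives the points of the receiver operating characteristic curve for a task.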

All statistical analyses were performed with open source R statistical software version 3.0.1 (http://www.r-project.org/), SAS 9.4 (SAS Institute, Cary, NC), and Microsoft Excel 2010 (Microsoft Inc., Redmond, WA).

RESULTS

DISCUSSION

Delirium is frequently missed by healthcare providers because it is not routinely screened for in the acute care setting. To help address this deficiency of care, we evaluated several brief measures of inattention that take less than 30 seconds to complete. We observed that any errors made on the MOTYB-6 and MOTYB-12 tasks had very good sensitivities (80% and 84%) but were limited by their modest specificities (approximately 50%) for delirium. As a result, these assessments have limited clinical utility as standalone delirium screens. We also explored other commonly used brief measures of inattention at a variety of error cutoffs. Reciting the days of the week backwards appeared to best balance sensitivity and specificity. None of the inattention measures could convincingly rule out delirium (NLR < 0.10), but the vigilance “A” and picture recognition tasks may have clinical utility in ruling in delirium (PLR > 10). Overall, all the inattention tasks, including MOTYB-6 and MOTYB-12, had very good diagnostic performances based upon their AUC. However, high sensitivity often came at the cost of specificity and, conversely, high specificity came at the cost of sensitivity.

Inattention has been shown to be the cardinal feature for delirium,40 and its assessment using cognitive testing has been recommended to help identify the presence of delirium according to an expert consensus panel.26 The diagnostic performance of the MOTYB-12 observed in our study is similar to a study by Fick et al., who reported that MOTYB-12 had very good sensitivity (83%) but had modest specificity (69%) with a cutoff of 1 or more errors. Hendry et al. observed that the MOTYB-12 was 91% sensitive and 50% specific using a cutoff of 4 or more errors. With regard to the MOTYB-6, our reported specificity was different from what was observed by O’Regan et al.27 Using 1 or more errors as a cutoff, they observed a much higher specificity for delirium than we did (90% vs 57%). Discordant observations regarding the diagnostic accuracy for other inattention tasks also exist. We observed that making any error on the days of the week backwards task was 84% sensitive and 82% specific for delirium, whereas Fick et al. observed a sensitivity and specificity of 50% and 94%, respectively. For the vigilance “A” task, we observed that making 2 or more errors over a series of 10 letters was 64.0% sensitive and 91.4% specific for delirium, whereas Pompei et al.41 observed that making 2 or more errors over a series of 60 letters was 51% sensitive and 77% specific for delirium.
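To make the clinical meaning of these figures concrete, the sketch below converts a reported sensitivity and specificity into likelihood ratios and then into post-test probabilities. The 84% and 82% values are those reported above for any error on the days of the week backwards task; the 10% pretest probability is an assumption chosen only for illustration, and the function names are hypothetical.

```python
def likelihood_ratios(sensitivity, specificity):
    """Return (PLR, NLR) for a test with the given sensitivity and specificity."""
    plr = sensitivity / (1 - specificity)
    nlr = (1 - sensitivity) / specificity
    return plr, nlr


def post_test_probability(pretest_probability, likelihood_ratio):
    """Apply a likelihood ratio to a pretest probability via odds."""
    pretest_odds = pretest_probability / (1 - pretest_probability)
    post_test_odds = pretest_odds * likelihood_ratio
    return post_test_odds / (1 + post_test_odds)


# Reported in this study for any error on the days of the week backwards task.
plr, nlr = likelihood_ratios(sensitivity=0.84, specificity=0.82)

# Assumed pretest probability of delirium (illustrative only).
pretest = 0.10
probability_if_positive = post_test_probability(pretest, plr)
probability_if_negative = post_test_probability(pretest, nlr)
```

Under these assumptions the PLR is about 4.7 and the NLR about 0.20, so a positive result raises the probability of delirium from 10% to roughly 34% and a negative result lowers it to roughly 2%. Neither ratio reaches the PLR > 10 or NLR < 0.1 thresholds used in this study.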

The abovementioned discordant findings may be driven by spectrum bias, wherein the sensitivities and specificities for each inattention task may differ in different subgroups. As a result, differences in the age distribution, proportion of college graduates, history of dementia, and susceptibility to delirium can influence overall sensitivity and specificity. Objective measures of delirium, including the inattention screens studied, are particularly prone to spectrum bias.31,34 However, the strength of this approach is that the assessment of inattention becomes less reliant upon clinical judgment and allows it to be used by raters from a wide range of clinical backgrounds. On the other hand, a subjective interpretation of these inattention tasks may allow the rater to capture the subtleties of inattention (eg, decreased speed of performance in a highly intelligent and well-educated patient without dementia). The disadvantage of this approach, however, is that it is more dependent on clinical judgment and may have decreased diagnostic accuracy in those with less clinical experience or with limited training.14,42,43 These factors must be carefully considered when determining which delirium assessment to use.

1. American Psychiatric Association. Diagnostic and statistical manual of mental disorders: DSM-5. Washington, DC: American Psychiatric Association; 2013.

33. Hamrick I, Hafiz R, Cummings DM. Use of days of the week in a modified mini-mental state exam (M-MMSE) for detecting geriatric cognitive impairment. J Am Board Fam Med. 2013;26(4):429-435.

© 2018 Society of Hospital Medicine

Gabapentin Use in Acute Alcohol Withdrawal Management

The prevalence of alcohol dependence in the U.S. represents a significant public health concern. Alcohol use disorder (AUD) is estimated to affect 6.7% of Americans and is the fourth leading preventable cause of death.1 Men and women who have served in the military are at an even higher risk of excessive alcohol use. More than 20% of service members report binge drinking every week.2 This risk is further exacerbated in veterans who have experienced active combat or who have comorbid health conditions, such as posttraumatic stress disorder.3

Background

Individuals who regularly consume excessive amounts of alcohol can develop acute alcohol withdrawal syndrome (AWS) after abrupt discontinuation or significant reduction of alcohol intake. Patients admitted for acute alcohol withdrawal may experience complicated courses of treatment and extended lengths of hospitalization.4,5 Cessation of chronic alcohol intake elicits a pathophysiologic response from increased N-methyl-d-aspartate receptor activity and decreased γ-aminobutyric acid (GABA) receptor function.

Autonomic and psychomotor hyperactivity disturbances, such as anxiety, nausea, tremors, diaphoresis, and tachycardia, may occur as early as 6 to 8 hours after cessation of use. Within 48 to 72 hours of alcohol cessation, patients may be at an increased risk of experiencing tonic-clonic seizures, visual and auditory hallucinations, and delirium tremens (DTs), which may be accompanied by signs of extreme autonomic hyperactivity and agitation.6 Patients hospitalized in acute settings for acute alcohol withdrawal require frequent medical supervision, especially those at high risk for seizure or DTs, because morbidity and mortality risk is increased.7

Benzodiazepines remain the standard of care for management of moderate-to-severe symptoms of AWS. Strong evidence supports the use of benzodiazepines to reduce withdrawal severity, incidence of delirium, and seizures in AWS by enhancing GABA activity.8 However, the adverse effect (AE) burden associated with benzodiazepines can be a major limitation throughout care. Benzodiazepines also may be limited in their use in select patient populations, such as in older adults or patients who present with hepatic dysfunction, due to the risk of increased AEs or metabolite accumulation.6 A high benzodiazepine dosing requirement for symptom management can lead to oversedation to the point of requiring intubation, which increases length of stay in the intensive care unit (ICU) and the risk of nosocomial infections.9

Anticonvulsants, such as carbamazepine, valproic acid, and gabapentin, have been shown to be superior to placebo and equal in efficacy to benzodiazepines for symptom management in mild-to-moderate alcohol withdrawal in both inpatient and outpatient settings.6-8 However, these agents are not recommended as first-line monotherapy due to the limited number of randomized trials supporting their efficacy over benzodiazepines in preventing severe symptoms of withdrawal, such as seizures or delirium.10-12 Nonetheless, the mechanism of action of anticonvulsants may help raise seizure threshold in patients and provide a benzodiazepine-sparing effect by enhancing GABAergic activity and lowering neuronal excitability.13

Gabapentin is an attractive agent for clinical use because its anxiolytic and sedative properties can potentially target symptoms analogous to those of AWS when the use of benzodiazepines becomes a safety concern. Although similar in chemical structure to GABA, gabapentin is not metabolized to GABA and does not directly interact with the receptor. Gabapentin may increase GABA concentrations by direct synthesis of GABA and indirectly through interaction with voltage-gated calcium channels.13 In addition to its overall safety profile, gabapentin may be a viable adjuvant because emerging data suggest a potential role in the management of acute alcohol withdrawal.12,14,15

Gabapentin for Alcohol Withdrawal at VAPORHCS

Although not currently included in the alcohol withdrawal protocol at Veterans Affairs Portland Health Care System (VAPORHCS), gabapentin has been added to the standard of care in select patients per the discretion of the attending physician. Anecdotal reports of patients experiencing milder symptoms and less benzodiazepine administration have facilitated use of gabapentin in alcohol withdrawal management at VAPORHCS. However, routine use of gabapentin is not consistent among all patients treated for acute alcohol withdrawal, and dosing schedules of gabapentin seem highly variable. Standard symptom management for acute alcohol withdrawal should be consistent for all affected individuals, using evidence-based medicine in order to achieve optimal outcomes and improve harm reduction.

The objective of this quality assurance/quality improvement (QA/QI) project was to assess the amount of lorazepam required for symptom management in acute alcohol withdrawal when gabapentin is used as an adjunct to treatment and to evaluate the impact on symptom management using the Clinical Institute Withdrawal Assessment for Alcohol scale, revised version (CIWA-Ar) in patients admitted to the ICU and general medicine wards for acute alcohol withdrawal at VAPORHCS.16 If a possible adjunct for the treatment of alcohol withdrawal has the potential to reduce benzodiazepine requirements and minimize AEs, a thorough evaluation of the treatment should be conducted before its practice is incorporated into the current standard of care.

Methods

The following QA/QI project was approved locally by the VAPORHCS associate chief of staff/Office of Research and Development and is considered to be nonresearch VHA operations activity and exempt from an institutional review board committee review. This project was a single-center, retrospective chart review of patients admitted to the ICU and general medicine wards at VAPORHCS with acute alcohol withdrawal. The CIWA-Ar protocol order sets between January 1, 2014 and December 31, 2015, were retrieved through the Computerized Patient Record System (CPRS) at VAPORHCS.

Patients with an alcohol withdrawal protocol order set who received gabapentin with or without lorazepam during hospitalization were identified for chart review. Patients were eligible for review if they were aged ≥ 18 years with a primary or secondary diagnosis of acute alcohol withdrawal and had a CIWA-Ar protocol order set placed during hospitalization. Patients must have been administered gabapentin, lorazepam, or both while the CIWA-Ar protocol was active. Patients with an active outpatient prescription for gabapentin or benzodiazepine filled within the previous 30 days, documented history of psychosis or epileptic seizure disorder, or other concomitant benzodiazepines or antiepileptics administered while on the CIWA-Ar protocol were excluded from the analysis.
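The eligibility rules described above can be expressed as a simple filter. This is a sketch under stated assumptions rather than the project's actual chart review procedure: the `Admission` record and all of its field names are hypothetical and do not correspond to CPRS data fields.

```python
from dataclasses import dataclass


@dataclass
class Admission:
    """Hypothetical chart review record; field names are illustrative only."""
    age: int
    alcohol_withdrawal_diagnosis: bool  # primary or secondary diagnosis
    ciwa_ar_order_set: bool             # CIWA-Ar protocol order set placed
    received_gabapentin: bool           # while the protocol was active
    received_lorazepam: bool            # while the protocol was active
    outpatient_gabapentin_or_benzodiazepine_30d: bool = False
    psychosis_or_epilepsy_history: bool = False
    other_benzodiazepine_or_antiepileptic_on_protocol: bool = False


def is_eligible(admission):
    """Apply the inclusion criteria, then the exclusion criteria."""
    included = (
        admission.age >= 18
        and admission.alcohol_withdrawal_diagnosis
        and admission.ciwa_ar_order_set
        and (admission.received_gabapentin or admission.received_lorazepam)
    )
    excluded = (
        admission.outpatient_gabapentin_or_benzodiazepine_30d
        or admission.psychosis_or_epilepsy_history
        or admission.other_benzodiazepine_or_antiepileptic_on_protocol
    )
    return included and not excluded
```

Keeping inclusion and exclusion as separate expressions mirrors how the criteria are stated and makes each one easy to audit against the chart.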

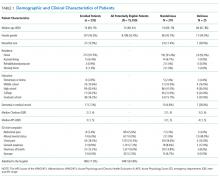

Baseline characteristics for patients eligible for review were collected and included age; sex; race; body mass index (BMI); estimated creatinine clearance (CrCl); toxicology screen at admission (if available); history of substance use disorder, AWS, or withdrawal seizures; and history of a sedative-hypnotic (not including benzodiazepines) prescription within 30 days prior to admission.17

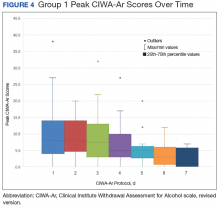

The primary endpoint was the total amount of lorazepam administered from the time of admission to the time of discontinuation of the alcohol withdrawal protocol. The dose, frequency, and amount of lorazepam and gabapentin administered daily were collected for each patient while on the CIWA-Ar protocol. Secondary endpoints included rate of the CIWA-Ar score reduction, time to protocol discontinuation, as well as incidence and onset of peak delirium scores during hospitalization. Cumulative CIWA-Ar scores over 24 hours were averaged per patient per day while on CIWA-Ar protocol. Peak CIWA-Ar scores per patient per day on the protocol also were collected. Time to protocol termination was determined by date of order for discontinuation or by date when scoring had ceased and protocol order was inadvertently continued. Peak Intensive Care Delirium Screening Checklist (ICDSC) scores were collected for patients admitted to the ICU.18 Day of peak ICDSC scores also were evaluated.
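A minimal sketch of how the per-patient, per-day summaries described above could be computed from raw chart data follows. The input layout (tuples of patient identifier, protocol day, and value), the function names, and the example values are assumptions made for illustration; they are not taken from the project's data.

```python
from collections import defaultdict
from statistics import mean


def daily_ciwa_summary(scores):
    """Average and peak CIWA-Ar score per patient per protocol day.

    `scores` is an iterable of (patient_id, protocol_day, ciwa_ar_score).
    """
    grouped = defaultdict(list)
    for patient_id, day, score in scores:
        grouped[(patient_id, day)].append(score)
    return {
        key: {"mean": mean(values), "peak": max(values)}
        for key, values in grouped.items()
    }


def total_lorazepam_mg(doses):
    """Total lorazepam (mg) per patient while on the protocol.

    `doses` is an iterable of (patient_id, dose_mg).
    """
    totals = defaultdict(float)
    for patient_id, dose_mg in doses:
        totals[patient_id] += dose_mg
    return dict(totals)


# Invented example values.
example_scores = [("p1", 1, 12), ("p1", 1, 8), ("p1", 2, 4), ("p2", 1, 20)]
example_doses = [("p1", 2.0), ("p1", 1.0), ("p2", 4.0)]
ciwa_summary = daily_ciwa_summary(example_scores)
lorazepam_totals = total_lorazepam_mg(example_doses)
```

Grouping by the (patient, day) pair keeps the daily mean and the daily peak in one pass, which matches the two CIWA-Ar measures collected for each patient.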

Statistical Analysis

The sample size for this analysis was determined by the number of patients identified who met the inclusion criteria and did not meet any of the exclusion criteria. Power was not calculated to estimate sample size needed to determine statistical significance. One hundred patients treated for alcohol withdrawal was established as the target sample size for this project. Descriptive statistics were performed to analyze patient baseline characteristics and primary and secondary objective data.

Results

A total of 1,611 CIWA-Ar protocol orders were identified between January 1, 2014 and December 31, 2015.

Primary Endpoint

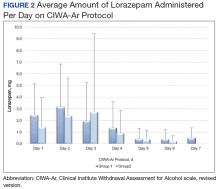

The average amount of lorazepam administered for the total duration on CIWA-Ar protocol was 7.9 mg (median 6, ± 8.2) among

Secondary Endpoints

On average, the total number of days spent on CIWA-Ar protocol was 3.8 (median 4, ± 1.5) in group 1 compared with 4.1 (median 4, ± 1.6) in group 2. Rate of CIWA-Ar protocol discontinuation for patients in group 1 and group 2 is shown in Figure 3.

Discussion

The purpose of this project was to evaluate gabapentin use at VAPORHCS for alcohol withdrawal and evaluate the impact on symptom management. Patients who were started on gabapentin at the initiation of the alcohol withdrawal protocol received less lorazepam than patients who received only lorazepam for symptom management for alcohol withdrawal. Except for day 3, average lorazepam dosage per day on the alcohol withdrawal protocol was lower in patients who were also taking gabapentin.

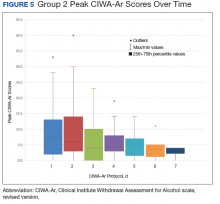

This trend also can be seen in the recorded peak CIWA-Ar scores per day as illustrated in Figures 4 and 5.

Limitations

Prior to evaluation, power analysis was not calculated to estimate an appropriate sample size necessary to determine statistical significance. Results from this evaluation are not definitive and are meant to be hypothesis generating for future analysis.

Several limitations were identified throughout this project. For this review, the history and extent of patients’ prior alcohol use were not assessed. Therefore, the degree of symptom severity that patients may have experienced during withdrawal may not have been adequately matched between groups. The inherent subjectivity of CIWA-Ar scoring was considered a limitation because scores were determined by clinical interpretation among various nursing staff. As this was a retrospective review, exact timing of medications administered as well as additional supportive care measures, such as ancillary medications for symptom management, were not accounted for and controlled between groups.

Patients presenting to the emergency department or from a facility outside of VAPORHCS for acute AWS may have had incomplete documentation of the onset of symptoms on presentation or of the medications administered prior to being admitted, which may have confounded initial CIWA-Ar scoring and total duration required to be on a withdrawal protocol. Some patients may have received benzodiazepines at initial presentation prior to gabapentin initiation, which may have inaccurately reflected the potential of gabapentin to manage symptoms without the need for lorazepam.

Ten patients were identified who received gabapentin on the alcohol withdrawal protocol and did not receive any lorazepam. This retrospective review could not determine whether these patients did not require lorazepam because initiating gabapentin reduced severity or simply because their withdrawal symptoms were not severe enough to warrant the need for lorazepam, regardless of gabapentin use.

Gabapentin dosing was not standardized among patients, ranging from 100 mg to 3,600 mg per day. This wide variation in dose may have influenced the amount of lorazepam needed for symptom management in patients receiving minimal doses or the AEs experienced in patients who received large doses. Initiation and dose selection of gabapentin may have depended on the experience of the provider and familiarity with its use in alcohol withdrawal management. Interestingly, patients with a history of withdrawal seizures (13%) were identified only within the lorazepam-only group. This could suggest that patients with prior symptoms of severe alcohol withdrawal were selected to receive lorazepam only at the discretion of the provider.

Existing literature investigating gabapentin utilization in alcohol withdrawal has demonstrated benefit for patients with mild-to-moderate symptoms in both inpatient and outpatient studies. However, supporting evidence is limited by the differences in design, methods, and comparators within each trial. Leung and colleagues identified 5 studies that utilized gabapentin as monotherapy or in combination with other agents in alcohol withdrawal.13 Three of these studies were performed within an inpatient setting, each differing in trial design, inclusion/exclusion criteria, intervention, and outcomes. Gabapentin dosing strategies were highly variable among studies. Collectively, the differences noted make it difficult to generalize that similar outcomes would result in other patient populations. The purpose of this project was to evaluate gabapentin use at VAPORHCS for alcohol withdrawal and evaluate the impact on symptom management. Future projects could be designed to draw more specific conclusions.

Conclusion

On average, the required benzodiazepine dosage was lower with concomitant use of gabapentin in acute AWS management. The time patients spent on the alcohol withdrawal protocol was not reduced with use of gabapentin. Between-group (ie, history of withdrawal seizures, blood alcohol level) and among-group (ie, gabapentin administration) differences prevent direct correlations from being drawn from this evaluation. Future reviews should include a power analysis to establish an appropriate sample size to determine statistical significance among identified covariates. Further evaluation of the use of gabapentin for withdrawal management is warranted prior to incorporating its routine use in the current standard of care for patients experiencing acute AWS.

Acknowledgments

The authors thank Ryan Bickel, PharmD, BCCCP, Critical Care Clinical Pharmacist; Stephen M. Smith, PhD, Director of Medical Critical Care; Gordon Wong, PharmD, Clinical Applications Coordinator; and Eileen Wilbur, RPh, Research Pharmacy Supervisor.

1. Stahre M, Roeber J, Kanny D, Brewer RD, Zhang X. Contribution of excessive alcohol consumption to deaths and years of potential life lost in the United States. Prev Chronic Dis. 2014;11:E109.

2. National Institute on Drug Abuse. Military. https://www.drugabuse.gov/related-topics/military. Updated April 2016. Accessed January 10, 2018.

3. Bohnert KM, Ilgen MA, Rosen CS, Desai RA, Austin K, Blow FC. The association between substance use disorders and mortality among a cohort of veterans with posttraumatic stress disorder: variation by age cohort and mortality type. Drug Alcohol Depend. 2013;128(1-2):98-103.

4. Foy A, Kay J, Taylor A. The course of alcohol withdrawal in a general hospital. QJM. 1997;90(4):253-261.

5. Carlson RW, Kumar NN, Wong-Mckinstry E, et al. Alcohol withdrawal syndrome. Crit Care Clin. 2012;28(4):549-585.

6. National Institute for Health and Care Excellence. Alcohol use disorders: diagnosis and clinical management of alcohol-related physical complications. https://www.nice.org.uk/guidance/cg100. Published June 2010. Updated April 2017. Accessed January 10, 2018.

7. Sarff MC, Gold JA. Alcohol withdrawal syndromes in the intensive care unit. Crit Care Med. 2010;38(suppl 9):494-501.

8. U.S. Department of Veterans Affairs, U.S. Department of Defense. VA/DoD clinical practice guideline for the management of substance use disorders. https://www.healthquality.va.gov/guidelines/MH/sud/VADODSUDCPGRevised22216.pdf. Published December 2015. Accessed January 10, 2018.

9. Gold JA, Rimal B, Nolan A, Nelson LS. A strategy of escalating doses of benzodiazepines and phenobarbital administration reduces the need for mechanical ventilation in delirium tremens. Crit Care Med. 2007;35(3):724-730.

10. Amato L, Minozzi S, Davoli M. Efficacy and safety of pharmacological interventions for the treatment of the alcohol withdrawal syndrome. Cochrane Database Syst Rev. 2011;(6):CD008537.

11. Ntais C, Pakos E, Kyzas P, Ioannidis JP. Benzodiazepines for alcohol withdrawal. Cochrane Database Syst Rev. 2005;20(3):CD005063.

12. Bonnet U, Hamzavi-Abedi R, Specka M, Wiltfang J, Lieb B, Scherbaum N. An open trial of gabapentin in acute alcohol withdrawal using an oral loading protocol. Alcohol Alcohol. 2010;45(2):143-145.

13. Leung JG, Hall-Flavin D, Nelson S, Schmidt KA, Schak KM. Role of gabapentin in the management of alcohol withdrawal and dependence. Ann Pharmacother. 2015;49(8):897-906.

14. Johnson BA, Swift RM, Addolorato G, Ciraulo DA, Myrick H. Safety and efficacy of GABAergic medications for treating alcoholism. Alcohol Clin Exp Res. 2005;29:248-254.

15. Myrick H, Malcolm R, Randall PK, et al. A double blind trial of gabapentin vs lorazepam in the treatment of alcohol withdrawal. Alcohol Clin Exp Res. 2009;33(9):1582-1588.

16. Sullivan JT, Sykora K, Schneiderman J, Naranjo CA, Sellers EM. Assessment of alcohol withdrawal: the revised clinical institute withdrawal assessment for alcohol scale (CIWA-Ar). Br J Addict. 1989;84(11):1353-1357.

17. Cockcroft DW, Gault MH. Prediction of creatinine clearance from serum creatinine. Nephron. 1976;16(1):31-41.

18. Bergeron N, Dubois MJ, Dumont M, Dial S, Skrobik Y. Intensive care delirium screening checklist: evaluation of a new screening tool. Intensive Care Med. 2001;27(5):859-864.

The prevalence of alcohol dependence in the U.S. represents a significant public health concern. Alcohol use disorder (AUD) is estimated to affect 6.7% of Americans and is the fourth leading preventable cause of death.1 Men and women who have served in the military are at an even higher risk of excessive alcohol use. More than 20% of service members report binge drinking every week.2 This risk is further exacerbated in veterans who have experienced active combat or who have comorbid health conditions, such as posttraumatic stress disorder.3

Background

Individuals who regularly consume excessive amounts of alcohol can develop acute alcohol withdrawal syndrome (AWS) after abrupt discontinuation or significant reduction of alcohol intake. Patients admitted for acute alcohol withdrawal may experience complicated courses of treatment and extended lengths of hospitalization.4,5 Cessation of chronic alcohol intake elicits a pathophysiologic response resulting from increased N-methyl-d-aspartate receptor activity and decreased γ-aminobutyric acid (GABA) receptor function.

Autonomic and psychomotor hyperactivity disturbances, such as anxiety, nausea, tremors, diaphoresis, and tachycardia, may occur as early as 6 to 8 hours after cessation of use. Within 48 to 72 hours of alcohol cessation, patients may be at an increased risk of experiencing tonic-clonic seizures, visual and auditory hallucinations, and delirium tremens (DTs), which may be accompanied by signs of extreme autonomic hyperactivity and agitation.6 Patients hospitalized in acute settings for alcohol withdrawal require frequent medical supervision, especially those at high risk for seizure or DTs, because morbidity and mortality risk is increased.7

Benzodiazepines remain the standard of care for management of moderate-to-severe symptoms of AWS. Strong evidence supports the use of benzodiazepines to reduce withdrawal severity, incidence of delirium, and seizures in AWS by enhancing GABA activity.8 However, the adverse effect (AE) burden associated with benzodiazepines can be a major limitation throughout care. Benzodiazepines also may be limited in their use in select patient populations, such as in older adults or patients who present with hepatic dysfunction due to the risk of increased AEs or metabolite accumulation.6 A high dosing requirement of benzodiazepine for symptom management can lead to oversedation to the point of requiring intubation, increasing length of stay in the intensive care unit (ICU), and the risk of nosocomial infections.9

Anticonvulsants, such as carbamazepine, valproic acid, and gabapentin, have been shown to be superior to placebo and equal in efficacy to benzodiazepines for symptom management in mild-to-moderate alcohol withdrawal in both inpatient and outpatient settings.6-8 However, these agents are not recommended as first-line monotherapy due to the limited number of randomized trials supporting their efficacy over benzodiazepines in preventing severe symptoms of withdrawal, such as seizures or delirium.10-12 Nonetheless, the mechanism of action of anticonvulsants may help raise seizure threshold in patients and provide a benzodiazepine-sparing effect by enhancing GABAergic activity and lowering neuronal excitability.13

Gabapentin is an attractive agent for clinical use because its anxiolytic and sedative properties can potentially target symptoms associated with AWS when the use of benzodiazepines becomes a safety concern. Although similar in chemical structure to GABA, gabapentin is not metabolized to GABA and does not directly interact with the receptor. Gabapentin may increase GABA concentrations by direct synthesis of GABA and indirectly through interaction with voltage-gated calcium channels.13 In addition to its overall safety profile, gabapentin may be a viable adjuvant because emerging data suggest a potential role in the management of acute alcohol withdrawal.12,14,15

Gabapentin for Alcohol Withdrawal at VAPORHCS

Although not currently included in the alcohol withdrawal protocol at Veterans Affairs Portland Health Care System (VAPORHCS), gabapentin has been added to the standard of care in select patients at the discretion of the attending physician. Anecdotal reports of patients experiencing milder symptoms and receiving less benzodiazepine have encouraged use of gabapentin in alcohol withdrawal management at VAPORHCS. However, gabapentin is not used consistently among all patients treated for acute alcohol withdrawal, and dosing schedules of gabapentin seem highly variable. Standard symptom management for acute alcohol withdrawal should be consistent for all affected individuals, using evidence-based medicine to achieve optimal outcomes and reduce harm.

The objective of this quality assurance/quality improvement (QA/QI) project was to assess the amount of lorazepam required for symptom management in acute alcohol withdrawal when gabapentin is used as an adjunct to treatment and to evaluate the impact on symptom management using the Clinical Institute Withdrawal Assessment for Alcohol scale, revised version (CIWA-Ar) in patients admitted to the ICU and general medicine wards for acute alcohol withdrawal at VAPORHCS.16 If a possible adjunct for the treatment of alcohol withdrawal has the potential to reduce benzodiazepine requirements and minimize AEs, a thorough evaluation of the treatment should be conducted before its practice is incorporated into the current standard of care.

Methods

This QA/QI project was approved locally by the VAPORHCS associate chief of staff/Office of Research and Development and is considered to be a nonresearch VHA operations activity exempt from institutional review board review. This project was a single-center, retrospective chart review of patients admitted to the ICU and general medicine wards at VAPORHCS with acute alcohol withdrawal. The CIWA-Ar protocol order sets placed between January 1, 2014, and December 31, 2015, were retrieved through the Computerized Patient Record System (CPRS) at VAPORHCS.

Patients with an alcohol withdrawal protocol order set who received gabapentin with or without lorazepam during hospitalization were identified for chart review. Patients were eligible for review if they were aged ≥ 18 years with a primary or secondary diagnosis of acute alcohol withdrawal and had a CIWA-Ar protocol order set placed during hospitalization. Patients must have been administered gabapentin, lorazepam, or both while the CIWA-Ar protocol was active. Patients with an active outpatient prescription for gabapentin or benzodiazepine filled within the previous 30 days, documented history of psychosis or epileptic seizure disorder, or other concomitant benzodiazepines or antiepileptics administered while on the CIWA-Ar protocol were excluded from the analysis.
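The inclusion and exclusion criteria above can be expressed as a simple screening rule. The following is only an illustrative sketch: the `Admission` record, its field names, and the `is_eligible` function are hypothetical and do not represent how charts were actually screened in CPRS.

```python
from dataclasses import dataclass


@dataclass
class Admission:
    """Hypothetical per-admission record; field names are assumptions."""
    age: int
    has_aws_diagnosis: bool            # primary or secondary diagnosis of acute alcohol withdrawal
    has_ciwa_order_set: bool           # CIWA-Ar protocol order set placed during hospitalization
    received_gabapentin: bool          # administered while the protocol was active
    received_lorazepam: bool           # administered while the protocol was active
    outpatient_gabapentin_or_benzo_30d: bool = False
    history_psychosis_or_epilepsy: bool = False
    other_benzo_or_antiepileptic_on_protocol: bool = False


def is_eligible(a: Admission) -> bool:
    """Apply the stated inclusion criteria, then the stated exclusions."""
    included = (
        a.age >= 18
        and a.has_aws_diagnosis
        and a.has_ciwa_order_set
        and (a.received_gabapentin or a.received_lorazepam)
    )
    excluded = (
        a.outpatient_gabapentin_or_benzo_30d
        or a.history_psychosis_or_epilepsy
        or a.other_benzo_or_antiepileptic_on_protocol
    )
    return included and not excluded
```

Keeping inclusion and exclusion as separate expressions mirrors the wording of the criteria and makes each condition easy to audit against the chart.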

Baseline characteristics for patients eligible for review were collected and included age; sex; race; body mass index (BMI); estimated creatinine clearance (CrCl); toxicology screen at admission (if available); history of substance use disorder, AWS, or withdrawal seizures; and history of a sedative-hypnotic (not including benzodiazepines) prescription within 30 days prior to admission.17
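The CrCl estimate cited here (reference 17) is the Cockcroft-Gault equation. A minimal sketch of that calculation is given below. The function name and example values are illustrative assumptions, and the project does not state whether actual, ideal, or adjusted body weight was used, so the weight input is left to the caller.

```python
def cockcroft_gault_crcl(age_years: float, weight_kg: float,
                         serum_creatinine_mg_dl: float, is_female: bool) -> float:
    """Estimate creatinine clearance (mL/min) with the Cockcroft-Gault equation.

    CrCl = (140 - age) * weight / (72 * SCr), multiplied by 0.85 for women.
    Which body weight to pass in is an assumption left to the caller.
    """
    if serum_creatinine_mg_dl <= 0:
        raise ValueError("serum creatinine must be positive")
    crcl = (140 - age_years) * weight_kg / (72 * serum_creatinine_mg_dl)
    return crcl * 0.85 if is_female else crcl


# Hypothetical example: a 68-year-old man, 72 kg, serum creatinine 1.0 mg/dL
example = cockcroft_gault_crcl(68, 72, 1.0, is_female=False)
```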

The primary endpoint was the total amount of lorazepam administered from the time of admission to the time of discontinuation of the alcohol withdrawal protocol. The dose, frequency, and amount of lorazepam and gabapentin administered daily were collected for each patient while on the CIWA-Ar protocol. Secondary endpoints included rate of CIWA-Ar score reduction, time to protocol discontinuation, and incidence and onset of peak delirium scores during hospitalization. Cumulative CIWA-Ar scores over 24 hours were averaged per patient per day while on the CIWA-Ar protocol. Peak CIWA-Ar scores per patient per day on the protocol also were collected. Time to protocol termination was determined by the date of the discontinuation order or, when the protocol order was inadvertently continued, by the date when scoring had ceased. Peak Intensive Care Delirium Screening Checklist (ICDSC) scores were collected for patients admitted to the ICU.18 The day of peak ICDSC score also was evaluated.
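The per-patient, per-day CIWA-Ar summaries described above (daily mean and daily peak) can be sketched as follows. The input layout, a list of `(protocol_day, score)` pairs for one patient, and the function name are assumptions made for illustration; the scores shown are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def daily_ciwa_summary(scores):
    """Summarize one patient's CIWA-Ar scores by protocol day.

    `scores` is an iterable of (protocol_day, score) pairs. Returns a dict
    mapping each day to its mean and peak score, ordered by day.
    """
    by_day = defaultdict(list)
    for day, score in scores:
        by_day[day].append(score)
    return {
        day: {"mean": mean(values), "peak": max(values)}
        for day, values in sorted(by_day.items())
    }


# Hypothetical scores recorded over two protocol days
summary = daily_ciwa_summary([(1, 12), (1, 18), (2, 6), (2, 4)])
```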

Statistical Analysis

The sample size for this analysis was determined by the number of patients identified who met the inclusion criteria and did not meet any of the exclusion criteria. Power was not calculated to estimate sample size needed to determine statistical significance. One hundred patients treated for alcohol withdrawal was established as the target sample size for this project. Descriptive statistics were performed to analyze patient baseline characteristics and primary and secondary objective data.
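As a sketch of the descriptive statistics involved (mean, median, and standard deviation), the snippet below summarizes a list of total lorazepam doses. The function name and the dose values are hypothetical, and the use of the sample standard deviation is an assumption, because the project does not state which form was calculated.

```python
from statistics import mean, median, stdev


def describe(values):
    """Return mean, median, and sample standard deviation of a list of numbers."""
    return {
        "mean": mean(values),
        "median": median(values),
        "sd": stdev(values),  # sample SD (n - 1 denominator); an assumption
    }


# Hypothetical total lorazepam doses (mg) for eight patients
stats = describe([2, 4, 4, 4, 5, 5, 7, 9])
```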

Results

A total of 1,611 CIWA-Ar protocol orders were identified between January 1, 2014 and December 31, 2015.

Primary Endpoint

The average amount of lorazepam administered for the total duration on CIWA-Ar protocol was 7.9 mg (median 6, ± 8.2) among

Secondary Endpoints

On average, the total number of days spent on CIWA-Ar protocol was 3.8 (median 4, ± 1.5) in group 1 compared with 4.1 (median 4, ± 1.6) in group 2. Rate of CIWA-Ar protocol discontinuation for patients in group 1 and group 2 is shown in Figure 3.

Discussion