
Improving Teamwork and Patient Outcomes with Daily Structured Interdisciplinary Bedside Rounds: A Multimethod Evaluation

Over the last decade, evidence has emerged of the importance of the front-line patient care team in improving the quality and safety of patient care.1-3 Improving collaboration and workflow is thought to increase the reliability of care delivery.1 One promising method to improve collaboration is the interdisciplinary ward round (IDR), in which medical, nursing, and allied health staff attend ward rounds together. IDRs have been shown to reduce the average cost and length of hospital stay,4,5 although a recent systematic review found inconsistent improvements across studies.6 Using the term “interdisciplinary,” however, does not necessarily imply the inclusion of all disciplines necessary for patient care: a survey with respondents from Australia, the United States, and Canada found that only 65% of rounds labelled “interdisciplinary” included a physician.7 Conducting interdisciplinary rounds is a considerable challenge in today’s busy clinical environment, because health professionals who are spread across multiple locations within the hospital, and who have competing responsibilities and priorities, must come together at the same time and for a set period each day.

While IDRs are not new, structured IDRs involve the purposeful inclusion of all disciplinary groups relevant to a patient’s care, alongside a checklist tool to aid comprehensive but concise daily assessment of progress and treatment planning. Novel structured IDR interventions, including the Structured Interdisciplinary Bedside Round (SIBR) model, have recently been tested in various settings in the US, with resulting improvements in teamwork, hospital performance, and patient outcomes.8-12

As part of the intervention, the hospital established an Acute Medical Unit (AMU) based on the Accountable Care Unit model.13 The aim of this study was to assess the impact of this new structure, and of the associated practice changes, on interprofessional working and on a set of key patient and hospital outcome measures.

METHODS

Description of the Intervention

The AMU brought together 2 existing medical wards, a general medical ward and a 48-hour turnaround Medical Assessment Unit (MAU), into 1 geographical location with 26 beds. Prior to the merger, the MAU and general medical ward had separate and distinct cultures and workflows. The MAU was staffed with experienced nurses; nurses worked within a patient allocation model, the workload was shared, and relationships were collegial. In contrast, the medical ward was more typical of the rest of the hospital: nurses had a heavy workload and managed a large group of longer-term complex patients, using a team-based nursing model of care in which senior nurses supervised junior staff. Because of the seniority of the MAU staff, it was decided that they should be in charge of the combined AMU and that the patient allocation model of care would be used to facilitate SIBR.

Consultants, junior doctors, nurses, and allied health professionals (including a pharmacist, physiotherapist, occupational therapist, and social worker) were geographically aligned to the new ward, allowing them to participate as a team in daily structured ward rounds. Rounds are scheduled at the same time each day to enable family participation. Each round is coordinated by a registrar or intern, with input from the patient, family, nursing staff, pharmacy, allied health, and the other doctors based on the unit (intern, registrar, and consultant). The patient load is distributed between 2 rounds: 1 scheduled for 10

Data Collection Strategy

The study was set in an AMU in a large tertiary care hospital in regional Australia and used a convergent parallel multimethod approach14 to evaluate the implementation and effect of SIBR in the AMU. The study population consisted of 32 clinicians employed at the study hospital: (1) the leadership team involved in the development and implementation of the intervention and (2) members of clinical staff who were part of the AMU team.

Qualitative Data

Qualitative measures consisted of semistructured interviews. We used multiple strategies to recruit interviewees, including a snowball technique, criterion sampling,15 and emergent sampling, so that we could seek the views of both the leadership team responsible for the implementation and the “frontline” clinical staff whose daily work was directly affected by it. Everyone who was initially recruited agreed to be interviewed, and additional frontline staff asked to be interviewed once they realized that we were asking how staff had experienced the changes in practice.

The research team developed a semistructured interview guide (provided in Appendix 1) based on its understanding of the merger of the 2 units and of the changes in rounding practice. The questions were pilot tested on a separate unit and revised. Questions were structured into 5 topic areas: planning and implementation of the AMU/SIBR model, changes in work practices because of the new model, team functioning, job satisfaction, and the perceived impact of the new model on patients and families. All interviews were audio-recorded and transcribed verbatim for analysis.

Quantitative Data

Quantitative data were collected on patient outcome measures (length of stay [LOS], discharge date and time, mode of separation [including death], primary diagnostic category, total hospital stay cost, and “clinical response calls”) and on patient demographics (age, gender, and Patient Clinical Complexity Level [PCCL]). The PCCL is a standard measure used in Australian public inpatient facilities and is calculated for each episode of care.16 It measures the cumulative effect of a patient’s complications and/or comorbidities and takes an integer value from 0 (no clinical complexity effect) to 4 (catastrophic clinical complexity effect).

Data regarding LOS, diagnosis (Australian Refined Diagnosis Related Groups [AR-DRG], version 7), discharge date, and mode of separation (including death) were obtained from the New South Wales Ministry of Health’s Health Information Exchange for patients discharged during the year prior to the intervention through 1 year after the implementation of the intervention. The total hospital stay cost for these individuals was obtained from the local Health Service Organizational Performance Management unit. Inclusion criteria were inpatients aged over 15 years experiencing acute episodes of care; patients with a primary diagnostic category of mental diseases and disorders were excluded. LOS was calculated based on ward stay. AMU data were compared with the remaining hospital ward data (the control group). Data on “clinical response calls” per month per ward were also obtained for the 12 months prior to intervention and the 12 months of the intervention.
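The inclusion and exclusion rules above, together with the ward-based LOS, can be sketched in Python as a minimal illustration. The record layout (`Episode` and its fields) and the helper names are hypothetical and are not the Health Information Exchange schema. The sketch also assumes that the “mental diseases and disorders” category corresponds to AR-DRG major diagnostic category 19.

```python
from dataclasses import dataclass
from datetime import datetime

# Assumption: AR-DRG major diagnostic category (MDC) 19 is
# "Mental Diseases and Disorders". All field names below are hypothetical.
MENTAL_DISORDERS_MDC = 19


@dataclass
class Episode:
    age: int            # age in years at admission
    acute: bool         # True for an acute episode of care
    mdc: int            # MDC of the primary diagnosis
    ward: str           # "AMU" or the name of any other ward
    ward_in: datetime   # arrival on the ward
    ward_out: datetime  # departure from the ward


def is_eligible(ep: Episode) -> bool:
    """Acute inpatients aged over 15, excluding the mental disorders MDC."""
    return ep.acute and ep.age > 15 and ep.mdc != MENTAL_DISORDERS_MDC


def ward_los_days(ep: Episode) -> float:
    """LOS measured from ward arrival to ward departure, in fractional days."""
    return (ep.ward_out - ep.ward_in).total_seconds() / 86400


def study_group(ep: Episode) -> str:
    """AMU episodes versus all remaining wards (the control group)."""
    return "AMU" if ep.ward == "AMU" else "control"


if __name__ == "__main__":
    # Illustrative records only, not study data.
    episodes = [
        Episode(72, True, 4, "AMU", datetime(2013, 5, 1, 9), datetime(2013, 5, 3, 15)),
        Episode(15, True, 4, "AMU", datetime(2013, 5, 2, 8), datetime(2013, 5, 3, 8)),
        Episode(40, True, 19, "Ward B", datetime(2013, 5, 2, 8), datetime(2013, 5, 4, 8)),
    ]
    cohort = [ep for ep in episodes if is_eligible(ep)]
    for ep in cohort:
        print(study_group(ep), ward_los_days(ep))
```

Only the first illustrative record survives the filter. The second is not over 15 years of age, and the third falls in the excluded diagnostic category.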

Analysis

Qualitative Analysis

Qualitative data analysis consisted of a hybrid form of textual analysis, combining inductive and deductive logics.17,18 Initially, 3 researchers (J.P., J.J., and R.C.W.) independently coded the interview data inductively to identify themes, resolving discrepancies through discussion until consensus was reached. The researchers then deductively imposed a matrix categorization consisting of 4 a priori categories: context/conditions, practices/processes, professional interactions, and consequences.19,20 Additional a priori categories were used to sort the themes further according to experiences before, during, and after implementation of the intervention. This allowed us to compare the time periods, identifying which themes were related to implementation and whether those themes remained applicable to sustaining the changes.

Quantitative Analysis

Distribution of continuous data was examined by using the one-sample Kolmogorov-Smirnov test. We compared pre-SIBR (baseline) measures by using the Student t test for normally distributed data, the Mann-Whitney U z test for nonparametric data (denoted as M-W U z), and χ2 tests for categorical data. Changes over time in monthly “clinical response calls” in the AMU and the control wards were explored by using analysis of variance (ANOVA). Changes in LOS and cost of stay from the year prior to the intervention to the first year of the intervention were analyzed by using generalized linear models, an extension of linear regression. Factors, or independent variables, included in the models were time period (before or during intervention), ward (AMU or control), an interaction term (time by ward), patient age, gender, primary diagnosis (major diagnostic categories of the AR-DRG version 7.0), and acuity (PCCL). Estimated marginal means for cost of stay were produced for the 12-month period prior to the intervention and for the first 12 months of the intervention. All statistical analyses were performed by using IBM SPSS version 21 (IBM Corp., Armonk, New York), with alpha set at .05.
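To make the role of the time-by-ward interaction term concrete, the Python sketch below fits a Gaussian, identity-link model (that is, ordinary least squares). The model includes period, ward, their product, and optional covariates, and the sketch reports a 1-df Wald χ2 test for the interaction. It is an illustration under stated assumptions, not the authors' SPSS analysis:

- The Methods do not state the distribution or link function used.
- The covariate handling here is hypothetical.
- The scale parameter is estimated as RSS/(n - p), which need not match the settings used in the study.

With no covariates, the model is saturated for the 2 × 2 design, and the interaction coefficient equals the difference-in-differences of the 4 cell means.

```python
import math


def solve(a, b):
    """Solve the linear system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        scale = m[col][col]
        m[col] = [v / scale for v in m[col]]
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [v - factor * w for v, w in zip(m[r], m[col])]
    return [m[i][n] for i in range(n)]


def design_row(during, amu, covariates=()):
    """Intercept, period (1 = during SIBR), ward (1 = AMU), period x ward, then covariates."""
    return [1.0, float(during), float(amu), float(during * amu), *map(float, covariates)]


def chi2_1df_sf(w):
    """Upper-tail probability of a chi-square(1) statistic, i.e. P(|Z| > sqrt(w))."""
    return math.erfc(math.sqrt(w / 2))


def fit_interaction_model(rows, y):
    """OLS fit; returns coefficients plus the Wald chi-square (1 df) and P for the interaction."""
    n, p = len(rows), len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)
    resid = [yi - sum(b * v for b, v in zip(beta, r)) for r, yi in zip(rows, y)]
    sigma2 = sum(e * e for e in resid) / (n - p)
    # Diagonal element (X'X)^-1[3][3], obtained by solving X'X v = e_3.
    unit = [1.0 if i == 3 else 0.0 for i in range(p)]
    var_interaction = sigma2 * solve(xtx, unit)[3]
    wald = beta[3] ** 2 / var_interaction
    return beta, wald, chi2_1df_sf(wald)
```

As a check on the P-value conversion, `chi2_1df_sf(6.34)` is about .012 and `chi2_1df_sf(1.05)` is about .31. These match the P values reported in the Results for the cost and LOS interactions. Adding covariates, such as age, through `design_row(during, amu, (age,))` changes the interaction into an adjusted rather than raw difference-in-differences.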

RESULTS

Qualitative Evaluation of the Intervention

Participants

Three researchers (R.C.W., J.P., and J.J.) conducted in-person, semistructured interviews with 32 clinicians (9 male, 23 female) over a 3-day period. Interviews lasted from 19 to 68 minutes. Participants consisted of 8 doctors, 18 nurses, 5 allied health professionals, and an administrator. Ten of the participants were members of the leadership group that drove the planning and implementation of SIBR and the AMU.

Themes

Context and Conditions of Work

Practices and Processes

Participants perceived postintervention work processes to be more efficient. A primary example was near-universal approval of the time saved by not “chasing” other professionals, who were now predictably available on the ward. More timely decision-making was thought to result from this predictable availability and the associated improvements in communication.

SIBR imposed a fixed workflow on all staff, who felt they had less flexibility to work autonomously (doctors) or according to patients’ needs (nurses). More junior staff expressed anxiety that discharge-related administrative tasks were delayed because the round was not completed until midday. Allied health professionals with commitments in other areas of the hospital often faced a dilemma in prioritizing SIBR attendance against activities on other wards. How this was managed depended on the specific allied health profession and on the individuals within it.

Professional Interactions

In terms of interprofessional dynamics on the AMU, the implementation of SIBR resulted in a shift in power between the doctors and the nurses. In the old ward, doctors largely controlled the timing of medical rounding processes. In the new AMU, doctors had to relinquish some control over the timing of personal workflow to comply with the requirements of SIBR. Furthermore, there was evidence that this had some impact on traditional hierarchical models of communication and created a more level playing field, as nonmedical professionals felt more empowered to voice their thoughts during and outside of rounds.

The rounds provided much greater visibility of the “big picture” and each profession’s role within it; this allowed each clinician to adjust their work to fit in and take account of others. The process was not instantaneous, and trust developed over a period of weeks. Better communication meant fewer misunderstandings, and workload dropped.

The participation of allied health professionals in the round enhanced clinicians’ interprofessional skills and knowledge. The more inclusive approach fostered greater trust between clinical disciplines and increased confidence among nursing, allied health, and administrative professionals.

In contrast to the positive impacts of the new model of care on communication and relationships within the AMU, interdepartmental relationships were seen to have suffered. The processes and practices of the new AMU differ from those in other hospital departments, which led to some isolation of the unit and difficulties interacting with other areas of the hospital. For example, the trade-offs that allied health professionals made to participate in SIBR often came at the expense of other units or departments.

Consequences

All interviewees lauded the benefits of the SIBR intervention for patients. Patients were perceived to be better informed and more respected, and they benefited from greater perceived timeliness of treatment and discharge, easier access to doctors, better continuity of treatment and outcomes, improved nurse knowledge of their circumstances, and fewer gaps in their care. Clinicians spoke directly to the patient during SIBR, rather than consulting with professional colleagues over the patient’s head. Some staff felt that doctors were now thinking of patients as “people” rather than “a set of symptoms.” Nurses discovered that informed patients are easier to manage.

Staff members were prepared to compromise on their own needs in the interests of the patient. The emphasis on the patient during rounds resulted in improved advocacy behaviors of clinicians. The nurses became more empowered and able to show greater initiative. Families appeared to find it much easier to access the doctors and obtain information about the patient, resulting in less distress and a greater sense of control and trust in the process.

Quantitative Evaluation of the Intervention

Hospital Outcomes

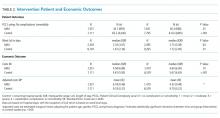

Patient demographics did not change over time within either the AMU or the control wards. However, there were significant increases in Patient Clinical Complexity Level (PCCL) ratings for both the AMU (44.7% to 40.3%; P < .05) and the control wards (65.2% to 61.6%; P < .001). In the AMU, median LOS on the ward did not change significantly from before SIBR (2.16 days; IQR, 3.07) to during SIBR (2.15 days; IQR, 3.28), whereas LOS increased in the control wards (pre-SIBR: median 1.67 days, IQR 2.34; during SIBR: median 1.73 days, IQR 2.40; M-W U z = -2.46, P = .014). Mortality rates were stable over time for both the AMU (pre-SIBR 2.6% [95% confidence interval {CI}, 1.9-3.5]; during SIBR 2.8% [95% CI, 2.1-3.7]) and the control wards (pre-SIBR 1.3% [95% CI, 1.0-1.5]; during SIBR 1.2% [95% CI, 1.0-1.4]).
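The M-W U z statistic reported above is the Mann-Whitney U statistic converted to a z score by the normal approximation. A minimal Python sketch of that conversion follows. It assumes mid-ranks for ties, the usual tie-corrected variance, and no continuity correction. SPSS's exact options may differ, the sign of z depends on which group is treated as the first sample, and the function names are illustrative.

```python
import math


def midranks(values):
    """1-based ranks in which tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def mann_whitney_z(x, y):
    """Return U for sample x, its tie-corrected z score, and a two-sided P value."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    z = (u - n1 * n2 / 2) / math.sqrt(var_u)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return u, z, p_two_sided
```

Called with pre-SIBR ward LOS values as `x` and during-SIBR values as `y`, a negative z indicates that the first sample tends to have lower values. A |z| of 2.46 corresponds to a two-sided P of about .014, consistent with the value reported for the control wards.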

The total number of “clinical response calls” or “flags” per month dropped significantly in the AMU from pre-SIBR to during SIBR, from a mean of 63.1 (standard deviation [SD] 15.1) to 31.5 (SD 10.8), but remained relatively stable in the control wards (pre-SIBR 72.5 [17.6]; during SIBR 74.0 [28.3]); this difference between wards was statistically significant (F(1,44) = 9.03; P = .004). There was no change in monthly “red flags” or “rapid response calls” over time (AMU: 10.5 [3.6] to 9.1 [4.7]; control: 40.3 [11.7] to 41.8 [10.8]). The change in total “clinical response calls” in the AMU was instead attributable to a decline in “yellow flags,” or “calls for clinical review” (from 52.6 [13.5] to 22.4 [9.2]), whereas average monthly “yellow flags” remained stable in the control wards (pre-SIBR 32.2 [11.6]; during SIBR 32.3 [22.4]). The AMU and the control wards differed significantly in how the number of monthly “calls for clinical review” changed from pre-SIBR to during SIBR (F(1,44) = 12.18; P = .001).
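The difference between wards in the change in monthly flags can be reproduced from the reported means alone. The Python sketch below uses only the values given above. It computes each ward's change, the difference-in-differences between the AMU and the control wards, and the largest gap between the totals and the sum of red and yellow flags. It does not reproduce the F tests, which require the underlying monthly counts.

```python
# Reported monthly means, as (pre-SIBR, during SIBR).
TOTAL = {"AMU": (63.1, 31.5), "control": (72.5, 74.0)}
RED = {"AMU": (10.5, 9.1), "control": (40.3, 41.8)}      # rapid response calls
YELLOW = {"AMU": (52.6, 22.4), "control": (32.2, 32.3)}  # calls for clinical review


def change(pair):
    """During-SIBR mean minus pre-SIBR mean."""
    before, during = pair
    return during - before


def diff_in_diff(series):
    """Change in the AMU minus change in the control wards."""
    return change(series["AMU"]) - change(series["control"])


for name, series in (("total", TOTAL), ("red", RED), ("yellow", YELLOW)):
    print(f"{name:>6}: AMU {change(series['AMU']):+.1f}, "
          f"control {change(series['control']):+.1f}, "
          f"difference {diff_in_diff(series):+.1f}")

# Red plus yellow flags should reproduce the totals, up to rounding of the published means.
max_gap = max(
    abs(TOTAL[ward][period] - RED[ward][period] - YELLOW[ward][period])
    for ward in TOTAL
    for period in (0, 1)
)
print(f"largest rounding gap: {max_gap:.1f}")
```

The AMU total fell by 31.6 calls per month while the control total rose by 1.5, a difference of 33.1. Of the AMU decline, 30.2 came from yellow flags. The only discrepancy between totals and components, 0.1 in the control wards during SIBR, reflects rounding.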

The 2 main outcome measures, LOS and costs, were analyzed to determine whether changes over time differed between the AMU and the control wards after accounting for age, gender, and PCCL. There was no statistically significant difference between the AMU and control wards in the change in LOS over time (Wald χ2 = 1.05; degrees of freedom [df] = 1; P = .31). There was a statistically significant interaction for cost of stay, indicating that the ward types differed in how cost changed over time, with a drop in cost observed in the AMU and an increase in the control wards (Wald χ2 = 6.34; df = 1; P = .012).

DISCUSSION

We report on the implementation of an AMU model of care, including the reorganization of a nursing unit, implementation of IDR, and geographical localization. Our multimethod design allowed a more comprehensive assessment of this system redesign, encompassing both provider perceptions and clinical outcomes.

The very different cultures of the 2 old wards that were combined into the AMU, and the fact that the teams had not previously worked together, made the merger difficult; the teams’ historically different ways of working created barriers to implementation. SIBR also demanded new ways of working closely with other disciplines, which disrupted older clinical cultures and relationships. While organizational culture is often discussed, and even measured, the full impact of cultural factors when making workplace changes is frequently underestimated.21 The development of a new culture takes time, and it can lag organizational structural changes by months or even years.22 As our interviewees expressed, often emotionally, there was a sense of loss during the merger of the 2 units. While this is a potential consequence of any large organizational change, it could be addressed during planning, before implementation, by acknowledging and perhaps honoring what is being left behind. Future units implementing the rounding intervention should expect that commensurate culture change will not be fully realized until well after the structural and process changes are finalized, and then only if explicit effort is made to engender it.

Overall, however, the interviewees perceived that the SIBR intervention led to improved teamwork and team functioning, which they thought benefited task performance and patient safety. Our findings are consistent with other research reporting that greater staff empowerment and commitment are associated with interdisciplinary patient care interventions in front-line caregiving teams.23,24 The perception of a more equal nurse-physician relationship resulted in improved job satisfaction, better interprofessional relationships, and perceived improvements in patient care. A flatter power gradient across professions and increased interdisciplinary teamwork have been shown to be associated with improved patient outcomes.25,26

Changes to clinician workflow can significantly impact the introduction of new models of care. A mandated time each day for structured rounds meant less flexibility in workflow for clinicians and made greater demands on their time management and communication skills. Furthermore, the need for human resource negotiations with nurse representatives was an unexpected component of successfully introducing the changes to workflow. Once the benefits of saved time and better communication became evident, changes to workflow were generally accepted. These challenges can be managed if stakeholders are engaged and supportive of the changes.13

Finally, our findings emphasize the importance of combining qualitative and quantitative data when evaluating an intervention. In this case, the qualitative outcomes that include “intangible” positive effects, such as cultural change and improved staff understanding of one another’s roles, might encourage us to continue with the SIBR intervention, which would allow more time to see if the trend of reduced LOS identified in the statistical analysis would translate to a significant effect over time.

We are unable to identify which aspects of the intervention had the greatest impact on our outcomes. A recent study found that interdisciplinary rounds had no impact on patients’ perceptions of shared decision-making or on satisfaction with care.27 Although our findings indicated many potential benefits for patients, we were not able to interview patients or their carers to confirm them, and we have no patient-centered outcome measures. Similarly, although our data on clinical response calls might be seen as a proxy for adverse events, we have no data on adverse events or errors. Both patient-centered outcomes and adverse events are important to consider in future work. Finally, our findings are based on data from a single institution.

CONCLUSIONS

While there were some criticisms, participants expressed overwhelmingly positive reactions to the SIBR. The biggest reported benefit was perceived improved communication and understanding between and within the clinical professions, and between clinicians and patients. Improved communication was perceived to have fostered improved teamwork and team functioning, with most respondents feeling that they were a valued part of the new team. Improved teamwork was thought to contribute to improved task performance and led interviewees to perceive a higher level of patient safety. This research highlights the need for multimethod evaluations that address contextual factors as well as clinical outcomes.

Acknowledgments

The authors would like to acknowledge the clinicians and staff members who participated in this study. We would also like to acknowledge the support from the NSW Clinical Excellence Commission, in particular, Dr. Peter Kennedy, Mr. Wilson Yeung, Ms. Tracy Clarke, and Mr. Allan Zhang, and also from Ms. Karen Storey and Mr. Steve Shea of the Organisational Performance Management team at the Orange Health Service.

Disclosures

None of the authors had conflicts of interest in relation to the conduct or reporting of this study, with the exception that the lead author’s institution, the Australian Institute of Health Innovation, received a small grant from the New South Wales Clinical Excellence Commission to conduct the work. Ethics approval for the research was granted by the Greater Western Area Health Service Human Research Ethics Committee (HREC/13/GWAHS/22). All interviewees consented to participate in the study. For patient data, consent was not obtained, but presented data are anonymized. The full dataset is available from the corresponding author with restrictions. This research was funded by the NSW Clinical Excellence Commission, who also encouraged submission of the article for publication. The funding source did not have any role in conduct or reporting of the study. R.C.W., J.P., and J.J. conceptualized and conducted the qualitative component of the study, including method, data collection, data analysis, and writing of the manuscript. G.L., C.H., and H.D. conceptualized the quantitative component of the study, including method, data collection, data analysis, and writing of the manuscript. G.S. contributed to conceptualization of the study, and significantly contributed to the revision of the manuscript. All authors, external and internal, had full access to all of the data (including statistical reports and tables) in the study and can take responsibility for the integrity of the data and the accuracy of the data analysis. As the lead author, R.C.W. affirms that the manuscript is an honest, accurate, and transparent account of the study being reported, that no important aspects of the study have been omitted, and that any discrepancies from the study as planned have been explained.

1. Johnson JK, Batalden PB. Educating health professionals to improve care within the clinical microsystem. In: McLaughlin and Kaluzny’s Continuous Quality Improvement in Health Care. Burlington: Jones & Bartlett Learning; 2013.

2. Mohr JJ, Batalden P, Barach PB. Integrating patient safety into the clinical microsystem. Qual Saf Health Care. 2004;13:ii34-ii38.

3. Sanchez JA, Barach PR. High reliability organizations and surgical microsystems: re-engineering surgical care. Surg Clin North Am. 2012;92:1-14.

4. Curley C, McEachern JE, Speroff T. A firm trial of interdisciplinary rounds on the inpatient medical wards: an intervention designed using continuous quality improvement. Med Care. 1998;36:AS4-AS12.

5. O’Mahony S, Mazur E, Charney P, Wang Y, Fine J. Use of multidisciplinary rounds to simultaneously improve quality outcomes, enhance resident education, and shorten length of stay. J Gen Intern Med. 2007;22:1073-1079.

6. Pannick S, Beveridge I, Wachter RM, Sevdalis N. Improving the quality and safety of care on the medical ward: a review and synthesis of the evidence base. Eur J Intern Med. 2014;25:874-887.

7. Halm MA, Gagner S, Goering M, Sabo J, Smith M, Zaccagnini M. Interdisciplinary rounds: impact on patients, families, and staff. Clin Nurse Spec. 2003;17:133-142.

8. Stein J, Murphy D, Payne C, et al. A remedy for fragmented hospital care. Harvard Business Review. 2013.

9. O’Leary KJ, Buck R, Fligiel HM, et al. Structured interdisciplinary rounds in a medical teaching unit: improving patient safety. Arch Intern Med. 2011;171:678-684.

10. O’Leary KJ, Haviley C, Slade ME, Shah HM, Lee J, Williams MV. Improving teamwork: impact of structured interdisciplinary rounds on a hospitalist unit. J Hosp Med. 2011;6:88-93.

11. O’Leary KJ, Ritter CD, Wheeler H, Szekendi MK, Brinton TS, Williams MV. Teamwork on inpatient medical units: assessing attitudes and barriers. Qual Saf Health Care. 2010;19:117-121.

12. O’Leary KJ, Creden AJ, Slade ME, et al. Implementation of unit-based interventions to improve teamwork and patient safety on a medical service. Am J Med Qual. 2015;30:409-416.

13. Stein J, Payne C, Methvin A, et al. Reorganizing a hospital ward as an accountable care unit. J Hosp Med. 2015;10:36-40.

14. Creswell JW. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. Thousand Oaks: SAGE Publications; 2013.

15. Palinkas LA, Horwitz SM, Green CA, Wisdom JP, Duan N, Hoagwood K. Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Adm Policy Ment Health. 2015;42:533-544.

16. Australian Consortium for Classification Development (ACCD). Review of the AR-DRG classification Case Complexity Process: Final Report; 2014. http://ihpa.gov.au/internet/ihpa/publishing.nsf/Content/admitted-acute. Accessed September 21, 2015.

17. Lofland J, Lofland LH. Analyzing Social Settings. Belmont: Wadsworth Publishing Company; 2006.

18. Miles MB, Huberman AM, Saldaña J. Qualitative Data Analysis: A Methods Sourcebook. Los Angeles: SAGE Publications; 2014.

19. Corbin J, Strauss A. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Thousand Oaks: SAGE Publications; 2008.

20. Corbin JM, Strauss A. Grounded theory research: procedures, canons, and evaluative criteria. Qual Sociol. 1990;13:3-21.

21. O’Leary KJ, Johnson JK, Auerbach AD. Do interdisciplinary rounds improve patient outcomes? Only if they improve teamwork. J Hosp Med. 2016;11:524-525.

22. Clay-Williams R. Restructuring and the resilient organisation: implications for health care. In: Hollnagel E, Braithwaite J, Wears R, editors. Resilient Health Care. Surrey: Ashgate Publishing Limited; 2013.

23. Williams I, Dickinson H, Robinson S, Allen C. Clinical microsystems and the NHS: a sustainable method for improvement? J Health Organ Manag. 2009;23:119-132.

24. Nelson EC, Godfrey MM, Batalden PB, et al. Clinical microsystems, part 1. The building blocks of health systems. Jt Comm J Qual Patient Saf. 2008;34:367-378.

25. Chisholm-Burns MA, Lee JK, Spivey CA, et al. US pharmacists’ effect as team members on patient care: systematic review and meta-analyses. Med Care. 2010;48:923-933.

26. Zwarenstein M, Goldman J, Reeves S. Interprofessional collaboration: effects of practice-based interventions on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2009;3:CD000072.

27. O’Leary KJ, Killarney A, Hansen LO, et al. Effect of patient-centred bedside rounds on hospitalised patients’ decision control, activation and satisfaction with care. BMJ Qual Saf. 2016;25:921-928.

Evidence has emerged over the last decade of the importance of the front line patient care team in improving quality and safety of patient care.1-3 Improving collaboration and workflow is thought to increase reliability of care delivery.1 One promising method to improve collaboration is the interdisciplinary ward round (IDR), whereby medical, nursing, and allied health staff attend ward rounds together. IDRs have been shown to reduce the average cost and length of hospital stay,4,5 although a recent systematic review found inconsistent improvements across studies.6 Using the term “interdisciplinary,” however, does not necessarily imply the inclusion of all disciplines necessary for patient care. The challenge of conducting interdisciplinary rounds is considerable in today’s busy clinical environment: health professionals who are spread across multiple locations within the hospital, and who have competing hospital responsibilities and priorities, must come together at the same time and for a set period each day. A survey with respondents from Australia, the United States, and Canada found that only 65% of rounds labelled “interdisciplinary” included a physician.7

While IDRs are not new, structured IDRs involve the purposeful inclusion of all disciplinary groups relevant to a patient’s care, alongside a checklist tool to aid comprehensive but concise daily assessment of progress and treatment planning. Novel, structured IDR interventions have been tested recently in various settings, resulting in improved teamwork, hospital performance, and patient outcomes in the US, including the Structured Interdisciplinary Bedside Round (SIBR) model.8-12

The aim of this study was to assess the impact of the new structure and the associated practice changes on interprofessional working and a set of key patient and hospital outcome measures. As part of the intervention, the hospital established an Acute Medical Unit (AMU) based on the Accountable Care Unit model.13

METHODS

Description of the Intervention

The AMU brought together 2 existing medical wards, a general medical ward and a 48-hour turnaround Medical Assessment Unit (MAU), into 1 geographical location with 26 beds. Prior to the merger, the MAU and general medical ward had separate and distinct cultures and workflows. The MAU was staffed with experienced nurses; nurses worked within a patient allocation model, the workload was shared, and relationships were collegial. In contrast, the medical ward was more typical of the remainder of the hospital: nurses had a heavy workload, managed a large group of longer-term complex patients, and they used a team-based nursing model of care in which senior nurses supervised junior staff. It was decided that because of the seniority of the MAU staff, they should be in charge of the combined AMU, and the patient allocation model of care would be used to facilitate SIBR.

Consultants, junior doctors, nurses, and allied health professionals (including a pharmacist, physiotherapist, occupational therapist, and social worker) were geographically aligned to the new ward, allowing them to participate as a team in daily structured ward rounds. Rounds are scheduled at the same time each day to enable family participation. The ward round is coordinated by a registrar or intern, with input from patient, family, nursing staff, pharmacy, allied health, and other doctors (intern, registrar, and consultant) based on the unit. The patient load is distributed between 2 rounds: 1 scheduled for 10

Data Collection Strategy

The study was set in an AMU in a large tertiary care hospital in regional Australia and used a convergent parallel multimethod approach14 to evaluate the implementation and effect of SIBR in the AMU. The study population consisted of 32 clinicians employed at the study hospital: (1) the leadership team involved in the development and implementation of the intervention and (2) members of clinical staff who were part of the AMU team.

Qualitative Data

Qualitative measures consisted of semistructured interviews. We utilized multiple strategies to recruit interviewees, including a snowball technique, criterion sampling,15 and emergent sampling, so that we could seek the views of both the leadership team responsible for the implementation and “frontline” clinical staff whose daily work was directly affected by it. Everyone who was initially recruited agreed to be interviewed, and additional frontline staff asked to be interviewed once they realized that we were asking about how staff experienced the changes in practice.

The research team developed a semistructured interview guide (Appendix 1) based on an understanding of the merger of the 2 units and of the changes in rounding practice. The questions were pilot-tested on a separate unit and revised. Questions were structured into 5 topic areas: planning and implementation of the AMU/SIBR model, changes in work practices because of the new model, team functioning, job satisfaction, and perceived impact of the new model on patients and families. All interviews were audio-recorded and transcribed verbatim for analysis.

Quantitative Data

Quantitative data were collected on patient outcome measures (length of stay [LOS], discharge date and time, mode of separation [including death], primary diagnostic category, total hospital stay cost, and “clinical response calls”) and on patient demographic data (age, gender, and Patient Clinical Complexity Level [PCCL]). The PCCL is a standard measure used in Australian public inpatient facilities and is calculated for each episode of care.16 It measures the cumulative effect of a patient’s complications and/or comorbidities and takes an integer value between 0 (no clinical complexity effect) and 4 (catastrophic clinical complexity effect).

Data regarding LOS, diagnosis (Australian Refined Diagnosis Related Groups [AR-DRG], version 7), discharge date, and mode of separation (including death) were obtained from the New South Wales Ministry of Health’s Health Information Exchange for patients discharged during the year prior to the intervention through 1 year after the implementation of the intervention. The total hospital stay cost for these individuals was obtained from the local Health Service Organizational Performance Management unit. Inclusion criteria were inpatients aged over 15 years experiencing acute episodes of care; patients with a primary diagnostic category of mental diseases and disorders were excluded. LOS was calculated based on ward stay. AMU data were compared with the remaining hospital ward data (the control group). Data on “clinical response calls” per month per ward were also obtained for the 12 months prior to intervention and the 12 months of the intervention.
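A minimal sketch of how ward-based LOS and the stated inclusion criteria might be applied to individual episode records follows. The function names, argument layout, and the category label string are hypothetical, and computing LOS as fractional days from ward admission and discharge timestamps is an assumption, since the text does not specify how ward times were recorded.

```python
from datetime import datetime

# Hypothetical label for the excluded primary diagnostic category.
MENTAL_HEALTH_CATEGORY = "Mental diseases and disorders"


def ward_los_days(ward_admit, ward_discharge):
    """Ward length of stay in fractional days (assumed definition)."""
    return (ward_discharge - ward_admit).total_seconds() / 86400


def is_eligible(age_years, acute_episode, primary_category):
    """Inclusion: acute inpatient episode in a patient aged over 15 years.
    Exclusion: primary diagnostic category of mental diseases and disorders."""
    return (
        age_years > 15
        and acute_episode
        and primary_category != MENTAL_HEALTH_CATEGORY
    )


# Hypothetical episode: on the ward from 10:00 on day 1 to 14:00 on day 3.
los = ward_los_days(datetime(2020, 1, 1, 10, 0), datetime(2020, 1, 3, 14, 0))
print(f"Ward LOS: {los:.2f} days")
print("Eligible:", is_eligible(67, True, "Circulatory system"))
```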

Analysis

Qualitative Analysis

Qualitative data analysis consisted of a hybrid form of textual analysis, combining inductive and deductive logics.17,18 Initially, 3 researchers (J.P., J.J., and R.C.W.) independently coded the interview data inductively to identify themes. Discrepancies were resolved through discussion until consensus was reached. Then, to further facilitate analysis, the researchers deductively imposed a matrix categorization, consisting of 4 a priori categories: context/conditions, practices/processes, professional interactions, and consequences.19,20 Additional a priori categories were used to sort the themes further by whether the experiences occurred before, during, or after implementation of the intervention. To compare these time periods, we examined which themes related to implementation and whether those themes remained relevant to sustaining the changes.

Quantitative Analysis

Distribution of continuous data was examined by using the one-sample Kolmogorov-Smirnov test. We compared pre-SIBR (baseline) measures using the Student t test for normally distributed data, the Mann-Whitney U z test for non-normally distributed data (denoted as M-W U z), and χ2 tests for categorical data. Changes in monthly “clinical response calls” between the AMU and the control wards over time were explored by using analysis of variance (ANOVA). Changes in LOS and cost of stay from the year prior to the intervention to the first year of the intervention were analyzed by using generalized linear models, which extend linear regression to outcomes that are not normally distributed. Factors, or independent variables, included in the models were time period (before or during intervention), ward (AMU or control), an interaction term (time by ward), patient age, gender, primary diagnosis (major diagnostic categories of the AR-DRG version 7.0), and acuity (PCCL). The estimated marginal means for cost of stay for the 12-month period prior to the intervention and for the first 12 months of the intervention were produced. All statistical analyses were performed by using IBM SPSS version 21 (IBM Corp., Armonk, New York), with alpha set at P < .05.
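To illustrate what the time-by-ward interaction term estimates, the sketch below fits an ordinary least-squares model (identity link) containing only time, ward, and their interaction to a handful of hypothetical LOS values. Under these simplifying assumptions, which omit the age, gender, diagnosis, and PCCL covariates and any non-identity link the study's generalized linear models may have used, the model is saturated and the interaction coefficient equals the difference in differences of the 4 cell means. The data and function names are hypothetical; the study itself used SPSS.

```python
from statistics import mean

# Each record: (during_sibr, amu_ward, outcome). Values are hypothetical.
ROWS = [
    (False, True, 3.0), (False, True, 5.0),    # AMU, pre-SIBR
    (True, True, 3.0), (True, True, 4.0),      # AMU, during SIBR
    (False, False, 2.0), (False, False, 4.0),  # control, pre-SIBR
    (True, False, 3.0), (True, False, 5.0),    # control, during SIBR
]


def did_from_cell_means(rows):
    """(AMU during - AMU pre) - (control during - control pre)."""
    def cell(during, amu):
        return mean(y for d, a, y in rows if d == during and a == amu)
    return (cell(True, True) - cell(False, True)) - (
        cell(True, False) - cell(False, False)
    )


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols_interaction(rows):
    """Time-by-ward coefficient from OLS on intercept, time, ward, and time x ward."""
    X = [[1.0, float(d), float(a), float(d and a)] for d, a, _ in rows]
    y = [outcome for _, _, outcome in rows]
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)[3]


print(f"Difference in differences of cell means: {did_from_cell_means(ROWS):.3f}")
print(f"OLS time x ward coefficient:             {ols_interaction(ROWS):.3f}")
```

Adding covariates or a log link (common for skewed cost data) would change the interpretation, so this is only a reading aid for the interaction term, not a reproduction of the study's models.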

RESULTS

Qualitative Evaluation of the Intervention

Participants

Three researchers (R.C.W., J.P., and J.J.) conducted in-person, semistructured interviews with 32 clinicians (9 male, 23 female) during a 3-day period. The duration of the interviews ranged from 19 minutes to 68 minutes. Participants consisted of 8 doctors, 18 nurses, 5 allied health professionals, and an administrator. Ten of the participants were involved in the leadership group that drove the planning and implementation of SIBR and the AMU.

Themes

Context and Conditions of Work

Practices and Processes

Participants perceived postintervention work processes to be more efficient. A primary example was near-universal approval of the time saved by not having to “chase” other professionals, who were now predictably available on the ward. More timely decision-making was thought to result from this predictable availability and the associated improvements in communication.

SIBR imposed a set workflow on all staff, who felt they had less flexibility to work autonomously (doctors) or according to patients’ needs (nurses). More junior staff expressed anxiety that, because the round was not completed until midday, discharge-related administrative tasks were delayed. Allied health professionals who had commitments in other areas of the hospital often faced a dilemma about how to prioritize SIBR attendance against activities on other wards. This was managed differently depending on the specific allied health profession and the individuals within that profession.

Professional Interactions

In terms of interprofessional dynamics on the AMU, the implementation of SIBR resulted in a shift in power between the doctors and the nurses. In the old ward, doctors largely controlled the timing of medical rounding processes. In the new AMU, doctors had to relinquish some control over the timing of personal workflow to comply with the requirements of SIBR. Furthermore, there was evidence that this had some impact on traditional hierarchical models of communication and created a more level playing field, as nonmedical professionals felt more empowered to voice their thoughts during and outside of rounds.

The rounds provided much greater visibility of the “big picture” and each profession’s role within it; this allowed each clinician to adjust their work to fit in and take account of others. The process was not instantaneous, and trust developed over a period of weeks. Better communication meant fewer misunderstandings, and workload dropped.

The participation of allied health professionals in the round enhanced clinicians’ interprofessional skills and knowledge. The more inclusive approach fostered greater trust between clinical disciplines and increased confidence among nursing, allied health, and administrative professionals.

In contrast to the positive impacts of the new model of care on communication and relationships within the AMU, interdepartmental relationships were seen to have suffered. The processes and practices of the new AMU are different to those in the other hospital departments, resulting in some isolation of the unit and difficulties interacting with other areas of the hospital. For example, the trade-offs that allied health professionals made to participate in SIBR often came at the expense of other units or departments.

Consequences

All interviewees lauded the benefits of the SIBR intervention for patients. Patients were perceived to be better informed and more respected, and they benefited from greater perceived timeliness of treatment and discharge, easier access to doctors, better continuity of treatment and outcomes, improved nurse knowledge of their circumstances, and fewer gaps in their care. Clinicians spoke directly to the patient during SIBR, rather than consulting with professional colleagues over the patient’s head. Some staff felt that doctors were now thinking of patients as “people” rather than “a set of symptoms.” Nurses discovered that informed patients are easier to manage.

Staff members were prepared to compromise on their own needs in the interests of the patient. The emphasis on the patient during rounds resulted in improved advocacy behaviors of clinicians. The nurses became more empowered and able to show greater initiative. Families appeared to find it much easier to access the doctors and obtain information about the patient, resulting in less distress and a greater sense of control and trust in the process.

Quantitative Evaluation of the Intervention

Hospital Outcomes

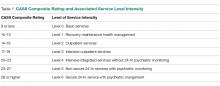

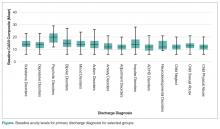

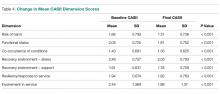

Patient demographics did not change over time within either the AMU or control wards. However, there were significant increases in PCCL ratings for both the AMU (44.7% to 40.3%; P < .05) and the control wards (65.2% to 61.6%; P < .001). In the AMU, median ward LOS did not change significantly from before SIBR (2.16 days; interquartile range [IQR], 3.07) to during SIBR (2.15 days; IQR, 3.28), whereas LOS increased in the control wards (pre-SIBR: median 1.67 days, IQR 2.34; during SIBR: median 1.73 days, IQR 2.40; M-W U z = -2.46, P = .014). Mortality rates were stable across time for both the AMU (pre-SIBR 2.6% [95% confidence interval {CI}, 1.9-3.5]; during SIBR 2.8% [95% CI, 2.1-3.7]) and the control wards (pre-SIBR 1.3% [95% CI, 1.0-1.5]; during SIBR 1.2% [95% CI, 1.0-1.4]).
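The M-W U z reported above is the normal approximation to the Mann-Whitney U statistic. The minimal Python sketch below, written only for illustration (the study used SPSS), computes U and z with average ranks for tied values but without the tie correction to the variance, so on heavily tied data such as LOS its z may differ slightly from SPSS output. The helper names and sample values are hypothetical. It also converts a z score to a two-sided P value; for the reported z = -2.46 this gives P ≈ .014.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u_z(x, y):
    """U for sample x and its normal-approximation z (no tie correction)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return u1, (u1 - mean_u) / sd_u


def two_sided_p(z):
    """Two-sided P value for a standard normal z score."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical ward LOS values (days) for two small groups.
pre = [1.2, 2.0, 2.5, 3.1]
during = [1.8, 2.6, 3.4, 4.0]
u, z = mann_whitney_u_z(pre, during)
print(f"U = {u}, z = {z:.2f}, P = {two_sided_p(z):.3f}")
print(f"Reported z = -2.46 -> P = {two_sided_p(-2.46):.3f}")
```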

The total number of “clinical response calls” or “flags” per month dropped significantly in the AMU from a pre-SIBR mean of 63.1 (standard deviation [SD], 15.1) to 31.5 (10.8) during SIBR, but remained relatively stable in the control wards (pre-SIBR 72.5 [17.6]; during SIBR 74.0 [28.3]), and this difference was statistically significant (F(1,44) = 9.03; P = .004). There was no change in monthly “red flags” (“rapid response calls”) over time (AMU: 10.5 [3.6] to 9.1 [4.7]; control: 40.3 [11.7] to 41.8 [10.8]). The change in total “clinical response calls” over time was attributable to a decline in “yellow flags” (“calls for clinical review”) in the AMU (from 52.6 [13.5] to 22.4 [9.2]). The average monthly “yellow flags” remained stable in the control wards (pre-SIBR 32.2 [11.6]; during SIBR 32.3 [22.4]). The AMU and the control wards differed significantly in how the number of monthly “calls for clinical review” changed from pre-SIBR to during SIBR (F(1,44) = 12.18; P = .001).
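The reported monthly means can be cross-checked arithmetically: in each ward and period, red flags plus yellow flags should reproduce the total to within rounding (each value is reported to 1 decimal place, so the sum can differ from the total by up to 0.15). The Python sketch below uses only the means stated in the text. It also computes the pre-to-during changes and the error degrees of freedom of a 2 × 2 (period × ward) ANOVA on monthly counts; that design, with 12 months per period for each ward series, is assumed here rather than stated in the text, although it is consistent with the reported F(1,44).

```python
# Monthly means reported in the text: (total, red flags, yellow flags).
REPORTED = {
    "AMU": {"pre": (63.1, 10.5, 52.6), "during": (31.5, 9.1, 22.4)},
    "control": {"pre": (72.5, 40.3, 32.2), "during": (74.0, 41.8, 32.3)},
}


def components_add_up(tolerance=0.15):
    """True if every reported total equals red + yellow within rounding tolerance."""
    return all(
        abs(total - (red + yellow)) <= tolerance
        for periods in REPORTED.values()
        for total, red, yellow in periods.values()
    )


def change(ward, index):
    """During-SIBR minus pre-SIBR monthly mean (index 0 = total, 1 = red, 2 = yellow)."""
    return REPORTED[ward]["during"][index] - REPORTED[ward]["pre"][index]


def anova_error_df(months_per_period=12, periods=2, wards=2):
    """Error df, assuming one observation per ward series per month in a 2 x 2 design."""
    n_obs = months_per_period * periods * wards
    n_cells = periods * wards
    return n_obs - n_cells


print("Totals match red + yellow within rounding:", components_add_up())
for ward in REPORTED:
    print(
        f"{ward}: total {change(ward, 0):+.1f}, "
        f"red {change(ward, 1):+.1f}, yellow {change(ward, 2):+.1f}"
    )
print("Error df for a 2 x 2 ANOVA on 48 ward-months:", anova_error_df())
```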

The 2 main outcome measures, LOS and costs, were analyzed to determine whether changes over time differed between the AMU and the control wards after accounting for age, gender, and PCCL. There was no statistically significant difference between the AMU and control wards in terms of change in LOS over time (Wald χ2 = 1.05; degrees of freedom [df] = 1; P = .31). There was a statistically significant interaction for cost of stay, indicating that ward types differed in how they changed over time (with a drop in cost over time observed in the AMU and an increase observed in the control wards) (Wald χ2 = 6.34; df = 1; P = .012).
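Because both Wald statistics have 1 degree of freedom, their P values can be recovered from the standard normal distribution: a χ2 variable with 1 df is the square of a standard normal variable, so P(X > x) = erfc(√(x/2)). The short Python sketch below, using only the standard library, applies this to the 2 reported statistics; it is an illustrative consistency check, not the SPSS procedure the study used, and the function name is hypothetical.

```python
import math


def chi2_df1_p(wald_chi2):
    """Upper-tail P value of a chi-square statistic with 1 degree of freedom."""
    # chi2(1) = Z**2, so P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(wald_chi2 / 2))


for label, stat in [("LOS interaction", 1.05), ("Cost interaction", 6.34)]:
    print(f"{label}: Wald chi2 = {stat}, P = {chi2_df1_p(stat):.3f}")
```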

DISCUSSION

We report on the implementation of an AMU model of care, including the reorganization of a nursing unit, implementation of IDR, and geographical localization. Our multimethod design allowed a more comprehensive assessment of this system redesign, encompassing both provider perceptions and clinical outcomes.

The 2 very different cultures of the old wards that were combined into the AMU, as well as the fact that the teams had not previously worked together, made the merger of the 2 wards difficult. Historically, the 2 teams had worked in very different ways, and this created barriers to implementation. SIBR also demanded new ways of working closely with other disciplines, which disrupted older clinical cultures and relationships. While organizational culture is often discussed, and even measured, the full impact of cultural factors when making workplace changes is frequently underestimated.21 The development of a new culture takes time, and it can lag organizational structural changes by months or even years.22 As our interviewees expressed, often emotionally, there was a sense of loss during the merger of the 2 units. While this is a potential consequence of any large organizational change, it could be addressed during the planning stages, prior to implementation, by acknowledging and perhaps honoring what is being left behind. It is reasonable to expect that future units implementing the rounding intervention will not fully realize commensurate levels of culture change until well after the structural and process changes are finalized, and only then if explicit effort is made to engender cultural change.

Overall, however, the interviewees perceived that the SIBR intervention led to improved teamwork and team functioning. These improvements were thought to benefit task performance and patient safety. Our findings are consistent with other studies reporting that greater staff empowerment and commitment are associated with interdisciplinary patient care interventions in front line caregiving teams.23,24 The perception of a more equal nurse-physician relationship resulted in improved job satisfaction, better interprofessional relationships, and perceived improvements in patient care. A flatter power gradient across professions and increased interdisciplinary teamwork have been shown to be associated with improved patient outcomes.25,26

Changes to clinician workflow can significantly impact the introduction of new models of care. A mandated time each day for structured rounds meant less flexibility in workflow for clinicians and made greater demands on their time management and communication skills. Furthermore, the need for human resource negotiations with nurse representatives was an unexpected component of successfully introducing the changes to workflow. Once the benefits of saved time and better communication became evident, changes to workflow were generally accepted. These challenges can be managed if stakeholders are engaged and supportive of the changes.13

Finally, our findings emphasize the importance of combining qualitative and quantitative data when evaluating an intervention. In this case, the qualitative outcomes, which include “intangible” positive effects such as cultural change and improved staff understanding of one another’s roles, might encourage us to continue with the SIBR intervention, allowing more time to see whether the trend toward reduced LOS identified in the statistical analysis translates into a significant effect.

We are unable to identify which aspects of the intervention had the greatest impact on our outcomes. A recent study found that interdisciplinary rounds had no impact on patients’ perceptions of shared decision-making or care satisfaction.27 Although our findings indicated many potential benefits for patients, we were not able to interview patients or their carers to confirm these findings, and we have no patient-centered outcome measures. Although our data on clinical response calls might be seen as a proxy for adverse events, we do not have data on adverse events or errors. Both patient-centered outcomes and adverse events are important to consider in future work. Finally, our findings are based on data from a single institution.

CONCLUSIONS

While there were some criticisms, participants expressed overwhelmingly positive reactions to the SIBR. The biggest reported benefit was perceived improved communication and understanding between and within the clinical professions, and between clinicians and patients. Improved communication was perceived to have fostered improved teamwork and team functioning, with most respondents feeling that they were a valued part of the new team. Improved teamwork was thought to contribute to improved task performance and led interviewees to perceive a higher level of patient safety. This research highlights the need for multimethod evaluations that address contextual factors as well as clinical outcomes.

Acknowledgments

The authors would like to acknowledge the clinicians and staff members who participated in this study. We would also like to acknowledge the support from the NSW Clinical Excellence Commission, in particular, Dr. Peter Kennedy, Mr. Wilson Yeung, Ms. Tracy Clarke, and Mr. Allan Zhang, and also from Ms. Karen Storey and Mr. Steve Shea of the Organisational Performance Management team at the Orange Health Service.

Disclosures

None of the authors had conflicts of interest in relation to the conduct or reporting of this study, with the exception that the lead author’s institution, the Australian Institute of Health Innovation, received a small grant from the New South Wales Clinical Excellence Commission to conduct the work. Ethics approval for the research was granted by the Greater Western Area Health Service Human Research Ethics Committee (HREC/13/GWAHS/22). All interviewees consented to participate in the study. For patient data, consent was not obtained, but presented data are anonymized. The full dataset is available from the corresponding author with restrictions. This research was funded by the NSW Clinical Excellence Commission, who also encouraged submission of the article for publication. The funding source did not have any role in conduct or reporting of the study. R.C.W., J.P., and J.J. conceptualized and conducted the qualitative component of the study, including method, data collection, data analysis, and writing of the manuscript. G.L., C.H., and H.D. conceptualized the quantitative component of the study, including method, data collection, data analysis, and writing of the manuscript. G.S. contributed to conceptualization of the study, and significantly contributed to the revision of the manuscript. All authors, external and internal, had full access to all of the data (including statistical reports and tables) in the study and can take responsibility for the integrity of the data and the accuracy of the data analysis. As the lead author, R.C.W. affirms that the manuscript is an honest, accurate, and transparent account of the study being reported, that no important aspects of the study have been omitted, and that any discrepancies from the study as planned have been explained.

Evidence has emerged over the last decade of the importance of the front line patient care team in improving quality and safety of patient care.1-3 Improving collaboration and workflow is thought to increase reliability of care delivery.1 One promising method to improve collaboration is the interdisciplinary ward round (IDR), whereby medical, nursing, and allied health staff attend ward rounds together. IDRs have been shown to reduce the average cost and length of hospital stay,4,5 although a recent systematic review found inconsistent improvements across studies.6 Using the term “interdisciplinary,” however, does not necessarily imply the inclusion of all disciplines necessary for patient care. The challenge of conducting interdisciplinary rounds is considerable in today’s busy clinical environment: health professionals who are spread across multiple locations within the hospital, and who have competing hospital responsibilities and priorities, must come together at the same time and for a set period each day. A survey with respondents from Australia, the United States, and Canada found that only 65% of rounds labelled “interdisciplinary” included a physician.7

While IDRs are not new, structured IDRs involve the purposeful inclusion of all disciplinary groups relevant to a patient’s care, alongside a checklist tool to aid comprehensive but concise daily assessment of progress and treatment planning. Novel, structured IDR interventions have been tested recently in various settings, resulting in improved teamwork, hospital performance, and patient outcomes in the US, including the Structured Interdisciplinary Bedside Round (SIBR) model.8-12

The aim of this study was to assess the impact of the new structure and the associated practice changes on interprofessional working and a set of key patient and hospital outcome measures. As part of the intervention, the hospital established an Acute Medical Unit (AMU) based on the Accountable Care Unit model.13

METHODS

Description of the Intervention

The AMU brought together 2 existing medical wards, a general medical ward and a 48-hour turnaround Medical Assessment Unit (MAU), into 1 geographical location with 26 beds. Prior to the merger, the MAU and general medical ward had separate and distinct cultures and workflows. The MAU was staffed with experienced nurses; nurses worked within a patient allocation model, the workload was shared, and relationships were collegial. In contrast, the medical ward was more typical of the remainder of the hospital: nurses had a heavy workload, managed a large group of longer-term complex patients, and they used a team-based nursing model of care in which senior nurses supervised junior staff. It was decided that because of the seniority of the MAU staff, they should be in charge of the combined AMU, and the patient allocation model of care would be used to facilitate SIBR.

Consultants, junior doctors, nurses, and allied health professionals (including a pharmacist, physiotherapist, occupational therapist, and social worker) were geographically aligned to the new ward, allowing them to participate as a team in daily structured ward rounds. Rounds are scheduled at the same time each day to enable family participation. The ward round is coordinated by a registrar or intern, with input from patient, family, nursing staff, pharmacy, allied health, and other doctors (intern, registrar, and consultant) based on the unit. The patient load is distributed between 2 rounds: 1 scheduled for 10

Data Collection Strategy

The study was set in an AMU in a large tertiary care hospital in regional Australia and used a convergent parallel multimethod approach14 to evaluate the implementation and effect of SIBR in the AMU. The study population consisted of 32 clinicians employed at the study hospital: (1) the leadership team involved in the development and implementation of the intervention and (2) members of clinical staff who were part of the AMU team.

Qualitative Data

Qualitative measures consisted of semistructured interviews. We utilized multiple strategies to recruit interviewees, including a snowball technique, criterion sampling,15 and emergent sampling, so that we could seek the views of both the leadership team responsible for the implementation and “frontline” clinical staff whose daily work was directly affected by it. Everyone who was initially recruited agreed to be interviewed, and additional frontline staff asked to be interviewed once they realized that we were asking about how staff experienced the changes in practice.

The research team developed a semistructured interview guide based on an understanding of the merger of the 2 units as well as an understanding of changes in practice of the rounds (provided in Appendix 1). The questions were pilot tested on a separate unit and revised. Questions were structured into 5 topic areas: planning and implementation of AMU/SIBR model, changes in work practices because of the new model, team functioning, job satisfaction, and perceived impact of the new model on patients and families. All interviews were audio-recorded and transcribed verbatim for analysis.

Quantitative Data

Quantitative data were collected on patient outcome measures: length of stay (LOS), discharge date and time, mode of separation (including death), primary diagnostic category, total hospital stay cost and “clinical response calls,” and patient demographic data (age, gender, and Patient Clinical Complexity Level [PCCL]). The PCCL is a standard measure used in Australian public inpatient facilities and is calculated for each episode of care.16 It measures the cumulative effect of a patient’s complications and/or comorbidities and takes an integer value between 0 (no clinical complexity effect) and 4 (catastrophic clinical complexity effect).

Data regarding LOS, diagnosis (Australian Refined Diagnosis Related Groups [AR-DRG], version 7), discharge date, and mode of separation (including death) were obtained from the New South Wales Ministry of Health’s Health Information Exchange for patients discharged during the year prior to the intervention through 1 year after the implementation of the intervention. The total hospital stay cost for these individuals was obtained from the local Health Service Organizational Performance Management unit. Inclusion criteria were inpatients aged over 15 years experiencing acute episodes of care; patients with a primary diagnostic category of mental diseases and disorders were excluded. LOS was calculated based on ward stay. AMU data were compared with the remaining hospital ward data (the control group). Data on “clinical response calls” per month per ward were also obtained for the 12 months prior to intervention and the 12 months of the intervention.

Analysis

Qualitative Analysis

Qualitative data analysis consisted of a hybrid form of textual analysis, combining inductive and deductive logics.17,18 Initially, 3 researchers (J.P., J.J., and R.C.W.) independently coded the interview data inductively to identify themes. Discrepancies were resolved through discussion until consensus was reached. Then, to further facilitate analysis, the researchers deductively imposed a matrix categorization, consisting of 4 a priori categories: context/conditions, practices/processes, professional interactions, and consequences.19,20 Additional a priori categories were used to sort the themes further in terms of experiences prior to, during, and following implementation of the intervention. To compare changes in those different time periods, we wanted to know what themes were related to implementation and whether those themes continued to be applicable to sustainability of the changes.

Quantitative analysis. Distribution of continuous data was examined by using the one-sample Kolmogorov-Smirnov test. We compared pre-SIBR (baseline) measures using the Student t test for normally distributed data, the Mann-Whitney U z test for nonparametric data (denoted as M-W U z), and χ2 tests for categorical data. Changes in monthly “clinical response calls” between the AMU and the control wards over time were explored by using analysis of variance (ANOVA). Changes in LOS and cost of stay from the year prior to the intervention to the first year of the intervention were analyzed by using generalized linear models, which are a form of linear regression. Factors, or independent variables, included in the models were time period (before or during intervention), ward (AMU or control), an interaction term (time by ward), patient age, gender, primary diagnosis (major diagnostic categories of the AR-DRG version 7.0), and acuity (PCCL). The estimated marginal means for cost of stay for the 12-month period prior to the intervention and for the first 12 months of the intervention were produced. All statistical analyses were performed by using IBM SPSS version 21 (IBM Corp., Armonk, New York) and with alpha set at P < .05.

RESULTS

Qualitative Evaluation of the Intervention

Participants.

Three researchers (RCW, JP, and JJ) conducted in-person, semistructured interviews with 32 clinicians (9 male, 23 female) during a 3-day period. The duration of the interviews ranged from 19 minutes to 68 minutes. Participants consisted of 8 doctors, 18 nurses, 5 allied health professionals, and an administrator. Ten of the participants were involved in the leadership group that drove the planning and implementation of SIBR and the AMU.

Themes

Context and Conditions of Work

Practices and Processes

Participants perceived postintervention work processes to be more efficient. A primary example was a near-universal approval of the time saved from not “chasing” other professionals now that they were predictably available on the ward. More timely decision-making was thought to result from this predicted availability and associated improvements in communication.

The SIBR enforced a workflow on all staff, who felt there was less flexibility to work autonomously (doctors) or according to patients’ needs (nurses). More junior staff expressed anxiety about delayed completion of discharge-related administrative tasks because of the midday completion of the round. Allied health professionals who had commitments in other areas of the hospital often faced a dilemma about how to prioritize SIBR attendance and activities on other wards. This was managed differently depending on the specific allied health profession and the individuals within that profession.

Professional Interactions

In terms of interprofessional dynamics on the AMU, the implementation of SIBR resulted in a shift in power between the doctors and the nurses. In the old ward, doctors largely controlled the timing of medical rounding processes. In the new AMU, doctors had to relinquish some control over the timing of personal workflow to comply with the requirements of SIBR. Furthermore, there was evidence that this had some impact on traditional hierarchical models of communication and created a more level playing field, as nonmedical professionals felt more empowered to voice their thoughts during and outside of rounds.

The rounds provided much greater visibility of the “big picture” and each profession’s role within it; this allowed each clinician to adjust their work to fit in and take account of others. The process was not instantaneous, and trust developed over a period of weeks. Better communication meant fewer misunderstandings, and workload dropped.

The participation of allied health professionals in the round enhanced clinician interprofessional skills and knowledge. The more inclusive approach facilitated greater trust between clinical disciplines and a development of increased confidence among nursing, allied health, and administrative professionals.

In contrast to the positive impacts of the new model of care on communication and relationships within the AMU, interdepartmental relationships were seen to have suffered. The processes and practices of the new AMU are different to those in the other hospital departments, resulting in some isolation of the unit and difficulties interacting with other areas of the hospital. For example, the trade-offs that allied health professionals made to participate in SIBR often came at the expense of other units or departments.

Consequences

All interviewees lauded the benefits of the SIBR intervention for patients. Patients were perceived to be better informed and more respected, and they benefited from greater perceived timeliness of treatment and discharge, easier access to doctors, better continuity of treatment and outcomes, improved nurse knowledge of their circumstances, and fewer gaps in their care. Clinicians spoke directly to the patient during SIBR, rather than consulting with professional colleagues over the patient’s head. Some staff felt that doctors were now thinking of patients as “people” rather than “a set of symptoms.” Nurses discovered that informed patients are easier to manage.

Staff members were prepared to compromise on their own needs in the interests of the patient. The emphasis on the patient during rounds resulted in improved advocacy behaviors of clinicians. The nurses became more empowered and able to show greater initiative. Families appeared to find it much easier to access the doctors and obtain information about the patient, resulting in less distress and a greater sense of control and trust in the process.

Quantitative Evaluation of the Intervention

Hospital Outcomes

Patient demographics did not change over time within either the AMU or the control wards. However, there were significant increases in Patient Clinical Complexity Level (PCCL) ratings for both the AMU (44.7% to 40.3%; P < .05) and the control wards (65.2% to 61.6%; P < .001). Median LOS on the AMU did not change significantly from before SIBR (2.16 days; IQR, 3.07) to during SIBR (2.15 days; IQR, 3.28), whereas median LOS on the control wards increased (pre-SIBR 1.67 days [IQR, 2.34]; during SIBR 1.73 days [IQR, 2.40]; Mann-Whitney U test z = -2.46; P = .014). Mortality rates were stable over time for both the AMU (pre-SIBR 2.6% [95% confidence interval {CI}, 1.9-3.5]; during SIBR 2.8% [95% CI, 2.1-3.7]) and the control wards (pre-SIBR 1.3% [95% CI, 1.0-1.5]; during SIBR 1.2% [95% CI, 1.0-1.4]).
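The LOS comparison for the control wards was reported as a Mann-Whitney U test z statistic. As a minimal sketch of how such a z value is typically obtained, the Python function below ranks the pooled observations (averaging ranks across ties), computes U for the first sample, and applies the normal approximation with a tie-corrected variance. The function name and the toy LOS values are hypothetical; the study does not state which software was used or whether tie or continuity corrections were applied, and no continuity correction is applied here.

```python
import math


def mann_whitney_u(x, y):
    """Hypothetical helper: Mann-Whitney U test via the normal approximation.

    Uses average ranks for ties and the tie-corrected variance of U
    (no continuity correction). Returns (U for sample x, z, two-sided P).
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    # Pool observations, tagging each with its sample (0 = x, 1 = y).
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])

    ranks = [0.0] * n
    tie_sum = 0.0  # sum of (t**3 - t) over each group of t tied values
    i = 0
    while i < n:
        j = i
        # Advance j to the last observation tied with observation i.
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_sum += t ** 3 - t
        i = j + 1

    rank_sum_x = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2

    # Mean and tie-corrected variance of U under the null hypothesis.
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_sum / (n * (n - 1)))
    z = (u_x - mean_u) / math.sqrt(var_u)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return u_x, z, p_two_sided


if __name__ == "__main__":
    # Hypothetical LOS values in days, for illustration only.
    pre_sibr = [1.0, 2.5, 1.5, 3.0, 0.5, 2.0]
    during_sibr = [2.0, 3.5, 1.5, 4.0, 2.5, 5.0]
    u, z, p = mann_whitney_u(pre_sibr, during_sibr)
    print(f"U = {u}, z = {z:.2f}, P = {p:.3f}")

    # Two-sided P for the z reported for the control wards (|z| = 2.46).
    print(round(math.erfc(2.46 / math.sqrt(2)), 3))  # 0.014
```

The last line shows that the reported z of -2.46 is consistent with the reported P = .014; the sign of z only reflects which period is entered as the first sample.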

The total number of “clinical response calls” (“flags”) per month on the AMU fell significantly from a mean of 63.1 (SD, 15.1) before SIBR to 31.5 (10.8) during SIBR, but remained relatively stable on the control wards (pre-SIBR 72.5 [17.6]; during SIBR 74.0 [28.3]); this difference between ward types was statistically significant (F(1,44) = 9.03; P = .004). Monthly “red flags” (“rapid response calls”) did not change over time (AMU: 10.5 [3.6] to 9.1 [4.7]; control: 40.3 [11.7] to 41.8 [10.8]). The change in total clinical response calls on the AMU was instead attributable to a decline in “yellow flags” (“calls for clinical review”), from 52.6 (13.5) to 22.4 (9.2) per month, whereas the mean monthly number of yellow flags remained stable on the control wards (pre-SIBR 32.2 [11.6]; during SIBR 32.3 [22.4]). The AMU and the control wards differed significantly in how the monthly number of calls for clinical review changed from pre-SIBR to during SIBR (F(1,44) = 12.18; P = .001).
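Because the total flag count is the sum of red and yellow flags, the reported monthly means can be checked for internal consistency, and the AMU decline can be decomposed by flag type. The Python sketch below uses only the means reported in the paragraph above; the dictionary layout and function names are illustrative assumptions, not part of the study's analysis. It checks that red plus yellow matches each reported total within rounding and computes the share of the AMU decline contributed by yellow flags.

```python
# Monthly mean counts reported in the text (SDs omitted).
# "red" = rapid response calls, "yellow" = calls for clinical review.
FLAGS = {
    "AMU": {
        "pre": {"red": 10.5, "yellow": 52.6, "total": 63.1},
        "during": {"red": 9.1, "yellow": 22.4, "total": 31.5},
    },
    "control": {
        "pre": {"red": 40.3, "yellow": 32.2, "total": 72.5},
        "during": {"red": 41.8, "yellow": 32.3, "total": 74.0},
    },
}

# Each reported mean is rounded to one decimal place, so red + yellow can
# differ from the reported total by up to 3 * 0.05 = 0.15.
ROUNDING_TOLERANCE = 0.15


def components_match_total(ward: str, period: str) -> bool:
    """True if red + yellow equals the reported total within rounding."""
    d = FLAGS[ward][period]
    return abs(d["red"] + d["yellow"] - d["total"]) <= ROUNDING_TOLERANCE


def change(ward: str, kind: str) -> float:
    """Change in the monthly mean from pre-SIBR to during SIBR."""
    return FLAGS[ward]["during"][kind] - FLAGS[ward]["pre"][kind]


if __name__ == "__main__":
    for ward in FLAGS:
        for period in ("pre", "during"):
            assert components_match_total(ward, period), (ward, period)

    total = change("AMU", "total")
    yellow = change("AMU", "yellow")
    print(f"AMU total change: {total:.1f}")  # -31.6
    print(f"AMU yellow-flag change: {yellow:.1f}")  # -30.2
    print(f"Share of AMU decline from yellow flags: {yellow / total:.0%}")  # 96%
```

Since means are additive, the roughly 96% share is simply the ratio of the two changes; it says nothing about statistical significance, which the interaction F tests address.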

The 2 main outcome measures, LOS and cost, were analyzed to determine whether changes over time differed between the AMU and the control wards after adjusting for age, gender, and PCCL. There was no statistically significant difference between the AMU and control wards in the change in LOS over time (Wald χ² = 1.05; degrees of freedom [df] = 1; P = .31). There was a statistically significant ward-by-time interaction for cost of stay, indicating that the ward types differed in how cost changed over time, with a drop in cost observed in the AMU and an increase observed in the control wards (Wald χ² = 6.34; df = 1; P = .012).
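For a single-coefficient test such as a ward-by-time interaction, the Wald statistic is the squared ratio of the estimate to its standard error and, under the null hypothesis, follows a chi-square distribution with 1 df. The standard-library Python sketch below converts the reported Wald χ² values back to P values as a consistency check. The coefficient estimates and standard errors are not reported, so the hypothetical `wald_chi2` helper only illustrates the definition; the underlying regression model is not reproduced here.

```python
import math


def p_from_chi2_df1(chi2: float) -> float:
    """Upper-tail P value of a chi-square statistic with 1 degree of freedom.

    With 1 df, chi2 = z**2 for a standard normal z, so
    P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    """
    if chi2 < 0:
        raise ValueError("chi-square statistic must be non-negative")
    return math.erfc(math.sqrt(chi2 / 2))


def wald_chi2(estimate: float, std_error: float) -> float:
    """Hypothetical helper: Wald statistic for one coefficient, (estimate / SE) ** 2."""
    return (estimate / std_error) ** 2


if __name__ == "__main__":
    # Reported interaction tests (ward type x period), df = 1.
    for label, stat in [("LOS", 1.05), ("cost", 6.34)]:
        print(label, f"{p_from_chi2_df1(stat):.2g}")  # LOS 0.31, cost 0.012
```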

DISCUSSION

We report on the implementation of an AMU model of care, including the reorganization of a nursing unit, implementation of IDR, and geographical localization. Our multimethod design allowed a more comprehensive assessment of this system redesign, encompassing both provider perceptions and clinical outcomes.

The 2 very different cultures of the old wards that were combined into the AMU, together with the fact that the teams had not previously worked together, made the merger of the 2 wards difficult. Historically, the 2 teams had worked in very different ways, and this created barriers to implementation. SIBR also demanded new ways of working closely with other disciplines, which disrupted older clinical cultures and relationships. While organizational culture is often discussed, and even measured, the full impact of cultural factors when making workplace changes is frequently underestimated.21 The development of a new culture takes time, and it can lag organizational structural changes by months or even years.22 As our interviewees expressed, often emotionally, there was a sense of loss during the merger of the 2 units. While this is a potential consequence of any large organizational change, it could be addressed during the planning stages, prior to implementation, by acknowledging and perhaps honoring what is being left behind. It is reasonable to expect that future units implementing the rounding intervention will not achieve commensurate culture change until well after the structural and process changes are complete, and then only if explicit effort is made to foster cultural change.

Overall, however, the interviewees perceived that the SIBR intervention led to improved teamwork and team functioning. These improvements were thought to benefit task performance and patient safety. Our findings are consistent with other research reporting that greater staff empowerment and commitment are associated with interdisciplinary patient care interventions in front line caregiving teams.23,24 The perception of a more equal nurse-physician relationship resulted in improved job satisfaction, better interprofessional relationships, and perceived improvements in patient care. A flatter power gradient across professions and increased interdisciplinary teamwork have been shown to be associated with improved patient outcomes.25,26

Changes to clinician workflow can significantly impact the introduction of new models of care. A mandated time each day for structured rounds meant less flexibility in workflow for clinicians and made greater demands on their time management and communication skills. Furthermore, the need for human resource negotiations with nurse representatives was an unexpected component of successfully introducing the changes to workflow. Once the benefits of saved time and better communication became evident, changes to workflow were generally accepted. These challenges can be managed if stakeholders are engaged and supportive of the changes.13

Finally, our findings emphasize the importance of combining qualitative and quantitative data when evaluating an intervention. In this case, the qualitative outcomes, which include “intangible” positive effects such as cultural change and improved staff understanding of one another’s roles, might encourage us to continue with the SIBR intervention, allowing more time to see whether the trend toward reduced LOS identified in the statistical analysis translates into a significant effect.

We are unable to identify which aspects of the intervention had the greatest impact on our outcomes. A recent study found that interdisciplinary rounds had no impact on patients’ perceptions of shared decision-making or care satisfaction.27 Although our findings indicated many potential benefits for patients, we were not able to interview patients or their carers to confirm these findings, and we did not collect patient-centered outcomes. Although our data on clinical response calls might be seen as a proxy for adverse events, we do not have data on adverse events or errors. Both patient-centered outcomes and adverse events are important to consider in future work. Finally, our findings are based on data from a single institution.

CONCLUSIONS

While there were some criticisms, participants expressed overwhelmingly positive reactions to the SIBR. The biggest reported benefit was perceived improved communication and understanding between and within the clinical professions, and between clinicians and patients. Improved communication was perceived to have fostered improved teamwork and team functioning, with most respondents feeling that they were a valued part of the new team. Improved teamwork was thought to contribute to improved task performance and led interviewees to perceive a higher level of patient safety. This research highlights the need for multimethod evaluations that address contextual factors as well as clinical outcomes.

Acknowledgments

The authors would like to acknowledge the clinicians and staff members who participated in this study. We would also like to acknowledge the support from the NSW Clinical Excellence Commission, in particular, Dr. Peter Kennedy, Mr. Wilson Yeung, Ms. Tracy Clarke, and Mr. Allan Zhang, and also from Ms. Karen Storey and Mr. Steve Shea of the Organisational Performance Management team at the Orange Health Service.

Disclosures

None of the authors had conflicts of interest in relation to the conduct or reporting of this study, with the exception that the lead author’s institution, the Australian Institute of Health Innovation, received a small grant from the New South Wales Clinical Excellence Commission to conduct the work. Ethics approval for the research was granted by the Greater Western Area Health Service Human Research Ethics Committee (HREC/13/GWAHS/22). All interviewees consented to participate in the study. For patient data, consent was not obtained, but presented data are anonymized. The full dataset is available from the corresponding author with restrictions. This research was funded by the NSW Clinical Excellence Commission, who also encouraged submission of the article for publication. The funding source did not have any role in conduct or reporting of the study. R.C.W., J.P., and J.J. conceptualized and conducted the qualitative component of the study, including method, data collection, data analysis, and writing of the manuscript. G.L., C.H., and H.D. conceptualized the quantitative component of the study, including method, data collection, data analysis, and writing of the manuscript. G.S. contributed to conceptualization of the study, and significantly contributed to the revision of the manuscript. All authors, external and internal, had full access to all of the data (including statistical reports and tables) in the study and can take responsibility for the integrity of the data and the accuracy of the data analysis. As the lead author, R.C.W. affirms that the manuscript is an honest, accurate, and transparent account of the study being reported, that no important aspects of the study have been omitted, and that any discrepancies from the study as planned have been explained.

1. Johnson JK, Batalden PB. Educating health professionals to improve care within the clinical microsystem. In: McLaughlin and Kaluzny’s Continuous Quality Improvement in Health Care. Burlington: Jones & Bartlett Learning; 2013.

2. Mohr JJ, Batalden P, Barach PB. Integrating patient safety into the clinical microsystem. Qual Saf Health Care. 2004;13:ii34-ii38.

3. Sanchez JA, Barach PR. High reliability organizations and surgical microsystems: re-engineering surgical care. Surg Clin North Am. 2012;92:1-14.

4. Curley C, McEachern JE, Speroff T. A firm trial of interdisciplinary rounds on the inpatient medical wards: an intervention designed using continuous quality improvement. Med Care. 1998;36:AS4-AS12.

5. O’Mahony S, Mazur E, Charney P, Wang Y, Fine J. Use of multidisciplinary rounds to simultaneously improve quality outcomes, enhance resident education, and shorten length of stay. J Gen Intern Med. 2007;22:1073-1079.

6. Pannick S, Beveridge I, Wachter RM, Sevdalis N. Improving the quality and safety of care on the medical ward: a review and synthesis of the evidence base. Eur J Intern Med. 2014;25:874-887.

7. Halm MA, Gagner S, Goering M, Sabo J, Smith M, Zaccagnini M. Interdisciplinary rounds: impact on patients, families, and staff. Clin Nurse Spec. 2003;17:133-142.

8. Stein J, Murphy D, Payne C, et al. A remedy for fragmented hospital care. Harvard Business Review. 2013.

9. O’Leary KJ, Buck R, Fligiel HM, et al. Structured interdisciplinary rounds in a medical teaching unit: improving patient safety. Arch Intern Med. 2010;171:678-684.

10. O’Leary KJ, Haviley C, Slade ME, Shah HM, Lee J, Williams MV. Improving teamwork: impact of structured interdisciplinary rounds on a hospitalist unit. J Hosp Med. 2011;6:88-93.

11. O’Leary KJ, Ritter CD, Wheeler H, Szekendi MK, Brinton TS, Williams MV. Teamwork on inpatient medical units: assessing attitudes and barriers. Qual Saf Health Care. 2011;19:117-121.

12. O’Leary KJ, Creden AJ, Slade ME, et al. Implementation of unit-based interventions to improve teamwork and patient safety on a medical service. Am J Med Qual. 2014;30:409-416.

13. Stein J, Payne C, Methvin A, et al. Reorganizing a hospital ward as an accountable care unit. J Hosp Med. 2015;10:36-40.

14. Creswell JW. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. Thousand Oaks: SAGE Publications; 2013.

15. Palinkas LA, Horwitz SM, Green CA, Wisdom JP, Duan N, Hoagwood K. Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Adm Policy Ment Health. 2015;42:533-544.

16. Australian Consortium for Classification Development (ACCD). Review of the AR-DRG Classification Case Complexity Process: Final Report; 2014. http://ihpa.gov.au/internet/ihpa/publishing.nsf/Content/admitted-acute. Accessed September 21, 2015.

17. Lofland J, Lofland LH. Analyzing Social Settings. Belmont: Wadsworth Publishing Company; 2006.

18. Miles MB, Huberman AM, Saldaña J. Qualitative Data Analysis: A Methods Sourcebook. Los Angeles: SAGE Publications; 2014.

19. Corbin J, Strauss A. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Thousand Oaks: SAGE Publications; 2008.

20. Corbin JM, Strauss A. Grounded theory research: procedures, canons, and evaluative criteria. Qual Sociol. 1990;13:3-21.

21. O’Leary KJ, Johnson JK, Auerbach AD. Do interdisciplinary rounds improve patient outcomes? Only if they improve teamwork. J Hosp Med. 2016;11:524-525.

22. Clay-Williams R. Restructuring and the resilient organisation: implications for health care. In: Hollnagel E, Braithwaite J, Wears R, editors. Resilient Health Care. Surrey: Ashgate Publishing Limited; 2013.

23. Williams I, Dickinson H, Robinson S, Allen C. Clinical microsystems and the NHS: a sustainable method for improvement? J Health Organ Manag. 2009;23:119-132.

24. Nelson EC, Godfrey MM, Batalden PB, et al. Clinical microsystems, part 1. The building blocks of health systems. Jt Comm J Qual Patient Saf. 2008;34:367-378.

25. Chisholm-Burns MA, Lee JK, Spivey CA, et al. US pharmacists’ effect as team members on patient care: systematic review and meta-analyses. Med Care. 2010;48:923-933.