LAA excision of no benefit in persistent AF ablation

SAN FRANCISCO – Adding left atrial appendage excision to pulmonary vein isolation does not reduce the rate of recurrence in persistent atrial fibrillation, according to a Russian investigation.

Eighty-eight patients with persistent atrial fibrillation (AF) were randomized to thoracoscopic pulmonary vein isolation (PVI) with bilateral epicardial ganglia ablation and box lesion set of the posterior left atrial wall; 88 others were randomized to that approach plus left atrial appendage (LAA) amputation. After 18 months, 64 out of 87 patients in the LAA-excision group (73.6%) and 61 out of 86 patients (70.9%) in the control group were free from recurrent AF, meaning no episodes greater than 30 seconds (P = .73). Freedom from any atrial arrhythmia after a single procedure with or without follow-up antiarrhythmic drugs (AADs) was also similar, with 70.9% in the control and 74.7% in the treatment groups. “Both approaches had excellent” results with no differences in complication rates, but there “was no reduction in AF recurrence when LAA excision was performed,” said investigator Dr. Alexander Romanov of the State Research Institute of Circulation Pathology, Novosibirsk, Russia.
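As a rough cross-check of the figures above, the sketch below recomputes the 18-month freedom-from-AF rates from the reported counts and runs an unadjusted two-proportion z-test. The function name and the choice of test are assumptions made for illustration. The report does not say which test produced P = .73, so the P value computed here is not expected to match it exactly.

```python
from math import sqrt, erfc


def two_proportion_z_test(x1, n1, x2, n2):
    """Unadjusted two-sided z-test for a difference between two proportions.

    Hypothetical helper for illustration; not the investigators' analysis.
    """
    p1, p2 = x1 / n1, x2 / n2
    # Pooled proportion under the null hypothesis of no difference
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided P value from the standard normal distribution
    p_value = erfc(abs(z) / sqrt(2))
    return p1, p2, z, p_value


# Counts reported in the article: patients free from recurrent AF at 18 months
laa_rate, control_rate, z, p_value = two_proportion_z_test(64, 87, 61, 86)

print(f"LAA excision: {laa_rate:.1%}")
print(f"Control:      {control_rate:.1%}")
print(f"z = {z:.2f}, two-sided P = {p_value:.2f}")
```

Under this simple test the difference between the arms is also far from statistical significance, which is consistent with the reported conclusion.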

The results are a bit surprising because some previous studies have suggested that electrical isolation of the LAA improves AF ablation success, and surgical excision might be expected to have a similar effect. In many places in the United States, LAA excisions are routine in open heart surgery when patients have AF, to prevent stroke. Guidelines for AF management from the American Heart Association, American College of Cardiology, and Heart Rhythm Society published in 2014 give a class IIb recommendation, saying “surgical excision of the left atrial appendage may be considered in patients undergoing cardiac surgery,” with an evidence level of C, meaning there are no data to support the recommendation, only expert consensus (J Am Coll Cardiol. 2014;64[21]:2246-80).

There were no significant baseline differences between the groups; patients were about 60 years old, on average, and more than 80% in both groups had baseline CHADS2 scores of 0 or 1. All patients had persistent AF for more than a week but no longer than a year; longer-standing cases were excluded, as were patients with prior heart surgeries or catheter ablations. There were no statistically significant differences in operative times or complications. A few patients in each arm needed sternotomies for hemostasis, and one in each arm had a stroke during follow-up. Patients were followed at regular intervals by ECG and Holter monitoring.

AADs were allowed during the blanking period; patients could continue them afterwards for AF recurrence or have endocardial redo ablations; 10 patients in the control group (12%) and 13 in the LAA group (15%) had repeat procedures (P = .55). Most were for right atrial flutter and a few for left atrial flutter. “Only one redo case was for true AF recurrence,” Dr. Romanov said.

The team did not test for exertion intolerance and other potential LAA excision problems.

Dr. Romanov is a speaker for Medtronic, Biosense Webster, and Boston Scientific.

Dr. John Day

This study is interesting because it goes against what other studies are showing, which is that LAA isolation increases the success rate with AF ablation. What makes me a little suspicious is that the success rates in both arms of this study were unusually high for persistent AF. If they were more in line with previous reports, I would feel a little bit better concluding that LAA isolation doesn’t help.

I know anecdotally from having done thousands of these ablations that there are some patients whose AF originates from the LAA, and if you treat it, you improve their outcomes.

Dr. John Day is the director of Intermountain Heart Rhythm Specialists in Murray, Utah, and the current president of the Heart Rhythm Society. He has no disclosures.

AT HEART RHYTHM 2016

Key clinical point: Adding left atrial appendage excision to pulmonary vein isolation does not reduce the rate of recurrence in persistent atrial fibrillation.

Major finding: After 18 months, 64 out of 87 patients in the LAA-excision group (73.6%) and 61 out of 86 patients (70.9%) in the control group were free from recurrent AF, meaning no episodes greater than 30 seconds (P = .73).

Data source: Randomized trial in 176 patients with persistent AF.

Disclosures: The lead investigator is a speaker for Medtronic, Biosense Webster, and Boston Scientific.

Alemtuzumab plus CHOP didn’t boost survival in elderly patients with peripheral T-cell lymphomas

CHICAGO – Adding the monoclonal anti-CD52 antibody alemtuzumab to CHOP (A-CHOP) increased response rates in elderly patients with peripheral T-cell lymphomas but did not improve their survival, based on the final results from 116 patients treated in the international ACT-2 phase III trial.

Complete responses were seen in 43% of 58 patients given CHOP (cyclophosphamide, doxorubicin, vincristine, and prednisolone) and in 60% of 58 patients given A-CHOP in the trial. However, trial participants did not significantly differ in event-free survival and progression-free survival at 3 years.

Further, overall survival at 3 years was 38% for the patients given A-CHOP and 56% for the patients given CHOP. The poorer overall survival was mainly the result of treatment-related toxicity, Dr. Lorenz H. Trümper reported at the annual meeting of the American Society of Clinical Oncology.

The estimated 3-year disease-free survival is 25% for elderly patients with peripheral T-cell lymphomas. Previous phase II trials had indicated that alemtuzumab was active in primary and relapsed T-cell lymphoma, prompting the study of adjuvant alemtuzumab in combination with dose-dense CHOP-14 in patients with previously untreated peripheral T-cell lymphoma, he said.

Although the treatment protocol demanded stringent monitoring for cytomegalovirus and Epstein-Barr virus and anti-infective prophylaxis, there were more grade 3 or higher infections in the A-CHOP group (40%) than the CHOP group (21%). The higher infection rates were attributed to higher rates of grade 3/4 hematotoxicity in patients given A-CHOP. Grade 4 leukocytopenia was seen in 70% with A-CHOP and 54% with CHOP; grade 3/4 thrombocytopenia was seen in 19% given A-CHOP and 13% given CHOP, according to Dr. Trümper of the University of Göttingen, Germany.

For the study, 116 patients from 52 centers were randomized to receive either six cycles of CHOP or A-CHOP given at 14-day intervals with granulocyte-colony stimulating factor (G-CSF) support. Initially, patients received a total of 360 mg of alemtuzumab (90 mg given at each of the first four cycles of CHOP). After patient 39 was enrolled, the dose was reduced to 120 mg (30 mg given at each of the first four cycles of CHOP). Median patient age was 69 years, and 58% of the trial participants were men.

Treatment was completed as planned in 79% of the CHOP patients and in 57% of the A-CHOP patients.

The study was sponsored by the University of Göttingen. Dr. Trümper is a consultant or adviser to Hexal and Janssen-Ortho, and receives research funding from Genzyme, the maker of alemtuzumab (Lemtrada), as well as other drug companies.

On Twitter @maryjodales

AT ASCO 16

Key clinical point: Adding the monoclonal anti-CD52 antibody alemtuzumab to CHOP (A-CHOP) increased response rates in elderly patients with peripheral T-cell lymphomas but did not improve their survival.

Major finding: Overall survival at 3 years was 38% for the patients given A-CHOP and 56% for the patients given CHOP.

Data source: Results from 116 patients treated in the international ACT-2 phase III trial.

Disclosures: The study was sponsored by the University of Göttingen, Germany. Dr. Trümper is a consultant or adviser to Hexal and Janssen-Ortho, and receives research funding from Genzyme, the maker of alemtuzumab, as well as other drug companies.

Hydroxychloroquine, abatacept linked with reduced type 2 diabetes

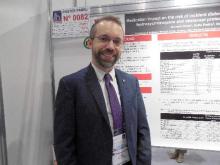

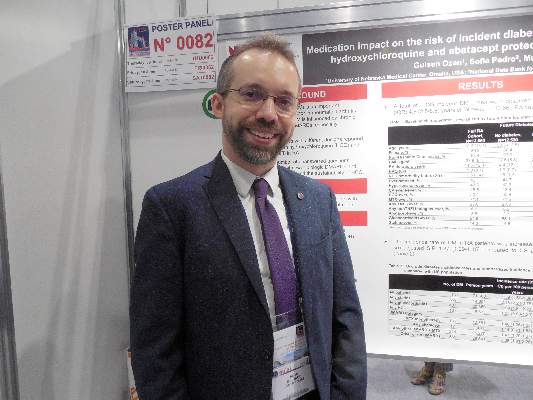

LONDON – U.S. rheumatoid arthritis patients had a significantly reduced incidence of type 2 diabetes when on treatment with either hydroxychloroquine or abatacept in an analysis of more than 13,000 patients enrolled in a U.S. national registry during 2000-2015.

The same analysis also showed statistically significant increases in the risk for new-onset type 2 diabetes in rheumatoid arthritis (RA) patients treated with a glucocorticoid or a statin, Kaleb Michaud, Ph.D., reported in a poster at the European Congress of Rheumatology.

“Our findings can inform clinicians about determining appropriate treatment decisions in rheumatoid arthritis patients at increased risk for developing type 2 diabetes,” said Dr. Michaud, an epidemiologist at the University of Nebraska in Omaha and also codirector of the National Data Bank for Rheumatic Diseases in Wichita, Kan., the source of the data used in his study.

Hydroxychloroquine, a relatively inexpensive drug that’s often used in RA patients in combination with other drugs, might be a good agent to consider adding to the therapeutic mix of RA patients at risk for developing type 2 diabetes, he said in an interview. Although the implications of this finding for the use of abatacept (Orencia) are less clear, it is a signal of a novel benefit from the drug that merits further study, Dr. Michaud said.

The findings also suggest that, when rheumatoid arthritis patients receive statin treatment, they are candidates for more intensive monitoring of diabetes onset, he added.

His analysis focused on the 13,669 RA patients who participated in the National Data Bank for Rheumatic Diseases for at least a year during 2000-2015 and had not been diagnosed as having diabetes of any type at the time they entered the registry. During a median 4.6 years of follow-up, 1,139 patients either self-reported receiving a new diagnosis of type 2 diabetes or began treatment with a hypoglycemic medication. Patients averaged about 59 years old at the time they entered the registry, and about 80% were women.

Dr. Michaud and his associates assessed the incidence rate of type 2 diabetes according to six categories of RA treatment: methotrexate monotherapy, which they used as the reference group; any treatment with abatacept with or without methotrexate; any treatment with hydroxychloroquine; any treatment with a glucocorticoid; treatment with any other disease-modifying antirheumatic drug (DMARD) with methotrexate; and treatment with any other DMARD without methotrexate or no DMARD treatment. A seventh treatment category was treatment with a statin.

A series of time-varying Cox proportional hazard models that adjusted for sociodemographic variables, comorbidities, body mass index, and measures of RA severity showed that, when compared with methotrexate monotherapy, patients treated with abatacept had a statistically significant 48% reduced rate of developing diabetes, and those treated with hydroxychloroquine had a statistically significant 33% reduced diabetes incidence, they reported.

In contrast, compared with methotrexate monotherapy, treatment with a glucocorticoid was linked with a statistically significant 31% increased rate of type 2 diabetes, and treatment with a statin was linked with a statistically significant 56% increased rate of diabetes. Other forms of DMARD treatment, including tumor necrosis factor inhibitors and other biologic DMARDs, had no statistically significant link with diabetes development.
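For readers who prefer hazard ratios, the short sketch below converts the reported percentage changes into the equivalent ratios relative to methotrexate monotherapy. It assumes each percentage was derived from a Cox model hazard ratio as (HR − 1) × 100; the article does not state the hazard ratios or their confidence intervals, and the function and variable names are hypothetical.

```python
def hazard_ratio_from_percent_change(percent_change):
    """Convert a percent change in rate into a hazard ratio.

    Assumes percent_change = (HR - 1) * 100, so a 48% reduction is -48.
    """
    return 1 + percent_change / 100


# Percent changes reported in the article, relative to methotrexate monotherapy
reported_percent_change = {
    "abatacept": -48,
    "hydroxychloroquine": -33,
    "glucocorticoid": 31,
    "statin": 56,
}

hazard_ratios = {
    drug: round(hazard_ratio_from_percent_change(pct), 2)
    for drug, pct in reported_percent_change.items()
}

for drug, hr in hazard_ratios.items():
    direction = "lower" if hr < 1 else "higher"
    print(f"{drug}: HR {hr:.2f} ({direction} diabetes rate than methotrexate alone)")
```

A ratio below 1 corresponds to a lower rate of new-onset diabetes than with methotrexate monotherapy, and a ratio above 1 to a higher rate.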

Dr. Michaud also reported additional analyses that further defined the associations between hydroxychloroquine treatment and reduced diabetes incidence: The reduction in diabetes incidence became statistically significant in patients only when they had received the drug for at least 2 years. The link with reduced diabetes incidence seemed dose dependent, with a stronger protective effect in patients who received at least 400 mg/day. Also, the strength of the protection waned in patients who had discontinued hydroxychloroquine for at least 6 months, and the protective effect completely disappeared once patients were off hydroxychloroquine for at least 1 year. Finally, the link between hydroxychloroquine use and reduced diabetes incidence also was statistically significant in patients who concurrently received a glucocorticoid, but a significant protective association disappeared in patients who received a statin as well as hydroxychloroquine.

Dr. Michaud had no disclosures. The study received no commercial support.

On Twitter @mitchelzoler

LONDON – U.S. rheumatoid arthritis patients had a significantly reduced incidence of type 2 diabetes when on treatment with either hydroxychloroquine or abatacept in an analysis of more than 13,000 patients enrolled in a U.S. national registry during 2000-2015.

The same analysis also showed statistically significant increases in the risk for new-onset type 2 diabetes in rheumatoid arthritis (RA) patients treated with a glucocorticoid or a statin, Kaleb Michaud, Ph.D., reported in a poster at the European Congress of Rheumatology.

“Our findings can inform clinicians about determining appropriate treatment decisions in rheumatoid arthritis patients at increased risk for developing type 2 diabetes,” said Dr. Michaud, an epidemiologist at the University of Nebraska in Omaha and also codirector of the National Data Bank for Rheumatic Diseases in Wichita, Kan., the source of the data used in his study.

Hydroxychloroquine, a relatively inexpensive drug that’s often used in RA patients in combination with other drugs, might be a good agent to consider adding to the therapeutic mix of RA patients at risk for developing type 2 diabetes, he said in an interview. Although the implications of this finding for the use of abatacept (Orencia) are less clear, it is a signal of a novel benefit from the drug that merits further study, Dr. Michaud said.

The findings also suggest that, when rheumatoid arthritis patients receive statin treatment, they are candidates for more intensive monitoring of diabetes onset, he added.

His analysis focused on the 13,669 RA patients who participated in the National Data Bank for Rheumatic Diseases for at least a year during 2000-2015 and had not been diagnosed as having diabetes of any type at the time they entered the registry. During a median 4.6 years of follow-up, 1,139 patients either self-reported receiving a new diagnosis of type 2 diabetes or began treatment with a hypoglycemic medication. Patients averaged about 59 years old at the time they entered the registry, and about 80% were women.

Dr. Michaud and his associates assessed the incidence rate of type 2 diabetes according to six categories of RA treatment: methotrexate monotherapy, which they used as the reference group; any treatment with abatacept with or without methotrexate; any treatment with hydroxychloroquine; any treatment with a glucocorticoid; treatment with any other disease-modifying antirheumatic drug (DMARD) with methotrexate; and treatment with any other DMARD without methotrexate or no DMARD treatment. A seventh treatment category was treatment with a statin.

A series of time-varying Cox proportional hazard models that adjusted for sociodemographic variables, comorbidities, body mass index, and measures of RA severity showed that, when compared with methotrexate monotherapy, patients treated with abatacept had a statistically significant 48% reduced rate of developing diabetes, and those treated with hydroxychloroquine had a statistically significant 33% reduced diabetes incidence, they reported.

In contrast, compared with methotrexate monotherapy, treatment with a glucocorticoid linked with a statistically significant 31% increased rate of type 2 diabetes, and treatment with a statin linked with a statistically significant 56% increased rate of diabetes. Other forms of DMARD treatment, including tumor necrosis factor inhibitors and other biologic DMARDs, had no statistically significant link with diabetes development.

Dr. Michaud also reported additional analyses that further defined the associations between hydroxychloroquine treatment and reduced diabetes incidence: The reduction in diabetes incidence became statistically significant in patients only when they had received the drug for at least 2 years. The link with reduced diabetes incidence seemed dose dependent, with a stronger protective effect in patients who received at least 400 mg/day. Also, the strength of the protection waned in patients who had discontinued hydroxychloroquine for at least 6 months, and the protective effect completely disappeared once patients were off hydroxychloroquine for at least 1 year. Finally, the link between hydroxychloroquine use and reduced diabetes incidence also was statistically significant in patients who concurrently received a glucocorticoid, but a significant protective association disappeared in patients who received a statin as well as hydroxychloroquine.

Dr. Michaud had no disclosures. The study received no commercial support.

On Twitter @mitchelzoler

LONDON – U.S. rheumatoid arthritis patients had a significantly reduced incidence of type 2 diabetes when on treatment with either hydroxychloroquine or abatacept in an analysis of more than 13,000 patients enrolled in a U.S. national registry during 2000-2015.

The same analysis also showed statistically significant increases in the risk for new-onset type 2 diabetes in rheumatoid arthritis (RA) patients treated with a glucocorticoid or a statin, Kaleb Michaud, Ph.D., reported in a poster at the European Congress of Rheumatology.

“Our findings can inform clinicians about determining appropriate treatment decisions in rheumatoid arthritis patients at increased risk for developing type 2 diabetes,” said Dr. Michaud, an epidemiologist at the University of Nebraska in Omaha and also codirector of the National Data Bank for Rheumatic Diseases in Wichita, Kan., the source of the data used in his study.

Hydroxychloroquine, a relatively inexpensive drug that’s often used in RA patients in combination with other drugs, might be a good agent to consider adding to the therapeutic mix of RA patients at risk for developing type 2 diabetes, he said in an interview. Although the implications of this finding for the use of abatacept (Orencia) are less clear, it is a signal of a novel benefit from the drug that merits further study, Dr. Michaud said.

The findings also suggest that, when rheumatoid arthritis patients receive statin treatment, they are candidates for more intensive monitoring of diabetes onset, he added.

His analysis focused on the 13,669 RA patients who participated in the National Data Bank for Rheumatic Diseases for at least a year during 2000-2015 and had not been diagnosed as having diabetes of any type at the time they entered the registry. During a median 4.6 years of follow-up, 1,139 patients either self-reported receiving a new diagnosis of type 2 diabetes or began treatment with a hypoglycemic medication. Patients averaged about 59 years old at the time they entered the registry, and about 80% were women.

Dr. Michaud and his associates assessed the incidence rate of type 2 diabetes according to six categories of RA treatment: methotrexate monotherapy, which they used as the reference group; any treatment with abatacept with or without methotrexate; any treatment with hydroxychloroquine; any treatment with a glucocorticoid; treatment with any other disease-modifying antirheumatic drug (DMARD) with methotrexate; and treatment with any other DMARD without methotrexate or no DMARD treatment. A seventh treatment category was treatment with a statin.

A series of time-varying Cox proportional hazard models that adjusted for sociodemographic variables, comorbidities, body mass index, and measures of RA severity showed that, when compared with methotrexate monotherapy, patients treated with abatacept had a statistically significant 48% reduced rate of developing diabetes, and those treated with hydroxychloroquine had a statistically significant 33% reduced diabetes incidence, they reported.

In contrast, compared with methotrexate monotherapy, treatment with a glucocorticoid was linked with a statistically significant 31% increased rate of type 2 diabetes, and treatment with a statin was linked with a statistically significant 56% increased rate of diabetes. Other forms of DMARD treatment, including tumor necrosis factor inhibitors and other biologic DMARDs, had no statistically significant link with diabetes development.
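As a rough illustration of how these percentages relate to the ratios a Cox model produces, the sketch below converts each reported percent change into an implied hazard ratio relative to methotrexate monotherapy. It assumes each percentage equals (hazard ratio − 1) × 100; the report gives only the percentages, so the ratios printed here are derived for illustration and are not figures from the study. The function name is hypothetical.

```python
def hazard_ratio_from_percent_change(percent_change: float) -> float:
    """Convert a relative change in rate (e.g. -48 for a 48% reduction) to a ratio.

    Assumes the reported percentage is (hazard ratio - 1) * 100.
    """
    return 1.0 + percent_change / 100.0


# Percent changes as reported, all relative to methotrexate monotherapy.
reported_changes = {
    "abatacept": -48,
    "hydroxychloroquine": -33,
    "glucocorticoid": +31,
    "statin": +56,
}

for treatment, change in reported_changes.items():
    implied_hr = hazard_ratio_from_percent_change(change)
    print(f"{treatment}: implied hazard ratio of about {implied_hr:.2f}")
```

A ratio below 1 corresponds to a lower rate of new-onset diabetes than in the reference group, and a ratio above 1 to a higher rate.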

Dr. Michaud also reported additional analyses that further defined the associations between hydroxychloroquine treatment and reduced diabetes incidence: The reduction in diabetes incidence became statistically significant in patients only when they had received the drug for at least 2 years. The link with reduced diabetes incidence seemed dose dependent, with a stronger protective effect in patients who received at least 400 mg/day. Also, the strength of the protection waned in patients who had discontinued hydroxychloroquine for at least 6 months, and the protective effect completely disappeared once patients were off hydroxychloroquine for at least 1 year. Finally, the link between hydroxychloroquine use and reduced diabetes incidence also was statistically significant in patients who concurrently received a glucocorticoid, but a significant protective association disappeared in patients who received a statin as well as hydroxychloroquine.

Dr. Michaud had no disclosures. The study received no commercial support.

On Twitter @mitchelzoler

AT THE EULAR 2016 CONGRESS

Key clinical point: U.S. rheumatoid arthritis patients on hydroxychloroquine or abatacept had a significantly reduced incidence of type 2 diabetes, compared with patients on methotrexate monotherapy.

Major finding: Hydroxychloroquine cut type 2 diabetes incidence by 33% and abatacept cut it by 48%, compared with patients on methotrexate monotherapy.

Data source: Analysis of data collected from 13,669 U.S. rheumatoid arthritis patients enrolled in a registry during 2000-2015.

Disclosures: Dr. Michaud had no disclosures. The study received no commercial support.

Temozolomide + RT boosts survival in elderly with glioblastoma

CHICAGO – Adding concomitant and adjuvant temozolomide to a shorter course of radiotherapy (RT) for elderly patients with glioblastoma improves progression-free survival, according to a phase III study presented at the annual meeting of the American Society of Clinical Oncology.

Patients both with and without MGMT promoter methylation in their tumors benefited, with the greatest benefit accruing to those with promoter methylation, reported Dr. James Perry, head of the division of neurology at Sunnybrook Health Sciences Centre, Toronto.

The age of peak incidence of glioblastoma is 64 years, and the incidence is increasing. Best practice has been surgical resection and 6 weeks of radiation combined with oral temozolomide. A pivotal trial just over 10 years ago was restricted to patients younger than age 70 years and included few patients older than 65 years. It showed decreasing benefit of temozolomide with increasing age. Furthermore, trials in the elderly have compared only head-to-head radiation schedules or radiation alone with temozolomide alone. There has been no evidence on which to base practice for combining radiotherapy with temozolomide, Dr. Perry said.

To address this deficiency, Dr. Perry and his colleagues randomly assigned patients 65 years or older with newly diagnosed glioblastoma either to a short course of RT, consisting of 40 Gy in 15 fractions over 3 weeks, or to the same radiotherapy plus 3 weeks of concomitant temozolomide followed by adjuvant temozolomide for 12 months or until progression.

Patients (n = 562; 281 in each arm) averaged 73 years of age (range, 65-90 years); 77% had an Eastern Cooperative Oncology Group performance score (PS) of 0 or 1 and the rest had a PS of 2; and 58% had undergone tumor resection.

Median overall survival was 9.3 months with the combined therapy and 7.6 months with radiation alone. Median progression-free survival was 5.3 vs. 3.9 months, respectively, with a hazard ratio of 0.50 (P less than .0001).

MGMT promoter methylation is a predictive marker for benefit from chemotherapy and a prognostic factor for survival. Forty-six percent of the tumors had this promoter methylation.

Temozolomide use added relatively more benefit if promoters were methylated. Patients with methylated promoters had an overall survival of 7.7 months with radiation alone and 13.5 months with combined radiation and temozolomide (HR, 0.53; P = .0001). For unmethylated promoters, overall survival with radiation alone was 7.9 months and was 10.0 months when temozolomide was added (HR, 0.75; P = .055), suggesting some benefit from temozolomide, although not as great as with tumors with methylated promoters.
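To put the reported medians side by side, the following sketch computes the relative gain in median survival for each comparison. It is a simple calculation on the medians quoted above and rests on the assumption that a ratio of medians is a fair summary; it is not the hazard ratio, which comes from the full survival curves. The function and variable names are hypothetical.

```python
def relative_increase(treated: float, control: float) -> float:
    """Percent increase of the treated median over the control median."""
    return (treated - control) / control * 100.0


# (median with RT + temozolomide, median with RT alone), in months, as reported.
medians = {
    "overall survival, all patients": (9.3, 7.6),
    "progression-free survival, all patients": (5.3, 3.9),
    "overall survival, methylated MGMT promoter": (13.5, 7.7),
    "overall survival, unmethylated MGMT promoter": (10.0, 7.9),
}

for label, (combined, rt_alone) in medians.items():
    gain = relative_increase(combined, rt_alone)
    print(f"{label}: {combined} vs. {rt_alone} months (+{gain:.1f}%)")
```

On these figures the relative gain in median overall survival is roughly three times larger in the methylated group than in the unmethylated group, which matches the pattern described in the text.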

“The advantage of this combined treatment with chemoradiation was achieved with minimal side effects,” Dr. Perry reported. Mild nausea and vomiting occurred mostly in the first weeks of therapy, and a slight increase in grade 3/4 hematologic toxicity was seen but occurred in less than 5% of patients. No differences in quality of life were reported between the treatment arms.

Most patients could easily complete the treatment plan, with 97% adherence to the 3 weeks of chemoradiation. “This is quite important because the elderly often have difficulties with mobility, distance from treatment centers, and sometimes don’t have caregivers [who] are able to bring them back and forth for treatment,” Dr. Perry said. He suggested that the shorter course of radiotherapy may have been one factor in the high adherence rate.

Based on these results of higher efficacy using concomitant and adjuvant temozolomide with manageable toxicities and no sacrifice in quality of life, “oncologists now have evidence to consider radiation with chemotherapy in all newly diagnosed elderly patients with glioblastoma,” he said.

Dr. Julie Vose, president of the American Society of Clinical Oncology, said the study was important because it compared the treatments in the appropriate patient population, that is, in the elderly who have the highest incidence of glioblastoma.

AT THE 2016 ASCO ANNUAL MEETING

Key clinical point: Combining temozolomide with radiotherapy prolongs survival for elderly with glioblastoma.

Major finding: Combining temozolomide with radiotherapy increased survival by 33% vs. radiation alone.

Data source: Global phase III study of 562 elderly patients with newly diagnosed glioblastoma randomized to temozolomide plus radiation vs. radiation alone.

Disclosures: Dr. Perry reported stock or other ownership interests in DelMar Pharmaceuticals and VBL Therapeutics. Dr. Vose reported receiving honoraria from Sanofi-Aventis and Seattle Genetics; consulting for Bio Connections; and receiving research funding to her institution from Acerta, Bristol-Myers Squibb, Celgene, Genentech, GlaxoSmithKline, Incyte, Janssen Biotech, Kite Pharma, Pharmacyclics, and Spectrum Pharmaceuticals.

Does Preoperative Hypercapnia Predict Postoperative Complications in Patients with Obstructive Sleep Apnea?

Clinical question: Are patients with obstructive sleep apnea (OSA) and preoperative hypercapnia more likely to experience postoperative complications than those without?

Background: Obesity hypoventilation syndrome (OHS) is known to increase medical morbidity in patients with OSA, but its impact on postoperative outcome is unknown.

Study design: Retrospective cohort study.

Setting: Single tertiary-care center.

Synopsis: The study examined 1,800 patients with body mass index (BMI) ≥30 who underwent polysomnography and elective non-cardiac surgery (NCS) and had a blood gas performed. Of those, 194 patients were identified as having OSA with hypercapnia, and 325 were identified as having only OSA. Investigators found that the presence of hypercapnia in patients with OSA, whether from OHS, COPD, or another cause, was associated with worse postoperative outcomes. They found statistically significant increases in postoperative respiratory failure (21% versus 2%), heart failure (8% versus 0%), tracheostomy (2% versus 1%), and ICU transfer (21% versus 6%). The difference in mortality did not reach significance.
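The size of these differences can be sketched as crude risk differences and risk ratios. The calculation below uses only the rounded percentages quoted in the synopsis, so the results are approximate and unadjusted; they are not effect estimates reported by the study. The function names are hypothetical.

```python
from typing import Optional


def risk_difference(exposed_pct: float, unexposed_pct: float) -> float:
    """Absolute difference in risk, in percentage points."""
    return exposed_pct - unexposed_pct


def risk_ratio(exposed_pct: float, unexposed_pct: float) -> Optional[float]:
    """Crude risk ratio; None when the comparison risk is zero (ratio undefined)."""
    if unexposed_pct == 0:
        return None
    return exposed_pct / unexposed_pct


# (OSA with hypercapnia, OSA only), percentages as reported in the synopsis.
outcomes = {
    "respiratory failure": (21, 2),
    "heart failure": (8, 0),
    "tracheostomy": (2, 1),
    "ICU transfer": (21, 6),
}

for name, (hypercapnic, osa_only) in outcomes.items():
    diff = risk_difference(hypercapnic, osa_only)
    ratio = risk_ratio(hypercapnic, osa_only)
    ratio_text = "undefined" if ratio is None else f"{ratio:.1f}"
    print(f"{name}: +{diff} percentage points, risk ratio {ratio_text}")
```

The heart failure comparison shows why both measures are worth reporting: with a 0% rate in the comparison group the ratio cannot be computed, while the absolute difference still can.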

The major limitation to the study is that hypercapnia is underrecognized in this patient population, and as a result, only patients who had a blood gas were included; many hypercapnic patients may have had elective NCS without receiving a blood gas and were thus excluded.

Bottom line: Consider performing a preoperative blood gas in patients with OSA undergoing elective NCS to help with postoperative complication risk assessment.

Citation: Kaw R, Bhateja P, Paz y Mar H, et al. Postoperative complications in patients with unrecognized obesity hypoventilation syndrome undergoing elective noncardiac surgery. Chest. 2016;149(1):84-91. doi:10.1378/chest.14-3216.

Rapid Immunoassays for Heparin-Induced Thrombocytopenia Offer Fast Screening Possibilities

Clinical question: How useful are rapid immunoassays (RIs) compared to other tests for heparin-induced thrombocytopenia (HIT)?

Background: HIT is a clinicopathologic diagnosis, which traditionally requires clinical criteria and laboratory confirmation through initial testing with enzyme-linked immunosorbent assay (ELISA) and “gold standard” testing with washed platelet functional assays when available. There are an increasing number of RIs available, which have lab turnaround times of less than one hour. Their clinical utility is not well understood.

Study design: Meta-analysis.

Setting: Twenty-three studies.

Synopsis: The authors included 23 studies in the review, covering 5,637 unique patients from heterogeneous (medical, surgical, non-ICU) populations. The studies examined six different RIs developed in recent years. All RIs examined had excellent negative predictive values (NPVs), ranging from 0.99 to 1.00, though positive predictive values (PPVs) varied much more widely (0.42–0.71). The greatest limitation of this meta-analysis is that 17 of the studies were marked as “high risk of bias” because they did not compare the RIs to the “gold standard” assay.
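One way to see why a test can pair a near-perfect NPV with a modest PPV is to compute both from sensitivity, specificity, and disease prevalence using Bayes' rule. The sketch below does this with hypothetical inputs (98% sensitivity, 90% specificity, 10% prevalence) chosen purely for illustration; they are assumptions and are not values taken from the meta-analysis. The function name is likewise hypothetical.

```python
from typing import Tuple


def predictive_values(sensitivity: float, specificity: float,
                      prevalence: float) -> Tuple[float, float]:
    """Return (PPV, NPV) for a test applied at a given disease prevalence."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    true_neg = specificity * (1.0 - prevalence)
    false_neg = (1.0 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Hypothetical inputs for illustration only, not figures from the review.
ppv, npv = predictive_values(sensitivity=0.98, specificity=0.90, prevalence=0.10)
print(f"PPV = {ppv:.2f}, NPV = {npv:.3f}")
```

When the condition is uncommon among those tested, even a small false-positive rate produces many false positives relative to true positives, which pulls the PPV down while leaving the NPV high. This is consistent with using such a test mainly to rule a diagnosis out.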

Bottom line: RIs for the diagnosis of HIT have very high NPVs and may be usefully incorporated into the diagnostic algorithm for HIT, but they cannot take the place of “gold standard” washed platelet functional assays.

Citation: Sun L, Gimotty PA, Lakshmanan S, Cuker A. Diagnostic accuracy of rapid immunoassays for heparin-induced thrombocytopenia: a systematic review and meta-analysis [published online ahead of print January 14, 2016]. Thromb Haemost. doi:10.1160/TH15-06-0523.

Tandem ASCT for neuroblastoma comes with caveat

Photo by Chad McNeeley

CHICAGO—A phase 3 study of tandem autologous stem cell transplant (ASCT) for children with high-risk neuroblastoma has shown that tandem transplant as consolidation significantly improved event-free survival (EFS).

Nevertheless, the improvement comes “with an important caveat,” according to Julie R. Park, MD, “that this is in children who survive induction without disease progression after induction or severe induction-related toxicity.”

Of note, the tandem transplant did not increase toxicity or regimen-related mortality.

Dr Park, of the Seattle Children’s Hospital and University of Washington, presented the study data on behalf of the Children’s Oncology Group and the COG ANBL0532 Study Committee during the plenary session at the 2016 ASCO Annual Meeting (LBA3*).

Neuroblastoma, Dr Park explained, is a disease that occurs in young children and is the most common extracranial tumor of childhood. Patients with high-risk neuroblastoma account for 50% of all children diagnosed with the disease, and neuroblastoma accounts for more than 10% of all childhood cancer mortality.

The outcome for high-risk neuroblastoma patients is dismal: fewer than 50% survive following current multi-agent, aggressive therapy, she said.

Randomized clinical trials performed over the last 25 years “have taught us that dose intensification of therapy is important,” Dr Park said, “and the treatment of minimal residual disease with non-cross resistant therapies is equally important.”

Pilot studies of tandem ASCT demonstrated tolerable toxicity and suggested efficacy, and clinical trials demonstrated that collecting peripheral blood stem cells in small children with neuroblastoma was feasible.

So the investigators undertook the current study to improve 3-year EFS of high-risk neuroblastoma patients using a strategy of tandem transplant consolidation.

Eligibility

Patients with newly diagnosed high-risk neuroblastoma were eligible. They had to have metastatic disease (INSS stage 4) and be older than 18 months to be eligible for the trial.

They could be any age if they had INSS stage 2, 3, or 4 with MYCN amplification.

“Our prior studies had identified that the group with regional disease and toddlers with MYCN non-amplified metastatic disease had a better outcome compared to other children with high-risk neuroblastoma,” Dr Park commented.

“Therefore, these children were non-randomly assigned to single transplant and are not included in the randomization results provided today,” she explained.

All children had to have normal cardiac, liver, and renal function.

Trial design: Induction

Induction therapy consisted of 6 cycles of chemotherapy—2 cycles of cyclophosphamide/topotecan, followed by 4 alternating cycles of cisplatin/etoposide and cyclophosphamide/vincristine/doxorubicin.

Peripheral blood stem cells (PBSCs) were harvested after the first 2 cycles of dose intensive cyclophosphamide and topotecan.

Patients had surgery on the primary tumor after 5 cycles of induction.

Trial design: Consolidation

Following completion of induction therapy, patients were assessed for eligibility for consolidation. These criteria included sufficient PBSC harvest, adequate organ function, no evidence of disease progression, and consent for post-induction therapy.

Children eligible for consolidation were randomized to either the standard single transplant with carboplatin, etoposide, and melphalan or a tandem transplant with cyclophosphamide and thiotepa, followed 6 to 8 weeks later by a second transplant with carboplatin, etoposide, and melphalan.

Patients in both arms received radiotherapy to their primary tumor site.

Trial design: Post-consolidation

Patients then went on to post-consolidation chemotherapy with isotretinoin.

“During the conduct of ANBL0532,” Dr Park pointed out, “there was an additional trial running within the Children’s Oncology Group for which patients were eligible to enroll.”

From 2007 to 2009, the additional trial (ANBL0032) randomized patients to the then-standard isotretinoin or to isotretinoin plus the anti-GD2 antibody dinutuximab and cytokines.

“In 2009, results of that study were released and the dinutuximab arm was superior,” she noted, so patients were no longer randomized to isotretinoin only.

Prior studies indicated that 40% of patients went off trial at the end of induction therapy, so the investigators enrolled 665 patients to ensure a randomization of at least 332 patients.
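The enrollment arithmetic can be sketched as follows. The report does not say how the 665-patient target was derived, so this is only a back-of-the-envelope check that assumes a flat 40% attrition before randomization; the function names are hypothetical.

```python
import math


def expected_randomized(enrolled: int, dropout_fraction: float) -> float:
    """Patients expected to reach randomization after pre-randomization attrition."""
    return enrolled * (1.0 - dropout_fraction)


def minimum_enrollment(target_randomized: int, dropout_fraction: float) -> int:
    """Smallest enrollment expected to yield the target number randomized."""
    return math.ceil(target_randomized / (1.0 - dropout_fraction))


print(expected_randomized(665, 0.40))   # expected number randomized from 665 enrolled
print(minimum_enrollment(332, 0.40))    # enrollment needed to expect 332 randomized
```

Under this assumption, enrolling 665 patients leaves a margin above the 332 randomized patients the investigators wanted to ensure.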

Data cut-off for the presentation was March 31, 2016.

Patient demographics

Of the 665 patients accrued, 13 were ineligible. Twenty-seven patients with favorable prognosis were non-randomly assigned to single ASCT.

Forty percent (229 patients) went off protocol therapy prior to randomization. The main reasons for this were death (8 patients), progressive disease (48 patients), and physician and patient discretion (206 patients).

Investigators randomized 355 patients, 179 to single ASCT and 176 to tandem ASCT.

The median age was 37.2 months, 38.2% of patients had MYCN amplification, and 88% had INSS stage 4 disease.

The randomized cohorts of patients were similar to the overall patient cohort in terms of age, MYCN amplification, and stage 4 disease.

The randomizations were also well balanced in terms of MYCN amplifications, stage 4 disease, and response to induction chemotherapy.

The investigators retrospectively reviewed the patient characteristics, including age at diagnosis, early response to induction, and assignment to receive immunotherapy, “and there was no statistically significant difference between the randomized cohorts for these characteristics,” Dr Park said.

Safety

The most commonly observed grade 3 or greater non-hematologic toxicities in the single and tandem arms included infection (18.3% and 17.9%, respectively), mucosal toxicities (17.2% and 13.6%, respectively), and hepatic toxicities, including sinusoidal obstruction syndrome (6.5% and 6.2%, respectively).

The investigators observed no significant differences between the arms in the rates of these toxicities. They also observed no significant difference in regimen-related mortality, which was 4.1% in the single arm and 1.2% in the tandem arm.

Efficacy

For all patients (n=652) from time of enrollment, the 3-year EFS was 51.0% and the 3-year OS was 68.3%.

In randomized patients (n=355), from the time of randomization, the 3-year EFS was 54.8% and the 3-year OS, 71.5%, with a median survival time of 4.6 years.

There was a statistically significant improvement in EFS for those patients assigned to tandem transplant, with a 3-year EFS of 61.4% as compared to those assigned to single transplant, who had a 3-year EFS of 48.4% (P=0.0081).

There was not a statistically significant difference in OS. However, Dr Park pointed out that the trial was not powered to detect a difference in overall survival.

When looking at the cohort of stage 4 patients who were older than 18 months, investigators again saw a statistically significant improvement in EFS. The 3-year EFS was 59.1% for tandem transplants vs 45.5% for single transplants (P=0.0083).

Again, there was no difference in OS in the INSS stage 4 patients older than 18 months.

“And finally, when we analyzed the outcome for children who were assigned to receive immunotherapy,” Dr Park pointed out, “from the time of start of immunotherapy, there was a statistically significant improvement in both event-free survival and overall survival for those children who received tandem transplants.”

The 3-year EFS for patients who received a tandem transplant and immunotherapy was 73.7% compared to the 3-year EFS of 56.0% for patients who received a single transplant and immunotherapy (P=0.0033).

The 3-year overall survival was also significantly improved for patients who received tandem transplants and immunotherapy (83.7%) compared to those who received a single transplant and immunotherapy (74.4%) (P=0.0322).
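For a side-by-side view, the absolute differences between arms can be computed from the percentages quoted above. This is a minimal sketch using simple subtraction only; it does not reproduce the trial's statistical analysis, and the labels and helper name are illustrative.

```python
from decimal import Decimal

# 3-year outcomes (%) quoted in the article, as (tandem, single).
RESULTS = {
    "EFS, all randomized": ("61.4", "48.4"),
    "EFS, stage 4 older than 18 months": ("59.1", "45.5"),
    "EFS, with immunotherapy": ("73.7", "56.0"),
    "OS, with immunotherapy": ("83.7", "74.4"),
}


def absolute_difference(tandem: str, single: str) -> Decimal:
    """Tandem minus single, in percentage points (Decimal avoids float noise)."""
    return Decimal(tandem) - Decimal(single)


DIFFERENCES = {
    label: absolute_difference(tandem, single)
    for label, (tandem, single) in RESULTS.items()
}

for label, diff in DIFFERENCES.items():
    print(f"{label}: {diff} percentage points")
```

These figures are descriptive only; the article reports P values for each comparison but no hazard ratios or confidence intervals.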

Conclusions

“Tandem transplant consolidation improves outcome in patients with high-risk neuroblastoma,” Dr Park said, with the important caveat mentioned earlier.

The tandem transplant does not increase toxicity or regimen-related mortality, and the benefit of tandem ASCT remains following anti-GD2-directed immunotherapy.

*Data in the presentation differ slightly from the abstract

Photo by Chad McNeeley

CHICAGO—A phase 3 study of tandem autologous stem cell transplant (ASCT) for children with high-risk neuroblastoma has shown that tandem transplant as consolidation significantly improved event-free survival (EFS).

Nevertheless, the improvement comes “with an important caveat,” according to Julie R. Park, MD, “that this is in children who survive induction without disease progression after induction or severe induction-related toxicity.”

Of note, the tandem transplant did not increase toxicity or regimen-related mortality.

Dr Park, of the Seattle Children’s Hospital and University of Washington, presented the study data on behalf of the Children’s Oncology Group and the COG ANBL0532 Study Committee during the plenary session at the 2016 ASCO Annual Meeting (LBA3*).

Neuroblastoma, Dr Park explained, is a disease that occurs in young children and is the most common extracranial tumor of childhood. Patients with high-risk neuroblastoma account for 50% of all children diagnosed with the disease, and neuroblastoma accounts for more than 10% of all childhood cancer mortality.

The outcome for high-risk neuroblastoma patients is dismal: fewer than 50% survive following current multi-agent, aggressive therapy, she said.

Randomized clinical trials performed over the last 25 years “have taught us that dose intensification of therapy is important,” Dr Park said, “and the treatment of minimal residual disease with non-cross resistant therapies is equally important.”

Pilot studies of tandem ASCT demonstrated tolerable toxicity and suggested efficacy, and clinical trials demonstrated that collecting peripheral blood stem cells in small children with neuroblastoma was feasible.

So the investigators undertook the current study to improve 3-year EFS of high-risk neuroblastoma patients using a strategy of tandem transplant consolidation.

Eligibility

Patients with newly diagnosed high-risk neuroblastoma were eligible. They had to have metastatic disease (INSS stage 4) and be older than 18 months to be eligible for the trial.

They could be any age if they had INSS stage 2, 3, or 4 with MYCN amplification.

“Our prior studies had identified that the group with regional disease and toddlers with MYCN non-amplified metastatic disease had a better outcome compared to other children with high-risk neuroblastoma,” Dr Park commented.

“Therefore, these children were non-randomly assigned to single transplant and are not included in the randomization results provided today,” she explained.

All children had to have normal cardiac, liver, and renal function.
