Botulinum toxin for chronic pain: What's on the horizon?

Botulinum toxin (BoNT) was first approved by the US Food and Drug Administration (FDA) for the treatment of strabismus and blepharospasm in 1989. Since then, approved indications have expanded to include spasticity, cervical dystonia, severe axillary hyperhidrosis, bladder dysfunction, and chronic migraine headache, as well as multiple cosmetic uses.1,2 Over the course of 30 years of clinical use, BoNT has proven to be effective and safe.3,4 This has led to the expanded use of BoNT for additional medical conditions.1,2

In the review that follows, we will discuss the utility of BoNT in the treatment of headaches, spasticity, and cervical dystonia. We will then explore the evidence for emerging indications that include chronic joint pain, trigeminal neuralgia, and plantar fasciitis. But first, a brief word about how BoNT works and its safety profile.

Seven toxins, but only 2 are used for medical purposes

BoNT is naturally produced by Clostridium botulinum, an anaerobic, spore-forming bacterium.1 BoNT inhibits acetylcholine release from presynaptic vesicles at the neuromuscular junctions, which results in flaccid paralysis in peripheral skeletal musculature and autonomic nerve terminals.1,5 These effects from BoNT can last 3 to 6 months.1

Seven different toxins have been identified (A, B, C, D, E, F, and G), but only toxins A and B are currently used for medical purposes.5 Both have similar effects, although there are slight differences in mechanism of action. Toxin B injections are also reported to be slightly more painful. Preparation also varies by brand, with some products requiring reconstitution. Certain types of BoNT require refrigeration, and an in-depth review of the manufacturer’s guidelines is recommended before use.

Safety and adverse effects

Although BoNT is 1 of the most lethal toxins known to humans, it has been used in clinical medicine for more than 30 years and has proven to be safe if used properly.3 Adverse effects are rare and are often location and dose dependent (200 U and higher). Immediate or acute adverse effects are usually mild and can include bruising, headache, allergic reactions, edema, skin conditions, infection, or pain at the injection site.4 Delayed adverse effects can include muscle weakness that persists throughout the 3 to 6 months of duration and is usually related to incorrect placement or unintentional spread.4

Serious adverse events are rare: there are reports of the development of botulism, generalized paralysis, dysphagia, respiratory effects, and even death in patients who had received BoNT injections.3 In a majority of cases, a direct relationship with BoNT was never established, and in most incidents reported, there were significant comorbidities that could have contributed to the adverse event.3 These events appear to be related to higher doses of BoNT, as well as possible incorrect injection placement.3

Knowledge of anatomy and correct placement of BoNT are vitally important, as they have a significant impact on the effectiveness of treatment and adverse events.3 To prevent adverse events, those administering BoNT need to be familiar with the BoNT brand being used, verify proper storage consistent with the manufacturer’s recommendations, and confirm correct dosages and a proper reconstitution process.3

BoNT is contraindicated in those with a history of a previous anaphylactic reaction to BoNT. Patients with known hypersensitivity to BoNT, including those with neuromuscular junction diseases and anterior horn disorders, should be considered for other forms of treatment due to the risk of an exaggerated response. No adverse events have been recorded in regard to pregnancy and lactation, although these remain a potential contraindication.3,4,6

Taking a closer look at current indications

Headaches

Chronic migraine (CM) is defined by the International Headache Society as at least 15 days per month with headaches and 8 of those days with migraine features. BoNT has been FDA approved for treatment of CM since 2011. This was based on 2 large, double-blind, randomized, placebo-controlled trials that showed a significant reduction from baseline for headaches and migraine days, total time, and frequency of migraines.7,8

Subsequent studies have continued to show benefit for CM treatment. In a recent Cochrane systematic review and meta-analysis, it was determined that BoNT can decrease frequency of CM by 2 days per month, and it is recommended by several organizations as a treatment option for CM.9

Low-quality evidence has not shown benefit for tension-type headaches. However, further research is warranted, especially for chronic tension-type headache, which is defined as daily tension headaches.10

Spasticity

Spasticity is caused by an insult to the brain or spinal cord and can often occur after a stroke, brain or spinal cord injury, cerebral palsy, or other neurologic condition.11 BoNT was initially FDA approved in 2010 for treatment of upper limb spasticity in adults, although it had been used for treatment of spasticity for more than 20 years prior to that. It currently is approved for upper and lower limb spasticity in adults and recently was expanded to include pediatrics.12

A small case series conducted soon after BoNT was introduced showed promising results, and subsequent meta-analyses and systematic reviews have shown positive results for use of BoNT for the management of spasticity.13 Studies have begun to focus on specific regions of the upper and lower limbs to identify optimal sites for injections.

Cervical dystonia

Cervical dystonia (CD) is the most common form of dystonia and is defined as impairment of activities of daily living due to abnormal postures of the head and neck. BoNT was approved for CD in 1999 after several pivotal randomized placebo-controlled double-blind studies showed improvement of symptoms.14 Several BoNT formulations have been given Level A classification, and can be considered a potential first-line treatment for CD.15,16 The most common adverse effects reported have been dry mouth, dysphagia, muscle weakness, and neck pain.14-16

BoNT is currently being used off-label for management of multiple types of dystonia with reported success, as research on its use for noncervical dystonia (including limb, laryngeal, oromandibular, and truncal) continues. Although there are case series and some randomized trials exploring BoNT for certain types of dystonia, most are lacking high-quality evidence from double-blind, randomized controlled trials.14-16

Exploring the evidence for emerging indications

There has been significant interest in using BoNT for management of both nociceptive and neuropathic pain symptoms.5

Nociceptive pain is the irritation and painful response to actual or potential tissue damage. It is a major component of chronic pain and is difficult to treat, with limited effective options.5,17

Neuropathic pain is related to abnormalities that disrupt the normal function of the nervous system. Abnormalities could be related to anatomic or structural changes that cause compression, trauma, scar tissue, or a number of other conditions that affect nerve function. These can be either central or peripheral and can be caused by multiple etiologies.

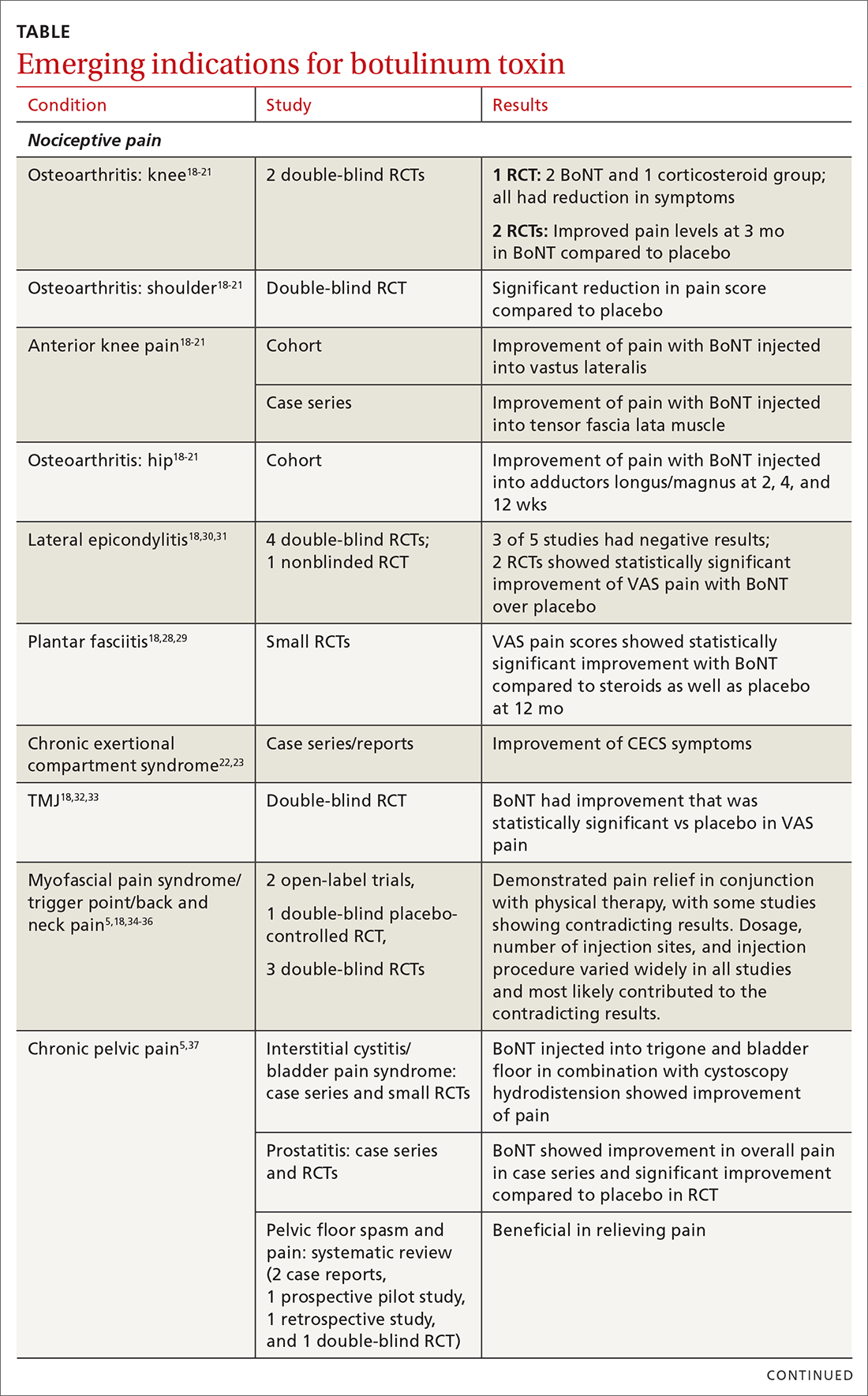

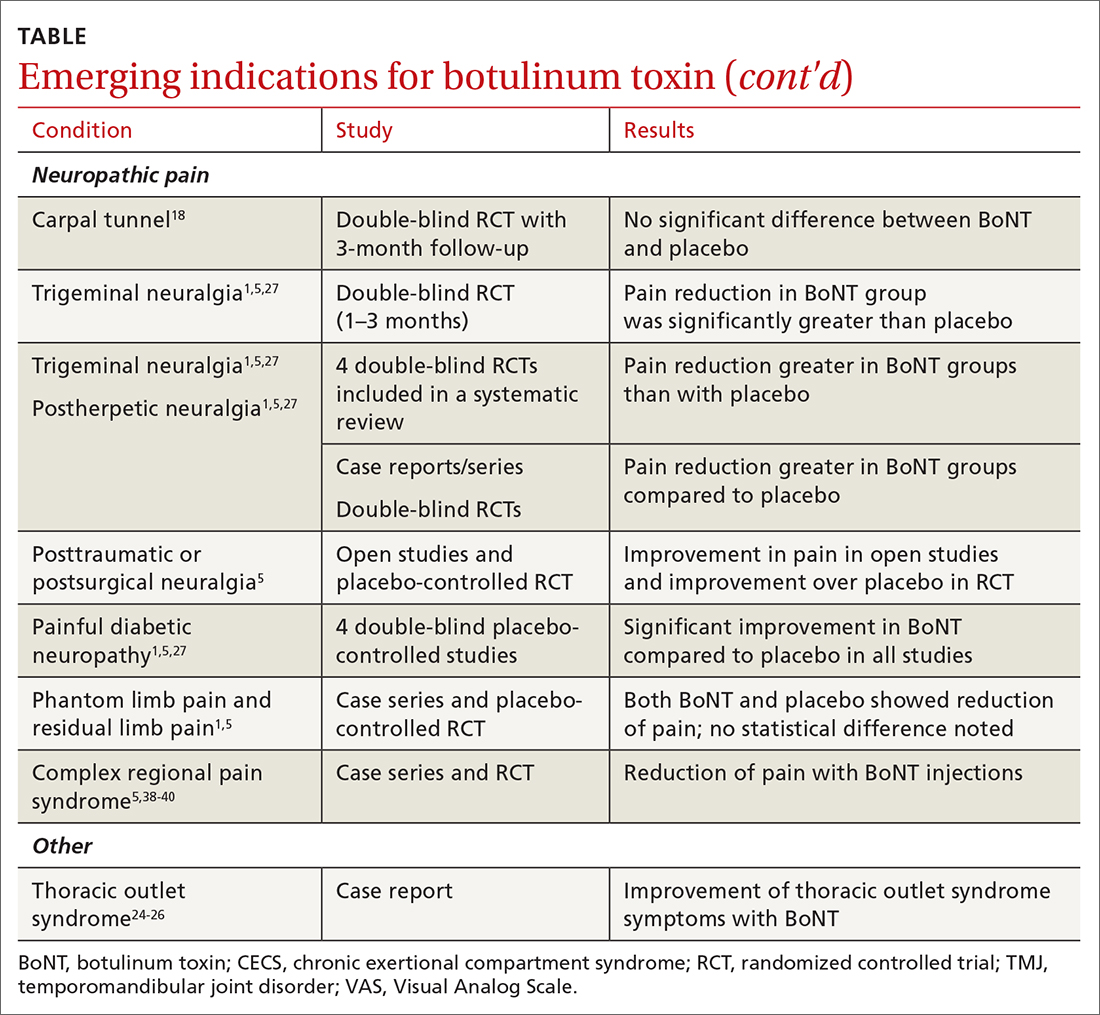

The following discussion explores the evidence for potential emerging indications for BoNT. The TABLE1,5,18-40 summarizes what we know to date.

Chronic joint pain

Refractory joint pain is difficult to treat and can be debilitating for patients. It can have multiple causes but is most commonly related to arthritic changes. Due to the difficulty with treatment, there have been attempts to use BoNT as an intra-articular treatment for refractory joint pain. Results vary and are related to several factors, including the initial degree of pain, the BoNT dosage, and the formulation used, as well as the joint injected.

There appears to be a potentially significant improvement in short-term pain with BoNT compared to conventional therapies, such as physical therapy, nonsteroidal anti-inflammatory drugs, corticosteroid injections, and hyaluronic acid injections. In studies evaluating long-term benefits, it was noted that after 6 months, there was no significant difference between BoNT and control groups.19-21

The knee joint has been the focus of most research, but BoNT has also been used for shoulder and ankle pain, with success. Recent meta-analyses evaluating knee and shoulder pain have shown BoNT is safe and effective for joint pain.20,21 There has been no significant difference noted in adverse events with BoNT compared to controls. Currently, more long-term data and research are needed, but BoNT is safe and a potentially effective treatment option for short-term relief of refractory joint pain.19-21

Chronic exertional compartment syndrome

Chronic exertional compartment syndrome (CECS) is defined subjectively as pain in a specific compartment that develops during exercise and resolves upon stopping, as well as objectively by an increase in intramuscular pressure.22 It is most common in the lower leg and is a difficult condition to manage. Nonsurgical and surgical options are successful at returning the patient to full activity only 40% to 80% of the time.23

An initial study done in 2013 of BoNT injected into the anterior and lateral compartments of the lower extremity showed that symptoms resolved completely in 94% of patients treated.22 The actual mechanism of benefit is not clearly understood but is potentially related to muscle atrophy and loss of contractile tissue. However, it has not been reported that these changes have affected the strength or performance of patients who receive BoNT for CECS.23

Thoracic outlet syndrome

Thoracic outlet syndrome (TOS) is a compression of neurovascular structures within the thoracic outlet. There are several locations of potential compression, as well as possible neurogenic, vascular, or nonspecific manifestations.24 Compression can be from a structural variant, such as a cervical rib, or due to soft tissue from the scalene or pectoralis musculature. TOS is difficult to diagnose and treat. Physical therapy is the mainstay of treatment, but failure is common and treatment options are otherwise limited. Decompression surgery is an option if conservative management fails, but it has a high recurrence rate.24

In an effort to harness the therapeutic value of muscle atrophy, denervation, and relaxation afforded by BoNT, clinicians have injected the agent into the anterior and middle scalenes and the pectoralis minor to provide patients with relief from TOS.24 This treatment requires advanced imaging with either fluoroscopy or ultrasound guidance for correct placement and knowledge of surrounding anatomy. Small case reports and case series have demonstrated success, but a small double-blind randomized controlled study of 37 individuals with neurogenic TOS in 2011 did not show a reduction in symptoms.25 Multiple subsequent case reports and case series have continued to show positive results.24,25 A recent retrospective study showed that patients with TOS who had positive results with BoNT had better surgical outcomes.26

Trigeminal neuralgia and peripheral nerve pain

A meta-analysis in 2019 reviewed evidence for trigeminal neuralgia as well as other types of peripheral neuropathies, including diabetic neuropathy and postherpetic neuropathy. It showed that BoNT injections are safe, as well as effective, for short-term relief at 3 months. However, overall study sizes were small and long-term data are still lacking; larger high-quality studies are needed for further substantiation.27

Plantar fasciitis

BoNT has been used for treatment of plantar fasciitis. Small randomized controlled studies have compared BoNT to both placebo and corticosteroids, showing that BoNT has better long-term outcomes at 3, 6, and 12 months.28,29 BoNT is currently being used when standard treatments have failed; however, larger randomized controlled studies are still needed prior to BoNT being accepted as standard treatment.29

Lateral epicondylitis

A systematic review and meta-analysis done in 2017 showed that BoNT is superior to placebo at 16 weeks. No significant difference was noted between BoNT and corticosteroids at 8 weeks, although corticosteroids did demonstrate better improvement at the short-term interval of 2 to 4 weeks.30 As expected, BoNT was associated with grip-strength weakness compared to placebo and corticosteroids at 12 weeks. Subsequent small randomized controlled studies have continued to show benefit with BoNT, but all studies noted grip weakness (which resolved) and duration of effect was dose dependent.30,31

Temporomandibular joint pain

BoNT has been studied in the treatment of temporomandibular joint (TMJ) pain and dislocations since 1998, and was shown to improve quality of life.32 BoNT has been injected into the musculature surrounding the TMJ, as well as into the joint, and has proven to be effective in these areas.33 There are limited treatment options for TMJ pain and dislocations, and although research is still ongoing, BoNT is considered a potential treatment option.32,33

Myofascial, neck, and back chronic pain

Chronic back pain is common and can be due to multiple conditions. BoNT has been studied for treatment focusing on myofascial pain in the neck and back region. Case series have shown improvement with targeted BoNT injections.34 However, in randomized controlled double-blind studies comparing BoNT to placebo, local anesthetics, and steroids, there were no significant differences in pain scores.35,36 The majority of studies have been landmark based or used the site of maximal tenderness as guidance for injections, but there is some evidence that targeted injections focusing on specific muscle groups may improve benefit.5 This usually requires the use of imaging for guidance.

Chronic pelvic pain

Chronic pelvic pain is common and has been reported to affect 1 in 7 women.37 It is often difficult to diagnose the exact source of the pain, and it can be very difficult to treat. In a 2020 systematic review (including 12 observational studies and 5 randomized controlled trials) of BoNT for treatment of chronic pelvic pain, the quality of evidence varied widely.38 Observational studies showed good benefit, but only 1 randomized trial showed statistical difference with the use of BoNT for pelvic pain. No serious adverse events were reported in any of the studies.38 Chronic pelvic pain can be caused by a number of different conditions, and more high-quality research for BoNT is needed, focusing on specific causes.5,38

Complex regional pain

Complex regional pain syndrome (CRPS) can be a debilitating condition that causes pain, sympathetic dysregulation, and central nervous system sensitization, often related to a traumatic event. Incidence is reported as 5 to 26 per 100,000, although it most likely is severely underdiagnosed.39 Treatment options are limited, and often patients continue to struggle with pain.

Due to the mechanism of action of BoNT, it has a high potential benefit for treatment of the allodynia and hyperalgesia associated with CRPS. BoNT injections have been used for the treatment of CRPS with limited success.40

There is currently limited evidence on BoNT for CRPS, and uncertainty regarding the best injection location remains. Studies have looked at lumbar sympathetic blocks, intra-articular, and grid-like BoNT injections over the area affected by CRPS.39-41 Case studies/series and observational studies have shown success with minimal adverse reactions, but larger high-quality, randomized controlled double-blind studies are still lacking.39-41

Concluding thoughts

Most chronic pain conditions have very limited treatment options, making the exploration of BoNT as a potential addition to those treatments an appealing possibility. Since BoNT was first introduced in 1989, it has proven to be safe, with limited adverse events, in the treatment of chronic pain.

However, providers need to be familiar with the type and formulation of the BoNT product being used. Extensive knowledge of surrounding anatomy and the ability to place BoNT in an exact location (which may require either fluoroscopy or ultrasound guidance) are essential.

Adequate research and evidence for most of the applications discussed in this article are still lacking; some limitations include small sample size, bias, lower quality, and poor methodology. There is also a lack of standardization, including which BoNT product is used, dosage, and location of BoNT placement. All of these issues will need to be addressed in further research.

CORRESPONDENCE

Caleb Dickison, DO, CAQSM, 36065 Darnall Loop, Fort Hood, TX 76544

1. Hehr JD, Schoenbrunner AR, Janis JE. The use of botulinum toxin in pain management: basic science and clinical applications. Plast Reconstr Surg. 2020;145:629e-636e. doi: 10.1097/PRS.0000000000006559

2. Dressler D. Therapeutically relevant features of botulinum toxin drugs. Toxicon. 2020;175:64-68. doi: 10.1016/j.toxicon.2019.12.005

3. Yiannakopoulou E. Serious and long-term adverse events associated with the therapeutic and cosmetic use of botulinum toxin. Pharmacology. 2015;95:65-69. doi: 10.1159/000370245

4. Wollina U, Konrad H. Managing adverse events associated with botulinum toxin type A. Am J Clin Dermatol. 2005;6:141-150. doi: 10.2165/00128071-200506030-00001

5. Guzman S, Helander E, Elhassan A. Use of botulinum toxin for chronic pain management. Topics in Pain Management. 2016;31:1-8. doi: 10.1097/01.TPM.0000482997.94909.69

6. Coté TR, Mohan AK, Polder JA, et al. Botulinum toxin type A injections: adverse events reported to the US Food and Drug Administration in therapeutic and cosmetic cases. J Am Acad Dermatol. 2005;53:407‐415. doi: 10.1016/j.jaad.2005.06.011

7. Aurora SK, Dodick DW, Turkel CC, et al; PREEMPT 1 Chronic Migraine Study Group. OnabotulinumtoxinA for treatment of chronic migraine: results from the double-blind, randomized, placebo-controlled phase of the PREEMPT 1 trial. Cephalalgia. 2010;30:793-803. doi: 10.1177/0333102410364676

8. Diener HC, Dodick DW, Aurora SK, et al; PREEMPT 2 Chronic Migraine Study Group. OnabotulinumtoxinA for treatment of chronic migraine: results from the double-blind, randomized, placebo-controlled phase of the PREEMPT 2 trial. Cephalalgia. 2010;30:804-814. doi: 10.1177/0333102410364677

9. Herd CP, Tomlinson CL, Rick C, et al. Cochrane systematic review and meta-analysis of botulinum toxin for the prevention of migraine. BMJ Open. 2019;9:e027953. doi: 10.1136/bmjopen-2018-027953

10. Freund B, Rao A. Efficacy of botulinum toxin in tension-type headaches: a systematic review of the literature. Pain Pract. 2019;19:541-551. doi: 10.1111/papr.12773

11. Ward A. Spasticity treatment with botulinum toxins. J Neural Transm. 2008;115:607-616. doi: 10.1007/s00702-007-0833-2

12. Ipsen announces FDA approval of Dysport® (abobotulinumtoxinA) for the treatment of upper limb spasticity in children, excluding cerebral palsy [press release]. September 26, 2019. Accessed October 27, 2021. www.businesswire.com/news/home/20190926005480/en/Ipsen-Announces-FDA-Approval-Dysport%C2%AE-abobotulinumtoxinA-Treatment

13. Das TK, Park DM. Effect of treatment with botulinum toxin on spasticity. Postgrad Med J. 1989;65:208-210. doi: 10.1136/pgmj.65.762.208

14. Spiegel LL, Ostrem JL, Bledsoe IO. FDA approvals and consensus guidelines for botulinum toxins in the treatment of dystonia. Toxins (Basel). 2020;12:332. doi: 10.3390/toxins12050332

15. Castelão M, Marques RE, Duarte GS, et al. Botulinum toxin type A therapy for cervical dystonia. Cochrane Database Syst Rev. 2017;12:CD003633. doi: 10.1002/14651858.CD003633.pub3

16. Contarino MF, Van Den Dool J, Balash Y, et al. Clinical practice: evidence-based recommendations for the treatment of cervical dystonia with botulinum toxin. Front Neurol. 2017;8:35. doi: 10.3389/fneur.2017.00035

17. Kumar R. Therapeutic use of botulinum toxin in pain treatment. Neuronal Signal. 2018;2:NS20180058. doi: 10.1042/NS20180058

18. Singh JA. Use of botulinum toxin in musculoskeletal pain. F1000Research. 2013;2:52. doi: 10.12688/f1000research.2-52.v2

19. Blanshan N, Krug H. The use of botulinum toxin for the treatment of chronic joint pain: clinical and experimental evidence. Toxins (Basel). 2020;12:314. doi: 10.3390/toxins12050314

20. Hsu P-C, Wu W-T, Han D-S, et al. Comparative effectiveness of botulinum toxin injection for chronic shoulder pain: a meta-analysis of randomized controlled trials. Toxins (Basel). 2020;12:251. doi: 10.3390/toxins12040251

21. Zhai S, Huang B, Yu K. The efficacy and safety of botulinum toxin type A in painful knee osteoarthritis: a systematic review and meta-analysis. J Int Med Res. 2020;48:300060519895868. doi: 10.1177/0300060519895868

22. Isner-Horobeti ME, Dufour SP, Blaes C, et al. Intramuscular pressure before and after botulinum toxin in chronic exertional compartment syndrome of the leg: a preliminary study. Am J Sports Med. 2013;41:2558‐2566. doi: 10.1177/0363546513499183

23. Hutto WM, Schroeder PB, Leggit JC. Botulinum toxin as a novel treatment for chronic exertional compartment syndrome in the US Military. Mil Med. 2019;184:e458‐e461. doi: 10.1093/milmed/usy223

24. Rahman A, Hamid A, Inozemtsev K, et al. Thoracic outlet syndrome treated with injecting botulinum toxin into middle scalene muscle and pectoral muscle interfascial planes: a case report. A A Pract. 2019;12:235‐237. doi: 10.1213/XAA.0000000000000894

25. Finlayson HC, O’Connor RJ, Brasher PMA, et al. Botulinum toxin injection for management of thoracic outlet syndrome: a double-blind, randomized, controlled trial. Pain. 2011;152:2023-2028. doi: 10.1016/j.pain.2011.04.027

26. Donahue DM, Godoy IRB, Gupta R, et al. Sonographically guided botulinum toxin injections in patients with neurogenic thoracic outlet syndrome: correlation with surgical outcomes. Skeletal Radiol. 2020;49:715-722. doi: 10.1007/s00256-019-03331-9

27. Wei J, Zhu X, Yang G, et al. The efficacy and safety of botulinum toxin type A in treatment of trigeminal neuralgia and peripheral neuropathic pain: a meta‐analysis of randomized controlled trials. Brain Behav. 2019;9:e01409. doi: 10.1002/brb3.1409

28. Samant PD, Kale SY, Ahmed S, et al. Randomized controlled study comparing clinical outcomes after injection botulinum toxin type A versus corticosteroids in chronic plantar fasciitis. Int J Res Orthop. 2018;4:672-675. doi: 10.18203/issn.2455-4510.IntJResOrthop20182744

29. Fry DA. Is botulinum toxin injection effective in reducing pain in patients diagnosed with plantar fasciitis? PCOM Physician Assistant Studies Student Scholarship. 2019;461. https://digitalcommons.pcom.edu/pa_systematic_reviews/461

30. Lin YC, Wu WT, Hsu YC, et al. Comparative effectiveness of botulinum toxin versus non-surgical treatments for treating lateral epicondylitis: a systematic review and meta-analysis. Clin Rehabil. 2018;32:131-145. doi: 10.1177/0269215517702517

31. Ruiz AG, Díaz GV, Fernández BR, et al. Effects of ultrasound-guided administration of botulinum toxin (incobotulinumtoxinA) in patients with lateral epicondylitis. Toxins (Basel). 2019;11:46. doi: 10.3390/toxins11010046

32. Villa S, Raoul G, Machuron F, et al. Improvement in quality of life after botulinum toxin injection for temporomandibular disorder. J Stomatol Oral Maxillofac Surg. 2019;120:2-6. doi: 10.1016/j.jormas.2018.10.00

33. Fu KY, Chen HM, Sun ZP, et al. Long-term efficacy of botulinum toxin type A for the treatment of habitual dislocation of the temporomandibular joint. Br J Oral Maxillofac Surg. 2010;48:281-284. doi: 10.1016/j.bjoms.2009.07.014

34. Machado D, Kumar A, Jabbari B. Abobotulinum toxin A in the treatment of chronic low back pain. Toxins (Basel). 2016;8:374. doi: 10.3390/toxins8120374

35. Cogné M, Petit H, Creuzé A, et al. Are paraspinous intramuscular injections of botulinum toxin A (BoNT-A) efficient in the treatment of chronic low-back pain? A randomised, double-blinded crossover trial. BMC Musculoskelet Disord. 2017;18:454. doi: 10.1186/s12891-017-1816-6

36. Ahmed S, Subramaniam S, Sidhu K, et al. Effect of local anesthetic versus botulinum toxin-A injections for myofascial pain disorders. Clin J Pain. 2019;35:353-367. doi: 10.1097/AJP.0000000000000681

37. Mathias SD, Kuppermann M, Liberman RF, et al. Chronic pelvic pain: prevalence, health-related quality of life, and economic correlates. Obstet Gynecol. 1996;87:321-327. doi: 10.1016/0029-7844(95)00458-0

38. Luo FY, Nasr-Esfahani M, Jarrell J, et al. Botulinum toxin injection for chronic pelvic pain: a systematic review. Acta Obstet Gynecol Scand. 2020;99:1595-1602. doi: 10.1111/aogs.13946

39. Lessard L, Bartow MJ, Lee J, et al. Botulinum toxin A: a novel therapeutic modality for upper extremity chronic regional pain syndrome. Plast Reconstr Surg Glob Open. 2018;6:e1847. doi: 10.1097/GOX.0000000000001847

40. Lee Y, Lee CJ, Choi E, et al. Lumbar sympathetic block with botulinum toxin type A and type B for the complex regional pain syndrome. Toxins (Basel). 2018;10:164. doi: 10.3390/toxins10040164

41. Kwak H, Koh DJ, Min K. Botulinum toxin treatment for intractable allodynia in a patient with complex regional pain syndrome: a case report. Neurology Asia. 2020;25:215-219.

Botulinum toxin (BoNT) was first approved by the US Food and Drug Administration (FDA) for the treatment of strabismus and blepharospasm in 1989. Since then, approved indications have expanded to include spasticity, cervical dystonia, severe axillary hyperhidrosis, bladder dysfunction, and chronic migraine headache, as well as multiple cosmetic uses.1,2 Over the course of 30 years of clinical use, BoNT has proven to be effective and safe.3,4 This has led to the expanded use of BoNT for additional medical conditions.1,2

In the review that follows, we will discuss the utility of BoNT in the treatment of headaches, spasticity, and cervical dystonia. We will then explore the evidence for emerging indications that include chronic joint pain, trigeminal neuralgia, and plantar fasciitis. But first, a brief word about how BoNT works and its safety profile.

Seven toxins, but only 2 are used for medical purposes

BoNT is naturally produced by Clostridium botulinum, an anaerobic, spore-forming bacteria.1 BoNT inhibits acetylcholine release from presynaptic vesicles at the neuromuscular junctions, which results in flaccid paralysis in peripheral skeletal musculature and autonomic nerve terminals.1,5 These effects from BoNT can last up to 3 to 6 months.1

Seven different toxins have been identified (A, B, C, D, E, F, and G), but only toxins A and B are currently used for medical purposes.5 Both have similar effects, although there are slight differences in mechanism of action. Toxin B injections are also reported to be slightly more painful. There are also differences in preparation, with some requiring reconstitution, which vary by brand. Certain types of BoNT require refrigeration, and an in-depth review of the manufacturer’s guidelines is recommended before use.

Safety and adverse effects

Although BoNT is 1 of the most lethal toxins known to humans, it has been used in clinical medicine for more than 30 years and has proven to be safe if used properly.3 Adverse effects are rare and are often location and dose dependent (200 U and higher). Immediate or acute adverse effects are usually mild and can include bruising, headache, allergic reactions, edema, skin conditions, infection, or pain at the injection site.4 Delayed adverse effects can include muscle weakness that persists throughout the 3 to 6 months of duration and is usually related to incorrect placement or unintentional spread.4

Serious adverse events are rare: there are reports of the development of botulism, generalized paralysis, dysphagia, respiratory effects, and even death in patients who had received BoNT injections.3 In a majority of cases, a direct relationship with BoNT was never established, and in most incidents reported, there were significant comorbidities that could have contributed to the adverse event.3 These events appear to be related to higher doses of BoNT, as well as possible incorrect injection placement.3

Knowledge of anatomy and correct placement of BoNT are vitally important, as they have a significant impact on the effectiveness of treatment and adverse events.3 In preventing adverse events, those administering BoNT need to be familiar with the BoNT brand being used, verify proper storage consistent with the manufacturer’s recommendations, and confirm correct dosages with proper reconstitution process.3

Continue to: BoNT is contraindicated

BoNT is contraindicated in those with a history of a previous anaphylactic reaction to BoNT. Patients with known hypersensitivity to BoNT, including those with neuromuscular junction diseases and anterior horn disorders, should be considered for other forms of treatment due to the risk of an exaggerated response. No adverse events have been recorded in regard to pregnancy and lactation, although these remain a potential contraindication.3,4,6

Taking a closer look at current indications

Headaches

Chronic migraine (CM) is defined by the International Headache Society as at least 15 days per month with headaches and 8 of those days with migraine features. BoNT has been FDA approved for treatment of CM since 2011. This was based on 2 large, double-blind, randomized, placebo-controlled trials that showed a significant reduction from baseline for headaches and migraine days, total time, and frequency of migraines.7,8

Subsequent studies have continued to show benefit for CM treatment. In a recent Cochrane systematic review and meta-analysis, it was determined that BoNT can decrease frequency of CM by 2 days per month, and it is recommended by several organizations as a treatment option for CM.9

Low-quality evidence has not shown benefit for tension-type headaches. However, further research is warranted, especially for chronic tension-type headache, which is defined as daily tension headaches.10

Spasticity

Spasticity is caused by an insult to the brain or spinal cord and can often occur after a stroke, brain or spinal cord injury, cerebral palsy, or other neurologic condition.11 BoNT was initially FDA approved in 2010 for treatment of upper limb spasticity in adults, although it had been used for treatment for spasticity for more than 20 years prior to that. It currently is approved for upper and lower spasticity in adults and recently was expanded to include pediatrics.12

Continue to: A small case series...

A small case series conducted soon after BoNT was introduced showed promising results, and subsequent meta-analyses and systematic reviews have shown positive results for use of BoNT for the management of spasticity.13 Studies have begun to focus on specific regions of the upper and lower limbs to identify optimal sites for injections.

Cervical dystonia

Cervical dystonia (CD) is the most common form of dystonia and is defined as impairment of activities of daily living due to abnormal postures of the head and neck. BoNT was approved for CD in 1999 after several pivotal randomized placebo-controlled double-blind studies showed improvement of symptoms.14 Several BoNT formulations have been given Level A classification, and can be considered a potential first-line treatment for CD.15,16 The most common adverse effects reported have been dry mouth, dysphagia, muscle weakness, and neck pain.14-16

BoNT is currently being used off-label for management of multiple types of dystonia with reported success, as research on its use for noncervical dystonia (including limb, laryngeal, oromandibular, and truncal) continues. Although there are case series and some randomized trials exploring BoNT for certain types of dystonia, most are lacking high-quality evidence from double-blind, randomized controlled trials.14-16

Exploring the evidence for emerging indications

There has been significant interest in using BoNT for the management of both nociceptive and neuropathic pain symptoms.5

Nociceptive pain is the irritation and painful response to actual or potential tissue damage. It is a major component of chronic pain and is difficult to treat, with limited effective options.5,17

Neuropathic pain is related to abnormalities that disrupt the normal function of the nervous system. Abnormalities could be related to anatomic or structural changes that cause compression, trauma, scar tissue, or a number of other conditions that affect nerve function. These can be either central or peripheral and can be caused by multiple etiologies.

The following discussion explores the evidence for potential emerging indications for BoNT. The TABLE summarizes what we know to date.1,5,18-40

Chronic joint pain

Refractory joint pain is difficult to treat and can be debilitating for patients. It can have multiple causes but is most commonly related to arthritic changes. Due to the difficulty with treatment, there have been attempts to use BoNT as an intra-articular treatment for refractory joint pain. Results vary and are related to several factors, including the initial degree of pain, the BoNT dosage, and the formulation used, as well as the joint injected.

There appears to be a potentially significant improvement in short-term pain with BoNT compared to conventional therapies, such as physical therapy, nonsteroidal anti-inflammatory drugs, corticosteroid injections, and hyaluronic acid injections. In studies evaluating long-term benefits, it was noted that after 6 months, there was no significant difference between BoNT and control groups.19-21

The knee joint has been the focus of most research, but BoNT has also been used for shoulder and ankle pain, with success. Recent meta-analyses evaluating knee and shoulder pain have shown BoNT is safe and effective for joint pain.20,21 There has been no significant difference noted in adverse events with BoNT compared to controls. Currently, more long-term data and research are needed, but BoNT is safe and a potentially effective treatment option for short-term relief of refractory joint pain.19-21

Chronic exertional compartment syndrome

Chronic exertional compartment syndrome (CECS) is defined subjectively as pain in a specific compartment that develops during exercise and resolves upon stopping, and objectively by an increase in intramuscular pressure.22 It is most common in the lower leg and is a difficult condition to manage. Nonsurgical and surgical options succeed in returning the patient to full activity only 40% to 80% of the time.23

An initial study done in 2013 of BoNT injected into the anterior and lateral compartments of the lower extremity showed that symptoms resolved completely in 94% of patients treated.22 The actual mechanism of benefit is not clearly understood but is potentially related to muscle atrophy and loss of contractile tissue. However, it has not been reported that these changes have affected the strength or performance of patients who receive BoNT for CECS.23

Thoracic outlet syndrome

Thoracic outlet syndrome (TOS) is a compression of neurovascular structures within the thoracic outlet. There are several locations of potential compression, as well as possible neurogenic, vascular, or nonspecific manifestations.24 Compression can be from a structural variant, such as a cervical rib, or due to soft tissue from the scalene or pectoralis musculature. TOS is difficult to diagnose and treat. Physical therapy is the mainstay of treatment, but failure is common and treatment options are otherwise limited. Decompression surgery is an option if conservative management fails, but it has a high recurrence rate.24

In an effort to harness the therapeutic value of muscle atrophy, denervation, and relaxation afforded by BoNT, clinicians have injected the agent into the anterior and middle scalenes and the pectoralis minor to provide patients with relief from TOS.24 This treatment requires advanced imaging with either fluoroscopy or ultrasound guidance for correct placement and knowledge of surrounding anatomy. Small case reports and case series have demonstrated success, but a small double-blind randomized controlled study of 37 individuals with neurogenic TOS in 2011 did not show a reduction in symptoms.25 Multiple subsequent case reports and case series have continued to show positive results.24,25 A recent retrospective study showed that patients with TOS who had positive results with BoNT had better surgical outcomes.26

Trigeminal neuralgia and peripheral nerve pain

A meta-analysis in 2019 reviewed evidence for trigeminal neuralgia as well as other types of peripheral neuropathies, including diabetic neuropathy and postherpetic neuropathy. It showed that BoNT injections are safe, as well as effective, for short-term relief at 3 months. However, overall study sizes were small and long-term data are still lacking; larger high-quality studies are needed for further substantiation.27

Plantar fasciitis

BoNT has been used for treatment of plantar fasciitis. Small randomized controlled studies have compared BoNT to both placebo and corticosteroids, showing that BoNT has better long-term outcomes at 3, 6, and 12 months.28,29 BoNT is currently being used when standard treatments have failed; however, larger randomized controlled studies are still needed prior to BoNT being accepted as standard treatment.29

Lateral epicondylitis

A systematic review and meta-analysis done in 2017 showed that BoNT is superior to placebo at 16 weeks. No significant difference was noted between BoNT and corticosteroids at 8 weeks, although corticosteroids did demonstrate better improvement at the short-term interval of 2 to 4 weeks.30 As expected, BoNT was associated with grip-strength weakness compared to placebo and corticosteroids at 12 weeks. Subsequent small randomized controlled studies have continued to show benefit with BoNT, but all studies noted grip weakness (which resolved) and duration of effect was dose dependent.30,31

Temporomandibular joint pain

BoNT has been studied in the treatment of temporomandibular joint (TMJ) pain and dislocations since 1998, and was shown to improve quality of life.32 BoNT has been injected into the musculature surrounding the TMJ, as well as into the joint, and has proven to be effective in these areas.33 There are limited treatment options for TMJ pain and dislocations, and although research is still ongoing, BoNT is considered a potential treatment option.32,33

Myofascial, neck, and back chronic pain

Chronic back pain is common and can be due to multiple conditions. BoNT has been studied for treatment focusing on myofascial pain in the neck and back region. Case series have shown improvement with targeted BoNT injections.34 However, in randomized controlled double-blind studies comparing BoNT to placebo, local anesthetics, and steroids, there were no significant differences in pain scores.35,36 The majority of studies have been landmark based or used the site of maximal tenderness as guidance for injections, but there is some evidence that targeted injections focusing on specific muscle groups may improve benefit.5 This usually requires the use of imaging for guidance.

Chronic pelvic pain

Chronic pelvic pain is common and has been reported to affect 1 in 7 women.37 It is often difficult to diagnose the exact source of the pain, and it can be very difficult to treat. In a 2020 systematic review (including 12 observational studies and 5 randomized controlled trials) of BoNT for treatment of chronic pelvic pain, the quality of evidence varied widely.38 Observational studies showed good benefit, but only 1 randomized trial showed statistical difference with the use of BoNT for pelvic pain. No serious adverse events were reported in any of the studies.38 Chronic pelvic pain can be caused by a number of different conditions, and more high-quality research for BoNT is needed, focusing on specific causes.5,38

Complex regional pain

Complex regional pain syndrome (CRPS) can be a debilitating condition that causes pain, sympathetic dysregulation, and central nervous system sensitization, often related to a traumatic event. Incidence is reported as 5 to 26 per 100,000, although it most likely is severely underdiagnosed.39 Treatment options are limited, and often patients continue to struggle with pain.

Due to the mechanism of action of BoNT, it has a high potential benefit for treatment of the allodynia and hyperalgesia associated with CRPS. BoNT injections have been used for the treatment of CRPS with limited success.40

There is currently limited evidence on BoNT for CRPS, and uncertainty remains regarding the best injection location. Studies have looked at lumbar sympathetic blocks, intra-articular injections, and grid-like BoNT injections over the area affected by CRPS.39-41 Case studies/series and observational studies have shown success with minimal adverse reactions, but larger high-quality, randomized controlled double-blind studies are still lacking.39-41

Concluding thoughts

Most chronic pain conditions have very limited treatment options, making the exploration of BoNT as a potential addition to those treatments an appealing possibility. Since BoNT was first introduced in 1989, it has proven to be safe, with limited adverse events, for the treatment of chronic pain.

However, providers need to be familiar with the type and formulation of the BoNT product being used. Extensive knowledge of surrounding anatomy and the ability to place BoNT in an exact location (which may require either fluoroscopy or ultrasound guidance) are essential.

Adequate research and evidence for most of the applications discussed in this article are still lacking; some limitations include small sample size, bias, lower quality, and poor methodology. There is also a lack of standardization, including which BoNT product is used, dosage, and location of BoNT placement. All of these issues will need to be addressed in further research.

CORRESPONDENCE

Caleb Dickison, DO, CAQSM, 36065 Darnall Loop, Fort Hood, TX 76544; [email protected]

1. Hehr JD, Schoenbrunner AR, Janis JE. The use of botulinum toxin in pain management: basic science and clinical applications. Plast Reconstr Surg. 2020;145:629e-636e. doi: 10.1097/PRS.0000000000006559

2. Dressler D. Therapeutically relevant features of botulinum toxin drugs. Toxicon. 2020;175:64-68. doi: 10.1016/j.toxicon.2019.12.005

3. Yiannakopoulou E. Serious and long-term adverse events associated with the therapeutic and cosmetic use of botulinum toxin. Pharmacology. 2015;95:65-69. doi: 10.1159/000370245

4. Wollina U, Konrad H. Managing adverse events associated with botulinum toxin type A. Am J Clin Dermatol. 2005;6:141-150. doi: 10.2165/00128071-200506030-00001

5. Guzman S, Helander E, Elhassan A. Use of botulinum toxin for chronic pain management. Topics in Pain Management. 2016;31:1-8. doi: 10.1097/01.TPM.0000482997.94909.69

6. Coté TR, Mohan AK, Polder JA, et al. Botulinum toxin type A injections: adverse events reported to the US Food and Drug Administration in therapeutic and cosmetic cases. J Am Acad Dermatol. 2005;53:407-415. doi: 10.1016/j.jaad.2005.06.011

7. Aurora SK, Dodick DW, Turkel CC, et al; PREEMPT 1 Chronic Migraine Study Group. OnabotulinumtoxinA for treatment of chronic migraine: results from the double-blind, randomized, placebo-controlled phase of the PREEMPT 1 trial. Cephalalgia. 2010;30:793-803. doi: 10.1177/0333102410364676

8. Diener HC, Dodick DW, Aurora SK, et al; PREEMPT 2 Chronic Migraine Study Group. OnabotulinumtoxinA for treatment of chronic migraine: results from the double-blind, randomized, placebo-controlled phase of the PREEMPT 2 trial. Cephalalgia. 2010;30:804-814. doi: 10.1177/0333102410364677

9. Herd CP, Tomlinson CL, Rick C, et al. Cochrane systematic review and meta-analysis of botulinum toxin for the prevention of migraine. BMJ Open. 2019;9:e027953. doi: 10.1136/bmjopen-2018-027953

10. Freund B, Rao A. Efficacy of botulinum toxin in tension-type headaches: a systematic review of the literature. Pain Pract. 2019;19:541-551. doi: 10.1111/papr.12773

11. Ward A. Spasticity treatment with botulinum toxins. J Neural Transm. 2008;115:607-616. doi: 10.1007/s00702-007-0833-2

12. Ipsen announces FDA approval of Dysport® (abobotulinumtoxinA) for the treatment of upper limb spasticity in children, excluding cerebral palsy [press release]. September 26, 2019. Accessed October 27, 2021. www.businesswire.com/news/home/20190926005480/en/Ipsen-Announces-FDA-Approval-Dysport%C2%AE-abobotulinumtoxinA-Treatment

13. Das TK, Park DM. Effect of treatment with botulinum toxin on spasticity. Postgrad Med J. 1989;65:208-210. doi: 10.1136/pgmj.65.762.208

14. Spiegel LL, Ostrem JL, Bledsoe IO. FDA approvals and consensus guidelines for botulinum toxins in the treatment of dystonia. Toxins (Basel). 2020;12:332. doi: 10.3390/toxins12050332

15. Castelão M, Marques RE, Duarte GS, et al. Botulinum toxin type A therapy for cervical dystonia. Cochrane Database Syst Rev. 2017;12:CD003633. doi: 10.1002/14651858.CD003633.pub3

16. Contarino MF, Van Den Dool J, Balash Y, et al. Clinical practice: evidence-based recommendations for the treatment of cervical dystonia with botulinum toxin. Front Neurol. 2017;8:35. doi: 10.3389/fneur.2017.00035

17. Kumar R. Therapeutic use of botulinum toxin in pain treatment. Neuronal Signal. 2018;2:NS20180058. doi: 10.1042/NS20180058

18. Singh JA. Use of botulinum toxin in musculoskeletal pain. F1000Research. 2013;2:52. doi: 10.12688/f1000research.2-52.v2

19. Blanshan N, Krug H. The use of botulinum toxin for the treatment of chronic joint pain: clinical and experimental evidence. Toxins (Basel). 2020;12:314. doi: 10.3390/toxins12050314

20. Hsu P-C, Wu W-T, Han D-S, et al. Comparative effectiveness of botulinum toxin injection for chronic shoulder pain: a meta-analysis of randomized controlled trials. Toxins (Basel). 2020;12:251. doi: 10.3390/toxins12040251

21. Zhai S, Huang B, Yu K. The efficacy and safety of botulinum toxin type A in painful knee osteoarthritis: a systematic review and meta-analysis. J Int Med Res. 2020;48:300060519895868. doi: 10.1177/0300060519895868

22. Isner-Horobeti ME, Dufour SP, Blaes C, et al. Intramuscular pressure before and after botulinum toxin in chronic exertional compartment syndrome of the leg: a preliminary study. Am J Sports Med. 2013;41:2558-2566. doi: 10.1177/0363546513499183

23. Hutto WM, Schroeder PB, Leggit JC. Botulinum toxin as a novel treatment for chronic exertional compartment syndrome in the US Military. Mil Med. 2019;184:e458-e461. doi: 10.1093/milmed/usy223

24. Rahman A, Hamid A, Inozemtsev K, et al. Thoracic outlet syndrome treated with injecting botulinum toxin into middle scalene muscle and pectoral muscle interfascial planes: a case report. A A Pract. 2019;12:235-237. doi: 10.1213/XAA.0000000000000894

25. Finlayson HC, O’Connor RJ, Brasher PMA, et al. Botulinum toxin injection for management of thoracic outlet syndrome: a double-blind, randomized, controlled trial. Pain. 2011;152:2023-2028. doi: 10.1016/j.pain.2011.04.027

26. Donahue DM, Godoy IRB, Gupta R, et al. Sonographically guided botulinum toxin injections in patients with neurogenic thoracic outlet syndrome: correlation with surgical outcomes. Skeletal Radiol. 2020;49:715-722. https://doi.org/10.1007/s00256-019-03331-9

27. Wei J, Zhu X, Yang G, et al. The efficacy and safety of botulinum toxin type A in treatment of trigeminal neuralgia and peripheral neuropathic pain: a meta-analysis of randomized controlled trials. Brain Behav. 2019;9:e01409. doi: 10.1002/brb3.1409

28. Samant PD, Kale SY, Ahmed S, et al. Randomized controlled study comparing clinical outcomes after injection botulinum toxin type A versus corticosteroids in chronic plantar fasciitis. Int J Res Orthop. 2018;4:672-675. http://dx.doi.org/10.18203/issn.2455-4510.IntJResOrthop20182744

29. Fry DA. Is botulinum toxin injection effective in reducing pain in patients diagnosed with plantar fasciitis? PCOM Physician Assistant Studies Student Scholarship. 2019;461. https://digitalcommons.pcom.edu/pa_systematic_reviews/461

30. Lin YC, Wu WT, Hsu YC, et al. Comparative effectiveness of botulinum toxin versus non-surgical treatments for treating lateral epicondylitis: a systematic review and meta-analysis. Clin Rehabil. 2018;32:131-145. doi: 10.1177/0269215517702517

31. Ruiz AG, Díaz GV, Fernández BR, et al. Effects of ultrasound-guided administration of botulinum toxin (incobotulinumtoxinA) in patients with lateral epicondylitis. Toxins (Basel). 2019;11:46. doi: 10.3390/toxins11010046

32. Villa S, Raoul G, Machuron F, et al. Improvement in quality of life after botulinum toxin injection for temporomandibular disorder. J Stomatol Oral Maxillofac Surg. 2019;120:2-6. doi: 10.1016/j.jormas.2018.10.00

33. Fu KY, Chen HM, Sun ZP, et al. Long-term efficacy of botulinum toxin type A for the treatment of habitual dislocation of the temporomandibular joint. Br J Oral Maxillofac Surg. 2010;48:281-284. doi: 10.1016/j.bjoms.2009.07.014

34. Machado D, Kumar A, Jabbari B. Abobotulinum toxin A in the treatment of chronic low back pain. Toxins (Basel). 2016;8:374. doi: 10.3390/toxins8120374

35. Cogné M, Petit H, Creuzé A, et al. Are paraspinous intramuscular injections of botulinum toxin A (BoNT-A) efficient in the treatment of chronic low-back pain? A randomised, double-blinded crossover trial. BMC Musculoskelet Disord. 2017;18:454. https://doi.org/10.1186/s12891-017-1816-6

36. Ahmed S, Subramaniam S, Sidhu K, et al. Effect of local anesthetic versus botulinum toxin-A injections for myofascial pain disorders. Clin J Pain. 2019;35:353-367. doi: 10.1097/AJP.0000000000000681

37. Mathias SD, Kuppermann M, Liberman RF, et al. Chronic pelvic pain: prevalence, health-related quality of life, and economic correlates. Obstet Gynecol. 1996;87:321-327. doi: 10.1016/0029-7844(95)00458-0

38. Luo FY, Nasr-Esfahani M, Jarrell J, et al. Botulinum toxin injection for chronic pelvic pain: a systematic review. Acta Obstet Gynecol Scand. 2020;99:1595-1602. https://doi.org/10.1111/aogs.13946

39. Lessard L, Bartow MJ, Lee J, et al. Botulinum toxin A: a novel therapeutic modality for upper extremity chronic regional pain syndrome. Plast Reconstr Surg Glob Open. 2018;6:e1847. doi: 10.1097/GOX.0000000000001847

40. Lee Y, Lee CJ, Choi E, et al. Lumbar sympathetic block with botulinum toxin type A and type B for the complex regional pain syndrome. Toxins (Basel). 2018;10:164. doi: 10.3390/toxins10040164

41. Kwak H, Koh DJ, Min K. Botulinum toxin treatment for intractable allodynia in a patient with complex regional pain syndrome: a case report. Neurology Asia. 2020;25:215-219.

PRACTICE RECOMMENDATIONS

› Consider botulinum toxin (BoNT) for patients with headache, spasticity, or cervical dystonia, as the FDA has approved BoNT for pain relief in these conditions. A

Strength of recommendation (SOR)

A Good-quality patient-oriented evidence

B Inconsistent or limited-quality patient-oriented evidence

C Consensus, usual practice, opinion, disease-oriented evidence, case series
