Twincretin ‘impressive’: Topline data from phase 3 trial in diabetes

Tirzepatide, a novel subcutaneously injected drug that acts via two related but separate pathways of glucose control, produced strikingly positive effects in top-line results from the phase 3, placebo-controlled study SURPASS-1 in 478 adults with type 2 diabetes, according to a Dec. 9 press release from the manufacturer, Lilly.

The tirzepatide molecule exerts agonist effects at both the glucose-dependent insulinotropic polypeptide (GIP) receptor and the glucagon-like peptide-1 (GLP-1) receptor, and has been called a “twincretin” for its activity encompassing two different incretins. Phase 2 trial results caused excitement, with one physician calling the data “unbelievable” when reported in 2018.

SURPASS-1 enrolled patients who were very early in the course of their disease, had on average relatively mild elevation in glucose levels, and few metabolic comorbidities. They took one of three doses of the agent (5, 10, or 15 mg) as monotherapy or placebo for 40 weeks.

Julio Rosenstock, MD, said in the Lilly statement: “The study took a bold approach in assessing A1c targets. Not only did nearly 90% of all participants taking tirzepatide meet the standard A1c goal of less than 7%, more than half taking the highest dose also achieved an A1c less than 5.7%, the level seen in people without diabetes.”

Dr. Rosenstock is principal investigator of SURPASS-1 and director of the Dallas Diabetes Research Center in Texas.

The discontinuation rate in the high-dose group was 21.5% compared with less than 10% in the two lower-dose cohorts. Lilly said most of the dropouts “were due to the pandemic and family or work reasons.” The dropout rate in the placebo group was 14.8%.

These data were not included in the efficacy analysis, however, which “muddied” the analysis somewhat, one pharma analyst told BioPharma Dive.

Commenting on the new trial data, Ildiko Lingvay, MD, said in an interview: “I am very impressed with these results,” which are “unprecedented for any glucose-lowering medication that has ever been tested.”

Dr. Lingvay, of the department of internal medicine/endocrinology, and medical director, office of clinical trials management at UT Southwestern Medical Center, Dallas, was not involved in the study.

She added that the weight loss seen with tirzepatide “is equally impressive with greater than 10% of body weight loss above placebo achieved within 40 weeks of treatment and without any directed weight loss efforts.”

If the agent is eventually approved, “I am enthusiastic about the prospect of having another very powerful tool to address both diabetes and obesity,” she added.

The full results of SURPASS-1 will be presented at the American Diabetes Association 81st Scientific Sessions and published in a peer-reviewed journal in 2021.

SURPASS-1 is one of eight phase 3 studies of the drug, including five registration studies and one large 12,500-patient cardiovascular outcomes trial.

Tirzepatide patients lost up to 20 lb, side effect profile ‘reassuring’

In the study, patients had been recently diagnosed with type 2 diabetes (average duration, 4.8 years) and 54% were treatment-naive. Average baseline hemoglobin A1c was 7.9% and mean weight was 85.9 kg (189 pounds).

Patients started on a subcutaneous injectable dose of tirzepatide of 2.5 mg per week, which was titrated up to the final dose – 5, 10, or 15 mg – in 2.5-mg increments given as monotherapy for 40 weeks and compared with placebo.

Treatment with tirzepatide resulted in average reductions in A1c from baseline that ranged from 1.87% to 2.07%, depending on the dose, and were all significant compared with an increase of 0.4% with placebo.

The percentage of patients whose A1c fell to normal levels (less than 5.7%) ranged from 30.5% to 51.7%, compared with 0.9% among controls, and again, was significant for all doses.

Patients treated with tirzepatide also lost weight. Average weight reductions after 40 weeks were significant and ranged from 7.0 to 9.5 kg (15-21 pounds) compared with an average loss of 0.7 kg (1.5 pounds) among patients who received placebo.

The most common adverse events were gastrointestinal-related and mild to moderate in severity, and usually occurred during dose escalation.

Dr. Lingvay said the safety data reported are “reassuring, with side effects in the anticipated range and comparable with other medications in the GLP-1 agonist class.”

And no hypoglycemic (level 2, < 54 mg/dL) events were reported, “which is impressive considering the overall glucose level achieved,” she noted.

“I am eagerly awaiting the results of the other studies within the SURPASS program and hope those will confirm these initial findings and provide additional safety and efficacy information in a wider range of patients with type 2 diabetes,” she concluded.

Dr. Lingvay has reported receiving research funding, advisory/consulting fees, and/or other support from Novo Nordisk, Eli Lilly, Sanofi, AstraZeneca, Boehringer Ingelheim, Janssen, Intercept, Intarcia, Target Pharma, Merck, Pfizer, Novartis, GI Dynamics, Mylan, MannKind, Valeritas, Bayer, and Zealand Pharma.

A version of this article originally appeared on Medscape.com.

Type 2 Diabetes 2021

This supplement to Clinician Reviews brings together key updates in the field of T2D to help care for patients who have not only T2D, but also other interconnected diseases.

Supplementary Materials:

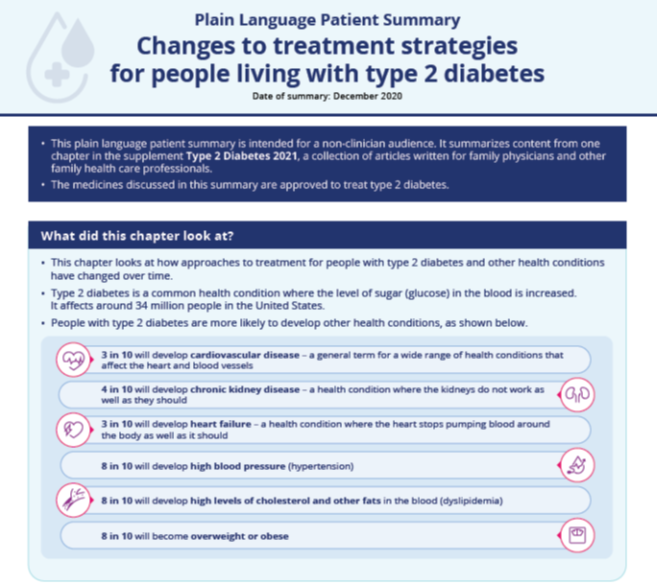

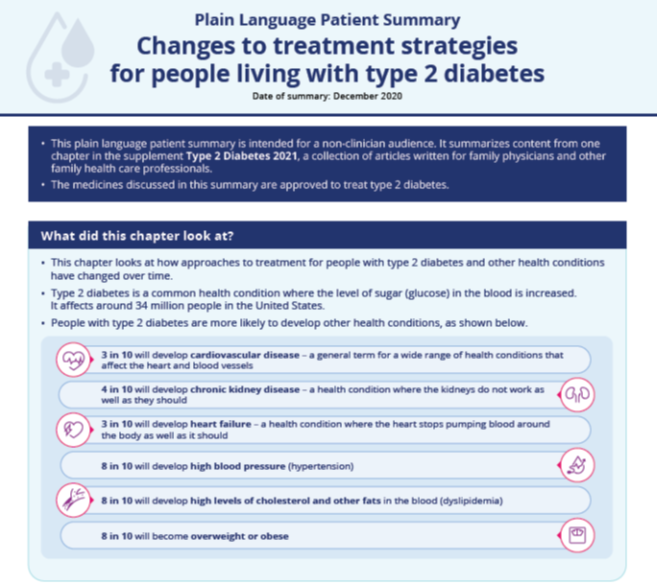

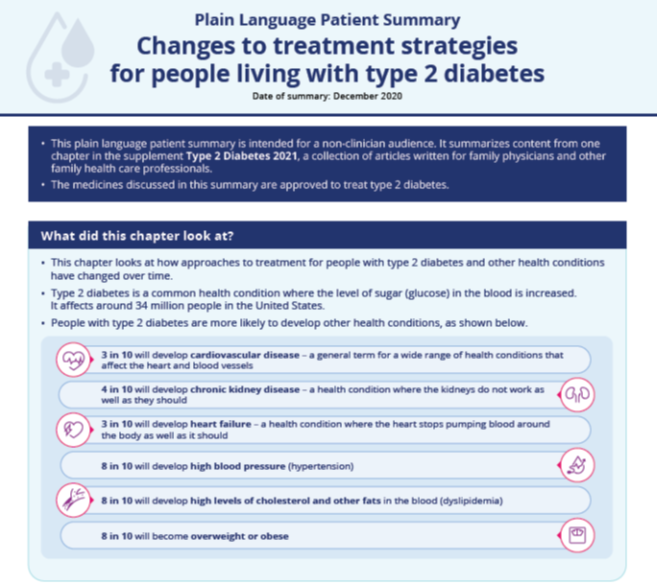

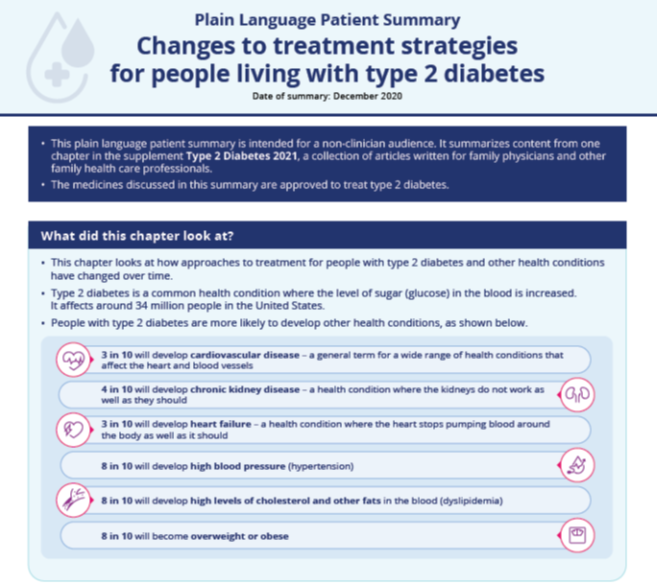

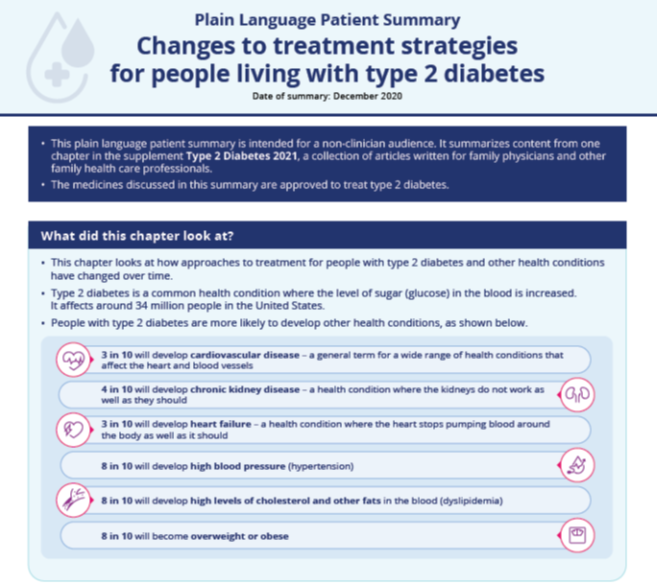

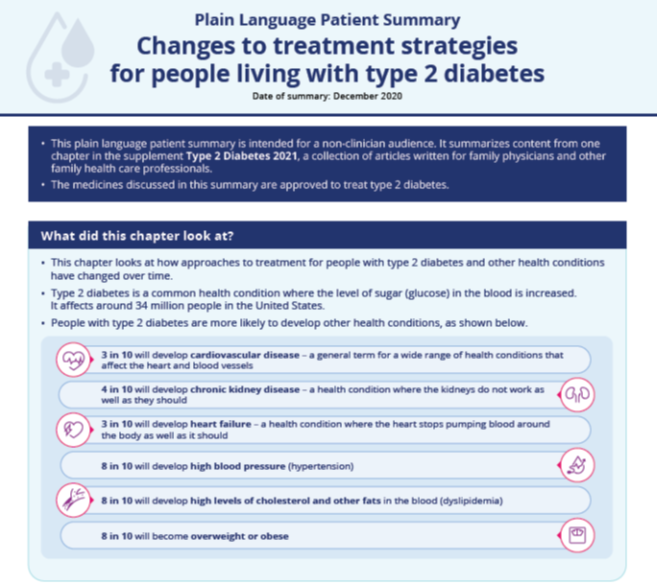

Chapter 1: Evolution of Type 2 Diabetes Treatment

Plain Language Patient Summary:

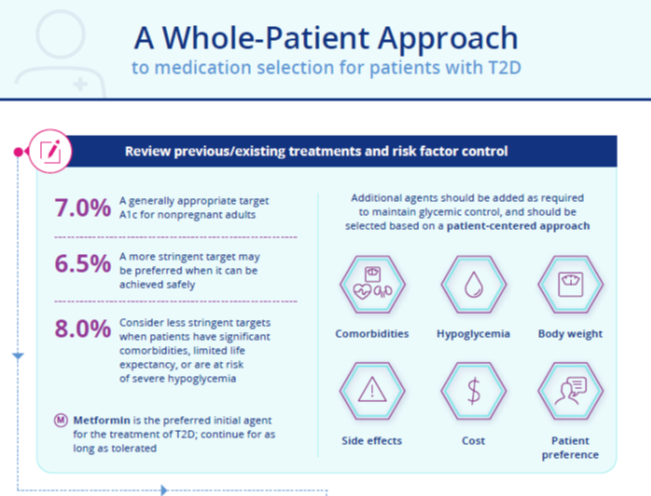

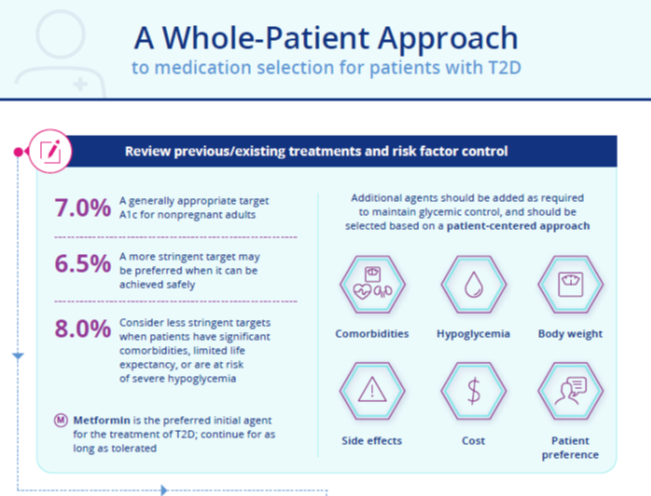

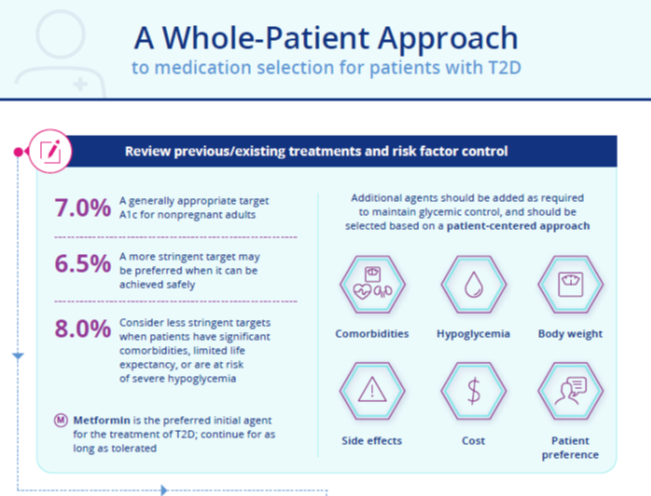

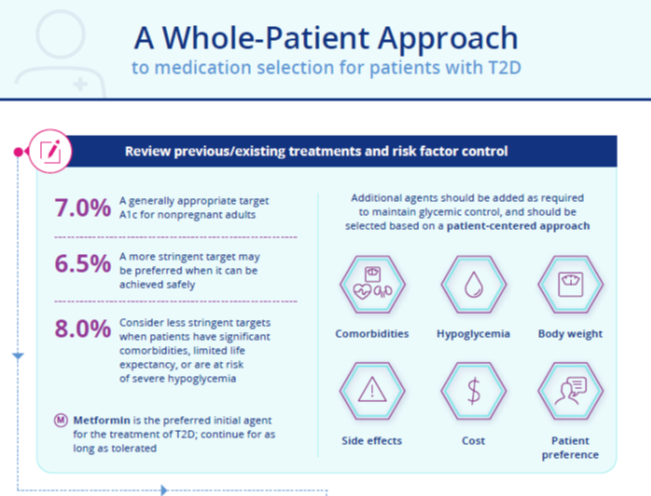

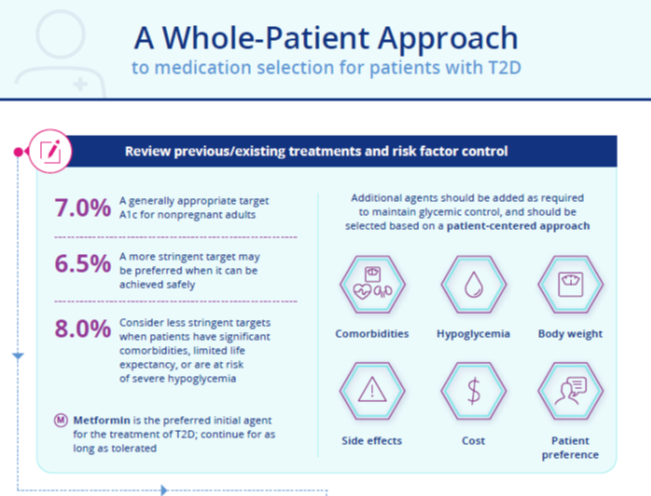

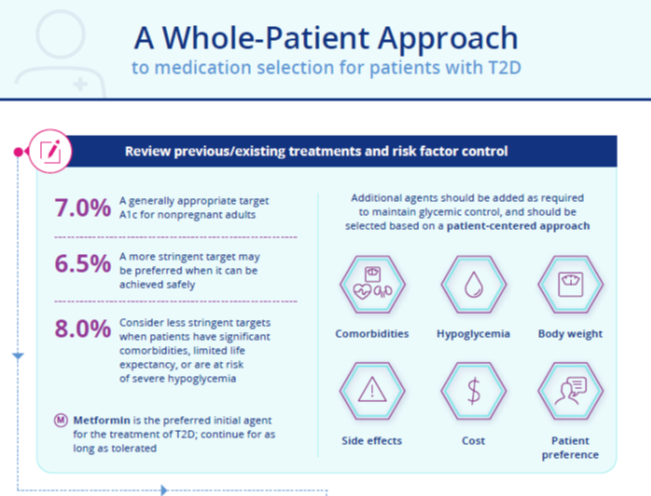

Infographic:

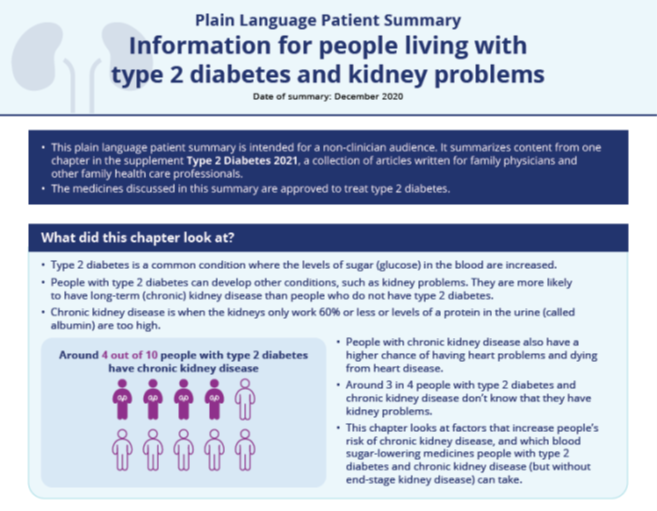

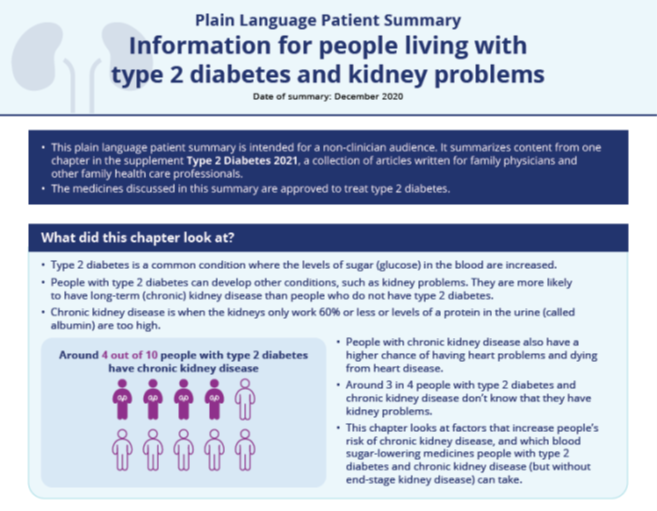

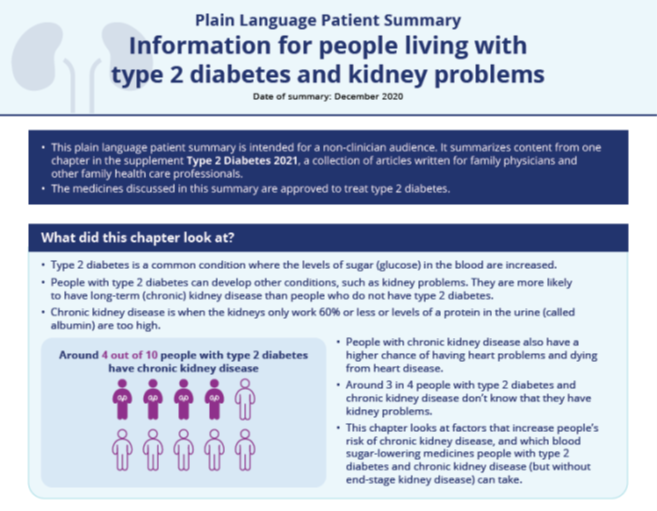

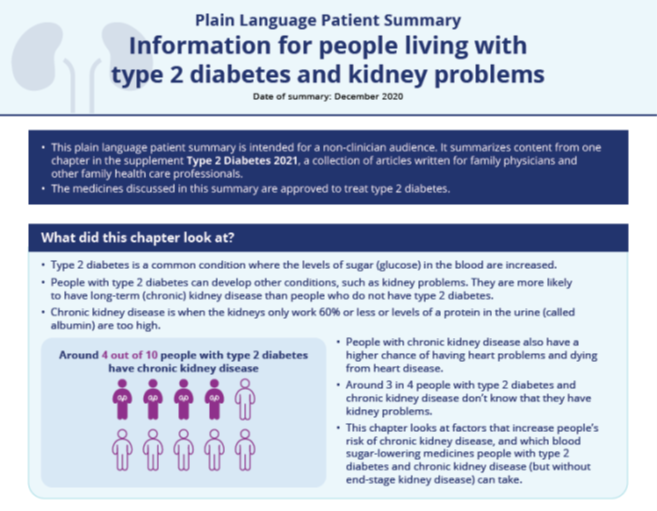

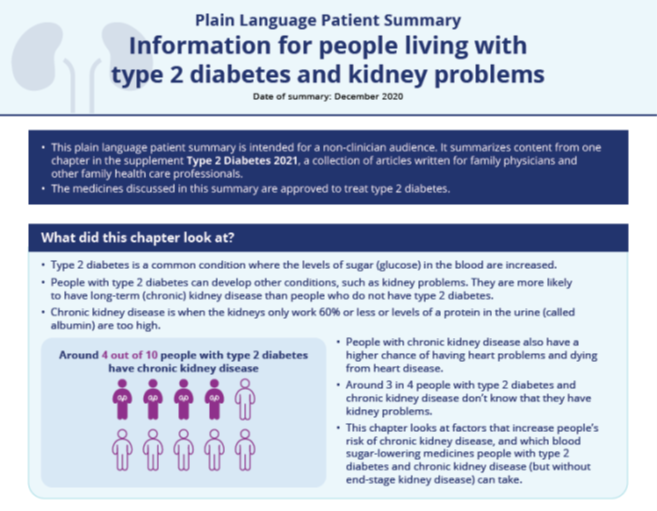

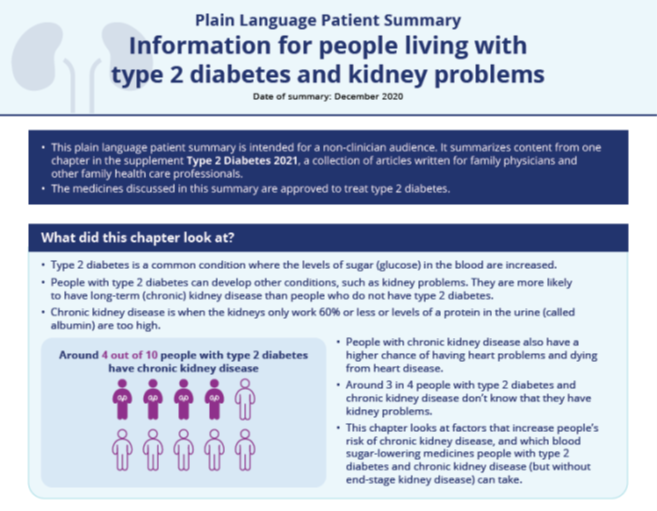

Chapter 2: A Practical Approach to Managing Kidney Disease in Type 2 Diabetes

Plain Language Patient Summary:

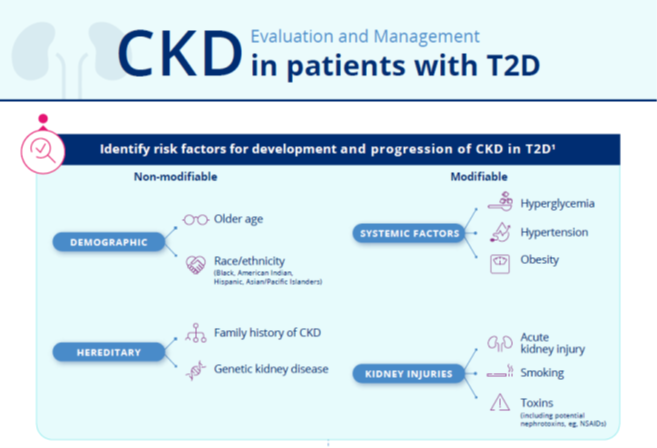

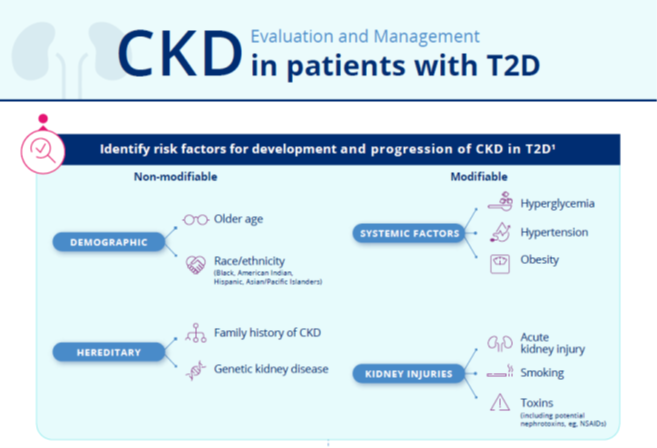

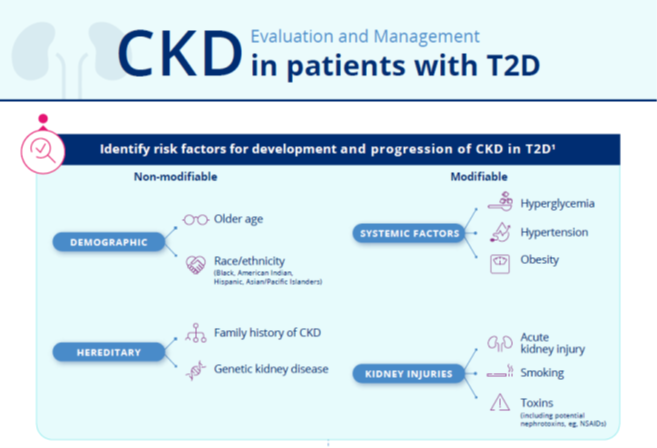

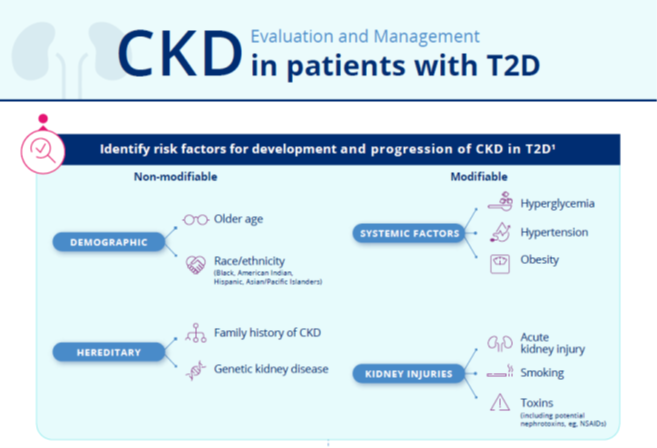

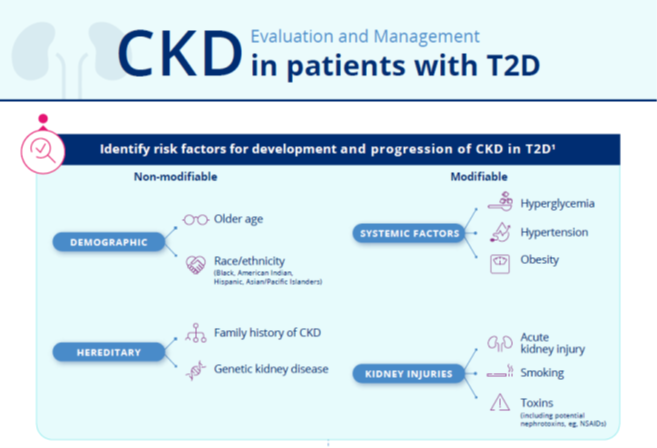

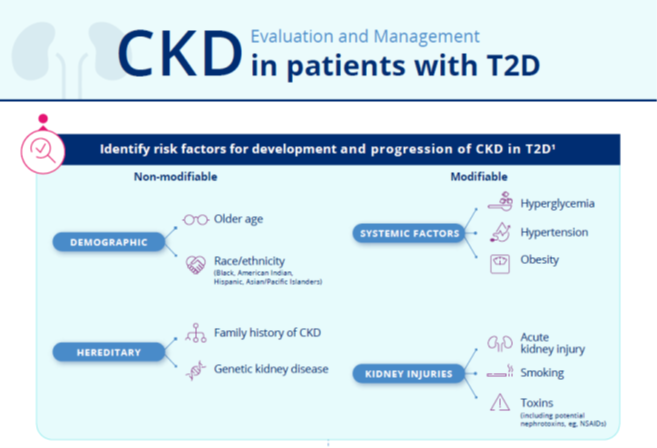

Infographic:

Chapter 3: Heart Failure in Patients with Type 2 Diabetes

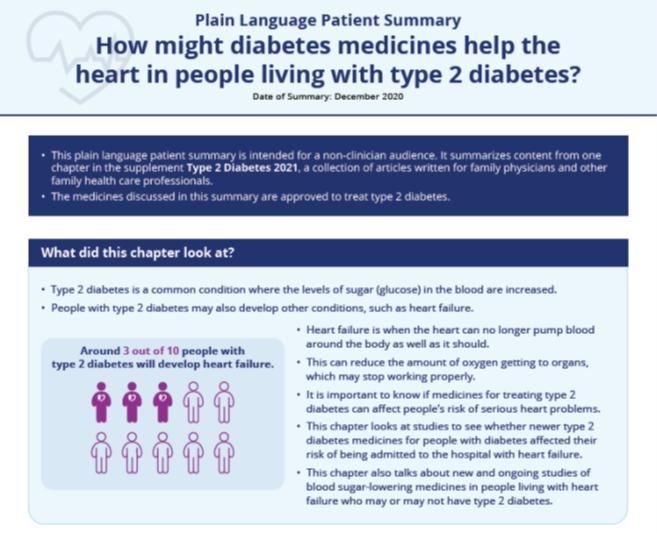

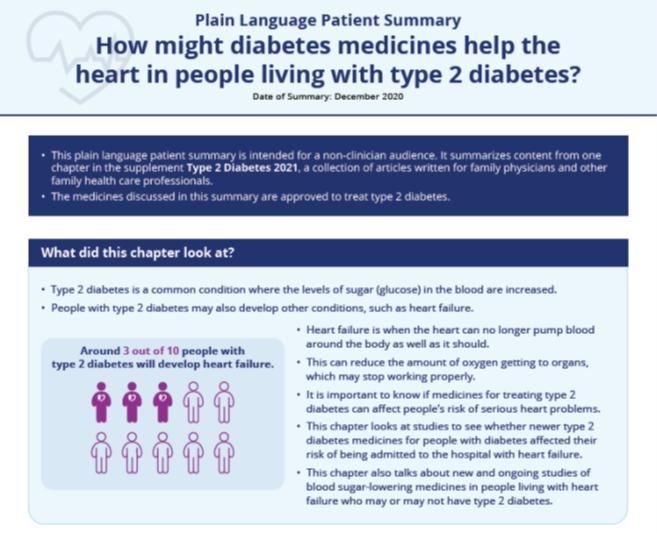

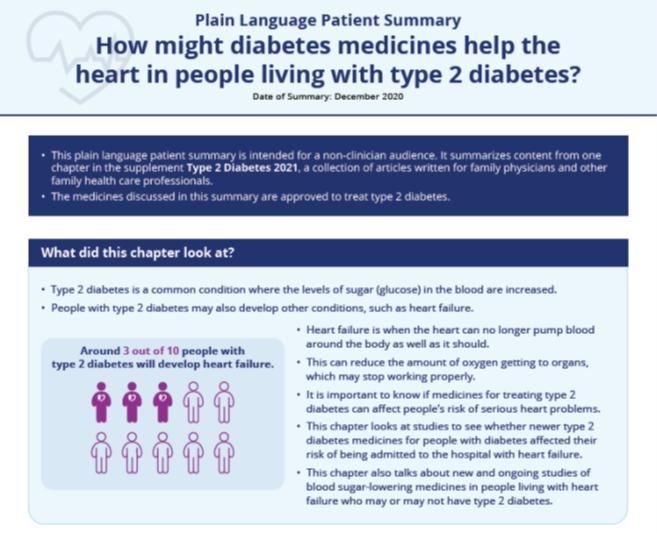

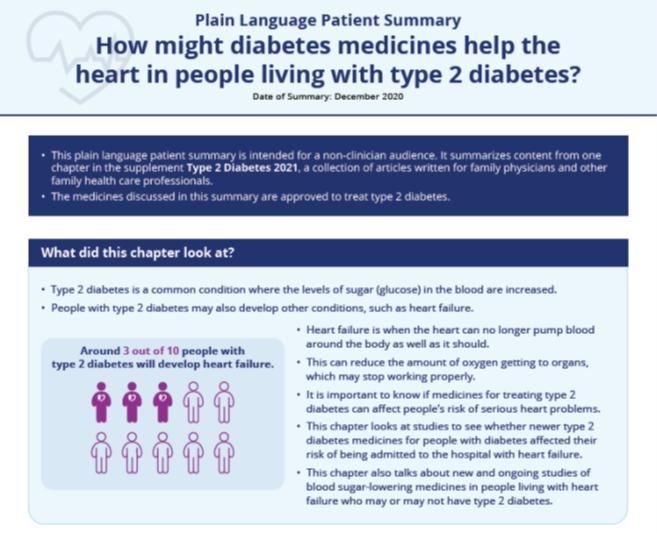

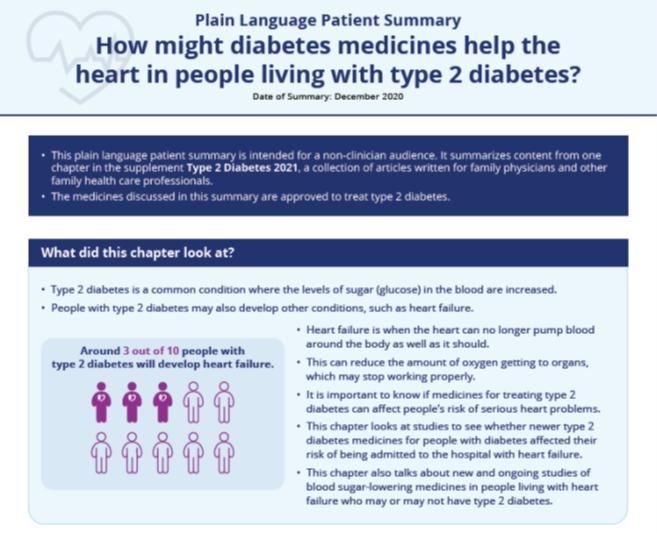

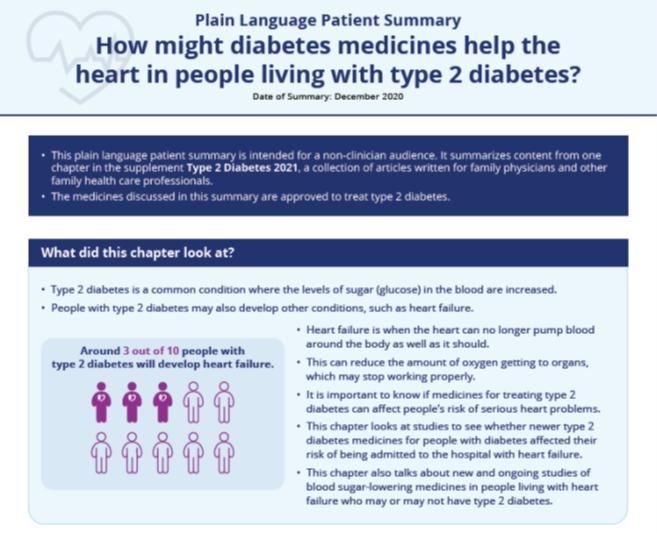

Plain Language Patient Summary:

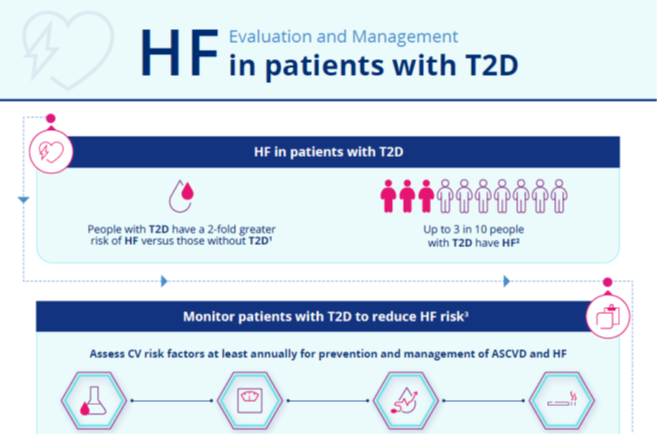

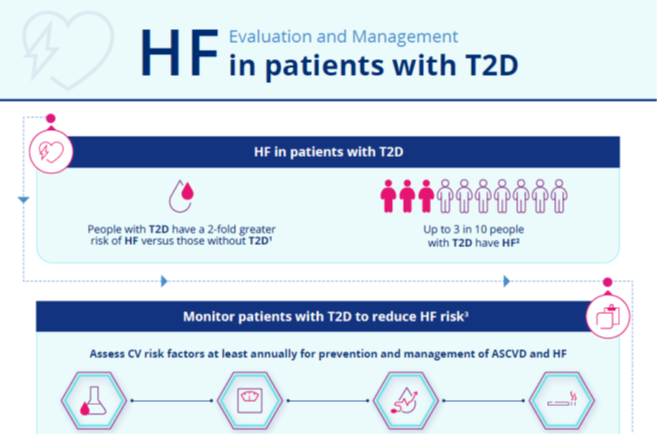

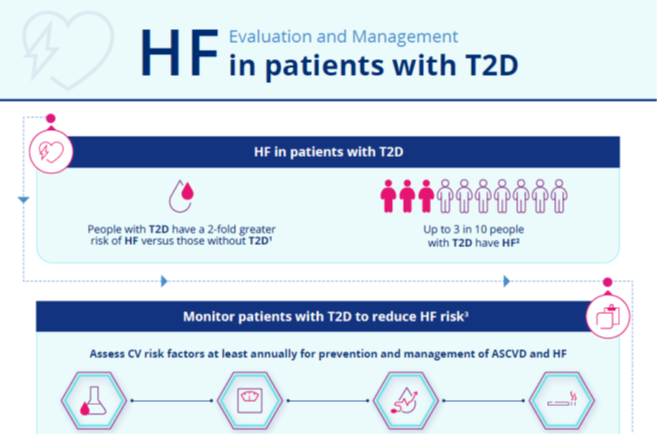

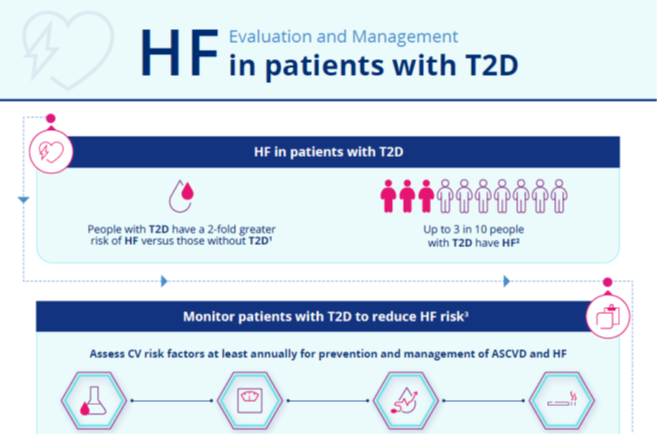

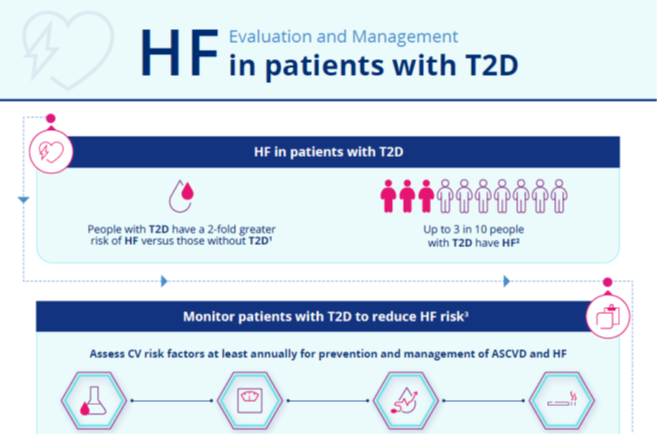

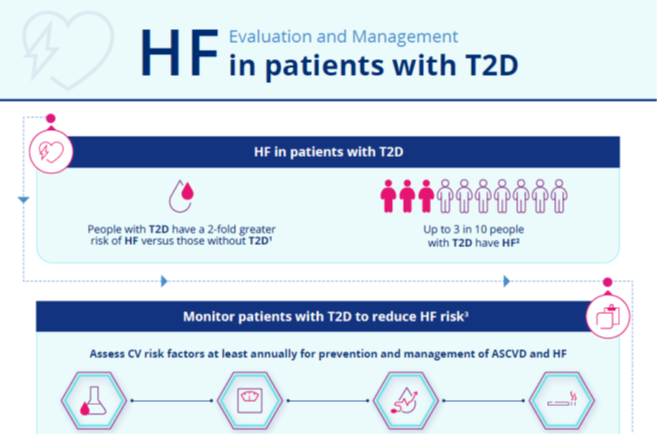

Infographic:

Chapter 4: Overcoming Therapeutic Inertia: Practical Approaches

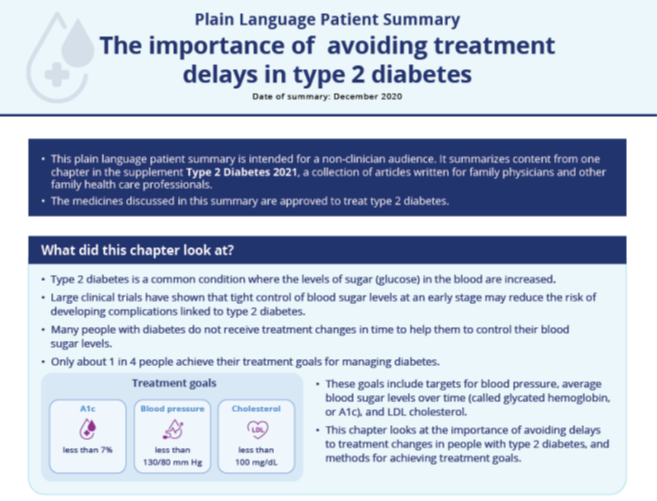

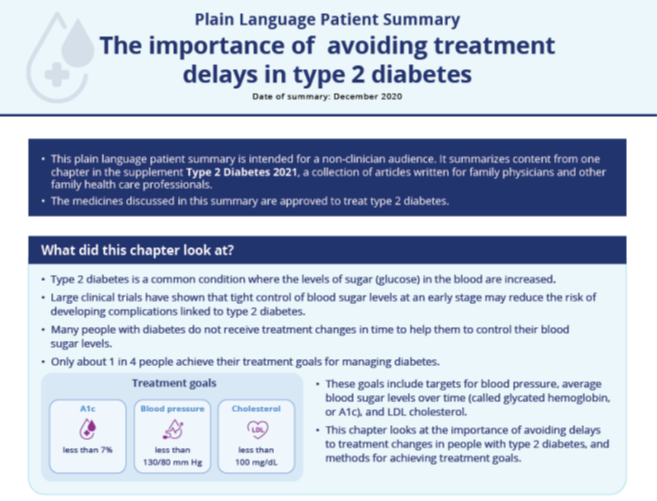

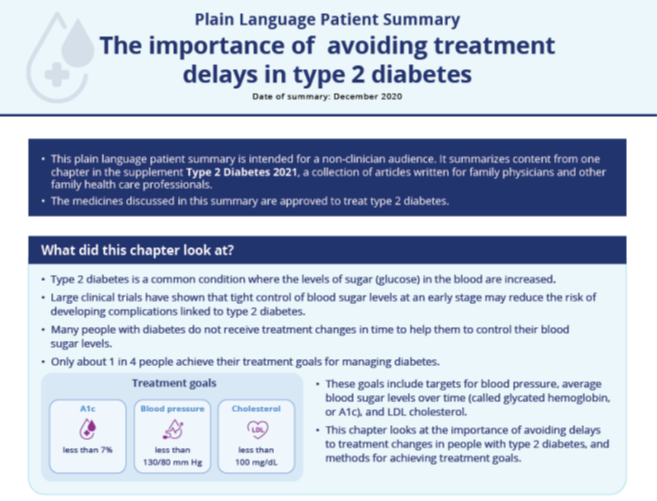

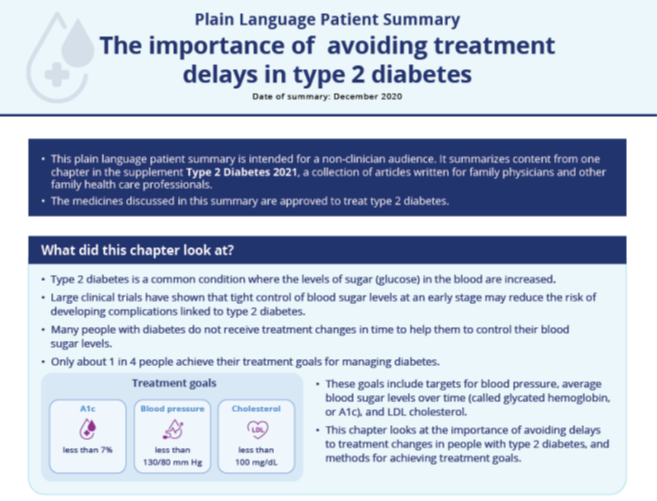

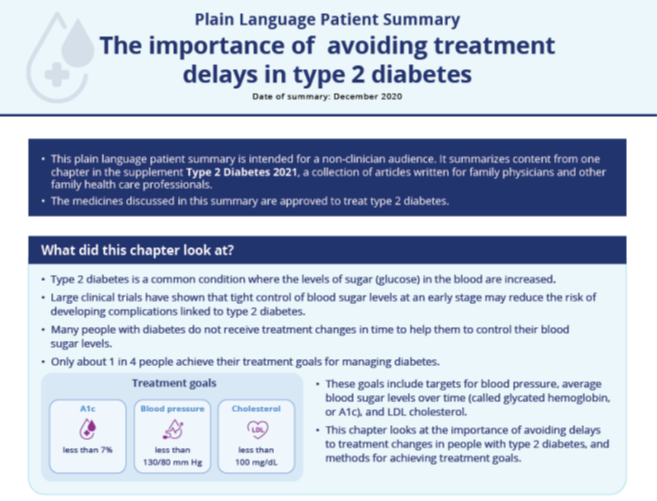

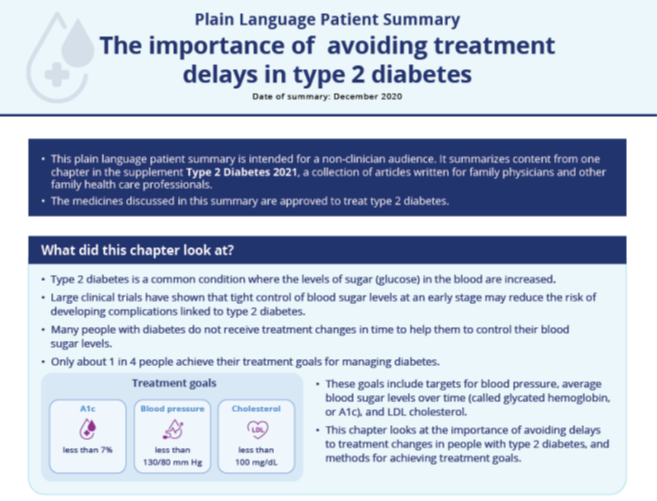

Plain Language Patient Summary:

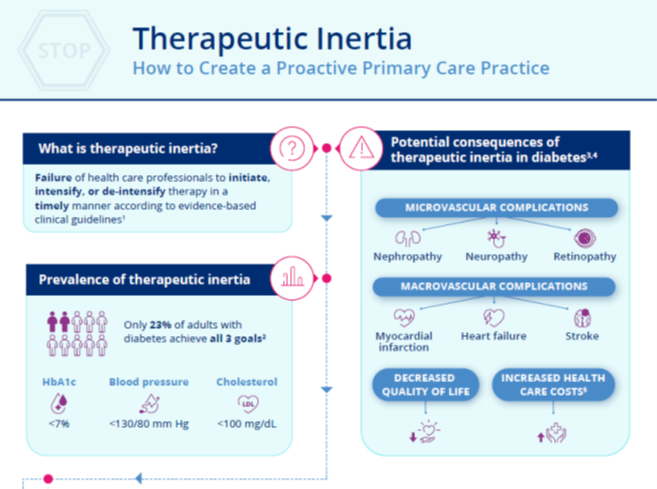

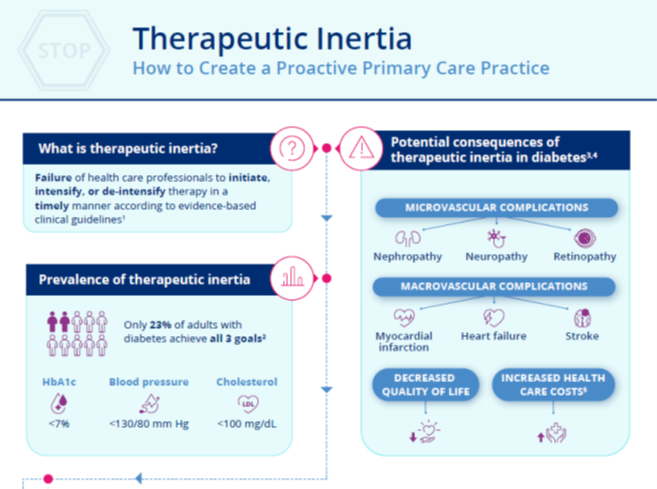

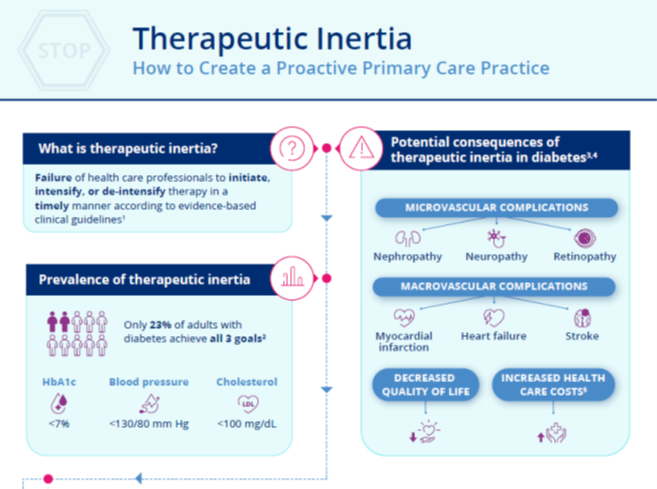

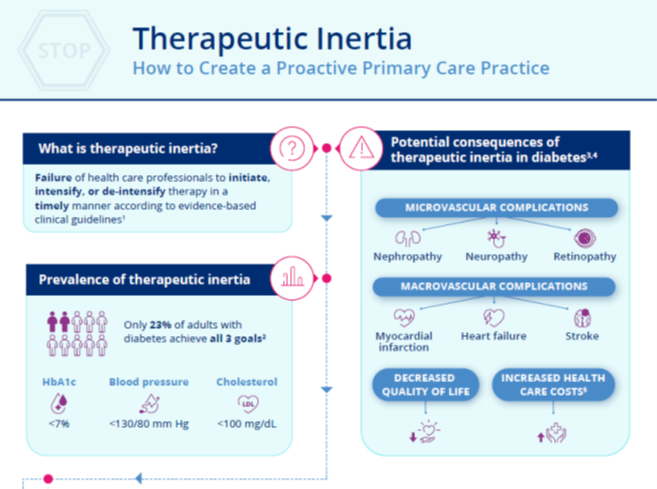

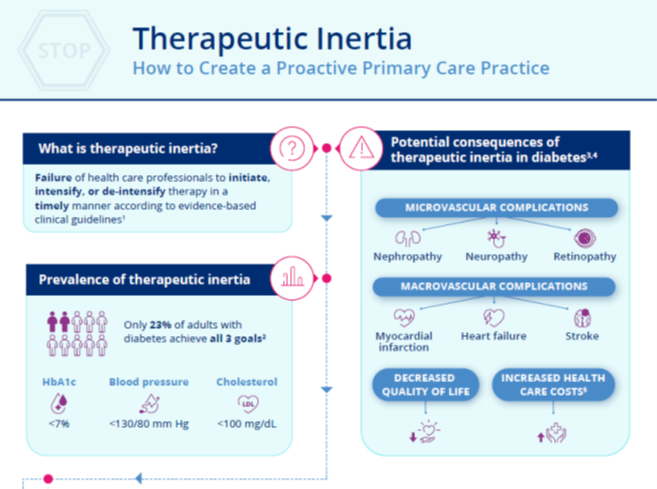

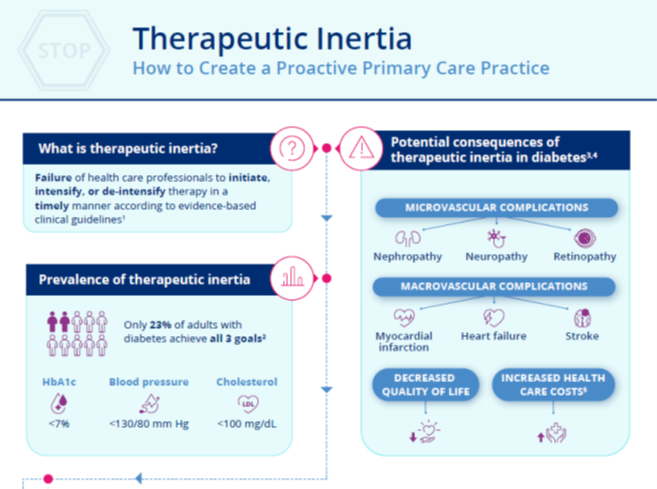

Infographic:

Supplemental Materials are joint copyright © 2020 Frontline Medical Communications and Boehringer Ingelheim Pharmaceuticals, Inc.

ACC/AHA update two atrial fibrillation performance measures

The American College of Cardiology and American Heart Association Task Force on Performance Measures have made two changes to performance measures for adults with atrial fibrillation or atrial flutter.

The 2020 Update to the 2016 ACC/AHA Clinical Performance and Quality Measures for Adults With Atrial Fibrillation or Atrial Flutter was published online Dec. 7 in the Journal of the American College of Cardiology and Circulation: Cardiovascular Quality and Outcomes. It was developed in collaboration with the Heart Rhythm Society.

Both performance measure changes were prompted by, and are in accordance with, the 2019 ACC/AHA/Heart Rhythm Society atrial fibrillation guideline focused update issued in January 2019, and reported by this news organization at that time.

The first change is the clarification that valvular atrial fibrillation is atrial fibrillation with either moderate or severe mitral stenosis or a mechanical heart valve. This change is incorporated into all the performance measures.

The second change, which only applies to the performance measure of anticoagulation prescribed, is the separation of a male and female threshold for the CHA2DS2-VASc score.

This threshold is now a score higher than 1 for men and higher than 2 for women, further demonstrating that the risk for stroke differs for men and women with atrial fibrillation or atrial flutter, the ACC/AHA noted in a press release.
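The sex-specific threshold described above can be expressed as a simple check. This is a minimal illustrative sketch, not a clinical tool: the function name and the `"male"`/`"female"` labels are hypothetical, and the CHA2DS2-VASc score itself is assumed to have been calculated elsewhere and is passed in as a number.

```python
def exceeds_anticoagulation_threshold(score: int, sex: str) -> bool:
    """Return True if a CHA2DS2-VASc score is above the sex-specific threshold.

    Thresholds follow the article: higher than 1 for men, higher than 2 for women.
    The function name and sex labels are illustrative assumptions.
    """
    thresholds = {"male": 1, "female": 2}
    key = sex.strip().lower()
    if key not in thresholds:
        raise ValueError(f"sex must be 'male' or 'female', got {sex!r}")
    if score < 0:
        raise ValueError("score must be non-negative")
    # "Higher than" is a strict comparison, so a score equal to the threshold does not qualify.
    return score > thresholds[key]


# The same score of 2 falls on different sides of the threshold for men and women.
print(exceeds_anticoagulation_threshold(2, "male"))
print(exceeds_anticoagulation_threshold(2, "female"))
```

The strict comparison mirrors the article's "higher than" wording; whether a given patient falls within the performance measure depends on the full measure specification, which this sketch does not model.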

“Successful implementation of these updated performance measures by clinicians and healthcare organizations will lead to quality improvement for adult patients with atrial fibrillation or atrial flutter,” they said.

A version of this article originally appeared on Medscape.com.

Type 2 Diabetes 2021

This supplement to The Journal of Family Practice brings together key updates in the field of T2D to help care for patients who have not only T2D, but also other interconnected diseases.

Supplementary Materials:

Chapter 1: Evolution of Type 2 Diabetes Treatment

Plain Language Patient Summary:

Infographic:

Chapter 2: A Practical Approach to Managing Kidney Disease in Type 2 Diabetes

Plain Language Patient Summary:

Infographic:

Chapter 3: Heart Failure in Patients with Type 2 Diabetes

Plain Language Patient Summary:

Infographic:

Chapter 4: Overcoming Therapeutic Inertia: Practical Approaches

Plain Language Patient Summary:

Infographic:

Supplemental Materials are joint copyright © 2020 Frontline Medical Communications and Boehringer Ingelheim Pharmaceuticals, Inc.

Raising psychiatry up ‘from depths of the asylums’

New biography captures Dr. Anthony Clare’s complexity

In “Psychiatrist in the Chair,” authors Brendan Kelly and Muiris Houston tell the story of a fellow Irishman, Anthony Clare, MD, who brought intelligence and eloquence to psychiatry. They tell a well-measured, well-referenced story of Dr. Clare’s personal and professional life, capturing his eloquence, wit, charm, and success in psychiatry while alluding to what he himself described as “some kind of Irish darkness.”

In 1983, I was a young Scottish psychiatrist entering a fusty profession. Suddenly, there was Dr. Anthony Clare on the BBC! In “In the Psychiatrist’s Chair,” Dr. Clare interviewed celebrities. In addition to describing his Irish darkness, Brendan Kelly, MD, PhD, and Muiris Houston, MD, FRCGP, both of whom are affiliated with Trinity College Dublin, note that Dr. Clare said: “I’m better at destroying systems than I am at putting them together – I do rather look for people to interview who will not live up to the prediction; there’s an element of destructiveness that’s still in me.”

I still listen to his talks on YouTube. His delicate, probing questioning of B.F. Skinner, PhD, is one of my favorites, as he expertly, and in an ever-so-friendly manner, teases out Dr. Skinner’s views of his upbringing and ties them to his behaviorism. It is this skill as an interviewer that captured us; can psychiatrists really be this clever? Yes, we can. All of the young and hopeful psychiatrists could see a future.

Dr. Clare raised psychiatry up from the depths of the asylums. He showed that a psychiatrist can be kind, charming, and sophisticated – handsome and helpful, not the ghouls of old movies. He did what needed to be done to psychiatry at that time: He set us on a footing that was not scary to the public. His vision for psychiatry was to improve services to those in need, reduce stigma, and show the public that there is a continuum between health and illness. He also took on the push for diagnoses, which he felt separated the normal from the abnormal, us from them.

He wrote his seminal work 10 years after graduating from University College Dublin. “Psychiatry in Dissent: Controversial Issues in Thought and Practice” was published in 1976, and is still considered one of the most influential texts in psychiatry. Dr. Clare “legitimized psychiatry not only in the eyes of the public but in the eyes of psychiatrists too,” the authors wrote. He did not support psychoanalysis and eschewed the rigor attached to the learning of new psychotherapies. He took renowned experts to task, but in ever such an elegant way. He successfully took on Hans Eysenck, PhD, I think because Dr. Eysenck insulted the intelligence of the Irish. He had a measured response to the anti-psychiatrists Thomas Szasz, MD, and R.D. Laing, MD, incorporating their ideas into his view of psychiatry. Dr. Clare was a social psychiatrist who highlighted the role of poverty and lack of access to mental health services. He stated that psychiatry was a “shambles, a mess and at a very primitive level.”

I enjoyed learning about his fight to make the membership exam for entrance into the Royal College of Psychiatrists worthy of its name. Dr. Clare helped found the Association of Psychiatrists in Training (APIT) and wrote eloquently about the difference between training and indoctrination, which he described as having people fit a predetermined paradigm of how psychiatry should be constructed and practiced, versus education, which he defined as forming the mind. He highlighted the lack of good training facilities and teaching staff in many parts of the United Kingdom. When Dr. Clare studied candidates in Edinburgh, he found that 70% had no child, forensic, or intellectual disability training. By the time I did my training there, I was able to get experience in all three subspecialties. He opposed the granting of automatic membership to current consultants, many of whom he considered to be “dunderheads.” I can attest to that!

In the later phase of his life, Dr. Clare likened his self-punishing regime at the height of his hyperproductive fame to an addiction – a fix, with its risk/reward, pain/pleasure kick. He identified fear as being an unacknowledged presence in most of his life. There are vague hints from Dr. Clare’s friends and colleagues that something drove him back to Ireland from a successful life in London. Although his wife was described as being fully supportive of him, her words on his tombstone indicate something: What they indicate you can decide. She called him “a loving husband, father and grandfather, orator, physician, writer and broadcaster.” No mention was made of his being one of the greatest psychiatrists of his generation.

In 2000, he wrote “On Men: Masculinity in Crisis,” about men and the patriarchy, and highlighted the concept of “performance-based self-worth” in men. He stated: “What is the point of an awful lot of what I do. I’m in my 50s. I think one should be spending a good deal of your time doing things you want to do ... and what is that? I want to see much more of my family and friends. I want to continue making a contribution, but how can I best do that? ... I am contaminated by patriarchy; there is no man who isn’t. There is hope for men only if they ‘acknowledge the end of patriarchal power and participate in the discussion of how the post-patriarchal age is to be negotiated.’ ” He asked whether it is still the case that, if men do not reevaluate their roles, they will soon be entirely irrelevant as social beings. The value of men lies less in income generation than in cultivating involvement, awareness, consistency, and caring, he stated.

As always with famous and talented people, we are interested not only in their professional gifts to us but in their personal journeys, and the authors, Dr. Kelly and Dr. Houston, have given us this rich profile of one of my lifelong heroes, Anthony Clare. Anthony Clare makes you feel good about being a psychiatrist, and that is such an important gift.

Dr. Heru is professor of psychiatry at the University of Colorado at Denver, Aurora. She is editor of “Working With Families in Medical Settings: A Multidisciplinary Guide for Psychiatrists and Other Health Professionals” (New York: Routledge, 2013). She has no conflicts of interest.

RARE DISEASES REPORT: Cancers

Rare cancers, though individually rare by definition, impose a tremendous burden on adult and pediatric patient populations, especially when considering hematological cancers. In this Rare Diseases Report: Cancers, we bring you the latest information on new and ongoing developments in the treatment of some of these cancers through interviews with frontline researchers in the field.

- Survey reveals special impact of COVID-19 on patients with rare disorders

- New agents boost survival – and complexity – in chronic lymphocytic leukemia

- Targeted therapies may alter the landscape of MCL treatment

- Will CAR T push beyond lymphoma? There’s no guarantee

BTK Inhibitors: Researchers Eye Next-Generation Treatment Options for MS

Bruton tyrosine kinase (BTK) inhibitors effectively treat certain leukemias and lymphomas, due to their ability to inhibit a protein kinase that is critical for B cell receptor signaling. These agents can inhibit antigen-triggered activation and maturation of B cells and their release of pro-inflammatory cytokines. BTK is also a key signaling protein that controls activation of monocytes, macrophages, and neutrophils, and its inhibition reduces the activation of these cells.

Judging from the papers presented at the recent MS Virtual 2020, the 8th Joint ACTRIMS-ECTRIMS Meeting, BTK inhibitors are also showing promise in the management of multiple sclerosis (MS) as researchers eye next-generation treatment options. They may prove to be effective not only in relapsing remitting multiple sclerosis, but also in progressive disease through their effect on brain macrophages and microglial cells.

Leading the way at MS Virtual 2020 was a presentation by Patrick Vermersch, MD, PhD, who summarized the results to date of masitinib’s role in primary progressive MS (PPMS) and non-active secondary progressive MS (nSPMS). The professor of neurology at Lille University in Lille, France, and his colleagues conducted a phase 3 study to assess masitinib’s efficacy at two dosage levels – 4.5 mg/kg/day (n=199) and 6.0 mg/kg/day (n=199) – vs placebo (n=101). The randomized, double-blinded, placebo-controlled, two-parallel group trial involved adult participants with EDSS scores between 2.0 and 6.0 with either PPMS or nSPMS. Investigators looked at overall EDSS change from baseline using a variety of repeated measures, with an emphasis on determining whether participants improved, were stable, or worsened. Among the results:

- In the lower-dose contingent, the odds of either a reduction in EDSS progression or increased EDSS improvement improved by 39% for patients taking masitinib, vs those receiving placebo

- Also, among those receiving the lower dose, the odds of experiencing disability progression decreased by 37% in the treatment group, compared with placebo

- Results using the higher dose of masitinib were inconclusive

Based on these results, investigators concluded that masitinib 4.5 mg/kg/day is very likely to emerge as a new treatment option for individuals with PPMS and nSPMS.

Another MS Virtual 2020 presentation focused on fenebrutinib, an investigational, noncovalent BTK inhibitor being studied as a possible treatment for MS. Investigators assessed the potency, selectivity, and kinetics of inhibition of the BTK enzyme via fenebrutinib, compared with two other BTK inhibitors, evobrutinib and tolebrutinib, in a panel of 219 human kinases.

Fenebrutinib was found to be a more potent inhibitor, compared with the other two BTK inhibitors. Additionally, fenebrutinib effectively blocked B cell and basophil activation. Moreover, fenebrutinib inhibited fewer off-target kinases by more than 50%, compared with the other two BTK inhibitors. Investigators concluded that fenebrutinib’s high selectivity and potency show promise for a drug linked with fewer adverse events and a better treatment profile than other investigational BTK inhibitors.

These encouraging findings are leading to the initiation of three phase 3 clinical trials involving fenebrutinib, announced at MS Virtual 2020 by Stephen L. Hauser, MD, professor of neurology, and director of the UCSF Weill Institute for Neurosciences. The trials will evaluate fenebrutinib’s impact on disease progression in relapsing MS and primary progressive MS. The primary endpoint of the studies will be 12-week composite Confirmed Disability Progression, which investigators hope will provide a stronger, more thorough assessment of disability progression, vs EDSS score alone.

Other BTK inhibitors worth watching include:

- Evobrutinib: An open-label phase 2 study has shown that the drug is linked with reduced annualized relapse rate through 108 weeks

- BIIB091: Safety and tolerability have been demonstrated in phase 1, clearing the way for further investigation

- Tolebrutinib: Under assessment for its ability to inhibit microglia-driven inflammation in murine models

Torke S, Pretzch R, Hausler D, et al. Inhibition of Bruton’s tyrosine kinase interferes with pathogenic B-cell development in inflammatory CNS demyelinating disease. Acta Neuropathol. 2020;140;535-548. doi: https://doi.org/10.1007/

Adding atezolizumab to chemo doesn’t worsen QOL in early TNBC

In the randomized phase 3 trial, patients received neoadjuvant atezolizumab or placebo plus chemotherapy, followed by surgery and adjuvant atezolizumab or observation.

An analysis of patient-reported outcomes showed that, after a brief decline in health-related quality of life (HRQOL) in both the atezolizumab and control arms, the burden of treatment eased and then remained similar throughout follow-up.

“Treatment-related symptoms in both arms were similar, and the addition of atezolizumab to chemotherapy was tolerable, with no additional treatment side-effect bother reported,” said Elizabeth Mittendorf, MD, PhD, of the Dana-Farber Cancer Institute in Boston.

She presented these results in an oral abstract presentation during the 2020 San Antonio Breast Cancer Symposium.

Initial results

Primary results of the IMpassion031 trial were reported at ESMO 2020 and published in The Lancet.

The trial enrolled patients with treatment-naive early TNBC, and they were randomized to receive chemotherapy plus atezolizumab or placebo. Atezolizumab was given at 840 mg intravenously every 2 weeks.

Chemotherapy consisted of nab-paclitaxel at 125 mg/m2 every week for 12 weeks followed by doxorubicin at 60 mg/m2 and cyclophosphamide at 600 mg/m2 every 2 weeks for 8 weeks. Patients then underwent surgery, which was followed by either atezolizumab maintenance or observation.

The addition of atezolizumab was associated with a 17% improvement in the rate of pathological complete response (pCR) in the intention-to-treat population.

Among patients positive for PD-L1, atezolizumab was associated with a 20% improvement in pCR rate.

Patients have their say

At SABCS 2020, Dr. Mittendorf presented patient-reported outcomes (PRO) for 161 patients randomized to atezolizumab plus chemotherapy and 167 assigned to placebo plus chemotherapy.

The outcome measures – including role function (the ability to work or pursue common everyday activities), physical function, emotional and social function, and HRQOL – came from scores on the European Organization for Research and Treatment of Cancer (EORTC) Quality of Life Questionnaire Core 30 (QLQ-C30).

The exploratory PRO endpoints were mean function and disease- or treatment-related symptoms as well as mean change from baseline in these symptoms.

At baseline, mean physical function scores were high in both arms, at approximately 90%. They dropped to about 65% in each arm by cycle 5 and rebounded to about 80% by cycle 7.

“The change was clinically meaningful, in that the dip was greater than 10 points,” Dr. Mittendorf said. “As patients completed neoadjuvant therapy and were in the adjuvant phase of the study, there was an increase and stabilization with respect to their physical function.”

Similarly, role function declined from 89% in each arm at baseline to a nadir of about 55% in the placebo arm and 50% in the atezolizumab arm. After cycle 5, role function levels began to increase in both arms and remained above 70% from cycle 7 onward.

Role function did not completely recover or stabilize among patients in the atezolizumab arm, “and we attribute this to the fact that these patients continue to receive atezolizumab, which requires that they come to clinic for that therapy, thereby potentially impacting role function,” Dr. Mittendorf said.

She reported that HRQOL declined from a median of nearly 80% at baseline in both arms to a nadir of 62% in the placebo arm and 52% in the atezolizumab arm by cycle 5. HRQOL rebounded starting at cycle 6 and stabilized thereafter, with little daylight between the treatment arms at the most recent follow-up.

The investigators saw worsening of the treatment-related symptoms fatigue, nausea/vomiting, and diarrhea in each arm, with the highest level of symptoms except pain reported at cycle 5. The highest reported pain level was seen at cycle 4.

In each arm, the symptoms declined and stabilized in the adjuvant setting, with mean values at week 16 similar to those reported at baseline for most symptoms. The exception was fatigue, which remained slightly elevated in both arms.

Regarding side-effect bother, a similar proportion of patients in each arm reported increased level of bother by visit during the neoadjuvant phase. However, no additional side-effect bother was reported by patients receiving maintenance atezolizumab, compared with patients in the placebo arm, who were followed with observation alone.

‘Reassuring’ data

“What we can conclude from the PRO in IMpassion031 is that early breast cancer patients are relatively asymptomatic. They do have a good baseline quality of life and very good functioning. The neoadjuvant therapy certainly has deterioration in quality of life and functioning, but this is transient, and there was no evident burden from the atezolizumab,” said invited discussant Sylvia Adams, MD, of New York University.

She said it’s reassuring to see the addition of atezolizumab did not adversely affect HRQOL but added that the study “also shows that chemotherapy has a major impact on well-being, as expected.”

Questions and problems that still need to be addressed regarding the use of immunotherapies in early TNBC include whether every patient needs chemotherapy or immunotherapy, a lack of predictive biomarkers, whether increases in pCR rates after neoadjuvant immunotherapy and chemotherapy will translate into improved survival, and the optimal chemotherapy backbone and schedule, she said.

IMpassion031 is sponsored by F. Hoffmann-La Roche. Dr. Mittendorf disclosed relationships with Roche/Genentech, GlaxoSmithKline, Physicians’ Education Resource, AstraZeneca, Exact Sciences, Merck, Peregrine Pharmaceuticals, SELLAS Life Sciences, TapImmune, EMD Serono, Galena Biopharma, Bristol Myers Squibb, and Lilly. Dr. Adams disclosed relationships with Genentech, Bristol Myers Squibb, Merck, Amgen, Celgene, and Novartis.

SOURCE: Mittendorf E et al. SABCS 2020, Abstract GS3-02.

FROM SABCS 2020

Four factors may predict better survival with cabozantinib in mRCC

Starting cabozantinib at 60 mg/day, prior nephrectomy, favorable- or intermediate-risk disease, and body mass index of 25 kg/m2 or higher were all significantly associated with better overall survival (OS).