More adolescents seek medical care for mental health issues

Less than a decade ago, the ED at Rady Children’s Hospital in San Diego would see maybe one or two young psychiatric patients per day, said Benjamin Maxwell, MD, the hospital’s interim director of child and adolescent psychiatry.

Now, it’s not unusual for the ED to see 10 psychiatric patients in a day, and sometimes even 20, said Dr. Maxwell. “What a lot of times is happening now is kids aren’t getting the care they need, until it gets to the point where it is dangerous.”

EDs throughout California are reporting a sharp increase in adolescents and young adults seeking care for a mental health crisis. In 2018, California EDs treated 84,584 young patients aged 13-21 years who had a primary diagnosis involving mental health. That is up from 59,705 in 2012, a 42% increase, according to data provided by the Office of Statewide Health Planning and Development.

By comparison, the number of ED encounters among that age group for all other diagnoses grew by just 4% over the same period. And the number of encounters involving mental health among all other age groups – everyone except adolescents and young adults – rose by about 18%.
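The 42% figure for youth mental health visits can be checked directly from the two counts quoted above. The short sketch below does that arithmetic; the function and variable names are illustrative and not part of the state data.

```python
def percent_change(old: float, new: float) -> float:
    """Return the relative change from old to new, in percent."""
    return (new - old) / old * 100.0


# ED visits by patients aged 13-21 with a primary mental health diagnosis,
# as quoted in the article (hypothetical variable names).
visits_2012 = 59_705
visits_2018 = 84_584

increase = percent_change(visits_2012, visits_2018)
print(f"{increase:.1f}%")  # 41.7%, which rounds to the 42% cited
```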

The spike in youth mental health visits corresponds with a recent survey that found that members of “Generation Z” – defined in the survey as people born since 1997 – are more likely than other generations to report their mental health as fair or poor. The 2018 polling, done on behalf of the American Psychological Association, also found that members of Generation Z, along with millennials, are more likely to report receiving treatment for mental health issues.

The trend corresponds with another alarming development, as well: a marked increase in suicides among teens and young adults. About 7.5 of every 100,000 young people aged 13-21 in California died by suicide in 2017, up from a rate of 4.9 per 100,000 in 2008, according to the latest figures from the Centers for Disease Control and Prevention. Nationwide, suicides in that age range rose from 7.2 to 11.3 per 100,000 from 2008 to 2017.
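The rates above are per 100,000 people, so they can be compared as relative increases. The following sketch computes that increase for the California and nationwide figures quoted in the paragraph; the names are illustrative, and the results are simple arithmetic on the published rates rather than figures reported by the CDC.

```python
def relative_increase(rate_start: float, rate_end: float) -> float:
    """Return the percent increase from rate_start to rate_end."""
    return (rate_end - rate_start) / rate_start * 100.0


# Suicide rates per 100,000 people aged 13-21, 2008 vs. 2017, from the article.
california = relative_increase(4.9, 7.5)
nationwide = relative_increase(7.2, 11.3)

print(f"California: {california:.0f}% increase")  # about 53%
print(f"Nationwide: {nationwide:.0f}% increase")  # about 57%
```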

Researchers are studying the causes for the surging reports of mental distress among America’s young people. Many recent theories note that the trend parallels the rise of social media, an ever-present window on peer activities that can exacerbate adolescent insecurities and open new avenues of bullying.

“Even though this generation has been raised with social media, youth are feeling more disconnected, judged, bullied, and pressured from their peers,” said Susan Coats, EdD, a school psychologist at Baldwin Park Unified School District near Los Angeles.

“Social media: It’s a blessing and it’s a curse,” Dr. Coats added. “Social media has brought youth together in a forum where maybe they may have felt isolated before, but it also has undermined interpersonal relationships.”

Members of Generation Z also report significant levels of stress about personal debt, housing instability, and hunger, as well as mass shootings and climate change, according to the American Psychological Association survey.

“We’re not doing a great job with … catching things before they devolve into broader problems, and we’re not doing a good job with prevention,” said Lishaun Francis, associate director of health collaborations at Children Now, a nonprofit based in Oakland, Calif.

Many California school districts don’t have enough school psychologists and don’t devote enough resources to teaching students how to cope with depression, anxiety, and other mental health issues, said Dr. Coats, who chairs the mental health and crisis consultation committee of the California Association of School Psychologists.

In the broader community, medical providers also are struggling to keep up. “Many times there aren’t psychiatric beds available for kids in our community,” Dr. Maxwell said.

Most of the adolescents who come into the ED at Rady Children’s Hospital during a mental health crisis are considering suicide, have attempted suicide, or have harmed themselves, said Dr. Maxwell, who is also the hospital’s medical director of inpatient psychiatry.

These patients are triaged and quickly seen by a social worker. Often, a behavioral health assistant is assigned to sit with the patients throughout their stay.

“Suicidal patients – we don’t want them to be alone at all in a busy emergency department,” Dr. Maxwell said. “So that’s a major staffing increase.”

Rady Children’s Hospital plans to open a six-bed, 24-hour psychiatric ED in the spring. Improving emergency care will help, Dr. Maxwell said, but a better solution would be to intervene with young people before they need an ED.

“The ED surge probably represents a failure of the system at large,” Dr. Maxwell said. “They’re ending up in the emergency department because they’re not getting the care they need, when they need it.”

Phillip Reese is a data reporting specialist and an assistant professor of journalism at California State University–Sacramento. This Kaiser Health News story first published on California Healthline, a service of the California Health Care Foundation. KHN is a nonprofit national health policy news service. It is an editorially independent program of the Henry J. Kaiser Family Foundation that is not affiliated with Kaiser Permanente.

Recurring rash on neck and axilla

The FP initially treated the area with topical ketoconazole cream, which stung but partially improved the patient’s symptoms. Because the rash persisted, the FP performed a punch biopsy, which showed widespread epidermal acantholysis, or separation of epidermal cells. This is a hallmark of pemphigus vulgaris and benign familial pemphigus (Hailey-Hailey disease).

Hailey-Hailey disease is an uncommon autosomal dominant inherited blistering disorder that affects connecting proteins in the epidermis, which is why histology overlaps with pemphigus. Symptoms may not present until the second or third decade of life and often occur in flexural or high friction areas, including the axilla and inguinal folds. Any skin injury may trigger a flare, including sunburn, infections, heavy sweating, or friction from clothes. Bacterial overgrowth or colonization can cause a bad odor and social isolation in severe cases.

The differential diagnosis of axillary skin disorders is broad and includes irritant contact dermatitis, contact dermatitis, seborrheic dermatitis, hidradenitis suppurativa, candida intertrigo, and psoriasis. Clinical clues that favor Hailey-Hailey disease include fragile vesicles or pustules at the periphery and small focal erosions. The family history is helpful but not always known.

Some patients require topical therapies, used sequentially, in combination, or tailored through trial and error, that address the inflammation, bacterial overgrowth, and fungal disease. Patients also may require long-term doxycycline therapy to suppress flares. Most patients will benefit from at least prn use of topical steroids for inflammation. Addressing sweating with topical aluminum chloride or botulinum injections can be beneficial. Low-dose naltrexone, as well as afamelanotide, has shown promise in a few small case series.

The patient in this case improved with topical triamcinolone 0.1% ointment bid and systemic doxycycline 100 mg bid for 2 weeks. However, he continued to require occasional rounds of oral doxycycline with flares.

Photos and text for Photo Rounds Friday courtesy of Jonathan Karnes, MD (copyright retained).

Environmental Scan: Drivers of social, political and environmental change

We are living through an era of rapidly accelerated social, political, and environmental change. Spiraling costs of medical care, consumer activism around health care delivery, an aging population, and growing evidence of climate change are just some of the big currents of change. These trends are national and global in scope, and as such, far beyond the power of any one profession or sector to shape or control. It remains for the medical profession to understand the currents of the time and adapt in order to thrive in the future.

Regulatory environment in flux

Hospitals and clinicians continue to struggle with a regulatory framework that was designed to improve quality of care yet may be creating additional barriers to access and efficiency. The passionate debate about health care costs and coverage is ongoing at the national level and appears to be a central issue on the minds of voters. Although the outcome of the debate cannot be foreseen, it will be left to the medical profession to maintain standards of care. Although the Affordable Care Act may not be repealed, the federal government’s role may diminish as policy is likely to be made by state politicians and bureaucrats.1 As a result, organizations operating in multiple states may find it difficult to develop organization-wide business strategies. And with the shift to value-based reimbursement and issues related to data breaches regarding private patient health care information, many health care professionals will need better support and documentation tools to remain compliant. This puts a large burden on medical organizations to invest even more in information technology, data management systems, and a wide range of training up and down the organizational chart. Keeping up with the needs of physicians for secure data management will be costly but critical.

Patients will feel climate change

Environmental factors affecting the air we breathe are of primary concern for patients with a broad range of cardiorespiratory conditions.2 Healthy but vulnerable infants, children, pregnant women, and the elderly may also feel the effects.3 Air pollution, increased levels of pollen and ground-level ozone, and wildfire smoke are all tied to climate change and all can have a direct impact on the patients seen by chest physicians.4 Individuals exposed to these environmental conditions may experience diminished lung function, resulting in increased hospital admissions.

Keeping up with the latest research on probable health impacts of these environmental trends will be on the agenda of most chest physicians.5 Professional societies will need to prepare to serve the educational needs of members in this regard. Continuing education content on these topics will be needed. The field will respond with new diagnostic tools and new treatments.6 Climate change may be a global-level phenomenon, but for many chest physicians, it means treating increasing numbers of patients affected by pulmonary disease.

Mind the generation gap

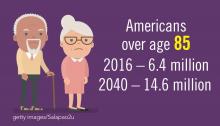

The population in the United States is primarily under the age of 65 years (84%), but the number of older citizens is on the rise. In 2016, there were 49.2 million people age 65 or older, and this number is projected to almost double to 98 million in 2060. The 85-and-over population is projected to more than double from 6.4 million in 2016 to 14.6 million in 2040 (a 129% increase).7

The medical needs of the aging population are already part of most medical institutions’ planning, but the current uncertainty in the health insurance market and the potential changes in Medicare coverage, not to mention the well-documented upcoming physician shortage, are complicating the planning process.8 Almost all acknowledge the “graying” of the population, but current approaches may not be sufficient given the projected scale of the problems, such as major increases in patients with chronic illnesses and the need for upscaling long-term geriatric care.

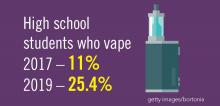

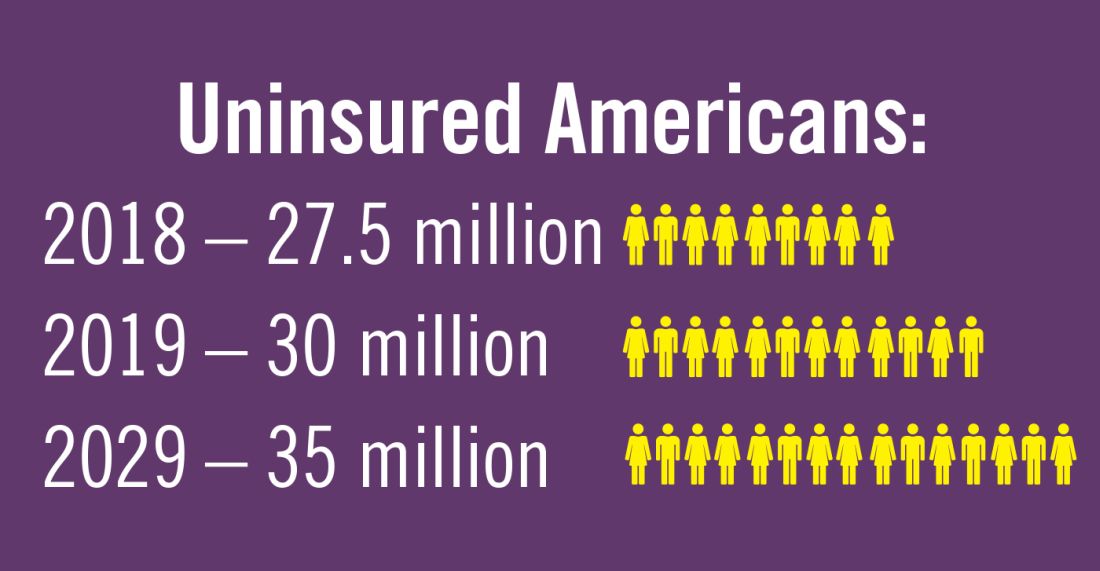

Planning for treating a growing elderly population shouldn’t mean ignoring some trends appearing among the younger population. E-cigarette use among middle- and high-school students may be creating millions of future patients with lung damage and nicotine addictions.9 Government intervention in this smoking epidemic is lagging behind the rapid spread of this unhealthy habit among young people.10 In 2019, health coverage of adults has begun to decline again after a decade of gains, and the possibility of this becoming a long-term trend has to be considered in planning for their treatment.11,12

References

1. Statista. “Affordable Care Act - Statistics & Facts,” Matej Mikulic. 2019 Aug 14.

2. American Public Health Association and Centers for Disease Control and Prevention (2018). Climate Change Decreases the Quality of the Air We Breathe. Accessed 2019 Oct 7.

3. JAMA. 2019 Aug 13;322(6):546-56. doi: 10.1001/jama.2019.10255.

4. Lelieveld J et al. Cardiovascular disease burden from ambient air pollution in Europe reassessed using novel hazard ratio functions. Eur Heart J. 2019;40(20):1590-6. doi: 10.1093/eurheartj/ehz135

5. European Respiratory Society. CME Online: Air pollution and respiratory health. 2018 Jun 21.

6. Environmental Protection Agency. “Particle Pollution and Your Patients’ Health,” Continuing Education for Particle Pollution Course. 2019 May 13.

7. U.S. Department of Health & Human Services. Administration on Aging. 2017 Profile of Older Americans. Accessed 2019 Oct 7.

8. Association of American Medical Colleges. Complexities of Physician Supply and Demand: Projection from 2017 to 2032. 2019 Apr.

9. Miech R et al. Trends in Adolescent Vaping, 2017-2019. N Engl J Med. 2019 Sep 18. doi: 10.1056/NEJMc1910739

10. Ned Sharpless, MD, Food and Drug Administration Acting Commissioner. “How the FDA is Regulating E-Cigarettes,” 2019 Sep 10.

11. Congressional Budget Office. Federal Subsidies for Health Insurance Coverage for People Under Age 65: 2019 to 2029. Washington, D.C.: GPO, 2019.

12. Galewitz P. “Breaking a 10-year streak, the number of uninsured Americans rises.” Internal Medicine News, 2019 Sep 11.

Note: Background research performed by Avenue M Group.

CHEST Inspiration is a collection of programmatic initiatives developed by the American College of Chest Physicians leadership and aimed at stimulating and encouraging innovation within the association. One of the components of CHEST Inspiration is the Environmental Scan, a series of articles focusing on the internal and external environmental factors that bear on success currently and in the future. See “Envisioning the Future: The CHEST Environmental Scan,” CHEST Physician, June 2019, p. 44, for an introduction to the series.

We are living through an era of rapidly accelerated social, political, and environmental change. Spiraling costs of medical care, consumer activism around health care delivery, an aging population, and growing evidence of climate change are just some of the big currents of change. These trends are national and global in scope, and as such, far beyond any one profession or sector to shape or control. It remains for the medical profession to understand the currents of the time and adapt in order to thrive in the future.

Regulatory environment in flux

Hospitals and clinicians continue to struggle with a regulatory framework designed to improve higher quality of care yet may be creating additional barriers to access and efficiency. The passionate debate about health care costs and coverage is ongoing at the national level and appears to be a central issue on the minds of voters. Although the outcome of the debate cannot be foreseen, it will be left to the medical profession to maintain standards of care. Although the Affordable Care Act may not be repealed, the federal government’s role may diminish as policy is likely to be made by state politicians and bureaucrats.1 As a result, organizations operating in multiple states may find it difficult to develop organization-wide business strategies. And with the shift to value-based reimbursement and issues related to data breaches regarding private patient health care information, many health care professionals will need better support and documentation tools to remain compliant. This puts a large burden on medical organizations to invest even more in information technology, data management systems, and a wide range of training up and down the organizational chart. Keeping up with the needs of physicians for secure data management will be costly but critical.

Patients will feel climate change

Environmental factors affecting the air we breathe are of primary concern for patients with a broad range of cardiorespiratory conditions.2 Healthy but vulnerable infants, children, pregnant women, and the elderly may also feel the effects.3 Air pollution, increased levels of pollen and ground-level ozone, and wildfire smoke are all tied to climate change and all can have a direct impact on the patients seen by chest physicians.4 Individuals exposed to these environmental conditions may experience diminished lung function, resulting in increased hospital admissions.

Keeping up with the latest research on probable health impacts of these environmental trends will be on the agenda of most chest physicians.5 Professional societies will need to prepare to serve the educational needs of members in this regard. Continuing education content on these topics will be needed. The field will respond with new diagnostic tools and new treatments.6 Climate change may be a global-level phenomenon, but for many chest physicians, it means treating increasing numbers of patients affected by pulmonary disease.

Mind the generation gap

The population in the United States is primarily under the age of 65 years (84%), but the number of older citizens is on the rise. In 2016, there were 49.2 million people age 65 or older, and this number is projected to almost double to 98 million in 2060. The 85-and-over population is projected to more than double from 6.4 million in 2016 to 14.6 million in 2040 (a 129% increase).7
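The growth figures above can be checked with a short calculation. This is only a sketch that uses the rounded population counts quoted in the text; the helper name `percent_increase` is hypothetical. With these rounded inputs the 85-and-over increase comes out near 128%, slightly below the 129% quoted, a gap that is probably explained by the source using unrounded counts (an assumption, not something the text states).

```python
def percent_increase(start: float, end: float) -> float:
    """Return the percentage increase from start to end."""
    return (end - start) / start * 100.0


# Rounded figures quoted in the text, in millions of people.
age_65_plus = percent_increase(49.2, 98.0)  # 2016 to 2060
age_85_plus = percent_increase(6.4, 14.6)   # 2016 to 2040

print(f"65 and over: {age_65_plus:.0f}% increase")
print(f"85 and over: {age_85_plus:.0f}% increase")
```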

The medical needs of the aging population are already part of most medical institutions’ planning, but the current uncertainty in the health insurance market and the potential changes in Medicare coverage, not to mention the well-documented upcoming physician shortage, are complicating the planning process.8 Almost all acknowledge the “graying” of the population, but current approaches may not be sufficient given the projected scale of the problems, such as major increases in patients with chronic illnesses and the need for upscaling long-term geriatric care.

Planning for treating a growing elderly population shouldn’t mean ignoring some trends appearing among the younger population. E-cigarette use among middle- and high-school students may be creating millions of future patients with lung damage and nicotine addictions.9 Government intervention in this smoking epidemic is lagging behind the rapid spread of this unhealthy habit among young people.10 In 2019, health coverage of adults has begun to decline again after a decade of gains, and the possibility of this becoming a long-term trend has to be considered in planning for their treatment.11,12

References

1. Statista. “Affordable Care Act - Statistics & Facts,” Matej Mikulic. 2019 Aug 14.

2. American Public Health Association and Centers for Disease Control and Prevention (2018). Climate Change Decreases the Quality of the Air We Breathe. Accessed 2019 Oct 7.

3. JAMA. 2019 Aug 13;322(6):546-56. doi: 10.1001/jama.2019.10255.

4. Lelieveld J et al. Cardiovascular disease burden from ambient air pollution in Europe reassessed using novel hazard ratio functions. Eur Heart J. 2019;40(20):1590-6. doi: 10.1093/eurheartj/ehz135

5. European Respiratory Society. CME Online: Air pollution and respiratory health. 2018 Jun 21.

6. Environmental Protection Agency. “Particle Pollution and Your Patients’ Health,” Continuing Education for Particle Pollution Course. 2019 May 13.

7. U.S. Department of Health & Human Services. Administration on Aging. 2017 Profile of Older Americans. Accessed 2019 Oct 7.

8. Association of American Medical Colleges. Complexities of Physician Supply and Demand: Projection from 2017 to 2032. 2019 Apr.

9. Miech R et al. Trends in Adolescent Vaping, 2017-2019. N Engl J Med. 2019 Sep 18. doi: 10.1056/NEJMc1910739

10. Ned Sharpless, MD, Food and Drug Administration Acting Commissioner. “How the FDA is Regulating E-Cigarettes,” 2019 Sep 10.

11. Congressional Budget Office. Federal Subsidies for Health Insurance Coverage for People Under Age 65: 2019 to 2029. Washington, D.C.: GPO, 2019.

12. Galewitz P. “Breaking a 10-year streak, the number of uninsured Americans rises.” Internal Medicine News, 2019 Sep 11.

Note: Background research performed by Avenue M Group.

CHEST Inspiration is a collection of programmatic initiatives developed by the American College of Chest Physicians leadership and aimed at stimulating and encouraging innovation within the association. One of the components of CHEST Inspiration is the Environmental Scan, a series of articles focusing on the internal and external environmental factors that bear on success currently and in the future. See “Envisioning the Future: The CHEST Environmental Scan,” CHEST Physician, June 2019, p. 44, for an introduction to the series.

How to carve out a career as an educator during fellowship

Editor’s Note - As CHEST has just awarded the designation of Distinguished CHEST Educator (DCE) to 173 honorees at CHEST 2019 in New Orleans, this blog reminds fellows to start early to pursue a clinician educator role throughout their career.

While fellowship training is a time to continue building the foundation of expert clinical knowledge, it also offers an opportunity to start assembling a portfolio as a clinician educator. It takes time to compile educational scholarship and to establish a reputation within the communities of both teachers and learners, so it pays to get a head start. Moreover, it also takes time to master techniques for effective teaching to become that outstanding educator that you once looked up to as a medical student or resident. Below are some things that I found helpful in jump-starting that path during fellowship training.

Find a Capable Mentor

As with any sort of career planning, mentorship is key. Mentorship can open doors to expand your network and introduce opportunities for scholarship activities. Find a mentor whose views and values align with what you feel passionate about. If you are planning on starting a scholarly project, make sure that your mentor has the background suited to help you maximize the experience and offer you the tools needed to achieve that end.

Determine What You Are Passionate About

Medical education is a vast field. Try to find something in medical education that is meaningful to you, whether it be in undergraduate medical education or graduate medical education or something else altogether. You want to be able to set yourself up for success, so the work has to be worthwhile.

Seek Out Opportunities to Teach

There are always opportunities to teach, whether that means precepting medical students on patient interviews or going over pulmonary/critical care topics at resident noon conferences. What I have found is that active participation in teaching opportunities tends to open a cascade of doors to more teaching opportunities.

Look for Opportunities to Be Involved in Educational Committees

Medical education, much like medicine, is a rapidly changing field. Leadership in medical education is always looking for resident/fellow representatives to bring new life and perspective to educational initiatives. Most of these opportunities do not require much of a time commitment, and most committees meet about once a month. Even so, they connect you with faculty in leadership who can guide you and help set you up for future success in medical education. During residency, I was able to take part in the intern curriculum committee to advise the direction of intern report. Now as a fellow, I’ve been able to meet many faculty and fellows with interests similar to mine in the CHEST Trainee Work Group.

Engage in Scholarly Activities

It is one thing to have a portfolio detailing teaching experiences, but it is another thing to have published works in the space of medical education. Publications show long-term promise as a clinician educator and leadership potential in advancing the field. It doesn’t take much to produce publications in medical education; there are always journals that look for trainees to contribute to the field, whether through an editorial, a systematic review, or innovative ideas.

About the Author

Justin K. Lui, MD, is a graduate of Boston University School of Medicine. He completed an internal medicine residency and chief residency at the University of Massachusetts Medical School. He is currently a second-year pulmonary and critical care medicine fellow at Boston University School of Medicine.

Reprinted from CHEST’s Thought Leader’s Blog, July 2019. This post is part of Our Life as a Fellow blog post series and includes “fellow life lessons” from current trainees in leadership with CHEST.

Meet the new CHEST® journal Deputy Editors

Christopher L. Carroll, MD, MS, FCCP

Dr. Carroll is a pediatric critical care physician at Connecticut Children’s Medical Center and a Professor of Pediatrics at the University of Connecticut. Dr. Carroll has a long-standing interest in social media and its use in academic medicine and medical education. He was an early adopter of social media in pulmonary and critical care medicine and researches the use of social media in academic medicine. Dr. Carroll has served on numerous committees within CHEST, including most recently as Trustee of the CHEST Foundation and Chair of the Critical Care NetWork. Before being appointed Deputy Editor for Web and Multimedia for the journal CHEST, Dr. Carroll served as Social Media Section Editor from 2012 to 2018, and then as Web and Multimedia Editor for the journal. He also co-chairs the Social Media Workgroup for CHEST. When not working or tweeting, Dr. Carroll can be found camping with his Boy Scout troop and parenting three amazingly nerdy and talented children who are fortunate to take after their grandparents.

Darcy D. Marciniuk, MD, FCCP

Dr. Marciniuk is a Professor of Respirology, Critical Care, and Sleep Medicine, and Associate Vice-President Research at the University of Saskatchewan, Saskatoon, SK, Canada. He is recognized internationally as an expert and leader in clinical exercise physiology, COPD, and pulmonary rehabilitation. Dr. Marciniuk is a Past President of CHEST and served as a founding Steering Committee member of Canada’s National Lung Health Framework, member and Chair of the Royal College of Physicians and Surgeons of Canada Respirology Examination Board, President of the Canadian Thoracic Society (CTS), and Co-Chair of the 2016 CHEST World Congress and 2005 CHEST Annual Meeting. He was the lead author of three COPD clinical practice guidelines, a panel member of international clinical practice guidelines in COPD, cardiopulmonary exercise testing, and pulmonary rehabilitation, and was a co-author of the published joint Canadian Thoracic Society/CHEST clinical practice guideline on preventing acute exacerbations of COPD.

Susan Murin, MD, MSc, MBA, FCCP

Dr. Murin is currently serving as Vice-Dean for Clinical Affairs and Executive Director of the UC Davis Practice Management Group. She previously served as Program Director for the Pulmonary and Critical Care fellowship, Chief of the Division of Pulmonary, Critical Care and Sleep Medicine, and Vice-Chair for Clinical Affairs at UC Davis. Her past national service has included membership on the ACGME’s Internal Medicine RRC, Chair of the Pulmonary Medicine test-writing committee for the ABIM, and Chair of the Association of Pulmonary and Critical Care Medicine Program Directors. She has a long history of service to the college in a variety of roles and served as an Associate Editor of the CHEST journal for 14 years. Dr. Murin’s research has been focused in two areas: epidemiology of venous thromboembolism and the effects of smoking on the natural history of breast cancer. She remains active in clinical care and teaching at both UC Davis and the Northern California VA. When not working, she enjoys spending time with her three grown children, scuba diving, and tennis.

CPAP vs noninvasive ventilation for obesity hypoventilation syndrome

The conventional approach to treating hypoventilation has been noninvasive ventilation (NIV). Continuous positive airway pressure (CPAP) does not augment alveolar ventilation, but it improves gas exchange by maintaining upper airway patency and increasing functional residual capacity (FRC). To understand this rationale, it is important to first review the pathophysiology of obesity hypoventilation syndrome (OHS).

The hallmark of OHS is a resting, awake, daytime arterial PaCO2 of 45 mm Hg or greater in an obese patient (BMI > 30 kg/m2) in the absence of any other identifiable cause. To recognize why some, but not all, obese subjects develop OHS, it is important to understand the different components of pathophysiology that contribute to hypoventilation: (1) obesity-related reduction in functional residual capacity (FRC) and lung compliance with resultant increase in work of breathing; (2) central hypoventilation related to leptin resistance and reduction in respiratory drive with REM hypoventilation; and (3) upper airway obstruction caused by upper airway fat deposition, which, along with low FRC, contributes to pharyngeal airway narrowing and increased airway collapsibility (Masa JF, et al. Eur Respir Rev. 2019;28:180097).

CPAP vs NIV for OHS

Let us examine some of the studies that have compared the short-term efficacy of CPAP vs NIV in patients with OHS. In a small randomized controlled trial (RCT), the effectiveness of CPAP and NIV was compared in 36 patients with OHS (Piper AJ, et al. Thorax. 2008;63:395). Reduction in PaCO2 at 3 months was similar between the two groups. However, patients with persistent nocturnal desaturation despite optimal CPAP were excluded from the study. In another RCT of 60 patients with OHS who were either in stable condition or enrolled after an episode of acute-on-chronic hypercapnic respiratory failure, CPAP and NIV showed similar improvements at 3 months in daytime PaCO2, quality of life, and sleep parameters (Howard ME, et al. Thorax. 2017;72:437).

In one of the largest randomized controlled trials, the Spanish Pickwick study randomized 221 patients with OHS and an apnea-hypopnea index (AHI) >30/h to NIV, CPAP, or lifestyle modification (Masa JF, et al. Am J Respir Crit Care Med. 2015;192:86). PAP therapy consisted of either CPAP or NIV, the latter delivered as bilevel PAP titrated in the lab to a target tidal volume of 5-6 mL/kg of actual body weight. Lifestyle modification served as the control group. The primary outcome was the change in PaCO2 at 2 months. Secondary outcomes were symptoms, health-related quality of life (HRQOL), polysomnographic parameters, spirometry, and 6-min walk distance (6 MWD). Mean AHI was 69/h, and mean PAP settings for NIV and CPAP were 20/7.7 cm H2O and 11 cm H2O, respectively. NIV provided the greatest improvement in PaCO2 and serum HCO3 as compared with the control group but not relative to the CPAP group. CPAP improved PaCO2 as compared with the control group only after adjustment for PAP use. Spirometry, 6 MWD, and some HRQOL measures improved slightly more with NIV than with CPAP. Improvement in symptoms and polysomnographic parameters was similar between the two groups.

In another related study by the same group (Masa JF, et al. Thorax. 2016;71:899), 86 patients with OHS and mild OSA (AHI <30/h) were randomized to NIV or lifestyle modification. Mean AHI was 14/h and mean baseline PaCO2 was 49 ± 4 mm Hg. The NIV group, with mean PAP adherence of 6 hours, showed greater improvement in PaCO2 as compared with lifestyle modification (6 vs 2.8 mm Hg). The authors concluded that NIV was better than lifestyle modification in patients with OHS and mild OSA.

To determine the long-term clinical effectiveness of CPAP vs NIV, patients in the Pickwick study who were initially assigned to either the CPAP or the NIV treatment group were continued on their respective treatments, while subjects in the control group were again randomized at 2 months to either CPAP or NIV (Masa JF, et al. Lancet. 2019;393:1721). All subjects (CPAP n=107; NIV n=97) were followed for a minimum of 3 years. CPAP and NIV settings (pressure-targeted to desired tidal volume) were determined by in-lab titration without a transcutaneous CO2 monitor, and by daytime adjustment of PAP to improve oxygen saturation. The primary outcome was the number of hospitalization days per year. Mean CPAP was 10.7 cm H2O pressure and NIV 19.7/8.18 cm H2O pressure with an average respiratory rate of 14/min. Median PAP use and the proportion of patients with adherence >4 h were similar between the two groups (CPAP 6.0 h, adherence >4 h 67% vs NIV 6.0 h, adherence >4 h 61%). Median duration of follow-up was 5.44 years (IQR 4.45-6.37 years) for both groups. Mean hospitalization days per patient-year were similar between the two groups (CPAP 1.63 vs NIV 1.44 days; adj RR 0.78, 95% CI 0.34-1.77; p=0.561). Overall mortality, adverse cardiovascular events, and arterial blood gas parameters were similar between the two groups, suggesting equal efficacy of CPAP and NIV in this group of stable patients with OHS with an AHI >30/h. Given the low complexity and cost of CPAP vs NIV, the authors concluded that CPAP may be the preferred PAP treatment modality until more studies are available.

An accompanying editorial (Murphy PB, et al. Lancet. 2019;393:1674) noted that the study was powered for superiority of NIV (a 20% reduction in hospitalization compared with CPAP) rather than for noninferiority, and that superiority could not be shown because of the low event rate for hospitalization (NIV 1.44 days vs CPAP 1.63 days). It is also possible that optimum NIV titration was not achieved, since transcutaneous CO2 (TCO2) monitoring was not used. Furthermore, since this study was done only in patients with OHS and AHI >30/h, these results may not be applicable to patients with OHS and AHI <30/h, who are more likely to have central hypoventilation and comorbidities; this group may benefit more from NIV than from CPAP.

Novel modes of bi-level PAP therapy

There are limited data on the use of the new bi-level PAP modalities, such as volume-targeted pressure support ventilation (PS) with fixed or auto-EPAP. The use of intelligent volume-assured pressure support ventilation (iVAPS) vs standard fixed pressure support ventilation in select OHS patients (n=18) showed equivalent control of chronic respiratory failure with no worsening of sleep quality and better PAP adherence (Kelly JL, et al. Respirology. 2014;19:596). In another small randomized, double-blind, crossover study, done on two consecutive nights in 11 patients with OHS, the use of auto-adjusting EPAP was noninferior to fixed EPAP (10.8 cm vs 11.8 cm H2O pressure), with no differences in sleep quality and patient preference (McArdle N. Sleep. 2017;40:1). Although the data are limited, these small studies suggest the use of new PAP modalities, such as variable PS to deliver target volumes and auto EPAP could offer the potential to initiate bi-level PAP therapy in outpatients without the in-lab titration. More studies are needed before bi-level PAP therapy can be safely initiated in outpatients with OHS.

Summary

In summary, how can we utilize the most effective PAP therapy for patients with OHS? Can we use a phenotype-dependent approach to PAP treatment options? The answer is probably yes. The recently published ATS Clinical Practice Guideline (Am J Respir Crit Care Med. 2019;200:e6-e24) suggests PAP therapy, rather than no PAP therapy, for stable ambulatory patients with OHS, and suggests that patients with OHS and AHI >30/h (approximately 70% of OHS patients) can be initially started on CPAP instead of NIV. Patients who have persistent nocturnal desaturation despite optimum CPAP can be switched to NIV. On the other hand, data are limited on the use of CPAP in patients with OHS and AHI <30/h, and these patients can be started on NIV. PAP adherence of more than 5 to 6 h and weight loss through a multidisciplinary approach should be encouraged for all patients with OHS.
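For readers who find it easier to follow the phenotype-dependent approach as explicit branching, the summary above can be sketched in a few lines of Python. This is only an illustration of the logic as described in this article, not a clinical tool or part of the guideline. The names `OHSPatient` and `suggest_initial_pap` are hypothetical, and the handling of an AHI of exactly 30/h is an assumption, since the text only addresses values above and below that cut-off.

```python
from dataclasses import dataclass

# Cut-off (events/h) used in the trials and guideline summarized above.
AHI_CUTOFF = 30.0


@dataclass
class OHSPatient:
    """Hypothetical minimal record for a patient with OHS."""
    ahi: float                                   # apnea-hypopnea index, events/h
    stable_ambulatory: bool                      # the summary covers stable ambulatory patients only
    desaturation_on_optimal_cpap: bool = False   # persistent nocturnal desaturation despite optimum CPAP


def suggest_initial_pap(patient: OHSPatient) -> str:
    """Return the PAP modality suggested by the summary paragraph (illustrative only)."""
    if not patient.stable_ambulatory:
        # Acutely ill or hospitalized patients are not covered by this summary.
        return "outside scope"
    if patient.ahi > AHI_CUTOFF:
        # OHS with AHI >30/h: start with CPAP, switch to NIV if
        # nocturnal desaturation persists despite optimum CPAP.
        return "NIV" if patient.desaturation_on_optimal_cpap else "CPAP"
    # OHS with AHI <30/h: limited CPAP data, so NIV is the starting point.
    # Assumption: an AHI of exactly 30/h is grouped here.
    return "NIV"


if __name__ == "__main__":
    print(suggest_initial_pap(OHSPatient(ahi=69, stable_ambulatory=True)))
    print(suggest_initial_pap(OHSPatient(ahi=14, stable_ambulatory=True)))
```

The sketch deliberately leaves out adherence and weight loss, which the summary recommends for all patients regardless of the branch taken.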

Dr. Dewan is Professor and Program Director, Sleep Medicine; Division of Pulmonary, Critical Care and Sleep Medicine; Chief, Pulmonary Section VA Medical Center; Creighton University, Omaha, Nebraska.
