Prevalence of Low Vitamin D Levels in Patients With Orthopedic Trauma

The role of vitamin D in general health maintenance is a topic of increasing interest and importance in the medical community. Not only has vitamin D deficiency been linked to a myriad of nonorthopedic maladies, including cancer, diabetes, and cardiovascular disease, but it has also been shown to adversely affect musculoskeletal health.1 Authors have found a correlation between vitamin D deficiency and muscle weakness, fragility fractures, and, most recently, fracture nonunion.1 Despite these detrimental effects on musculoskeletal and general health, there is evidence that vitamin D deficiency is surprisingly prevalent.2 Deficiency is known to be associated with increasing age, but recent studies have also found alarming rates of deficiency in younger populations.3,4

Although the optimal serum level of 25-hydroxyvitamin D is still debated, most experts define vitamin D deficiency as a 25-hydroxyvitamin D level of 20 ng/mL or less and insufficiency as 21 to 32 ng/mL.5 Hollis and Wagner5 found increased serum parathyroid hormone levels, increased bone resorption, and impaired dietary calcium absorption when 25-hydroxyvitamin D levels were under 32 ng/mL. Given these data, a 25-hydroxyvitamin D level of 21 to 32 ng/mL (approximately 52-80 nmol/L) can be considered to indicate relative vitamin D insufficiency, and a level of 20 ng/mL or less can be considered to indicate vitamin D deficiency.
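As an illustration of these cutoffs, the following Python sketch converts a serum 25-hydroxyvitamin D level from ng/mL to nmol/L and assigns a category. It assumes the conventional conversion factor of about 2.496 nmol/L per ng/mL (based on a molar mass of roughly 400.6 g/mol for 25-hydroxyvitamin D3) and treats any value above 20 ng/mL up to 32 ng/mL as insufficient, so that values between 20 and 21 ng/mL are not left unclassified; the function names are hypothetical.

```python
# Assumed conversion factor for 25-hydroxyvitamin D: 1 ng/mL ~= 2.496 nmol/L
NMOL_PER_NG = 2.496


def ng_ml_to_nmol_l(value_ng_ml: float) -> float:
    """Convert a serum 25-hydroxyvitamin D level from ng/mL to nmol/L."""
    return value_ng_ml * NMOL_PER_NG


def classify_25ohd(value_ng_ml: float) -> str:
    """Classify a level with the cutoffs in the text.

    Assumption: anything above 20 ng/mL and up to 32 ng/mL counts as insufficient.
    """
    if value_ng_ml <= 20:
        return "deficient"
    if value_ng_ml <= 32:
        return "insufficient"
    return "sufficient"


if __name__ == "__main__":
    for level in (18.0, 21.0, 32.0, 40.0):
        nmol = ng_ml_to_nmol_l(level)
        print(f"{level:5.1f} ng/mL = {nmol:5.1f} nmol/L -> {classify_25ohd(level)}")
```

Under these assumptions, the 21 to 32 ng/mL insufficiency band corresponds to roughly 52 to 80 nmol/L.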

Vitamin D plays a vital role in bone metabolism and has been implicated in both fracture risk and fracture healing. Documenting the prevalence of vitamin D deficiency in patients with trauma is therefore the first step in raising awareness among orthopedic traumatologists and in developing a screening-and-treatment strategy for these patients. Steele and colleagues6 retrospectively studied 44 patients with high- and low-energy fractures and found an almost 60% prevalence of vitamin D insufficiency. If vitamin D insufficiency is this prevalent, treatment protocols for patients with fractures may need to be modified to include routine screening and treatment for low vitamin D levels.

After noting frequent hypovitaminosis D in our patient population (independent of age, sex, or medical comorbidities), we conducted a study to determine the prevalence of vitamin D deficiency in a large orthopedic trauma population.

Patients and Methods

After obtaining Institutional Review Board approval, we retrospectively reviewed the charts of all patients with a fracture treated by 1 of 4 orthopedic traumatologists during a 21-month period (January 1, 2009 to September 30, 2010). The primary inclusion criteria were an acute fracture and a recorded serum 25-hydroxyvitamin D level. Because of concern about vitamin D deficiency, checking serum 25-hydroxyvitamin D levels in patients with acute fractures became common protocol during the review period. Exclusion criteria were age under 18 years and the presence of risk factors for vitamin D deficiency, including renal insufficiency (creatinine level ≥2 mg/dL), malabsorption, gastrectomy, active liver disease, acute myocardial infarction, alcoholism, anorexia nervosa, and steroid dependency.
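The inclusion and exclusion criteria can be summarized as a single eligibility check. The sketch below is hypothetical: the `PatientRecord` fields and the condition labels are assumptions made for illustration and do not describe the study's actual chart-abstraction process.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Exclusion risk factors named in the text (renal insufficiency is checked via creatinine)
EXCLUDING_CONDITIONS = {
    "malabsorption",
    "gastrectomy",
    "active liver disease",
    "acute myocardial infarction",
    "alcoholism",
    "anorexia nervosa",
    "steroid dependency",
}


@dataclass
class PatientRecord:
    """Hypothetical chart fields used only for this illustration."""
    age_years: int
    acute_fracture: bool
    vitd_25oh_ng_ml: Optional[float]  # None if no 25-hydroxyvitamin D result was recorded
    creatinine_mg_dl: Optional[float] = None
    conditions: Set[str] = field(default_factory=set)


def is_eligible(p: PatientRecord) -> bool:
    """Return True if the record meets the inclusion criteria and no exclusion criterion."""
    if not p.acute_fracture or p.vitd_25oh_ng_ml is None:
        return False  # primary inclusion criteria not met
    if p.age_years < 18:
        return False  # age under 18 years
    if p.creatinine_mg_dl is not None and p.creatinine_mg_dl >= 2.0:
        return False  # renal insufficiency (creatinine >= 2 mg/dL)
    return not (p.conditions & EXCLUDING_CONDITIONS)


if __name__ == "__main__":
    cohort = [
        PatientRecord(45, True, 19.5),
        PatientRecord(17, True, 25.0),
        PatientRecord(60, True, None),
        PatientRecord(70, True, 28.0, creatinine_mg_dl=2.3),
        PatientRecord(33, True, 35.0, conditions={"alcoholism"}),
    ]
    eligible = [p for p in cohort if is_eligible(p)]
    print(f"{len(eligible)} of {len(cohort)} records eligible")
```

Keeping the criteria in one function makes it easy to see that a missing 25-hydroxyvitamin D result excludes a patient just as an exclusion risk factor does.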

During the study period, 1830 patients aged 18 years or older were treated by the 4 fellowship-trained orthopedic traumatologists. Of these patients, 889 (487 female, 402 male) met the inclusion criteria. Mean age was 53.8 years. Demographic data (age, sex, race, independent living status, comorbid medical conditions, medications) were collected from the patients’ medical records. Clinical data included mechanism of injury, fracture location and type, injury date, surgery date and surgical procedure performed (when applicable), and serum 25-hydroxyvitamin D level.

Statistical Methods

Descriptive statistics (mean, median, mode) were calculated. The χ² test was used when all cell frequencies were greater than 5, and the Fisher exact probability test was used when any cell frequency was 5 or less. Prevalence of vitamin D deficiency and insufficiency was calculated for several patient populations, and patients were analyzed by age and sex subgroups.
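The test-selection rule can be sketched in Python for a 2 × 2 table using only the standard library. This is an illustration, not the software used in the study: `chi2_2x2`, `fisher_exact_2x2`, and `compare_2x2` are hypothetical helpers, the χ² statistic is computed without a continuity correction, and the two-sided Fisher p-value sums all tables with the same margins that are no more probable than the observed one. The rule is applied to observed cell counts, as worded in the text; many references apply the cutoff of 5 to expected counts instead.

```python
from math import comb, erfc, sqrt


def chi2_2x2(a, b, c, d):
    """Pearson chi-square for the table [[a, b], [c, d]] (no continuity correction).

    Returns (statistic, p_value); with 1 degree of freedom,
    P(chi-square > x) equals erfc(sqrt(x / 2)).
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    stat = n * (a * d - b * c) ** 2 / denom
    return stat, erfc(sqrt(stat / 2))


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher exact p-value for [[a, b], [c, d]] with fixed margins."""
    row1, col1, n = a + b, a + c, a + b + c + d
    total = comb(n, row1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x
        return comb(col1, x) * comb(n - col1, row1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, row1 - (n - col1)), min(row1, col1)
    # Sum every table no more probable than the observed one;
    # the small relative tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))


def compare_2x2(a, b, c, d):
    """Apply the rule from the text: Fisher if any cell is 5 or less, else chi-square."""
    if min(a, b, c, d) <= 5:
        return "fisher", fisher_exact_2x2(a, b, c, d)
    return "chi2", chi2_2x2(a, b, c, d)[1]


if __name__ == "__main__":
    print(compare_2x2(3, 1, 1, 3))      # hypothetical small counts
    print(compare_2x2(10, 20, 30, 40))  # hypothetical larger counts
```

For example, a hypothetical table [[3, 1], [1, 3]] is routed to the Fisher exact test (two-sided P = 34/70, about .49), whereas [[10, 20], [30, 40]] is analyzed with the χ² test.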

Definitions

Vitamin D deficiency was defined as a serum 25-hydroxyvitamin D level of 20 ng/mL or less and insufficiency as 21 to 32 ng/mL.2 Because the serum test was performed independently of the investigators, using standard laboratory protocols and techniques, measurement bias is unlikely. We had intended for all patients to undergo serum testing during the review period, as that was our usual protocol. However, test results were available for only 889 (49%) of the 1830 patients with orthopedic trauma during the review period. Although false-positive results are theoretically possible, this is the largest reported series of orthopedic trauma patients, so its prevalence estimate should be more precise than those of the smaller series reported previously.

Results

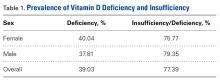

There were no statistically significant age or sex differences (at P < .05) in the prevalence of vitamin D deficiency or insufficiency in our patient population. Overall prevalence of deficiency or insufficiency was 77.39%, and prevalence of deficiency alone was 39.03% (Table 1).
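Because the reported prevalences are percentages of 889 patients, the implied patient counts can be recovered by simple arithmetic. The following sketch assumes the percentages were computed from whole-patient counts with 889 as the denominator; the counts it prints are derived here, not taken from Table 1.

```python
N = 889  # patients with a recorded 25-hydroxyvitamin D level
low_pct, deficient_pct = 77.39, 39.03  # reported percentages

# Back-calculate the whole-patient counts implied by the rounded percentages
low = round(N * low_pct / 100)  # deficient or insufficient
deficient = round(N * deficient_pct / 100)
insufficient = low - deficient  # insufficient only

for label, count in (
    ("deficient or insufficient", low),
    ("deficient", deficient),
    ("insufficient only", insufficient),
):
    print(f"{label}: {count}/{N} = {100 * count / N:.2f}%")
```

Under that assumption, the figures correspond to 688 patients with low levels, of whom 347 were deficient and 341 were insufficient only.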

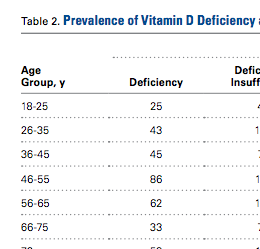

Women in the 18- to 25-year age group had a lower prevalence of deficiency (25%; P = .41) and insufficiency (41.7%; P = .16) than women in the other age groups, although neither difference was statistically significant (Table 3).

Discussion

We conducted this study to determine the prevalence of vitamin D deficiency in a large population of patients with orthopedic trauma. Results showed that vitamin D deficiency and insufficiency were prevalent in this population, which to our knowledge is the largest orthopedic trauma population studied for vitamin D deficiency. In an 8-month study of 44 patients, Steele and colleagues6 found an overall 60% rate of deficiency or insufficiency. Their investigation is important, as it was the first of its kind to evaluate patients with various fracture types, including fractures with high-energy causes, but its numbers were small and the period evaluated (June 1, 2006 to February 1, 2007) was short. That time frame may have led to an underestimate of the prevalence of vitamin D deficiency, as vitamin D levels are higher in late summer because of increased sun exposure. Our study of 889 patients over 21 months captured seasonal variability in vitamin D levels, and we did not observe a notable difference between patients treated in winter and those treated in summer. Our 77% prevalence of low vitamin D levels (deficiency or insufficiency) and 39% prevalence of deficiency indicate how widespread low vitamin D levels are in a large Midwestern orthopedic trauma population. In the Pacific Northwest, Bee and colleagues7 studied seasonal differences in patients with surgically treated fractures and found an average difference of 3.4 ng/mL between winter and summer serum levels. The more important finding, however, is that the mean 25-hydroxyvitamin D level was under 30 ng/mL in both cohorts (26.4 ng/mL in winter vs 29.8 ng/mL in summer). Both means were in the insufficient range, which suggests that seasonal variation does little to change the prevalence of low vitamin D levels.
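The period lengths and seasonal figures cited in this comparison can be checked with a few lines of Python. The `calendar_months` helper is hypothetical and assumes each period starts on the first day of a month; the seasonal comparison uses the mean levels reported by Bee and colleagues7 and the 32 ng/mL upper bound of insufficiency used in this study, so it describes cohort means rather than individual patients.

```python
from datetime import date, timedelta


def calendar_months(start: date, end: date) -> int:
    """Months spanned from start (assumed first of a month) to end.

    An end date on the last day of a month closes that month.
    """
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if (end + timedelta(days=1)).day == 1:  # end is the last day of its month
        months += 1
    return months


# Review periods quoted in the text
present_study = calendar_months(date(2009, 1, 1), date(2010, 9, 30))
steele_study = calendar_months(date(2006, 6, 1), date(2007, 2, 1))

# Mean seasonal 25-hydroxyvitamin D levels reported by Bee and colleagues (ng/mL)
winter, summer = 26.4, 29.8
seasonal_gap = round(summer - winter, 1)
INSUFFICIENCY_UPPER = 32  # upper bound of insufficiency used in this study (ng/mL)
both_low = winter <= INSUFFICIENCY_UPPER and summer <= INSUFFICIENCY_UPPER

print(present_study, steele_study, seasonal_gap, both_low)
```

Run as written, it reports 21 and 8 months for the two review periods, a seasonal gap of 3.4 ng/mL, and confirms that both seasonal means fall at or below 32 ng/mL.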

Under current definitions, an estimated 1 billion people worldwide have vitamin D deficiency or insufficiency, with the elderly and certain ethnic populations at higher risk.8-10 Vitamin D deficiency is a common diagnosis among elderly patients with hip fractures; according to various reports, 60% to 90% of patients treated for hip fractures are deficient or insufficient in vitamin D.8,9 Hypovitaminosis D has also been noted in medical inpatients with and without risk factors for this deficiency.2 Surprisingly, low vitamin D levels are not confined to the elderly. In Massachusetts, Gordon and colleagues11 found a 52% prevalence of vitamin D deficiency in Hispanic and black adolescents. Nesby-O’Dell and colleagues10 found that 42% of 15- to 49-year-old black women in the United States had vitamin D deficiency at the end of winter. Bogunovic and colleagues12 noted a 5.5 times higher risk of low vitamin D levels in patients with darker skin tones. Although vitamin D deficiency has been linked to specific races, it frequently occurs in lower-risk populations as well. Sullivan and colleagues4 found a 48% prevalence of vitamin D deficiency in white preadolescent girls in Maine. Tangpricha and colleagues3 reported a 32% prevalence of vitamin D deficiency in otherwise fit healthcare providers sampled at a Boston hospital. Bogunovic and colleagues12 also showed that patients aged 18 to 50 years, and men, were more likely to have low vitamin D levels.

Establishing the prevalence of hypovitaminosis D in patients with orthopedic trauma is needed to raise awareness of the condition and to guide changes in screening and treatment protocols. Brinker and O’Connor13 found vitamin D deficiency in 68% of patients with fracture nonunions, which suggests that hypovitaminosis D may partly account for difficulty in achieving fracture union. Bogunovic and colleagues12 found vitamin D insufficiency in 43% of 723 patients who underwent orthopedic surgery; among the 121 patients on the trauma service, the prevalence of low vitamin D levels was 66%. Our 77% prevalence of low vitamin D levels in 889 patients adds to the evidence that low levels are common in patients with orthopedic trauma. Recognizing and addressing vitamin D deficiency may help reduce the risk of complications, including delayed unions and nonunions, in the treatment of orthopedic trauma.

Although our study indicates an alarming prevalence of low vitamin D levels in our patient population, it does not establish a cause-and-effect link between low serum 25-hydroxyvitamin D levels and risk of fracture or nonunion. Further investigations may yield clinically relevant data linking hypovitaminosis D with fracture risk. We did not include patients with nonunion in this study, but new prospective investigations will address nonunions and include subgroup analyses by race, fracture type, management type (surgical vs nonsurgical), injury date (to determine seasonal effect), and treatment regimen.

The primary limitation of this study was its retrospective design. In addition, although we collected vitamin D data from 889 patients with acute fracture, our serum collection protocols were not standardized. Most patients who were admitted at the time of initial orthopedic consultation in the emergency department had serum 25-hydroxyvitamin D levels drawn during their hospital stay, whereas patients initially treated in an ambulatory setting may not have had levels drawn until up to 2 weeks after injury (the significance of this delay is unknown). Furthermore, test results were available for only 49% of the overall orthopedic trauma population during the review period, which could have introduced selection bias. There are multiple explanations for this low rate. As with any new protocol, it takes time for an order to become standard practice, and in the early stages clinicians can forget to request the test. In addition, the serum test was relatively new at our facility during the review period, and it was a “send-out” test, which may partly account for the inconsistency. For example, some specimens were lost, and in a number of other cases 1,25-dihydroxyvitamin D was mistakenly measured instead of 25-hydroxyvitamin D; because these values are not comparable, those patients were excluded. Nevertheless, our sample of 889 patients with acute fractures remains the largest reported in the literature (by several hundred patients).

From a practical standpoint, the present results were useful in updating our treatment protocols. We now typically treat patients prophylactically, with 50,000 IU of vitamin D2 once weekly for 8 weeks and daily vitamin D3 and calcium until fracture healing. Patients are encouraged to continue daily vitamin D and calcium supplementation after fracture healing to maintain bone health. Compliance, however, remains a challenge, and poor compliance may explain the inconsistent effect of supplementation on serum 25-hydroxyvitamin D levels.14 The only patients not given prophylactic treatment are those with a contraindication (chronic kidney disease or elevated blood calcium levels).

Vitamin D deficiency and insufficiency are prevalent in patients with orthopedic trauma. Studies are needed to further elucidate the relationship between low vitamin D levels and risk of complications. In a retrospective analysis without compliance monitoring, we have not found a direct correlation between low vitamin D levels and fracture complications.15 Our goal here was to increase orthopedic surgeons’ awareness of the problem and of the need to consider addressing low serum vitamin D levels, as treatment is low cost and low risk. If prospective studies establish a direct correlation between low serum vitamin D levels and complications, the ultimate goal will be to develop treatment strategies that effectively lower the prevalence of low vitamin D levels.

Am J Orthop. 2016;45(7):E522-E526. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.

1. Zaidi SA, Singh G, Owojori O, et al. Vitamin D deficiency in medical inpatients: a retrospective study of implications of untreated versus treated deficiency. Nutr Metab Insights. 2016;9:65-69.

2. Thomas MK, Lloyd-Jones DM, Thadhani RI, et al. Hypovitaminosis D in medical inpatients. N Engl J Med. 1998;338(12):777-783.

3. Tangpricha V, Pearce EN, Chen TC, Holick MF. Vitamin D insufficiency among free-living healthy young adults. Am J Med. 2002;112(8):659-662.

4. Sullivan SS, Rosen CJ, Halteman WA, Chen TC, Holick MF. Adolescent girls in Maine are at risk for vitamin D insufficiency. J Am Diet Assoc. 2005;105(6):971-974.

5. Hollis BW, Wagner CL. Normal serum vitamin D levels. N Engl J Med. 2005;352(5):515-516.

6. Steele B, Serota A, Helfet DL, Peterson M, Lyman S, Lane JM. Vitamin D deficiency: a common occurrence in both high- and low-energy fractures. HSS J. 2008;4(2):143-148.

7. Bee CR, Sheerin DV, Wuest TK, Fitzpatrick DC. Serum vitamin D levels in orthopaedic trauma patients living in the northwestern United States. J Orthop Trauma. 2013;27(5):e103-e106.

8. Bischoff-Ferrari HA, Can U, Staehelin HB, et al. Severe vitamin D deficiency in Swiss hip fracture patients. Bone. 2008;42(3):597-602.

9. Pieper CF, Colon-Emeric C, Caminis J, et al. Distribution and correlates of serum 25-hydroxyvitamin D levels in a sample of patients with hip fracture. Am J Geriatr Pharmacother. 2007;5(4):335-340.

10. Nesby-O’Dell S, Scanlon KS, Cogswell ME, et al. Hypovitaminosis D prevalence and determinants among African American and white women of reproductive age: third National Health and Nutrition Examination Survey, 1988–1994. Am J Clin Nutr. 2002;76(1):187-192.

11. Gordon CM, DePeter KC, Feldman HA, Grace E, Emans SJ. Prevalence of vitamin D deficiency among healthy adolescents. Arch Pediatr Adolesc Med. 2004;158(6):531-537.

12. Bogunovic L, Kim AD, Beamer BS, Nguyen J, Lane JM. Hypovitaminosis D in patients scheduled to undergo orthopaedic surgery: a single-center analysis. J Bone Joint Surg Am. 2010;92(13):2300-2304.

13. Brinker MR, O’Connor DP. Outcomes of tibial nonunion in older adults following treatment using the Ilizarov method. J Orthop Trauma. 2007;21(9):634-642.

14. Robertson DS, Jenkins T, Murtha YM, et al. Effectiveness of vitamin D therapy in orthopaedic trauma patients. J Orthop Trauma. 2015;29(11):e451-e453.

15. Bodendorfer BM, Cook JL, Robertson DS, et al. Do 25-hydroxyvitamin D levels correlate with fracture complications? J Orthop Trauma. 2016;30(9):e312-e317.

The role of vitamin D in general health maintenance is a topic of increasing interest and importance in the medical community. Not only has vitamin D deficiency been linked to a myriad of nonorthopedic maladies, including cancer, diabetes, and cardiovascular disease, but it has demonstrated an adverse effect on musculoskeletal health.1 Authors have found a correlation between vitamin D deficiency and muscle weakness, fragility fractures, and, most recently, fracture nonunion.1 Despite the detrimental effects of vitamin D deficiency on musculoskeletal and general health, evidence exists that vitamin D deficiency is surprisingly prevalent.2 This deficiency is known to be associated with increasing age, but recent studies have also found alarming rates of deficiency in younger populations.3,4

Although there has been some discussion regarding optimal serum levels of 25-hydroxyvitamin D, most experts have defined vitamin D deficiency as a 25-hydroxyvitamin D level of 20 ng/mL or less and insufficiency as 21 to 32 ng/mL.5 Hollis and Wagner5 found increased serum parathyroid hormone and bone resorption and impaired dietary absorption of calcium when 25-hydroxyvitamin D levels were under 32 ng/mL. Given these data, a 25-hydroxyvitamin D level of 21 to 32 ng/mL (52-72 nmol/L) can be considered as indicating a relative insufficiency of vitamin D, and a level of 20 ng/mL or less can be considered as indicating vitamin D deficiency.

Vitamin D plays a vital role in bone metabolism and has been implicated in increased fracture risk and in fracture healing ability. Therefore, documenting the prevalence of vitamin D deficiency in patients with trauma is the first step in raising awareness among orthopedic traumatologists and further developing a screening-and-treatment strategy for vitamin D deficiency in these patients. Steele and colleagues6 retrospectively studied 44 patients with high- and low-energy fractures and found an almost 60% prevalence of vitamin D insufficiency. If vitamin D insufficiency is this prevalent, treatment protocols for patients with fractures may require modifications that include routine screening and treatment for low vitamin D levels.

After noting a regular occurrence of hypovitaminosis D in our patient population (independent of age, sex, or medical comorbidities), we conducted a study to determine the prevalence of vitamin D deficiency in a large orthopedic trauma population.

Patients and Methods

After obtaining Institutional Review Board approval for this study, we retrospectively reviewed the charts of all patients with a fracture treated by 1 of 4 orthopedic traumatologists within a 21-month period (January 1, 2009 to September 30, 2010). Acute fracture and recorded 25-hydroxyvitamin D level were the primary criteria for study inclusion. Given the concern about vitamin D deficiency, it became common protocol to check the serum 25-hydroxyvitamin D levels of patients with acute fractures during the review period. Exclusion criteria were age under 18 years and presence of vitamin D deficiency risk factors, including renal insufficiency (creatinine level, ≥2 mg/dL), malabsorption, gastrectomy, active liver disease, acute myocardial infarction, alcoholism, anorexia nervosa, and steroid dependency.

During the period studied, 1830 patients over age 18 years were treated by 4 fellowship-trained orthopedic traumatologists. Of these patients, 889 (487 female, 402 male) met the inclusion criteria. Mean age was 53.8 years. Demographic data (age, sex, race, independent living status, comorbid medical conditions, medications) were collected from the patients’ medical records. Clinical data collected were mechanism of injury, fracture location and type, injury date, surgery date and surgical procedure performed (when applicable), and serum 25-hydroxyvitamin D levels.

Statistical Methods

Descriptive statistics (mean, median, mode) were calculated. The χ2 test was used when all cell frequencies were more than 5, and the Fisher exact probability test was used when any cell frequency was 5 or less. Prevalence of vitamin D deficiency and insufficiency was calculated in multiple patient populations. Patients were analyzed according to age and sex subgroups.

Definitions

Vitamin D deficiency was defined as a serum 25-hydroxyvitamin D level of 20 ng/mL or less and insufficiency as 21 to 32 ng/mL.2 As the serum test was performed independent of the investigators and with use of standard medical laboratory protocols and techniques, there should be no bias in the results. We had intended to have all patients undergo serum testing during the review period because that was our usual protocol. However, test results were available for only 889 (49%) of the 1830 patients with orthopedic trauma during the review period. Although a false-positive is theoretically possible, this series of orthopedic trauma patients is the largest in the literature and therefore should be more accurate than the previously reported small series.

Results

There were no significant (P < .05) age or sex differences in prevalence of vitamin D deficiency or insufficiency in our patient population. Overall prevalence of deficiency/insufficiency was 77.39%, and prevalence of deficiency alone was 39.03% (Table 1).

Women in the 18- to 25-year age group had a lower prevalence of deficiency (25%; P = .41) and insufficiency (41.7%; P = .16) than women in the other age groups (Table 3).

Discussion

We conducted this study to determine the prevalence of vitamin D deficiency in a large population of patients with orthopedic trauma. Results showed that vitamin D deficiency and insufficiency were prevalent in this population, which to our knowledge is the largest studied for vitamin D deficiency. In a 6-month study of 44 fractures, Steele and colleagues6 found an overall 60% rate of deficiency/insufficiency. Although their investigation is important—it was the first of its kind to evaluate patients with various fracture types, including those with high-energy causes—its numbers were small, and the period evaluated (June 1, 2006 to February 1, 2007) was short (8 months). Use of that time frame may have led to an underestimate of the prevalence of vitamin D deficiency, as vitamin D levels are higher in late summer because of increased sun exposure. Our study of 889 patients over 21 months allowed for seasonal variability of vitamin D levels. We did not notice a specific difference in patients who were treated during winter vs summer. Furthermore, our 77% prevalence of vitamin D insufficiency and 39% prevalence of vitamin D deficiency indicate how widespread low vitamin D levels are in a large Midwestern orthopedic trauma population. In the Pacific Northwest, Bee and colleagues7 studied seasonal differences in patients with surgically treated fractures and found an average difference of 3 ng/mL between winter and summer serum levels. However, the real issue, which should not be overlooked, is that the average 25-hydroxyvitamin D level was under 30 ng/mL in both cohorts (26.4 ng/mL in winter vs 29.8 ng/mL in summer). The emphasis should be that both levels were insufficient and that seasonal variance does not really change prevalence.

With use of the current definitions, it has been estimated that 1 billion people worldwide have vitamin D deficiency or insufficiency, with the elderly and certain ethnic populations at higher risk.8-10Vitamin D deficiency is a common diagnosis among elderly patients with hip fractures. According to various reports, 60% to 90% of patients treated for hip fractures are deficient or insufficient in vitamin D.8,9Hypovitaminosis D has also been noted in medical inpatients with and without risks for this deficiency.2 Surprisingly, low vitamin D levels are not isolated to the elderly. In Massachusetts, Gordon and colleagues11 found a 52% prevalence of vitamin D deficiency in Hispanic and black adolescents. Nesby-O’Dell and colleagues10 found that 42% of 15- to 49-year-old black women in the United States had vitamin D deficiency at the end of winter. Bogunovic and colleagues12 noted 5.5 times higher risk of low vitamin D levels in patients with darker skin tones. Although vitamin D deficiency has been linked to specific races, it frequently occurs in lower-risk populations as well. Sullivan and colleagues4 found a 48% prevalence of vitamin D deficiency in white preadolescent girls in Maine. Tangpricha and colleagues3 reported a 32% prevalence of vitamin D deficiency in otherwise fit healthcare providers sampled at a Boston hospital. Bogunovic and colleagues12 also showed that patients between ages 18 years and 50 years, and men, were more likely to have low vitamin D levels.

Establishing the prevalence of hypovitaminosis D in orthopedic trauma patients is needed in order to raise awareness of the disease and modify screening and treatment protocols. Brinker and O’Connor13 found vitamin D deficiency in 68% of patients with fracture nonunions, which suggests that hypovitaminosis D may partly account for difficulty in achieving fracture union. Bogunovic and colleagues12 found vitamin D insufficiency in 43% of 723 patients who underwent orthopedic surgery. Isolating the 121 patients on the trauma service revealed a 66% prevalence of low vitamin D levels. Our 77% prevalence of low vitamin D levels in 889 patients adds to the evidence that low levels are common in patients with orthopedic trauma. Understanding the importance of vitamin D deficiency can be significant in reducing the risk of complications, including delayed unions and nonunions, associated with treating orthopedic trauma cases.

Although our study indicates an alarming prevalence of insufficient vitamin D levels in our patient population, it does not provide a cause-and-effect link between low serum 25-hydroxyvitamin D levels and risk of fracture or nonunion. However, further investigations may yield clinically relevant data linking hypovitaminosis D with fracture risk. Although we did not include patients with nonunion in this study, new prospective investigations will address nonunions and subgroup analysis of race, fracture type, management type (surgical vs nonsurgical), injury date (to determine seasonal effect), and different treatment regimens.

The primary limitation of this study was its retrospective design. In addition, though we collected vitamin D data from 889 patients with acute fracture, our serum collection protocols were not standardized. Most patients who were admitted during initial orthopedic consultation in the emergency department had serum 25-hydroxyvitamin D levels drawn during their hospital stay, and patients initially treated in an ambulatory setting may not have had serum vitamin D levels drawn for up to 2 weeks after injury (the significance of this delay is unknown). Furthermore, the serum result rate for the overall orthopedic trauma population during the review period was only 49%, which could indicate selection bias. There are multiple explanations for the low rate. As with any new protocol or method, it takes time for the order to become standard practice; in the early stages, individuals can forget to ask for the test. In addition, during the review period, the serum test was also relatively new at our facility, and it was a “send-out” test, which could partly account for the lack of consistency. For example, some specimens were lost, and, in a number of other cases, excluded patients mistakenly had their 1,25-hydroxyvitamin D levels measured and were not comparable to included patients. Nevertheless, our sample of 889 patients with acute fractures remains the largest (by several hundred) reported in the literature.

From a practical standpoint, the present results were useful in updating our treatment protocols. Now we typically treat patients only prophylactically, with 50,000 units of vitamin D2 for 8 weeks and daily vitamin D3 and calcium until fracture healing. Patients are encouraged to continue daily vitamin D and calcium supplementation after fracture healing to maintain bone health. Compliance, however, remains a continued challenge and lack thereof can potentially explain the confusing effect of a supplementation protocol on the serum 25-hydroxyvitamin D level.14 The only patients who are not given prophylactic treatment are those who previously had been denied it (patients with chronic kidney disease or elevated blood calcium levels).

Vitamin D deficiency and insufficiency are prevalent in patients with orthopedic trauma. Studies are needed to further elucidate the relationship between low vitamin D levels and risk of complications. Retrospectively, without compliance monitoring, we have not seen a direct correlation with fracture complications.15 Our goal here was to increase orthopedic surgeons’ awareness of the problem and of the need to consider addressing low serum vitamin D levels. The treatment is low cost and low risk. The ultimate goal—if there is a prospective direct correlation between low serum vitamin D levels and complications—is to develop treatment strategies that can effectively lower the prevalence of low vitamin D levels.

Am J Orthop. 2016;45(7):E522-E526. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.

The role of vitamin D in general health maintenance is a topic of increasing interest and importance in the medical community. Not only has vitamin D deficiency been linked to a myriad of nonorthopedic maladies, including cancer, diabetes, and cardiovascular disease, but it has demonstrated an adverse effect on musculoskeletal health.1 Authors have found a correlation between vitamin D deficiency and muscle weakness, fragility fractures, and, most recently, fracture nonunion.1 Despite the detrimental effects of vitamin D deficiency on musculoskeletal and general health, evidence exists that vitamin D deficiency is surprisingly prevalent.2 This deficiency is known to be associated with increasing age, but recent studies have also found alarming rates of deficiency in younger populations.3,4

Although there has been some discussion regarding optimal serum levels of 25-hydroxyvitamin D, most experts have defined vitamin D deficiency as a 25-hydroxyvitamin D level of 20 ng/mL or less and insufficiency as 21 to 32 ng/mL.5 Hollis and Wagner5 found increased serum parathyroid hormone and bone resorption and impaired dietary absorption of calcium when 25-hydroxyvitamin D levels were under 32 ng/mL. Given these data, a 25-hydroxyvitamin D level of 21 to 32 ng/mL (52-72 nmol/L) can be considered as indicating a relative insufficiency of vitamin D, and a level of 20 ng/mL or less can be considered as indicating vitamin D deficiency.

Vitamin D plays a vital role in bone metabolism and has been implicated in increased fracture risk and in fracture healing ability. Therefore, documenting the prevalence of vitamin D deficiency in patients with trauma is the first step in raising awareness among orthopedic traumatologists and further developing a screening-and-treatment strategy for vitamin D deficiency in these patients. Steele and colleagues6 retrospectively studied 44 patients with high- and low-energy fractures and found an almost 60% prevalence of vitamin D insufficiency. If vitamin D insufficiency is this prevalent, treatment protocols for patients with fractures may require modifications that include routine screening and treatment for low vitamin D levels.

After noting a regular occurrence of hypovitaminosis D in our patient population (independent of age, sex, or medical comorbidities), we conducted a study to determine the prevalence of vitamin D deficiency in a large orthopedic trauma population.

Patients and Methods

After obtaining Institutional Review Board approval for this study, we retrospectively reviewed the charts of all patients with a fracture treated by 1 of 4 orthopedic traumatologists within a 21-month period (January 1, 2009 to September 30, 2010). Acute fracture and recorded 25-hydroxyvitamin D level were the primary criteria for study inclusion. Given the concern about vitamin D deficiency, it became common protocol to check the serum 25-hydroxyvitamin D levels of patients with acute fractures during the review period. Exclusion criteria were age under 18 years and presence of vitamin D deficiency risk factors, including renal insufficiency (creatinine level, ≥2 mg/dL), malabsorption, gastrectomy, active liver disease, acute myocardial infarction, alcoholism, anorexia nervosa, and steroid dependency.

During the review period, 1830 patients aged 18 years or older were treated by the 4 fellowship-trained orthopedic traumatologists. Of these patients, 889 (487 female, 402 male) met the inclusion criteria. Mean age was 53.8 years. Demographic data (age, sex, race, independent living status, comorbid medical conditions, and medications) were collected from the patients’ medical records. Clinical data collected were mechanism of injury, fracture location and type, injury date, surgery date and surgical procedure performed (when applicable), and serum 25-hydroxyvitamin D level.

Statistical Methods

Descriptive statistics (mean, median, mode) were calculated. The χ² test was used when all cell frequencies were greater than 5, and the Fisher exact test was used when any cell frequency was 5 or less. The prevalence of vitamin D deficiency and insufficiency was calculated for the overall population and for subgroups defined by age and sex.
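The test-selection rule can be sketched for a 2 × 2 table (for example, deficient vs not deficient, by sex) using only the Python standard library. This is an illustration under stated assumptions, not the software used in the study: the rule is applied to observed cell counts as the text describes (many analysts apply it to expected counts instead), the χ² statistic is computed without a continuity correction, and the function names are hypothetical.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test (no continuity correction) for a 2x2 table of counts."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    return chi2, math.erfc(math.sqrt(chi2 / 2))


def fisher_exact_2x2(table):
    """Two-sided Fisher exact test: sum the hypergeometric probabilities of all
    tables with the same margins that are no more likely than the observed one."""
    (a, b), (c, d) = table
    r1, r2, c1 = a + b, c + d, a + c
    n = r1 + r2

    def prob(x):
        # Probability of x in the top-left cell given fixed row and column totals.
        return math.comb(r1, x) * math.comb(r2, c1 - x) / math.comb(n, c1)

    p_observed = prob(a)
    lo, hi = max(0, c1 - r2), min(r1, c1)
    # Small relative tolerance so tables tied with the observed one are counted.
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_observed * (1 + 1e-7))


def compare_groups(table):
    """Rule from the text: Fisher exact test if any cell count is 5 or less, else chi-square."""
    if min(count for row in table for count in row) <= 5:
        return "fisher", fisher_exact_2x2(table)
    return "chi2", chi_square_2x2(table)[1]
```

If SciPy is available, `scipy.stats.fisher_exact` and `scipy.stats.chi2_contingency` with `correction=False` should give matching P values for a 2 × 2 table; note that `chi2_contingency` applies Yates' continuity correction to 2 × 2 tables by default.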

Definitions

Vitamin D deficiency was defined as a serum 25-hydroxyvitamin D level of 20 ng/mL or less and insufficiency as a level of 21 to 32 ng/mL.2 Because serum testing was performed independently of the investigators using standard medical laboratory protocols and techniques, measurement bias should be minimal. Our usual protocol called for serum testing in all patients during the review period; however, test results were available for only 889 (49%) of the 1830 patients with orthopedic trauma. Although false-positive results are theoretically possible, this series of orthopedic trauma patients is the largest in the literature and therefore should be more accurate than the smaller series previously reported.

Results

With significance set at P < .05, there were no significant age or sex differences in the prevalence of vitamin D deficiency or insufficiency in our patient population. The overall prevalence of deficiency or insufficiency was 77.39%, and the prevalence of deficiency alone was 39.03% (Table 1).
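The reported percentages can be reproduced with simple arithmetic. The sketch below is illustrative only: the counts of 688 (deficient or insufficient) and 347 (deficient) are back-calculated from the reported 77.39% and 39.03% of 889 patients and do not appear in the source, and the insufficiency-only figure is derived by subtraction.

```python
def prevalence_pct(cases: int, total: int) -> float:
    """Prevalence as a percentage, rounded to two decimals as reported in the text."""
    return round(100 * cases / total, 2)


# Counts back-calculated from the reported percentages (assumption): 688 and 347
# are the only whole numbers out of 889 that round to 77.39% and 39.03%.
N_INCLUDED = 889   # patients meeting the inclusion criteria
N_LOW = 688        # deficient or insufficient (<= 32 ng/mL)
N_DEFICIENT = 347  # deficient (<= 20 ng/mL)
N_TREATED = 1830   # adult patients treated during the review period

overall_low = prevalence_pct(N_LOW, N_INCLUDED)
deficient_only = prevalence_pct(N_DEFICIENT, N_INCLUDED)
insufficient_only = prevalence_pct(N_LOW - N_DEFICIENT, N_INCLUDED)  # derived by subtraction
result_rate = prevalence_pct(N_INCLUDED, N_TREATED)  # share of the 1830 patients included

print(overall_low, deficient_only, insufficient_only, result_rate)
```

This prints 77.39 39.03 38.36 48.58; the last value is consistent with the 49% result rate reported in the Definitions section.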

Women in the 18- to 25-year age group had a lower prevalence of deficiency (25%; P = .41) and insufficiency (41.7%; P = .16) than women in the other age groups, although neither difference was statistically significant (Table 3).

Discussion

We conducted this study to determine the prevalence of vitamin D deficiency in a large population of patients with orthopedic trauma. Vitamin D deficiency and insufficiency were prevalent in this population, which to our knowledge is the largest studied for vitamin D deficiency. In an 8-month study of 44 patients with fractures, Steele and colleagues6 found an overall deficiency/insufficiency rate of 60%. Their investigation was important, as it was the first of its kind to evaluate patients with various fracture types, including those with high-energy mechanisms, but its sample was small and its study period (June 1, 2006 to February 1, 2007) was short. That time frame may have led to an underestimate of the prevalence of vitamin D deficiency, as vitamin D levels are higher in late summer because of increased sun exposure. Our study of 889 patients over 21 months allowed for seasonal variability in vitamin D levels, and we did not notice a specific difference between patients treated in winter and those treated in summer. Our 77% prevalence of vitamin D deficiency or insufficiency and 39% prevalence of deficiency alone indicate how widespread low vitamin D levels are in a large Midwestern orthopedic trauma population. In the Pacific Northwest, Bee and colleagues7 studied seasonal differences in patients with surgically treated fractures and found an average difference of 3 ng/mL between winter and summer serum levels. More important, however, the average 25-hydroxyvitamin D level was under 30 ng/mL in both cohorts (26.4 ng/mL in winter vs 29.8 ng/mL in summer). Both mean levels were insufficient, and seasonal variation did not substantially change the prevalence of low levels.

With use of the current definitions, an estimated 1 billion people worldwide have vitamin D deficiency or insufficiency, with the elderly and certain ethnic populations at higher risk.8-10 Vitamin D deficiency is a common diagnosis among elderly patients with hip fractures; according to various reports, 60% to 90% of patients treated for hip fractures are deficient or insufficient in vitamin D.8,9 Hypovitaminosis D has also been noted in medical inpatients with and without risk factors for this deficiency.2 Surprisingly, low vitamin D levels are not confined to the elderly. In Massachusetts, Gordon and colleagues11 found a 52% prevalence of vitamin D deficiency in Hispanic and black adolescents. Nesby-O’Dell and colleagues10 found that 42% of 15- to 49-year-old black women in the United States had vitamin D deficiency at the end of winter. Bogunovic and colleagues12 noted a 5.5-times higher risk of low vitamin D levels in patients with darker skin tones. Although vitamin D deficiency has been linked to specific races, it frequently occurs in lower-risk populations as well. Sullivan and colleagues4 found a 48% prevalence of vitamin D deficiency in white preadolescent girls in Maine. Tangpricha and colleagues3 reported a 32% prevalence of vitamin D deficiency in otherwise fit healthcare providers sampled at a Boston hospital. Bogunovic and colleagues12 also found that men and patients aged 18 to 50 years were more likely to have low vitamin D levels.

Establishing the prevalence of hypovitaminosis D in patients with orthopedic trauma is needed to raise awareness of the problem and to modify screening and treatment protocols. Brinker and O’Connor13 found vitamin D deficiency in 68% of patients with fracture nonunions, which suggests that hypovitaminosis D may partly account for difficulty in achieving fracture union. Bogunovic and colleagues12 found vitamin D insufficiency in 43% of 723 patients who underwent orthopedic surgery; among the 121 patients on the trauma service, the prevalence of low vitamin D levels was 66%. Our 77% prevalence of low vitamin D levels in 889 patients adds to the evidence that low levels are common in patients with orthopedic trauma. Recognizing and addressing vitamin D deficiency may help reduce the risk of complications, including delayed union and nonunion, in the treatment of orthopedic trauma.

Although our study indicates an alarming prevalence of insufficient vitamin D levels in our patient population, it does not establish a cause-and-effect link between low serum 25-hydroxyvitamin D levels and risk of fracture or nonunion. Further investigations may yield clinically relevant data linking hypovitaminosis D with fracture risk. Although we did not include patients with nonunion in this study, new prospective investigations will address nonunion and will include subgroup analyses by race, fracture type, management type (surgical vs nonsurgical), injury date (to determine seasonal effect), and treatment regimen.

The primary limitation of this study was its retrospective design. In addition, although we collected vitamin D data from 889 patients with acute fracture, our serum collection protocols were not standardized. Most patients who were admitted at the time of initial orthopedic consultation in the emergency department had serum 25-hydroxyvitamin D levels drawn during their hospital stay, whereas patients initially treated in an ambulatory setting may not have had levels drawn for up to 2 weeks after injury (the significance of this delay is unknown). Furthermore, serum results were available for only 49% of the overall orthopedic trauma population during the review period, which could indicate selection bias. There are multiple explanations for this low rate. As with any new protocol, it takes time for an order to become standard practice, and in the early stages clinicians can forget to request the test. In addition, during the review period the serum test was relatively new at our facility and was a “send-out” test, which could partly account for the inconsistency. For example, some specimens were lost, and in a number of other cases 1,25-dihydroxyvitamin D was mistakenly measured instead of 25-hydroxyvitamin D; these patients were excluded because their results were not comparable to those of included patients. Nevertheless, our sample of 889 patients with acute fractures remains the largest (by several hundred) reported in the literature.

From a practical standpoint, these results were useful in updating our treatment protocols. We now typically treat patients prophylactically, with 50,000 units of vitamin D2 for 8 weeks and daily vitamin D3 and calcium until fracture healing. Patients are encouraged to continue daily vitamin D and calcium supplementation after fracture healing to maintain bone health. Compliance, however, remains a challenge, and noncompliance may explain the inconsistent effect of a supplementation protocol on the serum 25-hydroxyvitamin D level.14 The only patients not given prophylactic treatment are those for whom it is contraindicated (patients with chronic kidney disease or elevated blood calcium levels).

Vitamin D deficiency and insufficiency are prevalent in patients with orthopedic trauma. Further studies are needed to elucidate the relationship between low vitamin D levels and risk of complications. In a retrospective analysis without compliance monitoring, we did not find a direct correlation between 25-hydroxyvitamin D levels and fracture complications.15 Our goal here was to increase orthopedic surgeons’ awareness of the problem and of the need to consider addressing low serum vitamin D levels. The treatment is low cost and low risk. If prospective studies establish a direct correlation between low serum vitamin D levels and complications, the ultimate goal will be to develop treatment strategies that effectively lower the prevalence of low vitamin D levels.

Am J Orthop. 2016;45(7):E522-E526. Copyright Frontline Medical Communications Inc. 2016. All rights reserved.

1. Zaidi SA, Singh G, Owojori O, et al. Vitamin D deficiency in medical inpatients: a retrospective study of implications of untreated versus treated deficiency. Nutr Metab Insights. 2016;9:65-69.

2. Thomas MK, Lloyd-Jones DM, Thadhani RI, et al. Hypovitaminosis D in medical inpatients. N Engl J Med. 1998;338(12):777-783.

3. Tangpricha V, Pearce EN, Chen TC, Holick MF. Vitamin D insufficiency among free-living healthy young adults. Am J Med. 2002;112(8):659-662.

4. Sullivan SS, Rosen CJ, Halteman WA, Chen TC, Holick MF. Adolescent girls in Maine are at risk for vitamin D insufficiency. J Am Diet Assoc. 2005;105(6):971-974.

5. Hollis BW, Wagner CL. Normal serum vitamin D levels. N Engl J Med. 2005;352(5):515-516.

6. Steele B, Serota A, Helfet DL, Peterson M, Lyman S, Lane JM. Vitamin D deficiency: a common occurrence in both high- and low-energy fractures. HSS J. 2008;4(2):143-148.

7. Bee CR, Sheerin DV, Wuest TK, Fitzpatrick DC. Serum vitamin D levels in orthopaedic trauma patients living in the northwestern United States. J Orthop Trauma. 2013;27(5):e103-e106.

8. Bischoff-Ferrari HA, Can U, Staehelin HB, et al. Severe vitamin D deficiency in Swiss hip fracture patients. Bone. 2008;42(3):597-602.

9. Pieper CF, Colon-Emeric C, Caminis J, et al. Distribution and correlates of serum 25-hydroxyvitamin D levels in a sample of patients with hip fracture. Am J Geriatr Pharmacother. 2007;5(4):335-340.

10. Nesby-O’Dell S, Scanlon KS, Cogswell ME, et al. Hypovitaminosis D prevalence and determinants among African American and white women of reproductive age: third National Health and Nutrition Examination Survey, 1988–1994. Am J Clin Nutr. 2002;76(1):187-192.

11. Gordon CM, DePeter KC, Feldman HA, Grace E, Emans SJ. Prevalence of vitamin D deficiency among healthy adolescents. Arch Pediatr Adolesc Med. 2004;158(6):531-537.

12. Bogunovic L, Kim AD, Beamer BS, Nguyen J, Lane JM. Hypovitaminosis D in patients scheduled to undergo orthopaedic surgery: a single-center analysis. J Bone Joint Surg Am. 2010;92(13):2300-2304.

13. Brinker MR, O’Connor DP. Outcomes of tibial nonunion in older adults following treatment using the Ilizarov method. J Orthop Trauma. 2007;21(9):634-642.

14. Robertson DS, Jenkins T, Murtha YM, et al. Effectiveness of vitamin D therapy in orthopaedic trauma patients. J Orthop Trauma. 2015;29(11):e451-e453.

15. Bodendorfer BM, Cook JL, Robertson DS, et al. Do 25-hydroxyvitamin D levels correlate with fracture complications? J Orthop Trauma. 2016;30(9):e312-e317.

Purple Curvilinear Papules on the Back

The Diagnosis: Blaschkoid Graft-vs-host Disease

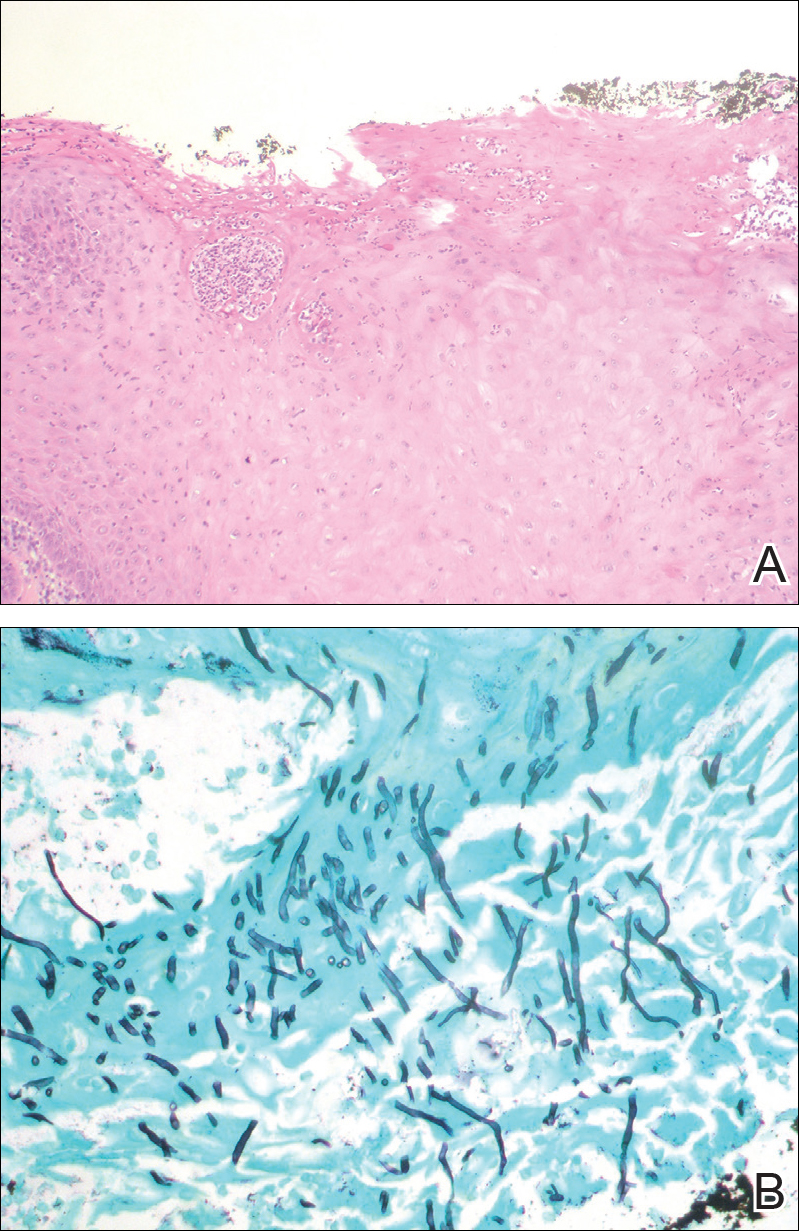

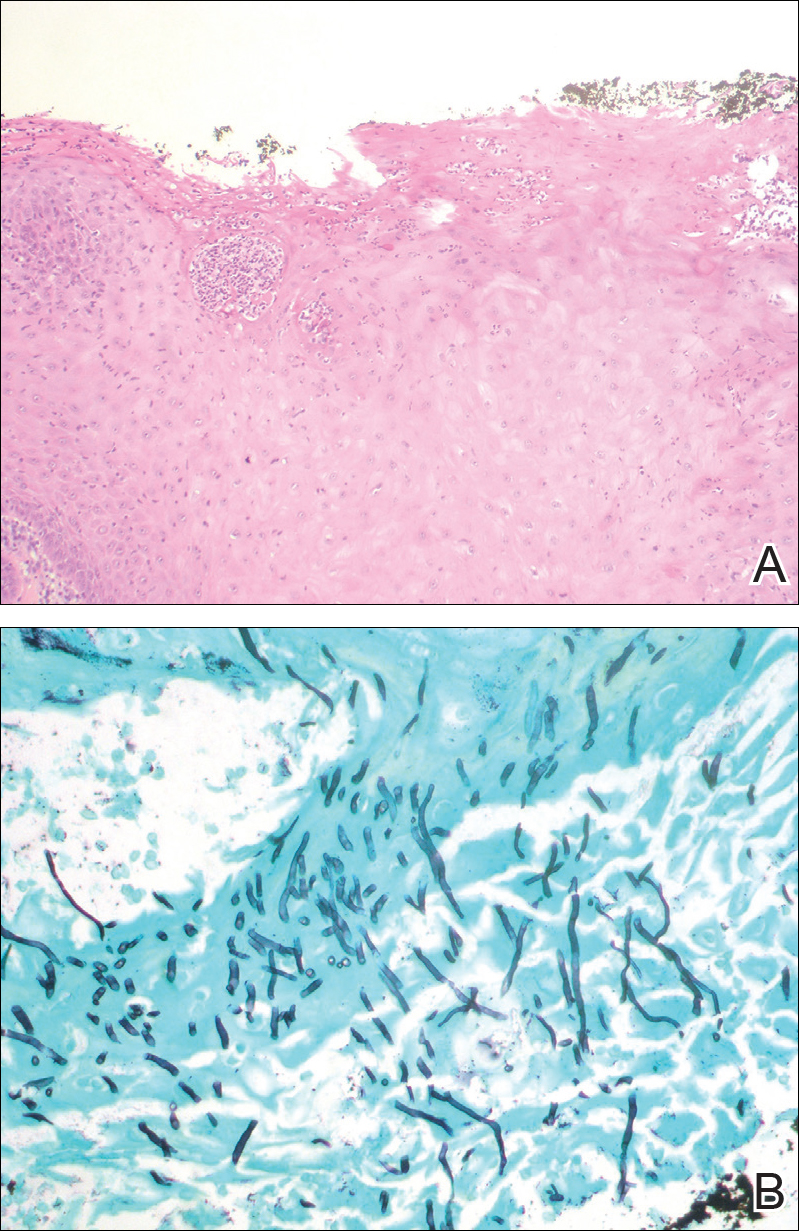

The patient had a history of myelodysplastic syndrome and underwent a bone marrow transplant 1 year prior to presentation. She had acute graft-vs-host disease (GVHD) 6 weeks following the transplant, which resolved with high-dose prednisone followed by UVB phototherapy. Skin biopsy demonstrated lichenoid dermatitis with vacuolar degeneration, dyskeratosis, and prominent pigment incontinence (Figure). Based on these findings and her clinical presentation, a diagnosis of blaschkoid GVHD was made.

Although acute GVHD is the result of immunocompetent donor T cells recognizing host tissues as foreign and initiating an immune response, the pathophysiology of chronic GVHD is not well understood.1,2 Theories for disease pathogenesis in chronic GVHD suggest an underlying autoimmune and/or alloreactive process.2-5 The skin often is the first organ affected in acute GVHD, and patients generally present with a pruritic morbilliform eruption that begins on the trunk and spreads to the rest of the body.1,2 Cutaneous manifestations of chronic GVHD may be protean. Lesions can resemble systemic sclerosis or morphea, lichen planus, psoriasis, ichthyosis, and many other conditions.2

The differential diagnosis of linear dermatoses includes herpes zoster, contact dermatitis, lichen striatus (blaschkitis), nevus unius lateris, inflammatory linear verrucous epidermal nevus, and incontinentia pigmenti.6,7 Lichen planus-like chronic GVHD occurring in a linear distribution has been described.6-14 Distinction between dermatomal and blaschkoid processes is diagnostically important. In the case of GVHD, dermatomal distribution may suggest an association between GVHD and prior herpes simplex virus or varicella-zoster virus infection.6,8 Herpesvirus may alter surface antigens of keratinocytes, rendering them targets of donor lymphocytes, and antibodies to viral particles may cross-react with host keratinocyte HLA antigens. It also is possible that dermatomal GVHD may simply be a type of isomorphic response (Köbner phenomenon).8

When cutaneous GVHD follows Blaschko lines, other mechanisms appear to be at play.9-14 It is plausible that these patients have an underlying genetic mosaicism, perhaps the result of a postzygotic mutation, that results in a daughter cell population that expresses surface antigens different from those of the primary cell population found elsewhere in the skin. Donor lymphocytes may selectively react to this mosaic population, leading to the clinical picture of chronic GVHD oriented along Blaschko lines.10,11,13,14

In conclusion, lichenoid linear GVHD following Blaschko lines is an uncommon presentation of chronic GVHD that highlights the heterogeneity of this disease and should be considered in the appropriate clinical setting.

- Ferrara JL, Levine JE, Reddy P, et al. Graft-versus-host disease. Lancet. 2009;373:1550-1561.

- Hymes SR, Alousi AM, Cowen EW. Graft-versus-host disease: part I. pathogenesis and clinical manifestations of graft-versus-host disease. J Am Acad Dermatol. 2012;66:515.e1-515.e18; quiz 533-534.

- Patriarca F, Skert C, Sperotto A, et al. The development of autoantibodies after allogeneic stem cell transplantation is related with chronic graft-vs-host disease and immune recovery. Exp Hematol. 2006;34:389-396.

- Shimada M, Onizuka M, Machida S, et al. Association of autoimmune disease-related gene polymorphisms with chronic graft-versus-host disease. Br J Haematol. 2007;139:458-463.

- Zhang C, Todorov I, Zhang Z, et al. Donor CD4+ T and B cells in transplants induce chronic graft-versus-host disease with autoimmune manifestations. Blood. 2006;107:2993-3001.

- Freemer CS, Farmer ER, Corio RL, et al. Lichenoid chronic graft-vs-host disease occurring in a dermatomal distribution. Arch Dermatol. 1994;130:70-72.

- Kikuchi A, Okamoto S, Takahashi S, et al. Linear chronic cutaneous graft-versus-host disease. J Am Acad Dermatol. 1997;37:1004-1006.

- Sanli H, Anadolu R, Arat M, et al. Dermatomal lichenoid graft-versus-host disease within herpes zoster scars. Int J Dermatol. 2003;42:562-564.

- Kennedy FE, Hilari H, Ferrer B, et al. Lichenoid chronic graft-vs-host disease following Blaschko lines. Actas Dermosifiliogr. 2014;105:89-92.

- Lee SW, Kim YC, Lee E, et al. Linear lichenoid graft versus host disease: an unusual configuration following Blaschko's lines. J Dermatol. 2006;33:583-584.

- Beers B, Kalish RS, Kaye VN, et al. Unilateral linear lichenoid eruption after bone marrow transplantation: an unmasking of tolerance to an abnormal keratinocyte clone? J Am Acad Dermatol. 1993;28(5, pt 2):888-892.

- Wilson B, Lockman D. Linear lichenoid graft-vs-host disease. Arch Dermatol. 1994;130(9):1206-1208.

- Reisfeld PL. Lichenoid chronic graft-vs-host disease. Arch Dermatol. 1994;130:1207-1208.

- Vassallo C, Derlino F, Ripamonti F, et al. Lichenoid cutaneous chronic GvHD following Blaschko lines. Int J Dermatol. 2014;53:473-475.

A 56-year-old woman with a history of bone marrow transplant presented for evaluation of a nonpruritic rash of 3 months' duration. Physical examination revealed confluent purple-colored and hyperpigmented papules localized to the back and right arm in a curvilinear pattern. Laboratory results were notable for mildly elevated aspartate transaminase and alanine transaminase levels.

Pruritic and Painful Nodules on the Tongue

The Diagnosis: Chronic Hyperplastic Candidiasis (Nodular Form)

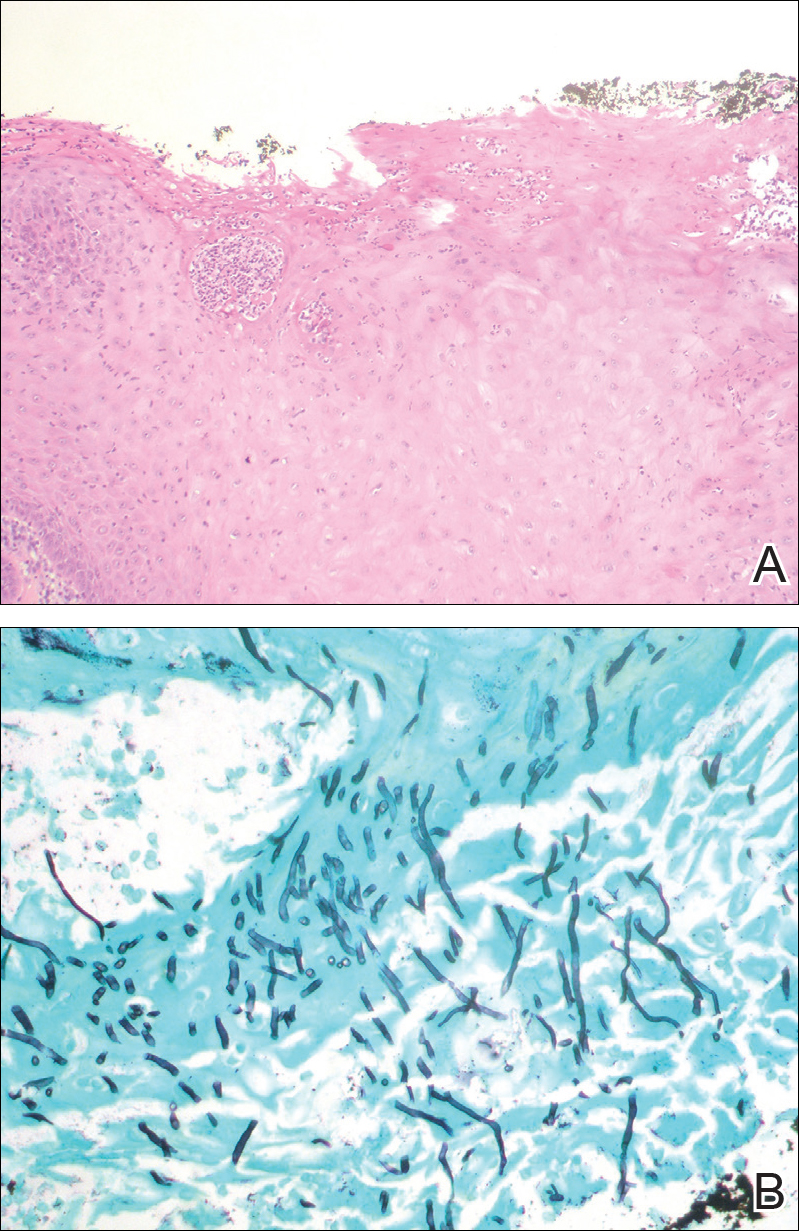

Chronic hyperplastic candidiasis (CHC) is a rare form of oropharyngeal candidiasis. The most frequent clinical presentation is a white plaque that cannot be detached (also known as candidal leukoplakia). It usually involves the anterior buccal mucosa, mainly the commissural area, though the palate and tongue also can be affected. The nodular type of CHC is even less common. Our patient exhibited the typical clinical presentation of the nodular type of CHC.1-3 The differential diagnosis includes leukoplakia, premalignant and malignant epithelial lesions, granular cell tumor, and florid oral papillomatosis.1,3 A biopsy usually is required for diagnostic confirmation. Histologically, CHC is characterized by parakeratosis and a hyperplastic epithelium invaded by Candida hyphae.4 Because Candida species are commensal in up to 50% of the healthy population, superficial colonization of tissue is not sufficient to establish disease.1 In our patient, histopathology revealed a hyperplastic mucosa without atypia and numerous hyphae (Figure). Both lingual swab and tissue cultures revealed heavy growth of Candida albicans.

Infection by C albicans depends on pathogen virulence and on host factors such as wearing dentures, reduced salivary production, smoking, or immunosuppression.1,4 Apart from wearing dentures, our patient did not present with other predisposing factors. It is possible that age-related immunosuppression and associated oral changes contributed to Candida infection in this case.

Topical or systemic antifungal agents, together with elimination of predisposing factors, are the usual first-line treatment. Because CHC is associated with epithelial atypia and untreated or persistent lesions may progress to carcinoma, follow-up is necessary to verify complete resolution after treatment.1,3,4 In our patient, the lesions resolved after 15 days of oral fluconazole.

- Shibata T, Yamashita D, Hasegawa S, et al. Oral candidiasis mimicking tongue cancer [published online January 12, 2011]. Auris Nasus Larynx. 2011;38:418-420.

- Scardina GA, Ruggieri A, Messina P. Chronic hyperplastic candidosis: a pilot study of the efficacy of 0.18% isotretinoin. J Oral Sci. 2009;51:407-410.

- Sitheeque MA, Samaranayake LP. Chronic hyperplastic candidosis/candidiasis (candidal leukoplakia). Crit Rev Oral Biol Med. 2003;14:253-267.

- Williams DW, Bartie KL, Potts AJ, et al. Strain persistence of invasive Candida albicans in chronic hyperplastic candidosis that underwent malignant change. Gerodontology. 2001;18:73-78.

An 82-year-old woman with atrial fibrillation and chronic obstructive pulmonary disease presented with pruritic and painful lesions on the tongue of 10 years' duration. She had not undergone treatment with systemic or inhaled corticosteroids during the course of the pulmonary disease. On physical examination, several fleshy and well-defined erythematous papules speckled with whitish areas were observed on the dorsal aspect and anterior border of the tongue. Superficial whitish areas could not be removed by scraping.

VIDEO: Investigator discusses fulvestrant/everolimus combo

SAN ANTONIO – Most women with hormone receptor–positive breast cancer who are treated with an aromatase inhibitor (AI) eventually develop resistance to these agents. Strategies for overcoming resistance include adding everolimus (Afinitor) to a steroidal AI such as exemestane (Aromasin), as in the BOLERO-2 trial.

Alternatively, blocking estrogen receptor (ER) signaling with a selective estrogen receptor downregulator such as fulvestrant (Faslodex) may block the ER signaling pathway more completely than a steroidal AI such as exemestane does.

In this video interview at the San Antonio Breast Cancer Symposium, Noah S. Kornblum, MD, of the Montefiore-Einstein Center for Cancer Care, New York, discusses findings from the phase II PrECOG 0102 trial, which compared fulvestrant plus everolimus with fulvestrant plus placebo in postmenopausal women with hormone receptor–positive, HER2-negative breast cancer resistant to AI therapy.

The combination was associated with a median progression-free survival of 10.4 months, compared with 5.1 months for fulvestrant plus placebo (hazard ratio, 0.60; P = .02).

Dr. Kornblum says that the study provides additional evidence for adding everolimus to anti-estrogen therapy in AI-resistant disease.

AT SABCS 2016

Strategies for Preventing Patient Falls

Between 700,000 and 1 million people fall each year in U.S. hospitals, and about a third of these falls result in injuries that add 6.3 days to hospital stays, according to a report from the Joint Commission Center for Transforming Healthcare. Some 11,000 falls are fatal. The center has now issued a report on the subject titled “Preventing Patient Falls: A Systematic Approach from the Joint Commission Center for Transforming Healthcare Project.”1

“We try to pick those topics that healthcare organizations just haven’t been able to fully tackle even though they’ve put a lot of time and resources into trying to fix them,” says Kelly Barnes, MS, a project lead at The Joint Commission's Center for Transforming Healthcare.

The Joint Commission project involved seven hospitals that used Robust Process Improvement, which incorporates tools from Lean Six Sigma and change management methodologies, to reduce falls with injury on inpatient pilot units within their organizations.

During the project, each organization identified the specific factors that led to falls with injury in its own environment and developed solutions targeted to those factors. Together, the organizations identified 30 root causes and developed 21 targeted solutions. Because the contributing factors differed among organizations, each organization's solution set was unique. Afterward, the organizations saw an aggregate 35% reduction in falls and a 62% reduction in falls with injury.

“One of the takeaways is that you really need support across an organization to have success,” Barnes says. “The more engaged the entire organization is from top down all the way to the bottom, the more successful people are in solving the problems.”

The project produced a Targeted Solutions Tool (TST), available free to all Joint Commission–accredited customers, to help hospitals reduce falls.

“You can put your data right into the tool,” Barnes says. “It tells you what your top contributing factors are, and it gives you the solutions that have worked for those contributing factors at other organizations.”

Reference

Health Research & Educational Trust. Preventing patient falls: a systematic approach from the Joint Commission Center for Transforming Healthcare project. Hospitals in Pursuit of Excellence website.
