Bipolar disorder: How to avoid overdiagnosis

Over the past decade, bipolar disorder (BD) has gained widespread recognition in mainstream culture and in the media,1 and awareness of this condition has increased substantially. As a result, patients commonly present with preconceived ideas about bipolarity that may or may not actually correspond with this diagnosis. In anticipation of seeing such patients, I offer 4 recommendations to help clinicians more accurately diagnose BD.

1. Screen for periods of manic or hypomanic mood. Effective screening questions include:

- “Have you ever had periods when you felt too happy, too angry, or on top of the world for several days in a row?”

- “Have you had periods when you would go several days without much sleep and still feel fine during the day?”

If the patient reports irritability rather than euphoria, try to better understand the phenomenology of his or her irritable mood. Among patients who experience mania, irritability often results from impatience, which in turn seems to be secondary to grandiosity, increased energy, and accelerated thought processes.2

2. Avoid using terms with low specificity, such as “mood swings” and “racing thoughts,” when you screen for manic symptoms. If the patient mentions these phrases, do not take them at face value; ask him or her to characterize them in detail. Differentiate chronic, quick fluctuations in affect—which are usually triggered by environmental factors and typically are reported by patients with personality disorders—from more persistent periods of mood polarization. Similarly, anxious patients commonly report having “racing thoughts.”

3. Distinguish patients who have a chronic, ongoing preoccupation with shopping from those who exhibit intermittent periods of excessive shopping and prodigality, which usually are associated with other manic symptoms.3 Spending money in excess is often cited as a classic symptom of mania or hypomania, but it may be an indicator of other conditions, such as compulsive buying.

4. Ask about any increases in goal-directed activity. This is a good way to identify true manic or hypomanic periods. Patients with anxiety or agitated depression may report an increase in psychomotor activity, but this is usually characterized more by restlessness and wandering, and not by a true increase in activity.

Consider a temporary diagnosis

When in doubt, it may be advisable to establish a temporary diagnosis of unspecified mood disorder, until you can learn more about the patient, obtain collateral information from family or friends, and request past medical records.

1. Ghouse AA, Sanches M, Zunta-Soares G, et al. Overdiagnosis of bipolar disorder: a critical analysis of the literature. Scientific World Journal. 2013;2013:297087. doi: 10.1155/2013/297087.

2. Carlat DJ. My favorite tips for sorting out diagnostic quandaries with bipolar disorder and adult attention-deficit hyperactivity disorder. Psychiatr Clin North Am. 2007;30(2):233-238.

3. Black DW. A review of compulsive buying disorder. World Psychiatry. 2007;6(1):14-18.

Who needs breast cancer genetics testing?

Advances in cancer genetics are rapidly changing how clinicians assess an individual’s risk for breast cancer. ObGyns counsel many women with a personal or family history of the disease, many of whom can benefit from genetics counseling and testing. As patients with a hereditary predisposition to breast cancer are at higher risk and are younger at diagnosis, it is imperative to identify them early so they can benefit from enhanced surveillance, chemoprevention, and discussions regarding risk-reducing surgeries. ObGyns are uniquely poised to identify young women at risk for hereditary cancer syndromes, and they play a crucial role in screening and prevention over the life span.

CASE Patient with breast cancer history asks about screening for her daughters

A 52-year-old woman presents for her annual examination. She underwent breast cancer treatment 10 years earlier and has done well since then. When asked about family history of breast cancer and ethnicity, she reports her mother had breast cancer later in life, and her mother’s father was of Ashkenazi Jewish ancestry. In addition, a maternal uncle had metastatic prostate cancer. You recall that breast cancer diagnosed before age 50 years and Ashkenazi ancestry are “red flags” for a hereditary cancer syndrome. The patient wonders how her daughters should be screened. What do you do next?

Having a risk assessment plan is crucial

Given increasing demands, limited time, and the abundance of information to be discussed with patients, primary care physicians may find it challenging to assess breast cancer risk, consider genetics testing for appropriate individuals, and counsel patients about risk management options. The process has become even more complex since the expansion in genetics knowledge and the advent of multigene panel testing. Not only is risk assessment crucial for this woman and her daughters, and for other patients, but a delay in diagnosing and treating breast cancer in patients with hereditary and familial cancer risks may represent a worrisome new trend in medical litigation.1,2 Clinicians must have a process in place for assessing risk in all patients and treating them appropriately.

The American Cancer Society (ACS) estimated that 252,710 cases of breast cancer would be diagnosed in 2017, leading to 40,610 deaths.3 Twelve percent to 14% of breast cancers are thought to be related to hereditary cancer predisposition syndromes.4–8 This means that, every year, roughly 30,000 to 35,000 cases of breast cancer are attributable to hereditary risk. These cases can be detected early with enhanced surveillance, which carries the highest chance for cure, or prevented with risk-reducing surgery in identified genetic mutation carriers. Each child of a person with a genetic mutation predisposing to breast cancer has a 50% chance of inheriting the mutation and having a very high risk of cancer.
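The hereditary case estimate follows from multiplying the ACS case count by the 12% to 14% hereditary share. The short Python sketch below reproduces that arithmetic; the function and constant names are illustrative and do not come from the article or the cited sources.

```python
# Figures quoted in the text: ACS estimate for 2017 and the
# 12%-14% share attributed to hereditary predisposition syndromes.
ESTIMATED_CASES_2017 = 252_710
HEREDITARY_SHARE_LOW = 0.12
HEREDITARY_SHARE_HIGH = 0.14


def hereditary_case_range(total_cases: int, low_share: float, high_share: float) -> tuple:
    """Return (low, high) estimates of cases attributable to hereditary risk."""
    return round(total_cases * low_share), round(total_cases * high_share)


low_estimate, high_estimate = hereditary_case_range(
    ESTIMATED_CASES_2017, HEREDITARY_SHARE_LOW, HEREDITARY_SHARE_HIGH
)
print(f"Hereditary cases per year: {low_estimate:,} to {high_estimate:,}")
```

The upper end of this range corresponds to the figure of about 35,000 cases per year.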

In this patient’s case, basic information is collected about her cancer-related personal and family history.

Asking a few key questions can help in stratifying risk:

- Have you or anyone in your family had cancer? What type, and at what age?

- If breast cancer, did it involve both breasts, or was it triple-negative?

- Is there a family history of ovarian cancer?

- Is there a family history of male breast cancer?

- Is there a family history of metastatic prostate cancer?

- Are you of Ashkenazi Jewish ethnicity?

- Have you or anyone in your family ever had genetics testing for cancer?

The hallmarks of hereditary cancer are multiple cancers in an individual or family; young age at diagnosis; and ovarian, pancreatic, or another rare cancer. Metastatic prostate cancer was added as a red flag for hereditary risk after a recent large series found that 11.8% of men with metastatic prostate cancer harbor germline mutations.9
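The screening questions above can be thought of as a simple checklist. The sketch below is one hypothetical way to record the answers and list the red flags mentioned in this article; the class, field names, and flag wording are assumptions made for illustration, not a validated referral tool or the criteria of any guideline. The example patient uses only what is known at this point in the case, with an age at diagnosis of 42 inferred from her current age of 52 and treatment 10 years earlier.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CancerHistory:
    """Answers to the key screening questions (hypothetical structure)."""
    breast_cancer_age_at_diagnosis: Optional[int] = None
    bilateral_breast_cancer: bool = False
    triple_negative_breast_cancer: bool = False
    family_ovarian_cancer: bool = False
    family_male_breast_cancer: bool = False
    family_metastatic_prostate_cancer: bool = False
    ashkenazi_jewish_ancestry: bool = False


def red_flags(history: CancerHistory) -> List[str]:
    """List the hereditary-risk red flags present in a history."""
    flags = []
    age = history.breast_cancer_age_at_diagnosis
    if age is not None and age < 50:
        flags.append("breast cancer diagnosed before age 50")
    if history.bilateral_breast_cancer:
        flags.append("bilateral breast cancer")
    if history.triple_negative_breast_cancer:
        flags.append("triple-negative breast cancer")
    if history.family_ovarian_cancer:
        flags.append("family history of ovarian cancer")
    if history.family_male_breast_cancer:
        flags.append("family history of male breast cancer")
    if history.family_metastatic_prostate_cancer:
        flags.append("family history of metastatic prostate cancer")
    if history.ashkenazi_jewish_ancestry:
        flags.append("Ashkenazi Jewish ancestry")
    return flags


# The case patient as described so far (age at diagnosis is inferred).
patient = CancerHistory(
    breast_cancer_age_at_diagnosis=42,
    family_metastatic_prostate_cancer=True,
    ashkenazi_jewish_ancestry=True,
)
for flag in red_flags(patient):
    print(flag)
```

Any flag returned would prompt a closer look at the referral guidelines rather than stand in for them.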

CASE Continued

On further questioning, the patient reports she had triple-negative (estrogen receptor–, progesterone receptor–, and human epidermal growth factor receptor 2 [HER2]–negative) breast cancer, a feature of patients with germline BRCA1 (breast cancer susceptibility gene 1) mutations.10 In addition, her Ashkenazi ancestry is concerning, as there is a 1-in-40 chance of carrying 1 of the 3 Ashkenazi founder BRCA mutations.11 Is a genetics consultation needed?

Guidelines for genetics referral and testing

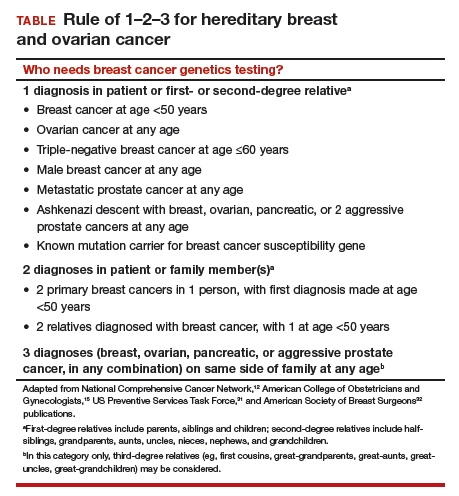

According to the TABLE, which summarizes national guidelines for genetics referral, maternal and paternal family histories are equally important. Our patient was under age 50 at diagnosis, has a history of triple-negative breast cancer, is of Ashkenazi ancestry, and has a family history of metastatic prostate cancer. She meets the criteria for genetics testing, and screening for her daughters most certainly will depend on the findings of that testing. If she carries a BRCA1 mutation, as might be anticipated, each daughter would have a 50% chance of having inherited the mutation. If they carry the mutation as well, they would begin breast magnetic resonance imaging (MRI) screening at age 25.12 If they decide against genetics testing, they could still undergo MRI screening as untested first-degree relatives of a BRCA carrier, per ACS recommendations.13
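The 50% figure applies to each daughter separately. As a rough illustration, the sketch below computes the chance that at least one child inherits a mutation from a carrier parent, assuming each child inherits independently. The function name is hypothetical, and the number of daughters is an assumption, because the case does not state it.

```python
def probability_at_least_one_carrier(num_children: int,
                                     transmission_probability: float = 0.5) -> float:
    """Chance that at least one of num_children inherits the mutation.

    Assumes independent inheritance for each child, with the 50%
    per-child chance quoted in the text as the default.
    """
    if num_children < 0:
        raise ValueError("num_children must be non-negative")
    return 1.0 - (1.0 - transmission_probability) ** num_children


# Hypothetical family sizes; the case does not say how many daughters there are.
for n in (1, 2, 3):
    print(n, probability_at_least_one_carrier(n))
```

With 2 daughters, for example, the chance that at least one carries the mutation is 75%, which is one reason testing the mother first is informative for the whole family.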

Integrating evidence and experience

Over the past 10 to 20 years, other breast cancer susceptibility genes (eg, BRCA2, PALB2, CHEK2) have been identified. More recently, next-generation sequencing has become commercially available. Laboratories can use this newer method to sequence multiple genes rapidly and in parallel, and its cost is similar to that of single-syndrome testing.14 When more than 1 gene can explain an inherited cancer syndrome, multigene panel testing may be more efficient and cost-effective. Use of multigene panel testing is supported in guidelines issued by the National Comprehensive Cancer Network,12 the American College of Obstetricians and Gynecologists,15 and other medical societies.

For our patient, the most logical strategy would be to test for the 3 mutations most common in the Ashkenazi population and then, if no mutation is found, perform multigene panel testing.

Formal genetics counseling can be very helpful for a patient, particularly in the era of multigene panel testing.16,17 A detailed pedigree (family tree) is elicited, and a genetics specialist determines whether testing is indicated and which test is best for the patient. Possible test findings are explained. The patient may be found to have a pathogenic variant with associated increased cancer risk, a negative test result (informative or uninformative), or a variant of uncertain significance (VUS). A VUS is an identified gene variant with an unknown effect on protein function and an unclear association with cancer risk. A finding of VUS may make the patient anxious,18 create uncertainty in the treating physician,19 and lead to harmful overtreatment, excessive surveillance, or unnecessary use of a preventive measure.19–21 Genetics counseling allows the patient, even the patient with VUS, to make appropriate decisions.22 Counseling may also help a patient or family process emotional responses, such as fear and guilt. In addition, counselors are familiar with relevant laws and regulations, such as the Genetic Information Nondiscrimination Act of 2008 (GINA), which protects patients from health insurance and employment discrimination. Many professional guidelines recommend providing genetics counseling in conjunction with genetics testing,12,23 and some insurance companies and some states require counseling for coverage of testing.

Cost of genetics testing. If patients are concerned about the cost of genetics testing, they can be reassured with the following information24–26:

- The Patient Protection and Affordable Care Act (ACA) identifies BRCA testing as a preventive service

- Medicare provides coverage for affected patients with a qualifying personal history

- 97% of commercial insurers and most state Medicaid programs provide coverage for hereditary cancer testing

- Most commercial laboratories have affordability programs that may provide additional support.

If a BRCA mutation is found: Many patients question the value of knowing whether they have a BRCA mutation. What our patient, her daughters, and others may not realize is that, if a BRCA mutation is found, breast MRI screening can begin at age 25. Although contrast-enhanced MRI screening is highly sensitive in detecting breast cancer,27–29 it lacks specificity and commonly yields false positives.

Some patients also worry about overdiagnosis with this highly sensitive test. Many do not realize that preventively prescribed oral contraceptives can reduce the risk of ovarian cancer by 50%, and that cosmetically acceptable risk-reducing breast surgeries can reduce the risk of breast cancer by 90%.

Many are unaware of the associated risks with ovarian, prostate, pancreatic, and other cancers; of risk management options; and of assisted reproduction options, such as preimplantation genetics diagnosis, which can prevent the passing of a genetic mutation to future generations. The guidelines on risk management options are increasingly clear and helpful,12,30–32 and women often turn to their ObGyns for advice about health and prevention.

ObGyns are often the first-line providers for women with a personal or family history of breast cancer. Identification of at-risk patients begins with taking a careful family history and becoming familiar with the rapidly evolving guidelines in this important field. Identification of appropriate candidates for breast cancer genetics testing is a key step toward prevention, value-based care, and avoidance of legal liability.

CASE Resolved

In this case, testing for the 3 common Ashkenazi BRCA founder mutations was negative, and multigene panel testing was also negative. Her husband is not of Ashkenazi Jewish descent and there is no significant family history of cancer on his side. The daughters are advised to begin high-risk screening at the age of 32, 10 years earlier than their mother was diagnosed, but no genetic testing is indicated for them.

1. Phillips RL Jr, Bartholomew LA, Dovey SM, Fryer GE Jr, Miyoshi TJ, Green LA. Learning from malpractice claims about negligent, adverse events in primary care in the United States. Qual Saf Health Care. 2004;13(2):121–126.

2. Saber Tehrani AS, Lee H, Mathews SC, et al. 25-year summary of US malpractice claims for diagnostic errors 1986–2010: an analysis from the National Practitioner Data Bank. BMJ Qual Saf. 2013;22(8):672–680.

3. American Cancer Society. Breast Cancer Facts & Figures 2017-2018. https://www.cancer.org/content/dam/cancer-org/research/cancer-facts-and-statistics/breast-cancer-factsand-figures/breast-cancer-facts-and-figures-2017-2018.pdf. Published 2017. Accessed December 28, 2017.

4. Tung N, Battelli C, Allen B, et al. Frequency of mutations in individuals with breast cancer referred for BRCA1 and BRCA2 testing using next-generation sequencing with a 25-gene panel. Cancer. 2015;121(1):25–33.

5. Tung N, Lin NU, Kidd J, et al. Frequency of germline mutations in 25 cancer susceptibility genes in a sequential series of patients with breast cancer. J Clin Oncol. 2016;34(13):1460–1468.

6. Kurian AW, Hare EE, Mills MA, et al. Clinical evaluation of a multiple-gene sequencing panel for hereditary cancer risk assessment. J Clin Oncol. 2014;32(19):2001–2009.

7. Easton DF, Pharoah PD, Antoniou AC, et al. Gene-panel sequencing and the prediction of breast-cancer risk. N Engl J Med. 2015;372(23):2243–2257.

8. Yurgelun MB, Allen B, Kaldate RR, et al. Identification of a variety of mutations in cancer predisposition genes in patients with suspected Lynch syndrome. Gastroenterology. 2015;149(3):604–613.e20.

9. Pritchard CC, Mateo J, Walsh MF, et al. Inherited DNA-repair gene mutations in men with metastatic prostate cancer. N Engl J Med. 2016;375(5):443–453.

10. Mavaddat N, Barrowdale D, Andrulis IL, et al; Consortium of Investigators of Modifiers of BRCA1/2. Pathology of breast and ovarian cancers among BRCA1 and BRCA2 mutation carriers: results from the Consortium of Investigators of Modifiers of BRCA1/2 (CIMBA). Cancer Epidemiol Biomarkers Prev. 2012;21(1):134–147.

11. Struewing JP, Hartge P, Wacholder S, et al. The risk of cancer associated with specific mutations of BRCA1 and BRCA2 among Ashkenazi Jews. N Engl J Med. 1997;336(20):1401–1408.

12. National Comprehensive Cancer Network. NCCN Clinical Practice Guidelines in Oncology (NCCN Guidelines): Genetic/Familial High-Risk Assessment: Breast and Ovarian. Version 1.2018. https://www.nccn.org. Accessed December 28, 2017.

13. Saslow D, Boetes C, Burke W, et al; American Cancer Society Breast Cancer Advisory Group. American Cancer Society guidelines for breast screening with MRI as an adjunct to mammography. CA Cancer J Clin. 2007;57(2):75–89.

14. Heather JM, Chain B. The sequence of sequencers: the history of sequencing DNA. Genomics. 2016;107(1):1–8.

15. American College of Obstetricians and Gynecologists Committee on Practice Bulletins-Gynecology. ACOG Practice Bulletin No. 182: Hereditary breast and ovarian cancer syndrome. Obstet Gynecol. 2017;130(3):e110–e126.

16. Mester JL, Schreiber AH, Moran RT. Genetic counselors: your partners in clinical practice. Cleve Clin J Med. 2012;79(8):560–568.

17. Smith M, Mester J, Eng C. How to spot heritable breast cancer: a primary care physician’s guide. Cleve Clin J Med. 2014;81(1):31–40.

18. Welsh JL, Hoskin TL, Day CN, et al. Clinical decision-making in patients with variant of uncertain significance in BRCA1 or BRCA2 genes. Ann Surg Oncol. 2017;24(10):3067–3072.

19. Kurian AW, Li Y, Hamilton AS, et al. Gaps in incorporating germline genetic testing into treatment decision-making for early-stage breast cancer. J Clin Oncol. 2017;35(20):2232–2239.

20. Tung N, Domchek SM, Stadler Z, et al. Counselling framework for moderate-penetrance cancer-susceptibility mutations. Nat Rev Clin Oncol. 2016;13(9):581–588.

21. Yu PP, Vose JM, Hayes DF. Genetic cancer susceptibility testing: increased technology, increased complexity. J Clin Oncol. 2015;33(31):3533–3534.

22. Pederson HJ, Gopalakrishnan D, Noss R, Yanda C, Eng C, Grobmyer SR. Impact of multigene panel testing on surgical decision making in breast cancer patients. J Am Coll Surg. 2018;226(4):560–565.

23. Robson ME, Bradbury AR, Arun B, et al. American Society of Clinical Oncology policy statement update: genetic and genomic testing for cancer susceptibility. J Clin Oncol. 2015;33(31):3660–3667.

24. Preventive care benefits for women: What Marketplace health insurance plans cover. HealthCare.gov. https://www.healthcare.gov/coverage/what-marketplace-plans-cover/. Accessed May 15, 2018.

25. Centers for Medicare & Medicaid Services. The Center for Consumer Information & Insurance Oversight: Affordable Care Act Implementation FAQs – Set 12. https://www.cms.gov/CCIIO/Resources/Fact-Sheets-and-FAQs/aca_implementation_faqs12.html. Accessed May 15, 2018.

26. US Preventive Services Task Force. Final Recommendation Statement: BRCA-Related Cancer: Risk Assessment, Genetic Counseling, and Genetic Testing. https://www.uspreventiveservicestaskforce.org/Page/Document/RecommendationStatementFinal/brca-related-cancer-risk-assessment-genetic-counseling-and-genetic-testing. Published December 2013. Accessed May 15, 2018.

27. Kuhl CK, Schrading S, Leutner CC, et al. Mammography, breast ultrasound, and magnetic resonance imaging for surveillance of women at high familial risk for breast cancer. J Clin Oncol. 2005;23(33):8469–8476.

28. Lehman CD, Blume JD, Weatherall P, et al; International Breast MRI Consortium Working Group. Screening women at high risk for breast cancer with mammography and magnetic resonance imaging. Cancer. 2005;103(9):1898–1905.

29. Kriege M, Brekelmans CT, Boetes C, et al; Magnetic Resonance Imaging Screening Study Group. Efficacy of MRI and mammography for breast-cancer screening in women with a familial or genetic predisposition. N Engl J Med. 2004;351(5):427–437.

30. Pederson HJ, Padia SA, May M, Grobmyer S. Managing patients at genetic risk of breast cancer. Cleve Clin J Med. 2016;83(3):199–206.

31. Moyer VA; US Preventive Services Task Force. Risk assessment, genetic counseling, and genetic testing for BRCA-related cancer in women: US Preventive Services Task Force recommendation statement. Ann Intern Med. 2014;160(4):271–281.

32. American Society of Breast Surgeons. Consensus Guideline on Hereditary Genetic Testing for Patients With and Without Breast Cancer. Columbia, MD: American Society of Breast Surgeons. https://www.breastsurgeons.org/new_layout/about/statements/PDF_Statements/BRCA_Testing.pdf. Published March 14, 2017. Accessed December 28, 2017.

Advances in cancer genetics are rapidly changing how clinicians assess an individual’s risk for breast cancer. ObGyns counsel many women with a personal or family history of the disease, many of whom can benefit from genetics counseling and testing. As patients with a hereditary predisposition to breast cancer are at higher risk and are younger at diagnosis, it is imperative to identify them early so they can benefit from enhanced surveillance, chemoprevention, and discussions regarding risk-reducing surgeries. ObGyns are uniquely poised to identify young women at risk for hereditary cancer syndromes, and they play a crucial role in screening and prevention over the life span.

CASE Patient with breast cancer history asks about screening for her daughters

A 52-year-old woman presents for her annual examination. She underwent breast cancer treatment 10 years earlier and has done well since then. When asked about family history of breast cancer and ethnicity, she reports her mother had breast cancer later in life, and her mother’s father was of Ashkenazi Jewish ancestry.In addition, a maternal uncle had metastatic prostate cancer. You recall that breast cancer diagnosed before age 50 years and Ashkenazi ancestry are “red flags” for a hereditary cancer syndrome. The patient wonders how her daughters should be screened. What do you do next?

Having a risk assessment plan is crucial

Given increasing demands, limited time, and the abundance of information to be discussed with patients, primary care physicians may find it challenging to assess breast cancer risk, consider genetics testing for appropriate individuals, and counsel patients about risk management options. The process has become even more complex since the expansion in genetics knowledge and the advent of multigene panel testing. Not only is risk assessment crucial for this woman and her daughters, and for other patients, but a delay in diagnosing and treating breast cancer in patients with hereditary and familial cancer risks may represent a worrisome new trend in medical litigation.1,2 Clinicians must have a process in place for assessing risk in all patients and treating them appropriately.

The American Cancer Society (ACS) estimated that 252,710 cases of breast cancer would be diagnosed in 2017, leading to 40,610 deaths.3 Twelve percent to 14% of breast cancers are thought to be related to hereditary cancer predisposition syndromes.4–8 This means that, every year, almost 35,000 cases of breast cancer are attributable to hereditary risk. These cases can be detected early with enhanced surveillance, which carries the highest chance for cure, or prevented with risk-reducing surgery in identified genetic mutation carriers. Each child of a person with a genetic mutation predisposing to breast cancer has a 50% chance of inheriting the mutation and having a very high risk of cancer.

In this patient’s case, basic information is collected about her cancer-related personal and family history.

Asking a few key questions can help in stratifying risk:

- Have you or anyone in your family had cancer? What type, and at what age?

- If breast cancer, did it involve both breasts, or was it triple-negative?

- Is there a family history of ovarian cancer?

- Is there a family history of male breast cancer?

- Is there a family history of metastatic prostate cancer?

- Are you of Ashkenazi Jewish ethnicity?

- Have you or anyone in your family ever had genetics testing for cancer?

The hallmarks of hereditary cancer are multiple cancers in an individual or family; young age at diagnosis; and ovarian, pancreatic, or another rare cancer. Metastatic prostate cancer was added as a red flag for hereditary risk after a recent large series found that 11.8% of men with metastatic prostate cancer harbor germline mutations.9

CASE Continued

On further questioning, the patient reports she had triple-negative (estrogen receptor–, progesterone receptor–, and human epidermal growth factor receptor 2 [HER2]–negative) breast cancer, a feature of patients with germline BRCA1 (breast cancer susceptibility gene 1) mutations.10 In addition, her Ashkenazi ancestry is concerning, as there is a 1-in-40 chance of carrying 1 of the 3 Ashkenazi founder BRCA mutations.11 Is a genetics consultation needed?

Read about guidelines for referral and testing.

Guidelines for genetics referral and testing

According to the TABLE, which summarizes national guidelines for genetics referral, maternal and paternal family histories are equally important. Our patient was under age 50 at diagnosis, has a history of triple-negative breast cancer, is of Ashkenazi ancestry, and has a family history of metastatic prostate cancer. She meets the criteria for genetics testing, and screening for her daughters most certainly will depend on the findings of that testing. If she carries a BRCA1 mutation, as might be anticipated, each daughter would have a 50% chance of having inherited the mutation. If they carry the mutation as well, they would begin breast magnetic resonance imaging (MRI) screening at age 25.12 If they decide against genetics testing, they could still undergo MRI screening as untested first-degree relatives of a BRCA carrier, per ACS recommendations.13

Integrating evidence and experience

Over the past 10 to 20 years, other breast cancer susceptibility genes (eg, BRCA2, PALB2, CHEK2) have been identified. More recently, next-generation sequencing has become commercially available. Laboratories can use this newer method to sequence multiple genes rapidly and in parallel, and its cost is similar to that of single-syndrome testing.14 When more than 1 gene can explain an inherited cancer syndrome, multigene panel testing may be more efficient and cost-effective. Use of multigene panel testing is supported in guidelines issued by the National Comprehensive Cancer Network,12 the American College of Obstetricians and Gynecologists,15 and other medical societies.

For our patient, the most logical strategy would be to test for the 3 mutations most common in the Ashkenazi population and then, if no mutation is found, perform multigene panel testing.

Formal genetics counseling can be very helpful for a patient, particularly in the era of multigene panel testing.16,17 A detailed pedigree (family tree) is elicited, and a genetics specialist determines whether testing is indicated and which test is best for the patient. Possible test findings are explained. The patient may be found to have a pathogenic variant with associated increased cancer risk, a negative test result (informative or uninformative), or a variant of uncertain significance (VUS). A VUS is an identified gene variant with an unknown effect on protein function and an unclear association with cancer risk. A finding of VUS may make the patient anxious,18 create uncertainty in the treating physician,19 and lead to harmful overtreatment, excessive surveillance, or unnecessary use of a preventive measure.19–21 Genetics counseling allows the patient, even the patient with VUS, to make appropriate decisions.22 Counseling may also help a patient or family process emotional responses, such as fear and guilt. In addition, counselors are familiar with relevant laws and regulations, such as the Genetic Information Nondiscrimination Act of 2008 (GINA), which protects patients from insurance and employment discrimination. Many professional guidelines recommend providing genetics counseling in conjunction with genetics testing,12,23 and some insurance companies and some states require counseling for coverage of testing.

Cost of genetics testing. If patients are concerned about the cost of genetics testing, they can be reassured with the following information24–26:

- The Patient Protection and Affordable Care Act (ACA) identifies BRCA testing as a preventive service

- Medicare provides coverage for affected patients with a qualifying personal history

- 97% of commercial insurers and most state Medicaid programs provide coverage for hereditary cancer testing

- Most commercial laboratories have affordability programs that may provide additional support.

If a BRCA mutation is found: Many patients question the value of knowing whether they have a BRCA mutation. What our patient, her daughters, and others may not realize is that, if a BRCA mutation is found, breast MRI screening can begin at age 25. Although contrast-enhanced MRI screening is highly sensitive in detecting breast cancer,27–29 it lacks specificity and commonly yields false positives.

Some patients also worry about overdiagnosis with this highly sensitive test. Many do not realize that preventively prescribed oral contraceptives can reduce the risk of ovarian cancer by 50%, and cosmetically acceptable risk-reducing breast surgeries can reduce the risk of breast cancer by 90%.

Many are unaware of the associated risks with ovarian, prostate, pancreatic, and other cancers; of risk management options; and of assisted reproduction options, such as preimplantation genetics diagnosis, which can prevent the passing of a genetic mutation to future generations. The guidelines on risk management options are increasingly clear and helpful,12,30–32 and women often turn to their ObGyns for advice about health and prevention.

ObGyns are often the first-line providers for women with a personal or family history of breast cancer. Identification of at-risk patients begins with taking a careful family history and becoming familiar with the rapidly evolving guidelines in this important field. Identification of appropriate candidates for breast cancer genetics testing is a key step toward prevention, value-based care, and avoidance of legal liability.

CASE Resolved

In this case, testing for the 3 common Ashkenazi BRCA founder mutations was negative, and multigene panel testing was also negative. Her husband is not of Ashkenazi Jewish descent and there is no significant family history of cancer on his side. The daughters are advised to begin high-risk screening at the age of 32, 10 years earlier than their mother was diagnosed, but no genetic testing is indicated for them.


Advances in cancer genetics are rapidly changing how clinicians assess an individual’s risk for breast cancer. ObGyns counsel many women with a personal or family history of the disease, many of whom can benefit from genetics counseling and testing. As patients with a hereditary predisposition to breast cancer are at higher risk and are younger at diagnosis, it is imperative to identify them early so they can benefit from enhanced surveillance, chemoprevention, and discussions regarding risk-reducing surgeries. ObGyns are uniquely poised to identify young women at risk for hereditary cancer syndromes, and they play a crucial role in screening and prevention over the life span.

CASE Patient with breast cancer history asks about screening for her daughters

A 52-year-old woman presents for her annual examination. She underwent breast cancer treatment 10 years earlier and has done well since then. When asked about family history of breast cancer and ethnicity, she reports her mother had breast cancer later in life, and her mother’s father was of Ashkenazi Jewish ancestry. In addition, a maternal uncle had metastatic prostate cancer. You recall that breast cancer diagnosed before age 50 years and Ashkenazi ancestry are “red flags” for a hereditary cancer syndrome. The patient wonders how her daughters should be screened. What do you do next?

Having a risk assessment plan is crucial

Given increasing demands, limited time, and the abundance of information to be discussed with patients, primary care physicians may find it challenging to assess breast cancer risk, consider genetics testing for appropriate individuals, and counsel patients about risk management options. The process has become even more complex since the expansion in genetics knowledge and the advent of multigene panel testing. Not only is risk assessment crucial for this woman and her daughters, and for other patients, but a delay in diagnosing and treating breast cancer in patients with hereditary and familial cancer risks may represent a worrisome new trend in medical litigation.1,2 Clinicians must have a process in place for assessing risk in all patients and treating them appropriately.

The American Cancer Society (ACS) estimated that 252,710 cases of breast cancer would be diagnosed in 2017, leading to 40,610 deaths.3 Twelve percent to 14% of breast cancers are thought to be related to hereditary cancer predisposition syndromes.4–8 This means that, every year, almost 35,000 cases of breast cancer are attributable to hereditary risk. These cases can be detected early with enhanced surveillance, which carries the highest chance for cure, or prevented with risk-reducing surgery in identified genetic mutation carriers. Each child of a person with a genetic mutation predisposing to breast cancer has a 50% chance of inheriting the mutation and having a very high risk of cancer.
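The hereditary-case estimate can be checked with simple arithmetic. The short Python sketch below multiplies the ACS case estimate by the 12% to 14% range quoted above; the function and variable names are illustrative only and do not come from any cited source.

```python
# Reproduce the hereditary-case estimate from the figures quoted in the text.
ACS_ESTIMATED_CASES_2017 = 252_710   # ACS estimate of breast cancer cases for 2017
HEREDITARY_FRACTION_LOW = 0.12       # lower bound of the quoted range
HEREDITARY_FRACTION_HIGH = 0.14      # upper bound of the quoted range


def hereditary_case_range(total_cases, low_fraction, high_fraction):
    """Return (low, high) estimated annual cases attributable to hereditary risk."""
    return round(total_cases * low_fraction), round(total_cases * high_fraction)


low_cases, high_cases = hereditary_case_range(
    ACS_ESTIMATED_CASES_2017, HEREDITARY_FRACTION_LOW, HEREDITARY_FRACTION_HIGH
)
print(f"{low_cases:,} to {high_cases:,} cases per year")
```

The upper end of this range corresponds to the figure of roughly 35,000 cases cited in the text.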

In this patient’s case, basic information is collected about her cancer-related personal and family history.

Asking a few key questions can help in stratifying risk:

- Have you or anyone in your family had cancer? What type, and at what age?

- If breast cancer, did it involve both breasts, or was it triple-negative?

- Is there a family history of ovarian cancer?

- Is there a family history of male breast cancer?

- Is there a family history of metastatic prostate cancer?

- Are you of Ashkenazi Jewish ethnicity?

- Have you or anyone in your family ever had genetics testing for cancer?

The hallmarks of hereditary cancer are multiple cancers in an individual or family; young age at diagnosis; and ovarian, pancreatic, or another rare cancer. Metastatic prostate cancer was added as a red flag for hereditary risk after a recent large series found that 11.8% of men with metastatic prostate cancer harbor germline mutations.9
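As a rough illustration, the key questions and red flags above could be captured in a simple checklist. The Python sketch below is a hypothetical teaching aid, not a validated clinical tool: the class name, field names, and example patient are assumptions made for illustration, and the only threshold used (diagnosis before age 50) is the one mentioned in the case.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HistoryAnswers:
    """Answers to the screening questions above (field names are hypothetical)."""
    age_at_breast_cancer_diagnosis: Optional[int] = None
    bilateral_breast_cancer: bool = False
    triple_negative_breast_cancer: bool = False
    family_ovarian_cancer: bool = False
    family_male_breast_cancer: bool = False
    family_metastatic_prostate_cancer: bool = False
    ashkenazi_jewish_ancestry: bool = False


def hereditary_red_flags(answers: HistoryAnswers) -> List[str]:
    """List the red flags present; any flag suggests considering genetics referral."""
    flags = []
    age = answers.age_at_breast_cancer_diagnosis
    if age is not None and age < 50:
        flags.append("breast cancer diagnosed before age 50")
    if answers.bilateral_breast_cancer:
        flags.append("bilateral breast cancer")
    if answers.triple_negative_breast_cancer:
        flags.append("triple-negative breast cancer")
    if answers.family_ovarian_cancer:
        flags.append("family history of ovarian cancer")
    if answers.family_male_breast_cancer:
        flags.append("family history of male breast cancer")
    if answers.family_metastatic_prostate_cancer:
        flags.append("family history of metastatic prostate cancer")
    if answers.ashkenazi_jewish_ancestry:
        flags.append("Ashkenazi Jewish ancestry")
    return flags


# Hypothetical patient used only to exercise the checklist.
example = HistoryAnswers(
    age_at_breast_cancer_diagnosis=42,
    triple_negative_breast_cancer=True,
    family_metastatic_prostate_cancer=True,
    ashkenazi_jewish_ancestry=True,
)
for flag in hereditary_red_flags(example):
    print("-", flag)
```

A checklist like this only prompts the conversation; the decision to refer still rests on the published guidelines and clinical judgment.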


- Phillips RL Jr, Bartholomew LA, Dovey SM, Fryer GE Jr, Miyoshi TJ, Green LA. Learning from malpractice claims about negligent, adverse events in primary care in the United States. Qual Saf Health Care. 2004;13(2):121–126.

- Saber Tehrani AS, Lee H, Mathews SC, et al. 25-year summary of US malpractice claims for diagnostic errors 1986–2010: an analysis from the National Practitioner Data Bank. BMJ Qual Saf. 2013;22(8):672–680.

- American Cancer Society. Breast Cancer Facts & Figures 2017-2018. https://www.cancer.org/content/dam/cancer-org/research/cancer-facts-and-statistics/breast-cancer-facts-and-figures/breast-cancer-facts-and-figures-2017-2018.pdf. Published 2017. Accessed December 28, 2017.

- Tung N, Battelli C, Allen B, et al. Frequency of mutations in individuals with breast cancer referred for BRCA1 and BRCA2 testing using next-generation sequencing with a 25-gene panel. Cancer. 2015;121(1):25–33.

- Tung N, Lin NU, Kidd J, et al. Frequency of germline mutations in 25 cancer susceptibility genes in a sequential series of patients with breast cancer. J Clin Oncol. 2016;34(13):1460–1468.

- Kurian AW, Hare EE, Mills MA, et al. Clinical evaluation of a multiple-gene sequencing panel for hereditary cancer risk assessment. J Clin Oncol. 2014;32(19):2001–2009.

- Easton DF, Pharoah PD, Antoniou AC, et al. Gene-panel sequencing and the prediction of breast-cancer risk. N Engl J Med. 2015;372(23):2243–2257.

- Yurgelun MB, Allen B, Kaldate RR, et al. Identification of a variety of mutations in cancer predisposition genes in patients with suspected Lynch syndrome. Gastroenterology. 2015;149(3):604–613.e20.

- Pritchard CC, Mateo J, Walsh MF, et al. Inherited DNA-repair gene mutations in men with metastatic prostate cancer. N Engl J Med. 2016;375(5):443–453.

- Mavaddat N, Barrowdale D, Andrulis IL, et al; Consortium of Investigators of Modifiers of BRCA1/2. Pathology of breast and ovarian cancers among BRCA1 and BRCA2 mutation carriers: results from the Consortium of Investigators of Modifiers of BRCA1/2 (CIMBA). Cancer Epidemiol Biomarkers Prev. 2012;21(1):134–147.

- Struewing JP, Hartge P, Wacholder S, et al. The risk of cancer associated with specific mutations of BRCA1 and BRCA2 among Ashkenazi Jews. N Engl J Med. 1997;336(20):1401–1408.

- National Comprehensive Cancer Network. NCCN Clinical Practice Guidelines in Oncology (NCCN Guidelines): Genetic/Familial High-Risk Assessment: Breast and Ovarian. Version 1.2018. https://www.nccn.org. Accessed December 28, 2017.

- Saslow D, Boetes C, Burke W, et al; American Cancer Society Breast Cancer Advisory Group. American Cancer Society guidelines for breast screening with MRI as an adjunct to mammography. CA Cancer J Clin. 2007;57(2):75–89.

- Heather JM, Chain B. The sequence of sequencers: the history of sequencing DNA. Genomics. 2016;107(1):1–8.

- American College of Obstetricians and Gynecologists Committee on Practice Bulletins-Gynecology. ACOG Practice Bulletin No. 182: Hereditary breast and ovarian cancer syndrome. Obstet Gynecol. 2017;130(3):e110–e126.

- Mester JL, Schreiber AH, Moran RT. Genetic counselors: your partners in clinical practice. Cleve Clin J Med. 2012;79(8):560–568.

- Smith M, Mester J, Eng C. How to spot heritable breast cancer: a primary care physician’s guide. Cleve Clin J Med. 2014;81(1):31–40.

- Welsh JL, Hoskin TL, Day CN, et al. Clinical decision-making in patients with variant of uncertain significance in BRCA1 or BRCA2 genes. Ann Surg Oncol. 2017;24(10):3067–3072.

- Kurian AW, Li Y, Hamilton AS, et al. Gaps in incorporating germline genetic testing into treatment decision-making for early-stage breast cancer. J Clin Oncol. 2017;35(20):2232–2239.

- Tung N, Domchek SM, Stadler Z, et al. Counselling framework for moderate-penetrance cancer-susceptibility mutations. Nat Rev Clin Oncol. 2016;13(9):581–588.

- Yu PP, Vose JM, Hayes DF. Genetic cancer susceptibility testing: increased technology, increased complexity. J Clin Oncol. 2015;33(31):3533–3534.

- Pederson HJ, Gopalakrishnan D, Noss R, Yanda C, Eng C, Grobmyer SR. Impact of multigene panel testing on surgical decision making in breast cancer patients. J Am Coll Surg. 2018;226(4):560–565.

- Robson ME, Bradbury AR, Arun B, et al. American Society of Clinical Oncology policy statement update: genetic and genomic testing for cancer susceptibility. J Clin Oncol. 2015;33(31):3660–3667.

- Preventive care benefits for women: What Marketplace health insurance plans cover. HealthCare.gov. https://www.healthcare.gov/coverage/what-marketplace-plans-cover/. Accessed May 15, 2018.

- Centers for Medicare & Medicaid Services. The Center for Consumer Information & Insurance Oversight: Affordable Care Act Implementation FAQs – Set 12. https://www.cms.gov/CCIIO/Resources/Fact-Sheets-and-FAQs/aca_implementation_faqs12.html. Accessed May 15, 2018.

- US Preventive Services Task Force. Final Recommendation Statement: BRCA-Related Cancer: Risk Assessment, Genetic Counseling, and Genetic Testing. https://www.uspreventiveservicestaskforce.org/Page/Document/RecommendationStatementFinal/brca-related-cancer-risk-assessment-genetic-counseling-and-genetic-testing. Published December 2013. Accessed May 15, 2018.

- Kuhl CK, Schrading S, Leutner CC, et al. Mammography, breast ultrasound, and magnetic resonance imaging for surveillance of women at high familial risk for breast cancer. J Clin Oncol. 2005;23(33):8469–8476.

- Lehman CD, Blume JD, Weatherall P, et al; International Breast MRI Consortium Working Group. Screening women at high risk for breast cancer with mammography and magnetic resonance imaging. Cancer. 2005;103(9):1898–1905.

- Kriege M, Brekelmans CT, Boetes C, et al; Magnetic Resonance Imaging Screening Study Group. Efficacy of MRI and mammography for breast-cancer screening in women with a familial or genetic predisposition. N Engl J Med. 2004;351(5):427–437.

- Pederson HJ, Padia SA, May M, Grobmyer S. Managing patients at genetic risk of breast cancer. Cleve Clin J Med. 2016;83(3):199–206.

- Moyer VA; US Preventive Services Task Force. Risk assessment, genetic counseling, and genetic testing for BRCA-related cancer in women: US Preventive Services Task Force recommendation statement. Ann Intern Med. 2014;160(4):271–281.

- American Society of Breast Surgeons. Consensus Guideline on Hereditary Genetic Testing for Patients With and Without Breast Cancer. Columbia, MD: American Society of Breast Surgeons. https://www.breastsurgeons.org/new_layout/about/statements/PDF_Statements/BRCA_Testing.pdf. Published March 14, 2017. Accessed December 28, 2017.


Take-home points

- The best genetics test is a good family history, updated annually

- Each year, 35,000 breast cancers are attributable to hereditary risk

- It is crucial to identify families at risk for hereditary breast cancer early, as cancers may begin in a woman's 30s; screening begins at age 25

- Multigene panel testing is efficient and cost-effective

- For patients who have highly penetrant pathogenic variants and are of childbearing age, preimplantation genetics diagnosis is an option

Ovarian masses: Surgery or surveillance?

A meaningful evolution has occurred over the past 30 years in the evaluation of ovarian tumors. In the 1980s, any palpable ovarian tumor was recommended for surgical removal.1 In the early 2000s, studies showed that unilocular cysts were at very low risk for malignancy, and surveillance was recommended.2 In the following decade, septate cysts were added to the list of ovarian tumors unlikely to be malignant, and nonsurgical therapy was suggested.3 It is estimated that 10% of women will undergo surgery for an adnexal mass in their lifetime, despite the fact that only 1 in 6 (13%–21%) of these masses is found to be malignant.4,5

A comprehensive, morphology-based pelvic ultrasonography is the first and most important step in evaluating an ovarian tumor’s risk of malignancy to determine whether surgery or surveillance is required.

Ovarian cancer continues to be the leading cause of gynecologic cancer death. Although gynecologic oncologists achieve superior surgical and cancer outcomes, they perform only 40% of initial ovarian cancer surgeries.6 Premenopausal and menopausal ovarian tumors differ in cause and consequence. Only 15% of premenopausal tumors are malignant, most commonly germ cell tumors, borderline ovarian tumors, and epithelial ovarian cancers. Tumors in menopausal women are less common but are more likely to be malignant: up to 50% of tumors in this population are malignant. The most common of these malignancies are epithelial ovarian cancers, cancers metastatic to the ovary, and malignant stromal tumors.

Effective and evidence-based preoperative evaluations are available to help the clinician estimate a tumor’s risk of malignancy and determine which tumors are appropriate for referral to a specialist for surgery.

The actual incidence and prevalence of ovarian tumors are not known. From a review of almost 40,000 ultrasonography scans performed in the University of Kentucky Ovarian Cancer Screening Program, the estimated incidence and prevalence of ovarian abnormalities are 8.2 per 100 women annually and 17%, respectively.7 Seventy percent of these abnormalities have a unilocular or simple septate morphology and are at low risk for malignancy.7 The remaining 30% of abnormalities are high risk, although this represents only 9% of the total population evaluated. Since the vast majority of these abnormalities are expected to be asymptomatic, most will go unrecognized in the general population. For women who have an ovarian abnormality on ultrasonography, the majority will be at low risk for malignancy and will not require surgery.

Ovarian ultrasonography plus morphologic scoring comprise a comprehensive approach

The recently published recommendations of the First International Consensus Conference report on adnexal masses are summarized in TABLE 1.8 The expert panel reviewed the evidence and concluded that effective ultrasonography strategies exist and are well validated, and that low-risk asymptomatic ovarian cysts do not require surgical removal.

While no single ultrasonographic finding can differentiate a benign from a malignant mass, morphologic scoring systems improve our ability to estimate a tumor’s malignant potential. In the United States, most practitioners in women’s health have ready access to gynecologic ultrasonography, but individual training and proficiency vary. Since not everyone is an expert sonographer, it is useful to employ an objective strategy when evaluating an ovarian tumor. The focus of a comprehensive ovarian ultrasonography is to recognize morphologic patterns that reflect a tumor’s malignant potential. While tumor volume is useful, tumor morphology is the most prognostic feature.

International Ovarian Tumor Analysis group

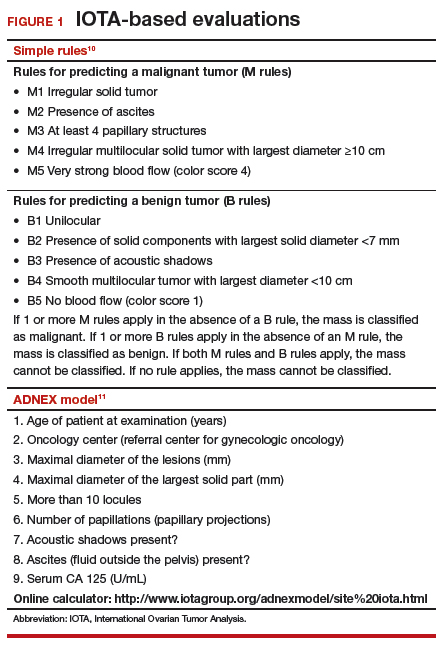

The International Ovarian Tumor Analysis (IOTA) group has published extensively on sonographic definitions and patterns that categorize tumors based on appearance.9 Simple rules and the ADNEX risk model are 2 of the group’s approaches (FIGURE 1).10,11 Both methods have been validated as effective for differentiating benign from malignant ovarian tumors, but neither has been used to study serial changes in ovarian morphology.

Regardless of the strategy employed, 25% of ovarian ultrasonography evaluations will be interpreted as “indeterminate” or “risk unknown.”10 The IOTA strategies have been successfully used in Europe for years, but they have not yet been studied or adopted in the United States.

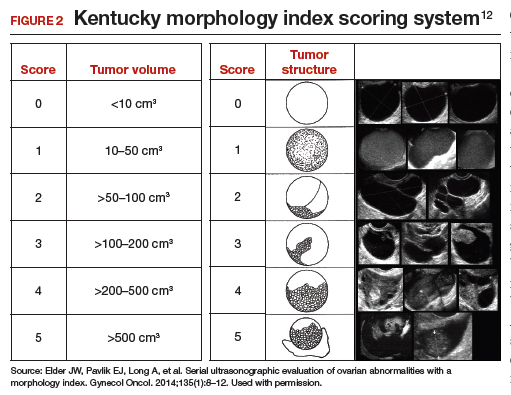

Kentucky morphology index

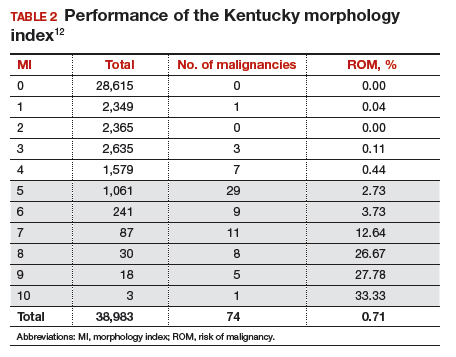

The morphology index (MI) from the University of Kentucky is an ultrasonography-based scoring system that combines tumor volume and tumor structure into a simple and effective index with a score ranging from 0 to 10 (FIGURE 2).12 A rising Kentucky MI score corresponds to a linear and predictable increase in the risk of ovarian malignancy. In a review of almost 40,000 sonograms, 85% of the malignancies had an MI score of 5 or greater (TABLE 2).12 Using this as a cutoff, the sensitivity and specificity for predicting malignancy were 86% and 98%, respectively.12
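As a rough illustration of how a cutoff such as an MI score of 5 or greater translates into sensitivity and specificity, the following Python sketch counts true and false positives in a list of scored tumors. The function names and the 7 example cases are hypothetical; they are not data from the Kentucky review and are included only to show the arithmetic.

```python
MI_CUTOFF = 5  # cutoff described in the text: MI score of 5 or greater


def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one malignant and one benign case")
    sensitivity = tp / (tp + fn)  # share of malignancies flagged by the test
    specificity = tn / (tn + fp)  # share of benign tumors correctly not flagged
    return sensitivity, specificity


def evaluate_cutoff(cases, cutoff=MI_CUTOFF):
    """cases: iterable of (mi_score, is_malignant) pairs."""
    tp = fn = tn = fp = 0
    for mi_score, is_malignant in cases:
        test_positive = mi_score >= cutoff
        if is_malignant and test_positive:
            tp += 1
        elif is_malignant:
            fn += 1
        elif test_positive:
            fp += 1
        else:
            tn += 1
    return sensitivity_specificity(tp, fn, tn, fp)


# Hypothetical cases for illustration only (not study data).
EXAMPLE_CASES = [
    (7, True), (5, True), (4, True),
    (2, False), (6, False), (1, False), (0, False),
]

if __name__ == "__main__":
    sens, spec = evaluate_cutoff(EXAMPLE_CASES)
    print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Raising or lowering `cutoff` in this sketch shows the usual trade-off: a lower cutoff flags more malignancies but also more benign tumors.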

In a comparison of the ADNEX risk model with the Kentucky MI, investigators reviewed 45,000 ultrasound results and found that the majority of cancers were categorized by the ADNEX model in the lowest 4 of the 10 risk-of-malignancy groups, compared with only 15% for the MI.13 This clustering or skew is potentially problematic, since we expect higher scores to be more predictive of cancer than lower scores. It also implies that the ADNEX model may not be useful in serial surveillance strategies. Moreover, the ADNEX model identified only 30% of early stage cancers compared with identification of 80% with use of the MI.13

Serial ultrasonography

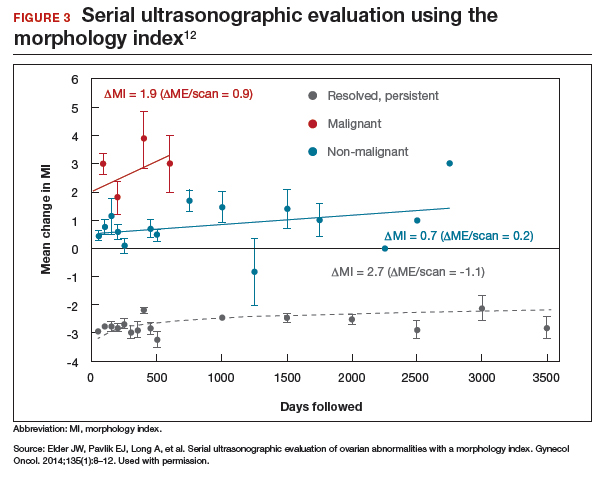

Serial ultrasonography is a concept similar to any longitudinal biomarker evaluation. In the United Kingdom Collaborative Trial of Ovarian Cancer Screening (UKCTOCS) program, the Risk of Ovarian Cancer Algorithm (ROCA) employs serial measurements of cancer antigen 125 (CA 125) to improve cancer detection. Serial ultrasonography similarly can be applied to better characterize a tumor’s physiology as well as its morphology. Over time, malignant ovarian tumors grow naturally in volume and complexity, and they do so at a rate faster than nonmalignant tumors. If this physical change can be measured objectively with ultrasonography, then serial sonography becomes a valuable diagnostic aid.

In comparing serial MI scores with clinical outcomes, studies have shown that malignant tumors exhibit a rapid increase, nonmalignant tumors have a stable or gradual rise, and resolving cysts show a decrease in MI score over time (FIGURE 3).12 An increase in the MI score of 1 or more per month is concerning for malignancy, and surgical removal should be considered. If the MI score of an asymptomatic ovarian tumor increases by less than 1 per month, it can be surveilled with intermittent ultrasonography.
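The rate rule above can be sketched in a few lines of Python. This is a minimal illustration, not a validated tool: the function names are hypothetical, the rate is computed only from the first and last scans, and a month is assumed to be 30 days, which the article does not specify.

```python
from datetime import date

DAYS_PER_MONTH = 30  # assumption: the article does not define "month" in days


def mi_change_per_month(scans):
    """Return the change in MI score per month between first and last scan.

    scans: list of (scan_date, mi_score) tuples in any order.
    """
    if len(scans) < 2:
        raise ValueError("at least two scans are needed")
    ordered = sorted(scans)  # sorts by date first
    first_date, first_score = ordered[0]
    last_date, last_score = ordered[-1]
    days = (last_date - first_date).days
    if days <= 0:
        raise ValueError("scans must be on different dates")
    return (last_score - first_score) * DAYS_PER_MONTH / days


def is_concerning(scans, threshold=1.0):
    """True when the MI score rises by 1 or more per month."""
    return mi_change_per_month(scans) >= threshold


if __name__ == "__main__":
    # Hypothetical dates; scores rise from 6 to 7 over 4 weeks.
    rising = [(date(2020, 1, 1), 6), (date(2020, 1, 29), 7)]
    # Hypothetical stable cyst rescanned after 6 months.
    stable = [(date(2020, 1, 1), 2), (date(2020, 7, 1), 2)]
    print(is_concerning(rising), is_concerning(stable))
```

A rise of 1 point over 4 weeks exceeds the threshold under the 30-day assumption, whereas an unchanged score gives a rate of 0.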

Serum biomarkers useful for determining risk, need for referral

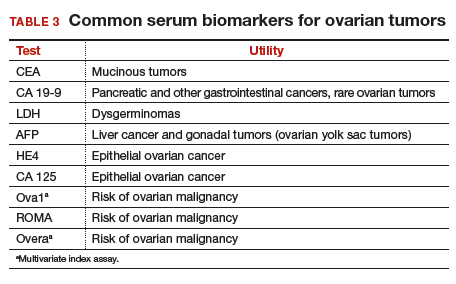

Serum biomarkers can be used to complement an ultrasonographic evaluation. They are particularly useful when surgery is recommended but the sonographic evaluation is indeterminate for malignancy risk. Many serum biomarkers are commonly used for the preoperative evaluation of an ovarian tumor or for surveillance of a malignancy following diagnosis (TABLE 3).

CA 125 is the most commonly ordered serum biomarker test for ovarian cancer. It is estimated that three‐quarters of CA 125 tests are ordered for preoperative use, which is not the US Food and Drug Administration (FDA) approved indication. Despite our clinical reliance on CA 125 as a diagnostic test prior to surgery, its utility is limited because of a low sensitivity for predicting cancer in premenopausal women and early stage disease.14,15 CA 125 specificity also varies widely, depending on patient age and other clinical factors, ranging from as low as 26% in premenopausal women to as high as 100% in postmenopausal women.16 Because CA 125 often is negative when early stage cancer is present, or positive when cancer is not, it is not recommended for preoperative use for determining whether an ovarian tumor is malignant or whether surgery is indicated. CA 125 should be used to monitor patients with a known ovarian malignancy.

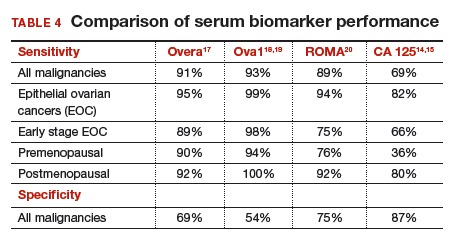

The new triage serum biomarkers, Overa, Ova1, and ROMA (Risk of Ovarian Malignancy Algorithm), are FDA cleared for preoperative use to help determine whether a woman needing surgery for an ovarian mass should be referred to a gynecologic oncologist.17–20 These tests should not be used to decide if surgery is indicated, but rather should be considered when the decision for surgery has already been made but the malignancy risk is unknown. A woman with a “high risk” result should be referred to a gynecologic oncologist, while one with a “low risk” score is very unlikely to have a malignancy and referral to a specialist is not necessary. TABLE 4 lists a comparison of the relative performance of these serum biomarkers.14,15,17–20 There are no published data on the use of serial triage biomarkers.

How to evaluate an ovarian tumor

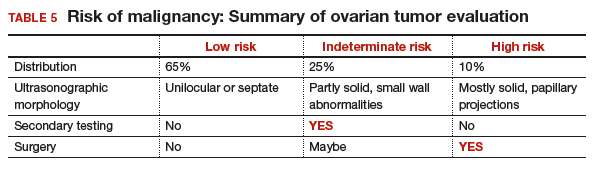

Approximately 65% of the time, ovarian cystic tumors can be identified accurately as low risk based on the initial sonographic evaluation (TABLE 5). In this scenario, the risk of malignancy is very low (<1%), no secondary testing is needed, and no surgery is recommended.1,3,21

About 10% of tumors are expected to have a high-risk morphology on ultrasonography, where the risk of malignancy exceeds 25% and referral to a gynecologic oncologist is required.

The remaining 25% of tumors cannot be accurately classified with a single ultrasonographic evaluation and are considered indeterminate.22 Indeterminate tumors require secondary testing to ascertain whether surgery is indicated. Secondary testing may consist of serial ultrasonography, magnetic resonance imaging (MRI), or serum triage biomarker testing if the decision for surgery has been made.

A 2-step process is recommended for evaluating an ovarian tumor.

Step 1. Perform a detailed ultrasonography study using a morphology-based system. Classify the tumor as:

- low risk (65%): unilocular, simple septate, no flow on color Doppler

  - simple rules: benign

  - MI score 0–3

  - no secondary testing; no referral is recommended

- high risk (10%): irregular, mostly solid, papillary projections, very strong flow on color Doppler

  - simple rules: malignant

  - MI score ≥5

  - no secondary testing; refer to a gynecologic oncologist

- indeterminate (25%): partly solid, small wall abnormalities, minimal or moderate flow on color Doppler

  - simple rules: both M and B rules apply or no rule applies

  - MI score usually 4–6

  - perform secondary testing (step 2).

Step 2. Perform secondary testing as follows:

- serum triage biomarkers if surgery is planned (Ova1, ROMA, Overa), or

- MRI, or

- serial sonography.
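The 2-step process can be expressed as a small decision sketch in Python. The function names are hypothetical, and because the MI ranges for high-risk (≥5) and indeterminate (usually 4–6) tumors overlap in the list above, this sketch assumes that a tumor is low or high risk only when the simple rules result and the MI score agree; everything else is treated as indeterminate. That tie-breaking choice is an assumption for illustration, not a rule stated in the article.

```python
def classify_step1(simple_rules, mi_score):
    """Step 1: classify a tumor from its ultrasonography findings.

    simple_rules: 'benign', 'malignant', or 'inconclusive'
    mi_score: Kentucky morphology index, 0 to 10
    """
    if not 0 <= mi_score <= 10:
        raise ValueError("MI score must be between 0 and 10")
    if simple_rules == "benign" and mi_score <= 3:
        return "low"
    if simple_rules == "malignant" and mi_score >= 5:
        return "high"
    # Assumption: any disagreement or inconclusive result is indeterminate.
    return "indeterminate"


def next_step(risk, surgery_planned=False):
    """Step 2: return the recommended actions for a risk category."""
    if risk == "low":
        return ["no secondary testing", "no referral"]
    if risk == "high":
        return ["no secondary testing", "refer to a gynecologic oncologist"]
    options = ["MRI", "serial sonography"]
    if surgery_planned:
        # Triage biomarkers apply only once surgery has been decided.
        options.insert(0, "serum triage biomarkers (Ova1, ROMA, Overa)")
    return options


if __name__ == "__main__":
    for rules, mi in [("malignant", 7), ("inconclusive", 6), ("benign", 2)]:
        risk = classify_step1(rules, mi)
        print(rules, mi, "->", risk, next_step(risk))
```

The three example inputs mirror the simple rules results and MI scores reported in the case scenarios in this article and yield high, indeterminate, and low risk, respectively.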

The 3 case scenarios that follow illustrate how the ovarian tumor evaluation process may be applied in clinical practice, with referral to a gynecologic oncologist as appropriate.

CASE 1 Postmenopausal woman with urinary symptoms and pelvic pressure

A 61-year-old woman is referred with a newly identified ovarian tumor. She has had 1 month of urinary urgency, frequency, and pelvic pressure, but she denies vaginal bleeding or fever. She has no family history of cancer. The referring physician included results of a serum CA 125 (48 U/mL; normal, ≤35 U/mL). A pelvic examination reveals a palpable, irregular mass in the anterior pelvis with limited mobility.

What would be your next step in the evaluation of this patient?

Start with ultrasonography

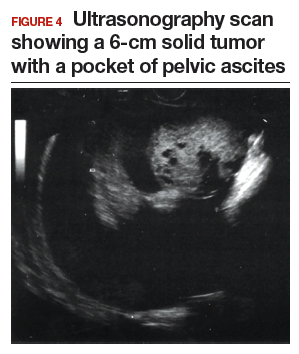

Step 1. Perform pelvic ultrasonography. In this patient, transvaginal sonography revealed a 6-cm (volume, 89 mL) mostly solid tumor (FIGURE 4). The maximum solid diameter of the tumor was 4.0 cm. There was a 20-mL pocket of pelvic ascites.

Results of morphology-based classification were as follows:

- simple rules: M1 and M5 positive; B rules: negative (malignant; high risk)

- ADNEX: 51.6% risk of malignancy (high risk)

- MI: 7 (high risk).

Step 2. Consider secondary testing. In this case, no secondary testing was recommended.

Treatment plan. The patient was referred to a gynecologic oncologist for surgery and was found to have a stage IIA serous ovarian carcinoma.

CASE 2 Woman with history of pelvic symptoms and worsening pain

A 46-year-old woman presents with worsening pelvic pain over the last month. She has a long-standing history of pelvic pain, dysmenorrhea, and dyspareunia from suspected endometriosis. She has no family history of cancer. The referring physician included the following serum biomarker results: CA 125, 48 U/mL (normal, ≤35 U/mL), and HE4, 60 pM (normal, ≤150 pM). On pelvic examination, there is a palpable mass with limited mobility in the posterior cul-de-sac.

Based on the patient’s available history, physical examination, and biomarker information, how would you proceed?

Follow the 2-step process

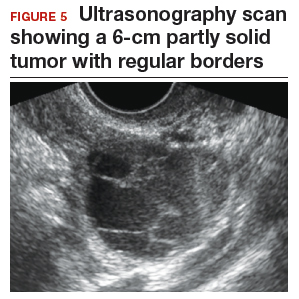

Step 1. Perform pelvic ultrasonography. Transvaginal sonography revealed a 6-cm (volume, 89 mL) partly solid tumor with regular internal borders (FIGURE 5). The maximum solid diameter of the tumor was 4.5 cm. There was no pelvic ascites.

Morphology classification was as follows:

- simple rules: M5 equivocal; B4 positive (indeterminate risk)

- ADNEX: 42.7% risk of malignancy (high risk)

- MI: 6 (indeterminate risk).

Step 2. Secondary testing was recommended for this patient. Test results were:

- repeat ultrasonography in 4 weeks with MI of 7 (volume score increase from 2 to 3, structure score unchanged at 4). Change in MI score +1 per month (high risk)

- Overa: 5.2 (high risk)

- ROMA: 11.8% (low risk).

Treatment plan. The patient was referred to a gynecologic oncologist because of an increasing MI score on serial sonography. Surgery revealed a stage IA grade 2 endometrioid adenocarcinoma of the ovary with surrounding endometriosis.

CASE 3 Woman with postmenopausal bleeding seeks medical care

A 62-year-old woman is referred with new-onset postmenopausal spotting for 1 month. She was recently prescribed antibiotics for diverticulitis. She has no family history of cancer. The referring physician included the results of a serum CA 125, which was 48 U/mL (normal, ≤35 U/mL). On pelvic examination, a mobile cystic mass is noted in the posterior cul-de-sac.

Use the stepwise protocol to sort out findings

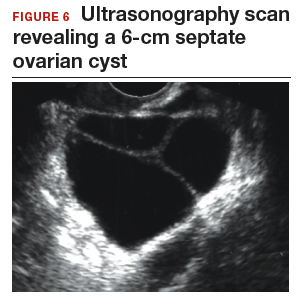

Step 1. Pelvic ultrasonography. Transvaginal sonography suggested the presence of an endometrial polyp and revealed a 6-cm (volume, 89 mL) septate ovarian cyst (FIGURE 6).

Based on morphology classification, risk was categorized as:

- simple rules: M rules negative; B2, B4, B5 positive (benign; low risk)

- ADNEX: 2.9% risk of malignancy (low risk)

- MI: 2 (low risk).

Step 2. No secondary testing was recommended in this case.

Treatment plan. The patient’s gynecologist performed a hysteroscopic polypectomy that revealed no cancer. Serial monitoring was recommended for the low-risk ovarian cyst. The next ultrasonography scan, at 6 months, was unchanged; a subsequent scan was ordered for 12 months later, and at that time the cyst had resolved.

- Barber HR, Graber EA. The PMPO syndrome (postmenopausal palpable ovary syndrome). Obstet Gynecol. 1971;38(6):921–923.

- Modesitt SC, Pavlik EJ, Ueland FR, DePriest PD, Kryscio RJ, van Nagell JR Jr. Risk of malignancy in unilocular ovarian cystic tumors less than 10 centimeters in diameter. Obstet Gynecol. 2003;102(3):594–599.

- Saunders BA, Podzielinski I, Ware RA, et al. Risk of malignancy in sonographically confirmed septated cystic ovarian tumors. Gynecol Oncol. 2010;118(3):278–282.

- Moore RG, McMeekin DS, Brown AK, et al. A novel multiple marker bioassay utilizing HE4 and CA125 for the prediction of ovarian cancer in patients with a pelvic mass. Gynecol Oncol. 2009;112(1):40–46.

- Jordan SM, Bristow RE. Ovarian cancer biomarkers as diagnostic triage tests. Current Biomarker Findings. 2013;3:35–42.

- Giede KC, Kieser K, Dodge J, Rosen B. Who should operate on patients with ovarian cancer? An evidence-based review. Gynecol Oncol. 2005;99(2):447–461.

- Pavlik EJ, Ueland FR, Miller RW, et al. Frequency and disposition of ovarian abnormalities followed with serial transvaginal ultrasonography. Obstet Gynecol. 2013;122(2 pt 1):210–217.

- Glanc P, Benacerraf B, Bourne T, et al. First International Consensus Report on adnexal masses: management recommendations. J Ultrasound Med. 2017;36(5):849–863.

- Timmerman D, Valentin L, Bourne TGH, Collins WP, Verrelst H, Vergote I; International Ovarian Tumor Analysis (IOTA) Group. Terms, definitions and measurements to describe the sonographic features of adnexal tumors: a consensus opinion from the International Ovarian Tumor Analysis (IOTA) group. Ultrasound Obstet Gynecol. 2000;16(5):500–505.

- Timmerman D, Testa AC, Bourne T, et al. Simple ultrasound-based rules for the diagnosis of ovarian cancer. Ultrasound Obstet Gynecol. 2008;31(6):681–690.

- Van Calster B, Van Hoorde K, Valentin L, et al. Evaluating the risk of ovarian cancer before surgery using the ADNEX model to differentiate between benign, borderline, early and advanced stage invasive, and secondary metastatic tumours: prospective multicentre diagnostic study. BMJ. 2014;349:g5920.

- Elder JW, Pavlik EJ, Long A, et al. Serial ultrasonographic evaluation of ovarian abnormalities with a morphology index. Gynecol Oncol. 2014;135(1):8–12.