What COVID did to MD income in 2020

, according to the Medscape Physician Compensation Report 2021: The Recovery Begins.

Almost 18,000 physicians in more than 29 specialties told Medscape about their income, hours worked, greatest challenges, and the unexpected impact of COVID-19 on their compensation.

How many physicians avoided massive losses

When the pandemic started around March 2020, “a great many physicians saw reductions in volume at first,” says Robert Pearl, MD, former CEO of the Permanente Medical Group and a professor at Stanford (Calif.) University.

Medscape’s survey report shows that a staggering 44% saw a 1%-25% reduction in patient volume, and 9% saw a 26%-50% decline. “That is indeed breathtaking,” Dr. Pearl says.

Several key factors saved many practices from hemorrhaging money, says Michael Belkin, JD, divisional vice president at Merritt Hawkins and Associates in Dallas. “Many physicians used the federal Paycheck Protection Program [PPP] to help keep themselves afloat,” he says. “A large percentage reduced their staff, which reduced their expenses, and many got some of their volume back by transitioning to telemedicine.”

In a 2020 survey for the Physicians Foundation, conducted by Merritt Hawkins, 48% of physicians said their practice had received PPP support, and most of those said the support was enough to allow them to stay open without reducing staff. Only 6% of practices that received PPP support did not stay open.

Telemedicine helped many practices

Early in the pandemic, Medicare reimbursements for telemedicine were equal to those for face-to-face visits. “Since telemedicine takes a third less time than an inpatient visit, doctors could see more patients,” Dr. Pearl says.

The switch was almost instantaneous in some practices. Within 3 days, a 200-provider multispecialty practice in Wilmington, N.C., went from not using telehealth to its being used by all physicians, the Medical Group Management Association reported. By late April, the practice was already back up to about 70% of normal overall production.

However, telemedicine could not help every specialty equally. “Generally, allergists can’t do their allergy testing virtually, and patients with mild problems probably put off visits,” Dr. Pearl says. Allergists experienced a large percentage decline in compensation, according to Medscape’s survey: their average income fell from $301,000 in the prior year to $274,000 this year.

Primary care struggled

Primary care physicians posted lower compensation than they did the prior year, but most rebounded to some degree. A study released in June 2020 projected that, even with telemedicine, primary care physicians would lose an average of $67,774 for the year.

However, Medscape’s survey found that internists’ average compensation dipped only slightly, from $251,000 in the prior year to $248,000, and family physicians’ average compensation actually rose above the prior year’s $234,000.

Pediatricians had a harder slog. Their average compensation sank from $232,000 to $221,000, according to the report. Even with telemedicine, parents of young children were not contacting the doctor. In May 2020, visits by children aged 3-5 years were down by 56%.

Many proceduralists recovered

Procedure-oriented specialties were particularly hard-hit at first, because many hospitals and some states banned all elective surgeries at the beginning of the pandemic.

“In March and April, ophthalmology practices were virtually at a standstill,” says John B. Pinto, an ophthalmology practice management consultant in San Diego. “But by the fourth quarter, operations were back to normal. Practices were fully open, and patients were coming back in.”

Medscape’s survey shows that, by year’s end, compensation was about the same as the year before for orthopedic surgeons ($511,000 in both the 2020 and 2021 reports); cardiologists actually did better ($438,000 in our 2020 report and $459,000 in 2021); and ophthalmologists’ compensation was about the same ($378,000 in our prior report and $379,000 in 2021).

Some other proceduralists, however, did not do as well. Otolaryngologists’ compensation fell to $417,000, the second-biggest percentage drop. “This may be because otolaryngologists’ chief procedures are tonsillectomies, sinus surgery, and nasal surgery, which can be put off,” Dr. Pearl says.

Anesthesiologists, who depend on surgical volume, also did not earn as much in 2020. Their compensation declined from $398,000 in our 2020 report to $378,000 in Medscape’s 2021 report.

“Not only has 70% of our revenue disappeared, but our physicians are still working every day,” an independent anesthesiology practice in Alabama told the MGMA early in the pandemic.

Plastic surgeons now the top earners

Plastic surgeons saw by far the biggest increase in compensation: their income rose 9.8% over the year before, to $526,000. This put them at the top of the list.

Dr. Pearl adds that plastic surgeons can perform their procedures in their offices, rather than in a hospital, where elective surgeries were often canceled.

Mr. Belkin says specialties other than plastic surgery had been offering more boutique cosmetic care even before the pandemic. In 2020, nonsurgical cosmetic procedures such as neurotoxin therapy, dermal filler procedures, chemical peels, and hair removal earned $3.1 billion in revenue, according to a survey by the Aesthetic Society.

Other specialties that earned more even during COVID

In Medscape’s survey, several specialties actually earned more during the pandemic than in 2019. Some specialties, such as critical care and public health, were integral in managing COVID patients and the pandemic.

However, some specialties involved in COVID care did not see an increase. Compensation for infectious disease specialists (at $245,000) and emergency medicine specialists (at $354,000) remained basically unchanged from the prior year, and for pulmonologists, it was slightly down.

Emergency departments reported decreases in volume of 40% or more early in the pandemic, according to the American College of Emergency Physicians. Patients reportedly avoided EDs for fear of contracting COVID, and car accidents declined because people ventured out less.

In this year’s report, psychiatrists saw a modest rise in compensation, to $275,000. “There has been an increase in mental health visits in the pandemic,” Dr. Pearl says. In 2020, about 4 in 10 adults in the United States reported symptoms of anxiety or depressive disorder, up from 1 in 10 adults the prior year. In addition, psychiatrists were third on the list of Merritt Hawkins’ most requested recruiting engagements.

Oncologists saw a rise in compensation, from $377,000 to $403,000. “Volume likely did not fall because cancer patients would go through with their chemotherapy in spite of the pandemic,” Dr. Pearl says. “The increase in income might have to do with the usual inflation in the cost of chemotherapy drugs.” Dr. Pinto saw the same trend for retinal surgeons, whose care also cannot be delayed.

Medscape’s survey also reports increases in compensation for rheumatologists, endocrinologists, and neurologists, but it reports small declines among dermatologists, radiologists, and gastroenterologists.

Gender-based pay gap remains in place

The gender-based pay gap in this year’s report is similar to that seen in Medscape’s report for the prior year. Men earned 27% more than women in 2021, compared with 25% more the year before. Some physicians commented that more women physicians maintained flexible or shorter work schedules to help care for children who could not attend school in person.

“Having to be a full-time physician, full-time mom, and full-time teacher during our surge was unbelievable,” a primary care pediatrician in group practice and mother of two reported in November. “I felt pulled in all directions and didn’t do anything well.”

In addition, “men dominate some specialties that seem to have seen a smaller drop in volume in the pandemic, such as emergency medicine, infectious disease, pulmonology, and oncology,” says Halee Fischer-Wright, MD, CEO of MGMA.

Employed physicians shared their employers’ pain

Employed physicians, who typically work at hospitals, shared the financial pains of their institutions, particularly in the early stages of the pandemic. In April, hospital admissions were 34.1% below prepandemic levels, according to a study published in Health Affairs. That figure had risen by June, but it was still 8.3% below prepandemic volume.

By the end of the year, many hospitals and hospital systems were in the black, thanks in large part to generous federal subsidies, but many still lost money on their operations. Altogether, 42% of them posted an operational loss in 2020, up from 23% in 2019, according to a survey by Moody’s Investors Service.

Medscape’s report shows that many employed physicians lost pay in 2020, and for many, pay had not returned to pre-COVID levels. Only 28% of primary care physicians and 32% of specialists who lost pay have seen it restored, according to the report. In addition, 15% of surveyed physicians did not receive an annual raise.

Many employed doctors are paid on the basis of relative value units (RVUs), a measure of the value of their work. In many cases, there was not enough work to reach RVU thresholds. Would hospitals and other employers lower RVU targets to address the shortfall? “I haven’t seen our clients make concessions to providers along those lines,” Mr. Belkin says.

Physicians had to work longer hours

The Medscape report also found that in 2020, physicians saw fewer patients because each visit took longer.

“With the threat of COVID, in-person visits take more time than before,” Mr. Belkin says. “Physicians and staff have to prepare the exam room after each visit, and doctors must spend more time answering patients’ questions about COVID.”

“The new protocols to keep everyone safe add time between patients, and physicians have to answer patients’ questions about the pandemic and vaccines,” Dr. Fischer-Wright says. “You might see a 20% increase in time spent just on these non–revenue-generating COVID activities.”

Physicians still like their specialty

Although 2020 was a challenging year for physicians, the percentage of those who were satisfied with their specialty choice generally did not slip from the year before. It actually rose for several specialties – most notably, rheumatology, pulmonology, physical medicine and rehabilitation, and nephrology.

One specialty saw a decline in its members’ satisfaction with their specialty choice: public health and preventive medicine, which plummeted 16 percentage points to 67%, putting it at the bottom of the list.

Even before the pandemic, many public health departments were chronically underfunded. This problem may have been exacerbated by the pressure of keeping up with COVID reporting and testing responsibilities.

Conclusion

Although 2020 was a wild ride for many physicians, many came out of it with only minor reductions in overall compensation, and some saw increases. Still, some specialties and many individuals experienced terrible financial stress and had to make changes in their lives and their spending in order to stay afloat.

“The biggest inhibitor to getting back to normal had to do with doctors who did not want to return because they did not want to risk getting COVID,” Dr. Pinto reports. But he notes that by February 2021 most doctors were completely vaccinated and could feel safe again.

A version of this article first appeared on Medscape.com.

, according to the Medscape Physician Compensation Report 2021: The Recovery Begins.

Almost 18,000 physicians in more than 29 specialties told Medscape about their income, hours worked, greatest challenges, and the unexpected impact of COVID-19 on their compensation.

How many physicians avoided massive losses

When the pandemic started around March 2020, “a great many physicians saw reductions in volume at first,” says Robert Pearl, MD, former CEO of the Permanente Medical Group and a professor at Stanford (Calif.) University.

Medscape’s survey report shows that a staggering 44% saw a 1%-25% reduction in patient volume, and 9% saw a 26%-50% decline. “That is indeed breathtaking,” Dr. Pearl says.

Several key factors saved many practices from hemorrhaging money, says Michael Belkin, JD, divisional vice president at Merritt Hawkins and Associates in Dallas. “Many physicians used the federal Paycheck Protection Program [PPP] to help keep themselves afloat,” he says. “A large percentage reduced their staff, which reduced their expenses, and many got some of their volume back by transitioning to telemedicine.”

In a 2020 survey for the Physicians Foundation, conducted by Merritt Hawkins, 48% of physicians said their practice had received PPP support, and most of those said the support was enough to allow them to stay open without reducing staff. Only 6% of practices that received PPP support did not stay open.

Telemedicine helped many practices

Early in the pandemic, Medicare reimbursements for telemedicine were equal with those for face-to-face visits. “Since telemedicine takes a third less time than an inpatient visit, doctors could see more patients,” Dr. Pearl says.

The switch was almost instantaneous in some practices. Within 3 days, a 200-provider multispecialty practice in Wilmington, N.C., went from not using telehealth to its being used by all physicians, the Medical Group Management Association reported. By late April, the practice was already back up to about 70% of normal overall production.

However, telemedicine could not help every specialty equally. “Generally, allergists can’t do their allergy testing virtually, and patients with mild problems probably put off visits,” Dr. Pearl says. Allergists experienced a large percentage decline in compensation, according to Medscape’s survey. For some, income fell from $301,000 the prior year to $274,000 this year.

Primary care struggled

Primary care physicians posted lower compensation than they did the prior year, but most rebounded to some degree. A study released in June 2020 projected that, even with telemedicine, primary care physicians would lose an average of $67,774 for the year.

However, Medscape’s survey found that internists’ average compensation declined from $251,000 in the prior year to $248,000, and average family physicians’ compensation actually rose from $234,000.

Pediatricians had a harder slog. Their average compensation sank from $232,000 to $221,000, according to the report. Even with telemedicine, parents of young children were not contacting the doctor. In May 2020, visits by children aged 3-5 years were down by 56%.

Many proceduralists recovered

Procedure-oriented specialties were particularly hard-hit at first, because many hospitals and some states banned all elective surgeries at the beginning of the pandemic.

“In March and April, ophthalmology practices were virtually at a standstill,” says John B. Pinto, an ophthalmology practice management consultant in San Diego. “But by the fourth quarter, operations were back to normal. Practices were fully open, and patients were coming back in.”

Medscape’s survey shows that, by year’s end, compensation was about the same as the year before for orthopedic surgeons ($511,000 in both the 2020 and 2021 reports); cardiologists actually did better ($438,000 in our 2020 report and $459,000 in 2021); and ophthalmologists’ compensation was about the same ($378,000 in our prior report and $379,000 in 2021).

Some other proceduralists, however, did not do as well. Otolaryngologists’ compensation fell to $417,000, the second-biggest percentage drop. “This may be because otolaryngologists’ chief procedures are tonsillectomies, sinus surgery, and nasal surgery, which can be put off,” Dr. Pearl says.

Anesthesiologists, who depend on surgical volume, also did not earn as much in 2020. Their compensation declined from $398,000 in our 2020 report to $378,000 in Medscape’s 2021 report.

“Not only has 70% of our revenue disappeared, but our physicians are still working every day,” an independent anesthesiology practice in Alabama told the MGMA early in the pandemic.

Plastic surgeons now the top earners

The biggest increase in compensation by far was made by plastic surgeons, whose income rose 9.8% over the year before, to $526,000. This put them at the top of the list

Dr. Pearl adds that plastic surgeons can perform their procedures in their offices, rather than in a hospital, where elective surgeries were often canceled.

Mr. Belkin says specialties other than plastic surgery had been offering more boutique cosmetic care even before the pandemic. In 2020, nonsurgical cosmetic procedures such as neurotoxin therapy, dermal filler procedures, chemical peels, and hair removal earned $3.1 billion in revenue, according to a survey by the Aesthetic Society.

Other specialties that earned more even during COVID

In Medscape’s survey, several specialties actually earned more during the pandemic than in 2019. Some specialties, such as critical care and public health, were integral in managing COVID patients and the pandemic.

However, some specialties involved in COVID care did not see an increase. Compensation for infectious disease specialists (at $245,000) and emergency medicine specialists (at $354,000) remained basically unchanged from the prior year, and for pulmonologists, it was slightly down.

Emergency departments reported decreases in volume of 40% or more early in the pandemic, according to the American College of Emergency Physicians. It was reported that patients were avoiding EDs for fear of contracting COVID, and car accidents were down because people ventured out less.

In this year’s report, psychiatrists saw a modest rise in compensation, to $275,000. “There has been an increase in mental health visits in the pandemic,” Dr. Pearl says. In 2020, about 4 in 10 adults in the United States reported symptoms of anxiety or depressive disorder, up from 1 in 10 adults the prior year. In addition, psychiatrists were third on the list of Merritt Hawkins’ most requested recruiting engagements.

Oncologists saw a rise in compensation, from $377,000 to $403,000. “Volume likely did not fall because cancer patients would go through with their chemotherapy in spite of the pandemic,” Dr. Pearl says. “The increase in income might have to do with the usual inflation in the cost of chemotherapy drugs.” Dr. Pinto saw the same trend for retinal surgeons, whose care also cannot be delayed.

Medscape’s survey also reports increases in compensation for rheumatologists, endocrinologists, and neurologists, but it reports small declines among dermatologists, radiologists, and gastroenterologists.

Gender-based pay gap remains in place

The gender-based pay gap in this year’s report is similar to that seen in Medscape’s report for the prior year. Men earned 27% more than women in 2021, compared with 25% more the year before. Some physicians commented that more women physicians maintained flexible or shorter work schedules to help with children who could not go into school.

“Having to be a full-time physician, full-time mom, and full-time teacher during our surge was unbelievable,” a primary care pediatrician in group practice and mother of two reported in November. “I felt pulled in all directions and didn’t do anything well.”

In addition, “men dominate some specialties that seem to have seen a smaller drop in volume in the pandemic, such as emergency medicine, infectious disease, pulmonology, and oncology,” says Halee Fischer-Wright, MD, CEO of MGMA.

Employed physicians shared their employers’ pain

Employed physicians, who typically work at hospitals, shared the financial pains of their institutions, particularly in the early stages of the pandemic. In April, hospital admissions were 34.1% below prepandemic levels, according to a study published in Health Affairs. That figure had risen by June, but it was still 8.3% below prepandemic volume.

By the end of the year, many hospitals and hospital systems were in the black, thanks in large part to generous federal subsidies, but actual operations still lost money for the year. Altogether, 42% of them posted an operational loss in 2020, up from the 23% in 2019, according to a survey by Moody’s Investors Service.

Medscape’s report shows that many employed physicians lost pay in 2020, and for many, pay had not returned to pre-COVID levels. Only 28% of primary care physicians and 32% of specialists who lost pay have seen it restored, according to the report. In addition, 15% of surveyed physicians did not receive an annual raise.

Many employed doctors are paid on the basis of relative value units (RVUs), which is a measure of the value of their work. In many cases, there was not enough work to reach RVU thresholds. Would hospitals and other employers lower RVU targets to meet the problem? “I haven’t seen our clients make concessions to providers along those lines,” Mr. Belkin says.

Physicians had to work longer hours

The Medscape report also found that in 2020, physicians saw fewer patients because each visit took longer.

“With the threat of COVID, in-person visits take more time than before,” Mr. Belkin says. “Physicians and staff have to prepare the exam room after each visit, and doctors must spend more time answering patients’ questions about COVID.”

“The new protocols to keep everyone safe add time between patients, and physicians have to answer patients’ questions about the pandemic and vaccines,” Dr. Fischer-Wright says. “You might see a 20% increase in time spent just on these non–revenue-generating COVID activities.”

Physicians still like their specialty

Although 2020 was a challenging year for physicians, the percentage of those who were satisfied with their specialty choice generally did not slip from the year before. It actually rose for several specialties – most notably, rheumatology, pulmonology, physical medicine and rehabilitation, and nephrology.

One specialty saw a decline in satisfaction with their specialty choice, and that was public health and preventive medicine, which plummeted 16 percentage points to 67% – putting it at the bottom of the list.

Even before the pandemic, many public health departments were chronically underfunded. This problem was possibly exacerbated by the pressures to keep up with COVID reporting and testing responsibilities.

Conclusion

Although 2020 was a wild ride for many physicians, many came out of it with only minor reductions in overall compensation, and some saw increases. Still, some specialties and many individuals experienced terrible financial stress and had to make changes in their lives and their spending in order to stay afloat.

“The biggest inhibitor to getting back to normal had to do with doctors who did not want to return because they did not want to risk getting COVID,” Dr. Pinto reports. But he notes that by February 2021 most doctors were completely vaccinated and could feel safe again.

A version of this article first appeared on Medscape.com.

, according to the Medscape Physician Compensation Report 2021: The Recovery Begins.

Almost 18,000 physicians in more than 29 specialties told Medscape about their income, hours worked, greatest challenges, and the unexpected impact of COVID-19 on their compensation.

How many physicians avoided massive losses

When the pandemic started around March 2020, “a great many physicians saw reductions in volume at first,” says Robert Pearl, MD, former CEO of the Permanente Medical Group and a professor at Stanford (Calif.) University.

Medscape’s survey report shows that a staggering 44% saw a 1%-25% reduction in patient volume, and 9% saw a 26%-50% decline. “That is indeed breathtaking,” Dr. Pearl says.

Several key factors saved many practices from hemorrhaging money, says Michael Belkin, JD, divisional vice president at Merritt Hawkins and Associates in Dallas. “Many physicians used the federal Paycheck Protection Program [PPP] to help keep themselves afloat,” he says. “A large percentage reduced their staff, which reduced their expenses, and many got some of their volume back by transitioning to telemedicine.”

In a 2020 survey for the Physicians Foundation, conducted by Merritt Hawkins, 48% of physicians said their practice had received PPP support, and most of those said the support was enough to allow them to stay open without reducing staff. Only 6% of practices that received PPP support did not stay open.

Telemedicine helped many practices

Early in the pandemic, Medicare reimbursements for telemedicine were equal with those for face-to-face visits. “Since telemedicine takes a third less time than an inpatient visit, doctors could see more patients,” Dr. Pearl says.

The switch was almost instantaneous in some practices. Within 3 days, a 200-provider multispecialty practice in Wilmington, N.C., went from not using telehealth to its being used by all physicians, the Medical Group Management Association reported. By late April, the practice was already back up to about 70% of normal overall production.

However, telemedicine could not help every specialty equally. “Generally, allergists can’t do their allergy testing virtually, and patients with mild problems probably put off visits,” Dr. Pearl says. Allergists experienced a large percentage decline in compensation, according to Medscape’s survey. For some, income fell from $301,000 the prior year to $274,000 this year.

Primary care struggled

Primary care physicians posted lower compensation than they did the prior year, but most rebounded to some degree. A study released in June 2020 projected that, even with telemedicine, primary care physicians would lose an average of $67,774 for the year.

However, Medscape’s survey found that internists’ average compensation declined from $251,000 in the prior year to $248,000, and average family physicians’ compensation actually rose from $234,000.

Pediatricians had a harder slog. Their average compensation sank from $232,000 to $221,000, according to the report. Even with telemedicine, parents of young children were not contacting the doctor. In May 2020, visits by children aged 3-5 years were down by 56%.

Many proceduralists recovered

Procedure-oriented specialties were particularly hard-hit at first, because many hospitals and some states banned all elective surgeries at the beginning of the pandemic.

“In March and April, ophthalmology practices were virtually at a standstill,” says John B. Pinto, an ophthalmology practice management consultant in San Diego. “But by the fourth quarter, operations were back to normal. Practices were fully open, and patients were coming back in.”

Medscape’s survey shows that, by year’s end, compensation was about the same as the year before for orthopedic surgeons ($511,000 in both the 2020 and 2021 reports); cardiologists actually did better ($438,000 in our 2020 report and $459,000 in 2021); and ophthalmologists’ compensation was about the same ($378,000 in our prior report and $379,000 in 2021).

Some other proceduralists, however, did not do as well. Otolaryngologists’ compensation fell to $417,000, the second-biggest percentage drop. “This may be because otolaryngologists’ chief procedures are tonsillectomies, sinus surgery, and nasal surgery, which can be put off,” Dr. Pearl says.

Anesthesiologists, who depend on surgical volume, also did not earn as much in 2020. Their compensation declined from $398,000 in our 2020 report to $378,000 in Medscape’s 2021 report.

“Not only has 70% of our revenue disappeared, but our physicians are still working every day,” an independent anesthesiology practice in Alabama told the MGMA early in the pandemic.

Plastic surgeons now the top earners

The biggest increase in compensation by far was made by plastic surgeons, whose income rose 9.8% over the year before, to $526,000. This put them at the top of the list

Dr. Pearl adds that plastic surgeons can perform their procedures in their offices, rather than in a hospital, where elective surgeries were often canceled.

Mr. Belkin says specialties other than plastic surgery had been offering more boutique cosmetic care even before the pandemic. In 2020, nonsurgical cosmetic procedures such as neurotoxin therapy, dermal filler procedures, chemical peels, and hair removal earned $3.1 billion in revenue, according to a survey by the Aesthetic Society.

Other specialties that earned more even during COVID

In Medscape’s survey, several specialties actually earned more during the pandemic than in 2019. Some specialties, such as critical care and public health, were integral in managing COVID patients and the pandemic.

However, some specialties involved in COVID care did not see an increase. Compensation for infectious disease specialists (at $245,000) and emergency medicine specialists (at $354,000) remained basically unchanged from the prior year, and for pulmonologists, it was slightly down.

Emergency departments reported decreases in volume of 40% or more early in the pandemic, according to the American College of Emergency Physicians. It was reported that patients were avoiding EDs for fear of contracting COVID, and car accidents were down because people ventured out less.

In this year’s report, psychiatrists saw a modest rise in compensation, to $275,000. “There has been an increase in mental health visits in the pandemic,” Dr. Pearl says. In 2020, about 4 in 10 adults in the United States reported symptoms of anxiety or depressive disorder, up from 1 in 10 adults the prior year. In addition, psychiatrists were third on the list of Merritt Hawkins’ most requested recruiting engagements.

Oncologists saw a rise in compensation, from $377,000 to $403,000. “Volume likely did not fall because cancer patients would go through with their chemotherapy in spite of the pandemic,” Dr. Pearl says. “The increase in income might have to do with the usual inflation in the cost of chemotherapy drugs.” Dr. Pinto saw the same trend for retinal surgeons, whose care also cannot be delayed.

Medscape’s survey also reports increases in compensation for rheumatologists, endocrinologists, and neurologists, but it reports small declines among dermatologists, radiologists, and gastroenterologists.

Gender-based pay gap remains in place

The gender-based pay gap in this year’s report is similar to that seen in Medscape’s report for the prior year. Men earned 27% more than women in 2021, compared with 25% more the year before. Some physicians commented that more women physicians maintained flexible or shorter work schedules to help with children who could not go into school.

“Having to be a full-time physician, full-time mom, and full-time teacher during our surge was unbelievable,” a primary care pediatrician in group practice and mother of two reported in November. “I felt pulled in all directions and didn’t do anything well.”

In addition, “men dominate some specialties that seem to have seen a smaller drop in volume in the pandemic, such as emergency medicine, infectious disease, pulmonology, and oncology,” says Halee Fischer-Wright, MD, CEO of MGMA.

Employed physicians shared their employers’ pain

Employed physicians, who typically work at hospitals, shared the financial pains of their institutions, particularly in the early stages of the pandemic. In April, hospital admissions were 34.1% below prepandemic levels, according to a study published in Health Affairs. That figure had risen by June, but it was still 8.3% below prepandemic volume.

By the end of the year, many hospitals and hospital systems were in the black, thanks in large part to generous federal subsidies, but actual operations still lost money for the year. Altogether, 42% of them posted an operational loss in 2020, up from the 23% in 2019, according to a survey by Moody’s Investors Service.

Medscape’s report shows that many employed physicians lost pay in 2020, and for many, pay had not returned to pre-COVID levels. Only 28% of primary care physicians and 32% of specialists who lost pay have seen it restored, according to the report. In addition, 15% of surveyed physicians did not receive an annual raise.

Many employed doctors are paid on the basis of relative value units (RVUs), a measure of the value of their work. In many cases, there was not enough work to reach RVU thresholds. Would hospitals and other employers lower RVU targets in response? “I haven’t seen our clients make concessions to providers along those lines,” Mr. Belkin says.

Physicians had to work longer hours

The Medscape report also found that in 2020, physicians saw fewer patients because each visit took longer.

“With the threat of COVID, in-person visits take more time than before,” Mr. Belkin says. “Physicians and staff have to prepare the exam room after each visit, and doctors must spend more time answering patients’ questions about COVID.”

“The new protocols to keep everyone safe add time between patients, and physicians have to answer patients’ questions about the pandemic and vaccines,” Dr. Fischer-Wright says. “You might see a 20% increase in time spent just on these non–revenue-generating COVID activities.”

Physicians still like their specialty

Although 2020 was a challenging year for physicians, the percentage of those who were satisfied with their specialty choice generally did not slip from the year before. It actually rose for several specialties – most notably, rheumatology, pulmonology, physical medicine and rehabilitation, and nephrology.

One specialty saw a decline in satisfaction with the specialty choice: public health and preventive medicine, which plummeted 16 percentage points to 67%, putting it at the bottom of the list.

Even before the pandemic, many public health departments were chronically underfunded, and the pressure to keep up with COVID reporting and testing responsibilities may have exacerbated the problem.

Conclusion

Although 2020 was a wild ride, many physicians came out of it with only minor reductions in overall compensation, and some saw increases. Still, some specialties and many individuals experienced severe financial stress and had to change their lives and their spending to stay afloat.

“The biggest inhibitor to getting back to normal had to do with doctors who did not want to return because they did not want to risk getting COVID,” Dr. Pinto reports. But he notes that by February 2021 most doctors were fully vaccinated and could feel safe again.

A version of this article first appeared on Medscape.com.

Mnemonics can be real lifesavers

Mnemonics are often used to help remember complex groups of individual items related to a common theme. Studies have shown that college students studying with mnemonics outperform students using rote learning, suggesting that mnemonics are useful in retention of facts.1

In 1 study, researchers compared memory athletes and control subjects before and after mnemonic training.2 The training produced changes in brain connectivity in the control subjects that resembled those seen in the memory athletes at baseline. These changes persisted for as long as 4 months after training, indicating that mnemonics have long-lasting effects on memory capacity.2

The most frequently used forms of medical mnemonics are acronyms and acrostics. In an acronym, each letter of the word stands for one item in a series to be remembered.3 A familiar medical example is “SLUDGE,” which represents the symptoms of cholinergic toxicity (salivation, lacrimation, urination, defecation, gastrointestinal upset, emesis). An acrostic is a phrase in which the first letter of each word matches the first letter of an item to be remembered.3 A commonly used acrostic for memorizing the 12 cranial nerves is “On Old Olympus’ Towering Tops A Finn And German Viewed Some Hops”: olfactory, optic, oculomotor, trochlear, trigeminal, abducens, facial, auditory (vestibulocochlear), glossopharyngeal, vagus, spinal accessory, hypoglossal.

Mnemonics are an effective way for medical students, residents, and practicing clinicians to recall information quickly. For example, COVERABCD is an acronym for circulation/capnograph/color, oxygen, ventilation, endotracheal tube, review of equipment, airway, breathing, circulation, and drugs.4 Runciman et al showed that use of COVERABCD could have prevented or mitigated 60% of 2,000 anesthetic incidents.4 Public education on another mnemonic, FAST, used to assess for stroke, reduced median hospital arrival times by more than an hour.5

There are hundreds of mnemonics related to medical practice. Collections of those that might be useful for family practitioners and medical residents can be found at the following links:

https://epomedicine.com/medical-mnemonics/

www.oxfordmedicaleducation.com/medical-mnemonics/

Kristyn McKnight, PharmD candidate

Hannah Lutz, PharmD candidate

Tracy Mahvan, MBA, PharmD, BCGP

School of Pharmacy, University of Wyoming, Laramie

1. Dave H, Awasthi S. An investigation of the role of mnemonics in higher education. Paper presented at: International Conference on Digital Pedagogies (ICDP); April 1-3, 2019; New Delhi, India. http://dx.doi.org/10.2139/ssrn.3375714

2. Dresler M, Shirer WR, Konrad BN, et al. Mnemonic training reshapes the brain networks to support memory. Neuron. 2017;93:1227-1235. https://doi.org/10.1016/j.neuron.2017.02.003

3. Nolen J. Mnemonic. Encyclopedia Britannica. July 20, 1998. Updated September 18, 2019. Accessed March 16, 2021. www.britannica.com/science/short-term-memory

4. Runciman WB, Webb RK, Klepper ID, et al. The Australian Incident Monitoring Study. Crisis management—validation of an algorithm by analysis of 2000 incident reports. Anaesth Intensive Care. 1993;21:579-592. https://doi.org/10.1177/0310057X9302100515

5. Wolters FJ, Paul NLM, Li L, et al. Sustained impact of UK FAST-test public education on response to stroke: a population-based time-series study. Int J Stroke. 2015;10:1108-1114. https://doi.org/10.1111/ijs.12484

Retail health clinics: What is their role in ObGyn care?

Retail health clinics (RHCs) are health care facilities, located in high-traffic retail outlets with adjacent pharmacies, that are intended to provide convenient and affordable care without sacrificing quality. The clinics offer a complementary option for individuals and families who otherwise would need to wait for an appointment with a traditional primary care physician or provider.1 Appointments are not necessary for episodic health needs. RHCs are usually open 7 days a week and offer extended hours on weekdays.2

The clinics are staffed by licensed, qualified advanced practice providers, such as nurse practitioners and physician assistants, who are supervised by family physicians where required by state law. These clinicians have advanced education to diagnose, treat, and prescribe for nonemergent ailments such as colds and flu, rashes and skin irritation, and muscle strains or sprains.3 They are supported by an electronic health record that contains established evidence-based protocols.2,4

Evolution of retail health clinics

The first RHC, operated by QuickMedx, opened its doors in 2000 in Minneapolis–St. Paul.1,5 It treated only a very limited number of illnesses, and payment was cash only. In 2005, this clinic was acquired by a major pharmacy retailer, which led to several acquisitions by other retailers and health care systems. In addition to accepting cash, the clinics began contracting with health insurance companies. The average cost of a visit to an RHC in 2016 was estimated at $70, considerably less than the cost at urgent care clinics ($124) and emergency rooms ($356).6

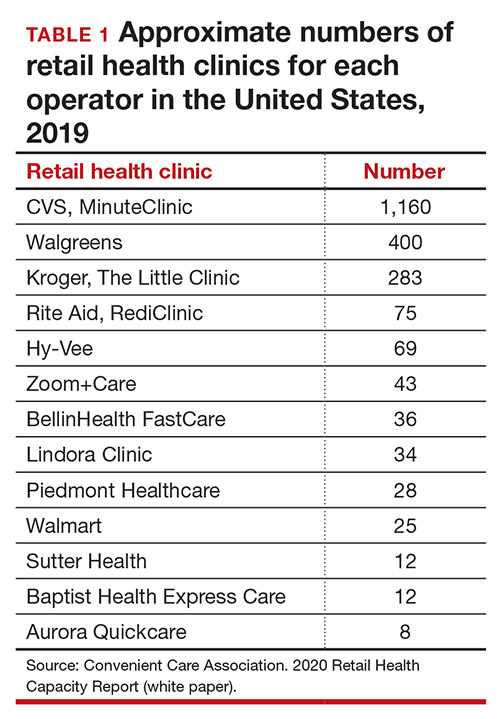

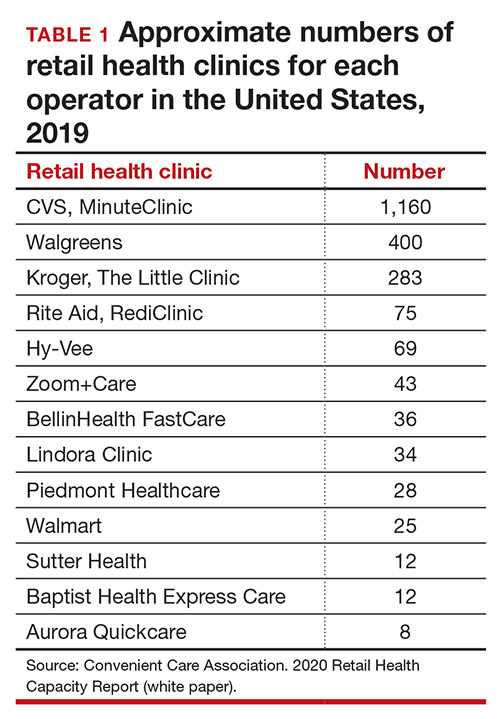

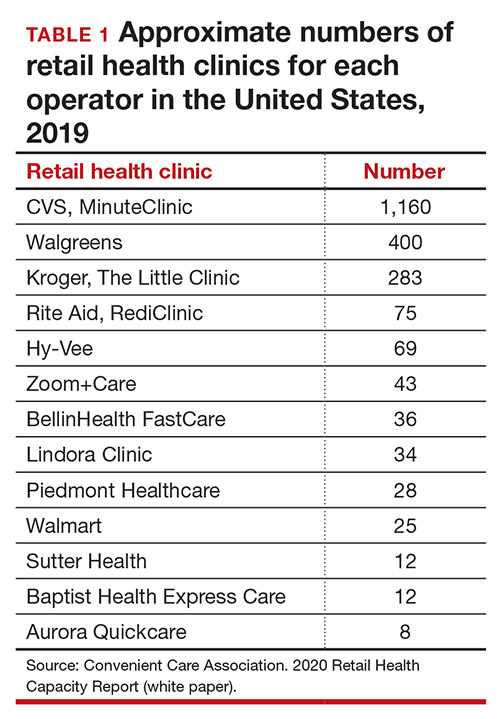

Today, more than 20 companies provide health care services at RHCs (TABLE 1). CVS (MinuteClinic) has the most retail clinics, followed by Walgreens, Kroger, Rite Aid, and Zoom+Care.1,2 There are now about 3,300 clinics in the United States, Canada, and Mexico (nearly all are located in the United States).2 Currently, RHCs are present in 44 states and the District of Columbia. Alabama, Alaska, Idaho, North Dakota, Vermont, and Wyoming are the only states without an RHC, while large-population states (California, Florida, Ohio, Pennsylvania, and Texas) have experienced an explosion in clinic openings.2

RHCs are found in high-foot-traffic locations, such as large retailers, grocery stores, and drug stores. By analyzing 2019 clinic openings and population centers, the Convenient Care Association determined that more than half of the US population now lives within a 10-minute drive of an RHC.2 Because new locations open regularly, the total number of clinics is in flux.

Services that RHCs provide

As RHC locations expand, so do their services. Most RHCs pursue 1 of 2 models: health hubs or virtual care. Health hubs offer expanded services, so that retail health clinics increasingly look and operate like the primary care providers in their communities.1,2 While most chains intend for patient visits to be brief, the growing health hub model allows for longer visits. The major companies (CVS, Kroger, and Walmart) are expanding their services and giving their providers greater capacity to screen and treat patients for a wider range of conditions. By contrast, other clinic operators, such as Rite Aid RediClinics, are pursuing a more episodic, convenient care model with greater adoption of telehealth and telemedicine.

Services at RHCs consist primarily of acute care, along with some basic chronic disease management. About 90% of visits are for the following conditions and services: influenza, immunizations, upper respiratory infections, sinusitis, bronchitis, sore throat, inner ear infection, conjunctivitis, urinary tract infections, and blood tests.1-3 Other services available at most RHC locations include screening and monitoring, wellness visits and physicals, travel health, treatment of minor injuries, and vaccinations and injections.

Women constitute half of all customers, and all RHCs offer women’s health services.7 Along with addressing acute care needs, women’s health services include contraception care and options, human papillomavirus (HPV) screening, pregnancy testing and initial prenatal evaluation, and evaluation for and treatment of urinary tract, bladder, and yeast infections.2,6

All RHCs provide counseling on sexual health concerns. Nearly all retail clinics in the United States provide screening and treatment for patients and their partners with sexually transmitted infections. RHC providers are required to follow up with patients regarding any blood work or culture results. When positive test results are confirmed for serious infections, such as hepatitis B and C, syphilis, and HIV, patients customarily are referred for treatment.2

The RHC patient base

RHCs serve an expanding base of patients who cite convenience as their primary motivation for using these clinics. By some measures, this consumer-driven market now accounts for nearly 50 million visits annually.2 Visit numbers rose every year before the pandemic, alongside a steady increase in the number and spread of clinics across the country. During the COVID-19 pandemic, visits declined, as they did at other health care touchpoints, because of concerns about spreading the coronavirus.

The RHC industry has continued to adapt to a changing health care climate by embracing new telehealth solutions, enabling remote care, and expanding services in response to consumer demand.1,7 Although convenience is the primary motivation for visiting an RHC, about two-thirds of RHC patients do not have a primary care provider.1 To support a broader continuum of care, RHCs regularly refer these patients to other health care touchpoints when necessary.

Young and middle-aged adults (18–44 years) make up the largest group of RHC patients. When asked why they chose an RHC over “traditional doctors’ clinics,” many patients cited difficulties in accessing care, lower costs, and proximity. Women made up 50.9% of RHC users and 56.8% of RHC nonusers.1-3

How RHCs compare with other episodic care clinics

Consumers now have more options for episodic care beyond physicians’ clinics and emergency rooms. RHCs, urgent care clinics, and freestanding emergency clinics all increase access points for consumers. Along with the expanding number of RHCs, there are nearly 9,000 US urgent care centers, according to the Urgent Care Association, and more than 550 freestanding emergency rooms.8

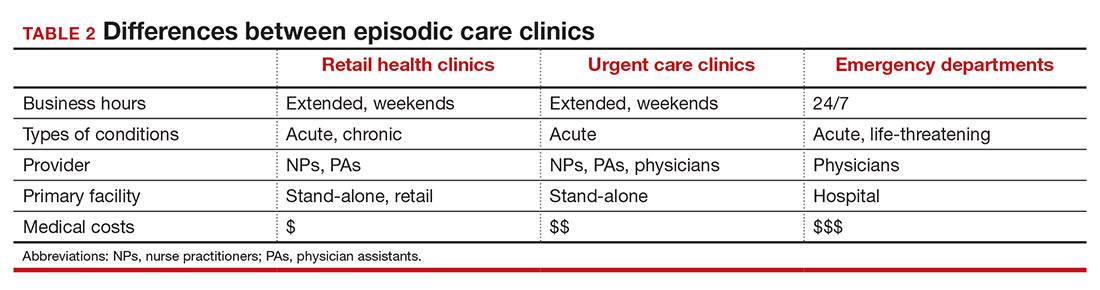

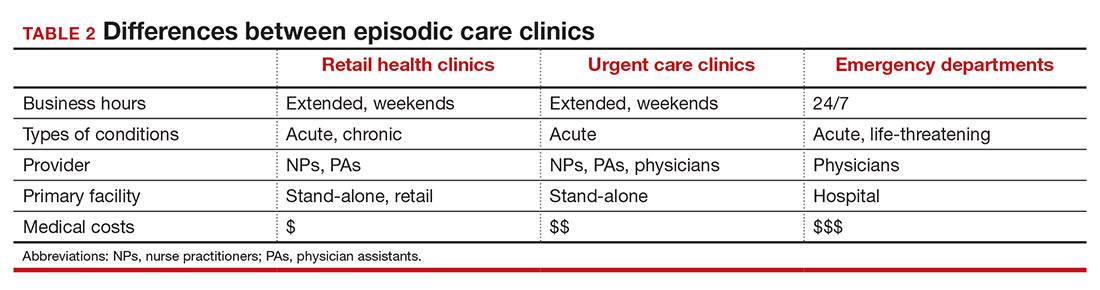

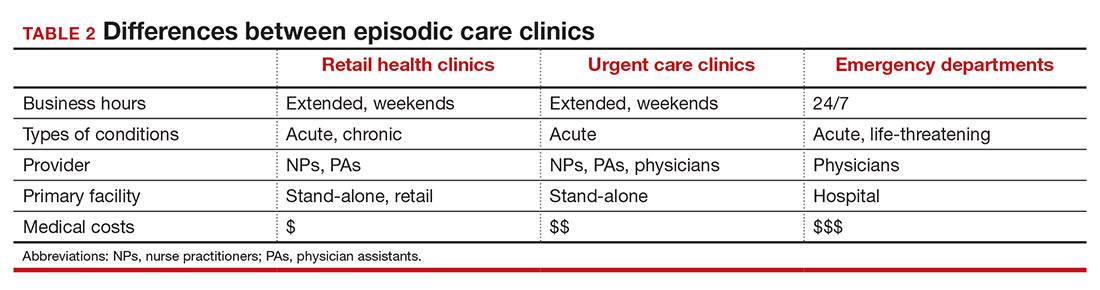

TABLE 2 shows the main differences between these episodic care clinics, comparing hours of operation, types of conditions treated, available providers, location of the facility, and estimated costs. All provide expanded business hours. Retail clinics address some chronic disease management along with acute care, are staffed only by advanced practice providers, are located in retail stores, and are less costly to consumers.3,4,9

Many emergency department visits can be handled in ambulatory settings such as RHCs and urgent care clinics.9,10 This can be especially helpful in rural areas with a shortage of physicians. Most people want a relationship with a physician who will manage their care rather than seeing a different provider at every visit. However, although ObGyns deliver comprehensive care to women, non-ObGyn clinics can fill the void in some underserved areas. For example, RHCs can sometimes provide needed immunizations and health care information in underserved areas.11

Many physicians are frustrated when they see patients who lack a complete medical record and must piece together how to treat them. RHCs have adopted electronic medical records, and they regularly encourage patients to contact their physician (or to find one, which can be difficult). Another limitation is that an RHC may refer a patient to a subspecialist rather than to a primary care physician who could handle the condition equally well.

What ObGyns can do

Consumers have become accustomed to obtaining services where and when they want them, and they expect the same from their health care providers. Although ObGyn practices are less affected by RHCs than those of family physicians or general internists, health care delivery in traditional clinics must be user friendly (that is, better, cheaper, and faster) to satisfy the patient-consumer. Looking ahead, a nearby women’s health care group needs to have someone on call 24 hours a day, 7 days a week. That way, you can tell your patients to call you first if they need help. If you do recommend that a patient go to an RHC or urgent care center, you will be aware of the visit and can follow up with your patient afterward.

Traditional clinics need to create ways for patients with acute illnesses to be seen the same day. Offering extended hours or technology options, such as online support, can help. Text message reminders, same-day appointments, and price transparency are necessary. It is important to encourage your women’s health patients to take more responsibility for their own health and care, while taking into consideration their social determinants of health. When you discuss with patients when to visit an RHC (especially when your clinic is closed), emphasize that your clinic is their medical home and stress the importance of telling you what occurred during the RHC visit.

Working as a team and communicating well can create a community of health. It would be appropriate for you to represent your group by meeting the practitioners at a nearby RHC. Being accessible and helpful creates a friendly and open professional relationship. For their part, providers at retail clinics should recognize that women’s health clinics offer more comprehensive care. Appropriate referrals from RHCs would help the most important person: the patient herself. ●

1. Bachrach D, Frohlich J, Garcimonde A, et al. Building a culture of health: the value proposition of retail clinics. Robert Wood Johnson Foundation and Manatt Health. April 2015. https://www.rwjf.org/en/library/research/2015/04/the-value-proposition-of-retail-clinics.html. Accessed March 26, 2021.

2. Bronstein N. Convenient Care Association–National Trade Association of Companies and Healthcare Systems for the Convenient Care Industry. January 1, 2020. https://www.ccaclinics.org/about-us/about-cca. Accessed January 10, 2021.

3. Mehrotra A, Liu H, Adams JL, et al. Comparing costs and quality of care at retail clinics with that of other medical settings for 3 common illnesses. Ann Intern Med. 2009;151:321-328.

4. Woodburn JD, Smith KL, Nelson GD. Quality of care in the retail health care setting using national clinical guidelines for acute pharyngitis. Am J Med Qual. 2007;22:457-462.

5. Zamosky L. What retail clinic growth can teach physicians about patient demand. Threat or opportunity: retail clinic popularity is about convenience. Med Econ. 2014;91:22-24.

6. SolvHealth website. Urgent care center vs emergency room. https://www.solvhealth.com/faq/urgent-care-center-vs-emergency-room. Accessed January 13, 2021.

7. Kvedar J, Coye MJ, Everett W. Connected health: a review of technologies and strategies to improve patient care with telemedicine and telehealth. Health Aff. 2014;33:194-199.

8. Urgent Care Association website. Industry news: urgent care industry grows to more than 9,000 centers nationwide. February 24, 2020. https://www.ucaoa.org/About-UCA/Industry-News/ArtMID/10309/ArticleID/1468/INDUSTRY-NEWS-Urgent-Care-Industry-Grows-to-More-than-9000-Centers-Nationwide. Accessed March 26, 2021.

9. Sussman A, Dunham L, Snower K, et al. Retail clinic utilization associated with lower total cost of care. Am J Manag Care. 2013;19:e148-e157.

10. Weinick RM, Burns RM, Mehrotra A. Many emergency department visits could be managed at urgent care centers and retail clinics. Health Aff. 2010;29:1630-1636.

11. Goad JA, Taitel MS, Fensterheim LE, et al. Vaccinations administered during off-clinic hours at a national community pharmacy: implications for increasing patient access and convenience. Ann Fam Med. 2013;11:429-436.


Consumers have become accustomed to obtaining services where and when they want them, and they expect the same from their health care providers. While ObGyn practices are less affected by RHCs than family physicians or general internists, health care delivery in traditional clinics must be user friendly—that is, better, cheaper, and faster—for the patient-consumer to be more satisfied. Looking ahead, a nearby women’s health care group needs to have someone on call 24 hours a day, 7 days a week. That way, you can tell your patients that they can call you first if they need help. In the case of an ObGyn recommending that a patient go to an RHC or urgent care center, you will be aware of the visit and can follow up with your patient afterward.

Traditional clinics need to create ways for patients with acute illnesses to be seen that same day. Offering extended hours or technology options, such as online support, can help. Text message reminders, same-day access for appointments, and price transparency are necessary. It is important to encourage your women’s health patients to become more responsible for their own health and care, while taking into consideration their social determinants of health. While ObGyns should discuss with their patients when to visit an RHC (especially when their clinic is closed), emphasize that your own clinic is the patient’s medical home and encourage the importance of communicating what occurred during the RHC visit.

Working as a team by communicating well can create a community of health. It would be appropriate for you to represent your group by meeting practitioners at the nearby RHC. Being accessible and helpful would create a friendly and open professional relationship. Conversely, providers at retail clinics need to continually appreciate that women’s health clinics offer more comprehensive care. Select referrals from RHCs would help the most important person, the patient herself. ●

Retail health clinics (RHCs) are health care facilities located in high-traffic retail outlets with adjacent pharmacies. They are intended to provide convenient, affordable care without sacrificing quality. The clinics complement existing services by giving individuals and families an option other than waiting for an appointment with a traditional primary care physician or provider.1 Appointments are not necessary for episodic health needs. RHCs are usually open 7 days a week and offer extended hours on weekdays.2

The clinics are staffed by licensed, qualified advanced practice providers, such as nurse practitioners and physician assistants, who are supervised by family physicians where state law requires it. These clinicians have the advanced education needed to diagnose, treat, and prescribe for nonemergent ailments such as colds and flu, rashes and skin irritation, and muscle strains or sprains.3 They are supported by an electronic health record that contains established evidence-based protocols.2,4

Evolution of retail health clinics

The first RHC, operated by QuickMedx, opened in 2000 in Minneapolis–St. Paul.1,5 It treated only a very limited number of illnesses, and payment was cash only. In 2005, the clinic was acquired by a major pharmacy retailer, which led to several acquisitions by other retailers and health care systems. In addition to accepting cash, the clinics began contracting with health insurance companies. The average cost of a visit to an RHC in 2016 was estimated at $70, considerably less than the cost at urgent care clinics ($124) and emergency rooms ($356).6
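
To make “considerably less” concrete, here is a minimal Python sketch of the arithmetic, using only the 2016 average-cost estimates cited above. The names VISIT_COST_2016 and percent_savings are illustrative and not drawn from any source, and averages like these will not reflect the cost of any individual visit.

```python
# Estimated average cost per visit in 2016 (US dollars), as cited in the text.
# The dictionary and function names are illustrative only.
VISIT_COST_2016 = {
    "retail health clinic": 70,
    "urgent care clinic": 124,
    "emergency room": 356,
}


def percent_savings(cheaper: float, costlier: float) -> float:
    """Return how much less `cheaper` costs, as a percentage of `costlier`."""
    return (costlier - cheaper) / costlier * 100


rhc = VISIT_COST_2016["retail health clinic"]
for setting in ("urgent care clinic", "emergency room"):
    cost = VISIT_COST_2016[setting]
    # Absolute difference in dollars and relative difference in percent.
    print(f"RHC vs {setting}: ${cost - rhc} less per visit "
          f"({percent_savings(rhc, cost):.1f}% lower)")
```

Run as written, it reports that an average RHC visit cost $54 (about 43.5%) less than an urgent care visit and $286 (about 80.3%) less than an emergency room visit.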

Today, more than 20 companies provide health care services at RHCs (TABLE 1). CVS (MinuteClinic) operates the most retail clinics, followed by Walgreens, Kroger, Rite Aid, and Zoom+Care.1,2 There are now about 3,300 clinics in the United States, Canada, and Mexico, nearly all of them in the United States.2 RHCs are currently present in 44 states and the District of Columbia. Alabama, Alaska, Idaho, North Dakota, Vermont, and Wyoming are the only states without an RHC, while several populous states (California, Florida, Ohio, Pennsylvania, and Texas) have seen a surge in clinic openings.2

RHCs are found in high-foot-traffic locations such as large retailers, grocery stores, and drug stores. By analyzing 2019 clinic openings and population centers, the Convenient Care Association determined that more than half of the US population now lives within a 10-minute drive of an RHC.2 New locations open regularly, so the total number of clinics is constantly in flux.

Services that RHCs provide

As RHC locations expand, so do their services. Most RHCs pursue 1 of 2 models: health hubs or virtual care. Health hubs offer expanded services, so retail health increasingly looks and operates like the primary care providers in its communities.1,2 While most chains intend patient visits to be brief, the growing health hub model allows for longer visits. The major companies (CVS, Kroger, and Walmart) are expanding their services and giving their providers greater capacity to screen and treat patients for a wider range of conditions. By contrast, other operators, such as Rite Aid RediClinics, are pursuing a more episodic, convenience-focused model that relies increasingly on telehealth and telemedicine.

RHC services consist primarily of acute care, along with some basic chronic disease management. About 90% of visits are for the following conditions and services: influenza, immunizations, upper respiratory infections, sinusitis, bronchitis, sore throat, inner ear infection, conjunctivitis, urinary tract infections, and blood tests.1-3 Other services available at most RHC locations include screening and monitoring, wellness visits and physicals, travel health, treatment of minor injuries, and vaccinations and injections.

Women constitute half of all customers, and all RHCs offer women’s health services.7 Along with addressing acute care needs, women’s health services include contraception care and options, human papillomavirus (HPV) screening, pregnancy testing and initial prenatal evaluation, and evaluation for and treatment of urinary tract, bladder, and yeast infections.2,6

All RHCs provide counseling on sexual health concerns. Nearly all retail clinics in the United States provide screening for sexually transmitted infections and treatment for infected patients and their partners. RHC providers are required to follow up with patients about any blood work or culture results. When positive test results confirm a serious infection, such as hepatitis B or C, syphilis, or HIV, patients are customarily referred for treatment.2

The RHC patient base

RHCs serve an expanding base of patients who cite convenience as their primary reason for using these clinics. By some measures, this consumer-driven market now accounts for nearly 50 million visits annually.2 Visit numbers have risen every year alongside steady growth in the number and geographic spread of clinics. During the COVID-19 pandemic, visits declined, as they did at other health care touchpoints, because of concerns about spreading the coronavirus.

The RHC industry has continued to adapt to a changing health care climate by embracing new telehealth solutions, enabling remote care, and expanding services in response to consumer demand.1,7 Although convenience is the primary draw, about two-thirds of RHC patients do not have a primary care provider.1 To support a broader continuum of care, RHCs regularly refer these patients to other health care touchpoints when necessary.

Young and middle-aged adults (18–44 years) make up the largest group of RHC patients. When asked why they chose an RHC over “traditional doctors’ clinics,” many patients cited difficulty accessing care, lower costs, and proximity. Women accounted for 50.9% of RHC users, compared with 56.8% of nonusers.1-3

How RHCs compare with other episodic care clinics

Consumers now have more choices for episodic care beyond physicians’ clinics and emergency rooms. RHCs, urgent care clinics, and freestanding emergency clinics all increase access points for consumers. Along with the growing number of RHCs, there are nearly 9,000 urgent care centers in the United States, according to the Urgent Care Association, and more than 550 freestanding emergency rooms.8

TABLE 2 shows the main differences among these episodic care settings, comparing hours of operation, types of conditions treated, available providers, facility location, and estimated costs. All offer extended business hours. Retail clinics provide some chronic disease management along with acute care, are staffed only by advanced practice providers, are located in retail stores, and cost consumers less.3,4,9

Many emergency department visits can be handled in ambulatory settings such as RHCs and urgent care clinics.9,10 This can be especially helpful in rural areas with physician shortages. Most people want an ongoing relationship with a physician who manages their care rather than seeing a different provider at every visit, and ObGyns deliver comprehensive care to women. In some underserved areas, however, non-ObGyn clinics can fill the void. For example, RHCs can provide immunizations and health care information that these areas need.11

Many physicians are frustrated when they see patients whose medical records are incomplete and must piece together how to treat them. RHCs have adopted electronic medical records, and they regularly encourage patients to contact their physician (or to find one, which can be difficult). Another limitation is that an RHC may refer a patient to a subspecialist when a primary care physician could handle the condition equally well.

What ObGyns can do

Consumers have become accustomed to obtaining services where and when they want them, and they expect the same from their health care providers. Although ObGyn practices are less affected by RHCs than are family medicine and general internal medicine practices, care delivery in traditional clinics must become more user friendly (better, cheaper, and faster) to satisfy the patient-consumer. Looking ahead, your women’s health care group needs to have someone on call 24 hours a day, 7 days a week. That way, you can tell your patients to call you first if they need help. If you then recommend that a patient go to an RHC or urgent care center, you will be aware of the visit and can follow up with her afterward.

Traditional clinics need to create ways for patients with acute illnesses to be seen the same day. Offering extended hours or technology options, such as online support, can help, and text message reminders, same-day appointments, and price transparency are necessary. Encourage your patients to take more responsibility for their own health and care, while taking their social determinants of health into account. When you discuss with patients when to visit an RHC (especially when your clinic is closed), emphasize that your clinic is their medical home and stress the importance of telling you what occurred during the RHC visit.

Working as a team and communicating well can create a community of health. Consider representing your group by meeting the practitioners at a nearby RHC; being accessible and helpful can foster a friendly, open professional relationship. For their part, retail clinic providers should continually recognize that women’s health clinics offer more comprehensive care. Well-chosen referrals from RHCs will help the most important person: the patient herself. ●

- Bachrach D, Frohlich J, Garcimonde A, et al. Building a culture of health: the value proposition of retail clinics. Robert Wood Johnson Foundation and Manatt Health. April 2015. https://www.rwjf.org/en/library/research/2015/04/the-value-proposition-of-retail-clinics.html. Accessed March 26, 2021.