
Central Centrifugal Cicatricial Alopecia in Males: Analysis of Time to Diagnosis and Disease Severity

To the Editor:

Central centrifugal cicatricial alopecia (CCCA) is a chronic progressive type of scarring alopecia that primarily affects women of African descent.1 The disorder rarely is reported in men, which may be due to misdiagnosis or delayed diagnosis. Early diagnosis and treatment are the cornerstones to slow or halt disease progression and prevent permanent damage to hair follicles. This study aimed to investigate the time to diagnosis and disease severity among males with CCCA.

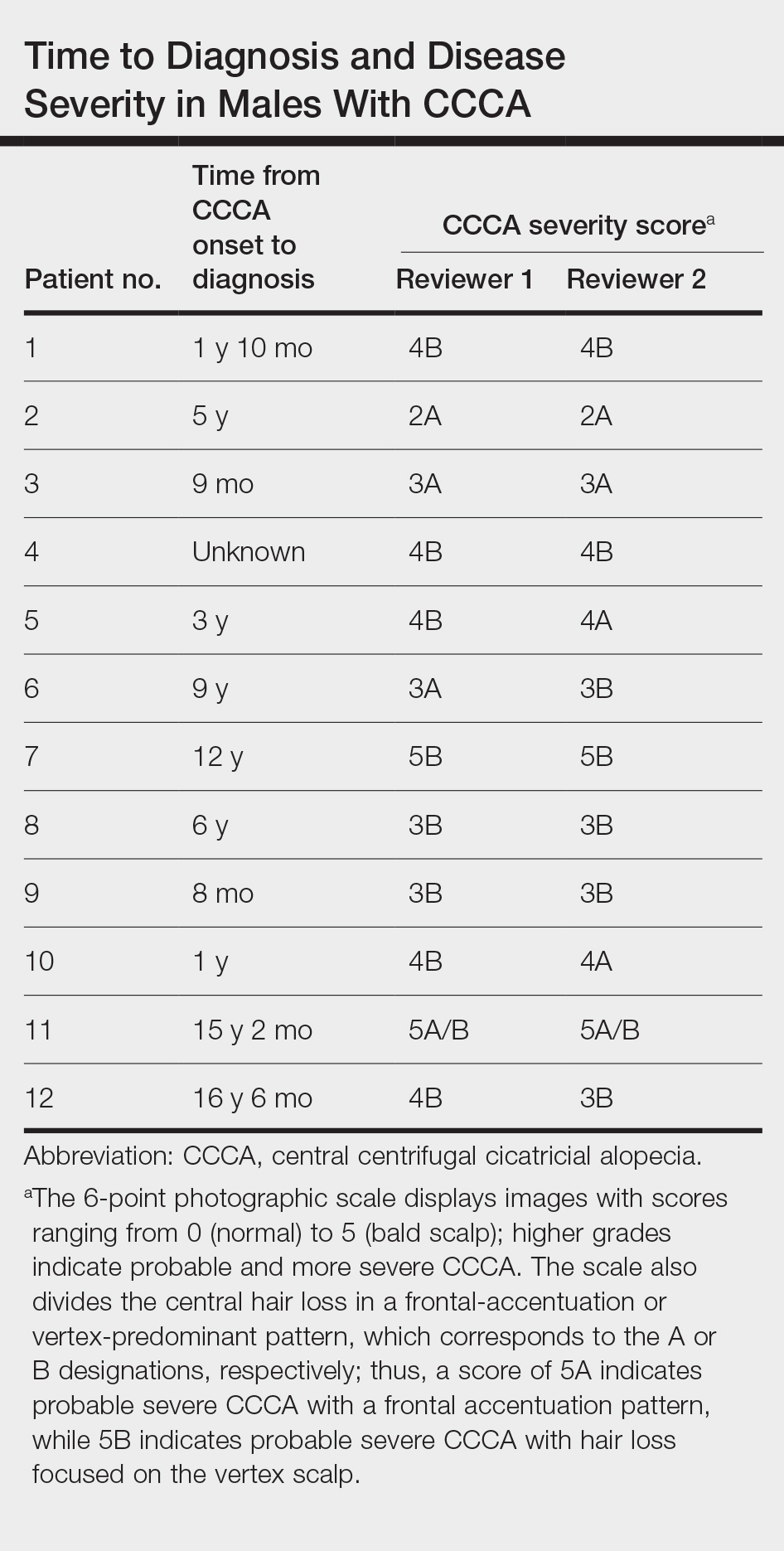

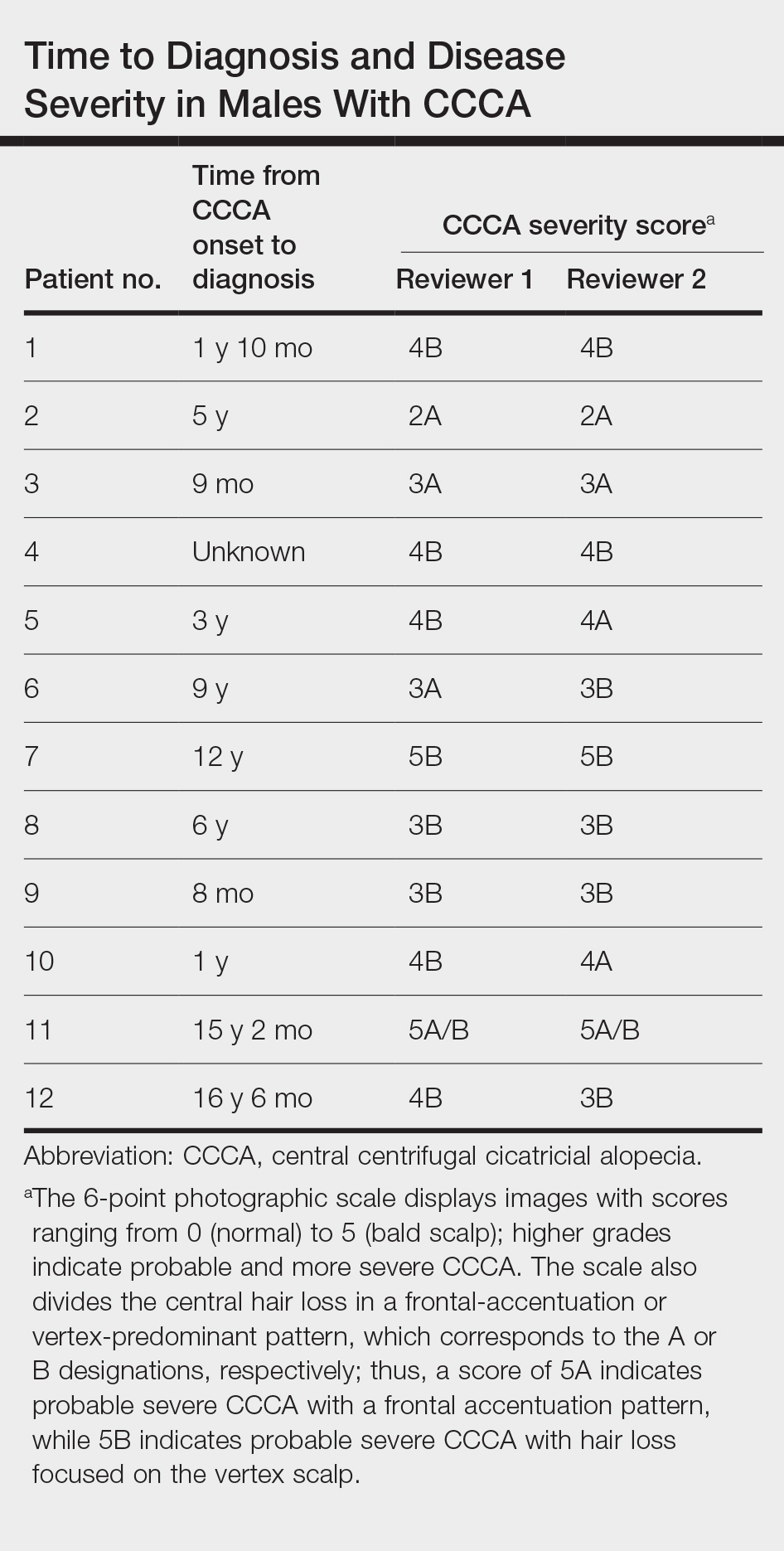

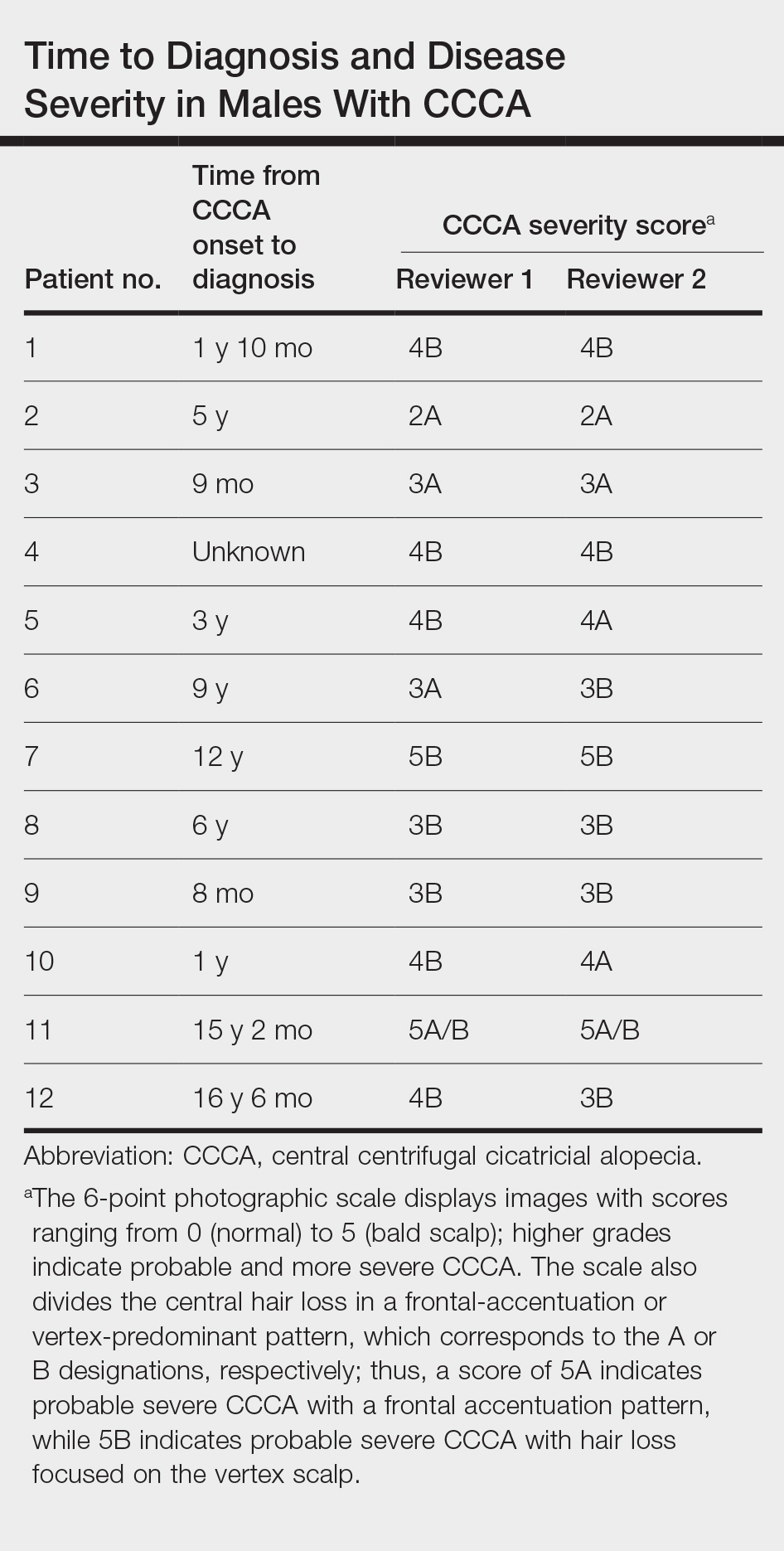

We conducted a retrospective chart review of male patients older than 18 years seen in outpatient clinics at an academic dermatology department (Philadelphia, Pennsylvania) between January 2012 and December 2022. An electronic query using the International Classification of Diseases, Ninth and Tenth Revisions, code L66.9 (cicatricial alopecia, unspecified) was performed. Patients were included if they had a clinical diagnosis of CCCA, histologic evidence of CCCA, and scalp photographs from the initial dermatology visit. Patients with folliculitis decalvans, scalp biopsy features that limited characterization, or no scalp biopsy were excluded from the study. Onset of CCCA was defined as the patient-reported start time of hair loss and/or scalp symptoms. To determine alopecia severity, the degree of central scalp hair loss was independently assessed by 2 dermatologists (S.C.T., T.O.) using the central scalp alopecia photographic scale in African American women.2,3 This 6-point photographic scale displays images with grades ranging from 0 (normal) to 5 (bald scalp); higher grades indicate probable and more severe CCCA. The scale also divides central hair loss into a frontal-accentuation or vertex-predominant pattern, which corresponds to the A or B designations, respectively; thus, a score of 5A indicates probable severe CCCA with a frontal-accentuation pattern, while 5B indicates probable severe CCCA with hair loss focused on the vertex scalp. This study was approved by the University of Pennsylvania institutional review board (approval #850730).

Of 108 male patients, 12 met the eligibility criteria. Nearly all patients (91.7% [11/12]) had a CCCA severity grade of 3 or higher at the initial dermatology visit, indicating extensive hair loss (Table). The clinical appearance of severity grades 2 through 5 is demonstrated in the Figure. Among patients with a known disease duration prior to diagnosis, 72.7% (8/11) were diagnosed more than 1 year after onset of CCCA, and 45.5% (5/11) were diagnosed more than 5 years after onset. On average (SD), it took 6.4 (5.9) years for patients to receive a diagnosis of CCCA after the onset of scalp symptoms and/or hair loss.

Randomized controlled trials evaluating treatment of CCCA are lacking, and anecdotal evidence posits a better treatment response in early CCCA; however, our results suggest that most male patients present with advanced CCCA and receive a diagnosis years after disease onset. Similar research in alopecia areata has shown that 72.4% (105/145) of patients received their diagnosis within a year after onset of symptoms, and the mean time from onset of symptoms to diagnosis was 1 year.4 In contrast, male patients with CCCA experience considerable diagnostic delays. This disparity indicates the need for clinicians to increase recognition of CCCA in men and quickly refer them to a dermatologist for prompt treatment.

Androgenetic alopecia (AGA) commonly is at the top of the differential diagnosis for hair loss on the vertex of the scalp in males, but clinicians should maintain a high index of suspicion for CCCA, especially when scalp symptoms or atypical features of AGA are present.5 Androgenetic alopecia typically is asymptomatic, whereas the symptoms of CCCA may include itching, tenderness, and/or burning.6,7 Trichoscopy is useful to evaluate for scarring, and a scalp biopsy may reveal other features to lower AGA on the differential. Educating patients, barbers, and hairstylists about the importance of early intervention also may encourage earlier visits before the scarring process is advanced. Further exploration into factors impacting diagnosis and CCCA severity may uncover implications for prognosis and treatment.

This study was limited by a small sample size, retrospective design, and single-center analysis. Some patients had comorbid hair loss conditions, which could affect disease severity. Moreover, the central scalp alopecia photographic scale2 was not validated in men or designed for assessment of the nonclassical hair loss distributions noted in some of our patients. Nonetheless, we hope these data will support clinicians in efforts to advocate for early diagnosis and treatment in patients with CCCA to ultimately help improve outcomes.

- Ogunleye TA, McMichael A, Olsen EA. Central centrifugal cicatricial alopecia: what has been achieved, current clues for future research. Dermatol Clin. 2014;32:173-181. doi:10.1016/j.det.2013.12.005

- Olsen EA, Callender V, McMichael A, et al. Central hair loss in African American women: incidence and potential risk factors. J Am Acad Dermatol. 2011;64:245-252. doi:10.1016/j.jaad.2009.11.693

- Olsen EA, Callender V, Sperling L, et al. Central scalp alopecia photographic scale in African American women. Dermatol Ther. 2008;21:264-267. doi:10.1111/j.1529-8019.2008.00208.x

- Andersen YMF, Nymand L, DeLozier AM, et al. Patient characteristics and disease burden of alopecia areata in the Danish Skin Cohort. BMJ Open. 2022;12:E053137. doi:10.1136/bmjopen-2021-053137

- Davis EC, Reid SD, Callender VD, et al. Differentiating central centrifugal cicatricial alopecia and androgenetic alopecia in African American men. J Clin Aesthetic Dermatol. 2012;5:37-40.

- Jackson TK, Sow Y, Ayoade KO, et al. Central centrifugal cicatricial alopecia in males. J Am Acad Dermatol. 2023;89:1136-1140. doi:10.1016/j.jaad.2023.07.1011

- Lawson CN, Bakayoko A, Callender VD. Central centrifugal cicatricial alopecia: challenges and treatments. Dermatol Clin. 2021;39:389-405. doi:10.1016/j.det.2021.03.004

Practice Points

- Most males with central centrifugal cicatricial alopecia (CCCA) experience considerable diagnostic delays and typically present to dermatology with late-stage disease.

- Dermatologists should consider CCCA in the differential diagnosis for adult Black males with alopecia.

- More research is needed to explore advanced CCCA in males, including factors limiting timely diagnosis and the impact on quality of life in this population.

Listen to earn your patients’ trust

Recently, I had an interesting conversation while getting my hair cut. It gave me a great deal of insight into some of the problems we have right now with how medical information is shared and some of the disconnect our patients may feel.

The young woman who was cutting my hair asked me what I did for an occupation. I said that I was a physician. She said, “Can I please ask you an important question?” She asked me what my thoughts were about the COVID vaccine. She prefaced it with “I am so confused on whether I should get the vaccine. I have seen a number of TikTok videos that talk about nanoparticles in the COVID vaccine that can be very dangerous.”

I discussed with her how the COVID vaccine actually works and shared with her the remarkable success of the vaccine. I asked her what side effects she was worried about from the vaccine and what her fears were. She said that she had heard that a lot of people had died from the vaccine. I told her that severe reactions from the vaccine were very uncommon.

She then made a very telling comment: “I wish I could talk to a doctor about my concerns. I have been going to the same health center for the last 5 years and every time I go I see a different person.” She added, “I rarely have more than 5-10 minutes with the person that I am seeing and I rarely get the opportunity to ask questions.”

She thanked me for the information and said that she would be getting the COVID vaccine in the future. She said it is so hard to know where to get information now and the very different things that she heard confused her. She told me that she thought her generation got most of its information from short sound bites or TikTok and Instagram videos.

Why did she trust me? I still think that the medical profession is respected. We are all pressured to do more with less time. Conversations where we can listen and then respond go a long way. We can always listen and learn what information people need and will appreciate. I was also struck by how alone this person felt in our health care system. She did not have a relationship with any one person whom she could trust and reach out to with questions. Relationships with our patients go a long way to establishing trust.

Pearl

It takes time to listen to and answer our patients’ questions. We need to do that to fight the waves of misinformation our patients face.

Dr. Paauw is professor of medicine in the division of general internal medicine at the University of Washington, Seattle, and he serves as third-year medical student clerkship director at the University of Washington. He is a member of the editorial advisory board of Internal Medicine News. Dr. Paauw has no conflicts to disclose.

The Tyranny of Beta-Blockers

Beta-blockers are excellent drugs. They’re cheap and effective; feature prominently in hypertension guidelines; and remain a sine qua non for coronary artery disease, myocardial infarction, and heart failure treatment. They’ve been around forever, and we know they work. Good luck finding an adult medicine patient who isn’t on one.

Beta-blockers act by slowing resting heart rate and blunting the heart rate response to exercise. The latter is a pernicious cause of activity intolerance that often goes unchecked. Even when the adverse effects of beta-blockers are appreciated, providers are loath to alter dosing, much less stop the drug. After all, beta-blockers are an integral part of guideline-directed medical therapy (GDMT), and GDMT saves lives.

Balancing Heart Rate and Stroke Volume Effects

To augment cardiac output and optimize oxygen uptake (VO2) during exercise, we need the heart rate response. In fact, the heart rate response contributes more to cardiac output than augmenting stroke volume (SV) and more to VO2 than the increase in arteriovenous (AV) oxygen difference. An inability to increase the heart rate commensurate with physiologic work is called chronotropic incompetence (CI). That’s what beta-blockers do: they cause CI.

Physiology dictates that CI will cause activity intolerance. That said, it’s hard to quantify the impact from beta-blockers at the individual patient level. Data suggest the heart rate effect is profound. A study in patients without heart failure found that 22% of participants on beta-blockers had CI, and the investigators used a conservative CI definition (≤ 62% of heart rate reserve used). A recent report published in JAMA Cardiology found that stopping beta-blockers in patients with heart failure allowed for an extra 30 beats/min at max exercise.
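The heart rate reserve criterion mentioned above can be illustrated with a short calculation. This is a minimal sketch, not the method of the cited study: the function names are hypothetical, and the age-predicted maximum heart rate of 220 minus age is an assumed, commonly used estimate that the article does not specify. Only the 62% cutoff comes from the text.

```python
def heart_rate_reserve_used(resting_hr: float, peak_hr: float, age: float) -> float:
    """Return the fraction of heart rate reserve used at peak exercise.

    Assumption (not from the article): maximum heart rate is estimated
    as 220 - age.
    """
    predicted_max_hr = 220 - age
    reserve = predicted_max_hr - resting_hr
    if reserve <= 0:
        raise ValueError("resting heart rate must be below the predicted maximum")
    return (peak_hr - resting_hr) / reserve


def has_chronotropic_incompetence(
    resting_hr: float, peak_hr: float, age: float, threshold: float = 0.62
) -> bool:
    """Flag CI when the reserve used is at or below the threshold.

    The default of 0.62 mirrors the definition quoted in the text
    (<= 62% of heart rate reserve used).
    """
    return heart_rate_reserve_used(resting_hr, peak_hr, age) <= threshold


if __name__ == "__main__":
    # Hypothetical 60-year-old: resting 60 beats/min, peak 120 beats/min.
    used = heart_rate_reserve_used(resting_hr=60, peak_hr=120, age=60)
    print(f"Reserve used: {used:.0%}")
    print("CI by this definition:", has_chronotropic_incompetence(60, 120, 60))
```

In this hypothetical case the predicted maximum is 160 beats/min, so the reserve is 100 beats/min and the patient uses 60 of them, which falls under the 62% cutoff. Raising the same patient's peak heart rate by 30 beats/min, the size of the effect reported after beta-blocker withdrawal, would move them well above it.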

Wasserman and Whipp’s textbook, the last word on all things exercise, presents a sample subject who undergoes two separate cardiopulmonary exercise tests (CPETs). Before the first, he’s given a placebo, and before the second, he gets an intravenous beta-blocker. He’s a 23-year-old otherwise healthy male — the perfect test case for isolating beta-blocker impact without confounding by comorbid diseases, other medications, or deconditioning. His max heart rate dropped by 30 beats/min after the beta-blocker, identical to what we saw in the JAMA Cardiology study (with the heart rate increasing by 30 beats/min following withdrawal). Case closed. Stop the beta-blockers on your patients so they can meet their exercise goals and get healthy!

Such pithy enthusiasm discounts physiology’s complexities. When blunting our patient’s heart rate response with beta-blockers, we also increase diastolic filling time, which increases SV. For the 23-year-old in Wasserman and Whipp’s physiology textbook, the beta-blocker increased O2 pulse (the product of SV and AV difference). Presumably, this is mediated by the increased SV. There was a net reduction in VO2 peak, but it was nominal, suggesting that the drop in heart rate was largely offset by the increase in O2 pulse. For the patients in the JAMA Cardiology study, the entire group had a small increase in VO2 peak with beta-blocker withdrawal, but the effect differed by left ventricular function. Across different studies, the beta-blocker effect on heart rate is consistent but the change in overall exercise capacity is not.
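The relationships invoked above can be written out using the Fick principle. This is a standard result from exercise physiology rather than something taken from the studies cited here, and the symbols are notation chosen for this sketch: $\dot{Q}$ is cardiac output, $\mathrm{HR}$ is heart rate, $\mathrm{SV}$ is stroke volume, and $C_{a}O_2 - C_{\bar{v}}O_2$ is the arteriovenous oxygen difference.

$$
\dot{Q} = \mathrm{HR} \times \mathrm{SV}
$$

$$
\dot{V}O_2 = \dot{Q} \times \left(C_{a}O_2 - C_{\bar{v}}O_2\right) = \mathrm{HR} \times \mathrm{SV} \times \left(C_{a}O_2 - C_{\bar{v}}O_2\right)
$$

$$
O_2\ \text{pulse} = \frac{\dot{V}O_2}{\mathrm{HR}} = \mathrm{SV} \times \left(C_{a}O_2 - C_{\bar{v}}O_2\right)
$$

Read this way, a beta-blocker that lowers peak heart rate reduces peak VO2 only to the extent that the O2 pulse does not rise to compensate, which is consistent with the nominal net change described for the textbook subject.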

Patient Variability in Beta-Blocker Response

In addition to left ventricular function, there are other factors likely to drive variability at the patient level. We’ve treated the response to beta-blockers as a class effect — an obvious oversimplification. The impact on exercise and the heart will vary by dose and drug (eg, atenolol vs metoprolol vs carvedilol, and so on). Beta-blockers can also affect the lungs, and we’re still debating how cautious to be in the presence of asthma or chronic obstructive pulmonary disease.

In a world of infinite time, resources, and expertise, we’d CPET everyone before and after beta-blocker use. Our current reality requires the unthinkable: We’ll have to talk to each other and our patients. For example, heart failure guidelines recommend titrating drugs to match the dose from trials that proved efficacy. These doses are quite high. Simple discussion with the cardiologist and the patient may allow for an adjustment back down with careful monitoring and close attention to activity tolerance. With any luck, you’ll preserve the benefits from GDMT while optimizing your patient’s ability to meet their exercise goals.

Dr. Holley, professor in the department of medicine, Uniformed Services University, Bethesda, Maryland, and a pulmonary/sleep and critical care medicine physician at MedStar Washington Hospital Center, Washington, disclosed ties with Metapharm, CHEST College, and WebMD.

A version of this article appeared on Medscape.com.

The Impact of the Recent Supreme Court Ruling on the Dermatology Recruitment Pipeline

The 2023 ruling by the Supreme Court of the United States (SCOTUS)1,2 on the use of race-based criteria in college admissions was met with a range of reactions across the country. Given the implications of this decision for the future makeup of higher education, the downstream effects on medical school admissions, and the possible further impact on graduate medical education programs, we sought to explore the potential impact of the landmark decision from the perspective of dermatology residency program directors and offer insights on this pivotal judgment.

Background on the SCOTUS Ruling

In June 2023, SCOTUS issued its formal decision on 2 court cases brought by the organization Students for Fair Admissions (SFFA) against the University of North Carolina at Chapel Hill1 and Harvard University (Cambridge, Massachusetts)2 that addressed college admissions practices dealing with the use of race as a selection criterion in the application process. The cases alleged that these universities had overly emphasized race in the admissions process and thus were in violation of the Civil Rights Act of 1964 as well as the 14th Amendment.1,2

The SCOTUS justices voted 6 to 3 in favor of the argument presented by the SFFA, determining that the use of race in the college admissions process essentially constituted a form of racial discrimination. The ruling was in contrast to a prior decision in 2003 that centered on law school admissions at the University of Michigan (Ann Arbor, Michigan), in which SCOTUS had determined that race could be used as one factor among other criteria in the higher education selection process.3 In the 2023 decision siding with SFFA, SCOTUS did acknowledge that it was still acceptable for selection processes to consider “an applicant’s discussion of how race affected his or her life, be it through discrimination, inspiration, or otherwise.”2

Effect on Undergraduate Admissions

Prior to the 2023 ruling, several states had already passed independent laws against the use of affirmative action or race-based selection criteria in the admissions process at public colleges and universities.4 As a result, these institutions would already be conforming to the principles set forth in the SCOTUS ruling and major changes to their undergraduate admissions policies would not be expected; however, a considerable number of colleges and universities—particularly those considered highly selective with applicant acceptance rates that are well below the national average—reported the use of race as a factor in their admissions processes in standardized reporting surveys.5 For these institutions, it is no longer considered acceptable (based on the SCOTUS decision) to use race as a singular factor in admissions or to implement race-conscious decision-making—in which individuals are considered differently based solely on their race—as part of the undergraduate selection process.

In light of these rulings, many institutions have explicitly committed to upholding principles of diversity in their recruitment processes, acknowledging the multifaceted nature of diversity beyond strictly racial terms (including but not limited to socioeconomic, religious, or gender diversity), which is in compliance with the interpretation of the ruling by the US Department of Education and the US Department of Justice.6 Additionally, select institutions have explicitly included questions on ways in which applicants have overcome obstacles or challenges, giving individuals who have had such experiences related to race an opportunity to incorporate these elements into their applications. Finally, some institutions have taken a more limited approach, eliminating ways in which race is explicitly addressed in the application and focusing on race-neutral elements of the application in their approach to selection.7

Because the first college admission cycle since the 2023 SCOTUS ruling is still underway, we have yet to witness the full impact of this decision on the current undergraduate admissions landscape.

Effect on Medical School Admissions and Rotations

Although SCOTUS specifically examined the undergraduate admissions process, the ruling on race-conscious admissions also had a profound impact on graduate school admissions, including medical school admission processes.1,2,8,9 This is because the language of the majority opinion refers to “university programs,” which has been broadly interpreted to include graduate school programs. As with undergraduate admissions, national medical education organizations and institutions have interpreted the ruling to mean that medical schools also cannot consider an applicant’s race or ethnicity as a specific factor in the admissions process.1,2,8,9

Lived individual experiences, including essays that speak to an applicant’s lived experiences and career aspirations related to race, still can be taken into account. In particular, holistic review still can be utilized to evaluate medical school candidates and may play a more integral role in the medical school admissions process now than in the past.8,10,11 After the ruling, Justice Sonia Sotomayor noted that “today’s decision leaves intact holistic college admissions and recruitment efforts that seek to enroll diverse classes without using racial classifications.”1

The ruling asserted that universities may define their mission as they see fit. As a result, the ruling did not affect medical school missions or strategic plans, including those that may aim to diversify the health care workforce.8,10,11 The ruling also did not affect the ability to utilize pathway programs to encourage a career in medicine or recruitment relationships with diverse undergraduate or community-based organizations. Student interest groups also can be involved in the relationship-building or recruitment activities for medical schools.8,10,11 Guidance from the US Department of Education and US Department of Justice noted that institutions may consider race in identifying prospective applicants through recruitment and outreach, “provided that their outreach and recruitment programs do not provide targeted groups of prospective students preference in the admissions process, and provided that all students—whether part of a specifically targeted group or not—enjoy the same opportunity to apply and compete for admission.”12

In regard to pathway programs, slots cannot be reserved and preference cannot be given to applicants who participated in these programs if race was a factor in selecting participants.8 Similarly, medical school away electives related to diversity cannot be reserved for those of a specific race or ethnicity; however, these electives can utilize commitment to stated aims and missions of the rotation, such as a commitment to diversity within medicine, as a basis for selecting candidates.8

The ruling did not address how race or ethnicity is factored into financial aid or scholarship determination. There has been concern in higher education that the legal framework utilized in the SCOTUS decision could affect financial aid and scholarship decisions; therefore, many institutions are proceeding with caution in their approach.8

Effect on Residency Selection

Because the SCOTUS ruling references colleges and universities, not health care employers, it should not affect the residency selection process; however, there is variability in how health care institutions are interpreting the impact of the ruling on residency selection, with some taking a more prescriptive and cautious view on the matter. That said, residency selection is considered an employment practice covered by Title VII of the Civil Rights Act of 1964,13 which already prohibits the consideration of race in hiring decisions.7 Under Title VII, it is unlawful for employers to discriminate against someone because of race, color, religion, sex, or national origin, and it is “unlawful to use policies or practices that seem neutral but have the effect of discriminating against people because of their race, color, religion, sex … or national origin.” Title VII also states that employers cannot “make employment decisions based on stereotypes or assumptions about a person’s abilities, traits, or performance because of their race, color, religion, sex … or national origin.”13

Importantly, Title VII does not imply that employers need to abandon their diversity, equity, or inclusion initiatives, and it does not imply that employers must revoke their mission to improve diversity in the workforce. Title VII does not state that racial information cannot be available. It would be permissible to use racial data to assess recruitment trends, identify inequities, and create programs to eliminate barriers and decrease bias14; for example, if a program identified that, based on their current review system, students who are underrepresented in medicine were disproportionately screened out of the applicant pool or interview group, they may wish to revisit their review process to identify and eliminate possible biases. Programs also may wish to adopt educational programs for reviewers (eg, implicit bias training) or educational content on the potential for bias in commonly used review criteria, such as the US Medical Licensing Examination, clerkship grades, and the Medical Student Performance Evaluation.15 Reviewers can and should consider applications in an individualized and holistic manner in which experiences, traits, skills, and academic metrics are assessed together for compatibility with the values and mission of the training program.16

Future Directions for Dermatology

Beyond the SCOTUS ruling, there have been other shifts in the dermatology residency application process that have affected candidate review. Dermatology programs recently have adopted the use of preference signaling in residency applications. Preliminary data from the Association of American Medical Colleges for the 2024 application cycle indicated that of the 81 programs analyzed, there was a nearly 0% chance of an applicant receiving an interview invitation from a program that they did not signal. The median signal-to-interview conversion rate for the 81 dermatology programs analyzed was 55% for gold signals and 15% for silver signals.17 It can be inferred from these data that programs are using preference signaling as an important criterion when deciding on interview invitations. Programs may choose to focus most of their attention on the applicants who have signaled them. Because the number and type of signals available are equal among all applicants, we hope that this provides an equitable way for all applicants to garner holistic review from programs that interest them. In addition, there has been a 30% decrease in average applications submitted per dermatology applicant.18 With a substantial decline in applications to dermatology, we hope that reviewers are able to devote more time to comprehensive holistic review.

Although signals are equitable for applicants, their distribution among programs may not be; for example, in a given year, a program might find that all their gold signals came from non–underrepresented in medicine students. We encourage programs to carefully review applicant data to ensure their recruitment process is not inadvertently discriminatory and is in alignment with their goals and mission.

1. Students for Fair Admissions, Inc. v University of North Carolina, 567 F. Supp. 3d 580 (M.D.N.C. 2021).

2. Students for Fair Admissions, Inc. v President and Fellows of Harvard College, 600 US ___ (2023).

3. Grutter v Bollinger, 539 US 306 (2003).

4. Saul S. 9 states have banned affirmative action. Here’s what that looks like. The New York Times. October 31, 2022. https://www.nytimes.com/2022/10/31/us/politics/affirmative-action-ban-states.html

5. Desilver D. Private, selective colleges are most likely to use race, ethnicity as a factor in admissions decisions. Pew Research Center. July 14, 2023. Accessed May 29, 2024. https://www.pewresearch.org/short-reads/2023/07/14/private-selective-colleges-are-most-likely-to-use-race-ethnicity-as-a-factor-in-admissions-decisions/

6. US Department of Education. Justice and education departments release resources to advance diversity and opportunity in higher education. August 14, 2023. Accessed May 17, 2024. https://www.ed.gov/news/press-releases/advance-diversity-and-opportunity-higher-education-justice-and-education-departments-release-resources-advance-diversity-and-opportunity-higher-education

7. Amponsah MN, Hamid RD. Harvard overhauls college application in wake of affirmative action decision. The Harvard Crimson. August 3, 2023. Accessed May 17, 2024. https://www.thecrimson.com/article/2023/8/3/harvard-admission-essay-change/

8. Association of American Medical Colleges. Frequently asked questions: what does the Harvard and UNC decision mean for medical education? August 24, 2023. Accessed May 17, 2024. https://www.aamc.org/media/68771/download?attachment%3Fattachment

9. American Medical Association. Affirmative action ends: how Supreme Court ruling impacts medical schools & the health care workforce. July 7, 2023. Accessed May 17, 2024. https://www.ama-assn.org/medical-students/medical-school-life/affirmative-action-ends-how-supreme-court-ruling-impacts

10. Association of American Medical Colleges. How can medical schools boost racial diversity in the wake of the recent Supreme Court ruling? July 27, 2023. Accessed May 17, 2024. https://www.aamc.org/news/how-can-medical-schools-boost-racial-diversity-wake-recent-supreme-court-ruling

11. Association of American Medical Colleges. Diversity in medical school admissions. Updated March 18, 2024. Accessed May 17, 2024. https://www.aamc.org/about-us/mission-areas/medical-education/diversity-medical-school-admissions

12. United States Department of Justice. Questions and answers regarding the Supreme Court’s decision in Students For Fair Admissions, Inc. v. Harvard College and University of North Carolina. August 14, 2023. Accessed May 29, 2024. https://www.justice.gov/d9/2023-08/post-sffa_resource_faq_final_508.pdf

13. US Department of Justice. Title VII of the Civil Rights Act of 1964. Accessed May 17, 2024. https://www.justice.gov/crt/laws-we-enforce

14. Zheng L. How to effectively—and legally—use racial data for DEI. Harvard Business Review. July 24, 2023. Accessed May 17, 2024. https://hbr.org/2023/07/how-to-effectively-and-legally-use-racial-data-for-dei

15. Crites K, Johnson J, Scott N, et al. Increasing diversity in residency training programs. Cureus. 2022;14:E25962. doi:10.7759/cureus.25962

16. Association of American Medical Colleges. Holistic principles in resident selection: an introduction. Accessed May 17, 2024. https://www.aamc.org/media/44586/download?attachment

17. Association of American Medical Colleges. Exploring the relationship between program signaling & interview invitations across specialties 2024 ERAS® preliminary analysis. December 29, 2023. Accessed May 17, 2024. https://www.aamc.org/media/74811/download?attachment

18. Association of American Medical Colleges. Preliminary program signaling data and their impact on residency selection. October 24, 2023. Accessed May 17, 2024. https://www.aamc.org/services/eras-institutions/program-signaling-data#:~:text=Preliminary%20Program%20Signaling%20Data%20and%20Their%20Impact%20on%20Residency%20Selection,-Oct.&text=Program%20signals%20are%20a%20mechanism,whom%20to%20invite%20for%20interview

The ruling by the Supreme Court of the United States (SCOTUS) in 20231,2 on the use of race-based criteria in college admissions was met with a range of reactions across the country. Given the implications of this decision on the future makeup of higher education, the downstream effects on medical school admissions, and the possible further impact on graduate medical education programs, we sought to explore the potential impact of the landmark decision from the perspective of dermatology residency program directors and offer insights on this pivotal judgment.

Background on the SCOTUS Ruling

In June 2023, SCOTUS issued its formal decision on 2 court cases brought by the organization Students for Fair Admissions (SFFA) against the University of North Carolina at Chapel Hill1 and Harvard University (Cambridge, Massachusetts)2 that addressed college admissions practices dealing with the use of race as a selection criterion in the application process. The cases alleged that these universities had overly emphasized race in the admissions process and thus were in violation of the Civil Rights Act of 1964 as well as the 14th Amendment.1,2

The SCOTUS justices voted 6 to 3 in favor of the argument presented by the SFFA, determining that the use of race in the college admissions process essentially constituted a form of racial discrimination. The ruling was in contrast to a prior decision in 2003 that centered on law school admissions at the University of Michigan (Ann Arbor, Michigan) in which SCOTUS previously had determined that race could be used as one factor amongst other criteria in the higher education selection process.3 In the 2023 decision siding with SFFA, SCOTUS did acknowledge that it was still acceptable for selection processes to consider “an applicant’s discussion of how race affected his or her life, be it through discrimination, inspiration, or otherwise.”2

Effect on Undergraduate Admissions

Prior to the 2023 ruling, several states had already passed independent laws against the use of affirmative action or race-based selection criteria in the admissions process at public colleges and universities.4 As a result, these institutions would already be conforming to the principles set forth in the SCOTUS ruling and major changes to their undergraduate admissions policies would not be expected; however, a considerable number of colleges and universities—particularly those considered highly selective with applicant acceptance rates that are well below the national average—reported the use of race as a factor in their admissions processes in standardized reporting surveys.5 For these institutions, it is no longer considered acceptable (based on the SCOTUS decision) to use race as a singular factor in admissions or to implement race-conscious decision-making—in which individuals are considered differently based solely on their race—as part of the undergraduate selection process.

In light of these rulings, many institutions have explicitly committed to upholding principles of diversity in their recruitment processes, acknowledging the multifaceted nature of diversity beyond strictly racial terms—including but not limited to socioeconomic diversity, religious diversity, or gender diversity—which is in compliance with the interpretation ruling by the US Department of Education and the US Department of Justice.6 Additionally, select institutions have taken approaches to explicitly include questions on ways in which applicants have overcome obstacles or challenges, allowing an opportunity for individuals who have had such experiences related to race an opportunity to incorporate these elements into their applications. Finally, some institutions have taken a more limited approach, eliminating ways in which race is explicitly addressed in the application and focusing on race-neutral elements of the application in their approach to selection.7

Because the first college admission cycle since the 2023 SCOTUS ruling is still underway, we have yet to witness the full impact of this decision on the current undergraduate admissions landscape.

Effect on Medical School Admissions and Rotations

Although SCOTUS specifically examined the undergraduate admissions process, the ruling on race-conscious admissions also had a profound impact on graduate school admissions including medical school admission processes.1,2,8,9 This is because the language of the majority opinion refers to “university programs” in its ruling, which also has been broadly interpreted to include graduate school programs. As with undergraduate admissions, it has been interpreted by national medical education organizations and institutions that medical schools also cannot consider an applicant’s race or ethnicity as a specific factor in the admissions process.1,2,8,9

Lived individual experiences, including essays that speak to an applicant’s lived experiences and career aspirations related to race, still can be taken into account. In particular, holistic review still can be utilized to evaluate medical school candidates and may play a more integral role in the medical school admissions process now than in the past.8,10,11 After the ruling, Justice Sonia Sotomayor noted that “today’s decision leaves intact holistic college admissions and recruitment efforts that seek to enroll diverse classes without using racial classifications.”1

The ruling asserted that universities may define their mission as they see fit. As a result, the ruling did not affect medical school missions or strategic plans, including those that may aim to diversify the health care workforce.8,10,11 The ruling also did not affect the ability to utilize pathway programs to encourage a career in medicine or recruitment relationships with diverse undergraduate or community-based organizations. Student interest groups also can be involved in the relationship-building or recruitment activities for medical schools.8,10,11 Guidance from the US Department of Education and US Department of Justice noted that institutions may consider race in identifying prospective applicants through recruitment and outreach, “provided that their outreach and recruitment programs do not provide targeted groups of prospective students preference in the admissions process, and provided that all students—whether part of a specifically targeted group or not—enjoy the same opportunity to apply and compete for admission.”12

In regard to pathways programs, slots cannot be reserved and preference cannot be given to applicants who participated in these programs if race was a factor in selecting participants.8 Similarly, medical school away electives related to diversity cannot be reserved for those of a specific race or ethnicity; however, these electives can utilize commitment to stated aims and missions of the rotation, such as a commitment to diversity within medicine, as a basis to selecting candidates.8

The ruling did not address how race or ethnicity is factored into financial aid or scholarship determination. There has been concern in higher education that the legal framework utilized in the SCOTUS decision could affect financial aid and scholarship decisions; therefore, many institutions are proceeding with caution in their approach.8

Effect on Residency Selection

Because the SCOTUS ruling references colleges and universities, not health care employers, it should not affect the residency selection process; however, there is variability in how health care institutions are interpreting the impact of the ruling on residency selection, with some taking a more prescriptive and cautious view on the matter. That said, residency selection is considered an employment practice covered by Title VII of the Civil Rights Act of 1964,13 which already prohibits the consideration of race in hiring decisions.7 Under Title VII, it is unlawful for employers to discriminate against someone because of race, color, religion, sex, or national origin, and it is “unlawful to use policies or practices that seem neutral but have the effect of discriminating against people because of their race, color, religion, sex … or national origin.” Title VII also states that employers cannot “make employment decisions based on stereotypes or assumptions about a person’s abilities, traits, or performance because of their race, color, religion, sex … or national origin.”13

Importantly, Title VII does not imply that employers need to abandon their diversity, equity, or inclusion initiatives, and it does not imply that employers must revoke their mission to improve diversity in the workforce. Title VII does not state that racial information cannot be available. It would be permissible to use racial data to assess recruitment trends, identify inequities, and create programs to eliminate barriers and decrease bias14; for example, if a program identified that, based on its current review system, students who are underrepresented in medicine were disproportionately screened out of the applicant pool or interview group, it may wish to revisit its review process to identify and eliminate possible biases. Programs also may wish to adopt educational programs for reviewers (eg, implicit bias training) or educational content on the potential for bias in commonly used review criteria, such as the US Medical Licensing Examination, clerkship grades, and the Medical Student Performance Evaluation.15 Reviewers can and should consider applications in an individualized and holistic manner in which experiences, traits, skills, and academic metrics are assessed together for compatibility with the values and mission of the training program.16

Future Directions for Dermatology

Beyond the SCOTUS ruling, there have been other shifts in the dermatology residency application process that have affected candidate review. Dermatology programs recently have adopted the use of preference signaling in residency applications. Preliminary data from the Association of American Medical Colleges for the 2024 application cycle indicated that, across the 81 dermatology programs analyzed, an applicant had a nearly 0% chance of receiving an interview invitation from a program that they did not signal. The median signal-to-interview conversion rate for these 81 programs was 55% for gold signals and 15% for silver signals.17 It can be inferred from these data that programs are using preference signaling as an important criterion when considering interview invitations. Programs may choose to focus most of their attention on the applicants who have signaled them. Because the number and type of signals available are equal among all applicants, we hope that this provides an equitable way for all applicants to garner holistic review from programs that interest them. In addition, there has been a 30% decrease in average applications submitted per dermatology applicant.18 With a substantial decline in applications to dermatology, we hope that reviewers are able to devote more time to comprehensive holistic review.

Although signals are equitable for applicants, their distribution among programs may not be; for example, in a given year, a program might find that all their gold signals came from non–underrepresented in medicine students. We encourage programs to carefully review applicant data to ensure their recruitment process is not inadvertently discriminatory and is in alignment with their goals and mission.

