Why Incorporating Obstetric History Matters for CVD Risk Management in Autoimmune Diseases

NEW YORK — Systemic autoimmune disease is well-recognized as a major risk factor for cardiovascular disease (CVD), but less recognized as a cardiovascular risk factor is a history of pregnancy complications, including preeclampsia, and cardiologists and rheumatologists need to include an obstetric history when managing patients with autoimmune diseases, a specialist in reproductive health in rheumatology told attendees at the 4th Annual Cardiometabolic Risk in Inflammatory Conditions conference.

“Autoimmune diseases, lupus in particular, increase the risk for both cardiovascular disease and maternal placental syndromes,” Lisa R. Sammaritano, MD, a professor at Hospital for Special Surgery in New York City and a specialist in reproductive health issues in rheumatology patients, told attendees. “For those patients who have complications during pregnancy, it further increases their already increased risk for later cardiovascular disease.”

CVD Risk Double Whammy

A history of systemic lupus erythematosus (SLE) and problematic pregnancy can be a double whammy for CVD risk. Dr. Sammaritano cited a 2022 meta-analysis that showed patients with SLE had a 2.5 times greater risk for stroke and almost three times greater risk for myocardial infarction than people without SLE.

Maternal placental syndromes include pregnancy loss, restricted fetal growth, preeclampsia, premature membrane rupture, placental abruption, and intrauterine fetal demise, Dr. Sammaritano said. Hypertensive disorders of pregnancy, formerly called adverse pregnancy outcomes, she noted, include gestational hypertension, preeclampsia, and eclampsia.

Pregnancy complications can have an adverse effect on the mother’s postpartum cardiovascular health, Dr. Sammaritano noted. That effect is borne out by the Cardiovascular Health After Maternal Placental Syndromes (CHAMPS) population-based retrospective cohort study and by a 2007 meta-analysis that found a history of preeclampsia doubles the risk for venous thromboembolism, stroke, and ischemic heart disease up to 15 years after pregnancy.

“It is always important to obtain a reproductive health history from patients with autoimmune diseases,” Dr. Sammaritano told this news organization in an interview. “This is an integral part of any medical history. In the usual setting, this includes not only pregnancy history but also use of contraception in reproductive-aged women. Unplanned pregnancy can lead to adverse outcomes in the setting of active or severe autoimmune disease or when teratogenic medications are used.”

Pregnancy history can be a factor in a woman’s cardiovascular health more than 15 years postpartum, even if a woman is no longer planning a pregnancy or is menopausal. “As such, this history is important in assessing every woman’s risk profile for CVD in addition to usual traditional risk factors,” Dr. Sammaritano said.

“It is even more important for women with autoimmune disorders, who have been shown to have an already increased risk for CVD independent of their pregnancy history, likely related to a chronic inflammatory state and other autoimmune-related factors such as presence of antiphospholipid antibodies [aPL] or use of corticosteroids.”

Timing of disease onset is also an issue, she said. “In patients with SLE, for example, onset of CVD is much earlier than in the general population,” Dr. Sammaritano said. “As a result, these patients should likely be assessed for risk — both traditional and other risk factors — earlier than the general population, especially if an adverse obstetric history is present.”

At the younger end of the age continuum, women with autoimmune disease, including SLE and antiphospholipid syndrome, who are pregnant should be put on guideline-directed low-dose aspirin preeclampsia prophylaxis, Dr. Sammaritano said. “Whether every patient with SLE needs this is still uncertain, but certainly, those with a history of renal disease, hypertension, or aPL antibody clearly do,” she added.

The evidence supporting hydroxychloroquine (HCQ) in these patients is controversial, but Dr. Sammaritano noted two meta-analyses, one in 2022 and the other in 2023, that showed that HCQ lowered the risk for preeclampsia in women.

“The clear benefit of HCQ in preventing maternal disease complications, including flare, means we recommend it regardless for all patients with SLE at baseline and during pregnancy [if tolerated],” Dr. Sammaritano said. “The benefit or optimal use of these medications in other autoimmune diseases is less studied and less certain.”

Dr. Sammaritano added in her presentation, “We really need better therapies and, hopefully, those will be on the way, but I think the takeaway message, particularly for practicing rheumatologists and cardiologists, is to ask the question about obstetric history. Many of us don’t. It doesn’t seem relevant in the moment, but it really is in terms of the patient’s long-term risk for cardiovascular disease.”

The Case for Treatment During Pregnancy

Prophylaxis against pregnancy complications in patients with autoimmune disease may be achievable, Taryn Youngstein, MBBS, consultant rheumatologist and codirector of the Centre of Excellence in Vasculitis Research, Imperial College London, London, England, told this news organization after Dr. Sammaritano’s presentation. At the 2023 American College of Rheumatology Annual Meeting, her group reported the safety and effectiveness of continuing tocilizumab in pregnant women with Takayasu arteritis, a large-vessel vasculitis predominantly affecting women of reproductive age.

“What traditionally happens is you would stop the biologic particularly before the third trimester because of safety and concerns that the monoclonal antibody is actively transported across the placenta, which means the baby gets much more concentration of the drug than the mum,” Dr. Youngstein said.

It’s a situation physicians must monitor closely, she said. “The mum is donating their immune system to the baby, but they’re also donating drug.”

“In high-risk patients, we would share decision-making with the patient,” Dr. Youngstein continued. “We have decided it’s too high of a risk for us to stop the drug, so we have been continuing the interleukin-6 [IL-6] inhibitor throughout the entire pregnancy.”

The data from Dr. Youngstein’s group showed that pregnant women with Takayasu arteritis who continued IL-6 inhibition therapy all carried to term with healthy births.

“We’ve shown that it’s relatively safe to do that, but you have to be very careful in monitoring the baby,” she said. That includes withholding live vaccines from the infant at birth, because the infant will have high levels of IL-6 inhibition, she added.

Dr. Sammaritano and Dr. Youngstein had no relevant financial relationships to disclose.

A version of this article appeared on Medscape.com.

Tool May Help Prioritize High-Risk Patients for Hysteroscopy

Hysteroscopy is a crucial examination for the diagnosis of endometrial cancer. In Brazil, women with postmenopausal bleeding who need to undergo this procedure in the public health system wait in line alongside patients with less severe complaints. Until now, there has been no system to prioritize patients at high risk for cancer. But this situation may change, thanks to a Brazilian study published in February in the Journal of Clinical Medicine.

Researchers from the Municipal Hospital of Vila Santa Catarina in São Paulo, a public unit managed by Hospital Israelita Albert Einstein, have developed the Endometrial Malignancy Prediction System (EMPS), a nomogram to identify patients at high risk for endometrial cancer and prioritize them in the hysteroscopy waiting list.

Bruna Bottura, MD, a gynecologist and obstetrician at Hospital Israelita Albert Einstein and the study’s lead author, told this news organization that the idea to create the nomogram arose during the COVID-19 pandemic. “We noticed that ... when outpatient clinics resumed, we were seeing many patients for intrauterine device (IUD) removal. We thought it was unfair for a patient with postmenopausal bleeding, who has a chance of having cancer, to have to wait in the same line as a patient needing IUD removal,” she said. This realization motivated the development of the tool, which was overseen by Renato Moretti-Marques, MD, PhD.

The EMPS Score

The team conducted a retrospective case-control study involving 1945 patients with suspected endometrial cancer who had undergone diagnostic hysteroscopy at Hospital Israelita Albert Einstein between March 2019 and March 2022. Among these patients, 107 were diagnosed with precursor lesions or endometrial cancer on the basis of biopsy. The other 1838 participants, who had had cancer ruled out by biopsy, formed the control group.

Through bivariate and multivariate linear regression analysis, the authors identified the main risk factors for an endometrial cancer diagnosis: hypertension, diabetes, postmenopausal bleeding, endometrial polyps, uterine volume, number of pregnancies, body mass index, age, and endometrial thickness.

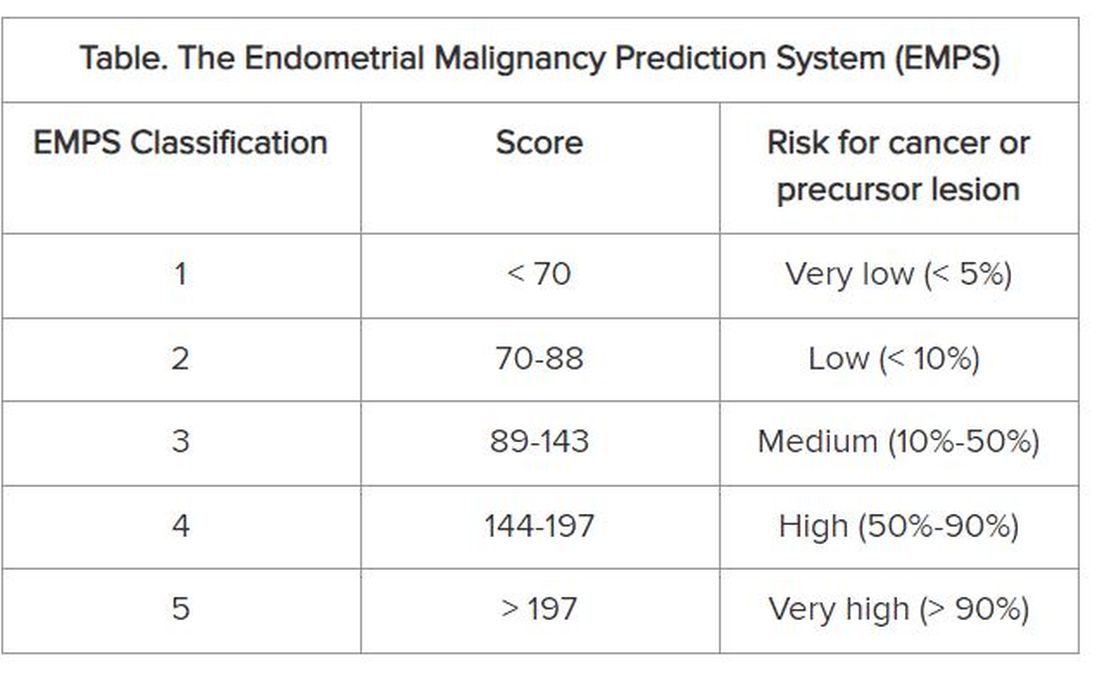

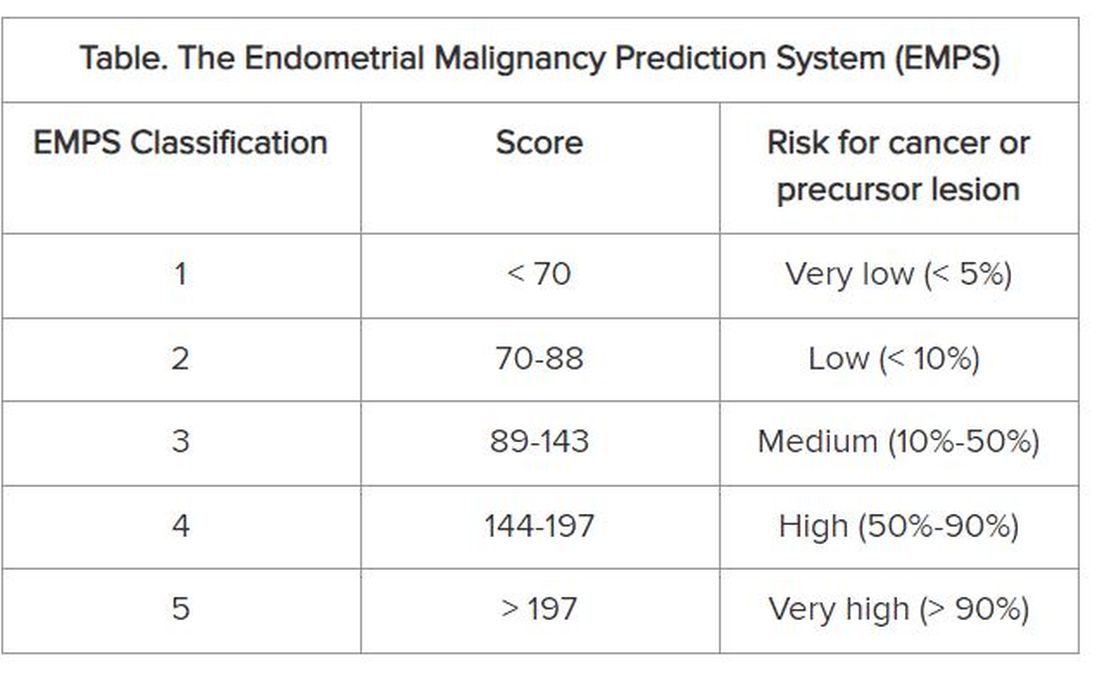

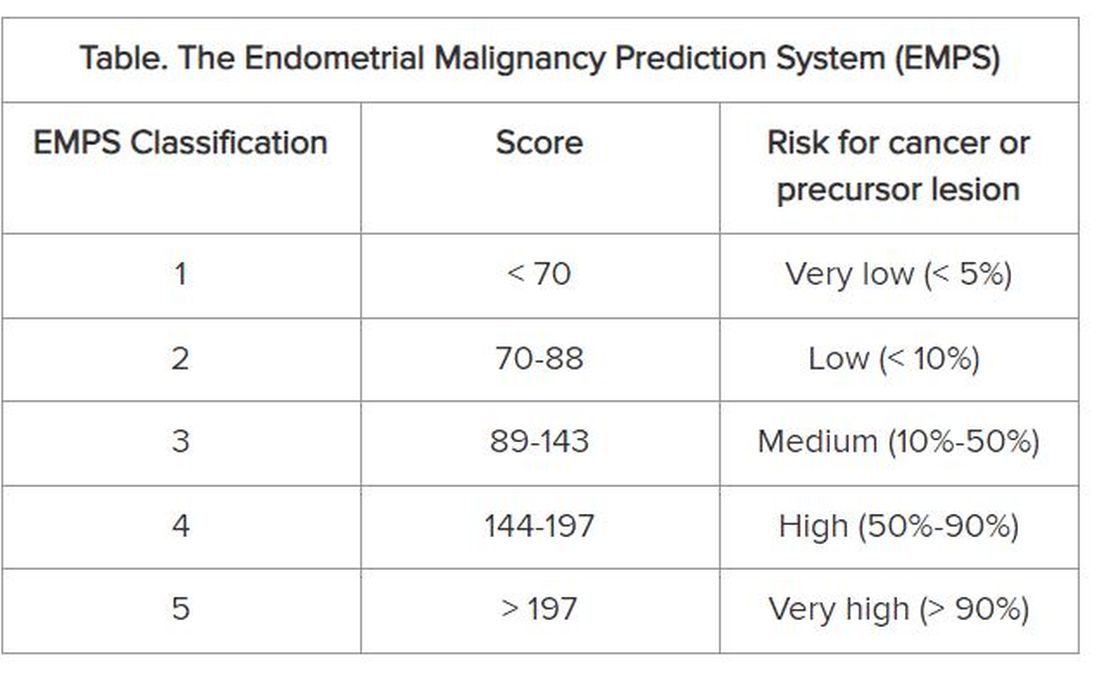

On the basis of these data, the group developed the EMPS nomogram. Physicians can use it to classify the patient’s risk according to the sum of the scores assigned to each of these factors.

The Table shows the classification system. The scoring tables are available in the supplemental materials of the article.
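
To make the "sum of the scores" idea concrete, here is a minimal sketch of how an additive risk score of this kind could be computed. The point values, the cutoff, and the names `HYPOTHETICAL_POINTS`, `HYPOTHETICAL_HIGH_RISK_CUTOFF`, `total_score`, and `risk_class` are all assumptions made for illustration. They are not the published EMPS values, which are in the article's supplemental scoring tables. The sketch also covers only yes/no factors and leaves out the continuous ones (age, body mass index, uterine volume, endometrial thickness).

```python
from typing import Mapping

# HYPOTHETICAL point values, for illustration only. The published EMPS
# points live in the article's supplemental scoring tables.
HYPOTHETICAL_POINTS = {
    "hypertension": 1,
    "diabetes": 1,
    "endometrial_polyp": 1,
    "postmenopausal_bleeding": 3,
}

# HYPOTHETICAL cutoff: totals at or above this value count as high risk.
HYPOTHETICAL_HIGH_RISK_CUTOFF = 4


def total_score(findings: Mapping[str, bool]) -> int:
    """Sum the points of every factor recorded as present."""
    return sum(
        points
        for factor, points in HYPOTHETICAL_POINTS.items()
        if findings.get(factor, False)
    )


def risk_class(score: int) -> str:
    """Map a summed score to a risk label using the placeholder cutoff."""
    return "high" if score >= HYPOTHETICAL_HIGH_RISK_CUTOFF else "low"


# Example patient record with two factors present.
patient = {"hypertension": True, "postmenopausal_bleeding": True}
score = total_score(patient)
print(score, risk_class(score))
```

A real implementation would replace the placeholder table and cutoff with the published ones and would need a rule for converting each continuous measurement into points.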

Focus on Primary Care

The goal is not to remove patients classified as low risk from the hysteroscopy waiting list, but rather to prioritize those classified as high risk to get the examination, according to Dr. Bottura.

At the Municipal Hospital of Vila Santa Catarina, the average wait time for hysteroscopy was 120 days. But because the unit is focused on oncologic patients and has a high level of organization, this time is much shorter than observed in other parts of Brazil’s National Health Service, said Dr. Bottura. “Many patients are on the hysteroscopy waiting list for 2 years. Considering patients in more advanced stages [of endometrial cancer], it makes a difference,” she said.

Although the nomogram was developed in tertiary care, it is aimed at professionals working in primary care. The reason is that physicians from primary care health units refer women with clinical indications for hysteroscopy to specialized national health services, such as the Municipal Hospital of Vila Santa Catarina. “Our goal is the primary sector, to enable the clinic to refer this high-risk patient sooner. By the time you reach the tertiary sector, where hysteroscopies are performed, all patients will undergo the procedure. Usually, it is not the hospitals that predetermine the line, but rather the health clinics,” she explained.

The researchers hope to continue the research, starting with a prospective study. “We intend to apply and evaluate the tool within our own service to observe whether any patient with a high [EMPS] score ended up waiting too long to be referred. In fact, this will be a system validation step,” said Dr. Bottura.

In parallel, the team has a proposal to take the tool to health clinics in the same region as the study hospital. “We know this involves changing the protocol at a national level, so it’s more challenging,” said Dr. Bottura. She added that the final goal is to create a calculator, possibly an app, that allows primary care doctors to calculate the risk score in the office. This calculator could enable risk classification to be linked to patient referrals.

This story was translated from the Medscape Portuguese edition using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article first appeared on Medscape.com.

Hysteroscopy is a crucial examination for the diagnosis of endometrial cancer. In Brazil, women with postmenopausal bleeding who need to undergo this procedure in the public health system wait in line alongside patients with less severe complaints. Until now, there has been no system to prioritize patients at high risk for cancer. But this situation may change, thanks to a Brazilian study published in February in the Journal of Clinical Medicine.

Researchers from the Municipal Hospital of Vila Santa Catarina in São Paulo, a public unit managed by Hospital Israelita Albert Einstein, have developed the Endometrial Malignancy Prediction System (EMPS), a nomogram to identify patients at high risk for endometrial cancer and prioritize them in the hysteroscopy waiting list.

Bruna Bottura, MD, a gynecologist and obstetrician at Hospital Israelita Albert Einstein and the study’s lead author, told this news organization that the idea to create the nomogram arose during the COVID-19 pandemic. “We noticed that ... when outpatient clinics resumed, we were seeing many patients for intrauterine device (IUD) removal. We thought it was unfair for a patient with postmenopausal bleeding, who has a chance of having cancer, to have to wait in the same line as a patient needing IUD removal,” she said. This realization motivated the development of the tool, which was overseen by Renato Moretti-Marques, MD, PhD.

The EMPS Score

The team conducted a retrospective case-control study involving 1945 patients with suspected endometrial cancer who had undergone diagnostic hysteroscopy at Hospital Israelita Albert Einstein between March 2019 and March 2022. Among these patients, 107 were diagnosed with precursor lesions or endometrial cancer on the basis of biopsy. The other 1838 participants, who had had cancer ruled out by biopsy, formed the control group.

Through bivariate and multivariate linear regression analysis, the authors determined that the presence or absence of hypertension, diabetes, postmenopausal bleeding, endometrial polyps, uterine volume, number of pregnancies, body mass index, age, and endometrial thickness were the main risk factors for endometrial cancer diagnosis.

On the basis of these data, the group developed the EMPS nomogram. Physicians can use it to classify the patient’s risk according to the sum of the scores assigned to each of these factors.

The Table shows the classification system. The scoring tables available in the supplemental materials of the article can be accessed here.

Focus on Primary Care

The goal is not to remove patients classified as low risk from the hysteroscopy waiting list, but rather to prioritize those classified as high risk to get the examination, according to Dr. Bottura.

At the Municipal Hospital of Vila Santa Catarina, the average wait time for hysteroscopy was 120 days. But because the unit is focused on oncologic patients and has a high level of organization, this time is much shorter than observed in other parts of Brazil’s National Health Service, said Dr. Bottura. “Many patients are on the hysteroscopy waiting list for 2 years. Considering patients in more advanced stages [of endometrial cancer], it makes a difference,” she said.

Although the nomogram was developed in tertiary care, it is aimed at professionals working in primary care. The reason is that physicians from primary care health units refer women with clinical indications for hysteroscopy to specialized national health services, such as the Municipal Hospital of Vila Santa Catarina. “Our goal is the primary sector, to enable the clinic to refer this high-risk patient sooner. By the time you reach the tertiary sector, where hysteroscopies are performed, all patients will undergo the procedure. Usually, it is not the hospitals that predetermine the line, but rather the health clinics,” she explained.

The researchers hope to continue the research, starting with a prospective study. “We intend to apply and evaluate the tool within our own service to observe whether any patient with a high [EMPS] score patient ended up waiting too long to be referred. In fact, this will be a system validation step,” said Dr. Bottura.

In parallel, the team has a proposal to take the tool to health clinics in the same region as the study hospital. “We know this involves changing the protocol at a national level, so it’s more challenging,” said Dr. Bottura. She added that the final goal is to create a calculator, possibly an app, that allows primary care doctors to calculate the risk score in the office. This calculator could enable risk classification to be linked to patient referrals.

This story was translated from the Medscape Portuguese edition using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article first appeared on Medscape.com.

Hysteroscopy is a crucial examination for the diagnosis of endometrial cancer. In Brazil, women with postmenopausal bleeding who need to undergo this procedure in the public health system wait in line alongside patients with less severe complaints. Until now, there has been no system to prioritize patients at high risk for cancer. But this situation may change, thanks to a Brazilian study published in February in the Journal of Clinical Medicine.

Researchers from the Municipal Hospital of Vila Santa Catarina in São Paulo, a public unit managed by Hospital Israelita Albert Einstein, have developed the Endometrial Malignancy Prediction System (EMPS), a nomogram to identify patients at high risk for endometrial cancer and prioritize them in the hysteroscopy waiting list.

Bruna Bottura, MD, a gynecologist and obstetrician at Hospital Israelita Albert Einstein and the study’s lead author, told this news organization that the idea to create the nomogram arose during the COVID-19 pandemic. “We noticed that ... when outpatient clinics resumed, we were seeing many patients for intrauterine device (IUD) removal. We thought it was unfair for a patient with postmenopausal bleeding, who has a chance of having cancer, to have to wait in the same line as a patient needing IUD removal,” she said. This realization motivated the development of the tool, which was overseen by Renato Moretti-Marques, MD, PhD.

The EMPS Score

The team conducted a retrospective case-control study involving 1945 patients with suspected endometrial cancer who had undergone diagnostic hysteroscopy at Hospital Israelita Albert Einstein between March 2019 and March 2022. Among these patients, 107 were diagnosed with precursor lesions or endometrial cancer on the basis of biopsy. The other 1838 participants, who had had cancer ruled out by biopsy, formed the control group.

Through bivariate and multivariate linear regression analysis, the authors determined that the presence or absence of hypertension, diabetes, postmenopausal bleeding, endometrial polyps, uterine volume, number of pregnancies, body mass index, age, and endometrial thickness were the main risk factors for endometrial cancer diagnosis.

On the basis of these data, the group developed the EMPS nomogram. Physicians can use it to classify the patient’s risk according to the sum of the scores assigned to each of these factors.

The Table shows the classification system. The scoring tables available in the supplemental materials of the article can be accessed here.
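As a rough illustration of how a sum-of-points tool of this kind could be used to order a waiting list, the sketch below adds up points for each factor that is present and sorts patients by total. Every number in it is a hypothetical placeholder: the point values, the cutoff, and the function names are assumptions made for the example, not the published EMPS values, which are in the article's supplemental materials. Continuous factors such as age or endometrial thickness are left out for brevity; a real nomogram would assign points to ranges of those values.

```python
# HYPOTHETICAL point values, for illustration only.
# These are NOT the published EMPS weights.
PLACEHOLDER_POINTS = {
    "hypertension": 1,
    "diabetes": 1,
    "postmenopausal_bleeding": 2,
    "endometrial_polyp": 1,
}

# HYPOTHETICAL cutoff separating "high" from "low" risk.
PLACEHOLDER_HIGH_RISK_CUTOFF = 3


def total_score(findings: dict[str, bool], points: dict[str, int]) -> int:
    """Sum the points of every factor recorded as present."""
    unknown = set(findings) - set(points)
    if unknown:
        raise KeyError(f"no points defined for: {sorted(unknown)}")
    return sum(points[name] for name, present in findings.items() if present)


def classify(score: int, cutoff: int) -> str:
    """Label a total score as 'high' or 'low' risk against a cutoff."""
    return "high" if score >= cutoff else "low"


def prioritize(waiting_list, points):
    """Return (patient_id, score) pairs, highest score first.

    Nobody is dropped from the list, and patients with equal scores
    keep their original queue order because the sort is stable.
    """
    scored = [(pid, total_score(findings, points)) for pid, findings in waiting_list]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    queue = [
        ("patient-A", {"hypertension": True, "postmenopausal_bleeding": False}),
        ("patient-B", {"hypertension": True, "postmenopausal_bleeding": True}),
    ]
    for pid, score in prioritize(queue, PLACEHOLDER_POINTS):
        print(pid, score, classify(score, PLACEHOLDER_HIGH_RISK_CUTOFF))
```

Sorting rather than filtering mirrors the stated aim of moving high-risk patients forward without taking anyone off the list.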

Focus on Primary Care

The goal is not to remove patients classified as low risk from the hysteroscopy waiting list, but rather to prioritize those classified as high risk to get the examination, according to Dr. Bottura.

At the Municipal Hospital of Vila Santa Catarina, the average wait time for hysteroscopy was 120 days. But because the unit is focused on oncologic patients and has a high level of organization, this time is much shorter than observed in other parts of Brazil’s National Health Service, said Dr. Bottura. “Many patients are on the hysteroscopy waiting list for 2 years. Considering patients in more advanced stages [of endometrial cancer], it makes a difference,” she said.

Although the nomogram was developed in tertiary care, it is aimed at professionals working in primary care. The reason is that physicians from primary care health units refer women with clinical indications for hysteroscopy to specialized national health services, such as the Municipal Hospital of Vila Santa Catarina. “Our goal is the primary sector, to enable the clinic to refer this high-risk patient sooner. By the time you reach the tertiary sector, where hysteroscopies are performed, all patients will undergo the procedure. Usually, it is not the hospitals that predetermine the line, but rather the health clinics,” she explained.

The researchers hope to continue the research, starting with a prospective study. “We intend to apply and evaluate the tool within our own service to observe whether any patient with a high [EMPS] score ended up waiting too long to be referred. In fact, this will be a system validation step,” said Dr. Bottura.

In parallel, the team has a proposal to take the tool to health clinics in the same region as the study hospital. “We know this involves changing the protocol at a national level, so it’s more challenging,” said Dr. Bottura. She added that the final goal is to create a calculator, possibly an app, that allows primary care doctors to calculate the risk score in the office. This calculator could enable risk classification to be linked to patient referrals.

This story was translated from the Medscape Portuguese edition using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article first appeared on Medscape.com.

Lower Urinary Tract Symptoms Associated With Poorer Cognition in Older Adults

Lower urinary tract symptoms were significantly associated with lower scores on tests of cognitive function in older adults, based on data from approximately 10,000 individuals.

“We know that lower urinary tract symptoms are very common in aging men and women,” but older adults often underreport symptoms and avoid seeking treatment, Belinda Williams, MD, of the University of Alabama, Birmingham, said in a presentation at the annual meeting of the American Geriatrics Society.

“Evidence also shows us that the incidence of lower urinary tract symptoms (LUTS) is higher in patients with dementia,” she said. However, the association between cognitive impairment and LUTS has not been well studied, she said.

To address this knowledge gap, Dr. Williams and colleagues reviewed data from older adults with and without LUTS who were enrolled in the REasons for Geographic and Racial Differences in Stroke (REGARDS) study, a cohort study including 30,239 Black or White adults aged 45 years and older who completed telephone or in-home assessments in 2003-2007 and in 2013-2017.

The study population included 6062 women and 4438 men who responded to questionnaires about LUTS and completed several cognitive tests via telephone in 2019-2010. The tests evaluated verbal fluency, executive function, and memory, and included the Six-Item Screener, Animal Naming, Letter F naming, and word list learning; lower scores indicated poorer cognitive performance.

Participants who met the criteria for LUTS were categorized as having mild, moderate, or severe symptoms.

The researchers controlled for age, race, education, income, and urban/rural setting in a multivariate analysis. The mean ages of the women and men were 69 years and 63 years, respectively; 41% and 32% were Black, 59% and 68% were White.

Overall, 70% of women and 62% of men reported LUTS; 6.2% and 8.2%, respectively, met criteria for cognitive impairment. The association between cognitive impairment and LUTS was statistically significant for all specific tests (P < .01), but not for the global cognitive domain tests.

Black men were more likely to report LUTS than White men, but LUTS reports were similar between Black and White women.

Moderate LUTS was the most common degree of severity for men and women (54% and 64%, respectively).

The most common symptom overall was pre-toilet leakage (urge urinary incontinence), reported by 94% of women and 91% of men. The next most common symptoms for men and women were nocturia and urgency.

“We found that, across the board, in all the cognitive tests, LUTS were associated with lower cognitive test scores,” Dr. Williams said in her presentation. Little difference was seen on the Six-Item Screener, she noted, but when the researchers further analyzed the data using scores lower than 4 to indicate cognitive impairment, they found a significant association with LUTS.

The results, which showed that the presence of LUTS was consistently associated with lower scores on tests of verbal fluency, executive function, and memory, are applicable in clinical practice, Dr. Williams said in her presentation.

“Recognizing the subtle changes in cognition among older adults with LUTS may impact treatment decisions,” she said. “For example, we can encourage and advise our patients to be physically and cognitively active and to avoid anticholinergic medications.”

Next steps for research include analyzing longitudinal changes in cognition among participants with and without LUTS, said Dr. Williams.

During a question-and-answer session, Dr. Williams agreed with a comment that incorporating cognitive screening strategies into LUTS clinical pathways might be helpful, such as conducting a baseline Montreal Cognitive Assessment Test (MoCA) in patients with LUTS. “Periodic repeat MoCAs thereafter can help assess decline in cognition,” she said.

The study was supported by the National Institute of Neurological Disorders and Stroke and the National Institute on Aging. The researchers had no financial conflicts to disclose.

FROM AGS 2024

Clinicians Call for Easing FDA Warnings on Low-Dose Estrogen

Charles Powell, MD, said he sometimes has a hard time persuading patients to start on low-dose vaginal estrogen, which can help prevent urinary tract infections and ease other symptoms of menopause.

Many women fear taking these vaginal products because of what Dr. Powell considers excessively strong warnings about the risk for cancer and cardiovascular disease linked to daily estrogen pills that were issued in the early 2000s.

He is advocating for the US Food and Drug Administration (FDA) to remove the boxed warning on low-dose estrogen. His efforts are separate from his roles as an associate professor of urology at the Indiana University School of Medicine, and as a member of the American Urological Association (AUA), Dr. Powell said.

In his quest to find out how to change labeling, Dr. Powell has gained a quick education about drug regulation. He has enlisted Representative Jim Baird (R-IN) and Senator Mike Braun (R-IN) to contact the FDA on his behalf, while congressional staff guided him through the hurdles of getting the warning label changed. For instance, a manufacturer of low-dose estrogen may need to become involved.

“You don’t learn this in med school,” Dr. Powell said in an interview.

With this work, Dr. Powell is wading into a long-standing argument between the FDA and some clinicians and researchers about the potential harms of low-dose estrogen.

He is doing so at a time of increased interest in understanding genitourinary syndrome of menopause (GSM), a term coined a decade ago by the International Society for the Study of Women’s Sexual Health and the North American Menopause Society to cover “a constellation of conditions” related to urogenital atrophy.

Symptoms of GSM include vaginal dryness and burning and recurrent urinary tract infections.

The federal government in 2022 began a project with a budget of nearly $1 million to review evidence on treatments, including vaginal and low-dose estrogen. The aim is to eventually help the AUA develop clinical guidelines for addressing GSM.

In addition, a bipartisan Senate bill introduced in May calls for authorizing $125 million over 5 years for the National Institutes of Health (NIH) to fund research on menopause. Senator Patty Murray (D-WA), the lead sponsor of the bill, is a longtime advocate for women’s health and serves as chairwoman of the Senate Appropriations Committee, which largely sets the NIH budget.

“The bottom line is, for too long, menopause has been overlooked, underinvested in and left behind,” Sen. Murray said during a May 2 press conference. “It is well past time to stop treating menopause like some kind of secret and start treating it like the major mainstream public health issue it is.”

Evidence Demands

Increased federal funding for menopause research could help efforts to change the warning label on low-dose estrogen, according to JoAnn Manson, MD, chief of preventive medicine at Brigham and Women’s Hospital in Boston.

Dr. Manson was a leader of the Women’s Health Initiative (WHI), a major federally funded research project launched in 1991 to investigate if hormone therapy and diet could protect older women from chronic diseases related to aging.

Before the WHI, clinicians prescribed hormones to prevent cardiovascular disease, based on evidence from earlier research.

But in 2002, a WHI trial that compared estrogen-progestin tablets with placebo was halted early because of disturbing findings, including an association with higher risk for breast cancer and cardiovascular disease.

Compared with placebo, for every 10,000 women taking estrogen plus progestin for a year, there were seven to eight more cases each of cardiovascular disease, stroke, pulmonary embolism, and invasive breast cancer.

In January 2003, the FDA announced it would put a boxed warning about cardiovascular risk and cancer risk on estrogen products, reflecting the WHI finding.

The agency at the time said clinicians should work with patients to assess risks and benefits of these products to manage the effects of menopause.

But more news on the potential harms of estrogen followed in 2004: A WHI study comparing estrogen-only pills with placebo produced signals of a small increased risk for stroke, although it also indicated no excess risk for breast cancer for at least 6.8 years of use.

Dr. Manson and the North American Menopause Society in 2016 filed a petition with the FDA to remove the boxed warning that appears on the front of low-dose estrogen products. The group wanted the information on risks moved to the usual warning section of the label.

Two years later, the FDA rejected the petition, citing the absence of “well-controlled studies” to prove that low-dose topical estrogen poses less risk to women than the high-dose pills studied in the WHI.

The FDA told this news organization that it stands by the decisions in its rejection of the petition.

Persuading the FDA to revise the labels on low-dose estrogen products likely will require evidence from randomized, large-scale studies, Dr. Manson said. The agency has not been satisfied to date with findings from other kinds of studies, including observational research.

“Once that evidence is available that the benefit-risk profile is different for different formulations and the evidence is compelling and definitive, that warning should change,” Dr. Manson told this news organization.

But the warning continues to have a chilling effect on patient willingness to use low-dose vaginal estrogen, even with the FDA’s continued endorsement of estrogen for menopause symptoms, clinicians told this news organization.

Risa Kagan, MD, a gynecologist at Sutter Health in Berkeley, California, said in many cases her patients’ partners also need to be reassured. Dr. Kagan said she still sees women who have had to discontinue sexual intercourse because of pain. In some cases, the patients will bring the medicine home only to find that the warnings frighten their spouses.

“The spouse says, ‘Oh my God, I don’t want you to get dementia, to get breast cancer, it’s not worth it, so let’s keep doing outercourse’,” meaning sexual relations without penetration, Dr. Kagan said.

Difficult Messaging

From the initial unveiling of disappointing WHI results, clinicians and researchers have stressed that women could continue using estrogen products for managing symptoms of menopause, even while advising strongly against their continued use with the intention of preventing heart disease.

Newly published findings from follow-ups of WHI participants may give clinicians and patients even more confidence for the use of estrogen products in early menopause.

According to the study, which Dr. Manson coauthored, younger women have a low risk for cardiovascular disease and other associated conditions when taking hormone therapy. Risks attributed to these drugs were less than one additional adverse event per 1000 women annually. This population may also derive significant quality-of-life benefits for symptom relief.
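To put a figure such as "less than one additional adverse event per 1000 women annually" on a more familiar scale, the short sketch below converts an excess-event rate into a yearly percentage and into an expected count for a larger group. The function names are made up for this example, and the input of exactly 1 per 1000 is only the upper bound quoted above, not a value taken from the study.

```python
def percent_per_year(events: float, per_women: int) -> float:
    """Express 'events per per_women women per year' as a yearly percentage."""
    return 100.0 * events / per_women


def expected_excess(events: float, per_women: int, group_size: int) -> float:
    """Scale the same rate to the expected yearly excess in a group of women."""
    return events * group_size / per_women


if __name__ == "__main__":
    # Upper bound quoted in the text: fewer than 1 extra event per 1000 women per year.
    upper_events, per_women = 1, 1000
    print(percent_per_year(upper_events, per_women))        # yearly excess risk, in percent
    print(expected_excess(upper_events, per_women, 10_000)) # extra events per 10,000 women
```

At that upper bound, the excess risk is below 0.1% per year, or fewer than 10 additional events per 10,000 women annually.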

Dr. Manson told this news organization that estrogen in lower doses and delivered through the skin as a patch or gel may further reduce risks.

“The WHI findings should never be used as a reason to deny hormone therapy to women in early menopause with bothersome menopausal symptoms,” Dr. Manson said. “Many women are good candidates for treatment and, in shared decision-making with their clinicians, should be able to receive appropriate and personalized healthcare for their needs.”

But the current FDA warning label makes it difficult to help women understand the risks and benefits of low-dose estrogen, according to Stephanie Faubion, MD, MBA, medical director at the North American Menopause Society and director of Mayo Clinic’s Center for Women’s Health in Jacksonville, Florida.

Clinicians now must set aside time to explain the warnings to women when they prescribe low-dose estrogen, Dr. Faubion said.

“The package insert is going to look scary: I prepare women for that because otherwise they often won’t even fill it or use it.”

A version of this article appeared on Medscape.com.

Charles Powell, MD, said he sometimes has a hard time persuading patients to start on low-dose vaginal estrogen, which can help prevent urinary tract infections and ease other symptoms of menopause.

Many women fear taking these vaginal products because of what Dr. Powell considers excessively strong warnings about the risk for cancer and cardiovascular disease linked to daily estrogen pills that were issued in the early 2000s.

He is advocating for the US Food and Drug Administration (FDA) to remove the boxed warning on low-dose estrogen. His efforts are separate from his roles as an associate professor of urology at the Indiana University School of Medicine, and as a member of the American Urological Association (AUA), Dr. Powell said.

In his quest to find out how to change labeling, Dr. Powell has gained a quick education about drug regulation. He has enlisted Representative Jim Baird (R-IN) and Senator Mike Braun (R-IN) to contact the FDA on his behalf, while congressional staff guided him through the hurdles of getting the warning label changed. For instance, a manufacturer of low-dose estrogen may need to become involved.

“You don’t learn this in med school,” Dr. Powell said in an interview.

With this work, Dr. Powell is wading into a long-standing argument between the FDA and some clinicians and researchers about the potential harms of low-dose estrogen.

He is doing so at a time of increased interest in understanding genitourinary syndrome of menopause (GSM), a term coined a decade ago by the International Society for the Study of Women’s Sexual Health and the North American Menopause Society to cover “a constellation of conditions” related to urogenital atrophy.

Symptoms of GSM include vaginal dryness and burning and recurrent urinary tract infections.

The federal government in 2022 began a project budgeted with nearly $1 million to review evidence on treatments, including vaginal and low-dose estrogen. The aim is to eventually help the AUA develop clinical guidelines for addressing GSM.

In addition, a bipartisan Senate bill introduced in May calls for authorizing $125 million over 5 years for the National Institutes of Health (NIH) to fund research on menopause. Senator Patty Murray (D-WA), the lead sponsor of the bill, is a longtime advocate for women’s health and serves as chairwoman for the Senate Appropriations Committee, which largely sets the NIH budget.

“The bottom line is, for too long, menopause has been overlooked, underinvested in and left behind,” Sen. Murray said during a May 2 press conference. “It is well past time to stop treating menopause like some kind of secret and start treating it like the major mainstream public health issue it is.”

Evidence Demands

Increased federal funding for menopause research could help efforts to change the warning label on low-dose estrogen, according to JoAnn Manson, MD, chief of preventive medicine at Brigham and Women’s Hospital in Boston.

Dr. Manson was a leader of the Women’s Health Initiative (WHI), a major federally funded research project launched in 1991 to investigate if hormone therapy and diet could protect older women from chronic diseases related to aging.

Before the WHI, clinicians prescribed hormones to prevent cardiovascular disease, based on evidence from earlier research.

But in 2002, a WHI trial that compared estrogen-progestin tablets with placebo was halted early because of disturbing findings, including an association with higher risk for breast cancer and cardiovascular disease.

Compared with placebo, for every 10,000 women taking estrogen plus progestin annually, incidences of cardiovascular disease, stroke, pulmonary embolism, and invasive breast cancer were seven to eight times higher.

In January 2003, the FDA announced it would put a boxed warning about cardiovascular risk and cancer risk on estrogen products, reflecting the WHI finding.

The agency at the time said clinicians should work with patients to assess risks and benefits of these products to manage the effects of menopause.

But more news on the potential harms of estrogen followed in 2004: A WHI study comparing estrogen-only pills with placebo produced signals of a small increased risk for stroke, although it also indicated no excess risk for breast cancer for at least 6.8 years of use.

Dr. Manson and the North American Menopause Society in 2016 filed a petition with the FDA to remove the boxed warning that appears on the front of low-dose estrogen products. The group wanted the information on risks moved to the usual warning section of the label.

Two years later, the FDA rejected the petition, citing the absence of “well-controlled studies,” to prove low-dose topical estrogen poses less risk to women than the high-dose pills studied in the WHI.

The FDA told this news organization that it stands by the decisions in its rejection of the petition.

Persuading the FDA to revise the labels on low-dose estrogen products likely will require evidence from randomized, large-scale studies, Dr. Manson said. The agency has not been satisfied to date with findings from other kinds of studies, including observational research.

“Once that evidence is available that the benefit-risk profile is different for different formulations and the evidence is compelling and definitive, that warning should change,” Dr. Manson told this news organization.

But the warning continues to have a chilling effect on patient willingness to use low-dose vaginal estrogen, even with the FDA’s continued endorsement of estrogen for menopause symptoms, clinicians told this news organization.

Risa Kagan, MD, a gynecologist at Sutter Health in Berkeley, California, said in many cases her patients’ partners also need to be reassured. Dr. Kagan said she still sees women who have had to discontinue sexual intercourse because of pain. In some cases, the patients will bring the medicine home only to find that the warnings frighten their spouses.

“The spouse says, ‘Oh my God, I don’t want you to get dementia, to get breast cancer, it’s not worth it, so let’s keep doing outercourse’,” meaning sexual relations without penetration, Dr. Kagan said.

Difficult Messaging

From the initial unveiling of disappointing WHI results, clinicians and researchers have stressed that women could continue using estrogen products for managing symptoms of menopause, even while advising strongly against their continued use with the intention of preventing heart disease.

Newly published findings from follow-ups of WHI participants may give clinicians and patients even more confidence for the use of estrogen products in early menopause.

According to the study, which Dr. Manson coauthored, younger women have a low risk for cardiovascular disease and other associated conditions when taking hormone therapy. Risks attributed to these drugs were less than one additional adverse event per 1000 women annually. This population may also derive significant quality-of-life benefits for symptom relief.

Dr. Manson told this news organization that estrogen in lower doses and delivered through the skin as a patch or gel may further reduce risks.

“The WHI findings should never be used as a reason to deny hormone therapy to women in early menopause with bothersome menopausal symptoms,” Dr. Manson said. “Many women are good candidates for treatment and, in shared decision-making with their clinicians, should be able to receive appropriate and personalized healthcare for their needs.”

But the current FDA warning label makes it difficult to help women understand the risk and benefits of low-dose estrogen, according to Stephanie Faubion, MD, MBA, medical director at the North American Menopause Society and director of Mayo Clinic’s Center for Women’s Health in Jacksonville, Florida.

Clinicians now must set aside time to explain the warnings to women when they prescribe low-dose estrogen, Dr. Faubion said.

“The package insert is going to look scary: I prepare women for that because otherwise they often won’t even fill it or use it.”

A version of this article appeared on Medscape.com.

In HPV-Positive Head and Neck Cancer, Treatment Is a Quandary

The topic of head and neck cancer is especially timely since the disease is evolving. A hematologist/oncologist with the Association of VA Hematology/Oncology (AVAHO) told colleagues that specialists are grappling with how to de-escalate treatment.

Molly Tokaz, MD, of Veterans Affairs Puget Sound Health Care and the University of Washington said tobacco is fading as a cause as fewer people smoke, and that human papillomavirus (HPV) is triggering more cases. HPV-positive patients have better prognoses, raising the prospect that their treatment could be adjusted.

“Instead of increasing the amount of therapy we're giving, we’re trying to peel it back,” she said. “If they’re going to respond no matter what we do, why are we going in with these huge weapons of mass destruction if we can get the same results with something more like a light infantry?”

Tokaz spoke about de-escalating therapy at a May 2024 regional AVAHO meeting in Seattle that was focused on head and neck cancer. She elaborated on her presentation in an interview with Federal Practitioner. According to Tokaz, 90% of head and neck cancers are mucosal squamous cell carcinomas (SCC). HPV is associated specifically with nasopharyngeal cancer, which is distinct from SCC, and oropharyngeal cancer, which has been linked to better prognoses.

HPV-positive head and neck cancer is a unique entity with its own epidemiology, clinical prognosis, and treatment. “Patients tend to be younger without the same number of comorbid conditions,” Tokaz said. “Some of them are never smokers or light smokers. So, it's a different demographic than we’ve seen traditionally.”

The bad news is that HPV-associated head and neck cancer numbers are on the rise. Fortunately, outcomes tend to be better for the HPV-positive forms.

As for therapy for head and neck cancer, immunotherapy and targeted therapy play smaller roles than in some other cancers because the disease tends to be diagnosed in early stages before metastases appear. Surgery, chemotherapy, and radiation remain the major treatments. According to Tokaz’s presentation, surgery or radiation, often with minimal adjuvant chemotherapy, can be appropriate for the earliest stage I and II cases of head and neck SCC. (She noted that HPV-positive oropharyngeal squamous cell carcinoma has its own staging system.)

Stage I and II cases make up 15% of new diagnoses and have a 5-year survival rate of > 70%. “In the earliest days, our main role was to make radiation work better and reduce it while adding a minimum amount of toxicity mutations,” she said. “Chemotherapy can help, but it’s only demonstrated improvement in overall survival in patients with positive surgical margins and extracapsular extension.”

In stage III, IVA, and IVB cases, which make up 70% of new diagnoses, chemotherapy plus radiation is recommended. Five-year survival drops to 30% to 50%. Finally, 10% of new diagnoses are stage IVC, which is incurable and has a median survival of < 1 year.

Since HPV-positive patients generally have better prognoses, oncologists are considering how to adjust their treatment. However, Tokaz noted that clinical trials have not shown a benefit from less intensive treatment in these patients. “At this point, we still treat them the same way as HPV-negative patients. But it’s an ongoing area of research.”

Researchers are also exploring how to optimize regimens in patients ineligible for treatment with the chemotherapy agent cisplatin. “These folks have been traditionally excluded from clinical trials because they’re sicker,” Tokaz explained. “Researchers normally want the fittest and the best patients [in trials]. If you give a drug to someone with a lot of other comorbid conditions, they might not do as well with it, and it makes your drug look bad.”

Figuring out how to treat these patients is an especially urgent task in head and neck cancer because so many patients are frail and have comorbidities. More broadly, Tokaz said the rise of HPV-related head and neck cancer highlights the importance of HPV vaccination, which is key to preventing cervical and anal cancer in addition to head and neck cancer. “HPV vaccination for children and young adults is crucial,” she said.

Molly Tokaz, MD, reported no relevant financial relationships.
